大數據數據科學家常用面試題

During my time as a Data Scientist, I had the chance to interview my fair share of candidates for data-related roles. While doing this, I started noticing a pattern: some kinds of (simple) mistakes were overwhelmingly frequent among candidates! In striking disagreement with a famous quote by Tolstoy, it seems to me, “most unhappy mistakes in case studies look alike”.

在擔任數據科學家期間,我有機會采訪了相當一部分與數據相關的職位的候選人。 在這樣做的同時,我開始注意到一種模式:候選人中絕大多數(簡單)的錯誤非常頻繁! 在我看來,與托爾斯泰的一句名言大相徑庭的是,“案例研究中最不幸的錯誤看起來是相似的”。

In my mind, I started picturing the kind of candidate that I would hire in a heartbeat. No, not a Rockstar/Guru/Evangelist with 12 years of professional experience managing Kubernetes clusters and working with Hadoop/Spark, while simultaneously contributing to TensorFlow’s development, obtaining 2 PhDs, and publishing at least 3 Deep Learning papers per year. Nope; I would just instantly be struck by a person who at least does not make the kind of mistakes I am about to describe… And I can imagine the same happening in other companies, with other interviewers.

在我的腦海中,我開始想象自己會心動的候選人。 不,不是擁有12年管理Kubernetes集群和Hadoop / Spark的專業經驗的Rockstar / Guru / Evangelist,同時又為TensorFlow的發展做出了貢獻,獲得了2個博士學位,并每年發表至少3篇Deep Learning論文。 不; 我將立即被至少沒有犯我將要描述的那種錯誤的人打動……我可以想象在其他公司和其他面試官中也發生了同樣的情況。

Although this is a personal and quite opinionated list, I hope these few tips and tricks can be of some help to people at the start of their data science career! I am putting here only the more DS-related things that came to my mind, but of course writing Pythonic, readable, and expressive code is also something that will please immensely whomever is interviewing you!

盡管這是一份個人且頗為自以為是的清單,但我希望這些提示和技巧對人們在數據科學事業開始時能夠有所幫助! 我只想起更多與DS相關的事情,但是當然編寫Python式,可讀性和表達性代碼也將極大地取悅與您面談的任何人!

馬虎使用熊貓 (Sloppy use of Pandas)

Let’s face it: for most of your day-to-day tasks as a data scientist you will be manipulating tables, slicing them, grouping them by the values contained in a column, applying transformations to them, and so on. This almost automatically implies that Pandas is one of the most important foundational tools for a data scientist, and if you are able to showcase some mastery with it, well, people will take you quite seriously.

讓我們面對現實:作為數據科學家,您在日常的大部分工作中都會處理表格,對其進行切片,將它們按列中包含的值進行分組,對其進行轉換等等。 這幾乎自動意味著,Pandas是數據科學家最重要的基礎工具之一,如果您能夠展示它的精通知識,那么人們會非常重視您的。

On the contrary, if you systematically do very low-level manipulations on your DataFrames where a built-in Pandas command exist, you will potentially raise all kinds of red flags.

相反,如果您在存在內置Pandas命令的DataFrame上系統地進行非常低級的操作,則可能會引發各種危險信號。

Here are a few tricks to improve with Pandas:

以下是熊貓改進的一些技巧:

USE IT!

用它!

- Whenever you have to do any manipulation of a DataFrame or Series, stop for a couple of minutes and read the docs to check whether there are already built-in methods that can save you 90% of the work. Even if you don’t find them, in the process of reading through the documentation you will learn tons of stuff that will very likely come in handy in the future. 每當您需要對DataFrame或Series進行任何處理時,都請停幾分鐘并閱讀文檔,以檢查是否已經有內置方法可以節省90%的工作。 即使您找不到它們,在閱讀文檔的過程中,您還將學到很多東西,這些東西將來很有可能會派上用場。

Read tutorials written by trustworthy people, see how they do some operations. Especially, Part II of Tom Augspurger’s Modern Pandas tutorial is quite a good place to start with. Even better, read not just part II, but the whole series. Also, this talk by Vincent D. Warmerdam is worth looking at.

閱讀可信賴人員撰寫的教程,了解他們如何進行某些操作。 特別是, Tom Augspurger的Modern Pandas教程的第二部分是一個很好的起點。 更好的是,不僅閱讀第二部分,還閱讀整個系列。 此外, 文森特·D·沃默丹(Vincent D. Warmerdam)的演講值得一看。

If you have to perform some complicated, maybe not built-in, transformation of your data, consider wrapping it in a function! After you do that,

.pipe(...)and.apply(...)are your friends.如果您必須執行一些復雜的(也許不是內置的)數據轉換,請考慮將其包裝在函數中! 完成之后,

.pipe(...)和.apply(...)是您的朋友。

Final tip: do not use inplace=True anywhere. Contrary to popular belief, it doesn’t bring any performance bonus and it naturally makes you write unclear code, as it hinders your ability to chain methods. Hopefully this feature will be discontinued sometime in the future.

最后提示:請勿在任何地方使用inplace=True 。 與流行的看法相反,它不會帶來任何性能上的好處,并且自然會使您編寫不清楚的代碼,因為這會妨礙您鏈接方法的能力。 希望此功能將來會停止 。

信息從測試儀泄漏 (Information leaking from the test set)

The test set is sacred; while building models or selecting the best one you got so far, it should not even be looked at. Think about it: the reason why we have a test set in the first place is that we want to have an unbiased estimate of the generalization error of a model. If we are allowed to get a sneak peek into “the future” (i.e., data that during training and model building fundamentally we should not have access to) it’s almost guaranteed that we will get influenced by that, and bias our error estimates.

測試集是神圣的; 在構建模型或選擇迄今為止獲得的最佳模型時,甚至不應該考慮它。 想想看:我們之所以首先擁有一個測試集,是因為我們想要對模型的泛化誤差進行無偏估計。 如果允許我們窺視“未來”(即從根本上講我們在培訓和模型構建過程中不應該使用的數據),幾乎可以保證我們會受到此影響,并偏離我們的錯誤估計。

Although I’ve never seen anybody directly fit a model on the test set, quite commonly instead candidates performed hyperparameter tuning and model selection by looking at some metric on the test set. Please do not do that, but rather save part of the data as a validation set instead, or even better, perform cross-validation.

盡管我從未見過有人直接將模型擬合到測試集上,但相當普遍的是,考生通過查看測試集上的某些指標來執行超參數調整和模型選擇。 請不要這樣做,而是將部分數據保存為驗證集,或者甚至更好地執行交叉驗證。

Another quite common thing which causes leakage of information from the test set is fitting scalers (like sklearn.preprocessing.StandardScaler) or oversampling routines (e.g., imblearn.over_sampling.SMOTE) on the whole dataset. Again, feature engineering, resampling, and so on are part of how a model is built and trained: keep the test set out of it.

導致信息從測試集中泄漏的另一種非常普遍的情況是整個數據集上的擬合縮放器(例如sklearn.preprocessing.StandardScaler )或過采樣例程(例如, imblearn.over_sampling.SMOTE )。 同樣,特征工程,重采樣等也是模型構建和訓練的一部分:將測試集保留在模型之外。

平均缺陷 (Flaw of averages)

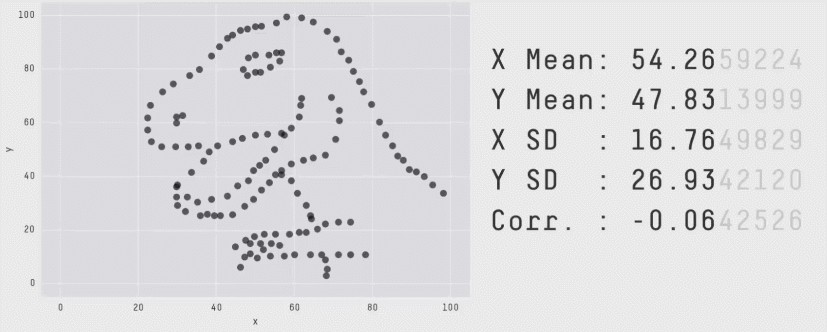

Although summary statistics, like averages, quantiles, and so on, are useful to get a first impression of the data, don’t make the mistake of reducing distributions to a single number when this doesn’t make sense. A classic cautionary example to showcase this is Anscombe’s quartet, but my favorite is the Datasaurus Dozen.

盡管摘要統計信息(例如平均值,分位數等)對于獲得數據的第一印象很有用,但不要犯這樣的錯誤,即在沒有意義的情況下將分布簡化為單個數。 一個典型的警示示例就是Anscombe的四重奏 ,但我最喜歡的是Datasaurus Dozen 。

More often than not, the distribution of your data points matters more than their average value, and especially in some applications the shape of the tails of your distributions is what at the end of the day governs decisions.

通常,數據點的分布比其平均值更重要,尤其是在某些應用程序中,分布的尾部形狀最終決定了決策。

If you show that you take this kind of issues in consideration, and don’t even wink when somebody mentions Jensen’s inequality, only good things can happen.

如果您證明自己考慮了此類問題,甚至在有人提到詹森的不平等時甚至都不眨眼,那么只會發生好事。

盲目使用圖書館 (Blind use of libraries)

When you are given a case study, you often have an advantage you can capitalize on: you choose the model(s) to use. That means that you can anticipate some of the questions interviewers might ask you!

在進行案例研究時,通常會具有一個可以利用的優勢:選擇要使用的模型。 這意味著您可以預見面試官可能會問您的一些問題!

For example, if you end up using an XGBClassifier for your task, try to understand how it works, as deeply as you can. Everyone knows it’s based on decision trees, but which other “ingredients” do you need for it? Do you know how XGBoost handles missing values? Could you explain Bagging and Boosting in layman’s terms?

例如,如果最終為任務使用XGBClassifier ,請嘗試盡可能深入地了解其工作方式。 每個人都知道它基于決策樹,但是您還需要其他“成分”嗎? 您知道XGBoost如何處理缺失值嗎? 您能用外行人的術語解釋裝袋和提振嗎?

Even if you end up using linear regression, you should have a clear idea about what is happening under the hood, and the meaning behind the parameters you set. If you say “I set the learning rate to X”, and somebody follows with “What’s a learning rate?”, it’s quite bad if you cannot at least spend a few words on it.

即使最終使用線性回歸,也應該對幕后情況以及所設置參數的含義有一個清晰的了解。 如果您說“我將學習率設置為X”,然后有人說“什么是學習率?”,那么您至少不能在上面花幾個字就很不好了。

可視化選擇差 (Poor visualization choices)

Choosing the correct options for your plots goes a long way too. Ultimately, I think the most common mistakes here are due to poor choice of normalization or not using the correct scales for the axes.

為您的繪圖選擇正確的選項還有很長的路要走。 最終,我認為這里最常見的錯誤是由于歸一化選擇不當或未使用正確的軸比例。

Let’s look at an example; the following snippet of code

讓我們看一個例子; 以下代碼片段

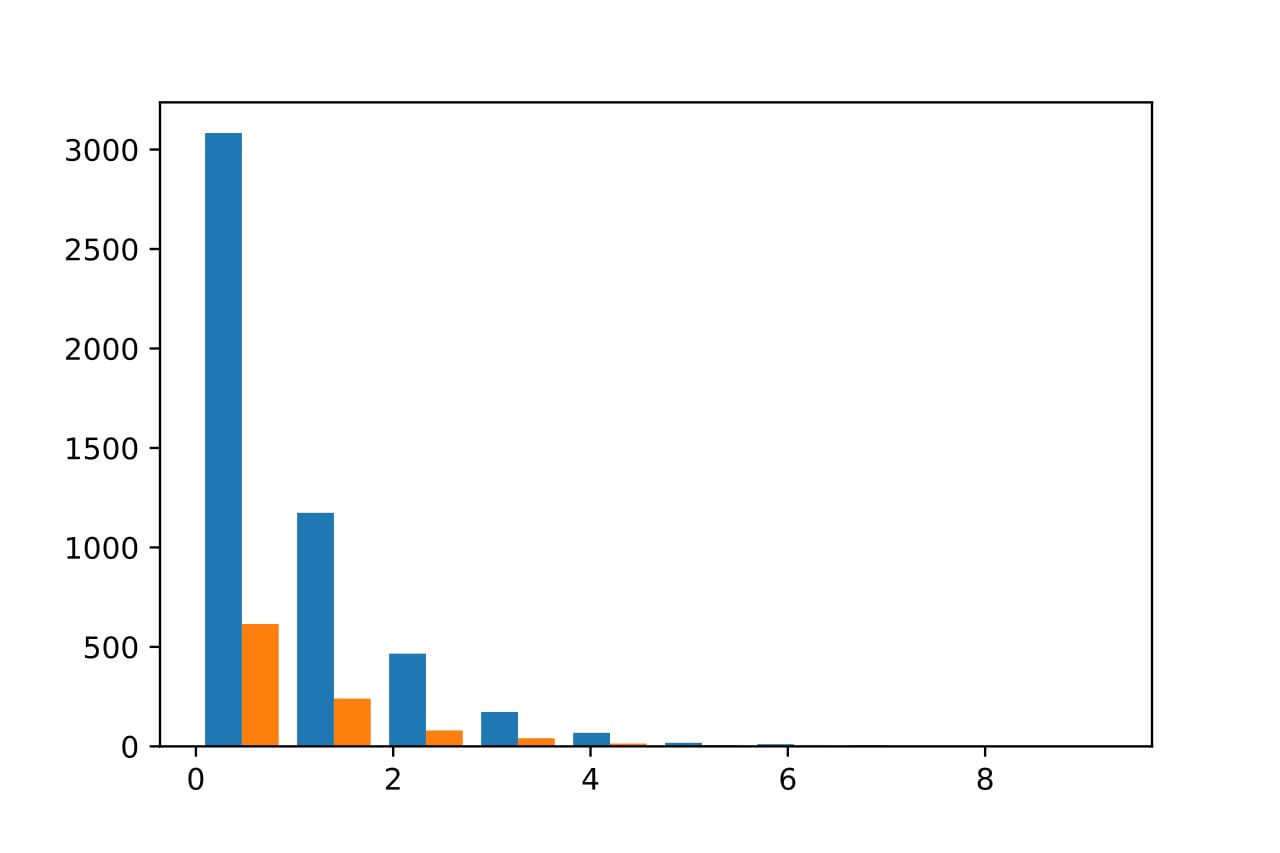

just creates two arrays with samples from an exponential distribution; then, it generates the following plot

只是創建兩個具有指數分布樣本的數組; 然后,生成以下圖

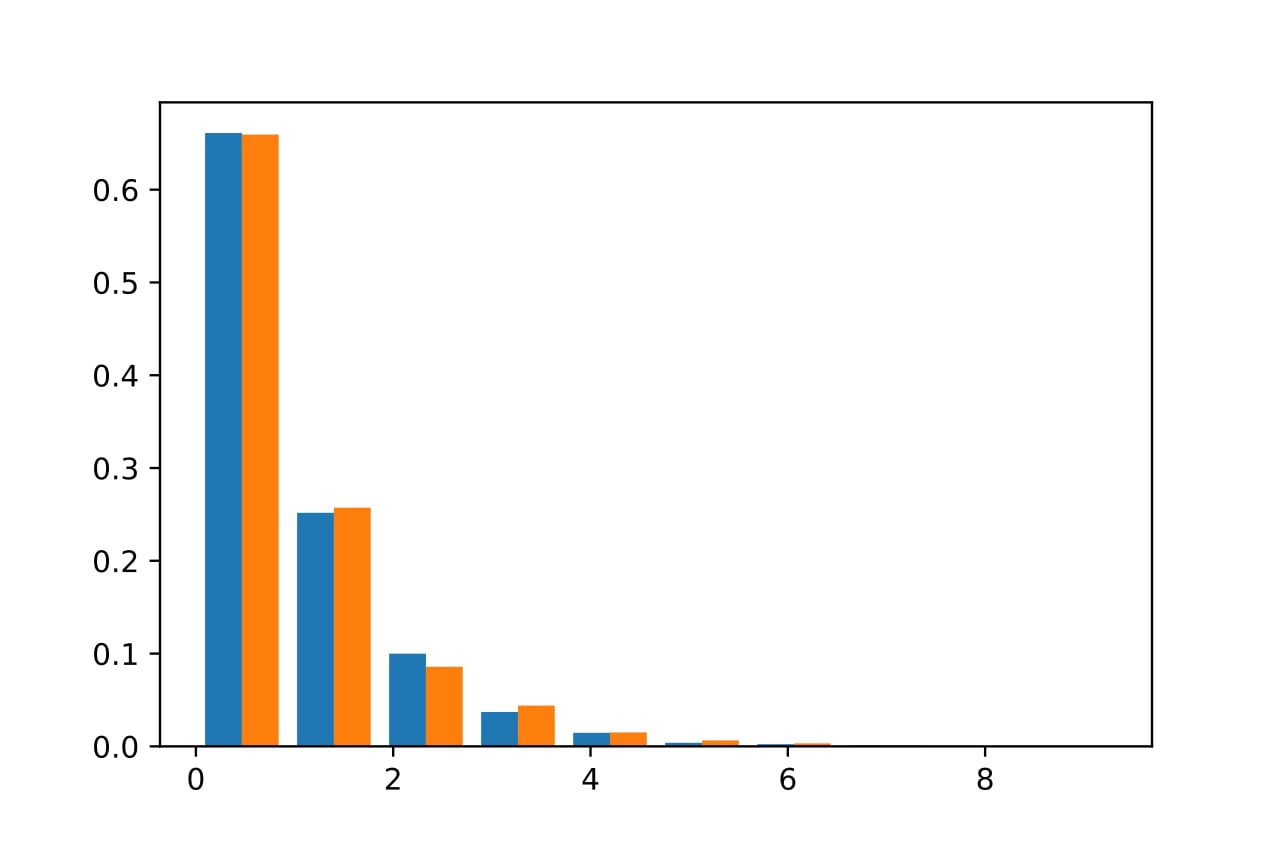

I saw some variation of this an enormous amount of times; basically, what we would really like to do is compare the distribution of something among two groups, but in this plot we are only showing raw counts of observed values. If one of the groups has more samples than the other, a plot like this is meaningless to get an idea of the underlying distributions. A better choice would be to normalize what we are displaying in a sensible way: in this case, just setting the parameter density=True transforms the raw counts into relative frequencies, and gives us the following:

我看到了很多次這種變化。 基本上,我們真正想做的是比較兩組之間某物的分布,但是在此圖中,我們僅顯示了觀測值的原始計數。 如果一組中的一個樣本比另一組中的樣本更多,則這樣的圖對于了解基本分布毫無意義。 更好的選擇是以一種明智的方式對顯示的內容進行規范化:在這種情況下,只需將參數density=True設置即可將原始計數轉換為相對頻率,并提供以下信息:

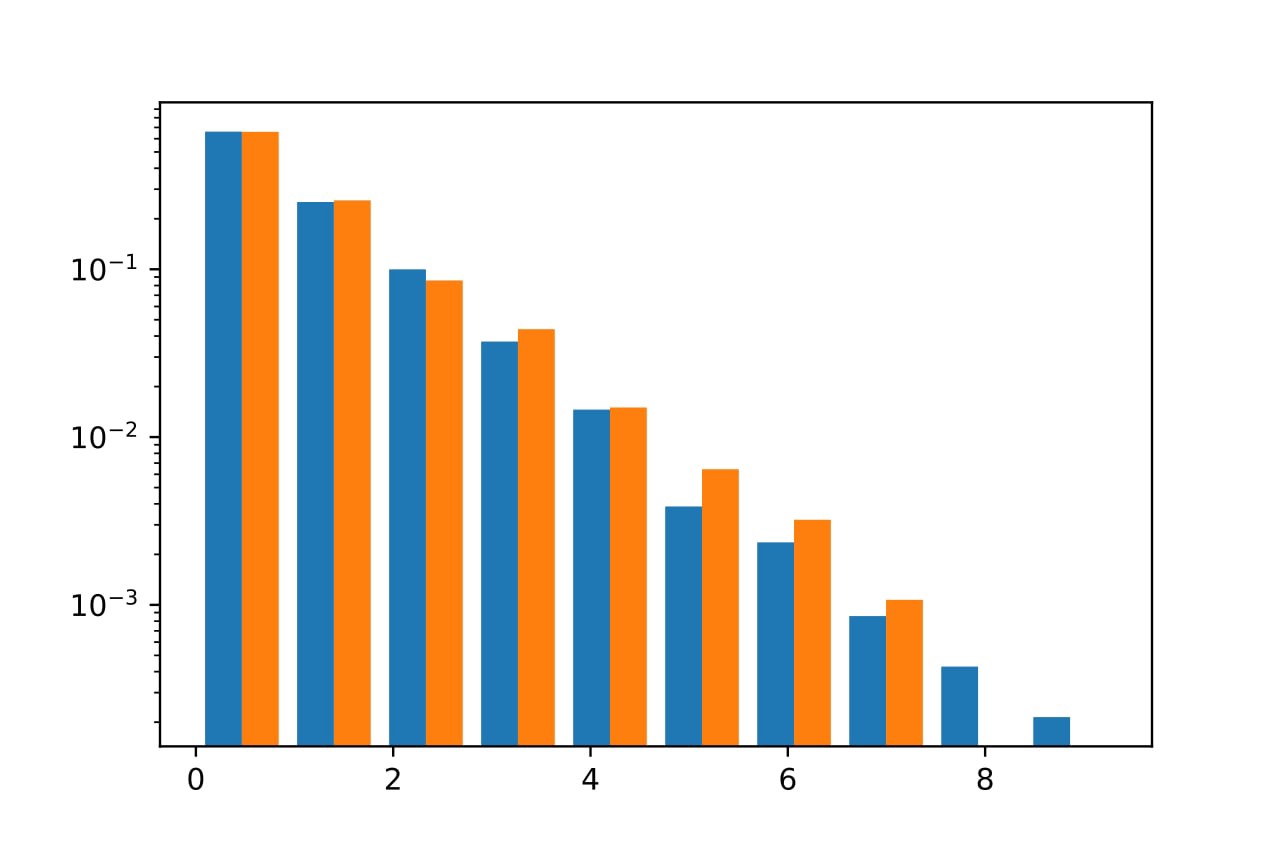

Nice! Now we can explicitly see that, after all, a and b are samples from the same distribution. There is still something that I dislike here: a lot of white space, and the fact that for values of a or b larger than 4, I cannot really see any bar clearly. Luckily, since 1614 Logarithms are a common mathematical operation… So common that we even have a dedicated keyword argument in plt.hist(...) that just transforms our linear y-axis to a logarithmic one:

真好! 現在我們可以明確地看到, a和b畢竟是來自同一分布的樣本。 在這里,我仍然不喜歡某些東西:很多空白,而且對于大于4的a或b值,我看不到任何清晰的條形。 幸運的是,自1614年以來,對數是一種常見的數學運算...如此普遍,以至于我們甚至在plt.hist(...)中都有一個專用的關鍵字參數, plt.hist(...)參數僅將線性y軸轉換為對數:

Notice that this is by no means a “perfect” plot: our axes are unlabeled, no legend, and it just looks kinda ugly! But hey, at least we can extract insights that we would have never been able to see with just a call to plt.hist([a,b]).

請注意,這絕不是一個“完美”的圖:我們的軸是未標記的,沒有圖例,而且看起來有點難看! 但是,至少我們可以通過調用plt.hist([a,b])來提取我們從未見過的見解。

結論 (Conclusion)

What all the above-listed mistakes have in common is that they are easily avoidable with some thought and knowledge of the subject, so my advice for your next data science case study is: relax, focus, try to be one step ahead of whatever mind game they’re playing with you, and Google for stuff (a lot!). Interviewing can be stressful, but if both parties are fair (especially people interviewing and coming up with assignments) it’s almost never lost time.

上面列出的所有錯誤的共同點在于,只要對主題有一定的了解和了解,就可以輕松避免這些錯誤,因此,我對下一個數據科學案例研究的建議是:放松,集中注意力,力爭領先一步他們與您一起玩的游戲,還有Google提供的東西(很多!)。 面試可能會帶來壓力,但如果雙方都公平( 尤其是面試和提出任務的人),則幾乎不會浪費時間。

Any feedback on this article would be much appreciated; did I miss anything that you think is particularly important?

對于本文的任何反饋將不勝感激; 我是否錯過了您認為特別重要的事情?

To conclude, I wish you all the best in your career, whatever job you happen to be doing now! Maybe see you at an interview :-)

最后,祝您事業順利,無論您現在正從事什么工作! 也許在面試中見到你:-)

翻譯自: https://towardsdatascience.com/acing-a-data-science-job-interview-b37e8b68869b

大數據數據科學家常用面試題

本文來自互聯網用戶投稿,該文觀點僅代表作者本人,不代表本站立場。本站僅提供信息存儲空間服務,不擁有所有權,不承擔相關法律責任。 如若轉載,請注明出處:http://www.pswp.cn/news/389418.shtml 繁體地址,請注明出處:http://hk.pswp.cn/news/389418.shtml 英文地址,請注明出處:http://en.pswp.cn/news/389418.shtml

如若內容造成侵權/違法違規/事實不符,請聯系多彩編程網進行投訴反饋email:809451989@qq.com,一經查實,立即刪除!