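1. Image classification network framework overview

- Basic structure of a classification network
  - Data loading module: loads the training data
  - Data reorganization: assembles the data into the form the network needs, e.g. preprocessing, augmentation, the network's own processing, and loss computation
  - Optimizer
- Data loading module
  - Public datasets: torchvision.datasets
  - Custom datasets: Dataset and DataLoader from torch.utils.data
- Data augmentation module
  - torchvision.transforms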

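2. Reading the Cifar10 data

Cifar10 dataset download link: https://pan.baidu.com/s/1Dc6eQ54CCLFdCA2ORuFChg (extraction code: 5279)

Once extracted, the downloaded dataset contains the batch files (cifar-10-batches-py/data_batch_* and test_batch) that the code below reads.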
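Each batch file is a pickled dictionary whose `b'data'` entry stores one image per row as 3072 values: 1024 red, then 1024 green, then 1024 blue, each plane in row-major order. The sketch below is illustrative only (the helper name `flat_index` is hypothetical); it spells out the index arithmetic that reshaping a row to `[3, 32, 32]` and transposing it to `(1, 2, 0)` performs.

```python
# Hypothetical helper: position of pixel (row, col) of one channel
# inside a flat 3072-value CIFAR-10 row.
def flat_index(channel, row, col, size=32):
    return channel * size * size + row * size + col

# Build a fake flat row whose values equal their own index, then rearrange it
# to height x width x channel, which is what reshape + transpose achieve.
flat = list(range(3 * 32 * 32))
hwc = [[[flat[flat_index(c, y, x)] for c in range(3)]
        for x in range(32)]
       for y in range(32)]

print(hwc[0][0])    # [0, 1024, 2048]: R, G, B of the top-left pixel
print(hwc[31][31])  # [1023, 2047, 3071]: R, G, B of the bottom-right pixel
```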

Create two folders, dataTrain and dataTest, to hold the dataset images.
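A minimal sketch of this step, assuming the folders live under `cifar-10-batches-py` as the save paths in the extraction script suggest. It writes into a temporary directory so that it can be run anywhere; creating the per-class subfolders up front is an optional convenience, since the extraction script also creates them on demand.

```python
import os
import tempfile

label_name = ['airplane', 'automobile', 'bird', 'cat', 'deer',
              'dog', 'frog', 'horse', 'ship', 'truck']

# A temporary directory stands in for the real dataset location (assumption).
root = os.path.join(tempfile.mkdtemp(), 'cifar-10-batches-py')

for split in ('dataTrain', 'dataTest'):
    for name in label_name:
        # exist_ok=True makes the call safe to repeat.
        os.makedirs(os.path.join(root, split, name), exist_ok=True)

print(sorted(os.listdir(root)))  # ['dataTest', 'dataTrain']
```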

Write the training-set and test-set images into these folders with the following code:

import os

import cv2

import numpy as np

import glob

def unpickle(file):
    # Load one pickled CIFAR-10 batch file; its keys are bytes (e.g. b'data')
    import pickle
    with open(file, 'rb') as fo:
        batch = pickle.load(fo, encoding='bytes')
    return batch

label_name = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

# train_list = glob.glob('cifar-10-batches-py/data_batch_*')  # use this line when exporting the training images
train_list = glob.glob('cifar-10-batches-py/test_batch*')
# save_path = 'cifar-10-batches-py/dataTrain'  # use this line when exporting the training images
save_path = 'cifar-10-batches-py/dataTest'

for l in train_list:
    l_dict = unpickle(l)
    for im_idx, im_data in enumerate(l_dict[b'data']):
        im_label = l_dict[b'labels'][im_idx]
        im_name = l_dict[b'filenames'][im_idx]
        im_label_name = label_name[im_label]
        # Each row holds 3072 values: reshape to channel x height x width, then move channels last
        im_data = np.reshape(im_data, [3, 32, 32])
        im_data = np.transpose(im_data, (1, 2, 0))
        # CIFAR-10 stores RGB, while cv2.imwrite expects BGR, so reverse the channel order
        im_data = np.ascontiguousarray(im_data[:, :, ::-1])
        # Create the class folder (and save_path itself) if it does not exist yet
        os.makedirs("{}/{}".format(save_path, im_label_name), exist_ok=True)
        cv2.imwrite("{}/{}/{}".format(save_path, im_label_name, im_name.decode("utf-8")), im_data)

3. Loading a custom dataset

from torchvision import transforms

from torch.utils.data import DataLoader, Dataset

import os

from PIL import Image

import numpy as np

import glob

label_name = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

# Map each class name to its integer label
label_dict = {}
for idx, name in enumerate(label_name):
    label_dict[name] = idx

def default_loader(path):
    return Image.open(path).convert('RGB')

# Data augmentation
train_transform = transforms.Compose([
    transforms.RandomResizedCrop((28, 28)),      # random crop, resized to 28x28
    transforms.RandomHorizontalFlip(),           # random horizontal flip
    transforms.RandomVerticalFlip(),             # random vertical flip
    transforms.RandomRotation(90),               # random rotation within +/-90 degrees
    transforms.RandomGrayscale(0.1),             # convert to grayscale with probability 0.1
    transforms.ColorJitter(0.3, 0.3, 0.3, 0.3),  # random brightness/contrast/saturation/hue jitter
    transforms.ToTensor()                        # convert to a tensor
])

class MyDataset(Dataset):
    def __init__(self, im_list, transform=None, loader=default_loader):
        super(MyDataset, self).__init__()
        imgs = []
        for im_item in im_list:
            # The class name is the parent folder name; os.path copes with both / and \ separators
            im_label_name = os.path.basename(os.path.dirname(im_item))
            imgs.append([im_item, label_dict[im_label_name]])
        self.imgs = imgs
        self.transform = transform
        self.loader = loader

    def __getitem__(self, index):
        im_path, im_label = self.imgs[index]
        im_data = self.loader(im_path)
        if self.transform is not None:
            im_data = self.transform(im_data)
        return im_data, im_label

    def __len__(self):
        return len(self.imgs)

im_train_list = glob.glob("cifar-10-batches-py/dataTrain/*/*.png")  # paths of the training images
im_test_list = glob.glob("cifar-10-batches-py/dataTest/*/*.png")  # paths of the test images

train_dataset = MyDataset(im_train_list, transform=train_transform)  # training dataset
test_dataset = MyDataset(im_test_list, transform=transforms.ToTensor())  # test dataset

train_data_loader = DataLoader(dataset=train_dataset, batch_size=6, shuffle=True, num_workers=4)  # training data loader
test_data_loader = DataLoader(dataset=test_dataset, batch_size=6, shuffle=False, num_workers=4)  # test data loader

print("num_of_train:",len(train_dataset))

print("num_of_test:",len(test_dataset))

Output:

num_of_train: 50000

num_of_test: 10000

4. Building the VGG network

- Building the model

import torch
import torch.nn as nn
import torch.nn.functional as F

# Define the VGG network
class VGGbase(nn.Module):
    # Constructor
    def __init__(self):
        super(VGGbase, self).__init__()  # call the parent class constructor
        # Stage 1, feature map size 28*28
        self.conv1 = nn.Sequential(nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1), nn.BatchNorm2d(64), nn.ReLU())
        self.max_pooling1 = nn.MaxPool2d(kernel_size=2, stride=2)  # max pooling
        # Stage 2, feature map size 14*14
        self.conv2_1 = nn.Sequential(nn.Conv2d(64, 128, kernel_size=3, stride=1, padding=1), nn.BatchNorm2d(128), nn.ReLU())
        self.conv2_2 = nn.Sequential(nn.Conv2d(128, 128, kernel_size=3, stride=1, padding=1), nn.BatchNorm2d(128), nn.ReLU())
        self.max_pooling2 = nn.MaxPool2d(kernel_size=2, stride=2)  # max pooling
        # Stage 3, feature map size 7*7
        self.conv3_1 = nn.Sequential(nn.Conv2d(128, 256, kernel_size=3, stride=1, padding=1), nn.BatchNorm2d(256), nn.ReLU())
        self.conv3_2 = nn.Sequential(nn.Conv2d(256, 256, kernel_size=3, stride=1, padding=1), nn.BatchNorm2d(256), nn.ReLU())
        self.max_pooling3 = nn.MaxPool2d(kernel_size=2, stride=2, padding=1)  # max pooling
        # Stage 4, feature map size 4*4
        self.conv4_1 = nn.Sequential(nn.Conv2d(256, 512, kernel_size=3, stride=1, padding=1), nn.BatchNorm2d(512), nn.ReLU())
        self.conv4_2 = nn.Sequential(nn.Conv2d(512, 512, kernel_size=3, stride=1, padding=1), nn.BatchNorm2d(512), nn.ReLU())
        self.max_pooling4 = nn.MaxPool2d(kernel_size=2, stride=2, padding=1)  # max pooling
        # Fully connected layer: 512 channels * 3 * 3 = 4608 inputs, 10 classes
        self.fc = nn.Linear(4608, 10)

    # Forward pass
    def forward(self, x):
        batchsize = x.size(0)
        out = self.conv1(x)
        out = self.max_pooling1(out)
        out = self.conv2_1(out)
        out = self.conv2_2(out)
        out = self.max_pooling2(out)
        out = self.conv3_1(out)
        out = self.conv3_2(out)
        out = self.max_pooling3(out)
        out = self.conv4_1(out)
        out = self.conv4_2(out)
        out = self.max_pooling4(out)
        out = out.view(batchsize, -1)  # flatten the feature maps into one vector per sample
        out = self.fc(out)
        # log_softmax output; nn.CrossEntropyLoss applies log_softmax again, which leaves these values unchanged
        out = F.log_softmax(out, dim=1)
        return out

def VGGNet():
    return VGGbase()

- Model training

import torch

import torch.nn as nn

import torchvision

from vggnet import VGGNet

from cifar10Data import train_data_loader, test_data_loader

import os

import tensorboardX

# Training device
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Number of training epochs
epoch_num = 200

# Learning rate
lr = 0.01

# Build the network
net = VGGNet().to(device)

# Loss function
loss_func = nn.CrossEntropyLoss()

# Optimizer
optimizer = torch.optim.Adam(net.parameters(), lr=lr)

# Learning-rate decay: multiply the learning rate by 0.9 every 5 epochs
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=0.9)

# TensorBoard log directory
os.makedirs("log", exist_ok=True)

writer = tensorboardX.SummaryWriter("log")

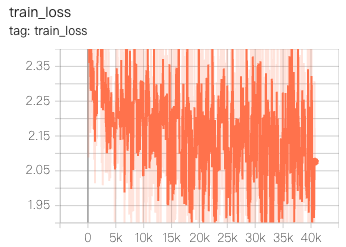

step_n = 0

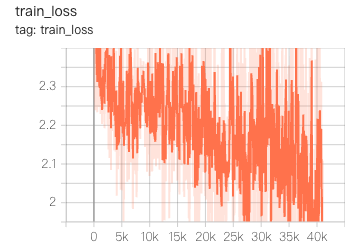

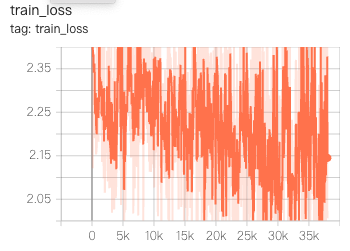

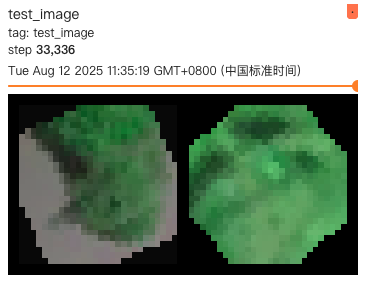

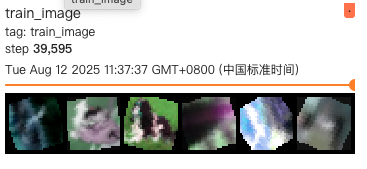

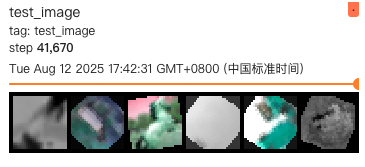

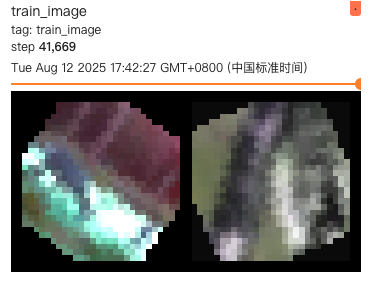

for epoch in range(epoch_num):
    print("epoch is:", epoch)
    # Training
    net.train()
    for i, data in enumerate(train_data_loader):
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)
        outputs = net(inputs)
        loss = loss_func(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        writer.add_scalar("train loss", loss.item(), global_step=step_n)
        im = torchvision.utils.make_grid(inputs)
        writer.add_image("train image", im, global_step=step_n)
        step_n += 1
    # Save a checkpoint after every epoch
    os.makedirs("models", exist_ok=True)
    torch.save(net.state_dict(), "models/{}.pth".format(epoch + 1))
    scheduler.step()

    # Testing: evaluate on the test set without computing gradients or updating the weights
    net.eval()
    sum_loss = 0
    with torch.no_grad():
        for i, data in enumerate(test_data_loader):
            inputs, labels = data
            inputs, labels = inputs.to(device), labels.to(device)
            outputs = net(inputs)
            loss = loss_func(outputs, labels)
            sum_loss += loss.item()
            im = torchvision.utils.make_grid(inputs)
            writer.add_image("test image", im, global_step=step_n)
    test_loss = sum_loss * 1.0 / len(test_data_loader)
    writer.add_scalar("test loss", test_loss, global_step=epoch + 1)
    print('test_step:', i, 'loss is:', test_loss)

writer.close()
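The input size of `nn.Linear(4608, 10)` in `VGGbase` follows from how the four pooling layers shrink the feature map. The plain-Python sketch below (helper names are hypothetical) applies the usual output-size formula, floor((size + 2*padding - kernel) / stride) + 1, and suggests that both the 28x28 training crops and the uncropped 32x32 test images reach the same 3x3 map.

```python
# Output size of a convolution or pooling layer along one spatial dimension
# (floor mode, which is the PyTorch default).
def out_size(size, kernel, stride, padding=0):
    return (size + 2 * padding - kernel) // stride + 1

def vgg_fc_inputs(size):
    # The 3x3 convolutions use stride 1 and padding 1, so they keep the size;
    # only the four max-pooling layers change it.
    size = out_size(size, kernel=2, stride=2)             # max_pooling1
    size = out_size(size, kernel=2, stride=2)             # max_pooling2
    size = out_size(size, kernel=2, stride=2, padding=1)  # max_pooling3
    size = out_size(size, kernel=2, stride=2, padding=1)  # max_pooling4
    return 512 * size * size  # 512 channels after conv4_2

print(vgg_fc_inputs(28))  # 4608 (28 -> 14 -> 7 -> 4 -> 3)
print(vgg_fc_inputs(32))  # 4608 (32 -> 16 -> 8 -> 5 -> 3)
```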

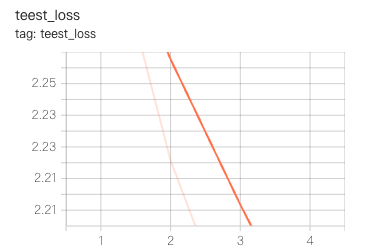

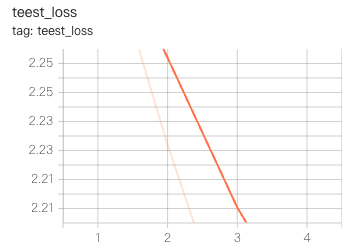

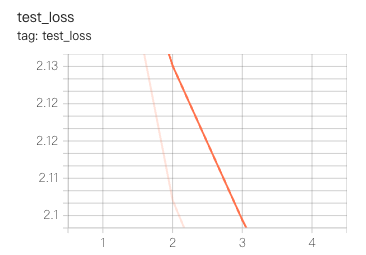

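- Training results (log of the original run; its test phase looped over the 8334 training batches, hence test_step 8333)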

epoch is: 0

test_step: 8333 loss is: 2.306014501994137

epoch is: 1

test_step: 8333 loss is: 2.220694358253868

epoch is: 2

test_step: 8333 loss is: 2.1626519183618202

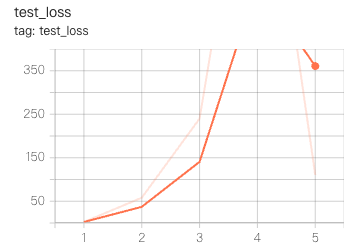

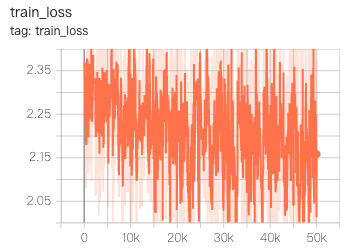

epoch is: 3

- Chart results

5. Building the ResNet network

- Building the model

import torch

import torch.nn as nn

import torch.nn.functional as F

# Residual block used inside ResNet
class ResBlock(nn.Module):
    def __init__(self, in_channel, out_channel, stride=1):
        super(ResBlock, self).__init__()
        # Main branch
        self.layer = nn.Sequential(
            nn.Conv2d(in_channel, out_channel, kernel_size=3, stride=stride, padding=1),
            nn.BatchNorm2d(out_channel),
            nn.ReLU(),
            nn.Conv2d(out_channel, out_channel, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(out_channel),
        )
        # Shortcut branch: identity unless the channel count or resolution changes,
        # in which case a convolution projects x to the new shape
        self.shortcut = nn.Sequential()
        if in_channel != out_channel or stride > 1:
            self.shortcut = nn.Sequential(
                nn.Conv2d(in_channel, out_channel, kernel_size=3, stride=stride, padding=1),
                nn.BatchNorm2d(out_channel),
            )

    def forward(self, x):
        out1 = self.layer(x)
        out2 = self.shortcut(x)
        out = out1 + out2
        out = F.relu(out)
        return out

# Build the ResNet model
class ResNet(nn.Module):
    def make_layer(self, block, out_channel, stride, num_block):
        layers_list = []
        for i in range(num_block):
            # Only the first block of a stage downsamples
            if i == 0:
                in_stride = stride
            else:
                in_stride = 1
            layers_list.append(block(self.in_channel, out_channel, in_stride))
            self.in_channel = out_channel
        return nn.Sequential(*layers_list)

    def __init__(self, ResBlock):
        super(ResNet, self).__init__()
        self.in_channel = 32
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(32),
            nn.ReLU(),
        )
        self.layer1 = self.make_layer(ResBlock, 64, 2, 2)
        self.layer2 = self.make_layer(ResBlock, 128, 2, 2)
        self.layer3 = self.make_layer(ResBlock, 256, 2, 2)
        self.layer4 = self.make_layer(ResBlock, 512, 2, 2)
        self.fc = nn.Linear(512, 10)

    def forward(self, x):
        out = self.conv1(x)
        out = self.layer1(out)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)
        out = F.avg_pool2d(out, 2)
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out

def resnet():
    return ResNet(ResBlock)

- Model training

import torch

import torch.nn as nn

import torchvision

from resnet import resnet

from cifar10Data import train_data_loader, test_data_loader

import os

import tensorboardX

# Training device
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Number of training epochs
epoch_num = 200

# Learning rate
lr = 0.01

# Build the network
net = resnet().to(device)

# Loss function
loss_func = nn.CrossEntropyLoss()

# Optimizer
optimizer = torch.optim.Adam(net.parameters(), lr=lr)

# Learning-rate decay: multiply the learning rate by 0.9 every 5 epochs
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=0.9)

# TensorBoard log directory
os.makedirs("log1", exist_ok=True)

writer = tensorboardX.SummaryWriter("log1")

step_n = 0

for epoch in range(epoch_num):
    print("epoch is:", epoch)
    # Training
    net.train()
    for i, data in enumerate(train_data_loader):
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)
        outputs = net(inputs)
        loss = loss_func(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        writer.add_scalar("train loss", loss.item(), global_step=step_n)
        im = torchvision.utils.make_grid(inputs)
        writer.add_image("train image", im, global_step=step_n)
        step_n += 1
    # Save a checkpoint after every epoch
    os.makedirs("models", exist_ok=True)
    torch.save(net.state_dict(), "models/{}.pth".format(epoch + 1))
    scheduler.step()

    # Testing: evaluate on the test set without computing gradients or updating the weights
    net.eval()
    sum_loss = 0
    with torch.no_grad():
        for i, data in enumerate(test_data_loader):
            inputs, labels = data
            inputs, labels = inputs.to(device), labels.to(device)
            outputs = net(inputs)
            loss = loss_func(outputs, labels)
            sum_loss += loss.item()
            im = torchvision.utils.make_grid(inputs)
            writer.add_image("test image", im, global_step=step_n)
    test_loss = sum_loss * 1.0 / len(test_data_loader)
    writer.add_scalar("test loss", test_loss, global_step=epoch + 1)
    print('test_step:', i, 'loss is:', test_loss)

writer.close()
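With `StepLR(optimizer, step_size=5, gamma=0.9)` and one `scheduler.step()` per epoch, the learning rate after `e` completed epochs is `lr * gamma ** (e // step_size)`. A small plain-Python sketch of that rule (the helper name `step_lr` is hypothetical, not a PyTorch function):

```python
# Hypothetical helper mirroring the StepLR decay rule in closed form.
def step_lr(base_lr, epoch, step_size=5, gamma=0.9):
    return base_lr * gamma ** (epoch // step_size)

# Learning rate at a few epochs for lr=0.01, step_size=5, gamma=0.9.
for epoch in (0, 4, 5, 10, 199):
    print(epoch, round(step_lr(0.01, epoch), 6))
```

Over the 200 epochs configured in the script, the rate is therefore multiplied by 0.9 a total of 39 times and ends at roughly 1.6% of its initial value.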

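- Training results (log of the original run; its test phase looped over the 8334 training batches, hence test_step 8333)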

epoch is: 0

test_step: 8333 loss is: 2.3071022295024948

epoch is: 1

test_step: 8333 loss is: 2.226925660673022

epoch is: 2

test_step: 8333 loss is: 2.155742327815656

epoch is: 3

test_step: 8333 loss is: 2.11763518281998

epoch is: 4

test_step: 8333 loss is: 2.0863706607283063

- Chart results

6. Building the MobileNetv1 network

- Building the model

import torch

import torch.nn.functional as F

import torch.nn as nn

class mobilenet(nn.Module):
    def conv_dw(self, in_channel, out_channel, stride):
        return nn.Sequential(
            # Depthwise 3x3 convolution: groups=in_channel gives one filter per input channel
            nn.Conv2d(in_channels=in_channel, out_channels=in_channel, kernel_size=3, stride=stride, padding=1, groups=in_channel, bias=False),
            nn.BatchNorm2d(in_channel),
            nn.ReLU(),
            # Pointwise 1x1 convolution: mixes the channels and sets the output width
            nn.Conv2d(in_channels=in_channel, out_channels=out_channel, kernel_size=1, stride=1, padding=0, bias=False),
            nn.BatchNorm2d(out_channel),
            nn.ReLU(),
        )

    def __init__(self):
        super(mobilenet, self).__init__()
        self.conv1 = nn.Sequential(
            nn.Conv2d(in_channels=3, out_channels=32, kernel_size=3, stride=1, padding=1),
            nn.BatchNorm2d(32),
            nn.ReLU(),
        )
        self.conv_dw2 = self.conv_dw(32, 32, 1)
        self.conv_dw3 = self.conv_dw(32, 64, 2)
        self.conv_dw4 = self.conv_dw(64, 64, 1)
        self.conv_dw5 = self.conv_dw(64, 128, 2)
        self.conv_dw6 = self.conv_dw(128, 128, 1)
        self.conv_dw7 = self.conv_dw(128, 256, 2)
        self.conv_dw8 = self.conv_dw(256, 256, 1)
        self.conv_dw9 = self.conv_dw(256, 512, 2)
        self.fc = nn.Linear(512, 10)

    def forward(self, x):
        out = self.conv1(x)
        out = self.conv_dw2(out)
        out = self.conv_dw3(out)
        out = self.conv_dw4(out)
        out = self.conv_dw5(out)
        out = self.conv_dw6(out)
        out = self.conv_dw7(out)
        out = self.conv_dw8(out)
        out = self.conv_dw9(out)
        out = F.avg_pool2d(out, 2)
        out = out.view(-1, 512)
        out = self.fc(out)
        return out

def mobilenetv1_small():
    return mobilenet()

- Model training

import torch

import torch.nn as nn

import torchvision

from mobilenetv1 import mobilenetv1_small

from cifar10Data import train_data_loader, test_data_loader

import os

import tensorboardX

# Training device
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Number of training epochs
epoch_num = 200

# Learning rate
lr = 0.01

# Build the network
net = mobilenetv1_small().to(device)

# Loss function
loss_func = nn.CrossEntropyLoss()

# Optimizer
optimizer = torch.optim.Adam(net.parameters(), lr=lr)

# Learning-rate decay: multiply the learning rate by 0.9 every 5 epochs
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=0.9)

# TensorBoard log directory
os.makedirs("log2", exist_ok=True)

writer = tensorboardX.SummaryWriter("log2")

step_n = 0

for epoch in range(epoch_num):
    print("epoch is:", epoch)
    # Training
    net.train()
    for i, data in enumerate(train_data_loader):
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)
        outputs = net(inputs)
        loss = loss_func(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        writer.add_scalar("train loss", loss.item(), global_step=step_n)
        im = torchvision.utils.make_grid(inputs)
        writer.add_image("train image", im, global_step=step_n)
        step_n += 1
    # Save a checkpoint after every epoch
    os.makedirs("models", exist_ok=True)
    torch.save(net.state_dict(), "models/{}.pth".format(epoch + 1))
    scheduler.step()

    # Testing: evaluate on the test set without computing gradients or updating the weights
    net.eval()
    sum_loss = 0
    with torch.no_grad():
        for i, data in enumerate(test_data_loader):
            inputs, labels = data
            inputs, labels = inputs.to(device), labels.to(device)
            outputs = net(inputs)
            loss = loss_func(outputs, labels)
            sum_loss += loss.item()
            im = torchvision.utils.make_grid(inputs)
            writer.add_image("test image", im, global_step=step_n)
    test_loss = sum_loss * 1.0 / len(test_data_loader)
    writer.add_scalar("test loss", test_loss, global_step=epoch + 1)
    print('test_step:', i, 'loss is:', test_loss)

writer.close()
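The `conv_dw` block of the `mobilenet` class splits a standard 3x3 convolution into a depthwise 3x3 convolution (`groups=in_channel`) followed by a pointwise 1x1 convolution. The sketch below counts convolution weights only (no bias, no BatchNorm) to illustrate the saving; the function names are hypothetical.

```python
# Weight counts, ignoring bias and BatchNorm parameters.
def standard_conv_params(in_ch, out_ch, k=3):
    return in_ch * out_ch * k * k

def depthwise_separable_params(in_ch, out_ch, k=3):
    depthwise = in_ch * k * k   # one k x k filter per input channel
    pointwise = in_ch * out_ch  # 1x1 convolution mixing the channels
    return depthwise + pointwise

# Same channel counts as self.conv_dw9 = self.conv_dw(256, 512, 2)
print(standard_conv_params(256, 512))        # 1179648
print(depthwise_separable_params(256, 512))  # 133376
```

For this layer the separable form needs roughly one ninth of the weights of a standard 3x3 convolution.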

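- Training results (log of the original run; its test phase looped over the 8334 training batches, hence test_step 8333)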

epoch is: 0

test_step: 8333 loss is: 2.3168991455678207

epoch is: 1

test_step: 8333 loss is: 58.0813152680072

epoch is: 2

test_step: 8333 loss is: 239.99653513472458

epoch is: 3

test_step: 8333 loss is: 1036.717976929159

epoch is: 4

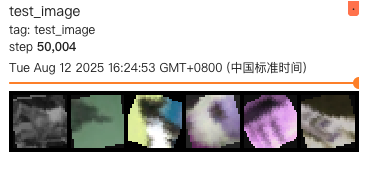

test_step: 8333 loss is: 110.44223031090523

- Chart results

7. Building the Inception network

- Building the model

import torch

import torch.nn as nn

import torch.nn.functional as F

def ConvBNRelu(in_channel, out_channel, kernel_size):
    # Convolution + BatchNorm + ReLU; padding=kernel_size//2 keeps the spatial size unchanged
    return nn.Sequential(
        nn.Conv2d(in_channel, out_channel, kernel_size, padding=kernel_size // 2),
        nn.BatchNorm2d(out_channel),
        nn.ReLU(inplace=True),
    )

class BaseInception(nn.Module):
    def __init__(self, in_channel, out_channel_list, reduce_channel_list):
        super(BaseInception, self).__init__()
        # Branch 1: 1x1 convolution
        self.branch1_conv = ConvBNRelu(in_channel, out_channel_list[0], 1)
        # Branch 2: 1x1 reduction followed by a 3x3 convolution
        self.branch2_conv1 = ConvBNRelu(in_channel, reduce_channel_list[0], 1)
        self.branch2_conv2 = ConvBNRelu(reduce_channel_list[0], out_channel_list[1], 3)
        # Branch 3: 1x1 reduction followed by a 5x5 convolution
        self.branch3_conv1 = ConvBNRelu(in_channel, reduce_channel_list[1], 1)
        self.branch3_conv2 = ConvBNRelu(reduce_channel_list[1], out_channel_list[2], 5)
        # Branch 4: 3x3 max pooling followed by a 3x3 convolution
        self.branch4_pool = nn.MaxPool2d(3, 1, padding=1)
        self.branch4_conv = ConvBNRelu(in_channel, out_channel_list[3], 3)

    def forward(self, x):
        out1 = self.branch1_conv(x)
        out2 = self.branch2_conv1(x)
        out2 = self.branch2_conv2(out2)
        out3 = self.branch3_conv1(x)
        out3 = self.branch3_conv2(out3)
        out4 = self.branch4_pool(x)
        out4 = self.branch4_conv(out4)
        # Concatenate the four branches along the channel dimension
        out = torch.cat([out1, out2, out3, out4], 1)
        return out

class InceptionNet(nn.Module):
    def __init__(self):
        super(InceptionNet, self).__init__()
        self.block1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=1),
            nn.BatchNorm2d(64),
            nn.ReLU(),
        )
        self.block2 = nn.Sequential(
            nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1),
            nn.BatchNorm2d(128),
            nn.ReLU(),
        )
        self.block3 = nn.Sequential(
            BaseInception(in_channel=128, out_channel_list=[64, 64, 64, 64], reduce_channel_list=[16, 16]),
            nn.MaxPool2d(3, stride=2, padding=1)
        )
        self.block4 = nn.Sequential(
            BaseInception(in_channel=256, out_channel_list=[96, 96, 96, 96], reduce_channel_list=[32, 32]),
            nn.MaxPool2d(3, stride=2, padding=1)
        )
        self.fc = nn.Linear(384, 10)

    def forward(self, x):
        out = self.block1(x)
        out = self.block2(out)
        out = self.block3(out)
        out = self.block4(out)
        out = F.avg_pool2d(out, 2)
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out

def InceptionNetSmall():
    return InceptionNet()

- Model training

import torch

import torch.nn as nn

import torchvision

from inception import InceptionNetSmall

from cifar10Data import train_data_loader, test_data_loader

import os

import tensorboardX

# Training device
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Number of training epochs
epoch_num = 200

# Learning rate
lr = 0.01

# Build the network
net = InceptionNetSmall().to(device)

# Loss function
loss_func = nn.CrossEntropyLoss()

# Optimizer
optimizer = torch.optim.Adam(net.parameters(), lr=lr)

# Learning-rate decay: multiply the learning rate by 0.9 every 5 epochs
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=5, gamma=0.9)

# TensorBoard log directory
os.makedirs("log3", exist_ok=True)

writer = tensorboardX.SummaryWriter("log3")

step_n = 0

for epoch in range(epoch_num):
    print("epoch is:", epoch)
    # Training
    net.train()
    for i, data in enumerate(train_data_loader):
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)
        outputs = net(inputs)
        loss = loss_func(outputs, labels)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        writer.add_scalar("train loss", loss.item(), global_step=step_n)
        im = torchvision.utils.make_grid(inputs)
        writer.add_image("train image", im, global_step=step_n)
        step_n += 1
    # Save a checkpoint after every epoch
    os.makedirs("models", exist_ok=True)
    torch.save(net.state_dict(), "models/{}.pth".format(epoch + 1))
    scheduler.step()

    # Testing: evaluate on the test set without computing gradients or updating the weights
    net.eval()
    sum_loss = 0
    with torch.no_grad():
        for i, data in enumerate(test_data_loader):
            inputs, labels = data
            inputs, labels = inputs.to(device), labels.to(device)
            outputs = net(inputs)
            loss = loss_func(outputs, labels)
            sum_loss += loss.item()
            im = torchvision.utils.make_grid(inputs)
            writer.add_image("test image", im, global_step=step_n)
    test_loss = sum_loss * 1.0 / len(test_data_loader)
    writer.add_scalar("test loss", test_loss, global_step=epoch + 1)
    print('test_step:', i, 'loss is:', test_loss)

writer.close()
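In `BaseInception` the four branches are concatenated along the channel dimension, so a block outputs the sum of its `out_channel_list`. Together with the spatial downsampling, this appears to explain why `block4` takes `in_channel=256` and why the last layer is `nn.Linear(384, 10)`. A plain-Python check with hypothetical helper names:

```python
# Channels produced by torch.cat([...], 1) over the four branches.
def inception_out_channels(out_channel_list):
    return sum(out_channel_list)

# Output size of a convolution or pooling layer along one spatial dimension.
def out_size(size, kernel, stride, padding=0):
    return (size + 2 * padding - kernel) // stride + 1

def inception_fc_inputs(size):
    size = out_size(size, 7, 2, 1)  # block1 convolution
    size = out_size(size, 3, 2, 1)  # block2 convolution
    size = out_size(size, 3, 2, 1)  # max pooling that closes block3
    size = out_size(size, 3, 2, 1)  # max pooling that closes block4
    size = out_size(size, 2, 2)     # F.avg_pool2d(out, 2)
    return inception_out_channels([96, 96, 96, 96]) * size * size

print(inception_out_channels([64, 64, 64, 64]))  # 256, the in_channel of block4
print(inception_fc_inputs(28))                   # 384 for the 28x28 training crops
print(inception_fc_inputs(32))                   # 384 for the 32x32 test images
```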

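- Training results (log of the original run; its test phase looped over the 8334 training batches, hence test_step 8333)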

epoch is: 0

test_step: 8333 loss is: 2.1641721504324485

epoch is: 1

test_step: 8333 loss is: 2.106510695047678

epoch is: 2

test_step: 8333 loss is: 2.0794332600881478

epoch is: 3

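test_step: 8333 loss is: 2.0550003183926497

- Chart results

8. The ResNet18 model provided by PyTorch

- PyTorch ships many ready-made models; they all live in torchvision's models module

- The training code is the same as before: import the model and replace the assignment to net. The results also differ little from the earlier ones, so only the model code is given here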

import torch.nn as nn

from torchvision import models

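class resnet18(nn.Module):
    def __init__(self):
        super(resnet18, self).__init__()
        # Load torchvision's ResNet18 with pretrained weights
        # (recent torchvision releases prefer the weights= argument over pretrained=True)
        self.model = models.resnet18(pretrained=True)
        # Replace the final fully connected layer with a 10-class head
        self.num_features = self.model.fc.in_features
        self.model.fc = nn.Linear(self.num_features, 10)

    def forward(self, x):
        out = self.model(x)
        return out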

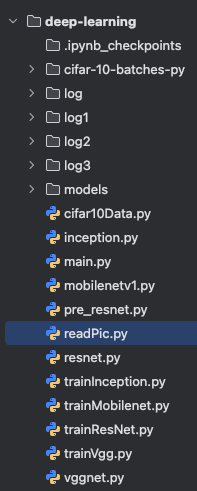

def pytorch_resnet18():
    return resnet18()

The file structure of all the code is:

These notes summarize a course I followed on Bilibili: "2025最新整合!公認B站講解最強【PyTorch】入門到進階教程,從環境配置到算法原理再到代碼實戰逐一解讀,比自學效果強得多!".

The course goes on to cover detection and segmentation, but those parts walk through models trained by other people, which makes them hard to take notes on. Have a look yourself if you need them.

So that wraps up my PyTorch study notes!
