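Contents

Interpreting the Result Metrics

I. Why these metrics?

II. Definition and interpretation of each metric

1. Accuracy

2. Loss

3. Precision

4. Recall

III. What do these metrics tell us?

IV. Where to look to optimize the model further

How the Metrics Divide the Work in Classification Tasks

1. The key point first: why not rely on a single metric?

2. What makes each metric irreplaceable

(1) Accuracy: how often the model gets it right overall

(2) Loss: whether the model "knows what it is doing"

(3) Precision: when it says "yes", how often that is true

(4) Recall: how rarely a real case slips through

3. Summary: how the four metrics divide the work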

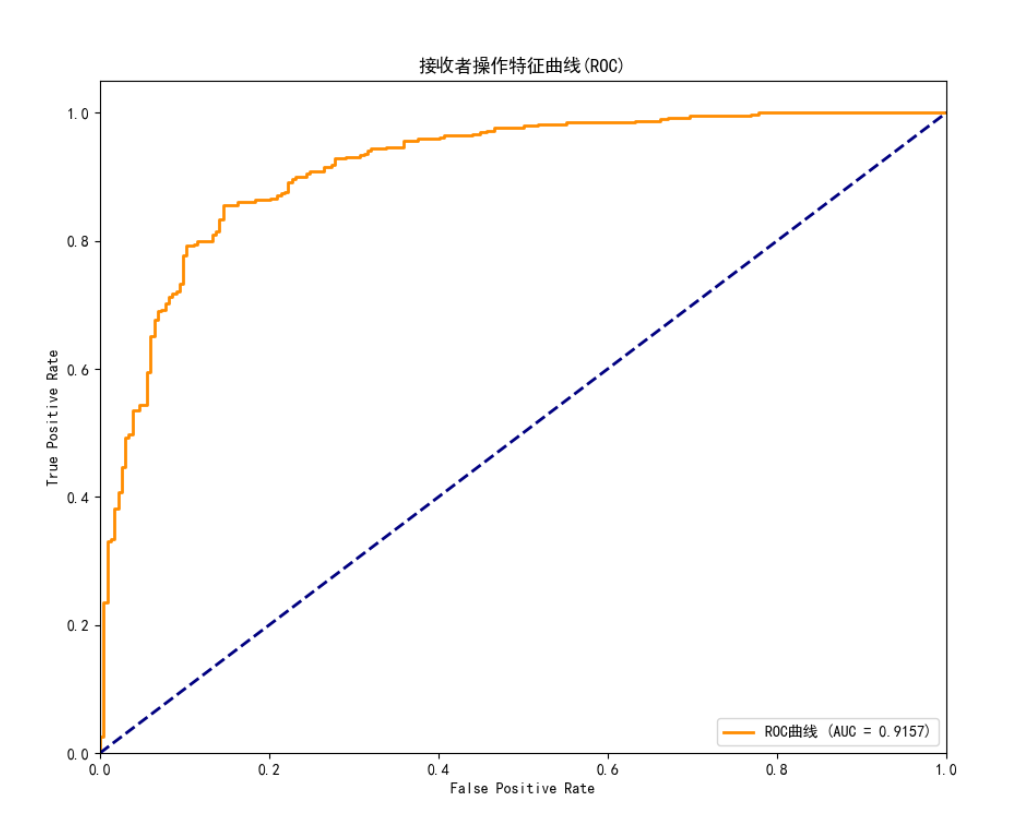

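ROC Curve and Model Performance Analysis

I. Core concepts

II. Reading the elements of the figure

III. What does this figure tell us?

IV. Relationship to the earlier metrics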

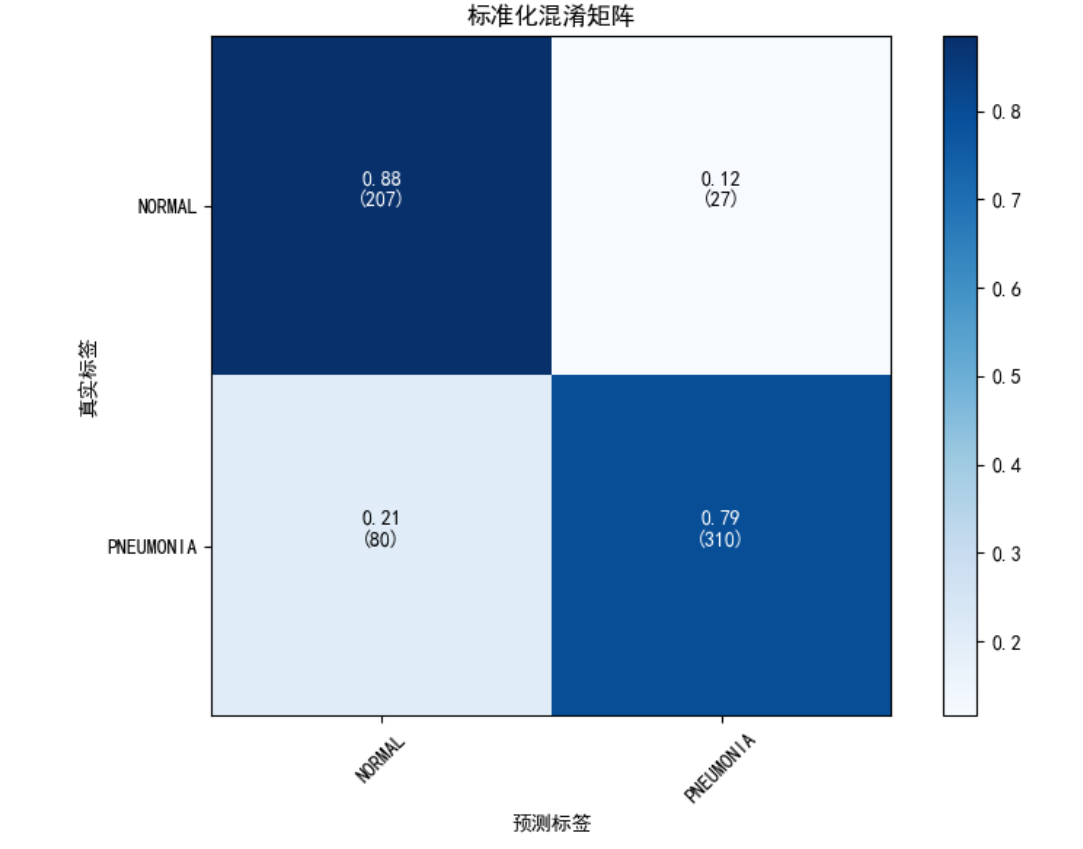

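Normalized Confusion Matrix Analysis

I. Core concepts

II. Reading the elements of the figure

III. Reading the specific values

IV. What does this figure tell us?

V. Relationship to the earlier metrics

F1-score: The Model's Composite Score

Understanding the Pneumonia Diagnosis Model Evaluation from the Console Output

I. Data preparation

II. Model construction

III. Training

IV. Test results

V. Summary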

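Interpreting the Result Metrics

"Model Training Metrics Explained: Pneumonia Diagnosis"

These four metrics (accuracy, loss, precision, recall) are the key measures of model performance. Below, each one is explained in turn: its definition, what it is used for, and how to read it.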

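I. Why these metrics?

These are the most widely used evaluation criteria in machine learning, especially in settings such as medical diagnosis:

- Accuracy: the most intuitive metric; it reflects the model's overall ability to judge correctly

- Loss: reflects the gap between the model's predictions and the true values, and guides the training process

- Precision: the proportion of samples predicted positive that are actually positive; suited to cases where avoiding misdiagnosis matters

- Recall: the proportion of actual positive samples that are correctly identified; suited to cases where avoiding missed diagnoses matters

In pneumonia diagnosis we need both to avoid misdiagnosis (precision) and to avoid missed diagnosis (recall), so together these four metrics give a well-rounded picture of model performance.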

II. Definition and interpretation of each metric

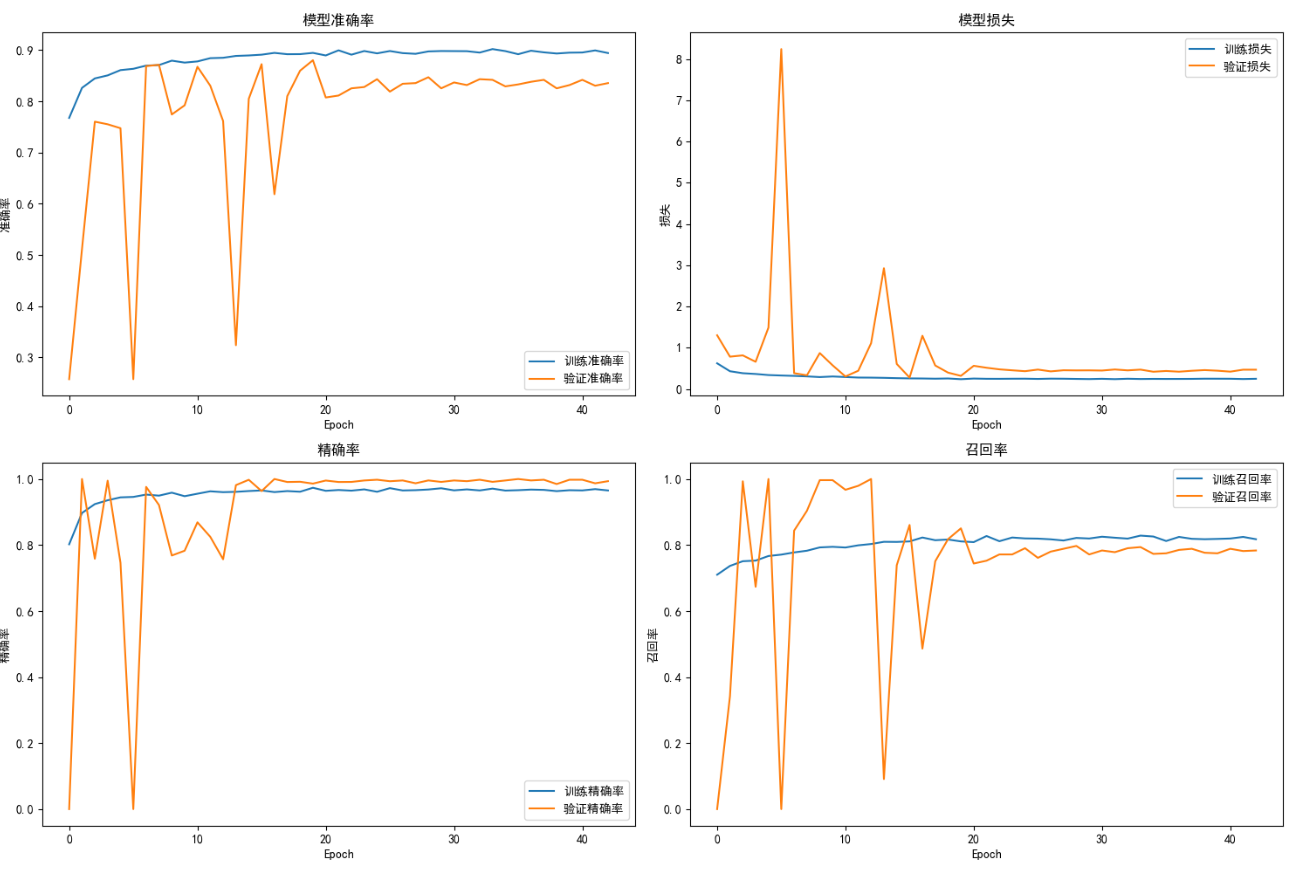

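1. Accuracy

Definition: the proportion of all samples that the model predicts correctly

Formula:

Accuracy = \frac{\text{correctly predicted samples}}{\text{all samples}} = \frac{TP + TN}{TP + TN + FP + FN}

Interpretation:

- In the top-left subplot, the blue line (training accuracy) eventually settles above 0.9, meaning the model classifies more than 90% of the training set correctly

- The orange line (validation accuracy) is also close to 0.9, suggesting the model generalizes reasonably well to new data

- The gap between the two lines is small, so there is no obvious overfitting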

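2. Loss

Definition: the error between the model's predictions and the true values; the smaller it is, the more accurate the predictions

(The exact calculation depends on the loss function, e.g., cross-entropy loss)

In plain terms:

Imagine you are a teacher and give a student 10 math problems.

- On the first attempt the student gets 7 wrong → large loss (7/10)

- After reviewing, the student gets only 2 wrong → smaller loss (2/10)

- On the third attempt the student gets everything right → loss is 0

Example:

Suppose the model predicts "Zhang San has an 80% chance of pneumonia", but Zhang San does not actually have pneumonia:

- The gap between the prediction (80%) and the true value (0%) is the loss

- With mean squared error: (0.8 - 0)² = 0.64 → loss is 0.64

- With cross-entropy loss: -ln(1 - 0.8) = -ln(0.2) ≈ 1.61 → a larger loss, because cross-entropy punishes a confidently wrong prediction heavily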
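To make the two numbers above concrete, here is a minimal Python sketch that recomputes them. The helper names `squared_error` and `binary_cross_entropy` are illustrative and not taken from the original training script; label 1 means pneumonia and 0 means healthy.

```python
import math

def squared_error(y_true: float, p: float) -> float:
    """Squared error between the label (0 or 1) and the predicted probability p."""
    return (p - y_true) ** 2

def binary_cross_entropy(y_true: float, p: float) -> float:
    """Binary cross-entropy for one prediction; p is P(pneumonia), assumed 0 < p < 1."""
    return -(y_true * math.log(p) + (1 - y_true) * math.log(1 - p))

# Zhang San: the model says 80% pneumonia, but the true label is 0 (healthy).
mse = squared_error(0, 0.8)
bce = binary_cross_entropy(0, 0.8)
print(f"MSE = {mse:.2f}, cross-entropy = {bce:.2f}")  # MSE = 0.64, cross-entropy = 1.61
```

Both functions assume 0 < p < 1; frameworks such as Keras typically clip probabilities slightly away from 0 and 1 before taking the logarithm.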

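Interpretation:

- In the top-right subplot, the blue line (training loss) keeps decreasing and then levels off, so the prediction error on the training set is shrinking

- The orange line (validation loss) fluctuates considerably but trends downward overall, so the error on the validation set is also decreasing

- Both training and validation loss end up at fairly low levels, indicating that the model has converged well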

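3. Precision

Definition: among samples predicted as pneumonia, the proportion that actually are pneumonia

Formula:

Precision = \frac{\text{predicted pneumonia that really is pneumonia}}{\text{all predicted pneumonia}} = \frac{TP}{TP + FP}

Interpretation:

- In the bottom-left subplot, both lines are close to 1.0, meaning that most samples the model predicts as pneumonia really are pneumonia

- This matters in medical diagnosis: when the model says "pneumonia", it is very likely right (which reduces unnecessary treatment)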

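4. Recall

Definition: among samples that actually are pneumonia, the proportion the model identifies correctly

Formula:

Recall = \frac{\text{pneumonia cases correctly identified}}{\text{all actual pneumonia cases}} = \frac{TP}{TP + FN}

Interpretation:

- In the bottom-right subplot, both lines sit around 0.8-0.9, meaning the model catches most pneumonia cases

- This is critical in medical diagnosis: missed diagnoses (pneumonia classified as normal) should be kept to a minimum

- Although recall fluctuates, it eventually stabilizes at a fairly high level, so the model recognizes pneumonia reasonably well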

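III. What do these metrics tell us?

- The model performs well overall: accuracy, precision, and recall are all high and the loss is low, so the model neither misses too many pneumonia cases nor misdiagnoses too many normal samples

- Generalization is reasonable: the gap between training and validation metrics is small, so the model is not simply memorizing the training data

- There is still room to improve: the validation metrics fluctuate a lot, which may mean the model is unstable on certain samples or that the training process is noisy

IV. Where to look to optimize the model further

- The cause of the fluctuations in the validation metrics (are some samples particularly hard to recognize?)

- The trade-off between precision and recall (is it worth sacrificing a little precision to gain recall?)

- Whether the loss function is a good fit (should it be adjusted to focus more on certain samples?)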

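How the Metrics Divide the Work in Classification Tasks

These four metrics (accuracy, loss, precision, recall) were chosen mainly because they complement one another from different angles and together reflect the model's true performance. In classification tasks (such as the pneumonia diagnosis above), a single metric can easily be misleading, and combining these four avoids that.

1. The key point first: why not rely on a single metric?

An extreme example:

Suppose that among 100 patients in a hospital, only 1 has pneumonia and 99 are healthy.

If the model is lazy and predicts "healthy" no matter what, then:

- Its accuracy reaches 99% (all 99 healthy people are classified correctly), yet the model is useless: it misses the only patient.

This shows that a single metric (for example, accuracy alone) can hide the model's key problems. Combining these four metrics questions the model from different angles, so its real ability has nowhere to hide.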
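The hypothetical 1-in-100 example can be checked in a few lines. This is a sketch with made-up labels, not data from the pneumonia set: a model that always answers "healthy" scores 99% accuracy and 0% recall.

```python
def accuracy_and_recall(y_true, y_pred):
    """Accuracy and recall for binary labels (1 = pneumonia, 0 = healthy)."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    positives = sum(y_true)
    accuracy = correct / len(y_true)
    recall = tp / positives if positives else 0.0
    return accuracy, recall

# 100 patients: 1 with pneumonia, 99 healthy (the hypothetical example above).
y_true = [1] + [0] * 99
# The "lazy" model predicts healthy for everyone.
y_pred = [0] * 100

acc, rec = accuracy_and_recall(y_true, y_pred)
print(f"accuracy = {acc:.0%}, recall = {rec:.0%}")  # accuracy = 99%, recall = 0%
```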

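2. What makes each metric irreplaceable

(1) Accuracy: how often the model gets it right overall

- Role: quickly gauges how reliable the model is overall. For example, 90% accuracy means that on average 90 of every 100 predictions are correct.

- Why it is needed: it is the most intuitive entry-level metric and tells you right away roughly where the model stands (for example, 30% accuracy on a two-class problem is worse than flipping a coin, while 90% may be decent).

- Limitation: when the positive and negative classes are highly imbalanced (like the 1 patient vs. 99 healthy people above), accuracy becomes distorted, so other metrics are needed.

(2) Loss: whether the model "knows what it is doing"

- Role: reflects the gap between prediction and truth in finer detail than accuracy. For the same wrong prediction, a model that says "90% probability of disease" for a healthy person is more wrong (larger loss) than one that says "51%".

- Why it is needed:

- It steers training: a falling loss means the model is improving; a flat loss means the model has stopped learning.

- It reflects the model's confidence: if two models both have 80% accuracy, but one has a small loss (even its mistakes are close to the truth) and the other a large loss (its mistakes are confidently wrong), the former is clearly more reliable.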
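A small sketch, using a made-up batch of five predictions, shows how two models with identical accuracy can have very different cross-entropy losses. Model A is wrong on the healthy person by a narrow margin (0.55), model B is confidently wrong (0.95); everything else is identical. The helpers `mean_bce` and `accuracy` are illustrative.

```python
import math

def mean_bce(y_true, probs):
    """Average binary cross-entropy over a batch (probs are P(pneumonia))."""
    return sum(-(y * math.log(p) + (1 - y) * math.log(1 - p))
               for y, p in zip(y_true, probs)) / len(y_true)

def accuracy(y_true, probs, threshold=0.5):
    """Share of samples whose thresholded prediction matches the label."""
    return sum(int(p >= threshold) == y for y, p in zip(y_true, probs)) / len(y_true)

# Hypothetical batch: four pneumonia cases, then one healthy person (last).
y_true = [1, 1, 1, 1, 0]
model_a = [0.9, 0.8, 0.7, 0.6, 0.55]  # wrong on the last sample, but only barely
model_b = [0.9, 0.8, 0.7, 0.6, 0.95]  # wrong on the last sample, and very confident

for name, probs in [("A", model_a), ("B", model_b)]:
    print(name, f"accuracy = {accuracy(y_true, probs):.0%}",
          f"loss = {mean_bce(y_true, probs):.3f}")
# A accuracy = 80% loss = 0.399
# B accuracy = 80% loss = 0.838
```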

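(3) Precision: when it says "yes", how often that is true

- Role: when the model says "pneumonia", how likely is it really pneumonia? (For example, 95% precision means that of 100 people predicted to have pneumonia, 95 really do.)

- Why it is needed: it guards against misdiagnosis. In medicine, low precision means many healthy people are labeled as patients, leading to overtreatment (wasted resources and anxious patients).

(4) Recall: how rarely a real case slips through

- Role: of all real pneumonia patients, how many does the model catch? (For example, 90% recall means that of 100 real patients, 90 are correctly identified.)

- Why it is needed: it guards against missed diagnoses. In medicine a missed diagnosis is more dangerous than a misdiagnosis: if recall is low, many patients are treated as healthy and their treatment is delayed.

3. Summary: how the four metrics divide the work

- Accuracy: gives the model an overall score to show whether it is roughly reliable;

- Loss: tracks the learning process and how fine-grained the predictions are, guiding training;

- Precision: makes sure we "don't wrong the innocent" (fewer misdiagnoses);

- Recall: makes sure we "don't let the culprit go" (fewer missed diagnoses).

Together, these four metrics reflect the model's overall performance and expose its weaknesses in critical scenarios (such as misdiagnosis and missed diagnosis in medicine), which is why they are the classic evaluation combination for classification tasks.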

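ROC Curve and Model Performance Analysis

This figure is an ROC curve (receiver operating characteristic curve), an important tool for evaluating binary classifiers. Here is a plain-language explanation:

I. Core concepts

- Horizontal axis (False Positive Rate, FPR):

Among actual negative samples (e.g., "healthy people"), the proportion the model wrongly predicts as positive (e.g., "pneumonia patients").

Formula:

FPR = \frac{\text{healthy people misdiagnosed}}{\text{all healthy people}} = \frac{FP}{FP + TN}

(The lower the FPR, the less likely the model is to "wrong the innocent")

- Vertical axis (True Positive Rate, TPR):

Among actual positive samples (e.g., "pneumonia patients"), the proportion the model correctly identifies (this is the same as recall).

Formula:

TPR = \frac{\text{pneumonia patients correctly identified}}{\text{all pneumonia patients}} = \frac{TP}{TP + FN}

(The higher the TPR, the less likely the model is to "miss a patient")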

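II. Reading the elements of the figure

- Orange curve (ROC curve):

The combinations of FPR and TPR the model achieves at different decision thresholds.

- The closer the curve is to the top-left corner, the higher the TPR the model reaches at a given FPR (the better it is)

- In the figure the curve rises quickly and approaches the top, indicating strong performance

- Blue dashed line (diagonal):

Represents a model that guesses at random (like flipping a coin).

- If the ROC curve falls below the dashed line, the model is worse than random guessing

- In the figure the curve is clearly above the dashed line, so the model is far better than random guessing

- AUC (area under the curve):

The figure shows AUC = 0.9157, the area under the ROC curve.

- The closer the AUC is to 1, the better the model

- 0.9157 is a very good result, indicating strong discriminative ability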
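To show how the curve and the AUC are produced, here is a plain-Python sketch on a hypothetical four-sample toy set (not the pneumonia data). It sweeps the threshold from high to low, collects (FPR, TPR) points, integrates them with the trapezoidal rule, and checks the result against the equivalent ranking view of AUC: the probability that a randomly chosen positive gets a higher score than a randomly chosen negative. A library routine such as scikit-learn's `roc_curve` / `roc_auc_score` would normally do this; the original script's own code is not shown.

```python
def roc_points(labels, scores):
    """(FPR, TPR) pairs obtained by sweeping the threshold from high to low."""
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= t)
        fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= t)
        points.append((fp / neg, tp / pos))
    return points

def auc_trapezoid(points):
    """Area under the ROC polyline using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

def auc_rank(labels, scores):
    """Probability that a random positive outscores a random negative (ties count half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical toy data: 1 = pneumonia, 0 = healthy; scores are P(pneumonia).
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

pts = roc_points(labels, scores)
print(pts)  # [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(auc_trapezoid(pts), auc_rank(labels, scores))  # 0.75 0.75
```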

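III. What does this figure tell us?

- Strong discriminative ability:

AUC = 0.9157 > 0.9, so the model separates "pneumonia patients" from "healthy people" well

- Relatively few missed diagnoses:

The curve climbs quickly toward 1.0: at a fairly low FPR (e.g., < 0.2) the TPR is already high (> 0.8), so the model catches most pneumonia patients

- Misdiagnosis is controllable, but not free:

At the operating point used for the test-set confusion matrix, TPR = 310/390 ≈ 0.79 and FPR = 27/234 ≈ 0.12. Pushing TPR up to 0.9 requires a lower threshold, and lowering the threshold can only keep FPR the same or raise it, so catching 90% of pneumonia patients means misdiagnosing at least about 12% of healthy people

IV. Relationship to the earlier metrics

- Recall:

TPR is recall, so the vertical axis of the ROC curve directly reflects the model's recall

- Precision:

The ROC curve does not show precision. A high AUC means the model ranks pneumonia patients above healthy people well, but precision at any given threshold also depends on how common pneumonia is in the data, so it has to be checked separately (for example, with a precision-recall curve)

- Accuracy:

AUC and accuracy both summarize model performance, but AUC measures the ability to separate the two classes across all thresholds, while accuracy measures the overall correct rate at a single threshold

This ROC curve and AUC value show that:

- The model has a strong ability to distinguish pneumonia patients from healthy people

- The model can keep misdiagnoses in check while reducing missed diagnoses

- Overall performance is at an excellent level (AUC > 0.9)

If you want to optimize further, look at:

- Whether to trade off between "fewer missed diagnoses" and "fewer misdiagnoses"

- Precision at different thresholds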

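Normalized Confusion Matrix Analysis

This figure is a normalized confusion matrix, a core tool for evaluating classification models. Here is a plain-language explanation:

I. Core concepts

A confusion matrix is a table showing how the model's predictions line up with the true labels. It has four key parts:

- True Positive (TP): actually pneumonia, and the model also predicts pneumonia

- False Positive (FP): actually normal, but the model wrongly predicts pneumonia

- True Negative (TN): actually normal, and the model also predicts normal

- False Negative (FN): actually pneumonia, but the model wrongly predicts normal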

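II. Reading the elements of the figure

- Rows (true labels):

- NORMAL: samples that are actually normal

- PNEUMONIA: samples that are actually pneumonia

- Columns (predicted labels):

- NORMAL: samples the model predicts as normal

- PNEUMONIA: samples the model predicts as pneumonia

- Color intensity:

- Darker blue: a proportion closer to 1

- Lighter blue: a proportion closer to 0

- Ideally the diagonal cells (correct predictions) are dark and the off-diagonal cells (errors) are light; the color bar shows how values map to colors

- Values:

- Each cell shows two numbers: the normalized proportion (e.g., 0.88) and the raw count (e.g., 207)

- Normalized proportion = count in the cell / total number of samples in that row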
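Row normalization is a one-liner. A sketch using the raw counts from this document's test set, [[207, 27], [80, 310]]; scikit-learn's `confusion_matrix(..., normalize="true")` performs the same row-wise division, though the original script's plotting code is not shown.

```python
# Raw counts from the test set: rows = true label, columns = predicted label.
counts = [[207, 27],   # true NORMAL:    predicted NORMAL, predicted PNEUMONIA
          [80, 310]]   # true PNEUMONIA: predicted NORMAL, predicted PNEUMONIA

def normalize_rows(matrix):
    """Divide each cell by its row total, so each row sums to 1."""
    return [[cell / sum(row) for cell in row] for row in matrix]

for label, row in zip(["NORMAL", "PNEUMONIA"], normalize_rows(counts)):
    print(label, [round(v, 2) for v in row])
# NORMAL [0.88, 0.12]
# PNEUMONIA [0.21, 0.79]
```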

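III. Reading the specific values

- Samples that are actually NORMAL (first row):

- Predicted NORMAL: 0.88 (207 samples) → the model correctly identifies 88% of normal samples

- Predicted PNEUMONIA: 0.12 (27 samples) → the model wrongly predicts 12% of normal samples as pneumonia

- Samples that are actually PNEUMONIA (second row):

- Predicted NORMAL: 0.21 (80 samples) → the model wrongly predicts 21% of pneumonia samples as normal

- Predicted PNEUMONIA: 0.79 (310 samples) → the model correctly identifies 79% of pneumonia samples

IV. What does this figure tell us?

- Strong at recognizing normal samples:

- Correctly identifying 88% of normal samples (the recall of the normal class, also called specificity) shows the model does well on normal samples

- Only 12% of normal samples are misdiagnosed as pneumonia

- Reasonable at recognizing pneumonia, with room to improve:

- 79% of pneumonia samples are correctly identified, so the model has a decent ability to detect pneumonia

- But 21% of pneumonia samples are still missed (predicted as normal)

- Overall:

- The model does better on normal samples than on pneumonia samples

- The missed-diagnosis rate (21%) is higher than the misdiagnosis rate (12%)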

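V. Relationship to the earlier metrics

- Accuracy:

- Accuracy = (TP+TN)/(TP+TN+FP+FN) = (310+207)/(310+207+27+80) = 517/624 ≈ 0.83

- This is consistent with the accuracy seen earlier (about 0.8-0.9)

- Precision:

- Precision (pneumonia) = TP/(TP+FP) = 310/(310+27) ≈ 0.92

- So 92% of the samples the model predicts as pneumonia really are pneumonia

- Recall:

- Recall (pneumonia) = TP/(TP+FN) = 310/(310+80) ≈ 0.79

- This matches the 0.79 in the second row of the confusion matrix directly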
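The same arithmetic as a small Python sketch, using the counts from the test-set confusion matrix. The `BinaryCounts` class is illustrative only; its outputs match the test-set values printed in the console (accuracy 0.8285, precision 0.9199, recall 0.7949, F1 0.8528).

```python
from dataclasses import dataclass

@dataclass
class BinaryCounts:
    tp: int  # pneumonia predicted as pneumonia
    fp: int  # normal predicted as pneumonia
    tn: int  # normal predicted as normal
    fn: int  # pneumonia predicted as normal

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.tn + self.fp + self.fn)

    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)

    def recall(self) -> float:
        return self.tp / (self.tp + self.fn)

    def f1(self) -> float:
        p, r = self.precision(), self.recall()
        return 2 * p * r / (p + r)

# Counts read off the test-set confusion matrix [[207, 27], [80, 310]].
c = BinaryCounts(tp=310, fp=27, tn=207, fn=80)
print(f"accuracy={c.accuracy():.4f} precision={c.precision():.4f} "
      f"recall={c.recall():.4f} f1={c.f1():.4f}")
# accuracy=0.8285 precision=0.9199 recall=0.7949 f1=0.8528
```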

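This confusion matrix shows that:

- The model identifies normal samples well (88%)

- Its identification of pneumonia samples is reasonable (79%), but the missed-diagnosis rate is on the high side (21%)

- The misdiagnosis rate is relatively low (12%)

If you want to optimize further, look at:

- How to reduce missed pneumonia diagnoses (false negatives)

- Whether to trade off between misdiagnosis and missed diagnosis

- Adjusting the model's decision threshold to match clinical needs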

D:\ProgramData\anaconda3\envs\tf_env\python.exe D:\workspace_py\deeplean\medical_image_classification_fixed2.py

Found 4448 images belonging to 2 classes.

Found 784 images belonging to 2 classes.

Found 624 images belonging to 2 classes.

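Original sample distribution: normal=1147, pneumonia=3301

Distribution after oversampling: normal=3301, pneumonia=3301

Class weights: normal=1.94, pneumonia=0.67

Using local weights file: models/resnet50_weights_tf_dim_ordering_tf_kernels_notop.h5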

2025-07-25 14:47:12.955043: I tensorflow/core/platform/cpu_feature_guard.cc:182] This TensorFlow binary is optimized to use available CPU instructions in performance-critical operations.

To enable the following instructions: SSE SSE2 SSE3 SSE4.1 SSE4.2 AVX AVX2 FMA, in other operations, rebuild TensorFlow with the appropriate compiler flags.

Model: "sequential"

_________________________________________________________________

| Layer (type) | Output Shape | Param # |
|---|---|---|
| resnet50 (Functional) | (None, 7, 7, 2048) | 23587712 |
| global_average_pooling2d (GlobalAveragePooling2D) | (None, 2048) | 0 |
| dense (Dense) | (None, 512) | 1049088 |
| batch_normalization (BatchNormalization) | (None, 512) | 2048 |
| dropout (Dropout) | (None, 512) | 0 |
| dense_1 (Dense) | (None, 256) | 131328 |
| batch_normalization_1 (BatchNormalization) | (None, 256) | 1024 |
| dropout_1 (Dropout) | (None, 256) | 0 |
| dense_2 (Dense) | (None, 1) | 257 |

=================================================================

Total params: 24771457 (94.50 MB)

Trainable params: 1182209 (4.51 MB)

Non-trainable params: 23589248 (89.99 MB)

_________________________________________________________________

Epoch 1/50

207/207 [==============================] - ETA: 0s - loss: 0.6233 - accuracy: 0.7675 - precision: 0.8022 - recall: 0.7101 - auc: 0.8398

Epoch 1: val_auc improved from -inf to 0.87968, saving model to best_pneumonia_model.h5

207/207 [==============================] - 245s 1s/step - loss: 0.6233 - accuracy: 0.7675 - precision: 0.8022 - recall: 0.7101 - auc: 0.8398 - val_loss: 1.3033 - val_accuracy: 0.2577 - val_precision: 0.0000e+00 - val_recall: 0.0000e+00 - val_auc: 0.8797 - lr: 1.0000e-04

Epoch 2/50

207/207 [==============================] - ETA: 0s - loss: 0.4313 - accuracy: 0.8261 - precision: 0.8971 - recall: 0.7367 - auc: 0.9035

Epoch 2: val_auc improved from 0.87968 to 0.92927, saving model to best_pneumonia_model.h5

207/207 [==============================] - 257s 1s/step - loss: 0.4313 - accuracy: 0.8261 - precision: 0.8971 - recall: 0.7367 - auc: 0.9035 - val_loss: 0.7843 - val_accuracy: 0.5115 - val_precision: 1.0000 - val_recall: 0.3419 - val_auc: 0.9293 - lr: 1.0000e-04

Epoch 3/50

207/207 [==============================] - ETA: 0s - loss: 0.3833 - accuracy: 0.8446 - precision: 0.9240 - recall: 0.7510 - auc: 0.9174

Epoch 3: val_auc did not improve from 0.92927

207/207 [==============================] - 242s 1s/step - loss: 0.3833 - accuracy: 0.8446 - precision: 0.9240 - recall: 0.7510 - auc: 0.9174 - val_loss: 0.8157 - val_accuracy: 0.7602 - val_precision: 0.7585 - val_recall: 0.9931 - val_auc: 0.8778 - lr: 1.0000e-04

Epoch 4/50

207/207 [==============================] - ETA: 0s - loss: 0.3648 - accuracy: 0.8505 - precision: 0.9356 - recall: 0.7528 - auc: 0.9254

Epoch 4: val_auc improved from 0.92927 to 0.94531, saving model to best_pneumonia_model.h5

207/207 [==============================] - 242s 1s/step - loss: 0.3648 - accuracy: 0.8505 - precision: 0.9356 - recall: 0.7528 - auc: 0.9254 - val_loss: 0.6605 - val_accuracy: 0.7551 - val_precision: 0.9949 - val_recall: 0.6735 - val_auc: 0.9453 - lr: 1.0000e-04

Epoch 5/50

207/207 [==============================] - ETA: 0s - loss: 0.3387 - accuracy: 0.8608 - precision: 0.9444 - recall: 0.7667 - auc: 0.9325

Epoch 5: val_auc did not improve from 0.94531

207/207 [==============================] - 239s 1s/step - loss: 0.3387 - accuracy: 0.8608 - precision: 0.9444 - recall: 0.7667 - auc: 0.9325 - val_loss: 1.4892 - val_accuracy: 0.7474 - val_precision: 0.7462 - val_recall: 1.0000 - val_auc: 0.7024 - lr: 1.0000e-04

Epoch 6/50

207/207 [==============================] - ETA: 0s - loss: 0.3275 - accuracy: 0.8634 - precision: 0.9457 - recall: 0.7710 - auc: 0.9403

Epoch 6: val_auc did not improve from 0.94531

207/207 [==============================] - 240s 1s/step - loss: 0.3275 - accuracy: 0.8634 - precision: 0.9457 - recall: 0.7710 - auc: 0.9403 - val_loss: 8.2440 - val_accuracy: 0.2577 - val_precision: 0.0000e+00 - val_recall: 0.0000e+00 - val_auc: 0.5095 - lr: 1.0000e-04

Epoch 7/50

207/207 [==============================] - ETA: 0s - loss: 0.3184 - accuracy: 0.8696 - precision: 0.9529 - recall: 0.7776 - auc: 0.9406

Epoch 7: val_auc improved from 0.94531 to 0.94572, saving model to best_pneumonia_model.h5

207/207 [==============================] - 240s 1s/step - loss: 0.3184 - accuracy: 0.8696 - precision: 0.9529 - recall: 0.7776 - auc: 0.9406 - val_loss: 0.3840 - val_accuracy: 0.8686 - val_precision: 0.9761 - val_recall: 0.8436 - val_auc: 0.9457 - lr: 1.0000e-04

Epoch 8/50

207/207 [==============================] - ETA: 0s - loss: 0.3061 - accuracy: 0.8706 - precision: 0.9497 - recall: 0.7828 - auc: 0.9458

Epoch 8: val_auc did not improve from 0.94572

207/207 [==============================] - 238s 1s/step - loss: 0.3061 - accuracy: 0.8706 - precision: 0.9497 - recall: 0.7828 - auc: 0.9458 - val_loss: 0.3327 - val_accuracy: 0.8712 - val_precision: 0.9212 - val_recall: 0.9038 - val_auc: 0.9368 - lr: 1.0000e-04

Epoch 9/50

207/207 [==============================] - ETA: 0s - loss: 0.2896 - accuracy: 0.8793 - precision: 0.9586 - recall: 0.7928 - auc: 0.9498

Epoch 9: val_auc did not improve from 0.94572

207/207 [==============================] - 236s 1s/step - loss: 0.2896 - accuracy: 0.8793 - precision: 0.9586 - recall: 0.7928 - auc: 0.9498 - val_loss: 0.8718 - val_accuracy: 0.7742 - val_precision: 0.7682 - val_recall: 0.9966 - val_auc: 0.8534 - lr: 1.0000e-04

Epoch 10/50

207/207 [==============================] - ETA: 0s - loss: 0.3042 - accuracy: 0.8755 - precision: 0.9480 - recall: 0.7946 - auc: 0.9467

Epoch 10: val_auc did not improve from 0.94572

207/207 [==============================] - 237s 1s/step - loss: 0.3042 - accuracy: 0.8755 - precision: 0.9480 - recall: 0.7946 - auc: 0.9467 - val_loss: 0.5748 - val_accuracy: 0.7921 - val_precision: 0.7827 - val_recall: 0.9966 - val_auc: 0.9386 - lr: 1.0000e-04

Epoch 11/50

207/207 [==============================] - ETA: 0s - loss: 0.2909 - accuracy: 0.8778 - precision: 0.9554 - recall: 0.7925 - auc: 0.9501

Epoch 11: val_auc improved from 0.94572 to 0.95169, saving model to best_pneumonia_model.h5

207/207 [==============================] - 237s 1s/step - loss: 0.2909 - accuracy: 0.8778 - precision: 0.9554 - recall: 0.7925 - auc: 0.9501 - val_loss: 0.3041 - val_accuracy: 0.8673 - val_precision: 0.8688 - val_recall: 0.9674 - val_auc: 0.9517 - lr: 1.0000e-04

Epoch 12/50

207/207 [==============================] - ETA: 0s - loss: 0.2779 - accuracy: 0.8841 - precision: 0.9628 - recall: 0.7992 - auc: 0.9537

Epoch 12: val_auc did not improve from 0.95169

207/207 [==============================] - 236s 1s/step - loss: 0.2779 - accuracy: 0.8841 - precision: 0.9628 - recall: 0.7992 - auc: 0.9537 - val_loss: 0.4429 - val_accuracy: 0.8304 - val_precision: 0.8249 - val_recall: 0.9794 - val_auc: 0.9420 - lr: 1.0000e-04

Epoch 13/50

207/207 [==============================] - ETA: 0s - loss: 0.2761 - accuracy: 0.8849 - precision: 0.9602 - recall: 0.8031 - auc: 0.9530

Epoch 13: val_auc did not improve from 0.95169

207/207 [==============================] - 236s 1s/step - loss: 0.2761 - accuracy: 0.8849 - precision: 0.9602 - recall: 0.8031 - auc: 0.9530 - val_loss: 1.1105 - val_accuracy: 0.7615 - val_precision: 0.7568 - val_recall: 1.0000 - val_auc: 0.8080 - lr: 1.0000e-04

Epoch 14/50

207/207 [==============================] - ETA: 0s - loss: 0.2710 - accuracy: 0.8885 - precision: 0.9612 - recall: 0.8098 - auc: 0.9555

Epoch 14: ReduceLROnPlateau reducing learning rate to 1.9999999494757503e-05.

Epoch 14: val_auc did not improve from 0.95169

207/207 [==============================] - 236s 1s/step - loss: 0.2710 - accuracy: 0.8885 - precision: 0.9612 - recall: 0.8098 - auc: 0.9555 - val_loss: 2.9286 - val_accuracy: 0.3240 - val_precision: 0.9815 - val_recall: 0.0911 - val_auc: 0.8606 - lr: 1.0000e-04

Epoch 15/50

207/207 [==============================] - ETA: 0s - loss: 0.2634 - accuracy: 0.8894 - precision: 0.9636 - recall: 0.8095 - auc: 0.9567

Epoch 15: val_auc improved from 0.95169 to 0.95699, saving model to best_pneumonia_model.h5

207/207 [==============================] - 237s 1s/step - loss: 0.2634 - accuracy: 0.8894 - precision: 0.9636 - recall: 0.8095 - auc: 0.9567 - val_loss: 0.6077 - val_accuracy: 0.8048 - val_precision: 0.9977 - val_recall: 0.7388 - val_auc: 0.9570 - lr: 2.0000e-05

Epoch 16/50

207/207 [==============================] - ETA: 0s - loss: 0.2576 - accuracy: 0.8909 - precision: 0.9654 - recall: 0.8110 - auc: 0.9600

Epoch 16: val_auc did not improve from 0.95699

207/207 [==============================] - 236s 1s/step - loss: 0.2576 - accuracy: 0.8909 - precision: 0.9654 - recall: 0.8110 - auc: 0.9600 - val_loss: 0.2776 - val_accuracy: 0.8724 - val_precision: 0.9635 - val_recall: 0.8608 - val_auc: 0.9566 - lr: 2.0000e-05

Epoch 17/50

207/207 [==============================] - ETA: 0s - loss: 0.2553 - accuracy: 0.8944 - precision: 0.9604 - recall: 0.8228 - auc: 0.9601

Epoch 17: val_auc did not improve from 0.95699

207/207 [==============================] - 238s 1s/step - loss: 0.2553 - accuracy: 0.8944 - precision: 0.9604 - recall: 0.8228 - auc: 0.9601 - val_loss: 1.2915 - val_accuracy: 0.6186 - val_precision: 1.0000 - val_recall: 0.4863 - val_auc: 0.9440 - lr: 2.0000e-05

Epoch 18/50

207/207 [==============================] - ETA: 0s - loss: 0.2505 - accuracy: 0.8919 - precision: 0.9635 - recall: 0.8146 - auc: 0.9608

Epoch 18: val_auc did not improve from 0.95699

207/207 [==============================] - 236s 1s/step - loss: 0.2505 - accuracy: 0.8919 - precision: 0.9635 - recall: 0.8146 - auc: 0.9608 - val_loss: 0.5704 - val_accuracy: 0.8099 - val_precision: 0.9909 - val_recall: 0.7509 - val_auc: 0.9460 - lr: 2.0000e-05

Epoch 19/50

207/207 [==============================] - ETA: 0s - loss: 0.2557 - accuracy: 0.8920 - precision: 0.9615 - recall: 0.8167 - auc: 0.9593

Epoch 19: ReduceLROnPlateau reducing learning rate to 3.999999898951501e-06.

Epoch 19: val_auc did not improve from 0.95699

207/207 [==============================] - 236s 1s/step - loss: 0.2557 - accuracy: 0.8920 - precision: 0.9615 - recall: 0.8167 - auc: 0.9593 - val_loss: 0.3977 - val_accuracy: 0.8597 - val_precision: 0.9917 - val_recall: 0.8179 - val_auc: 0.9558 - lr: 2.0000e-05

Epoch 20/50

207/207 [==============================] - ETA: 0s - loss: 0.2383 - accuracy: 0.8944 - precision: 0.9735 - recall: 0.8110 - auc: 0.9643

Epoch 20: val_auc improved from 0.95699 to 0.96287, saving model to best_pneumonia_model.h5

207/207 [==============================] - 236s 1s/step - loss: 0.2383 - accuracy: 0.8944 - precision: 0.9735 - recall: 0.8110 - auc: 0.9643 - val_loss: 0.3187 - val_accuracy: 0.8801 - val_precision: 0.9861 - val_recall: 0.8505 - val_auc: 0.9629 - lr: 4.0000e-06

Epoch 21/50

207/207 [==============================] - ETA: 0s - loss: 0.2539 - accuracy: 0.8894 - precision: 0.9642 - recall: 0.8088 - auc: 0.9606

Epoch 21: val_auc did not improve from 0.96287

207/207 [==============================] - 236s 1s/step - loss: 0.2539 - accuracy: 0.8894 - precision: 0.9642 - recall: 0.8088 - auc: 0.9606 - val_loss: 0.5630 - val_accuracy: 0.8074 - val_precision: 0.9954 - val_recall: 0.7440 - val_auc: 0.9522 - lr: 4.0000e-06

Epoch 22/50

207/207 [==============================] - ETA: 0s - loss: 0.2480 - accuracy: 0.8994 - precision: 0.9667 - recall: 0.8273 - auc: 0.9616

Epoch 22: ReduceLROnPlateau reducing learning rate to 7.999999979801942e-07.

Epoch 22: val_auc did not improve from 0.96287

207/207 [==============================] - 236s 1s/step - loss: 0.2480 - accuracy: 0.8994 - precision: 0.9667 - recall: 0.8273 - auc: 0.9616 - val_loss: 0.5155 - val_accuracy: 0.8112 - val_precision: 0.9910 - val_recall: 0.7526 - val_auc: 0.9588 - lr: 4.0000e-06

Epoch 23/50

207/207 [==============================] - ETA: 0s - loss: 0.2474 - accuracy: 0.8909 - precision: 0.9647 - recall: 0.8116 - auc: 0.9634

Epoch 23: val_auc improved from 0.96287 to 0.96317, saving model to best_pneumonia_model.h5

207/207 [==============================] - 238s 1s/step - loss: 0.2474 - accuracy: 0.8909 - precision: 0.9647 - recall: 0.8116 - auc: 0.9634 - val_loss: 0.4768 - val_accuracy: 0.8253 - val_precision: 0.9912 - val_recall: 0.7715 - val_auc: 0.9632 - lr: 8.0000e-07

Epoch 24/50

207/207 [==============================] - ETA: 0s - loss: 0.2496 - accuracy: 0.8981 - precision: 0.9686 - recall: 0.8228 - auc: 0.9612

Epoch 24: val_auc improved from 0.96317 to 0.96399, saving model to best_pneumonia_model.h5

207/207 [==============================] - 236s 1s/step - loss: 0.2496 - accuracy: 0.8981 - precision: 0.9686 - recall: 0.8228 - auc: 0.9612 - val_loss: 0.4538 - val_accuracy: 0.8278 - val_precision: 0.9956 - val_recall: 0.7715 - val_auc: 0.9640 - lr: 8.0000e-07

Epoch 25/50

207/207 [==============================] - ETA: 0s - loss: 0.2504 - accuracy: 0.8935 - precision: 0.9613 - recall: 0.8201 - auc: 0.9618

Epoch 25: ReduceLROnPlateau reducing learning rate to 1.600000018697756e-07.

Epoch 25: val_auc did not improve from 0.96399

207/207 [==============================] - 237s 1s/step - loss: 0.2504 - accuracy: 0.8935 - precision: 0.9613 - recall: 0.8201 - auc: 0.9618 - val_loss: 0.4323 - val_accuracy: 0.8431 - val_precision: 0.9978 - val_recall: 0.7904 - val_auc: 0.9596 - lr: 8.0000e-07

Epoch 26/50

207/207 [==============================] - ETA: 0s - loss: 0.2448 - accuracy: 0.8981 - precision: 0.9723 - recall: 0.8194 - auc: 0.9617

Epoch 26: val_auc did not improve from 0.96399

207/207 [==============================] - 236s 1s/step - loss: 0.2448 - accuracy: 0.8981 - precision: 0.9723 - recall: 0.8194 - auc: 0.9617 - val_loss: 0.4714 - val_accuracy: 0.8189 - val_precision: 0.9933 - val_recall: 0.7612 - val_auc: 0.9618 - lr: 1.6000e-07

Epoch 27/50

207/207 [==============================] - ETA: 0s - loss: 0.2514 - accuracy: 0.8940 - precision: 0.9653 - recall: 0.8173 - auc: 0.9611

Epoch 27: val_auc improved from 0.96399 to 0.96583, saving model to best_pneumonia_model.h5

207/207 [==============================] - 236s 1s/step - loss: 0.2514 - accuracy: 0.8940 - precision: 0.9653 - recall: 0.8173 - auc: 0.9611 - val_loss: 0.4280 - val_accuracy: 0.8342 - val_precision: 0.9956 - val_recall: 0.7801 - val_auc: 0.9658 - lr: 1.6000e-07

Epoch 28/50

207/207 [==============================] - ETA: 0s - loss: 0.2496 - accuracy: 0.8926 - precision: 0.9662 - recall: 0.8137 - auc: 0.9627

Epoch 28: ReduceLROnPlateau reducing learning rate to 1e-07.

Epoch 28: val_auc did not improve from 0.96583

207/207 [==============================] - 235s 1s/step - loss: 0.2496 - accuracy: 0.8926 - precision: 0.9662 - recall: 0.8137 - auc: 0.9627 - val_loss: 0.4548 - val_accuracy: 0.8355 - val_precision: 0.9871 - val_recall: 0.7887 - val_auc: 0.9576 - lr: 1.6000e-07

Epoch 29/50

207/207 [==============================] - ETA: 0s - loss: 0.2448 - accuracy: 0.8973 - precision: 0.9682 - recall: 0.8216 - auc: 0.9635

Epoch 29: val_auc did not improve from 0.96583

207/207 [==============================] - 236s 1s/step - loss: 0.2448 - accuracy: 0.8973 - precision: 0.9682 - recall: 0.8216 - auc: 0.9635 - val_loss: 0.4514 - val_accuracy: 0.8469 - val_precision: 0.9957 - val_recall: 0.7973 - val_auc: 0.9533 - lr: 1.0000e-07

Epoch 30/50

207/207 [==============================] - ETA: 0s - loss: 0.2409 - accuracy: 0.8981 - precision: 0.9720 - recall: 0.8198 - auc: 0.9636

Epoch 30: val_auc did not improve from 0.96583

207/207 [==============================] - 236s 1s/step - loss: 0.2409 - accuracy: 0.8981 - precision: 0.9720 - recall: 0.8198 - auc: 0.9636 - val_loss: 0.4526 - val_accuracy: 0.8253 - val_precision: 0.9912 - val_recall: 0.7715 - val_auc: 0.9612 - lr: 1.0000e-07

Epoch 31/50

207/207 [==============================] - ETA: 0s - loss: 0.2476 - accuracy: 0.8979 - precision: 0.9656 - recall: 0.8252 - auc: 0.9615

Epoch 31: val_auc did not improve from 0.96583

207/207 [==============================] - 236s 1s/step - loss: 0.2476 - accuracy: 0.8979 - precision: 0.9656 - recall: 0.8252 - auc: 0.9615 - val_loss: 0.4479 - val_accuracy: 0.8367 - val_precision: 0.9956 - val_recall: 0.7835 - val_auc: 0.9585 - lr: 1.0000e-07

Epoch 32/50

207/207 [==============================] - ETA: 0s - loss: 0.2393 - accuracy: 0.8978 - precision: 0.9686 - recall: 0.8222 - auc: 0.9645

Epoch 32: val_auc did not improve from 0.96583

207/207 [==============================] - 236s 1s/step - loss: 0.2393 - accuracy: 0.8978 - precision: 0.9686 - recall: 0.8222 - auc: 0.9645 - val_loss: 0.4738 - val_accuracy: 0.8316 - val_precision: 0.9934 - val_recall: 0.7784 - val_auc: 0.9583 - lr: 1.0000e-07

Epoch 33/50

207/207 [==============================] - ETA: 0s - loss: 0.2492 - accuracy: 0.8950 - precision: 0.9654 - recall: 0.8194 - auc: 0.9614

Epoch 33: val_auc did not improve from 0.96583

207/207 [==============================] - 236s 1s/step - loss: 0.2492 - accuracy: 0.8950 - precision: 0.9654 - recall: 0.8194 - auc: 0.9614 - val_loss: 0.4522 - val_accuracy: 0.8431 - val_precision: 0.9978 - val_recall: 0.7904 - val_auc: 0.9589 - lr: 1.0000e-07

Epoch 34/50

207/207 [==============================] - ETA: 0s - loss: 0.2429 - accuracy: 0.9018 - precision: 0.9709 - recall: 0.8285 - auc: 0.9618

Epoch 34: val_auc did not improve from 0.96583

207/207 [==============================] - 239s 1s/step - loss: 0.2429 - accuracy: 0.9018 - precision: 0.9709 - recall: 0.8285 - auc: 0.9618 - val_loss: 0.4706 - val_accuracy: 0.8418 - val_precision: 0.9914 - val_recall: 0.7938 - val_auc: 0.9541 - lr: 1.0000e-07

Epoch 35/50

207/207 [==============================] - ETA: 0s - loss: 0.2455 - accuracy: 0.8979 - precision: 0.9650 - recall: 0.8258 - auc: 0.9627

Epoch 35: val_auc improved from 0.96583 to 0.97260, saving model to best_pneumonia_model.h5

207/207 [==============================] - 237s 1s/step - loss: 0.2455 - accuracy: 0.8979 - precision: 0.9650 - recall: 0.8258 - auc: 0.9627 - val_loss: 0.4193 - val_accuracy: 0.8291 - val_precision: 0.9956 - val_recall: 0.7732 - val_auc: 0.9726 - lr: 1.0000e-07

Epoch 36/50

207/207 [==============================] - ETA: 0s - loss: 0.2442 - accuracy: 0.8919 - precision: 0.9661 - recall: 0.8122 - auc: 0.9631

Epoch 36: val_auc did not improve from 0.97260

207/207 [==============================] - 234s 1s/step - loss: 0.2442 - accuracy: 0.8919 - precision: 0.9661 - recall: 0.8122 - auc: 0.9631 - val_loss: 0.4375 - val_accuracy: 0.8329 - val_precision: 1.0000 - val_recall: 0.7749 - val_auc: 0.9634 - lr: 1.0000e-07

Epoch 37/50

207/207 [==============================] - ETA: 0s - loss: 0.2447 - accuracy: 0.8987 - precision: 0.9680 - recall: 0.8246 - auc: 0.9628

Epoch 37: val_auc did not improve from 0.97260

207/207 [==============================] - 235s 1s/step - loss: 0.2447 - accuracy: 0.8987 - precision: 0.9680 - recall: 0.8246 - auc: 0.9628 - val_loss: 0.4201 - val_accuracy: 0.8380 - val_precision: 0.9956 - val_recall: 0.7852 - val_auc: 0.9673 - lr: 1.0000e-07

Epoch 38/50

207/207 [==============================] - ETA: 0s - loss: 0.2460 - accuracy: 0.8955 - precision: 0.9671 - recall: 0.8188 - auc: 0.9621

Epoch 38: val_auc did not improve from 0.97260

207/207 [==============================] - 236s 1s/step - loss: 0.2460 - accuracy: 0.8955 - precision: 0.9671 - recall: 0.8188 - auc: 0.9621 - val_loss: 0.4425 - val_accuracy: 0.8418 - val_precision: 0.9978 - val_recall: 0.7887 - val_auc: 0.9652 - lr: 1.0000e-07

Epoch 39/50

207/207 [==============================] - ETA: 0s - loss: 0.2503 - accuracy: 0.8932 - precision: 0.9632 - recall: 0.8176 - auc: 0.9622

Epoch 39: val_auc did not improve from 0.97260

207/207 [==============================] - 234s 1s/step - loss: 0.2503 - accuracy: 0.8932 - precision: 0.9632 - recall: 0.8176 - auc: 0.9622 - val_loss: 0.4590 - val_accuracy: 0.8253 - val_precision: 0.9847 - val_recall: 0.7766 - val_auc: 0.9571 - lr: 1.0000e-07

Epoch 40/50

207/207 [==============================] - ETA: 0s - loss: 0.2499 - accuracy: 0.8949 - precision: 0.9660 - recall: 0.8185 - auc: 0.9611

Epoch 40: val_auc did not improve from 0.97260

207/207 [==============================] - 235s 1s/step - loss: 0.2499 - accuracy: 0.8949 - precision: 0.9660 - recall: 0.8185 - auc: 0.9611 - val_loss: 0.4443 - val_accuracy: 0.8316 - val_precision: 0.9978 - val_recall: 0.7749 - val_auc: 0.9639 - lr: 1.0000e-07

Epoch 41/50

207/207 [==============================] - ETA: 0s - loss: 0.2489 - accuracy: 0.8952 - precision: 0.9654 - recall: 0.8198 - auc: 0.9612

Epoch 41: val_auc did not improve from 0.97260

207/207 [==============================] - 240s 1s/step - loss: 0.2489 - accuracy: 0.8952 - precision: 0.9654 - recall: 0.8198 - auc: 0.9612 - val_loss: 0.4213 - val_accuracy: 0.8418 - val_precision: 0.9978 - val_recall: 0.7887 - val_auc: 0.9677 - lr: 1.0000e-07

Epoch 42/50

207/207 [==============================] - ETA: 0s - loss: 0.2420 - accuracy: 0.8993 - precision: 0.9694 - recall: 0.8246 - auc: 0.9637

Epoch 42: val_auc did not improve from 0.97260

207/207 [==============================] - 234s 1s/step - loss: 0.2420 - accuracy: 0.8993 - precision: 0.9694 - recall: 0.8246 - auc: 0.9637 - val_loss: 0.4687 - val_accuracy: 0.8304 - val_precision: 0.9870 - val_recall: 0.7818 - val_auc: 0.9554 - lr: 1.0000e-07

Epoch 43/50

207/207 [==============================] - ETA: 0s - loss: 0.2483 - accuracy: 0.8941 - precision: 0.9653 - recall: 0.8176 - auc: 0.9614

Restoring model weights from the end of the best epoch: 35.

Epoch 43: val_auc did not improve from 0.97260

207/207 [==============================] - 234s 1s/step - loss: 0.2483 - accuracy: 0.8941 - precision: 0.9653 - recall: 0.8176 - auc: 0.9614 - val_loss: 0.4686 - val_accuracy: 0.8355 - val_precision: 0.9935 - val_recall: 0.7835 - val_auc: 0.9582 - lr: 1.0000e-07

Epoch 43: early stopping

20/20 [==============================] - 20s 998ms/step - loss: 0.4608 - accuracy: 0.8285 - precision: 0.9199 - recall: 0.7949 - auc: 0.9151

Test set evaluation results:

Accuracy: 0.8285

Precision: 0.9199

Recall: 0.7949

AUC: 0.9151

F1-score: 0.8528

AUC-ROC: 0.9157

Classification report:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| NORMAL | 0.72 | 0.88 | 0.79 | 234 |
| PNEUMONIA | 0.92 | 0.79 | 0.85 | 390 |
| accuracy | | | 0.83 | 624 |
| macro avg | 0.82 | 0.84 | 0.82 | 624 |
| weighted avg | 0.85 | 0.83 | 0.83 | 624 |

Confusion matrix:

[[207 27] [ 80 310]]

Process finished with exit code 0

F1-score: The Model's Composite Score

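Put simply, the F1-score is a balance score between what the model "catches correctly" and what it "does not miss".

An analogy: suppose you build a model to detect spam.

- Say there are 100 emails in total: 20 are spam and 80 are normal.

- The model flags 15 emails as spam, but 5 of them are actually normal (false alarms), and 10 real spam emails are not caught (misses).

In this case:

- "Caught correctly" (precision): of the emails the model calls spam, 10 (15 - 5) really are spam, so precision is 10/15 ≈ 67%.

- "Not missed" (recall): of all real spam emails, the model catches 10, so recall is 10/20 = 50%.

The F1-score combines these two numbers into one score, their harmonic mean: 2 × precision × recall ÷ (precision + recall). Here that gives 2 × 67% × 50% ÷ (67% + 50%) ≈ 57%.

Why it matters: when the data is imbalanced (for example, spam is only 20% of emails), accuracy alone can be misleading (a model that labels every email as normal still reaches 80% accuracy, yet is useless), while the F1-score reflects the model's quality more faithfully. The model must neither flag carelessly (low precision) nor miss cases (low recall); the higher the score, the better the balance.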
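The spam example in a few lines of Python, as a sketch; `f1_score` here is a hand-written helper (scikit-learn has its own `f1_score`, which takes labels and predictions rather than precision and recall):

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (defined as 0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Spam example: 15 flagged, 5 of them false alarms, 20 real spam emails in total.
tp, fp, fn = 10, 5, 10
precision = tp / (tp + fp)   # 10 / 15
recall = tp / (tp + fn)      # 10 / 20
print(f"precision={precision:.0%} recall={recall:.0%} F1={f1_score(precision, recall):.0%}")
# precision=67% recall=50% F1=57%

# The "everything is normal" model flags nothing: recall is 0, and precision
# (0 / 0) is treated as 0 by convention, so F1 is 0 despite 80% accuracy.
print(f1_score(0.0, 0.0))  # 0.0
```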

Understanding the Pneumonia Diagnosis Model Evaluation from the Console Output

I. Data preparation

| Found 4448 images belonging to 2 classes. Found 784 images belonging to 2 classes. Found 624 images belonging to 2 classes. |

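- Explanation: the program found 4448 training images, 784 validation images, and 624 test images, in two classes: "normal" and "pneumonia"

| Original sample distribution: normal=1147, pneumonia=3301 Distribution after oversampling: normal=3301, pneumonia=3301 |

- Explanation: the original training data contains far more pneumonia samples than normal ones (3301 vs. 1147)

- Fix: "oversampling" adds normal samples until both classes are the same size (3301 vs. 3301)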
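The log does not say how the oversampling was done. A common option is random oversampling: draw extra minority-class samples with replacement until the classes match. The sketch below does that on hypothetical file names; it is not the script's implementation.

```python
import random

def random_oversample(samples_by_class, seed=0):
    """Duplicate randomly chosen samples of the smaller classes until every class
    matches the largest one. Returns a new dict; the input is left unchanged."""
    rng = random.Random(seed)
    target = max(len(items) for items in samples_by_class.values())
    balanced = {}
    for label, items in samples_by_class.items():
        extra = rng.choices(items, k=target - len(items))  # sampling with replacement
        balanced[label] = list(items) + extra
    return balanced

# Hypothetical file names standing in for the real training image lists.
data = {
    "normal": [f"normal_{i}.jpeg" for i in range(1147)],
    "pneumonia": [f"pneumonia_{i}.jpeg" for i in range(3301)],
}
balanced = random_oversample(data)
print({label: len(items) for label, items in balanced.items()})
# {'normal': 3301, 'pneumonia': 3301}
```

Duplicates add no new information, so random oversampling is usually paired with data augmentation, and it should be applied to the training split only, never to validation or test data.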

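| Class weights: normal=1.94, pneumonia=0.67 |

- Explanation: the less frequent "normal" class gets a higher weight (1.94) so the model pays more attention to it

- Purpose: counteracts the class imbalance so the model does not simply learn to favor pneumonia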
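The logged weights match the "balanced" heuristic, weight = total samples / (number of classes × samples in the class), which is also what scikit-learn's `compute_class_weight(class_weight="balanced", ...)` uses. A sketch reproducing 1.94 and 0.67 from the original counts (the helper name is illustrative):

```python
def balanced_class_weights(counts):
    """weight_c = n_samples / (n_classes * n_c), the 'balanced' heuristic."""
    total = sum(counts.values())
    return {label: total / (len(counts) * n) for label, n in counts.items()}

weights = balanced_class_weights({"normal": 1147, "pneumonia": 3301})
print({k: round(v, 2) for k, v in weights.items()})
# {'normal': 1.94, 'pneumonia': 0.67}
```

These weights come from the original 1147/3301 split. If they were also passed to `model.fit(class_weight=...)` while training on the already balanced, oversampled data, the normal class would be compensated twice; the log alone does not show whether that happened.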

II. Model construction

Model: "sequential"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| resnet50 (Functional) | (None, 7, 7, 2048) | 23587712 |
| global_average_pooling2d (GlobalAveragePooling2D) | (None, 2048) | 0 |
| dense (Dense) | (None, 512) | 1049088 |
| batch_normalization (BatchNormalization) | (None, 512) | 2048 |
| dropout (Dropout) | (None, 512) | 0 |
| dense_1 (Dense) | (None, 256) | 131328 |
| batch_normalization_1 (BatchNormalization) | (None, 256) | 1024 |
| dropout_1 (Dropout) | (None, 256) | 0 |
| dense_2 (Dense) | (None, 1) | 257 |

Total params: 24771457 (94.50 MB)

Trainable params: 1182209 (4.51 MB)

Non-trainable params: 23589248 (89.99 MB)

- Explanation: this model is built on ResNet50 and contains the following layers:

- ResNet50: a pretrained network that extracts image features

- Global average pooling: turns the feature maps into a vector

- Dense layers + batch normalization + dropout: process the features

- Final output layer: a single unit whose score is thresholded into a prediction of pneumonia (1) or normal (0)

- Parameters: about 24.8 million in total, of which about 1.18 million are trainable; the ResNet50 backbone is frozen, so all of its 23.59 million parameters are non-trainable
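The parameter counts in the summary can be checked by hand. This sketch assumes standard Keras layers: a Dense layer has n_in × n_out weights plus n_out biases, and a BatchNormalization layer has 4 parameters per feature, of which gamma and beta are trainable while the moving mean and variance are not. The 23,587,712 ResNet50 figure is taken from the summary; that it is entirely non-trainable is what the totals imply (frozen backbone).

```python
def dense_params(n_in: int, n_out: int) -> int:
    """Kernel (n_in x n_out) plus one bias per output unit."""
    return n_in * n_out + n_out

def batchnorm_params(n: int) -> tuple[int, int]:
    """(trainable, non_trainable): gamma/beta are trained, moving mean/variance are not."""
    return 2 * n, 2 * n

RESNET50_NOTOP = 23_587_712  # from the model summary; assumed fully frozen

head_dense = dense_params(2048, 512) + dense_params(512, 256) + dense_params(256, 1)
bn1_train, bn1_frozen = batchnorm_params(512)
bn2_train, bn2_frozen = batchnorm_params(256)

trainable = head_dense + bn1_train + bn2_train
non_trainable = RESNET50_NOTOP + bn1_frozen + bn2_frozen
print(f"trainable={trainable} non_trainable={non_trainable} "
      f"total={trainable + non_trainable}")
# trainable=1182209 non_trainable=23589248 total=24771457
```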

III. Training

| Epoch 1/50 207/207 [==============================] - ETA: 0s - loss: 0.6233 - accuracy: 0.7675 - precision: 0.8022 - recall: 0.7101 - auc: 0.8398 Epoch 1: val_auc improved from -inf to 0.87968, saving model to best_pneumonia_model.h5 207/207 [==============================] - 245s 1s/step - loss: 0.6233 - accuracy: 0.7675 - precision: 0.8022 - recall: 0.7101 - auc: 0.8398 - val_loss: 1.3033 - val_accuracy: 0.2577 - val_precision: 0.0000e+00 - val_recall: 0.0000e+00 - val_auc: 0.8797 - lr: 1.0000e-04 |

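- Explanation: training begins its first epoch (out of at most 50)

- Results:

- Training set: loss 0.62, accuracy 76.75%, precision 80.22%, recall 71.01%

- Validation set: loss 1.30, accuracy 25.77%, precision and recall both 0 (the model did not correctly flag a single pneumonia image in the validation set this epoch, so the validation result is very poor)

- Notable event: the validation AUC "improved" from -inf (meaning there was no previous best yet) to 0.8797, so the current model was saved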

| Epoch 14: ReduceLROnPlateau reducing learning rate to 1.9999999494757503e-05. |

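- Explanation: at epoch 14 the monitored validation metric had stopped improving, so the learning rate was cut from 0.0001 to 0.00002 (the lr column shows the new rate from epoch 15 on)

- Purpose: keeps training from stalling; smaller steps can help the model settle into better parameters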
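The log does not show the callback's settings. A plain-Python replay of a simplified ReduceLROnPlateau (mode "min", no cooldown, Keras's default `min_delta` of 1e-4) on the logged val_loss values reproduces all five reductions, at epochs 14, 19, 22, 25, and 28, with factor=0.2, patience=3, and min_lr=1e-7. That makes `tf.keras.callbacks.ReduceLROnPlateau(monitor="val_loss", factor=0.2, patience=3, min_lr=1e-7)` a plausible reconstruction, but it is an inference from the log, not the script's confirmed configuration. (Monitoring val_auc with patience 3 would already have cut the rate at epoch 10.)

```python
# val_loss for epochs 1-43, copied from the epoch lines of the log above.
VAL_LOSS = [
    1.3033, 0.7843, 0.8157, 0.6605, 1.4892, 8.2440, 0.3840, 0.3327, 0.8718, 0.5748,
    0.3041, 0.4429, 1.1105, 2.9286, 0.6077, 0.2776, 1.2915, 0.5704, 0.3977, 0.3187,
    0.5630, 0.5155, 0.4768, 0.4538, 0.4323, 0.4714, 0.4280, 0.4548, 0.4514, 0.4526,
    0.4479, 0.4738, 0.4522, 0.4706, 0.4193, 0.4375, 0.4201, 0.4425, 0.4590, 0.4443,
    0.4213, 0.4687, 0.4686,
]

def replay_reduce_lr(values, lr, factor, patience, min_lr, min_delta=1e-4):
    """Simplified ReduceLROnPlateau (mode='min', cooldown=0) replayed on per-epoch
    metric values. Returns a list of (epoch, new_lr) reductions."""
    best, wait, events = float("inf"), 0, []
    for epoch, value in enumerate(values, start=1):
        if value < best - min_delta:   # counts as an improvement
            best, wait = value, 0
            continue
        wait += 1
        if wait >= patience and lr > min_lr:
            lr = max(lr * factor, min_lr)
            events.append((epoch, lr))
            wait = 0
    return events

events = replay_reduce_lr(VAL_LOSS, lr=1e-4, factor=0.2, patience=3, min_lr=1e-7)
print([(epoch, f"{lr:.1e}") for epoch, lr in events])
# [(14, '2.0e-05'), (19, '4.0e-06'), (22, '8.0e-07'), (25, '1.6e-07'), (28, '1.0e-07')]
```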

| Epoch 35: val_auc improved from 0.96583 to 0.97260, saving model to best_pneumonia_model.h5 |

- Explanation: at epoch 35 the validation AUC improved from 0.9658 to 0.9726, so the model was saved

| Epoch 43: early stopping |

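- Explanation: at epoch 43 training stopped early because the validation AUC had not improved for 8 consecutive epochs (since epoch 35); the weights from epoch 35 were restored

- Purpose: prevents overfitting (the model memorizing the training data instead of generalizing to new cases)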
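Likewise, replaying a simplified EarlyStopping (mode "max", `min_delta` 0) on the logged val_auc values shows that patience=8 stops training exactly at epoch 43 with epoch 35 as the best, while patience=7 would have stopped at epoch 34. Together with the "Restoring model weights from the end of the best epoch: 35." line, this is consistent with `tf.keras.callbacks.EarlyStopping(monitor="val_auc", mode="max", patience=8, restore_best_weights=True)`, again an inference rather than the confirmed setup. The values below are the four-decimal val_auc numbers from the epoch lines.

```python
# val_auc for epochs 1-43, copied from the epoch lines of the log above.
VAL_AUC = [
    0.8797, 0.9293, 0.8778, 0.9453, 0.7024, 0.5095, 0.9457, 0.9368, 0.8534, 0.9386,
    0.9517, 0.9420, 0.8080, 0.8606, 0.9570, 0.9566, 0.9440, 0.9460, 0.9558, 0.9629,
    0.9522, 0.9588, 0.9632, 0.9640, 0.9596, 0.9618, 0.9658, 0.9576, 0.9533, 0.9612,
    0.9585, 0.9583, 0.9589, 0.9541, 0.9726, 0.9634, 0.9673, 0.9652, 0.9571, 0.9639,
    0.9677, 0.9554, 0.9582,
]

def replay_early_stopping(values, patience):
    """Simplified EarlyStopping (mode='max', min_delta=0).
    Returns (stopped_epoch or None, best_epoch)."""
    best, best_epoch, wait = float("-inf"), 0, 0
    for epoch, value in enumerate(values, start=1):
        if value > best:               # new best: remember it and reset the counter
            best, best_epoch, wait = value, epoch, 0
            continue
        wait += 1
        if wait >= patience:
            return epoch, best_epoch
    return None, best_epoch

print(replay_early_stopping(VAL_AUC, patience=8))  # (43, 35)
print(replay_early_stopping(VAL_AUC, patience=7))  # (34, 27)
```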

IV. Test results

| 20/20 [==============================] - 20s 998ms/step - loss: 0.4608 - accuracy: 0.8285 - precision: 0.9199 - recall: 0.7949 - auc: 0.9151 |

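- Explanation: final results on the 624 test images

- Key metrics:

- Accuracy: 82.85% (about 83% of images classified correctly)

- Precision: 91.99% (of the samples the model calls "pneumonia", 92% really are pneumonia)

- Recall: 79.49% (of all pneumonia samples, 79% are correctly identified)

- AUC: 0.9151 (area under the ROC curve; above 0.9 indicates excellent performance). It differs slightly from the AUC-ROC of 0.9157 printed afterwards, likely because Keras's AUC metric approximates the curve with a fixed set of thresholds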

| F1-score: 0.8528 |

- Explanation: the combined measure of precision and recall; 0.85 means the model balances "no false alarms" and "no misses" well

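Classification report:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| NORMAL | 0.72 | 0.88 | 0.79 | 234 |
| PNEUMONIA | 0.92 | 0.79 | 0.85 | 390 |
| accuracy | | | 0.83 | 624 |
| macro avg | 0.82 | 0.84 | 0.82 | 624 |
| weighted avg | 0.85 | 0.83 | 0.83 | 624 |

- Explanation: detailed per-class results

- Normal samples: precision 72%, recall 88% (the model assigns "normal" fairly liberally, so about 28% of the images it calls normal are actually pneumonia)

- Pneumonia samples: precision 92%, recall 79% (the model is cautious about calling pneumonia: when it does, it is usually right, but it misses about 21% of cases)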
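Every number in the report can be recomputed from the confusion matrix. In this sketch (the `per_class_report` helper is hypothetical; scikit-learn's `classification_report` produces the real table), a class's precision divides its diagonal cell by its column total, recall divides by its row total, the macro average is the plain mean over classes, and the weighted average weights each class by its support.

```python
def per_class_report(cm, names):
    """Precision, recall, F1 and support per class from a confusion matrix
    (rows = true label, columns = predicted label), plus macro/weighted averages."""
    n = len(cm)
    support = [sum(row) for row in cm]                          # true count per class
    predicted = [sum(row[j] for row in cm) for j in range(n)]   # predicted count per class
    report = {}
    for i, name in enumerate(names):
        p = cm[i][i] / predicted[i]
        r = cm[i][i] / support[i]
        report[name] = (p, r, 2 * p * r / (p + r), support[i])
    total = sum(support)
    per_class = [report[name] for name in names]
    report["macro avg"] = tuple(
        sum(m[k] for m in per_class) / n for k in range(3)) + (total,)
    report["weighted avg"] = tuple(
        sum(m[k] * m[3] for m in per_class) / total for k in range(3)) + (total,)
    return report

cm = [[207, 27], [80, 310]]  # test-set confusion matrix from the log
for name, (p, r, f1, s) in per_class_report(cm, ["NORMAL", "PNEUMONIA"]).items():
    print(f"{name:>12} {p:.2f} {r:.2f} {f1:.2f} {s}")
#       NORMAL 0.72 0.88 0.79 234
#    PNEUMONIA 0.92 0.79 0.85 390
#    macro avg 0.82 0.84 0.82 624
# weighted avg 0.85 0.83 0.83 624
```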

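| Confusion matrix: [[207 27] [ 80 310]] |

- Explanation: the detailed distribution of the model's predictions

- First row (actually normal): 207 correctly identified, 27 misdiagnosed as pneumonia

- Second row (actually pneumonia): 80 missed (predicted as normal), 310 correctly identified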

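V. Summary

- Model performance:

- Overall accuracy is 83% and AUC is above 0.9, so the model performs well

- For pneumonia: precision 92% (few misdiagnoses), but recall 79% (21% of cases missed)

- For normal samples: recall 88% (only 12% of healthy people are flagged as pneumonia), but precision 72% (about 28% of the images labeled normal are actually missed pneumonia cases)

- Room for improvement:

- Try to reduce missed pneumonia diagnoses (for example, by adjusting the decision threshold)

- Try to raise the precision of the normal class (fewer pneumonia cases hidden among the "normal" predictions)

- Consider a more complex model or data augmentation

- Practical recommendations:

- In medical settings it may be necessary to prioritize pneumonia recall (better to over-diagnose than to miss a case)

- Combine the model with clinicians' experience when adjusting its decision criteria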
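The threshold adjustment suggested above can be sketched as follows: sweep candidate thresholds from high to low, keep the highest one that reaches a target recall, and read off the precision paid for it. The labels, scores, and the 0.8 recall target are hypothetical; with the real model you would use its predicted probabilities on the validation set (not the test set) and let clinical requirements set the target.

```python
def precision_recall_at(labels, scores, threshold):
    """Precision and recall when scores >= threshold are called pneumonia (1)."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def highest_threshold_for_recall(labels, scores, target_recall):
    """Largest candidate threshold whose recall reaches the target (None if none does)."""
    for t in sorted(set(scores), reverse=True):
        if precision_recall_at(labels, scores, t)[1] >= target_recall:
            return t
    return None

# Hypothetical validation data: five pneumonia cases, then five healthy people.
labels = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.6, 0.4, 0.35, 0.7, 0.45, 0.3, 0.2, 0.05]

print(precision_recall_at(labels, scores, 0.5))  # (0.75, 0.6)
t = highest_threshold_for_recall(labels, scores, 0.8)
print(t, precision_recall_at(labels, scores, t))  # 0.4, precision ≈ 0.67, recall 0.8
```

Lowering the threshold from 0.5 to 0.4 in this toy case raises recall from 0.6 to 0.8 while precision drops from 0.75 to about 0.67, the same trade-off the confusion matrix discussion describes.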
