Real-Time Click-to-Track Object Tracking System Based on YOLO12 and OpenCV

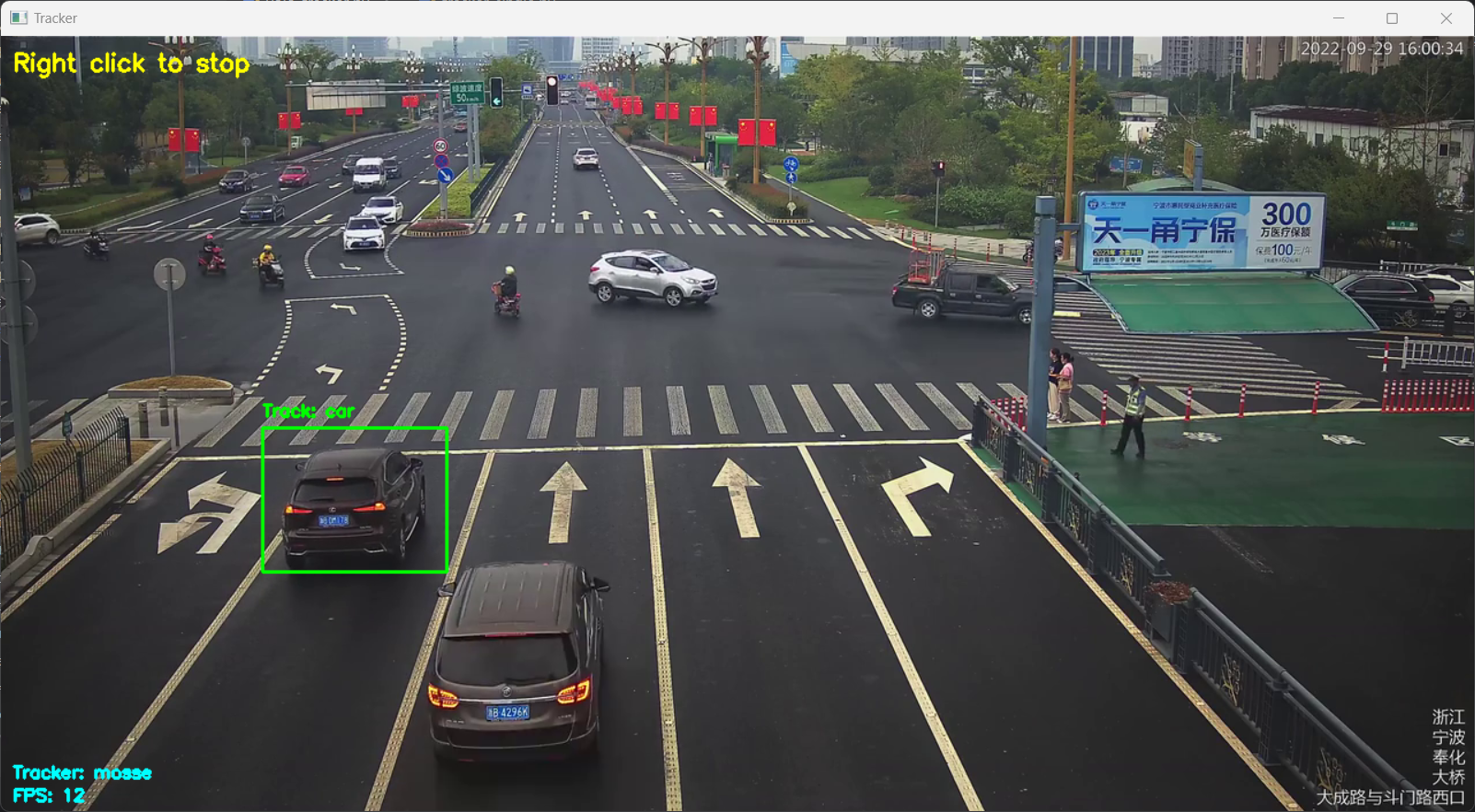

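Object detection and object tracking are two core tasks in computer vision. This article presents a real-time tracking system that combines a YOLO detection model with OpenCV tracking algorithms. The user picks a specific target with the mouse and the system tracks it continuously, and the tracking algorithm can be switched among several options. Typical applications include video surveillance and behavior analysis.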


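System Overview

The system provides the following features:

- Runs a YOLO model on each video frame to detect and classify the objects in view

- Lets the user left-click a specific target to start tracking it

- Offers three classic tracking algorithms to choose from: MOSSE, CSRT, and KCF

- Displays the tracking status, the target class, and the FPS in real time

- Stops tracking on a right-click and returns to detection mode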

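How It Works

The system uses a hybrid "detect + track" architecture:

- The YOLO model first runs object detection and returns the bounding box and class of every object in the frame

- Once the user selects a target, the chosen tracking algorithm takes over and follows that target from frame to frame

- The target position is updated in real time during tracking; if tracking fails, the display shows "Lost"

- The whole process is shown in a visual interface that accepts mouse interaction

This architecture combines the high detection accuracy of YOLO with the speed of classical trackers, so it keeps reasonable accuracy while staying real-time.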
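The switching between detection and tracking described above can be read as a small two-state machine. The following is only a sketch of that idea: the `Mode` enum and the event names `"select"`, `"stop"`, and `"lost"` are hypothetical labels introduced here, not identifiers from the system's code.

```python
from enum import Enum


class Mode(Enum):
    DETECT = "detect"   # YOLO boxes are shown, waiting for a click
    TRACK = "track"     # a single selected target is being followed


def next_mode(mode, event):
    """Return the mode that follows `event`.

    Event names are hypothetical labels for this sketch:
    "select" - a left click landed inside a detected box
    "stop"   - a right click
    "lost"   - the tracker reported failure
    """
    if mode is Mode.DETECT and event == "select":
        return Mode.TRACK
    if mode is Mode.TRACK and event in ("stop", "lost"):
        return Mode.DETECT
    return mode  # every other event leaves the mode unchanged


mode = Mode.DETECT
for event in ["select", "lost", "select", "stop"]:
    mode = next_mode(mode, event)
    print(event, "->", mode.value)
```

Both a right-click and a tracking failure lead back to detection mode, which matches the behavior listed in the feature overview.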

Code Walkthrough

Core Dependencies

    import cv2  # video processing and tracking algorithms
    import numpy as np  # numerical computation
    import argparse  # command-line argument parsing
    from ultralytics import YOLO  # YOLO object detection

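Global Variables and Interaction Design

Global variables hold the tracking state and the user's interaction data:

    selected_box = None  # bounding box of the selected target
    tracking = False  # tracking state flag
    target_class = None  # class of the target
    click_x, click_y = -1, -1  # mouse click coordinates
    mouse_clicked = False  # mouse click flag
    debug = False  # debug mode flag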

The mouse callback handles user interaction:

    def mouse_callback(event, x, y, flags, param):
        global click_x, click_y, mouse_clicked, tracking
        if event == cv2.EVENT_LBUTTONDOWN:  # left click selects a target
            click_x, click_y = x, y
            mouse_clicked = True
            if debug:
                print(f"Left click at: ({x}, {y})")
        elif event == cv2.EVENT_RBUTTONDOWN:  # right click stops tracking
            tracking = False
            if debug:
                print("Tracking stopped")

Creating the Tracker

A tracker instance is created for the selected algorithm. Note that in recent OpenCV 4.x releases these trackers are accessed through the cv2.legacy module, which is provided by opencv-contrib-python:

    def create_tracker(tracker_type):
        """Create a tracker through cv2.legacy for compatibility with recent OpenCV."""
        try:
            if tracker_type == "mosse":
                return cv2.legacy.TrackerMOSSE_create()
            elif tracker_type == "csrt":
                return cv2.legacy.TrackerCSRT_create()
            elif tracker_type == "kcf":
                return cv2.legacy.TrackerKCF_create()
            else:
                print(f"Unsupported tracker: {tracker_type}, using MOSSE")
                return cv2.legacy.TrackerMOSSE_create()
        except AttributeError as e:
            print(f"Failed to create tracker: {e}")
            print("Please check OpenCV installation (must include opencv-contrib-python)")
            return None
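Older contrib builds exposed these factories at the top level of `cv2` instead of under `cv2.legacy`, so a lookup that tries both places can make the script more portable. The sketch below is one possible approach, not the article's implementation: `resolve_tracker_factory` and `FACTORY_NAMES` are hypothetical names, and the `SimpleNamespace` objects are stand-ins used only so the lookup can be exercised without OpenCV installed.

```python
from types import SimpleNamespace

# Factory names as exposed by OpenCV builds that include the tracking module.
FACTORY_NAMES = {
    "mosse": "TrackerMOSSE_create",
    "csrt": "TrackerCSRT_create",
    "kcf": "TrackerKCF_create",
}


def resolve_tracker_factory(cv2_module, tracker_type):
    """Return the factory callable for `tracker_type`, or None if unavailable.

    Looks in `cv2_module.legacy` first, then at the top level of the module.
    """
    name = FACTORY_NAMES.get(tracker_type)
    if name is None:
        return None
    for namespace in (getattr(cv2_module, "legacy", None), cv2_module):
        factory = getattr(namespace, name, None)
        if callable(factory):
            return factory
    return None


# Stand-in objects (assumptions for this demo) that mimic three kinds of builds.
new_style = SimpleNamespace(legacy=SimpleNamespace(TrackerMOSSE_create=lambda: "legacy-mosse"))
old_style = SimpleNamespace(TrackerMOSSE_create=lambda: "top-level-mosse")
no_contrib = SimpleNamespace()

print(resolve_tracker_factory(new_style, "mosse")())
print(resolve_tracker_factory(old_style, "mosse")())
print(resolve_tracker_factory(no_contrib, "mosse"))
```

In real use the first argument would be the imported `cv2` module, and a `None` result would signal that the contrib package is missing.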

OpenCV Tracking Demo Source

    #!/usr/bin/env python

    '''
    Tracker demo

    For usage download models by following links
    For GOTURN:
        goturn.prototxt and goturn.caffemodel: https://github.com/opencv/opencv_extra/tree/c4219d5eb3105ed8e634278fad312a1a8d2c182d/testdata/tracking
    For DaSiamRPN:
        network:     https://www.dropbox.com/s/rr1lk9355vzolqv/dasiamrpn_model.onnx?dl=0
        kernel_r1:   https://www.dropbox.com/s/999cqx5zrfi7w4p/dasiamrpn_kernel_r1.onnx?dl=0
        kernel_cls1: https://www.dropbox.com/s/qvmtszx5h339a0w/dasiamrpn_kernel_cls1.onnx?dl=0
    For NanoTrack:
        nanotrack_backbone: https://github.com/HonglinChu/SiamTrackers/blob/master/NanoTrack/models/nanotrackv2/nanotrack_backbone_sim.onnx
        nanotrack_headneck: https://github.com/HonglinChu/SiamTrackers/blob/master/NanoTrack/models/nanotrackv2/nanotrack_head_sim.onnx

    USAGE:
        tracker.py [-h] [--input INPUT_VIDEO]
                        [--tracker_algo TRACKER_ALGO (mil, goturn, dasiamrpn, nanotrack, vittrack)]
                        [--goturn GOTURN_PROTOTXT]
                        [--goturn_model GOTURN_MODEL]
                        [--dasiamrpn_net DASIAMRPN_NET]
                        [--dasiamrpn_kernel_r1 DASIAMRPN_KERNEL_R1]
                        [--dasiamrpn_kernel_cls1 DASIAMRPN_KERNEL_CLS1]
                        [--nanotrack_backbone NANOTRACK_BACKBONE]
                        [--nanotrack_headneck NANOTRACK_TARGET]
                        [--vittrack_net VITTRACK_MODEL]
                        [--tracking_score_threshold TRACKING SCORE THRESHOLD FOR ONLY VITTRACK]
                        [--backend CHOOSE ONE OF COMPUTATION BACKEND]
                        [--target CHOOSE ONE OF COMPUTATION TARGET]
    '''

    # Python 2/3 compatibility
    from __future__ import print_function

    import sys

    import numpy as np
    import cv2 as cv
    import argparse

    from video import create_capture, presets

    backends = (cv.dnn.DNN_BACKEND_DEFAULT, cv.dnn.DNN_BACKEND_HALIDE, cv.dnn.DNN_BACKEND_INFERENCE_ENGINE, cv.dnn.DNN_BACKEND_OPENCV,
                cv.dnn.DNN_BACKEND_VKCOM, cv.dnn.DNN_BACKEND_CUDA)
    targets = (cv.dnn.DNN_TARGET_CPU, cv.dnn.DNN_TARGET_OPENCL, cv.dnn.DNN_TARGET_OPENCL_FP16, cv.dnn.DNN_TARGET_MYRIAD,
               cv.dnn.DNN_TARGET_VULKAN, cv.dnn.DNN_TARGET_CUDA, cv.dnn.DNN_TARGET_CUDA_FP16)

    class App(object):

        def __init__(self, args):
            self.args = args
            self.trackerAlgorithm = args.tracker_algo
            self.tracker = self.createTracker()

        def createTracker(self):
            if self.trackerAlgorithm == 'mil':
                tracker = cv.TrackerMIL_create()
            elif self.trackerAlgorithm == 'goturn':
                params = cv.TrackerGOTURN_Params()
                params.modelTxt = self.args.goturn
                params.modelBin = self.args.goturn_model
                tracker = cv.TrackerGOTURN_create(params)
            elif self.trackerAlgorithm == 'dasiamrpn':
                params = cv.TrackerDaSiamRPN_Params()
                params.model = self.args.dasiamrpn_net
                params.kernel_cls1 = self.args.dasiamrpn_kernel_cls1
                params.kernel_r1 = self.args.dasiamrpn_kernel_r1
                params.backend = self.args.backend
                params.target = self.args.target
                tracker = cv.TrackerDaSiamRPN_create(params)
            elif self.trackerAlgorithm == 'nanotrack':
                params = cv.TrackerNano_Params()
                params.backbone = self.args.nanotrack_backbone
                params.neckhead = self.args.nanotrack_headneck
                params.backend = self.args.backend
                params.target = self.args.target
                tracker = cv.TrackerNano_create(params)
            elif self.trackerAlgorithm == 'vittrack':
                params = cv.TrackerVit_Params()
                params.net = self.args.vittrack_net
                params.tracking_score_threshold = self.args.tracking_score_threshold
                params.backend = self.args.backend
                params.target = self.args.target
                tracker = cv.TrackerVit_create(params)
            else:
                sys.exit("Tracker {} is not recognized. Please use one of the available: mil, goturn, dasiamrpn, nanotrack, vittrack.".format(self.trackerAlgorithm))
            return tracker

        def initializeTracker(self, image):
            while True:
                print('==> Select object ROI for tracker ...')
                bbox = cv.selectROI('tracking', image)
                print('ROI: {}'.format(bbox))
                if bbox[2] <= 0 or bbox[3] <= 0:
                    sys.exit("ROI selection cancelled. Exiting...")

                try:
                    self.tracker.init(image, bbox)
                except Exception as e:
                    print('Unable to initialize tracker with requested bounding box. Is there any object?')
                    print(e)
                    print('Try again ...')
                    continue

                return

        def run(self):
            videoPath = self.args.input
            print('Using video: {}'.format(videoPath))
            camera = create_capture(cv.samples.findFileOrKeep(videoPath), presets['cube'])
            if not camera.isOpened():
                sys.exit("Can't open video stream: {}".format(videoPath))

            ok, image = camera.read()
            if not ok:
                sys.exit("Can't read first frame")
            assert image is not None

            cv.namedWindow('tracking')
            self.initializeTracker(image)

            print("==> Tracking is started. Press 'SPACE' to re-initialize tracker or 'ESC' for exit...")

            while camera.isOpened():
                ok, image = camera.read()
                if not ok:
                    print("Can't read frame")
                    break

                ok, newbox = self.tracker.update(image)
                #print(ok, newbox)

                if ok:
                    cv.rectangle(image, newbox, (200,0,0))

                cv.imshow("tracking", image)
                k = cv.waitKey(1)
                if k == 32:  # SPACE
                    self.initializeTracker(image)
                if k == 27:  # ESC
                    break

            print('Done')


    if __name__ == '__main__':
        print(__doc__)
        parser = argparse.ArgumentParser(description="Run tracker")
        parser.add_argument("--input", type=str, default="vtest.avi", help="Path to video source")
        parser.add_argument("--tracker_algo", type=str, default="nanotrack", help="One of available tracking algorithms: mil, goturn, dasiamrpn, nanotrack, vittrack")
        parser.add_argument("--goturn", type=str, default="goturn.prototxt", help="Path to GOTURN architecture")
        parser.add_argument("--goturn_model", type=str, default="goturn.caffemodel", help="Path to GOTURN model")
        parser.add_argument("--dasiamrpn_net", type=str, default="dasiamrpn_model.onnx", help="Path to onnx model of DaSiamRPN net")
        parser.add_argument("--dasiamrpn_kernel_r1", type=str, default="dasiamrpn_kernel_r1.onnx", help="Path to onnx model of DaSiamRPN kernel_r1")
        parser.add_argument("--dasiamrpn_kernel_cls1", type=str, default="dasiamrpn_kernel_cls1.onnx", help="Path to onnx model of DaSiamRPN kernel_cls1")
        parser.add_argument("--nanotrack_backbone", type=str, default="nanotrack_backbone_sim.onnx", help="Path to onnx model of NanoTrack backBone")
        parser.add_argument("--nanotrack_headneck", type=str, default="nanotrack_head_sim.onnx", help="Path to onnx model of NanoTrack headNeck")
        parser.add_argument("--vittrack_net", type=str, default="vitTracker.onnx", help="Path to onnx model of vittrack")
        parser.add_argument('--tracking_score_threshold', type=float, help="Tracking score threshold. If a bbox of score >= 0.3, it is considered as found ")
        parser.add_argument('--backend', choices=backends, default=cv.dnn.DNN_BACKEND_DEFAULT, type=int,
                            help="Choose one of computation backends: "
                                 "%d: automatically (by default), "
                                 "%d: Halide language (http://halide-lang.org/), "
                                 "%d: Intel's Deep Learning Inference Engine (https://software.intel.com/openvino-toolkit), "
                                 "%d: OpenCV implementation, "
                                 "%d: VKCOM, "
                                 "%d: CUDA"
                                 % backends)
        parser.add_argument("--target", choices=targets, default=cv.dnn.DNN_TARGET_CPU, type=int,
                            help="Choose one of target computation devices: "
                                 '%d: CPU target (by default), '
                                 '%d: OpenCL, '
                                 '%d: OpenCL fp16 (half-float precision), '
                                 '%d: VPU, '
                                 '%d: VULKAN, '
                                 '%d: CUDA, '
                                 '%d: CUDA fp16 (half-float preprocess)'
                                 % targets)

        args = parser.parse_args()
        App(args).run()
        cv.destroyAllWindows()

Object Detection and Processing

The YOLO model detects objects, and the results are converted into a format that is easy to work with:

    def detect_objects(frame, model, confidence):
        results = model(frame, conf=confidence)
        boxes = []  # bounding boxes as (x, y, w, h)
        class_names = []  # object classes
        for result in results:
            for box in result.boxes:
                x1, y1, x2, y2 = box.xyxy[0].tolist()
                boxes.append((int(x1), int(y1), int(x2 - x1), int(y2 - y1)))
                class_names.append(model.names[int(box.cls[0])])
        return boxes, class_names
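The detector reports corner coordinates (x1, y1, x2, y2), while the trackers are initialized with (x, y, w, h), which is why the conversion above is needed. The helper below isolates that conversion as a sketch. The name `xyxy_to_xywh` is hypothetical, and clamping the box to the frame is an added precaution assumed here, not something the original code does.

```python
def xyxy_to_xywh(x1, y1, x2, y2, frame_w, frame_h):
    """Convert corner coordinates to an integer (x, y, w, h) box inside the frame."""
    # Clamp both corners to the image so the box never extends past the frame.
    x1 = min(max(int(x1), 0), frame_w - 1)
    y1 = min(max(int(y1), 0), frame_h - 1)
    x2 = min(max(int(x2), 0), frame_w)
    y2 = min(max(int(y2), 0), frame_h)
    return x1, y1, max(x2 - x1, 0), max(y2 - y1, 0)


print(xyxy_to_xywh(10.7, 20.2, 110.9, 220.4, 640, 480))
print(xyxy_to_xywh(-5.0, 400.0, 700.0, 500.0, 640, 480))
```

The second call shows a detection that spills past the frame edges being trimmed to the visible region.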

A helper checks whether the mouse click falls inside a given bounding box:

    def point_in_box(x, y, box):
        bx, by, bw, bh = box
        return bx <= x <= bx + bw and by <= y <= by + bh
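When boxes overlap, a click can fall inside more than one of them, and taking the first match may pick a large background object. One possible refinement, shown here only as a sketch, is to prefer the smallest box that contains the click. `pick_box_at` is a hypothetical helper, and the smallest-area rule is an assumption about what the user most likely meant.

```python
def point_in_box(x, y, box):
    bx, by, bw, bh = box
    return bx <= x <= bx + bw and by <= y <= by + bh


def pick_box_at(x, y, boxes):
    """Return the index of the smallest-area box containing (x, y), or None."""
    hits = [i for i, box in enumerate(boxes) if point_in_box(x, y, box)]
    if not hits:
        return None
    return min(hits, key=lambda i: boxes[i][2] * boxes[i][3])


boxes = [(0, 0, 400, 300), (100, 100, 50, 80)]  # a large box enclosing a small one
print(pick_box_at(120, 150, boxes))  # click inside both boxes
print(pick_box_at(10, 10, boxes))    # click inside the large box only
print(pick_box_at(500, 500, boxes))  # click outside every box
```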

Main Program Logic

The main function coordinates the workflow of the whole system:

    def main():
        global selected_box, tracking, target_class, mouse_clicked, debug
        args = parse_args()
        debug = args.debug

        # Verify that the requested tracker can be created
        test_tracker = create_tracker(args.tracker)
        if test_tracker is None:
            return
        del test_tracker

        # Load the YOLO model
        try:
            model = YOLO(args.model)
        except Exception as e:
            print(f"Failed to load YOLO model: {e}")
            return

        # Open the video source; a digit string such as "0" selects a camera
        source = int(args.video) if str(args.video).isdigit() else args.video
        cap = cv2.VideoCapture(source)
        if not cap.isOpened():
            print(f"Cannot open video source: {args.video}")
            return

        # Create the window and register the mouse callback
        window_name = "Tracker"
        cv2.namedWindow(window_name)
        cv2.setMouseCallback(window_name, mouse_callback)

        tracker = None
        font = cv2.FONT_HERSHEY_SIMPLEX

        while True:
            start = cv2.getTickCount()
            ret, frame = cap.read()
            if not ret:
                print("End of video")
                break

            # Run detection only in detection mode; while tracking,
            # the tracker alone updates the target position
            boxes, class_names = [], []
            if not tracking:
                boxes, class_names = detect_objects(frame, model, args.confidence)

            # Handle a click that selects a target
            if mouse_clicked:
                if not tracking:
                    for box, cls in zip(boxes, class_names):
                        if point_in_box(click_x, click_y, box):
                            selected_box = box
                            target_class = cls
                            tracker = create_tracker(args.tracker)
                            if tracker:
                                tracker.init(frame, selected_box)
                                tracking = True
                                print(f"Tracking {target_class} with {args.tracker}")
                            break
                mouse_clicked = False  # discard the click once it has been handled

            # Tracking logic
            if tracking and tracker:
                ok, bbox = tracker.update(frame)
                if ok:
                    x, y, w, h = [int(v) for v in bbox]
                    cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
                    cv2.putText(frame, f"Track: {target_class}", (x, y - 10),
                                font, 0.5, (0, 255, 0), 2)
                else:
                    # Drawn below the hint line so the two texts do not overlap
                    cv2.putText(frame, "Lost", (10, 60), font, 0.7, (0, 0, 255), 2)
                    tracking = False

            # Show all detections when not tracking
            if not tracking:
                for box, cls in zip(boxes, class_names):
                    x, y, w, h = box
                    cv2.rectangle(frame, (x, y), (x + w, y + h), (255, 0, 0), 2)
                    cv2.putText(frame, cls, (x, y - 10), font, 0.5, (255, 0, 0), 2)

            # Measure the processing rate of this frame; CAP_PROP_FPS would only
            # report the nominal frame rate of the source
            elapsed = (cv2.getTickCount() - start) / cv2.getTickFrequency()
            fps = 1.0 / elapsed if elapsed > 0 else 0.0

            # Draw the hint and status text
            hint = "Right click to stop" if tracking else "Left click to select"
            cv2.putText(frame, hint, (10, 30), font, 0.7, (0, 255, 255), 2)
            cv2.putText(frame, f"Tracker: {args.tracker}", (10, frame.shape[0] - 30),
                        font, 0.5, (255, 255, 0), 2)
            cv2.putText(frame, f"FPS: {int(fps)}",
                        (10, frame.shape[0] - 10), font, 0.5, (255, 255, 0), 2)

            # Show the frame
            cv2.imshow(window_name, frame)

            # Exit condition
            if cv2.waitKey(1) & 0xFF == ord('q'):
                break

        cap.release()
        cv2.destroyAllWindows()
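An FPS overlay is most useful when it reflects how fast the loop itself runs and does not jump around from frame to frame. The sketch below shows one way to get a steadier readout with an exponential moving average. `FpsMeter` and its `smoothing` parameter are hypothetical and not part of the article's code; the optional `now` argument exists only so the class can be exercised with synthetic timestamps.

```python
import time


class FpsMeter:
    """Exponentially smoothed frames-per-second estimate."""

    def __init__(self, smoothing=0.9):
        self.smoothing = smoothing  # weight given to the previous estimate
        self.last_time = None
        self.fps = 0.0

    def tick(self, now=None):
        """Register one processed frame and return the current estimate."""
        now = time.perf_counter() if now is None else now
        if self.last_time is not None:
            dt = now - self.last_time
            if dt > 0:
                instant = 1.0 / dt
                # The first measurement seeds the average; later ones are blended in.
                if self.fps == 0.0:
                    self.fps = instant
                else:
                    self.fps = self.smoothing * self.fps + (1 - self.smoothing) * instant
        self.last_time = now
        return self.fps


meter = FpsMeter()
for t in (0.00, 0.05, 0.10, 0.15):  # synthetic timestamps, 50 ms apart
    fps = meter.tick(now=t)
print(round(fps))
```

In the main loop, `meter.tick()` would be called once per frame with no argument, and the returned value drawn with `cv2.putText`.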

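Usage

Environment Setup

First install the required dependencies:

    pip install opencv-contrib-python ultralytics numpy

opencv-contrib-python already includes the main OpenCV modules and provides the cv2.legacy trackers, so opencv-python is not listed separately; the OpenCV packaging notes advise against installing both in the same environment. If cv2.legacy is missing afterwards, check whether another OpenCV wheel (for example one pulled in as a dependency) has overwritten the contrib build.

Command-Line Arguments

The program accepts the following command-line arguments:

- --video: path to the video source, default "xxxxx.mp4"; use "0" to open the camera

- --confidence: confidence threshold for detection, default 0.5

- --model: path to the YOLO model, default "yolo12n.pt" (downloaded automatically)

- --tracker: tracking algorithm, one of "mosse", "csrt", or "kcf"; default "mosse"

- --debug: enable debug mode and print extra information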
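The main function calls `parse_args()`, which the article does not list. A minimal sketch consistent with the arguments described above might look like the following. The help strings, the `argv` parameter, and the `video_source` helper are assumptions added for illustration, and the elided default video path is kept exactly as the article gives it.

```python
import argparse


def parse_args(argv=None):
    """Build the command-line interface described above."""
    parser = argparse.ArgumentParser(description="Click-to-track demo")
    # The article elides the default video path, so the placeholder is kept as-is.
    parser.add_argument("--video", default="xxxxx.mp4",
                        help='video path, or "0" for the default camera')
    parser.add_argument("--confidence", type=float, default=0.5,
                        help="detection confidence threshold")
    parser.add_argument("--model", default="yolo12n.pt",
                        help="path to the YOLO weights")
    parser.add_argument("--tracker", choices=["mosse", "csrt", "kcf"],
                        default="mosse", help="tracking algorithm")
    parser.add_argument("--debug", action="store_true",
                        help="print extra information")
    return parser.parse_args(argv)


def video_source(value):
    """Map a digit string such as "0" to a camera index; keep file paths unchanged."""
    return int(value) if value.isdigit() else value


args = parse_args(["--tracker", "csrt", "--video", "0"])
print(args.tracker, args.confidence, repr(video_source(args.video)))
```

Using `choices` rejects an unknown tracker name at parse time, which is stricter than the MOSSE fallback in `create_tracker`; either behavior is defensible. The integer conversion matters because `cv2.VideoCapture` treats a string as a file name or URL and an integer as a camera index.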

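Operating Guide

- After launch, the system starts in detection mode and marks every detected object with a blue box

- Left-click a bounding box and the system starts tracking that target with a green box

- Right-click to stop tracking and return to detection mode

- Press "q" to quit

Comparison of the Algorithms

Each of the three tracking algorithms has its own characteristics:

- MOSSE: the fastest, suited to scenarios with strict real-time requirements, but with comparatively lower accuracy

- CSRT: the most accurate, but slower, suited to scenarios where accuracy matters most

- KCF: sits between the other two and balances speed and accuracy

Choosing the algorithm that fits the application scenario gives better results.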

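Summary and Extensions

The tracking system described in this article combines the strong detection capability of YOLO with the efficiency of classical tracking algorithms, and provides flexible target tracking through simple interaction. It can be extended further, for example by:

- Adding multi-object tracking

- Using ReID (re-identification) to handle target occlusion

- Adding a module for analyzing target behavior

- Improving automatic re-detection after a tracking failure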
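As an illustration of the last item, one simple way to re-acquire a lost target is to compare the last known box with fresh detections and pick the same-class detection with the highest overlap. This is only a sketch of that idea: `iou`, `reacquire`, and the `min_iou=0.3` threshold are assumptions introduced here, not part of the system described above.

```python
def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def reacquire(last_box, last_class, boxes, class_names, min_iou=0.3):
    """Return the index of the best same-class detection overlapping last_box, or None."""
    best_index, best_score = None, min_iou
    for i, (box, cls) in enumerate(zip(boxes, class_names)):
        score = iou(last_box, box)
        if cls == last_class and score >= best_score:
            best_index, best_score = i, score
    return best_index


last_box = (100, 100, 50, 50)
boxes = [(300, 300, 40, 40), (110, 105, 50, 50), (105, 100, 50, 50)]
class_names = ["person", "person", "car"]
print(reacquire(last_box, "person", boxes, class_names))
```

If a match is found, the tracker could be re-initialized on that box; if not, the system would stay in detection mode and wait for a new click.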

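This system offers a quick route to a practical tracking application, and it also shows how object detection and tracking can be combined, which lays the groundwork for building more complex computer vision systems.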
