數據中心細節

定義不平衡數據 (Definition Imbalanced Data)

When we speak of imbalanced data, what we mean is that at least one class is underrepresented. For example, when considering the problem of building a classifier, let’s call it the Idealisstic-Voter. We give it the task of identifying politicians that the American public finds trustworthy. When looking at the dataset, we realize that almost every sample is hugely unpopular.

當我們談到數據不平衡時,我們的意思是說至少有一個 類別 的代表性不足 。 例如,在考慮構建分類器的問題時,我們稱其為Idealisstic-Voter。 我們的任務是確定美國公眾認為值得信賴的政治人物。 當查看數據集時,我們意識到幾乎每個樣本都不受歡迎。

In classifying terminology we call the classes with very few samples the minority-classes and the ones with many the majority-classes.

在對術語進行分類時,我們將很少樣本的類別稱為少數類別 ,而將多數樣本稱為多數類別 。

那么問題是什么呢? (So what is the issue?)

Let’s assume there are 100 politicians, and only one of them is considered trustworthy by the broad public; let’s call him Abraham. We could now quickly build an Idealisstic-Voter. That achieves a Precision of 99% by simply saying “all politicians are liars,” i.e. classifying all politicians as untrustworthy. The loss will be low, and we can make an excellent presentation about how great our Idealisstic-Voter scores on the most common metrics.

假設有100位政治人物,而其中只有一位被廣大公眾信任。 我們叫他亞伯拉罕。 現在,我們可以快速構建理想選民。 只需說“所有政客都是騙子”,即將所有政客歸類為不信任,即可達到99%的精確度。 損失將很小,我們可以就最常見的指標上的Idealisstic-Voter得分有多出色做一個很好的介紹。

PRECISION = TP / (TP+FP) = 99%

ACCURACY = TP / (TP + FN) = 99 %

F1 = (2*PRECISION*RECALL)/(PRECISION+RECALL) = 99.5 %But that is not the goal! What we want is to split the good from the bad, and we really did not split anything. So how can we detect that we have a problem? That we did injustice to Abraham, and we all know he does not like injustices …

但這不是目標! 我們想要的是將好事與壞事分開,而我們實際上并沒有將任何事情分開。 那么,如何檢測到我們有問題呢? 我們對亞伯拉罕造成了不公正,我們都知道他不喜歡不公正……

平衡指標 (Balanced Metrics)

Whenever you are working with imbalanced data, make it a habit to also look at the balanced metrics. They do the same as the ones you are familiar with, but additionally, they take the average over all classes.

每當您使用不平衡的數據時,請養成也要注意平衡的指標的習慣。 它們的作用與您熟悉的方法相同,但此外,它們取所有類的平均值。

BALANCED_PRECISION=(P_TRUSTWORTHY + P_UNTRUSTWORTHY)/2=50%Another metric that works very nicely in this context is the False Positive Rate in comparison with the True Positive Rate. In the multilabel case, you will mostly opt for some macro-averaged score.

在這種情況下,另一個非常有效的指標是誤報率與誤報率。 在多標簽的情況下,您通常會選擇一些宏觀平均得分。

We realized that we have a problem, how can we solve it?

我們意識到自己有問題,如何解決?

上采樣和下采樣 (Upsampling and Downsampling)

Probably the simplest way you can use to make the Idealisstic-Voter solve the problem by himself is by presenting him with more reasonable options. This would mean either collecting more data or using the lovely Upsampling technique.

也許使您的Idealisstic-Voter自己解決問題的最簡單方法就是為他提供更合理的選擇。 這意味著要么收集更多數據,要么使用可愛的升采樣技術。

This technique works such that we clone our beloved Abraham, meaning that we duplicate the members of the minority class. Once we balanced the dataset to a sufficient degree, we will notice that our model has actually to learn something to attain a good score!

這種技術的工作原理是我們克隆了我們心愛的亞伯拉罕,這意味著我們復制了少數群體的成員。 一旦我們將數據集平衡到足夠的程度,我們就會注意到我們的模型實際上必須學習一些東西才能獲得高分!

下采樣 (Downsampling)

The opposite of Upsampling is Downsampling, aka Undersampling. This technique is great if you have more than enough data to do your job (Which never happened to me, but I assume the big data collectors of this world may sometimes be in this position).

上采樣的相反是下采樣,也就是欠采樣。 如果您有足夠多的數據來完成工作,則此技術非常有用(這對我而言從未發生過,但我認為這個世界的大數據收集者有時可能會處于這個位置)。

In this case, you can eliminate some of the instances of the majority class. You don’t have to go 50–50, but enough to make the Idealisstic-Voter motivated to learn something. The same holds for all of the techniques we are discussing today.

在這種情況下,您可以消除多數類的某些實例。 您不必走50–50,但足以使Idealisstic-Voter有動力學習一些東西。 我們今天討論的所有技術都一樣。

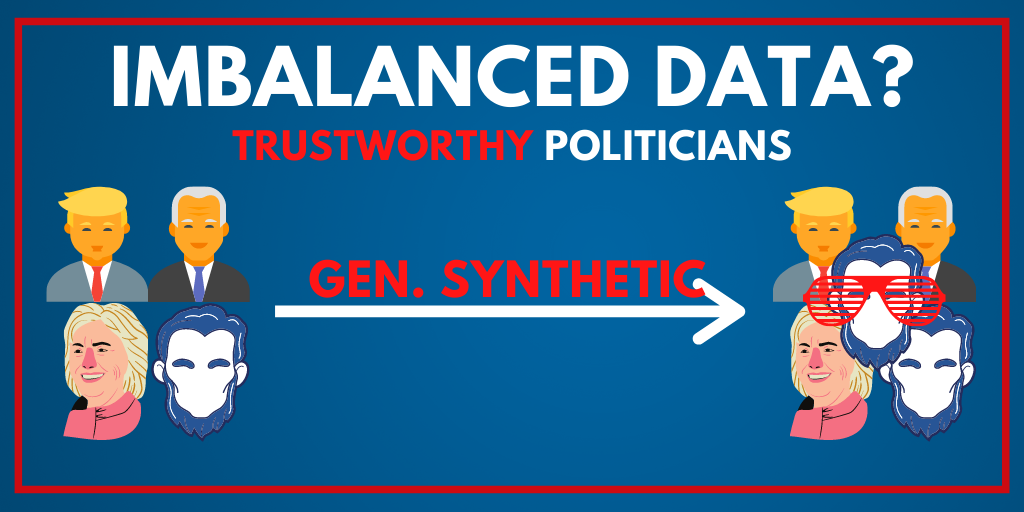

生成合成樣本 (Generating Synthetic Samples)

Ok, now we are getting into the cool things. How about we generate data out of thin air? How about we add some Abrahams with cool techno glasses? He’d surely be just as trustworthy and additionally win the Youth Vote!

好的,現在我們進入了很酷的事情。 我們如何憑空生成數據呢? 我們如何添加一些帶有酷炫技術眼鏡的亞伯拉罕呢? 他肯定會同樣值得信賴,并且還會贏得青年投票!

加一點?ps (Adding a little bit of ?ps)

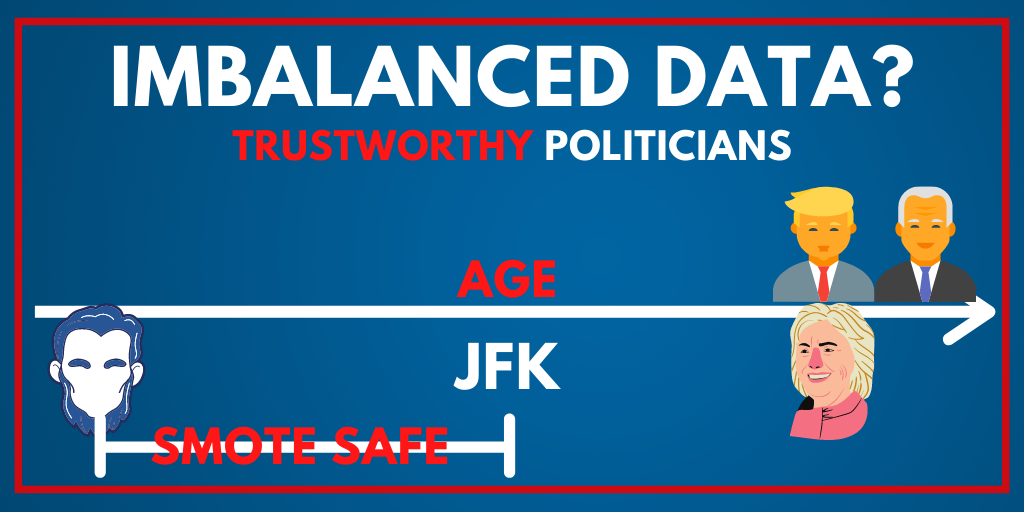

Let’s say our dataset has the feature age. We can clearly see that the only trustworthy politicians are 2020–1809 = 211 years old, 1809 being Abraham’s birth year. But what about politicians that have all the same accomplishments but are 211.001 and 210.999 years old?

假設我們的數據集具有特征年齡。 我們可以清楚地看到,唯一值得信賴的政客是2020- 1809年= 211歲,而1809年是亞伯拉罕的出生年。 但是,具有相同成就但分別具有211.001和210.999歲的政治家呢?

Probably the same! But be extremely careful when using this technique since you are effectively falsifying your data. This technique can easily backfire, and you should only use it when you are very confident that it is the right choice. But this brings us to a slightly more elaborate and automated technique.

大概一樣! 但是,使用此技術時要格外小心,因為您實際上是在篡改數據。 此技術很容易適得其反,只有在非常確信它是正確的選擇時,才應使用它。 但這將我們帶入了一種更加精細和自動化的技術。

冒煙 (SMOTE)

SMOTE stands for Synthetic Minority Over-sampling Technique. It constructs new samples by carefully observing the attributes of all samples. It then proceeds to alter the values just so that they stay within the range observed in our minority-class. Sounds complicated, let’s look at a simple example, and it should become as clear as your mouse clicks on the clap. Let’s assume we add JFK to the dataset and look again at the age of trustworthy politicians.

SMOTE代表合成少數族裔過采樣技術。 它通過仔細觀察所有樣本的屬性來構造新樣本。 然后,它繼續更改這些值,以使它們保持在我們的少數群體觀察到的范圍內。 聽起來很復雜,讓我們看一個簡單的示例,它應該就像您的鼠標單擊拍手一樣清晰。 假設我們將JFK添加到數據集中,然后再次查看可信賴的政治人物的年齡。

As we can see, the area indicated as SMOTE SAFE is the area in which we can freely generate new synthetic samples! You can find a more in-depth explanation here. It is fascinating, but I think it is most important to know that such methods exist.

如我們所見,標為“ SMOTE SAFE”的區域是我們可以自由生成新的合成樣本的區域! 您可以在這里找到更深入的解釋。 令人著迷,但是我認為最重要的是要知道這種方法的存在。

發揮創意 (Get Creative)

We can find some incredible examples of this technique in the autonomous driving scene. While all the major competitors in this field have collected several petabytes of data, conditions such as kids running in front of cars should be tested before actually encountering them. These cases may also happen so rarely that you will never find enough training data.

我們可以在自動駕駛場景中找到這種技術的一些令人難以置信的例子。 盡管該領域的所有主要競爭對手都收集了數PB的數據,但在實際遇到汽車前奔跑的孩子等情況之前,應先進行測試。 這些情況可能也很少發生,以至于您永遠找不到足夠的培訓數據。

Generating Synthetic data to test such and various other edge cases under many different lighting and weather conditions is a must. One often uses GAN’s or similar networks to convert already existing samples into various other forms, e.g., in winter or when raining. There are multiple ways of how this can be done. A short video that illustrates this can be seen here (I am not affiliated with them nor know how good they are, but they have an excellent short video ;)

必須生成合成數據以測試在許多不同的光照和天氣條件下的此類以及其他各種邊緣情況。 人們經常使用GAN或類似的網絡將已經存在的樣本轉換為其他各種形式,例如在冬天或下雨天。 有多種方法可以做到這一點。 可以在此處看到一個簡短的視頻來說明這一點(我不隸屬于他們,也不知道他們有多棒,但是他們有一個很棒的短視頻;)

更改損失函數 (Change The Loss Function)

But we do have one more thing! What if I told you that instead of dealing with the problem at the data level, we could deal with it at the heart of our algorithm, the loss function.

但是,我們還有一件事! 如果我告訴您,我們可以在算法的核心(損失函數)處處理問題,而不是在數據級別處理問題。

Over the years, many approaches have been proposed, and there truly is no one size fits all. It quite often depends heavily on how important it is to identify the minority-class, and what kind of costs occur when you misclassify a sample for the majority class.

多年以來,已經提出了許多方法,并且確實沒有一種方法能適合所有情況。 通常,這在很大程度上取決于識別少數群體的重要性,以及當您對多數群體的樣本進行錯誤分類時會產生何種成本。

Weighted Loss Function, Define It Yourself

加權損失函數,自己定義

One way of mixing such a loss is simply by multiplying the terms in which a minority-class occurs with a constant>1. What this effectively does is encourage the model to pay more attention to the instances of the minority class. In Keras this is built in an can be applied without additional hassle

混合這種損失的一種方法就是簡單地將出現少數類的項與常數> 1相乘。 這實際上是在鼓勵模型更加關注少數群體的情況。 在Keras中,這是內置的,可以輕松應用

class_weights = {0: 1.,

1: 50.}

model.fit(X_train, Y_train,class_weights=class_weights)In this particular case, it tells Keras that it should treat one occurrence of class 1 as 50 instances of class 0. In some easy python pseudo-code, we could think of it as something like this

在這種特殊情況下,它告訴Keras,應將出現的1類事件視為50個0類實例。在一些簡單的python偽代碼中,我們可以將其視為這樣的事情

def loss_weighted(x,y_true):y_pred=model.forward(x)

if y_true==1:

return 50*loss(y_pred,y_true)

elif y_true==0:

return 1*loss(y_pred,y_true)類平衡損失 (Class Balanced Loss)

As the amount of classes becomes bigger, it is tedious to defined things explicitly, as we did above. For this, a variety of solutions have been proposed, and one of the most notable works is “Class-Balanced Loss Based on Effective Number of Samples.”

隨著類數量的增加,像我們上面所做的那樣,顯式定義事物很繁瑣。 為此,提出了多種解決方案,其中最著名的作品之一是“ 基于有效樣本數的類平衡損失 ”。

What they effectively proposed is reweighting the loss function. The authors do this in such a way that the effective amount of samples per class are taken into account. To control on what side of the balancing the loss ends up, one can additionally tune it with a hyperparameter.

他們有效建議的是對損失函數進行加權。 作者這樣做的方式是考慮到每類樣本的有效量。 為了控制損耗的平衡方向,可以另外使用超參數對其進行調諧。

While this is a very simplistic view of their process, I feel that it grasped the most important aspects to keep in your memory. If you ever encounter a case where you have a lot of classes and significant imbalances between the classes, make sure to give this approach a go.

盡管這是他們過程的非常簡單的視圖,但我認為它掌握了最重要的方面,可以保留在您的記憶中。 如果遇到類別過多且類別之間存在嚴重不平衡的情況,請確保采取這種方法。

結論 (Conclusion)

Congratulations, you know now what you can do when you encounter imbalanced data. Several approaches exist, and choosing the right one for your problem may not always be an easy task. It will heavily depend on your business needs to decide how much time and resources you want to put into balancing things more fairly. Being aware of potential solutions is the first step to solving them!

恭喜,您現在知道遇到不平衡數據時可以做什么。 存在幾種方法,為您的問題選擇正確的方法可能并不總是一件容易的事。 這將在很大程度上取決于您的業務需求,以決定您要花費多少時間和資源來更公平地平衡事務。 意識到潛在的解決方案是解決它們的第一步!

翻譯自: https://towardsdatascience.com/imbalanced-data-when-details-matter-16bd3ec7ef74

數據中心細節

本文來自互聯網用戶投稿,該文觀點僅代表作者本人,不代表本站立場。本站僅提供信息存儲空間服務,不擁有所有權,不承擔相關法律責任。 如若轉載,請注明出處:http://www.pswp.cn/news/389940.shtml 繁體地址,請注明出處:http://hk.pswp.cn/news/389940.shtml 英文地址,請注明出處:http://en.pswp.cn/news/389940.shtml

如若內容造成侵權/違法違規/事實不符,請聯系多彩編程網進行投訴反饋email:809451989@qq.com,一經查實,立即刪除!

)