Understanding Linear Regression

Let’s say you’re looking to buy a new PC from an online store (and you’re most interested in how much RAM it has). On the first page you see some PCs with 4 GB at $100 and some with 16 GB at $1000. Your budget is $500. Given the prices you have seen so far, you estimate in your head that a PC with 8 GB of RAM should cost around $400. This fits your budget, so you decide to buy a PC with 8 GB of RAM.

This kind of estimation can happen almost automatically in your head, without knowing that it’s called linear regression and without explicitly computing a regression equation (in our case: y = 75x - 200).
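
The mental estimate above can be reproduced in a few lines. This is only a minimal sketch: the names `low`, `high`, and `estimate_price` are made up for illustration, and the two points are the prices quoted in the example.

```python
# Two listings from the store's first page: (RAM in GB, price in USD).
low = (4, 100)
high = (16, 1000)

# Slope and intercept of the straight line through the two points.
slope = (high[1] - low[1]) / (high[0] - low[0])
intercept = low[1] - slope * low[0]


def estimate_price(ram_gb):
    """Estimate the price of a PC from its RAM using the fitted line."""
    return slope * ram_gb + intercept


print(slope, intercept)   # 75.0 -200.0
print(estimate_price(8))  # 400.0
```

The slope and intercept match the regression equation y = 75x - 200, and the estimate for 8 GB is the $400 from the example.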

So, what is linear regression?

I will attempt to answer this question simply:

Linear regression is just the process of estimating an unknown quantity based on some known ones (this is the regression part) with the condition that the unknown quantity can be obtained from the known ones by using only 2 operations: scalar multiplication and addition (this is the linear part). We multiply each known quantity by some number, and then we add all those terms to obtain an estimate of the unknown one.

It may seem a little complicated when it is described in formal mathematical notation or in code but, in fact, you probably already knew the simple estimation process described above long before you heard about machine learning. You just didn’t know that it is called linear regression.

Now, let’s dive into the math behind linear regression.

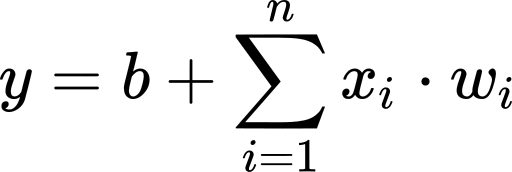

In linear regression, we obtain an estimate of the unknown variable (denoted by y; the output of our model) by computing a weighted sum of our known variables (denoted by $x_i$; the inputs) to which we add a bias term:

$$y = w_1 x_1 + w_2 x_2 + \dots + w_n x_n + b$$

Where n is the number of input variables we have.

Adding a bias is the same thing as imagining we have an extra input variable that’s always 1 and using only the weights. We will consider this case to make the math notation a little easier:

$$y = \sum_{i=0}^{n} w_i x_i$$

Where $x_0$ is always 1, and $w_0$ is our previous b.
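
As a quick check of the bias trick, the sketch below computes the same estimate both ways. The numeric values of `x`, `w`, and `b` are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical values for one data point with n = 3 input variables.
x = np.array([2.0, -1.0, 0.5])   # inputs x_1 .. x_n
w = np.array([0.4, 1.5, -2.0])   # weights w_1 .. w_n
b = 3.0                          # bias term

# Weighted sum plus an explicit bias.
y_with_bias = np.dot(w, x) + b

# Same computation with the bias folded in: x_0 = 1 and w_0 = b.
x_aug = np.concatenate(([1.0], x))
w_aug = np.concatenate(([b], w))
y_folded = np.dot(w_aug, x_aug)

print(y_with_bias, y_folded)
```

Both values agree up to floating-point rounding, which is why the rest of the notation can drop the separate bias term.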

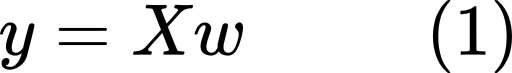

To simplify further, we will transition from the above sum notation to matrix notation. The weighted sum in the equation above is equivalent to the multiplication of a row-vector of all the input variables with a column-vector of all the weights. That is:

$$y = \begin{bmatrix} x_0 & x_1 & \cdots & x_n \end{bmatrix} \begin{bmatrix} w_0 \\ w_1 \\ \vdots \\ w_n \end{bmatrix}$$

The equation above is for just one data point. If we want to compute the outputs of more data points at once, we can stack the input rows into one matrix, which we will denote by X. The weights vector remains the same for all those different input rows, and we will denote it by w. From now on, y denotes a column-vector with all the outputs instead of just a single value. This new equation, the matrix form, is given below:

$$y = Xw \tag{1}$$

Given an input matrix X and a weights vector w, we can easily compute y using the formula above. The input values are assumed to be known, or at least to be easy to obtain.
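
To make the matrix form concrete, here is a small sketch that reuses the weights from the introductory PC example (bias -200, slope 75). The three RAM sizes in `X` are chosen only for illustration.

```python
import numpy as np

# Each row is one data point; the first column is the constant x_0 = 1.
# The second column is the RAM size in GB (values chosen for illustration).
X = np.array([
    [1.0, 4.0],
    [1.0, 8.0],
    [1.0, 16.0],
])

# Weights from the introductory example: w_0 = -200 (bias), w_1 = 75.
w = np.array([-200.0, 75.0])

# One matrix-vector product gives the estimates for all rows at once.
y = X @ w
print(y.tolist())  # [100.0, 400.0, 1000.0]
```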

But the problem is: How do we obtain the weights vector?

We will learn them from examples. To learn the weights, we need a dataset in which we know both x and y values, and based on those we will find the weights vector.

If we have exactly the minimum number of data points required to define our regression line (one more than the number of inputs), then X is a square matrix and, provided it is non-singular, we can simply solve equation (1) for w:

$$w = X^{-1} y$$
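
For the PC example there is one input (RAM) and two data points, so X is a 2 × 2 matrix once the constant column is added. The sketch below uses `np.linalg.solve` rather than computing the inverse explicitly, which is a choice made here for numerical convenience, not something the article prescribes.

```python
import numpy as np

# Two data points for one input variable (plus the constant x_0 = 1),
# so X is square: 2 rows, 2 columns.
X = np.array([
    [1.0, 4.0],    # 4 GB
    [1.0, 16.0],   # 16 GB
])
y = np.array([100.0, 1000.0])  # prices in USD

# Solve X w = y without forming the inverse of X explicitly.
w = np.linalg.solve(X, y)
print(w)
```

Up to rounding, the result is a bias of -200 and a slope of 75, the same regression equation as in the introduction.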

We call this thing a regression line, but it is actually a line only for 1 input. For 2 inputs it will be a plane, for 3 inputs it will be some kind of “3D plane” (a hyperplane), and so on.

Most of the time, the requirement for the solution above will not hold: we will have more data points than the minimum, and they will not perfectly fit a line. There will be some random noise around our regression line, and we will not be able to obtain an exact solution for w. However, we will try to obtain the best possible solution for w so that the error is minimal.

If equation (1) doesn’t have a solution, this means that y doesn’t belong to the column space of X. So, instead of y, we will use the projection of y onto the column space of X. This is the closest vector to y that also belongs to the column space of X. If we multiply (on the left) both sides of eq. (1) by the transpose of X, we will get an equation in which this projection is considered:

$$X^T X w = X^T y$$

$$w = (X^T X)^{-1} X^T y$$

You can find out more about the linear algebra approach of solving this problem in this lecture by Gilbert Strang from MIT.

Although this solution imposes fewer restrictions on X than our previous one, there are some cases in which it still doesn’t work; we will see more about this issue below.
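
The projection argument can be checked numerically. The sketch below is an illustration on synthetic data (an assumption, not data from the article): points scattered around the line y = 75x - 200. It solves $X^T X w = X^T y$ and then verifies that the residual is orthogonal to the columns of X, which is what it means for Xw to be the projection of y.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): 50 points scattered
# around the line y = 75x - 200 with Gaussian noise.
ram = rng.uniform(4.0, 16.0, size=50)
X = np.column_stack([np.ones_like(ram), ram])
y = 75.0 * ram - 200.0 + rng.normal(0.0, 20.0, size=50)

# Solve (X^T X) w = X^T y for the weights.
w = np.linalg.solve(X.T @ X, X.T @ y)

# X w is the projection of y onto the column space of X, so the residual
# should be orthogonal to every column of X (up to rounding error).
residual = y - X @ w
print(w)
print(X.T @ residual)
```

The recovered weights land near the bias and slope used to generate the data, and the second printed vector is numerically zero.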

Another way to get a solution for w is by using calculus. The main idea is to define an error function, then use calculus to find the weights that minimize this error function.

We will define a function f that takes as input a weights vector and gives us the squared error these weights will generate on our linear regression problem. This function simply looks at the difference between each true y from our dataset and the estimated y of the regression model, then squares all these differences and adds them up. In matrix notation, this function can be written as:

$$f(w) = (y - Xw)^T (y - Xw) = y^T y - 2 w^T X^T y + w^T X^T X w$$

If this function has a minimum, it should be at one of the critical points (the points where the gradient $\nabla f$ is 0). So, let’s find the critical points. If you’re not familiar with matrix differentiation, you can have a look at this Wikipedia article.

We start by computing the gradient:

$$\nabla f(w) = -2 X^T y + 2 X^T X w$$

Then we set it equal to 0, and solve for w:

$$-2 X^T y + 2 X^T X w = 0$$

$$X^T X w = X^T y$$

$$w = (X^T X)^{-1} X^T y$$

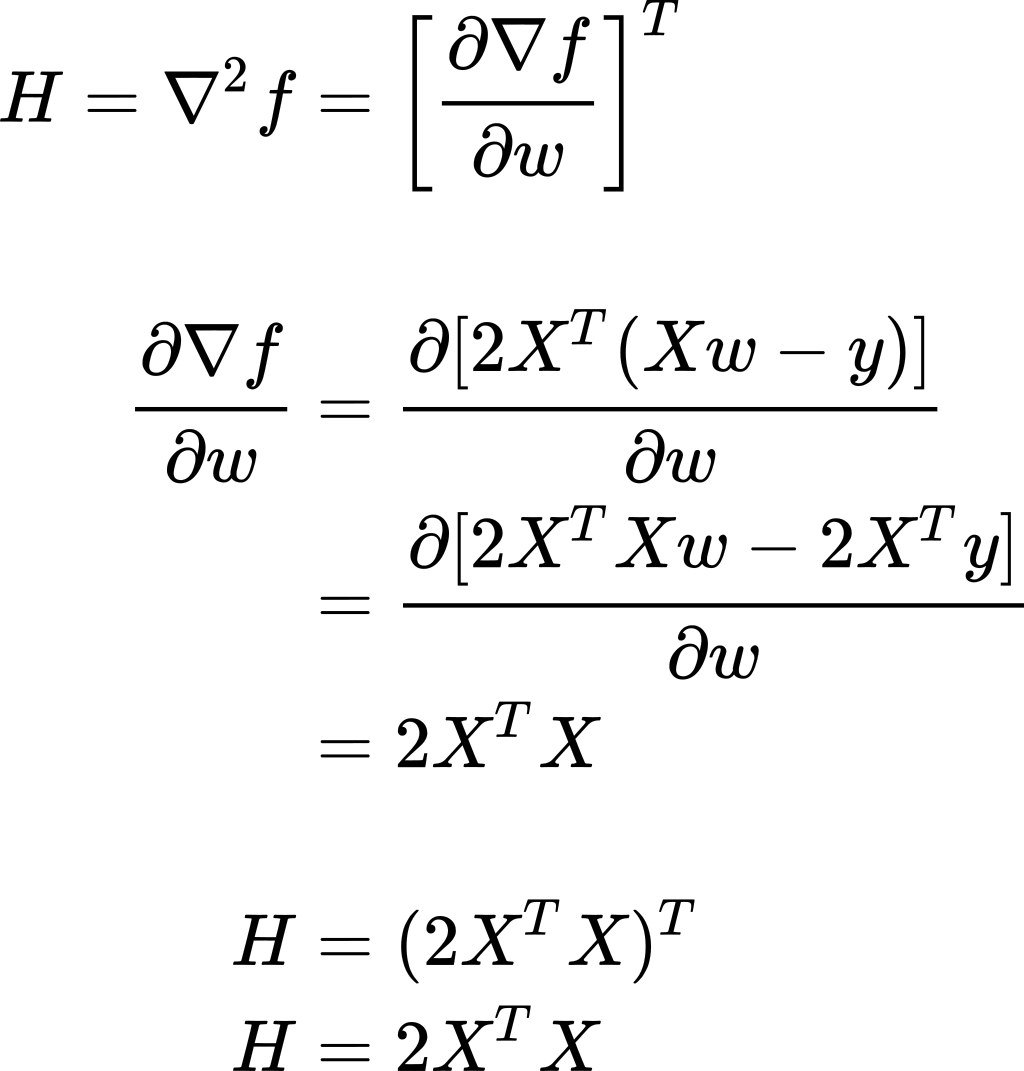

We got one critical point. Now we should figure out whether it is a minimum or a maximum point. To do so, we will compute the Hessian matrix and establish the convexity/concavity of the function f:

$$H = \nabla^2 f(w) = 2 X^T X$$

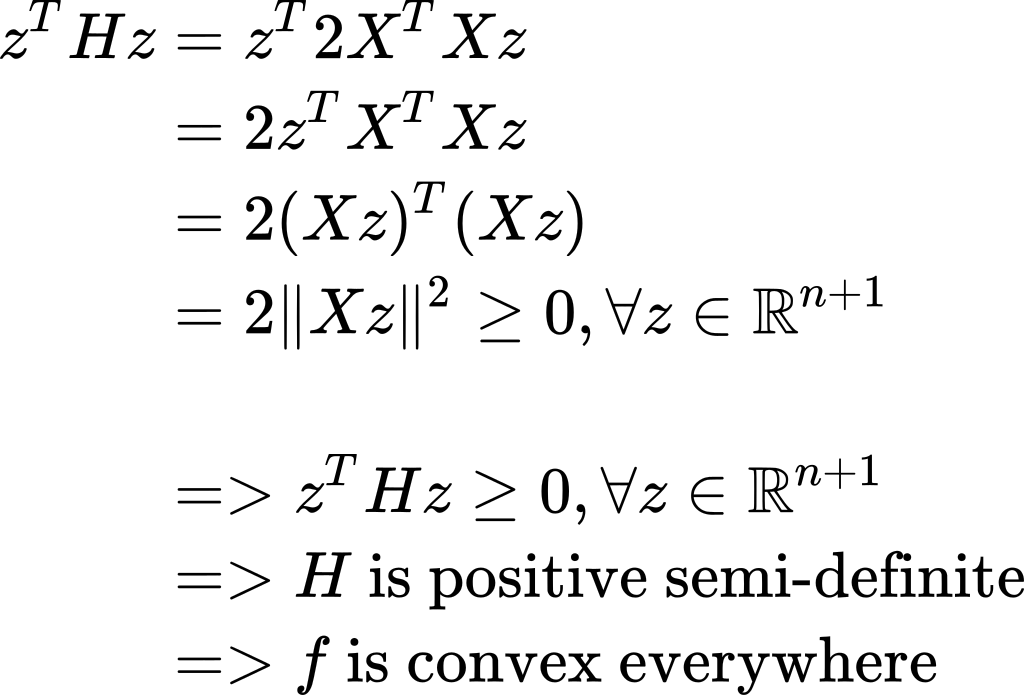

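Now, what can we observe about H? If we take any real-valued vector z and multiply H by $z^T$ on the left and by z on the right, we will get:

$$z^T H z = 2 z^T X^T X z = 2 (Xz)^T (Xz) = 2 \lVert Xz \rVert^2 \ge 0$$

This quantity is never negative, so H is positive semi-definite, which means that f is convex.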

Because f is convex, the solution for w found above is a minimum point, which is exactly what we were looking for.
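
The calculus argument can also be sanity-checked numerically. The sketch below uses random data (hypothetical, for illustration only) and helper names `f` and `grad_f` that are introduced here. It checks that the gradient vanishes at the closed-form solution and that the Hessian $2 X^T X$ has no negative eigenvalues.

```python
import numpy as np

rng = np.random.default_rng(1)

# Random full-column-rank problem (hypothetical data for illustration).
X = rng.normal(size=(20, 3))
y = rng.normal(size=20)


def f(w):
    """Sum of squared errors (y - Xw)^T (y - Xw)."""
    r = y - X @ w
    return r @ r


def grad_f(w):
    """Gradient of f: -2 X^T y + 2 X^T X w."""
    return -2.0 * X.T @ y + 2.0 * X.T @ X @ w


w_star = np.linalg.solve(X.T @ X, X.T @ y)   # critical point
H = 2.0 * X.T @ X                            # Hessian of f

print(grad_f(w_star))         # numerically zero
print(np.linalg.eigvalsh(H))  # eigenvalues of the Hessian
print(f(w_star) <= f(w_star + 0.1 * np.ones(3)))
```

Moving away from `w_star` in any direction should not decrease the error, which the last line illustrates for one arbitrary direction.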

As you probably noticed, we got the same solution for w by using both the previous linear algebra approach and this calculus way of finding the weights. We can think of it as either the solution of the matrix equation when we replace y by the projection of y onto the column space of X or the point that minimizes the sum of squared errors.

Does this solution always work? No.

It is less restrictive than the trivial solution $w = X^{-1} y$, in which we need X to be a square non-singular matrix, but it still needs some conditions to hold. We need $X^T X$ to be invertible, and for that X needs to have full column rank; that is, all its columns must be linearly independent. This condition is typically met if we have more rows than columns. But if we have fewer data examples than input variables, this condition cannot be true.

This requirement that X has full column rank is closely related to the convexity of f. If you look above at the little proof that f is convex, you can notice that, if X has full column rank, then X z cannot be the zero vector (assuming z ≠ 0), and this implies that H is positive definite, hence f is strictly convex. If f is strictly convex it can have only one minimum point, and this explains why this is the case in which we can have a closed-form solution.

On the other hand, if X doesn’t have full column rank, then there will be some z ≠ 0 for which X z = 0, and therefore f is convex but not strictly convex. This means that f may not have a single minimum point, but a valley of minimum points which are equally good, and our closed-form solution is not able to capture all of them. Visually, the case of an X without full column rank looks something like this in 3D:

A method that will give us a solution even in this scenario is Stochastic Gradient Descent (SGD). This is an iterative method that starts at a random point on the surface of the error function f and then, at each iteration, moves in the negative direction of the gradient $\nabla f$, towards the bottom of the valley.

This method will give us a result without needing any condition on X, provided the steps are small enough for the iterations to converge (even if it sometimes requires a large number of iterations to get to the bottom).

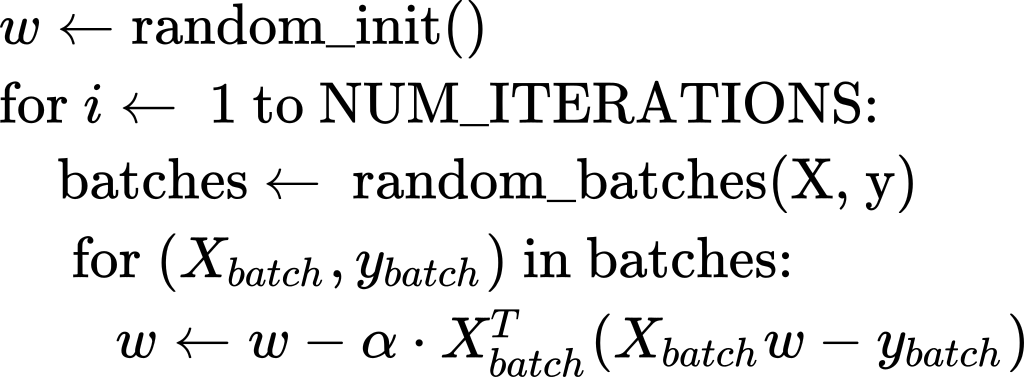

Also, to be more efficient computationally, it doesn’t use all the data at once. The rows of our data matrix X are split into batches. At each iteration, an update is done based only on one such batch.

When X doesn’t have full column rank, the solution will not be unique; among all those points in the “minimum valley”, SGD will give us only one, which depends on the random initialization and the randomization of the batches.
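
A tiny example can show what the “minimum valley” means. In the sketch below, the matrix `X` is deliberately built with a duplicated column (a hypothetical case chosen for illustration), so its columns are linearly dependent. Two different weight vectors then give exactly the same error.

```python
import numpy as np

# Hypothetical rank-deficient case: the third column duplicates the second,
# so the columns of X are linearly dependent.
X = np.array([
    [1.0, 4.0, 4.0],
    [1.0, 8.0, 8.0],
    [1.0, 16.0, 16.0],
    [1.0, 32.0, 32.0],
])
y = np.array([100.0, 400.0, 1000.0, 2200.0])

# The rank is below the number of columns (3).
print(np.linalg.matrix_rank(X))


def f(w):
    """Sum of squared errors for weights w."""
    r = y - X @ w
    return r @ r


# z is non-zero but X z = 0, so moving along z never changes the error.
z = np.array([0.0, 1.0, -1.0])
w_a = np.array([-200.0, 75.0, 0.0])
w_b = w_a + 10.0 * z

print((X @ z).tolist())  # [0.0, 0.0, 0.0, 0.0]
print(f(w_a), f(w_b))    # 0.0 0.0
```

Every point of the form `w_a + t * z` is an equally good minimum, so which one an iterative method returns depends on where it starts.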

SGD is a more general method that is not tied only to linear regression; it is also used in more complex machine learning algorithms like neural networks. But an advantage that we have here, in the case of least-squares linear regression, is that, due to the convexity of the error function, SGD cannot get stuck in local minima, which is often the case in neural networks. When this method reaches a minimum, it will be a global one. Below is a brief sketch of this algorithm: start from a random w and then, for each batch, repeat the update

$$w \leftarrow w - \alpha \nabla f(w)$$

Where α is a constant called the learning rate.

Now, if we plug in the gradient as computed above in this article, we get the following update, which is specific to least-squares linear regression:

$$w \leftarrow w - \alpha \left( 2 X^T X w - 2 X^T y \right)$$

Here X and y contain only the rows of the current batch.
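
The update rule can be turned into a short NumPy sketch. This is one possible reading of the algorithm, not a definitive implementation: the function name `sgd_linear_regression`, its default parameters, and the synthetic data are all assumptions made for illustration. The sketch also divides the batch gradient by the batch size, a common convenience so that the learning rate does not have to be retuned when the batch size changes; this differs from the summed error used in the text.

```python
import numpy as np


def sgd_linear_regression(X, y, alpha=0.01, epochs=200, batch_size=8, seed=0):
    """Mini-batch SGD for least-squares linear regression (a sketch)."""
    rng = np.random.default_rng(seed)
    n_rows, n_cols = X.shape
    w = rng.normal(size=n_cols)              # random starting point
    for _ in range(epochs):
        order = rng.permutation(n_rows)      # reshuffle rows each epoch
        for start in range(0, n_rows, batch_size):
            rows = order[start:start + batch_size]
            X_b, y_b = X[rows], y[rows]
            # Gradient of the squared error on this batch, averaged over
            # the batch (an assumption of this sketch, see the lead-in).
            grad = 2.0 * X_b.T @ (X_b @ w - y_b) / len(rows)
            w = w - alpha * grad
    return w


# Synthetic data (hypothetical): y = 3 - 2 x_1 + 0.5 x_2 plus small noise.
rng = np.random.default_rng(42)
features = rng.normal(size=(200, 2))
X = np.column_stack([np.ones(200), features])
y = X @ np.array([3.0, -2.0, 0.5]) + rng.normal(0.0, 0.1, size=200)

w_sgd = sgd_linear_regression(X, y)
w_exact = np.linalg.solve(X.T @ X, X.T @ y)
print(w_sgd)
print(w_exact)
```

On this full-column-rank problem the SGD weights should end up close to the closed-form solution; with a constant learning rate they keep fluctuating slightly around it rather than matching it exactly.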

And that’s it for now. In the next couple of articles, I will also show how to implement linear regression using some numerical libraries like NumPy, TensorFlow, and PyTorch.

I hope you found this information useful and thanks for reading!

Translated from: https://towardsdatascience.com/understanding-linear-regression-eaaaed2d983e
