How to Use Data Science Principles to Improve Your Search Engine Optimisation Efforts

Search Engine Optimisation (SEO) is the discipline of using knowledge of how search engines work to build websites and publish content that can be found on search engines by the right people at the right time.

Some people say that you don’t really need SEO, and they take a Field of Dreams ‘build it and they will come’ approach. Yet the SEO industry is predicted to be worth $80 billion by the end of 2020, so at least some people like to hedge their bets.

An often-quoted statistic is that Google’s ranking algorithm contains more than 200 factors for ranking web pages, and SEO is often seen as an ‘arms race’ between its practitioners and the search engines, with people looking for the next ‘big thing’ and putting themselves into tribes (white hat, black hat and grey hat).

There is a huge amount of data generated by SEO activity and its plethora of tools. For context, the industry-standard crawling tool Screaming Frog has 26 different reports filled with web page metrics on things you wouldn’t even think are important (but are). That is a lot of data to munge and mine for interesting insights.

The SEO mindset also lends itself well to the data science ideal of munging data and using statistics and algorithms to derive insights and tell stories. SEO practitioners have been poring over all of this data for two decades, trying to figure out the next best thing to do and to demonstrate value to clients.

Despite access to all of this data, there is still a lot of guesswork in SEO. While some people and agencies test different ideas to see what performs well, a lot of the time it comes down to the opinion of the person on the team with the best track record and the most overall experience.

I’ve found myself in this position a lot in my career, and it is something I would like to address now that I have acquired some data science skills of my own. In this article, I will point you to some resources that will allow you to take a more data-led approach to your SEO efforts.

SEO Testing

One of the most often asked questions in SEO is ‘We’ve implemented these changes on a client’s website, but did they have an effect?’. This often leads to the idea that if the website traffic went up, ‘it worked’, and if the traffic went down, it was ‘seasonality’. That is hardly a rigorous approach.

A better approach is to put some maths and statistics behind it and analyse it with a data science approach. A lot of the maths and statistics behind data science concepts can be difficult, but luckily there are a lot of tools out there that can help, and I would like to introduce one made by Google called Causal Impact.

The Causal Impact package was originally an R package; however, there is a Python version if that is your poison, and that is what I will be going through in this post. To install it in your Python environment using Pipenv, use the command:

```shell
pipenv install pycausalimpact
```

If you want to learn more about Pipenv, see the post I wrote on it; otherwise, Pip will work just fine too:

```shell
pip install pycausalimpact
```

What is Causal Impact?

Causal Impact is a library that is used to make predictions on time-series data (such as web traffic) in the event of an ‘intervention’, which can be something like campaign activity, a new product launch or an SEO optimisation that has been put in place.

You supply two time series as data to the tool. One time series could be clicks over time for the part of a website that experienced the intervention. The other time series acts as a control; in this example, that would be clicks over time for a part of the website that didn’t experience the intervention.

You also tell the tool when the intervention took place, and it trains a model on the data called a Bayesian structural time-series model. This model uses the control group as a baseline to build a prediction of what the intervention group would have looked like if the intervention hadn’t taken place.
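
To build intuition for the counterfactual idea, here is a deliberately simplified sketch. It is not the Bayesian structural time-series model that Causal Impact fits: it swaps in ordinary least squares, regressing the intervention series on the control series over the pre-period only and then projecting that fit forward. The function name `naive_counterfactual` and the synthetic data are hypothetical.

```python
import numpy as np


def naive_counterfactual(y, x, intervention_idx):
    """Predict the intervention series y from the control series x.

    Simplified stand-in for Causal Impact: plain OLS (y ~ a + b * x)
    fitted on the pre-intervention period only, NOT a Bayesian
    structural time-series model.
    """
    y = np.asarray(y, dtype=float)
    x = np.asarray(x, dtype=float)
    # Design matrix: an intercept column plus the control series
    design = np.column_stack([np.ones_like(x), x])
    # Learn the test/control relationship only from pre-intervention data
    coef, *_ = np.linalg.lstsq(design[:intervention_idx], y[:intervention_idx], rcond=None)
    # Counterfactual: what y would look like at every step had nothing changed
    return design @ coef


# Synthetic example: the test group tracks the control until day 70,
# then gets a flat uplift of 20 clicks per day.
control = np.arange(100, dtype=float)
test = 2 * control + 5
test[70:] += 20
prediction = naive_counterfactual(test, control, intervention_idx=70)
print(np.round((test - prediction)[70:75], 6))  # estimated daily uplift
```

The real model also captures trend and seasonality and returns uncertainty intervals, which this sketch does not.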

The original paper on the maths behind it is here; however, I recommend watching this video by a guy at Google, which is far more accessible.

Implementing Causal Impact in Python

After installing the library into your environment as outlined above, using Causal Impact with Python is pretty straightforward, as can be seen in the notebook by Paul Shapiro.
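
The `CausalImpact(...)` call in this section expects the response series (the intervention group) in the first column and the control series in the column(s) after it. A minimal sketch of that layout with pandas, using hypothetical column names and made-up data, might look like this; `pre_period` and `post_period` are assumed here to be inclusive `[start, end]` row positions, so check the convention of the pycausalimpact version you install.

```python
import numpy as np
import pandas as pd

# Hypothetical daily clicks for 100 days; the column names are illustrative only.
rng = np.random.default_rng(42)
dates = pd.date_range("2020-01-01", periods=100, freq="D")
control_clicks = 100 + rng.normal(0, 5, size=100)
test_clicks = 1.2 * control_clicks + rng.normal(0, 5, size=100)
test_clicks[70:] += 15  # simulated uplift from day 70 onwards

data = pd.DataFrame(
    {"date": dates, "test_clicks": test_clicks, "control_clicks": control_clicks}
)

# data.columns[1:3] skips the date column and keeps the response first,
# followed by the control series.
model_input = data[data.columns[1:3]]

# Inclusive [start, end] row positions: 70 pre-intervention days, 30 post.
pre_period = [0, 69]
post_period = [70, 99]

print(model_input.columns.tolist())
```

With pycausalimpact installed, `model_input`, `pre_period` and `post_period` can then be passed to `CausalImpact` in the same way as in the call shown in this section.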

After pulling in a CSV with the control group data and intervention group data, and defining the pre- and post-intervention periods, you can train the model by calling:

ci = CausalImpact(data[data.columns[1:3]], pre_period, post_period)This will train the model and run the predictions. If you run the command:

這將訓練模型并運行預測。 如果運行命令:

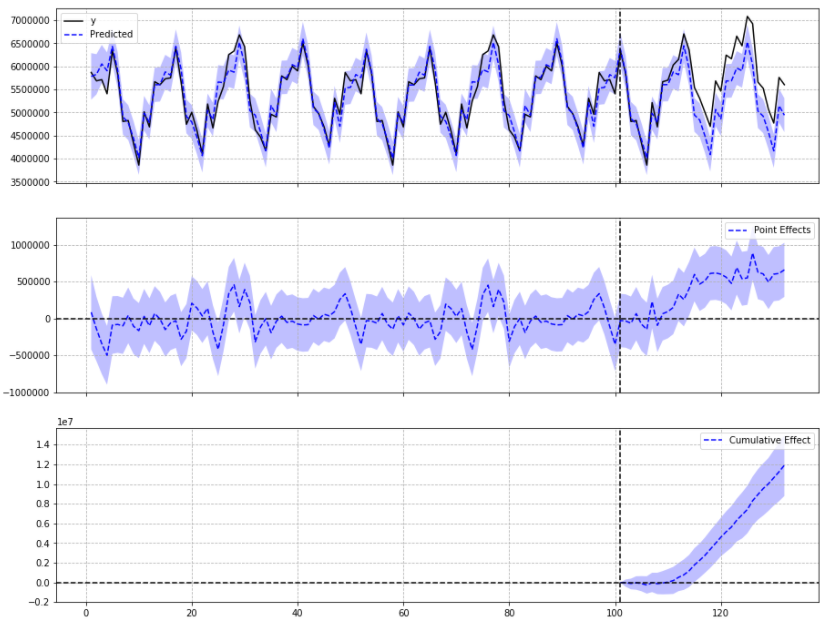

ci.plot()You will get a chart that looks like this:

您將獲得一個如下所示的圖表:

There are three panels here. The first shows the intervention group alongside the model’s prediction of what would have happened without the intervention.

The second panel shows the pointwise effect, that is, the difference between what actually happened and the model’s prediction.

The final panel shows the cumulative effect of the intervention as predicted by the model.
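
The arithmetic behind the second and third panels is simple once you have a counterfactual prediction. Here is a minimal numpy sketch, assuming you already have the observed series and the model’s predicted series as arrays; the function name `pointwise_and_cumulative` is hypothetical, and the sketch ignores the credible intervals that Causal Impact also plots.

```python
import numpy as np


def pointwise_and_cumulative(observed, predicted, intervention_idx):
    """Return the pointwise and cumulative effect of an intervention."""
    observed = np.asarray(observed, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    # Pointwise effect: what happened minus what the model predicted
    pointwise = observed - predicted
    # Cumulative effect: running total of the pointwise effect,
    # counted from the intervention onwards (zero before it)
    cumulative = np.zeros_like(pointwise)
    cumulative[intervention_idx:] = np.cumsum(pointwise[intervention_idx:])
    return pointwise, cumulative


point, cumul = pointwise_and_cumulative([10, 10, 12, 13], [10, 10, 10, 10], 2)
print(point, cumul)
```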

Another useful command to know is:

另一個有用的命令是:

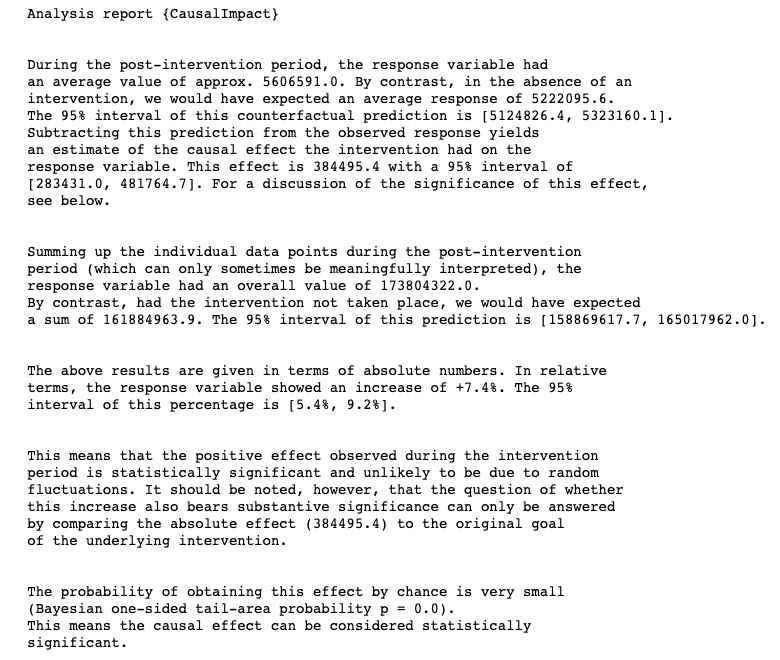

print(ci.summary('report'))This prints out a full report that is human readable and ideal for summarising and dropping into client slides:

這將打印出一份完整的報告,該報告易于閱讀,是匯總和放入客戶端幻燈片的理想選擇:

Selecting a control group

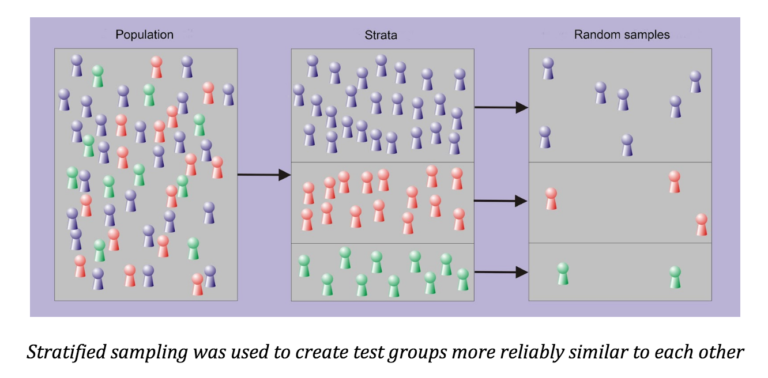

The best way to build your control group is to pick, at random, pages that aren’t affected by the intervention, using a method called stratified random sampling.

Etsy has published a post on how they’ve used Causal Impact for SEO split testing, and they recommend using this method. Stratified random sampling is, as the name implies, picking from the population at random to build the sample. However, if what we’re sampling is segmented in some way, we try to maintain the same proportion of each segment in the sample as in the population.
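
As a minimal sketch of the idea (not Etsy’s code), pandas can draw the same fraction from every segment so that each segment keeps its population share in the sample. The `pages` DataFrame, its `label` column, the helper name `stratified_sample` and the 10% fraction are illustrative assumptions; `DataFrameGroupBy.sample` needs pandas 1.1 or later.

```python
import pandas as pd


def stratified_sample(pages, strata_col, frac, seed=0):
    """Sample the same fraction of rows from every stratum."""
    # Sampling within each group keeps every segment's share of the
    # sample equal to its share of the population (up to rounding).
    return pages.groupby(strata_col).sample(frac=frac, random_state=seed)


# Hypothetical population: 80 low-traffic pages and 20 high-traffic pages
pages = pd.DataFrame({
    "URL": [f"/page-{i}" for i in range(100)],
    "label": ["Less than 50"] * 80 + ["Greater than 5000"] * 20,
})
sample = stratified_sample(pages, "label", frac=0.1)
print(sample["label"].value_counts())
```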

An ideal way to segment web pages for stratified sampling is to use sessions as a metric. If you load your page data into Pandas as a data frame, you can use a lambda function to label each page:

```python
# Bucket each page by its session count; each upper bound is inclusive (x <= 50 gets "Less than 50")
df["label"] = df["Sessions"].apply(lambda x: "Less than 50" if x <= 50 else ("Less than 100" if x <= 100 else ("Less than 500" if x <= 500 else ("Less than 1000" if x <= 1000 else ("Less than 5000" if x <= 5000 else "Greater than 5000")))))
```

From there, you can use train_test_split in sklearn to build your control and test groups:

```python
from sklearn.model_selection import train_test_split

# selectedPages: the labelled DataFrame of candidate pages; test_size=0.01 puts 1% of them, stratified by label, into X_test
X_train, X_test, y_train, y_test = train_test_split(selectedPages["URL"], selectedPages["label"], test_size=0.01, stratify=selectedPages["label"])
```

Note that stratify is set. If you already have a list of pages you want to test, your sample should contain the same number of pages as that list. Also, the more pages you have in your sample, the better the model will be; the fewer pages you use, the less accurate the model will be.
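
`train_test_split` also accepts an integer `test_size`, which requests that exact number of rows in the second split rather than a fraction. Here is a sketch of drawing a stratified sample the same size as your list of test pages, assuming a labelled DataFrame like the one above; the helper name `sample_like_test_set` and the synthetic data are hypothetical. Stratifying needs at least two pages per label and a requested size no smaller than the number of labels.

```python
import pandas as pd
from sklearn.model_selection import train_test_split


def sample_like_test_set(pages, n_test_pages, seed=0):
    """Draw a stratified sample of URLs with exactly n_test_pages rows."""
    # An integer test_size is an absolute row count, not a proportion
    _, sample_urls, _, sample_labels = train_test_split(
        pages["URL"],
        pages["label"],
        test_size=n_test_pages,
        stratify=pages["label"],
        random_state=seed,
    )
    return sample_urls, sample_labels


# Hypothetical site: 600 pages spread evenly across three traffic buckets
pages = pd.DataFrame({
    "URL": [f"/page-{i}" for i in range(600)],
    "label": ["Less than 50", "Less than 500", "Greater than 5000"] * 200,
})
urls, labels = sample_like_test_set(pages, n_test_pages=30)
print(len(urls), labels.value_counts().to_dict())
```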

It is worth noting that JC Chouinard gives a good background on how to do all of this in Python using a method similar to Etsy’s.

Conclusion

There are a couple of different use cases for this type of testing. The first is to test ongoing improvements using split testing, which is similar to the approach Etsy uses above.

The second is to test an improvement that was made on-site as part of ongoing work. This is similar to an approach outlined in this post; however, with this approach you need to ensure your sample size is sufficiently large, otherwise your predictions will be very inaccurate, so please do bear that in mind.

Both are valid ways of doing SEO testing: the former is a type of A/B split test for ongoing optimisation, and the latter is a test of something that has already been implemented.

I hope this has given you some insight into how to apply data science principles to your SEO efforts. Do read around these interesting topics and try to come up with other ways to use this library to validate your efforts. If you need background on the Python used in this post, I recommend this course.

Source: https://towardsdatascience.com/how-to-use-data-science-principles-to-improve-your-search-engine-optimisation-efforts-927712ed0b12
