Rethinking the Paradigm of Content Constraints in Unpaired Image-to-Image Translation

Xiuding Cai 1,2, Yaoyao Zhu 1,2, Dong Miao 1,2, Linjie Fu 1,2, Yu Yao 1,2

Corresponding author.

Abstract

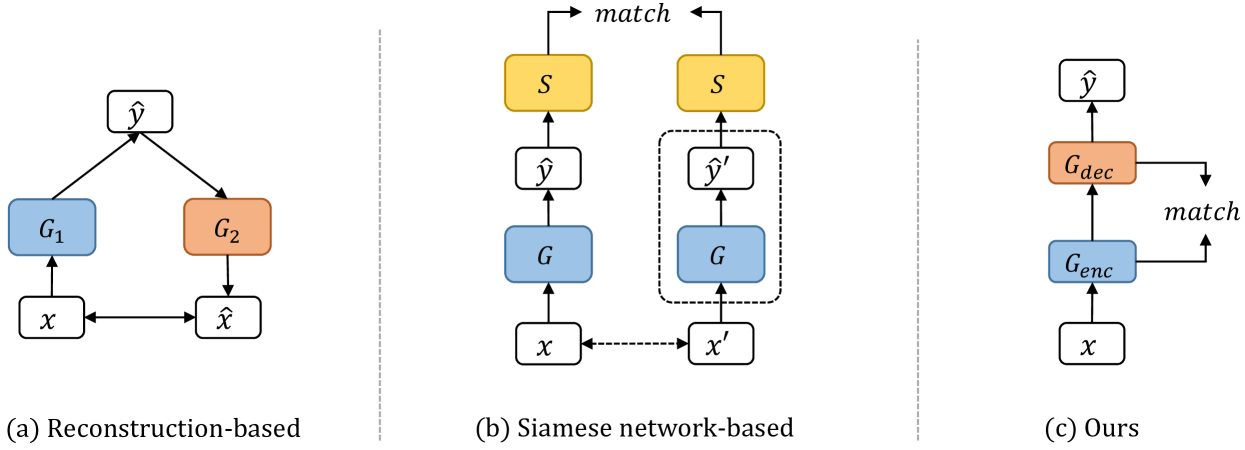

In the unpaired setting, image-to-image translation (I2I) lacks sufficient content constraints, and GAN-based approaches are therefore prone to mode collapse. Current solutions fall into two categories: reconstruction-based and Siamese network-based. The former requires that the transformed or transforming image can be perfectly converted back to the original image, which is sometimes too strict and limits generative performance. The latter feeds the original and generated images into a feature extractor and matches their outputs, which adds computation, and a universal feature extractor is not readily available. In this paper, we propose EnCo, a simple but efficient way to maintain content by constraining the representational similarity, in the latent space, of patch-level features from the same stage of the Encoder and deCoder of the generator. For the similarity function, we use a simple MSE loss instead of the contrastive loss that is currently widely used in I2I tasks. Thanks to this design, EnCo training is extremely efficient, while the features from the encoder have a more positive effect on decoding, leading to more satisfying generations. In addition, we rethink the role played by the discriminator in sampling patches and propose a discriminative attention-guided (DAG) patch sampling strategy to replace random sampling. DAG is parameter-free and requires only negligible computational overhead, while significantly improving the performance of the model. Extensive experiments on multiple datasets demonstrate the effectiveness and advantages of EnCo, and we achieve multiple state-of-the-art results compared to previous methods. Our code is available at https://github.com/XiudingCai/EnCo-pytorch.

Introduction

Image-to-image translation (I2I) aims to convert images from one domain to another while preserving content as much as possible. I2I tasks have received considerable attention given their wide range of applications, such as style transfer (Ulyanov, Vedaldi, and Lempitsky 2016), semantic segmentation (Yu, Koltun, and Funkhouser 2017; Kirillov et al. 2020), super resolution (Yuan et al. 2018), colorization (Zhang, Isola, and Efros 2016), dehazing (Dong et al. 2020), and image restoration (Liang et al. 2021).

In the unpaired setting, the I2I task lacks sufficient content constraints, and using an adversarial loss (Goodfellow et al. 2014) alone is prone to mode collapse. To enforce content constraints, current generative adversarial network (GAN)-based approaches can be broadly classified into two categories. The first is reconstruction-based solutions. Typical approaches are CycleGAN (Zhu et al. 2017) and UNIT (Liu, Breuel, and Kautz 2017). They propose the cycle consistency or shared-latent space assumption, which requires that the transformed image, or the transforming image, can be mapped back to the original image perfectly. However, these assumptions are sometimes too strict (Park et al. 2020). For instance, a city street view can be converted into a pixel-level annotated label map, but converting a label map back into a street view admits countless possibilities. Such an ill-posed setting limits the performance of reconstruction-based GANs, leading to unsatisfactory generations (Chen et al. 2020a).

Another solution for content constraints is Siamese networks (Bromley et al. 1993). Siamese networks are weight-sharing neural networks that accept two or more inputs, which makes them natural tools for comparing differences between entities. For the I2I task, the input image and the generated image are fed separately to a Siamese network, and content consistency is ensured by matching the output features. CUT (Park et al. 2020) re-exploits the encoder of the generator as a feature extractor and proposes the PatchNCE loss to maximize the mutual information between patches of the input and generated images, achieving superior performance over reconstruction-based methods. Some studies (Mechrez, Talmi, and Zelnik-Manor 2018; Zheng, Cham, and Cai 2021) repurpose the pre-trained VGG network (Simonyan and Zisserman 2015) as a feature extractor to constrain the feature correlation between the source and generated images. Given the strength and flexibility of Siamese networks, such content-constrained methods are increasingly widely used. However, Siamese network-based GANs require the source and generated images to be fed separately into the Siamese network once more, which entails additional computational cost during training. In addition, an ideal Siamese network that measures the differences between images well is not always available.

Figure 1: A comparison of different content constraint frameworks. (a) Reconstruction-based methods require that $x \approx G_2(G_1(x))$; an $\ell_1$ or $\ell_2$ loss is typically used. Typical methods are CycleGAN (Zhu et al. 2017), UNIT (Liu, Breuel, and Kautz 2017), etc. (b) Siamese network-based methods such as CUT (Park et al. 2020) or LSeSim (Zheng, Cham, and Cai 2021) enforce the content constraint through a defined feature extractor $S$, i.e., $\mathrm{match}(S(G(x), x'))$ or $\mathrm{match}(S(G(x)), S(G(x')))$, where $x'$ is the augmented $x$. Note that the augmentation of $x$ is optional (dashed box). (c) EnCo enforces the content constraint by agreeing on the representational similarity of features from the encoder and decoder of the generator.

Can we explicitly constrain the content inside the generator network? Inspired by U-Net (Ronneberger, Fischer, and Brox 2015), a popular network architecture that integrates features from different stages of the encoder and the decoder through skip connections, we make the encoding-decoding symmetry assumption for I2I tasks: the semantic levels of encoder and decoder features from the same stage are the same (note that encoder and decoder stages are numbered in opposite order).

Based on this assumption, we present EnCo, a simple but efficient way to constrain content by agreeing on the representational similarity, in the latent space, of features from the same stage of the Encoder and deCoder of the generator. Specifically, we map the multi-stage intermediate features of the network to the latent space through a projection head, and in that space we pull together the representations of same-stage features from the encoder and the decoder. To prevent the networks from falling into a collapsed solution, where the projection learns to output constants to minimize the similarity loss, we stop the gradient of the feature branch from the encoder and add a prediction head to the decoder branch. It is worth mentioning that we find negative samples unnecessary for the content constraint in the EnCo framework. As a result, we use a simple mean squared error loss instead of the contrastive loss that is widely used in current I2I tasks. This sidesteps the problems associated with negative sample selection (Hu et al. 2022).

Thanks to this design, the training of EnCo is efficient and lightweight, as the content constraint is accomplished inside the generative network and no reconstruction or Siamese networks are required. To train more efficiently, similar to CUT, we sample patches from the intermediate features of the generative network and match features at the patch level rather than over the entire feature map. An ensuing question is from which locations to sample the patches. A simple approach is random sampling, which has been adopted by many methods (Park et al. 2020; Han et al. 2021; Zheng, Cham, and Cai 2021). However, this may not be the most efficient choice (Hu et al. 2022). We note that the discriminator provides key information about the truthfulness of the generated images, yet most current GAN-based approaches ignore the potential role of the discriminator in patch sampling (where sampling is involved). To this end, we propose a parameter-free discriminative attention-guided (DAG) patch sampling strategy. DAG takes advantage of the discriminative information provided by the discriminator and attentively selects more informative patches for optimization. Experimentally, we show that the proposed patch sampling strategy accelerates the convergence of model training and improves generation performance at almost negligible computational cost.

- We propose EnCo, a simple yet efficient approach to content constraints that agrees on the representational similarity, in the latent space, of features from the same stage of the encoder and decoder of the generator.
- We rethink the potential role of discriminators in patch sampling and propose a parameter-free DAG sampling strategy. DAG improves generative performance significantly while requiring only an almost negligible computational cost.
- Extensive experiments on several popular I2I benchmarks reveal the effectiveness and advantages of EnCo. We achieve several state-of-the-art results compared to previous methods.

Related Works

Image-to-Image Translation. Image-to-image translation (Isola et al. 2017; Wang et al. 2018; Zhu et al. 2017; Park et al. 2020; Wang et al. 2021) aims to transform images from the source domain to the target domain with the semantic content preserved. Pix2Pix (Isola et al. 2017) was the first framework to accomplish the I2I task using paired data with an adversarial loss (Goodfellow et al. 2014) and a reconstruction loss. However, paired data across domains are infeasible to collect in most settings. Methods such as CycleGAN (Zhu et al. 2017), DiscoGAN (Kim et al. 2017), and DualGAN (Yi et al. 2017) extend the I2I task to an unsupervised setting based on the cycle consistency assumption that the generated image can be converted back to the original image. In addition, UNIT (Liu, Breuel, and Kautz 2017) and MUNIT (Huang et al. 2018) propose to learn a shared-latent space in which the hidden variable, i.e., the encoded image, can be decoded as both the target image and the original image. These methods can be classified as reconstruction-based solutions, and they implicitly assume that the conversion can be inverted to recover the original image. However, perfect reconstruction is unlikely to be possible in many cases, which can limit the performance of the generative networks (Park et al. 2020). In addition, such methods usually require auxiliary generators and discriminators.

Siamese Networks for Content Constraints. Another family of solutions for content constraints is Siamese network-based approaches, which can effectively address the challenges posed by reconstruction-based ones. Siamese networks usually consist of networks with shared weights that accept two or more inputs, extract features, and compare differences. The choice of Siamese network for content constraints varies. For instance, the Siamese network of DistanceGAN and GcGAN is their generator. They require that the distances between input images and the distances between the corresponding output images be consistent. CUT reuses the encoder of the generator as the Siamese network and proposes the PatchNCE loss, aiming to maximize the mutual information between patches of the input and output images. DCL (Han et al. 2021) extends CUT to a dual-way setting that uses two independent encoders and projectors for the input and generated images respectively, but doubles the number of network parameters. Some recent studies have also attempted to repurpose the pre-trained VGGNet (Simonyan and Zisserman 2015) as a perceptual loss that requires the input and output images to be visually consistent (Zheng, Cham, and Cai 2021). These methods may have a priori limitations; for example, the frozen network weights of the loss function cannot adapt to the data and thus may not be the most appropriate (Zheng, Cham, and Cai 2021). Our work is quite different from current approaches, as shown in Figure 1: we impose constraints inside the generative network, i.e., between the encoder and decoder, without requiring additional networks for reconstruction or feature extraction. Therefore, EnCo has a higher training efficiency.

Contrastive Learning. Recently, contrastive learning (CL) has achieved impressive results in the field of unsupervised representation learning (Hjelm et al. 2018; Chen et al. 2020b; He et al. 2020; Henaff 2020; Oord, Li, and Vinyals 2018). Built on a discriminative idea, CL aims to pull together the representations of two correlated signals (known as a positive pair) in the embedding space while pushing apart the representations of uncorrelated signals (known as a negative pair). CUT first introduced contrastive learning to the I2I task, and the approach has been continuously improved since then (Han et al. 2021; Hu et al. 2022; Zhan et al. 2022). QS-Attn (Hu et al. 2022) improved the negative sampling strategy of CUT by computing the $QKV$ matrix to dynamically select relevant anchor points as positives and negatives. MoNCE (Zhan et al. 2022) proposed a modulated noise contrastive estimation loss that adaptively re-weights the pushing force of negatives according to their similarity to the anchor. However, the performance of CL-based GAN approaches is still affected by negative sample selection, and poor negatives may lead to slow convergence and even counter-optimization (Robinson et al. 2020). Therefore, some studies have asked whether negatives are necessary at all. BYOL (Grill et al. 2020) successfully trained a discriminative network using only positive pairs with a momentum encoder. SimSiam (Chen and He 2020) pointed out that the stop-gradient operation is an important component for successful training without negatives, and thus removed the momentum encoder. EnCo is trained without negatives and considers only the same-stage features from the encoder and decoder of the generator as a positive pair, ensuring content consistency.

Methods

Main Idea

Given an image from the source domain $x \in \mathcal{X}$, our goal is to learn a mapping function (also called a generator) $G_{\mathcal{X} \to \mathcal{Y}}$ that converts the image from the source domain to the target domain $\mathcal{Y}$, i.e., $\hat{y} = G_{\mathcal{X} \to \mathcal{Y}}(x)$, while preserving as much content semantic information as possible.

Traditional content constraint methods based on Siamese networks, such as CUT, constrain the content consistency between the generated image and the source image. EnCo instead constrains the content consistency of the features produced in the intermediate process from the source image to the target image. Our approach is more efficient to train and achieves better performance. The overall architecture is shown in Figure 2 and contains the generator $G$, the discriminator $D$, the projection head $h$, and the prediction head $g$. We decompose the generator into two parts, the encoder and the decoder, each of which consists of $L$ stages of sub-networks. For any input source domain image $x$, passing through the $L$ stages of the encoder produces a sequence of features at different semantic levels, i.e., $\{h_l\}_1^L = \{G_{enc}^l(h_{l-1})\}_1^L$, where $x = h_0$. Feeding the output of the last encoder stage, i.e., $h_L$, into the decoder, we likewise obtain a sequence of decoder features at different semantic levels, $\{h_l\}_{L+1}^{2L} = \{G_{dec}^l(h_{l-1})\}_{L+1}^{2L}$, where $\hat{y} = h_{2L}$. For the I2I task, we make the encoding-decoding symmetry assumption that the semantic levels of the encoder and decoder features $f_l$ and $f_{2L-l}$ from the same stage are the same (note that encoder and decoder stages are numbered in opposite order). For brevity, we abbreviate $2L - l$ as $\tilde{l}$ and denote $(f_l, f_{\tilde{l}})$ as a pair of same-stage features in the following.
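The index bookkeeping behind the same-stage pairing can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper name `same_stage_pairs` is hypothetical, and enumerating every $l$ from $0$ to $L-1$ is a choice made here for clarity (the text later speaks of a chosen subset of pairs). Stage $l = L$ is left out because it would pair the bottleneck feature with itself.

```python
def same_stage_pairs(num_stages):
    """Return candidate (l, 2L - l) index pairs for l = 0 .. L-1.

    Index 0 is the input image x and index 2L is the generated image,
    following the indexing convention of the text. The function name and
    the choice to list every stage are illustrative assumptions.
    """
    if num_stages < 1:
        raise ValueError("num_stages must be a positive integer")
    return [(l, 2 * num_stages - l) for l in range(num_stages)]


# With L = 4 encoder stages and 4 decoder stages:
pairs = same_stage_pairs(4)
print(pairs)  # [(0, 8), (1, 7), (2, 6), (3, 5)]
```

The pair `(0, 2L)` corresponds to the input image and the generated image, and the remaining pairs move inward toward the bottleneck.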

Figure 2: (a) Overview of the EnCo framework. EnCo constrains the content by agreeing on the representational similarity, in the latent space, of features from the same stage of the encoder and decoder of the generator. (b) The architecture of the projection head. (c) The architecture of the prediction head.

We consider that the content of the transformed image can be preserved by constraining $(f_l, f_{\tilde{l}})$. However, constraining the features directly may degrade the optimization of the generative network. We therefore propose to enforce the content constraint by constraining the representational similarity of the encoder and decoder features of the generator in the latent space.

As shown in Figure 2, for any pair of same-stage features $(f_l, f_{\tilde{l}})$, we map them to the $K$-dimensional latent space by a shared two-layer projection head $h(\cdot)$ to obtain $z_l \triangleq h(f_l)$ and $z_{\tilde{l}} \triangleq h(f_{\tilde{l}})$. Inspired by Grill et al. (2020), we further apply a prediction head $g(\cdot)$ to $z_{\tilde{l}}$ to enhance its non-linear expressiveness, and obtain $p_{\tilde{l}} \triangleq g(z_{\tilde{l}})$.

To avoid collapsing or expanding, we $\ell_2$-normalize both $z_l$ and $p_{\tilde{l}}$, mapping them onto the unit sphere to obtain $\bar{z}_l \triangleq z_l / \|z_l\|_2$ and $\bar{p}_{\tilde{l}} \triangleq p_{\tilde{l}} / \|p_{\tilde{l}}\|_2$. Finally, we define the following mean-squared error loss, which constrains the representational similarity of a pair of same-stage normalized hidden variables from the encoder and decoder,

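$$\mathcal{L}(z_{\tilde{l}}, z_l) = \|\bar{p}_{\tilde{l}} - \bar{z}_l\|_2^2 = 2 - 2 \cdot \frac{\langle g(z_{\tilde{l}}), z_l \rangle}{\|g(z_{\tilde{l}})\|_2 \cdot \|z_l\|_2}. \tag{1}$$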

A remaining risk is a collapsed solution, i.e., the networks learn to output constants to minimize the loss. Following Chen and He (2020), we address this by introducing the key component of stopping the gradient. We modify Eq. (1) as follows,

$$\mathcal{L}(z_{\tilde{l}}, \mathtt{stopgrad}(z_l)). \tag{2}$$

This means that during optimization, $z_l$ is treated as a constant and $z_{\tilde{l}}$ is expected to predict $z_l$ through the prediction head $g(\cdot)$. Therefore, $z_{\tilde{l}}$ cannot deviate too much from $z_l$, and this is how the content constraint is achieved.
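The identity in Eq. (1), that the squared distance between two $\ell_2$-normalized vectors equals $2 - 2\cos$, can be checked numerically. The following is a minimal plain-Python sketch, not the authors' implementation: the function names and the sample vectors are hypothetical, and plain Python has no automatic differentiation, so the stop-gradient of Eq. (2) is only indicated in a comment (in an autodiff framework, the encoder-side vector would be excluded from the gradient computation).

```python
import math


def l2_normalize(v, eps=1e-12):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / max(norm, eps) for x in v]


def enco_pair_loss(p, z):
    """Squared distance between the l2-normalized p and z, as in Eq. (1)."""
    p_bar, z_bar = l2_normalize(p), l2_normalize(z)
    return sum((a - b) ** 2 for a, b in zip(p_bar, z_bar))


def cosine(p, z):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(p, z))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_z = math.sqrt(sum(b * b for b in z))
    return dot / (norm_p * norm_z)


p = [0.3, -1.2, 0.5]  # stands in for g(z_l~), the prediction-head output
z = [0.1, -0.9, 0.8]  # stands in for z_l; held fixed, mimicking stop-gradient

loss = enco_pair_loss(p, z)
# Both sides of Eq. (1) agree up to floating-point error.
assert abs(loss - (2.0 - 2.0 * cosine(p, z))) < 1e-9
```

Because both vectors are normalized, the loss depends only on the angle between them and ranges from 0 (same direction) to 4 (opposite directions).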

Multi-stage, Patch-based Content Constraints


For more efficient training, given a feature pair $(f_l, f_{\tilde{l}})$, we sample $S$ patches from different positions of $f_{\tilde{l}}$, feed them to the projection and prediction heads, and obtain the set $\mathbf{q}_{\tilde{l}} = \{q_{\tilde{l}}^{(1)}, \dots, q_{\tilde{l}}^{(S)}\}$, where the subscript indicates the stage being sampled and the superscript denotes the sampled position in the feature map. Similarly, we sample from the same positions of $f_l$ and feed the patches to the projection head to get $\mathbf{k}_l = \{k_l^{(1)}, \dots, k_l^{(S)}\}$. We impose the content constraint on patch-level features rather than on the entire feature map. Therefore, we simply replace $z_l, z_{\tilde{l}}$ in Eq. (2) with $\mathbf{k}_l, \mathbf{q}_{\tilde{l}}$, respectively, and get

$$\mathcal{L}(\mathbf{q}_{\tilde{l}}, \mathtt{stopgrad}(\mathbf{k}_l)). \tag{3}$$

We can further extend Eq. (3) to a multi-stage version, i.e.,

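$$\mathcal{L}_{\text{MultiStage}}(G, h, g, X) = \mathbb{E}_{x \sim X} \sum_{l \in \mathbb{L}} \sum_{s \in \mathbb{S}_l} \mathcal{L}\left(q_{\tilde{l}}^{(s)}, \mathtt{stopgrad}(k_l^{(s)})\right), \tag{4}$$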

where $\mathbb{L}$ is the set of chosen same-stage feature pairs on which the mean-squared error loss is computed, and $\mathbb{S}_l$ is the set of sampled patch positions from $(f_l, f_{\tilde{l}})$.
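The double sum in Eq. (4) can be illustrated for a single image with a short plain-Python sketch. The names `pair_loss` and `multistage_loss`, the dictionary layout keyed by stage, and the toy vectors are all hypothetical; the expectation over $x \sim X$ (a batch average in practice) and the stop-gradient on the encoder side are not modeled here.

```python
import math


def pair_loss(q, k):
    """Eq. (1) form: 2 - 2 * cosine similarity between q and k."""
    dot = sum(a * b for a, b in zip(q, k))
    norm_q = math.sqrt(sum(a * a for a in q))
    norm_k = math.sqrt(sum(b * b for b in k))
    return 2.0 - 2.0 * dot / (norm_q * norm_k)


def multistage_loss(queries, keys):
    """Sum pair_loss over chosen stages l and sampled positions s.

    queries[l][s] is the decoder-side vector q for stage l and position s;
    keys[l][s] is the matching encoder-side vector k (treated as constant).
    """
    total = 0.0
    for stage, q_patches in queries.items():
        k_patches = keys[stage]
        if len(q_patches) != len(k_patches):
            raise ValueError("each stage needs matching q and k patches")
        for q, k in zip(q_patches, k_patches):
            total += pair_loss(q, k)
    return total


# Two stages: stage 0 has two sampled positions, stage 1 has one.
queries = {0: [[1.0, 0.0], [0.0, 1.0]], 1: [[1.0, 1.0]]}
keys = {0: [[1.0, 0.0], [1.0, 0.0]], 1: [[2.0, 2.0]]}
total = multistage_loss(queries, keys)
```

In this toy input, only the second patch of stage 0 disagrees (the vectors are orthogonal), so it is the only term that contributes noticeably to the total.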

Discriminative Attention-guided Patch Sampling Strategy


We further propose an efficient discriminative attention-guided (DAG) patch sampling strategy to replace the widely used random sampling strategy in Eq. (4). The idea of DAG is simple: it exploits the key information provided by the discriminator, namely the truthfulness of the generated images, and attentively selects more informative patches for optimization.

Assuming that a total of $K$ patches are to be sampled, for any feature pair $(f_l, f_{\tilde{l}})$, DAG proceeds as follows: 1) obtaining the attention scores: interpolating the output of the discriminator to the same resolution as $f_l$ and $f_{\tilde{l}}$, so that each position on $f_l$ receives an attention score; 2) oversampling: uniformly sampling $kK$ ($k > 1$) patches from $f_{\tilde{l}}$, where $k$ is the oversampling ratio; 3) ranking: sorting all sampled patches in ascending order according to their corresponding attention scores; 4) importance sampling: selecting the top $\beta K$ ($0 \le \beta \le 1$) patches with the highest scores, where $\beta$ is the importance sampling ratio; 5) covering: uniformly sampling the remaining $(1 - \beta) K$ patches. Note that DAG is parameter-free and requires only an almost negligible computational cost.
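The five steps above can be sketched with the Python standard library. This is an illustrative reading rather than the released implementation, and it rests on several assumptions: the name `dag_sample` and the default values `oversample_ratio=4` and `beta=0.5` are hypothetical; the attention scores are assumed to be already interpolated (step 1) and flattened to one score per spatial position; candidates are sorted in descending order, which selects the same top-scoring patches as an ascending sort read from the end; and the covering step draws from the leftover oversampled candidates, since the text does not say which pool it uses.

```python
import random


def dag_sample(scores, num_patches, oversample_ratio=4, beta=0.5, rng=None):
    """Pick `num_patches` position indices guided by attention scores.

    scores: flat list with one attention score per spatial position.
    Returns a list of distinct indices into `scores`.
    """
    rng = rng or random.Random()
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    if oversample_ratio < 1:
        raise ValueError("oversample_ratio must be at least 1")
    if num_patches > len(scores):
        raise ValueError("cannot sample more patches than positions")

    # Step 2: oversample kK candidate positions uniformly, without repeats.
    n_candidates = min(len(scores), int(oversample_ratio * num_patches))
    candidates = rng.sample(range(len(scores)), n_candidates)

    # Step 3: rank candidates by attention score (highest first).
    candidates.sort(key=lambda i: scores[i], reverse=True)

    # Step 4: keep the beta*K highest-scoring candidates.
    n_top = int(beta * num_patches)
    top = candidates[:n_top]

    # Step 5: cover the rest uniformly (assumed: from leftover candidates).
    rest = rng.sample(candidates[n_top:], num_patches - n_top)
    return top + rest


scores = [float(i) for i in range(100)]  # toy scores: position i scores i
chosen = dag_sample(scores, 8, rng=random.Random(0))
```

Passing a seeded `random.Random` makes the selection reproducible. With `beta=1.0` the sampler keeps only the highest-scoring candidates, and with `beta=0.0` it reduces to uniform random sampling.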

Figure 3: Results of qualitative comparison. We compare EnCo with existing methods on the Horse→Zebra, Cat→Dog, and Cityscapes datasets. EnCo achieves more satisfactory visual results. For example, in the case of Cat→Dog, EnCo generates a clearer nose for the dog. In the case of Cityscapes, EnCo successfully generates the traffic cone represented in yellow in the semantic annotation, while the other methods yield only suboptimal results.

| Method | Cityscapes mAP↑ | Cityscapes pixAcc↑ | Cityscapes classAcc↑ | Cityscapes FID↓ | Cat→Dog FID↓ | Horse→Zebra FID↓ | Mem (GB)↓ | sec/iter↓ |
|---|---|---|---|---|---|---|---|---|
| CycleGAN (Zhu et al. 2017) | 20.4 | 55.9 | 25.4 | 68.6 | 85.9 | 66.8 | 4.81 | 0.40 |
| MUNIT (Huang et al. 2018) | 16.9 | 56.5 | 22.5 | 91.4 | 104.4 | 133.8 | 3.84 | 0.39 |
| GCGAN (Fu et al. 2019) | 21.2 | 63.2 | 26.6 | 105.2 | 96.6 | 86.7 | 2.67 | 0.62 |
| CUT (Park et al. 2020) | 24.7 | 68.8 | 30.7 | 56.4 | 76.2 | 45.5 | 3.33 | 0.24 |
| DCLGAN (Han et al. 2021) | 22.9 | 77.0 | 29.6 | 49.4 | 60.7 | 43.2 | 7.45 | 0.41 |
| FSeSim (Zheng, Cham, and Cai 2021) | 22.1 | 69.4 | 27.8 | 54.3 | 87.8 | 43.4 | 2.92 | 0.17 |
| MoNCE (Zhan et al. 2022) | 25.6 | 78.4 | 33.0 | 54.7 | 74.2 | 41.9 | 4.03 | 0.28 |
| QS-Attn (Hu et al. 2022) | 25.5 | 79.9 | 31.2 | 53.5 | 72.8 | 41.1 | 2.98 | 0.35 |
| EnCo (Ours) | 28.4 | 77.3 | 37.2 | 45.4 | 54.7 | 38.7 | 2.83 | 0.14 |

Table 1: Comparison with baselines on unpaired image translation. We compare our approach to state-of-the-art methods on three datasets. We report multiple metrics, where ↑ indicates higher is better and ↓ indicates lower is better. Our method outperforms all baselines on the FID metric and shows superior results on Cityscapes for the semantic segmentation metrics mAP and classAcc. Our method also trains fast, with the lowest time per iteration.

Full Objective

In addition to the MultiStage loss presented above, we use an adversarial loss to complete the domain transfer, and we add an identity mapping loss as a regularization term to stabilize network training.

Generative adversarial loss. We use the LSGAN loss (Mao et al. 2017) as the adversarial loss to encourage generated images that are as visually similar to images in the target domain as possible, which is formalized as follows,

$$\mathcal{L}_{\text{GAN}} = \mathbb{E}_{y \sim Y}\left[D(y)^2\right] + \mathbb{E}_{x \sim X}\left[(1 - D(G(x)))^2\right]. \tag{5}$$

Identity mapping loss. To stabilize training and accelerate convergence, we add an identity mapping loss.

$$\mathcal{L}_{\text{identity}}(G) = \mathbb{E}_{y \sim Y} \|G(y) - y\|_1. \tag{6}$$

We use this regularization term only in the first half of the training phase, because we find that it impacts the generative performance of the network to some extent.

Overall loss. Our final objective function is as follows:

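$$\mathcal{L}_{\text{total}}(G, D, h, g) = \mathcal{L}_{\text{GAN}}(G, D, X, Y) + \lambda_{NCE} \mathcal{L}_{\text{MultiStage}}(G, h, g, X) + \lambda_{IDT} \mathcal{L}_{\text{identity}}(G, Y), \tag{7}$$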

where $\lambda_{NCE}$ and $\lambda_{IDT}$ are set to 2 and 10, respectively.
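As a small worked example of Eq. (7), the weighting and the identity-loss schedule can be sketched as follows. The function name `total_loss`, its arguments, and the hard cut-off at exactly half of the training steps are assumptions made for illustration; the text only states that the identity term is used in the first half of training.

```python
LAMBDA_NCE = 2.0   # weight on the multi-stage loss, as stated in the text
LAMBDA_IDT = 10.0  # weight on the identity mapping loss, as stated in the text


def total_loss(gan, multistage, identity, step, total_steps):
    """Combine the three scalar loss values following Eq. (7).

    The identity term is dropped once `step` reaches half of `total_steps`
    (assumed here to be a hard switch rather than a gradual decay).
    """
    use_identity = step < total_steps / 2
    identity_term = LAMBDA_IDT * identity if use_identity else 0.0
    return gan + LAMBDA_NCE * multistage + identity_term


early = total_loss(1.0, 0.5, 0.1, step=0, total_steps=100)   # identity on
late = total_loss(1.0, 0.5, 0.1, step=50, total_steps=100)   # identity off
```

With these toy values, the early-training total includes the weighted identity term, while the late-training total contains only the adversarial and multi-stage terms.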

Discussion

EnCo achieves content consistency by constraining the similarity between representations of the encoder and decoder features at multiple stages in the latent space. There are two additional perspectives on how EnCo achieves content consistency. First, in relation to reconstruction-based methods, EnCo requires that decoder features be able to predict their corresponding encoder features through the prediction MLP, which is somewhat similar to the reconstruction-based approach. However, EnCo conducts the reconstruction at the feature level rather than the pixel level, which provides more freedom when enforcing content consistency. EnCo degenerates into a special CycleGAN approach when we constrain only the consistency of the input and generated images, with the projection being an identity network and the prediction network being another generator. Second, EnCo can also be regarded as an implicit and lightweight Siamese network paradigm (similar to CUT), where encoder and decoder features are constrained to have similar representations in the latent space through the shared projection MLP.
