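The previous post covered the core uses and concepts of SLS. This post uses one example, ingesting JSON-format logs from a Linux server into Alibaba Cloud SLS, to walk through the key settings and caveats of SLS ingestion, and shows how to automate the process with a few simple scripts.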

I. SLS log ingestion workflow

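[1] Preparation (confirm log paths, formats, etc.) ↓

[2] Install the Logtail client on the Linux server ↓

[3] Create the SLS Project ↓

[4] Create the Logstores ↓

[5] Create machine groups ↓

[6] Create collection configs (Logtail Config or Pipeline) ↓

[7] Bind configs to machine groups ↓

[8] Enable indexing ↓

[9] Verify the log data in the SLS console ↓

[10] Grant users access (read/write) ↓

[11] Log shipping / hot-cold tiering (optional)

- Steps 1 and 2 are mainly done on the user side and are not covered here; see the Alibaba Cloud official documentation for details.

- The code that follows is split into one script per workflow step; the scripts can easily be merged if needed.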
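If you do merge the per-step scripts, one option is a small driver that runs the steps in workflow order and stops at the first failure, so that later steps (such as binding configs) never run against a half-built setup. This is a minimal sketch; the step functions are hypothetical placeholders for each script's `main()`, and it assumes each step raises on failure, whereas the scripts in this post catch and print their own errors.

```python
from typing import Callable, List, Tuple

# A step is a (name, callable) pair; the callable is assumed to raise on failure.
Step = Tuple[str, Callable[[], None]]


def run_pipeline(steps: List[Step]) -> List[str]:
    """Run steps in order and return the names of the steps that completed.

    Execution stops at the first step that raises.
    """
    completed: List[str] = []
    for name, step in steps:
        try:
            step()
        except Exception as exc:  # report which step failed, then stop
            print(f"[{name}] failed: {exc}")
            break
        print(f"[{name}] done")
        completed.append(name)
    return completed


# Hypothetical placeholders; in practice these would wrap each script's main().
def create_project() -> None: ...
def create_logstores() -> None: ...
def create_machine_groups() -> None: ...
def create_configs_and_bind() -> None: ...


if __name__ == "__main__":
    run_pipeline([
        ("project", create_project),
        ("logstores", create_logstores),
        ("machine groups", create_machine_groups),
        ("logtail configs + binding", create_configs_and_bind),
    ])
```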

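II. Create the SLS Project

The key point in this step is the name: a Project name cannot be changed after creation, only deleted and recreated. Discovering that the Project name breaks your naming convention after everything has already been ingested is extremely frustrating.

Also note that a deleted Project stays in the recycle bin for 7 days by default, and even while it is there, Alibaba Cloud still treats some of its settings as in effect. For example, if Logtail configs in several Projects collect the same log file, a config conflict is reported even when the old Project is already in the recycle bin.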
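Because the name is permanent, it is worth validating it before calling the API. A minimal sketch, assuming the Project naming rule in the Alibaba Cloud documentation at the time of writing (3 to 63 characters; lowercase letters, digits and hyphens only; must start and end with a lowercase letter or digit). Global uniqueness cannot be checked locally, and any team naming convention would be an extra check on top of this.

```python
import re

# Assumed SLS Project naming rule (verify against the current docs):
# 3-63 chars, lowercase letters / digits / hyphens,
# starting and ending with a lowercase letter or digit.
PROJECT_NAME_RE = re.compile(r"[a-z0-9][a-z0-9-]{1,61}[a-z0-9]")


def is_valid_project_name(name: str) -> bool:
    """Return True if `name` matches the assumed Project naming rule."""
    return PROJECT_NAME_RE.fullmatch(name) is not None


for candidate in ["sls-project", "slsproject-1", "SLS_Project", "ab", "-sls"]:
    print(candidate, is_valid_project_name(candidate))
```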

```python
import os

from aliyun.log import LogClient

# This example reads the AccessKey ID and AccessKey Secret from environment variables.
access_key_id = os.environ.get('ALIBABA_CLOUD_ACCESS_KEY_ID', '')
access_key_secret = os.environ.get('ALIBABA_CLOUD_ACCESS_KEY_SECRET', '')

# SLS service endpoint
endpoint = "cn-shenzhen.log.aliyuncs.com"

# Create the SLS client.
client = LogClient(endpoint, access_key_id, access_key_secret)

# Project name (cannot be changed after creation); the later scripts use the same name.
project_name = "sls-project"
# Project description; project_des = "" means an empty description
project_des = "your description"


def main():
    # Call create_project; if no exception is raised, the call succeeded.
    try:
        res = client.create_project(project_name, project_des)
        res.log_print()
        res = client.get_project(project_name)
        res.log_print()
    except Exception as error:
        print(error)


if __name__ == '__main__':
    main()
```
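The scripts fall back to an empty string when the credential environment variables are missing, which typically only surfaces later as an authentication error from the API. A minimal fail-fast sketch; `load_sls_settings` is a hypothetical helper, and it assumes the public endpoint follows the `<region>.log.aliyuncs.com` pattern used above and the internal one `<region>-intranet.log.aliyuncs.com`.

```python
import os
from typing import NamedTuple


class SlsSettings(NamedTuple):
    endpoint: str
    access_key_id: str
    access_key_secret: str


def load_sls_settings(region: str, intranet: bool = False) -> SlsSettings:
    """Build the endpoint and read credentials, failing fast if any are missing."""
    key_id = os.environ.get("ALIBABA_CLOUD_ACCESS_KEY_ID", "")
    key_secret = os.environ.get("ALIBABA_CLOUD_ACCESS_KEY_SECRET", "")
    missing = [
        name
        for name, value in (
            ("ALIBABA_CLOUD_ACCESS_KEY_ID", key_id),
            ("ALIBABA_CLOUD_ACCESS_KEY_SECRET", key_secret),
        )
        if not value
    ]
    if missing:
        raise RuntimeError(f"missing environment variables: {', '.join(missing)}")
    # Assumed endpoint patterns: public vs. intranet (for servers in the same region).
    suffix = "-intranet" if intranet else ""
    return SlsSettings(f"{region}{suffix}.log.aliyuncs.com", key_id, key_secret)
```

Since the tuple fields are in the same order as the `LogClient(endpoint, access_key_id, access_key_secret)` arguments used above, a script could then call `LogClient(*load_sls_settings("cn-shenzhen"))`.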

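III. Create Logstores

Key points for this step:

- Retention period (ttl): how long logs are kept in hot storage, which directly affects cost. For example, with ttl set to 15 and no log shipping or hot/cold tiering configured, logs older than 15 days can no longer be queried in SLS.

- webtracking: controls whether logs may be written to the Logstore directly through the HTTP/HTTPS API. When enabled, you do not need a collector such as Logtail, Flume or Beats; clients (browser JS, mobile apps, mini programs, third-party backends) can call the SLS API directly to send log data to this Logstore.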
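To make the `ttl` trade-off concrete, the oldest log still queryable is roughly "now minus ttl days". A minimal sketch of that arithmetic; it treats retention as exact 24-hour days, which is only an approximation of when the service actually removes data.

```python
from datetime import datetime, timedelta, timezone


def oldest_queryable(now: datetime, ttl_days: int) -> datetime:
    """Approximate the oldest log time still queryable under a given TTL."""
    return now - timedelta(days=ttl_days)


now = datetime(2024, 6, 30, 12, 0, tzinfo=timezone.utc)
print(oldest_queryable(now, 15))  # → 2024-06-15 12:00:00+00:00
```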

WebTracking looks simpler, so why doesn't Alibaba Cloud make it the default?
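Part of the answer shows up in what a WebTracking write looks like: the request carries no AccessKey signature, so anyone who learns the Project, Logstore and endpoint can write to the Logstore, and the cost of that traffic is billed to you. A minimal sketch that only builds such a request URL and sends nothing; the GET format (`/logstores/<logstore>/track?APIVersion=0.6.0&<field>=<value>...`) is an assumption based on the SLS WebTracking documentation and should be checked there.

```python
from urllib.parse import urlencode


def webtracking_url(project: str, endpoint: str, logstore: str, fields: dict) -> str:
    """Build a WebTracking GET URL (assumed format). Note: no signature, no AccessKey."""
    query = urlencode({"APIVersion": "0.6.0", **fields})
    return f"https://{project}.{endpoint}/logstores/{logstore}/track?{query}"


print(webtracking_url("sls-project", "cn-shenzhen.log.aliyuncs.com",
                      "a-http-vm", {"event": "click", "page": "/home"}))
# → https://sls-project.cn-shenzhen.log.aliyuncs.com/logstores/a-http-vm/track?APIVersion=0.6.0&event=click&page=%2Fhome
```

This is also why the Logstore script in this post keeps `enable_tracking=False`.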

Code:

```python
import os

from aliyun.log import LogClient, LogException

# This example reads the AccessKey ID and AccessKey Secret from environment variables.
access_key_id = os.environ.get('ALIBABA_CLOUD_ACCESS_KEY_ID', '')
access_key_secret = os.environ.get('ALIBABA_CLOUD_ACCESS_KEY_SECRET', '')

# SLS service endpoint
endpoint = "cn-shenzhen.log.aliyuncs.com"
client = LogClient(endpoint, access_key_id, access_key_secret)

# Project name
project_name = 'sls-project'

# Logstores to create
logstore_names = ['a-http-vm', 'b-http-vm', 'c-http-vm', 'd-http-vm']
ttl = 15                  # hot-storage retention, in days
shard_count = 2
append_meta = True        # let the server append metadata such as receive time and client IP
enable_tracking = False   # WebTracking disabled


def main():
    for logstore_name in logstore_names:
        print(f'Creating logstore: {logstore_name}')
        try:
            client.create_logstore(project_name, logstore_name, ttl=ttl, shard_count=shard_count,
                                   enable_tracking=enable_tracking, append_meta=append_meta)
            print(f'Created logstore: {logstore_name}')
        except LogException as e:
            if e.get_error_code() == 'LogStoreAlreadyExist':
                print(f'Logstore already exists: {logstore_name}')
            else:
                print(f'Failed to create logstore: {logstore_name}, error: {e}')


if __name__ == '__main__':
    main()
```

IV. Create machine groups

As the name suggests, a machine group defines which machines logs are collected from.
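An IP-based machine group only works if each IP matches the one Logtail itself reports; otherwise the group's heartbeat shows as failed. A minimal sketch for checking this on a server, assuming Logtail writes its reported IP to the `ip` field of `/usr/local/ilogtail/app_info.json` (the usual location for Logtail on Linux; newer agents may use a different path). `reported_ip` and `check_machine_group` are hypothetical helper names.

```python
import json
from pathlib import Path
from typing import Iterable, Optional

# Assumed default location of the Logtail status file on Linux.
APP_INFO = Path("/usr/local/ilogtail/app_info.json")


def reported_ip(app_info: Path = APP_INFO) -> Optional[str]:
    """Return the IP Logtail reports, or None if the file or field is absent."""
    try:
        return json.loads(app_info.read_text()).get("ip")
    except (OSError, ValueError):  # missing file or invalid JSON
        return None


def check_machine_group(group_ips: Iterable[str], app_info: Path = APP_INFO) -> bool:
    """True if this host's reported IP is one of the machine-group IPs."""
    ip = reported_ip(app_info)
    return ip is not None and ip in set(group_ips)


if __name__ == "__main__":
    print(check_machine_group(["192.168.1.126"]))
```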

```python
import os

from aliyun.log import LogClient, MachineGroupDetail

# This example reads the AccessKey ID and AccessKey Secret from environment variables.
access_key_id = os.environ.get('ALIBABA_CLOUD_ACCESS_KEY_ID', '')
access_key_secret = os.environ.get('ALIBABA_CLOUD_ACCESS_KEY_SECRET', '')

# SLS service endpoint
endpoint = "cn-shenzhen.log.aliyuncs.com"

# Instantiate the LogClient
client = LogClient(endpoint, access_key_id, access_key_secret)
project_name = "sls-project"

# Group names and IPs, paired one-to-one
group_names = ["a-http-vm", "b-http-vm", "c-http-vm", "d-http-vm"]
machine_ips = ["192.168.1.126", "192.168.1.127", "192.168.1.128", "192.168.1.129"]


def main():
    for group_name, ip in zip(group_names, machine_ips):
        machine_type = "ip"
        machine_list = [ip]  # one IP per group
        group_detail = MachineGroupDetail(group_name, machine_type, machine_list)
        try:
            res = client.create_machine_group(project_name, group_detail)
            print(f'Created machine group {group_name}, IP: {ip}')
            res.log_print()
        except Exception as e:
            print(f'Failed to create machine group {group_name}, IP: {ip}. Error: {e}')


if __name__ == '__main__':
    main()
```

V. Create collection configs & bind them to machine groups

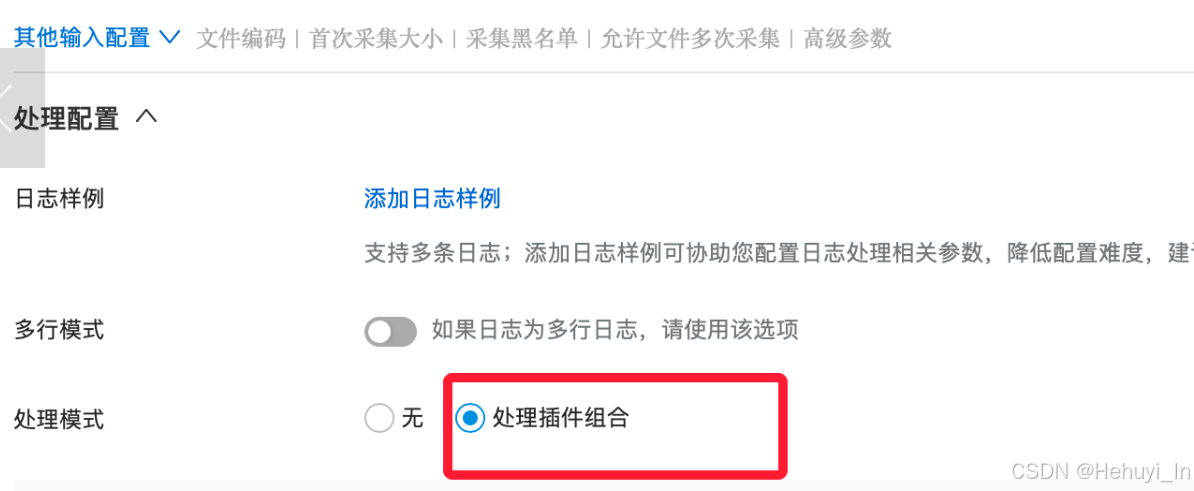

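This step has the most parameters and is comparatively the most complex; the core parameters are set in the common-configuration section of the code.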
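As noted in the Project section, two configs collecting the same file on the same machine cause a conflict, so it can help to check the planned configs before submitting them. A minimal sketch over `(config name, machine IPs, log file)` triples; it only sees the list you pass in, not configs that already exist on the server (including those in a recycled Project). `find_conflicts` and the `a-http-vm-copy` entry are hypothetical, for illustration only.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# (config name, machine IPs the config is bound to, full log-file path)
ConfigSpec = Tuple[str, Iterable[str], str]


def find_conflicts(specs: Iterable[ConfigSpec]) -> Dict[Tuple[str, str], List[str]]:
    """Map (ip, log file) -> config names, keeping only pairs claimed more than once."""
    owners: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for config_name, ips, log_file in specs:
        for ip in ips:
            owners[(ip, log_file)].append(config_name)
    return {key: names for key, names in owners.items() if len(names) > 1}


specs = [
    ("a-http-vm", ["192.168.1.126"], "/var/log/nginx/logstash_access.log"),
    ("b-http-vm", ["192.168.1.127"], "/var/log/nginx/logstash_access.log"),
    # Hypothetical duplicate: a second config on the same machine and file.
    ("a-http-vm-copy", ["192.168.1.126"], "/var/log/nginx/logstash_access.log"),
]
print(find_conflicts(specs))
```

The same path on different machines (as with `a-http-vm` and `b-http-vm` here) is not flagged, because each of those configs is bound to a different single-IP group.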

Code:

```python
import os

from aliyun.log import LogClient
from aliyun.log.logtail_config_detail import SimpleFileConfigDetail

# This example reads the AccessKey ID and AccessKey Secret from environment variables.
access_key_id = os.environ.get('ALIBABA_CLOUD_ACCESS_KEY_ID', '')
access_key_secret = os.environ.get('ALIBABA_CLOUD_ACCESS_KEY_SECRET', '')

# SLS service endpoint
endpoint = "cn-shenzhen.log.aliyuncs.com"
client = LogClient(endpoint, access_key_id, access_key_secret)
project = "sls-project"

# Data sources: the three lists correspond one-to-one
logstore_names = ["a-http-vm", "b-http-vm", "c-http-vm", "d-http-vm"]
group_names = ["a-http-vm", "b-http-vm", "c-http-vm", "d-http-vm"]
logs = [
    "/var/log/nginx/logstash_access.log",
    "/var/log/nginx/logstash_access.log",
    "/var/log/nginx/logstash_access.log",
    "/var/lib/gerrit/logs/httpd_log.json",
]

# Common configuration
timeFormat = ''
timeKey = ''
localStorage = True
enableRawLog = False
topicFormat = 'none'
fileEncoding = 'utf8'
maxDepth = 0
preserve = True
preserveDepth = 0
filterKey = []
filterRegex = []
adjustTimezone = False
delayAlarmBytes = 0
delaySkipBytes = 0
discardNonUtf8 = False
discardUnmatch = False
dockerFile = False
logBeginRegex = '.*'
logTimezone = ''
logType = 'json_log'   # JSON mode
maxSendRate = -1
mergeType = 'topic'
priority = 0
sendRateExpire = 0
sensitive_keys = []
tailExisted = False


def main():
    for logstore, group, logfile in zip(logstore_names, group_names, logs):
        logPath = os.path.dirname(logfile)       # directory part of the path
        filePattern = os.path.basename(logfile)  # log file name
        configName = logstore                    # use the Logstore name as the config name
        try:
            config_detail = SimpleFileConfigDetail(
                logstoreName=logstore, configName=configName,
                logPath=logPath, filePattern=filePattern,
                timeFormat=timeFormat, timeKey=timeKey,
                localStorage=localStorage, enableRawLog=enableRawLog,
                topicFormat=topicFormat, fileEncoding=fileEncoding,
                maxDepth=maxDepth, preserve=preserve, preserveDepth=preserveDepth,
                filterKey=filterKey, filterRegex=filterRegex,
                adjustTimezone=adjustTimezone,
                delayAlarmBytes=delayAlarmBytes, delaySkipBytes=delaySkipBytes,
                discardNonUtf8=discardNonUtf8, discardUnmatch=discardUnmatch,
                dockerFile=dockerFile, logBeginRegex=logBeginRegex,
                logTimezone=logTimezone, logType=logType,
                maxSendRate=maxSendRate, mergeType=mergeType, priority=priority,
                sendRateExpire=sendRateExpire, sensitive_keys=sensitive_keys,
                tailExisted=tailExisted,
            )

            # Create the Logtail config
            print(f"Creating Logtail config: {configName}, log path: {logPath}, file: {filePattern}")
            res = client.create_logtail_config(project, config_detail)
            res.log_print()

            # Bind it to the machine group
            print(f"Binding collection config {configName} to machine group {group}")
            res = client.apply_config_to_machine_group(project, configName, group)
            res.log_print()

            # Read the config back to confirm
            res = client.get_logtail_config(project, configName)
            res.log_print()
            print("=" * 80)
        except Exception as e:
            print(f"[Error] Failed to create {configName}: {e}")


if __name__ == '__main__':
    main()
```

VI. Enable indexing

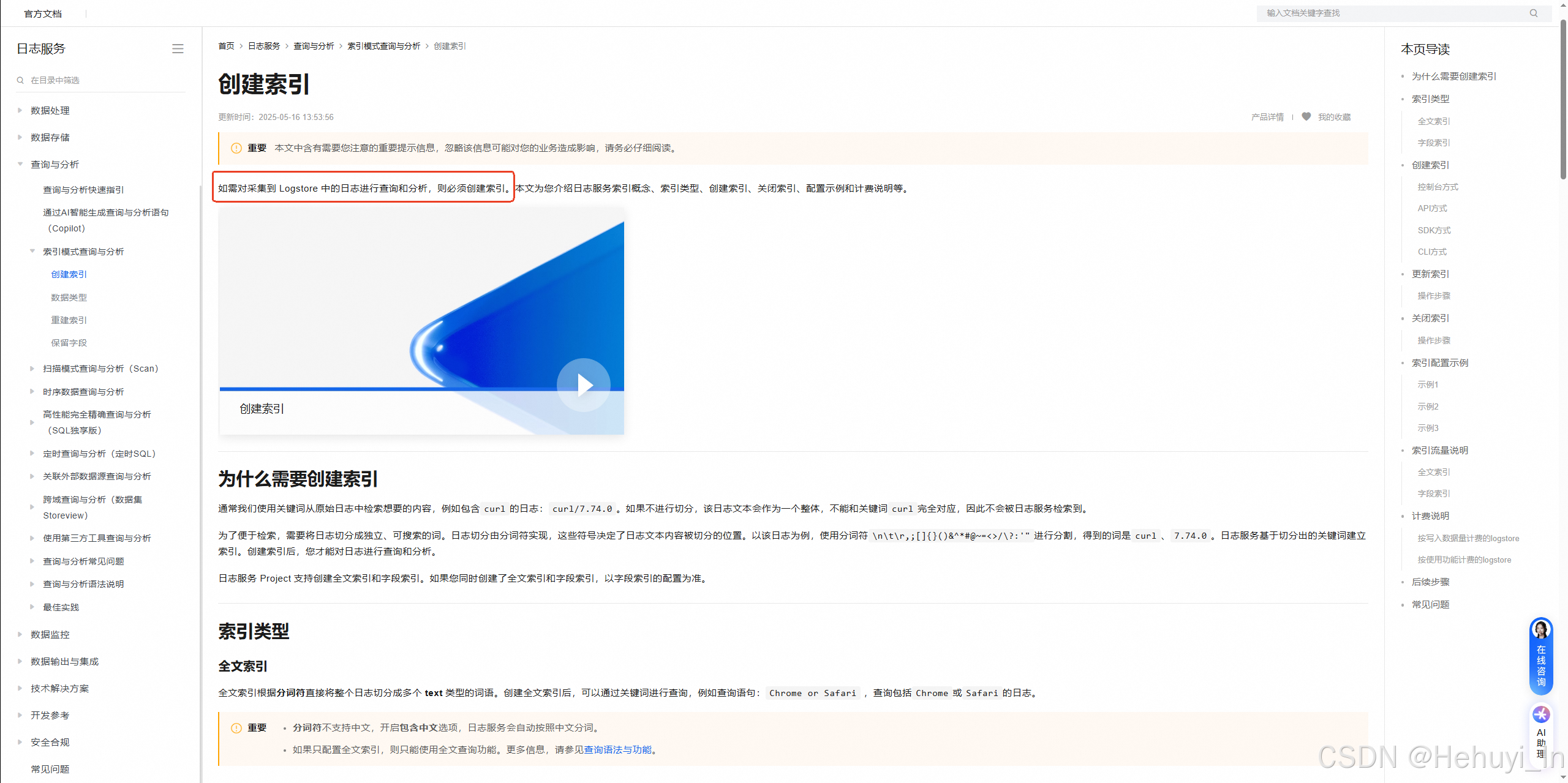

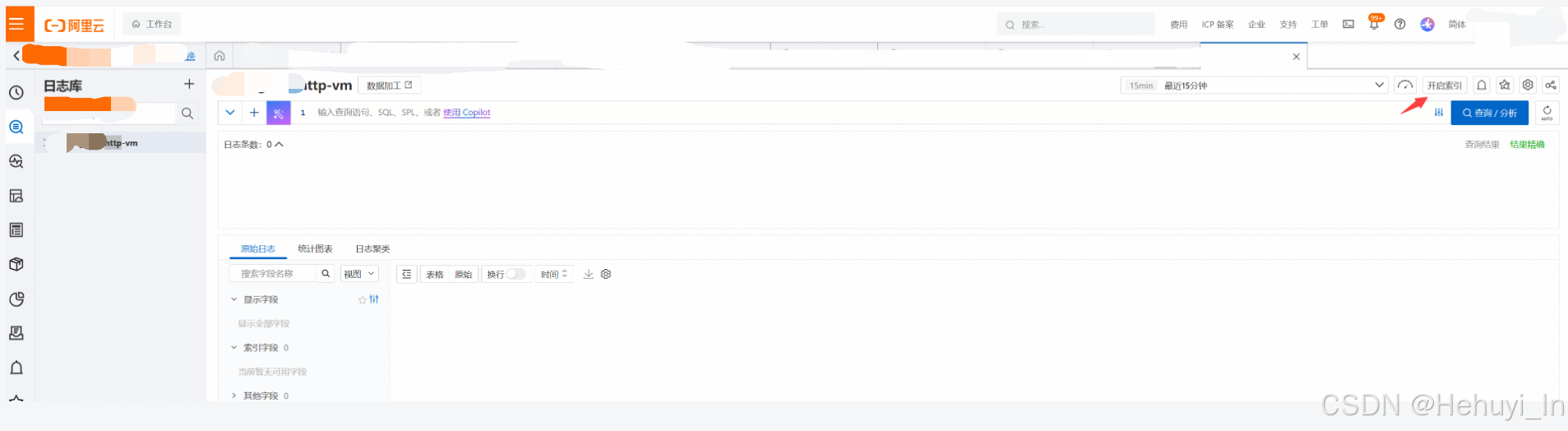

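Indexing must be enabled before you can query and analyze logs.

This can also be done in code, but the API currently has no "auto-generate index" feature, so you have to analyze the log structure yourself and write code to handle it. For large volumes of standardized logs this is worth automating; for non-standard logs, clicking through the console is faster.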
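For standardized JSON logs, one way to avoid hand-writing every field index is to derive them from a sample line. A minimal sketch, assuming the SLS index JSON layout with a `keys` map whose entries carry `type` (`text`, `long`, `double`, `json`), `doc_value` (enables statistics/analysis) and, for text fields, `token` and `caseSensitive`; the token list is an assumed subset of the console defaults. Submitting the result (for example via the Python SDK's `IndexConfig` and `create_index`) is left out and should be checked against the SDK documentation.

```python
import json
from typing import Any, Dict

# Assumed tokenizer characters for text fields (similar to the console defaults).
TOKENS = [",", " ", "'", '"', ";", "=", "(", ")", "[", "]", "{", "}",
          "?", "@", "&", "<", ">", "/", ":", "\n", "\t", "\r"]


def field_type(value: Any) -> str:
    """Map a JSON value to an assumed SLS index type."""
    if isinstance(value, bool):  # bool is a subclass of int; index it as text
        return "text"
    if isinstance(value, int):
        return "long"
    if isinstance(value, float):
        return "double"
    if isinstance(value, dict):
        return "json"
    return "text"  # strings, lists, null


def infer_index(sample_line: str) -> Dict[str, Any]:
    """Build an index definition with one field index per top-level key."""
    keys: Dict[str, Any] = {}
    for name, value in json.loads(sample_line).items():
        ftype = field_type(value)
        entry: Dict[str, Any] = {"type": ftype, "doc_value": True}
        if ftype == "text":
            entry.update({"token": TOKENS, "caseSensitive": False})
        keys[name] = entry
    return {"keys": keys}


if __name__ == "__main__":
    sample = '{"status": 200, "request_time": 0.012, "uri": "/index.html"}'
    print(json.dumps(infer_index(sample), indent=2))
```

Inferring from one line is fragile (a field that is `200` in one record may be `"-"` in another), so reviewing the generated types against several samples is advisable.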

After enabling indexing, wait about two minutes; if ingestion is working, the logs should appear.

VII. Grant users access (read/write)

Permissions are usually granted per Project or per Logstore.
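The policies in this section differ from one Project/Logstore to the next only in the resource names, so when granting access to many Logstores it can be easier to generate them. A minimal sketch that reproduces the structure of the read-write policy in this section for arbitrary names; `read_write_policy` is a hypothetical helper, and the action names are copied from that policy rather than checked against the full RAM action list.

```python
import json
from typing import Iterable


def read_write_policy(project: str, logstores: Iterable[str]) -> dict:
    """Full access to the given Logstores, plus permission to list Projects."""
    resources = []
    for logstore in logstores:
        base = f"acs:log:*:*:project/{project}/logstore/{logstore}"
        resources += [base, f"{base}/*"]  # the Logstore itself and everything under it
    return {
        "Version": "1",
        "Statement": [
            {"Effect": "Allow", "Action": "log:*", "Resource": resources},
            {"Effect": "Allow", "Action": "log:ListProject", "Resource": "*"},
        ],
    }


print(json.dumps(read_write_policy("sls-project", ["a-http-vm"]), indent=2))
```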

1. Read-only permission

```json
{
  "Version": "1",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "log:ListProjects",
        "log:GetAcceleration",
        "log:ListDomains",
        "log:GetLogging",
        "log:ListTagResources"
      ],
      "Resource": "acs:log:*:*:project/*"
    },
    {
      "Effect": "Allow",
      "Action": "log:GetProject",
      "Resource": "acs:log:*:*:project/sls-project"
    },
    {
      "Effect": "Allow",
      "Action": "log:ListLogStores",
      "Resource": "acs:log:*:*:project/sls-project/logstore/*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "log:GetLogStore",
        "log:GetLogStoreHistogram",
        "log:GetIndex",
        "log:ListShards",
        "log:GetLogStoreContextLogs",
        "log:GetLogStoreLogs",
        "log:GetCursorOrData"
      ],
      "Resource": "acs:log:*:*:project/sls-project/logstore/a-http-vm"
    },
    {
      "Effect": "Allow",
      "Action": "log:*SavedSearch",
      "Resource": "acs:log:*:*:project/sls-project/*"
    },
    {
      "Effect": "Allow",
      "Action": "log:*",
      "Resource": "acs:log:*:*:project/sls-project/dashboard/*"
    }
  ]
}
```

2. Read-write permission

{"Version": "1","Statement": [{"Effect": "Allow","Action": "log:*","Resource": ["acs:log:*:*:project/sls-project/logstore/a-http-vm","acs:log:*:*:project/sls-project/logstore/a-http-vm/*"]},{"Effect": "Allow","Action": "log:ListProject","Resource": "*"}]

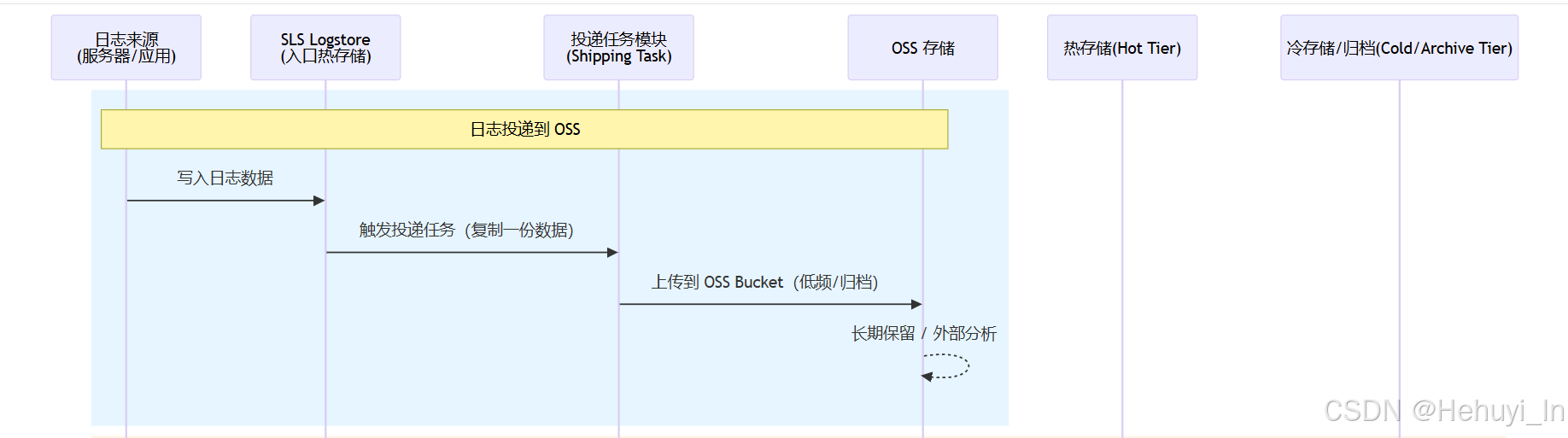

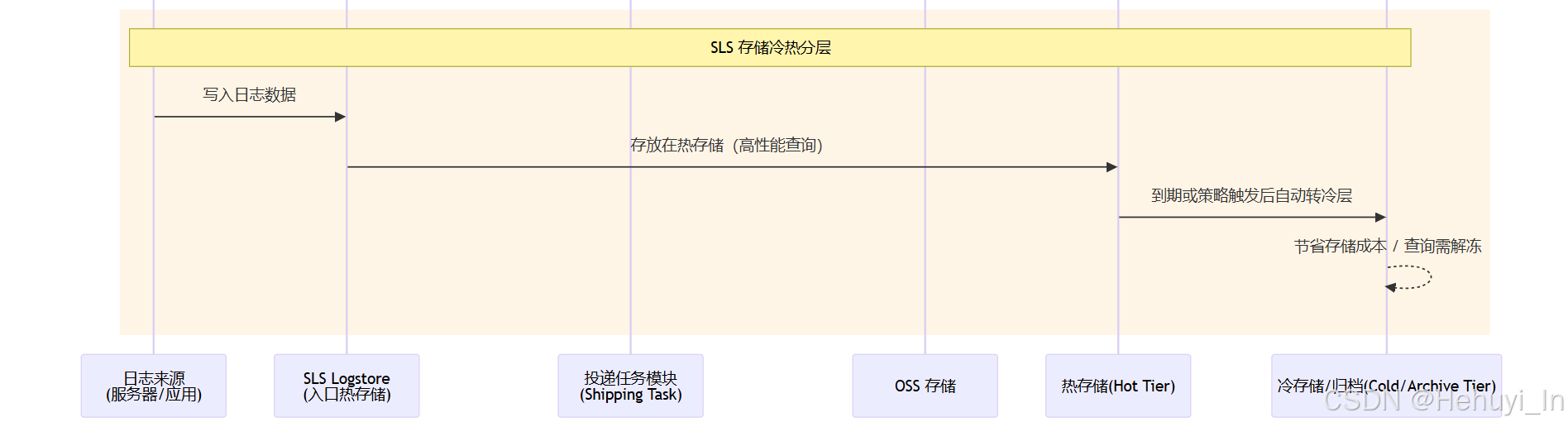

}八、?日志投遞/冷熱分層

1. Data flow methods

2. Similarities and differences

References

Create a Project - Simple Log Service (SLS) - Alibaba Cloud Help Center

Manage Projects - Simple Log Service (SLS) - Alibaba Cloud Help Center

GPT 5
