Comparative study of two object detectors: YOLO and Faster R-CNN

Requirements

Image Processing with Python Final Project

Derek Tan

Project Goals:

- Gain an understanding of the object detection pipeline.
- Learn to develop anchor-based single-stage object detectors.
- Learn to develop a two-stage object detector that combines a region proposal network with a recognition network.

Coding:

Q1: The notebook single_stage_detector_yolo.ipynb will guide you through the implementation of a fully-convolutional single-stage object detector similar to YOLO (Redmon et al., CVPR 2016). You will train and evaluate your detector on the PASCAL VOC 2007 object detection dataset.

Q2: The notebook two_stage_detector_faster_rcnn.ipynb will guide you through the implementation of a two-stage object detector similar to Faster R-CNN (Ren et al., NeurIPS 2015). This will combine a fully-convolutional Region Proposal Network (RPN) and a second-stage recognition network.

Steps:

- Unzip the P24 file. You will find three *.py files, two *.ipynb files, and an ip24 folder which includes seven *.py files.

- Carefully read the two *.ipynb notebooks.

- Write the code in the *.py files as indicated. The Python files have clearly marked blocks where you are expected to write code. Do not write or modify any code outside of these blocks. You are only allowed to ADD one block of code to save the final results. You will only get credit for code that has been run.

- Evaluate your implementation. When you are done, save single_stage_detector.py, two_stage_detector.py, and all the outputs (folder Results) in one folder called Coding.

Writing: Write a comparative analysis paper comparing the two object detection algorithms.

Steps:

Your paper should be written in English and should be clear and concise, with proper structure and formatting. Include images, graphs, and tables as necessary to support your analysis. Avoid extensive use of AI-generated content, plagiarism, and copying from classmates. Save your final paper and the references that you used in one folder called Writing. The paper should be no less than 5 pages and must follow the CVPR 2024 template, which can be found in PaperForReview.docx.

Paper Structure:

- Title, Abstract, Introduction
- Methodology:
  - Description of the YOLO-based single-stage object detector implementation.
  - Description of the Faster R-CNN-based two-stage object detector implementation.
- Experimental Setup:
  - Description of dataset: PASCAL VOC 2007 object detection dataset.
  - Evaluation metrics: mAP, inference speed, training time.
- Results and Discussion:
  - Performance comparison between YOLO and Faster R-CNN.
  - Analysis of detection accuracy.
- Conclusion, References

Grading Criteria:

- Implementation of object detection algorithms (40%).
- Clarity and coherence of the comparative analysis paper (30%).
- Depth of analysis and insights provided (20%).
- Presentation, formatting, and adherence to submission requirements (10%).

Final Submission:

1. A zip file that includes the Coding folder and the Writing folder. The zip file should be named using your Chinese name and your English name.
2. No late submissions. Deadline: 1 June 2024, 8 p.m.
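The requirements list inference speed next to mAP and training time. A minimal sketch of how inference speed could be timed, assuming the detector's inference step can be wrapped in a callable that takes one batch of images; measure_inference_speed is a hypothetical helper, not part of the assignment code:

```python
import time

import torch


def measure_inference_speed(infer_fn, images, warmup=3, runs=10):
    """Return (seconds per batch, images per second) for infer_fn(images).

    Hypothetical helper: infer_fn is any callable that runs detection on a batch.
    """
    with torch.no_grad():
        # Warm-up runs exclude one-off costs such as CUDA kernel selection
        for _ in range(warmup):
            infer_fn(images)
        if images.is_cuda:
            torch.cuda.synchronize()  # wait for queued GPU work before starting the clock
        start = time.perf_counter()
        for _ in range(runs):
            infer_fn(images)
        if images.is_cuda:
            torch.cuda.synchronize()  # make sure all timed GPU work has finished
        elapsed = time.perf_counter() - start
    seconds_per_batch = elapsed / runs
    return seconds_per_batch, images.shape[0] / seconds_per_batch
```

For example, measure_inference_speed(model.eval(), batch) with the same batch size for both detectors gives directly comparable numbers; the explicit synchronize calls matter because CUDA kernels run asynchronously, so timing without them measures only kernel launch time.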

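As you can see, the requirements above are all in English... This is for the course "Python Image Processing", and anyone who takes it on is left speechless.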

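What follows is only a reference produced by me with AI help, not a correct answer; there is no official answer to be found anyway. The code also seems to be several years old: besides not knowing where code is missing, I ran into several version-compatibility problems.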

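The key materials are all in English, which is not very convenient to read, so the walkthrough below keeps much of the original English wording.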

First, download the assignment archive.

Ⅰ [Optional] Download the dataset

If you want to download the dataset yourself:

The dataset comes from the VOC2007 challenge page:

The PASCAL Visual Object Classes Challenge 2007 (VOC2007) (ox.ac.uk)

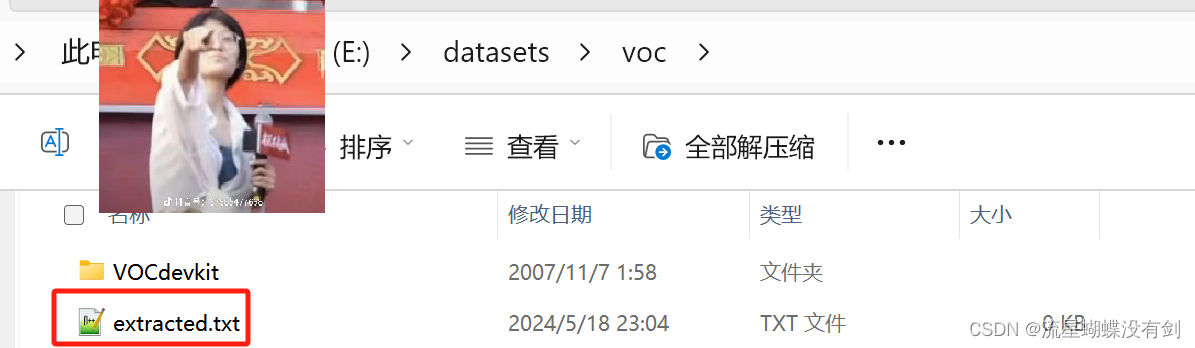

Unzip it to a local directory.

Example: E:\datasets\voc

Then create an empty TXT file named extracted.txt in this directory.

This is because the function get_pascal_voc2007_data() in a5_helper.py checks for that file: if extracted.txt exists, it does not download the dataset again.

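```python
import os

from torchvision import datasets


def get_pascal_voc2007_data(image_root, split='train'):
    """
    Use torchvision.datasets
    https://pytorch.org/docs/stable/torchvision/datasets.html#torchvision.datasets.VOCDetection
    """
    # If extracted.txt already exists, assume the data is on disk and skip the download
    check_file = os.path.join(image_root, "extracted.txt")
    download = not os.path.exists(check_file)

    train_dataset = datasets.VOCDetection(image_root, year='2007', image_set=split,
                                          download=download)

    # Create the marker file so later calls do not download again
    open(check_file, 'a').close()

    return train_dataset
```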
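If you unzipped the archive by hand, the marker file can also be created from Python instead of by hand. A small sketch; create_voc_marker is a hypothetical helper name. It assumes the unzipped tar leaves VOCdevkit/VOC2007 directly under the root you pass in, which is the layout torchvision's VOCDetection looks for and matches the E:\datasets\voc\VOCdevkit\VOC2007 paths used later in this post:

```python
from pathlib import Path


def create_voc_marker(image_root):
    """Create the empty extracted.txt that get_pascal_voc2007_data() checks for.

    Hypothetical helper: once the marker exists, the loader passes download=False
    and uses the already-unzipped files.
    """
    marker = Path(image_root) / "extracted.txt"
    marker.parent.mkdir(parents=True, exist_ok=True)
    marker.touch(exist_ok=True)  # creates an empty file, or leaves an existing one as is
    return marker
```

Usage: create_voc_marker(r"E:\datasets\voc").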

Ⅱ Open the code in PyCharm

(Ten thousand words omitted here...)

Ⅲ Install JupyterLab

This is mainly for running the notebook (.ipynb) files. It is skipped here as well.

Ⅳ Modify the code

- Remove every import of eecs598 from the files in the IP24 directory, i.e. delete all lines reading import eecs598.
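Doing this by hand across seven files is error-prone, so here is a small script that does the deletion. It is a sketch: strip_eecs598_imports is a hypothetical name, and matching from eecs598 ... import lines as well as plain import eecs598 is an assumption beyond the instruction above. Any code that still calls eecs598.something afterwards has to be fixed by hand.

```python
import re
from pathlib import Path

# Matches "import eecs598..." or "from eecs598 ... import ..." lines (optional indentation)
EECS598_IMPORT = re.compile(r'^\s*(import\s+eecs598\b|from\s+eecs598\b).*$')


def strip_eecs598_imports(folder):
    """Delete eecs598 import lines from every .py file under folder.

    Hypothetical helper; returns the list of files that were changed.
    """
    changed = []
    for path in Path(folder).rglob('*.py'):
        lines = path.read_text(encoding='utf-8').splitlines(keepends=True)
        kept = [line for line in lines if not EECS598_IMPORT.match(line)]
        if len(kept) != len(lines):
            path.write_text(''.join(kept), encoding='utf-8')
            changed.append(path)
    return changed
```

Running strip_eecs598_imports('IP24') once and printing the returned list shows which files were touched.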

- Edit single_stage_detector.py

This code is very long. You can type it in yourself or get it from my CSDN resource: 1. single-stage-detector.py (see the end of the article). Note: it's best to write this part yourself; mine is only reference code.

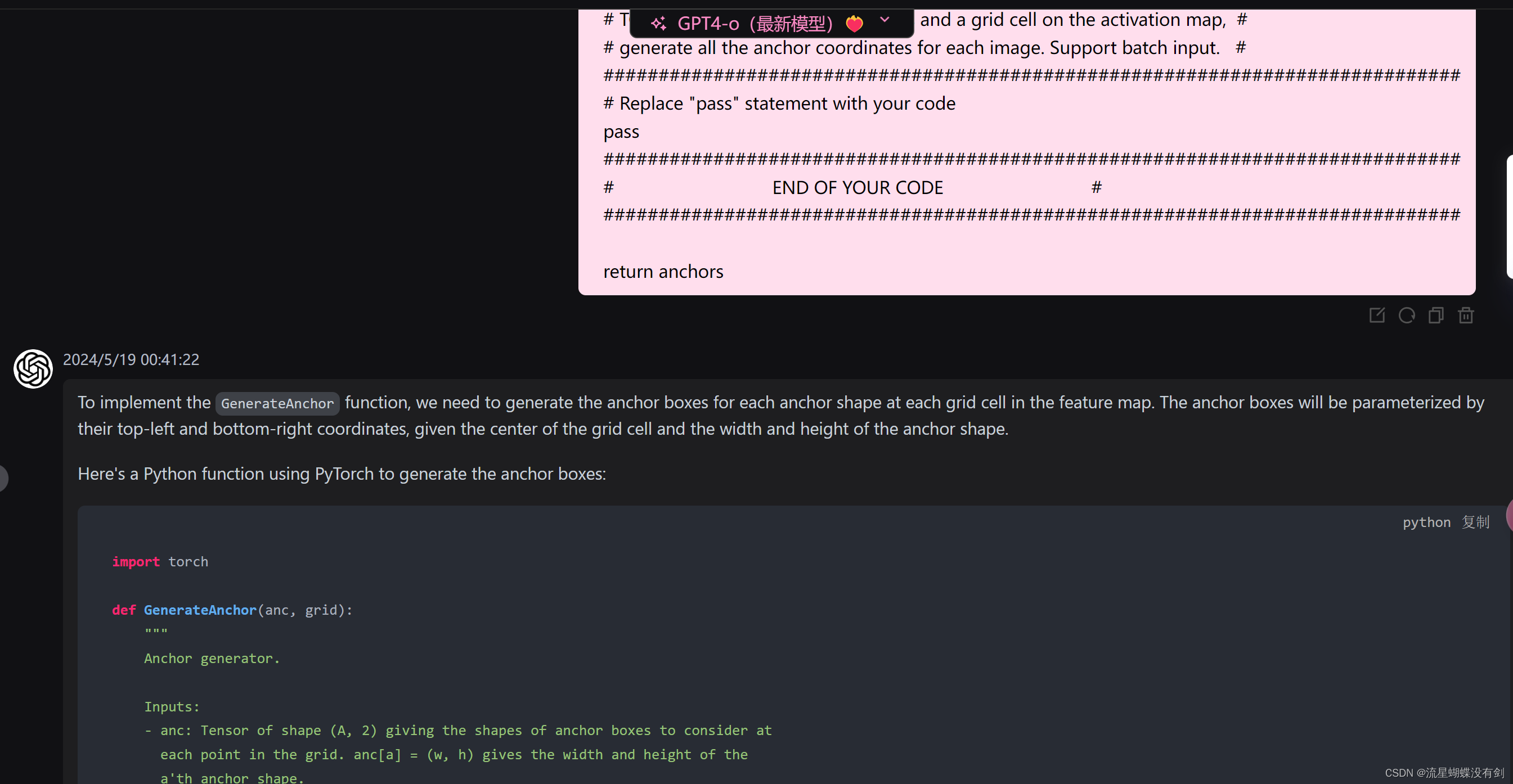

Below is a brief explanation of how a few of the functions are implemented (the explanation was written with AI help).

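single_stage_detector.py

def GenerateAnchor(anc, grid):

Generates the coordinates of all anchor boxes for each image. Anchor boxes are bounding boxes predefined at every grid position with several shapes, and they are used to predict the location and size of objects.

Inputs:

- anc: a tensor of shape (A, 2) giving the anchor shapes to consider at each grid point. anc[a] = (w, h) gives the width and height of the a-th anchor shape.
- grid: a tensor of shape (B, H', W', 2) giving the center coordinates (x, y) of each feature in the backbone feature map. This is the tensor returned by GenerateGrid.

Output:

- anchors: a tensor of shape (B, A, H', W', 4) giving the positions of all anchor boxes for the entire image. anchors[b, a, h, w] is an anchor box centered at grid[b, h, w] whose shape is given by anc[a]. Anchors are parameterized as (x_tl, y_tl, x_br, y_br), where (x_tl, y_tl) and (x_br, y_br) are the xy coordinates of the top-left and bottom-right corners.

Implementation:

- Read the shapes of grid and anc into the variables B, H, W and A.
- Create an all-zero tensor anchors of shape (B, A, H, W, 4) to hold all anchor coordinates. The code assumes a CUDA device; creating the tensor on grid.device instead would also work on a CPU-only machine.
- Loop over every batch element, anchor, row and column with four nested loops.
- Inside the loop, compute the corners of each anchor:
  - x_tl = grid[b, h, w, 0] - anc[a, 0] / 2: the grid-center x coordinate minus half the anchor width.
  - y_tl = grid[b, h, w, 1] - anc[a, 1] / 2: the grid-center y coordinate minus half the anchor height.
  - x_br = grid[b, h, w, 0] + anc[a, 0] / 2: the grid-center x coordinate plus half the anchor width.
  - y_br = grid[b, h, w, 1] + anc[a, 1] / 2: the grid-center y coordinate plus half the anchor height.
- Store the computed coordinates in anchors and return it.

def GenerateProposal(anchors, offsets, method='YOLO'):

Generates region proposals from the given anchors and offsets. Two transformation methods are supported: 'YOLO' and 'FasterRCNN'.

Inputs:

- anchors: a tensor of shape (B, A, H', W', 4) giving the anchor positions, where B is the batch size, A the number of anchors, H' and W' the feature-map height and width, and the last dimension holds the top-left and bottom-right corners.
- offsets: a tensor of shape (B, A, H', W', 4) giving the offsets (tx, ty, tw, th) to apply to each anchor: tx and ty shift the center in x and y, and tw and th are log-space scale factors for the width and height.
- method: a string, either 'YOLO' or 'FasterRCNN'.

Output:

- proposals: a tensor of shape (B, A, H', W', 4) holding the transformed proposals.

Implementation:

- Check that method is 'YOLO' or 'FasterRCNN' and initialize proposals to zeros.
- Loop over every batch element, anchor, row and column with four nested loops.
- Inside the loop, read the anchor coordinates and the corresponding offsets.
- Apply the transform for the chosen method:
  - 'FasterRCNN': the center shifts tx and ty are relative to the anchor's original width and height (x' = x + tx * w, y' = y + ty * h).
  - 'YOLO': tx and ty are added directly to the anchor center (x' = x + tx, y' = y + ty).
- Compute the new width and height as w * torch.exp(tw) and h * torch.exp(th); the exponential keeps them positive.
- Convert back to top-left and bottom-right corners and store them in proposals.

Return value: the function returns proposals, the coordinates of all region proposals.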
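The four nested loops described above are easy to follow but slow on full feature maps. As an illustration only, the same two transforms can be written with tensor broadcasting. The function names below are hypothetical, and this is a sketch of the formulas above, not the assignment's reference solution:

```python
import torch


def generate_anchor_vectorized(anc, grid):
    """Broadcasting version of GenerateAnchor.

    anc:  (A, 2) anchor widths and heights
    grid: (B, H', W', 2) grid-cell centers (x, y)
    returns (B, A, H', W', 4) boxes as (x_tl, y_tl, x_br, y_br)
    """
    centers = grid[:, None]                          # (B, 1, H', W', 2)
    half_wh = anc.to(grid)[None, :, None, None] / 2  # (1, A, 1, 1, 2), same dtype/device as grid
    return torch.cat([centers - half_wh, centers + half_wh], dim=-1)


def generate_proposal_vectorized(anchors, offsets, method='YOLO'):
    """Broadcasting version of GenerateProposal for 'YOLO' and 'FasterRCNN'."""
    assert method in ('YOLO', 'FasterRCNN')
    # Convert anchors from corner form to center/size form
    ctr = (anchors[..., :2] + anchors[..., 2:]) / 2
    wh = anchors[..., 2:] - anchors[..., :2]
    t_xy, t_wh = offsets[..., :2], offsets[..., 2:]
    if method == 'YOLO':
        new_ctr = ctr + t_xy        # YOLO: shift added directly to the center
    else:
        new_ctr = ctr + t_xy * wh   # Faster R-CNN: shift scaled by the anchor size
    new_wh = wh * torch.exp(t_wh)   # exp keeps width and height positive
    return torch.cat([new_ctr - new_wh / 2, new_ctr + new_wh / 2], dim=-1)
```

Because every output is derived from the input tensors, the results stay on the same device as the inputs, which also removes the hard-coded CUDA assumption.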

- Modify the file that runs the detector

single_stage_detector_yolo.ipynb. You can also put all of this together in main.py:

```python
# Import packages
import torch
from a5_helper import *  # provides get_pascal_voc2007_data and pascal_voc2007_loader

# Set the dataset path
voc_root = r"E:\datasets\voc"

train_dataset = get_pascal_voc2007_data(voc_root, 'train')
# val_dataset = get_pascal_voc2007_data(voc_root, 'val')

train_dataset = torch.utils.data.Subset(train_dataset, torch.arange(0, 2500))  # use 2500 samples for training
train_loader = pascal_voc2007_loader(train_dataset, 10)
# NOTE: this reuses the training subset; build it from val_dataset for a real validation split
val_loader = pascal_voc2007_loader(train_dataset, 10)
print("Loading complete!")

# Create an iterator
train_loader_iter = iter(train_loader)

# Get the next batch
img, ann, _, _, _ = next(train_loader_iter)

print('img has shape: ', img.shape)
print('ann has shape: ', ann.shape)

print('Image 1 has only two annotated objects, so ann[1] is padded with -1:')
print(ann[1])

print('\nImage 2 has six annotated objects, so ann[2] is not padded:')
print(ann[2])

print('\nEach row in the annotation tensor indicates (x_tl, y_tl, x_br, y_br, class).')
```

……
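The printout above relies on the loader padding ann with rows of -1 so that every image in a batch has the same number of annotation rows. Assuming that padding convention (taken from the notebook messages, with the class column set to -1 in padded rows), a hypothetical helper can strip the padding and recover each image's real objects:

```python
import torch


def split_annotations(ann):
    """Drop -1 padding rows from a (B, N, 5) annotation batch.

    Each row is (x_tl, y_tl, x_br, y_br, class); padded rows have class == -1.
    Returns a list with one (n_i, 5) tensor per image.
    """
    valid = ann[..., 4] != -1  # (B, N) mask of real objects
    return [ann[b][valid[b]] for b in range(ann.shape[0])]
```

For the batch above, len(split_annotations(ann)[1]) should report the two annotated objects of image 1.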

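Evaluation

This part has problems that I don't understand either. I got it written anyway, but I don't know what is wrong. The final AP was 0.19.

- Convert the results above into the format used for evaluation, grouping all detections of the same class into one file.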

```python
#!/usr/bin/python3.6
# -*- coding: utf-8 -*-
#
# Copyright (C) 2021
#
# @Time     : 2024/5/20 16:47
# @Author   :
# @Email    :
# @File     : 結果txt轉換.py  (result txt conversion)
# @Software : PyCharm

import os


def process_files(directory, outdir):
    # Dictionary that collects the result lines for each class
    data_by_type = {}
    os.makedirs(outdir, exist_ok=True)  # make sure the output folder exists
    # Walk over every file in the directory (one detection-result file per image)
    for filename in os.listdir(directory):
        if filename.endswith('.txt'):
            image_id = os.path.splitext(filename)[0]
            with open(os.path.join(directory, filename), 'r') as file:
                for line in file:
                    # Parse each line: class_name confidence x1 y1 x2 y2
                    parts = line.strip().split()
                    if len(parts) == 6:
                        type_name, confidence, x1, y1, x2, y2 = parts
                        # Append "image_id confidence x1 y1 x2 y2" to that class's list
                        data_by_type.setdefault(type_name, []).append(
                            ' '.join([image_id, confidence, x1, y1, x2, y2]))
    # Write each class's data to its own file
    for type_name, data in data_by_type.items():
        print(type_name)
        with open(os.path.join(outdir, f'{type_name}.txt'), 'w') as output_file:
            output_file.write('\n'.join(data))


# Use the function
process_files(r'./mAP/input/detection-results/', r'./mAP/input/detection-results-cls/')
```

- Compute

```python
import os
import pickle

import matplotlib.pyplot as plt
import numpy as np
import torch

from a5_helper import idx_to_class, pascal_voc2007_loader, class_to_idx
from faster_rcnn_pytorch_master.lib.datasets.voc_eval import voc_ap, parse_rec, voc_eval


def plot_pr_curve(precisions, recalls, save_path='pr_curve.png'):
    plt.figure()
    plt.plot(recalls, precisions, lw=2)
    plt.xlabel('Recall')
    plt.ylabel('Precision')
    plt.title('Precision-Recall Curve')
    plt.savefig(save_path)
    plt.close()


def evaluate_map(output_dir):
    # Compute AP for each category
    aps = []
    for i, cls in enumerate(class_to_idx):
        print(i, cls)
        if cls == '__background__':
            continue
        rec, prec, ap = voc_eval(
            r'D:\Python\work\python圖像處理實踐\Coding\mAP\input\detection-results-cls\{:s}.txt',
            r"E:\datasets\voc\VOCdevkit\VOC2007\Annotations\{:s}.xml",
            r"E:\datasets\voc\VOCdevkit\VOC2007\ImageSets\Main\train.txt",
            cls,
            "./cachedir",
            ovthresh=0.5,
        )
        aps += [ap]
        print('AP for {} = {:.4f}'.format(cls, ap))
        with open(os.path.join(output_dir, cls + '_pr.pkl'), 'wb') as f:
            pickle.dump({'rec': rec, 'prec': prec, 'ap': ap}, f)
        # One plot per class, instead of overwriting a single pr_curve.png
        plot_pr_curve(prec, rec, os.path.join(output_dir, cls + '_pr.png'))
    print('Mean AP = {:.4f}'.format(np.mean(aps)))
    print('~~~~~~~~')
    print('{:.3f}'.format(np.mean(aps)))
    print('~~~~~~~~')
    print('')
    print('--------------------------------------------------------------')
    print('Results computed with the **unofficial** Python eval code.')
    print('Results should be very close to the official MATLAB eval code.')
    print('Recompute with `./tools/reval.py --matlab ...` for your paper.')
    print('-- Thanks, The Management')
    print('--------------------------------------------------------------')
    # Compute mAP
    mAP = np.mean(aps)
    return mAP


def main():
    mAP = evaluate_map('mAP/input')
    print(f'Mean Average Precision (mAP): {mAP}')


if __name__ == '__main__':
    main()
```

Okay, this code is really long, so I'm not pasting any more of it.
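When the AP looks suspiciously low, it helps to know what voc_eval actually does: it matches each detection to a ground-truth box by IoU (ovthresh=0.5 above) and then integrates the precision-recall curve. The self-contained sketch below mirrors both steps in simplified form; it is not a drop-in replacement for voc_eval, and it skips the +1 pixel convention that the py-faster-rcnn style voc_eval.py uses when computing box areas. In that version of voc_eval.py, use_07_metric defaults to False (all-point interpolation), while the original VOC2007 devkit reported the 11-point metric, so the two can differ slightly.

```python
import numpy as np


def box_iou(a, b):
    """IoU of two boxes given as (x_tl, y_tl, x_br, y_br), continuous coordinates."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(rec, prec, use_07_metric=False):
    """AP from recall/precision arrays sorted by decreasing detection confidence."""
    rec = np.asarray(rec, dtype=float)
    prec = np.asarray(prec, dtype=float)
    if use_07_metric:
        # 11-point interpolation: mean of the best precision at recall >= t
        ap = 0.0
        for t in np.arange(0.0, 1.1, 0.1):
            p = prec[rec >= t].max() if np.any(rec >= t) else 0.0
            ap += p / 11.0
        return ap
    # All-point interpolation: area under the monotone precision envelope
    mrec = np.concatenate(([0.0], rec, [1.0]))
    mpre = np.concatenate(([0.0], prec, [0.0]))
    for i in range(mpre.size - 1, 0, -1):
        mpre[i - 1] = max(mpre[i - 1], mpre[i])
    idx = np.where(mrec[1:] != mrec[:-1])[0]  # points where recall changes
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))
```

For example, two detections giving rec = [0.5, 1.0] and prec = [1.0, 0.5] yield an all-point AP of 0.75 and an 11-point AP of 8.5 / 11 ≈ 0.773.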

Sure enough, training the model took more than ten hours... Is this really homework? Hats off!

Do you feel bad when you see this?

All files

Time for the big reveal: I'd better share the attachments, or the Internet will come after me!

VOC2007 training dataset

VOCtrainval_06-Nov-2007.tar

Link: https://pan.baidu.com/s/19hMEn-fwBjT5ikbWauSIfA?pwd=vapt

Extraction code: vapt

(Shared via Baidu Netdisk, Super VIP V6)

YOLO vs. Faster R-CNN object detection comparison: original assignment archive

IPFinal Project.rar

Link: https://pan.baidu.com/s/1h0g2SRsWfBZZ4VHziisGnw?pwd=sdvl

Extraction code: sdvl


CVPR 2024 Word template

Link: https://pan.baidu.com/s/1OAVuCH35UXTKNidsag4ELg?pwd=nycl

Extraction code: nycl


Modified training files and training results

Link: https://pan.baidu.com/s/1r-dPd9W70LrNVsfeNDvd3A?pwd=li16

Extraction code: li16

