數據存儲加密和傳輸加密

I’m not going to string you along until the end, dear reader, and say “Didn’t achieve anything groundbreaking but thanks for reading ;)”.

親愛的讀者,我不會一直待到最后,然后說: “沒有取得任何開創性的成就,但感謝您閱讀;)”。

This network isn’t exactly a get-rich-quick scheme yet — when I make one, I probably won’t blog about it.

這個網絡還不是一個快速致富的計劃-當我建立一個網絡時,我可能不會寫博客。

It did, however, shed some interesting light on the 2018 Bitcoin crash. The car we’ve built runs, even if it often runs into walls and off cliffs. From here, it’s a question of tuning.

但是,它確實為2018年比特幣崩潰提供了一些有趣的啟示。 我們制造的汽車可以行駛,即使它經常撞到墻壁和懸崖上也是如此。 從這里開始,這是一個調優問題。

—

-

Last week I went through a good example of Hierarchical Temporal Memory algorithms predicting power consumption based only on the date+time & previous consumption. It went pretty well, very low mean-squared-error. HTM tech, unsurprisingly, does best when there’s a temporal element to data — some cause and effect patterns to “remember”.

上周,我看了一個很好的示例,該示例采用了分層時間記憶算法,該算法僅根據日期和時間以及先前的功耗來預測功耗。 它運行得很好,均方誤差非常低。 毫不奇怪,HTM技術在存在數據臨時元素(“記住”某些因果模式)的情況下效果最佳。

So I wondered: “What’s some other hot topic with temporal data hanging around for any random lad to download?” Bitcoin.

所以我想知道:“還有什么其他熱門話題,其中有時間數據懸空供任何小伙子下載?” 比特幣 。

Now hold on, is this a surmountable challenge in the first place? Surely nobody can predict the stock market, otherwise everyone would be doing it.But then that begs the question: why is there an entire industry of people moving money around at the proper time, and how are they afloat — if not for some degree of prediction?

現在堅持下去,這首先是一個可克服的挑戰嗎? 當然,沒有人能預測股市,否則每個人都會做。但是,這引出了一個問題:為什么會有整個行業的人在適當的時候到處流動錢,他們如何生存-如果不是在某種程度上預測?

I’m fairly certain that crypto prices, at least, can be “learned” to some degree, because:

我相當確定,至少可以在某種程度上“學習”加密價格,因為:

- If anyone had a magic algorithm to reliably predict the prices, they’d keep it to themselves (or lease it out proprietarily) 如果有人使用魔術算法來可靠地預測價格,他們會自行保留價格(或專有地出租)

There’s a company that already does this

有一家公司已經做到了

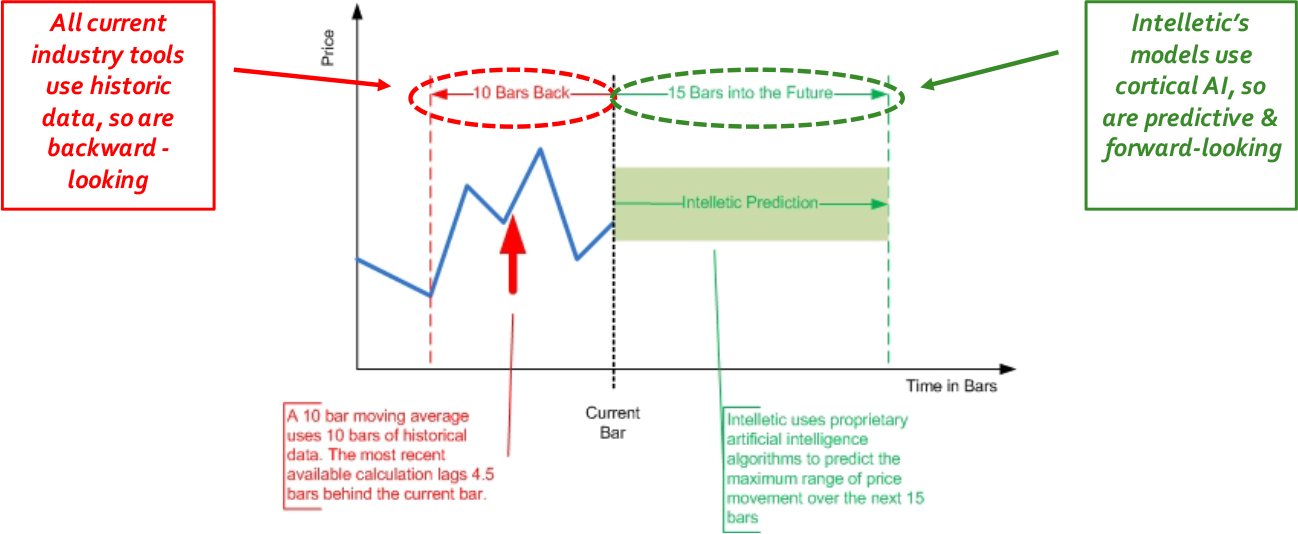

Intelletic is an interesting real-world example. Their listed ‘cortical algorithms’ are based on HTM (neocortex) tech, and they list “Price Prediction Alerts” as a main product for investors to make use of.

Intelletic是一個有趣的現實示例。 他們列出的“皮質算法”是基于HTM(新皮質 )技術的,他們將“價格預測警報”列為供投資者使用的主要產品。

These are quite fascinating promises, and not too hard to believe, either. As we saw last week, HTM models learn & predict on the fly — no need to train 80/20 splits on historical data. In a landscape where high-frequency trading algorithms have started to compete with day traders, integrating near-present data is certainly a formidable advantage.

這些都是令人著迷的承諾,也不是很難相信。 正如我們在上周看到的那樣,HTM模型可以實時進行學習和預測-無需根據歷史數據訓練80/20分割。 在高頻交易算法已開始與日間交易者競爭的情況下,集成近乎當前的數據無疑是一個巨大的優勢。

Can we quickly whip up an HTM network that predicts Bitcoin prices? Sure. Does it make great predictions?

我們可以快速建立一個預測比特幣價格的HTM網絡嗎? 當然。 它能做出很好的預測嗎?

Woe unto anyone who listens to a model that took 10 minutes to train on a laptop.

那些聽模型花費了10分鐘才能在筆記本電腦上進行訓練的人都會感到不適。

I would describe this model as cute. Maybe “scrappy”.

我認為這個模特很可愛。 也許是“草率”。

I’ll dig into what makes the model bad & what makes it viable at all. My previous articles on HTM tech will get you up to speed with what I’m writing about, but the best and easiest way to understand any of this is the HTM School videos.

我將深入研究導致模型失效的原因以及使其可行的原因。 我之前關于HTM技術的文章可以使您快速掌握我的寫作內容,但是了解其中任何內容的最佳,最簡便的方法是HTM School視頻。

I found some data online for the past 3 years of Coinbase’s BTC data, including opening & closing prices, high & low, and volume of USD & BTC for each hour since July 2017. Cleaned it up a little in pandas, standard stuff.

我發現了過去三年Coinbase BTC數據的一些在線數據,包括開盤價和收盤價,最高價和最低價以及自2017年7月以來每小時的美元和BTC交易量。在熊貓中清理了一些標準內容。

What’s interesting is that we’ve got 6 scalars & 1 DateTime to encode into an SDR:

有趣的是,我們有6個標量和1個DateTime可以編碼為SDR:

#

dateEncoder = DateEncoder(

timeOfDay = (30,1)

weekend = 21scalarEncoderParams = RDSE_Parameters() # random distributed scalar encoder

scalarEncoderParams.size = 800

scalarEncoderParams.sparsity = 0.02

scalarEncoderParams.resolution = 0.88

scalarEncoder = RDSE(scalarEncoderParams) # create the encoderencodingWidth = (dateEncoder.size + scalarEncoder.size*6) # since we're using the scalarEncoder 6 timesThe dateEncoder runs much the same as power consumption prediction — coding for Year isn’t really going to give semantic meaning when there’s only 3 years to work with. These are the interesting questions to ask when you make SDRs — is the info I’m including worth it? Why?

dateEncoder的運行與功耗預測非常相似,只有3年的工作時間,Year的編碼實際上并沒有給出語義上的含義。 這些是制作SDR時會問的有趣問題-我所包括的信息值得嗎? 為什么?

HTM.core has a good amount of Nupic’s Python 2.7 code translated to Python 3, but lacks their MultiEncoder — a neat wrapper that combines several encoders together for easy individual tuning. So I used the same ScalarEncoder for all 6 floats like a complete barbarian. A better plan would be to create 6 different encoders, one for each variable.

HTM.core擁有大量的Nupic的Python 2.7代碼轉換為Python 3,但缺少MultiEncoder —一種簡潔的包裝程序,將多個編碼器組合在一起,可以輕松地進行單獨調整。 因此,我對所有6個浮動對象都使用了相同的ScalarEncoder ,就像一個完整的野蠻人一樣。 更好的計劃是創建6個不同的編碼器,每個變量一個。

I also increased the size of the SpatialPooler and Temporal Memory by ~30%, and boosted the TM’s number of cells per column from 13 to 20. My reasoning is that a good predictive model should look relatively further back when we’re dealing with one-hour timestamps, so longer columns makes a difference.20 hours might not be enough, in retrospect, considering many standard stock algorithms use 7–10 day rolling averages and other longer metrics.

我還將SpatialPooler和時間記憶的大小增加了約30%,并將TM每列的單元數從13增加到20。我的理由是,當我們處理一個模型時,一個好的預測模型應該相對地往后看時戳,因此較長的列會有所作為。回想起來,考慮到許多標準股票算法使用7-10天的滾動平均值和其他更長的指標,20小時可能還不夠。

This changed my training time from 7 minutes to 10, by the way. Seems like there’s a lot of room to add computationally heavy parameters, but I don’t think that’s quite the problem here.

順便說一下,這將我的培訓時間從7分鐘更改為10分鐘。 似乎有很大的空間可以添加計算繁重的參數,但是我認為這并不是問題所在。

你得到你投入的東西 (You get out what you put in)

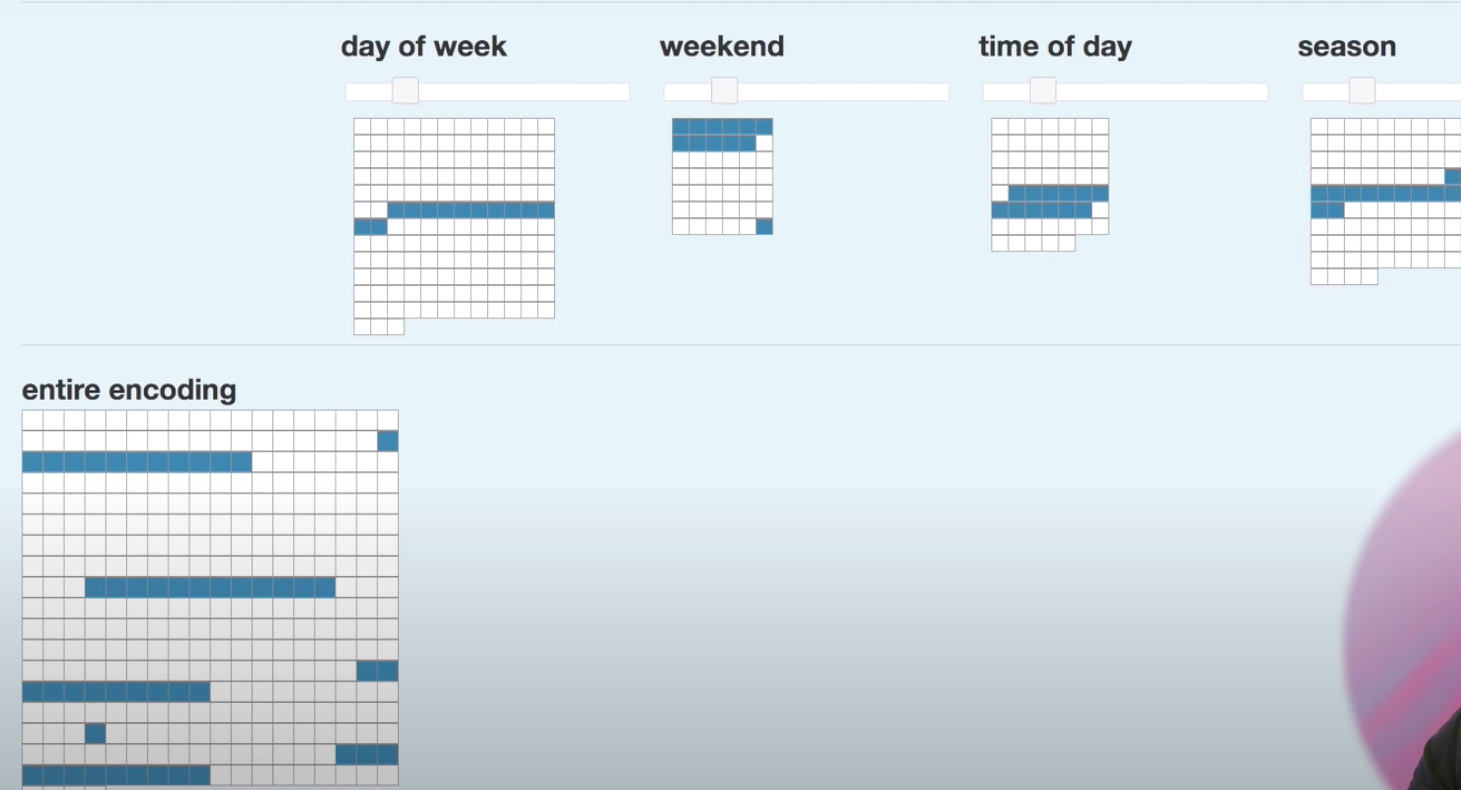

Here’s a neat visual example of SDR concatenation, shameless stolen from episode 6 of HTM School:

這是SDR串聯的簡潔直觀示例,從HTM School第6集中偷偷偷偷偷偷地偷了:

A DateTime encoder creates mini-SDRs for each part of information contained by the timestamp, and combines it to form a larger encoding. As long as the order of concatenation is preserved (first X bits are day_of_week, next Y bits are weekend, etc) then you can combine any encoded datatypes into a coherent SDR to feed to the SpatialPooler.

DateTime編碼器為時間戳所包含的信息的每個部分創建mini-SDR,并將其組合以形成更大的編碼。 只要保留串聯的順序(前X個位是day_of_week ,下一個Y位是weekend , SpatialPooler ),那么您就可以將任何編碼的數據類型組合成一致的SDR,以饋送給SpatialPooler 。

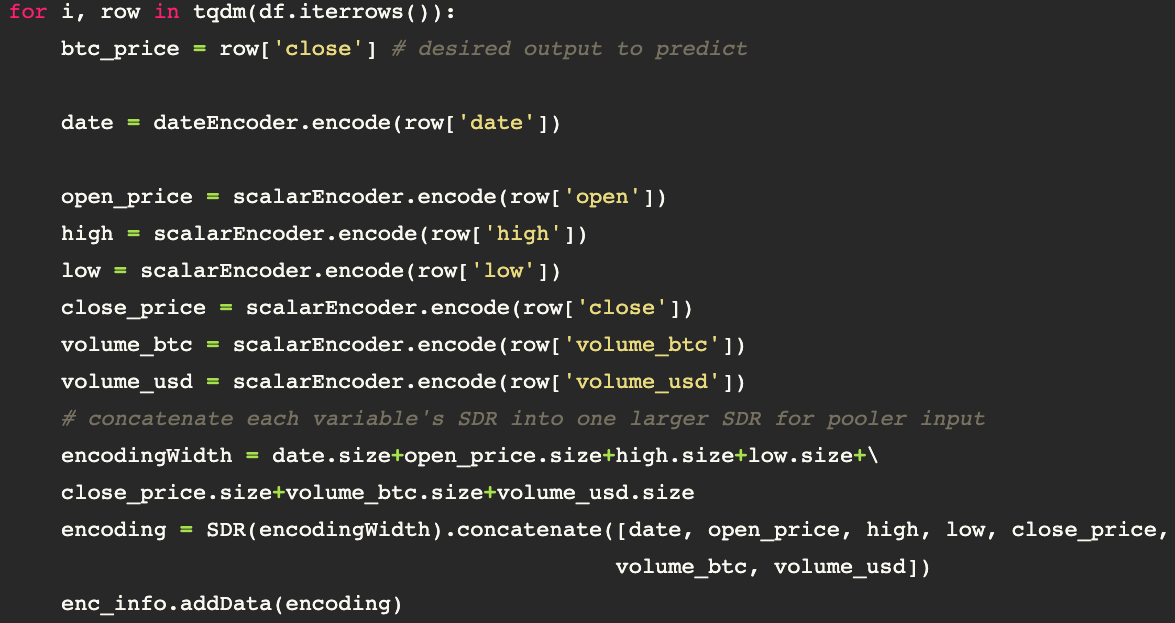

Here’s the first half of the training loop. We start by designating the output value to be predicted, and then encode the data, running 6 values through the scalarEncoder.

這是訓練循環的前半部分。 我們首先指定要預測的輸出值,然后對數據進行編碼,并通過scalarEncoder運行6個值。

The neat part is creating encoding=SDR(encodingWidth).concatenate([list_of_SDRs]) . We only have to pre-define encodingWidth as the sum of each variable’s SDR length (or size), create an SDR with that size, then use .concatenate() to fill the empty SDR with bits.

整潔的部分是創建encoding=SDR(encodingWidth).concatenate([list_of_SDRs]) 。 我們只需要預定義encodingWidth作為每個變量的SDR長度(或大小)的總和,創建具有該大小的SDR,然后使用.concatenate()即可用位填充空的SDR。

錯位 (Misalignment)

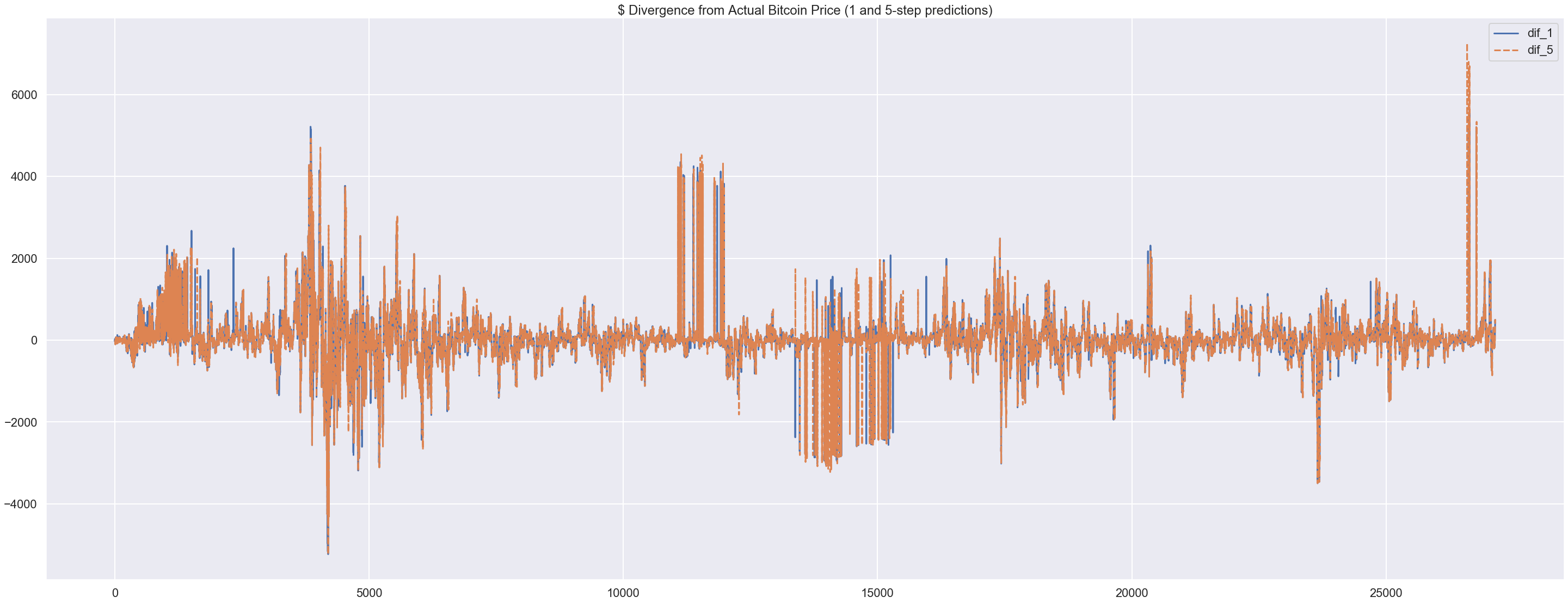

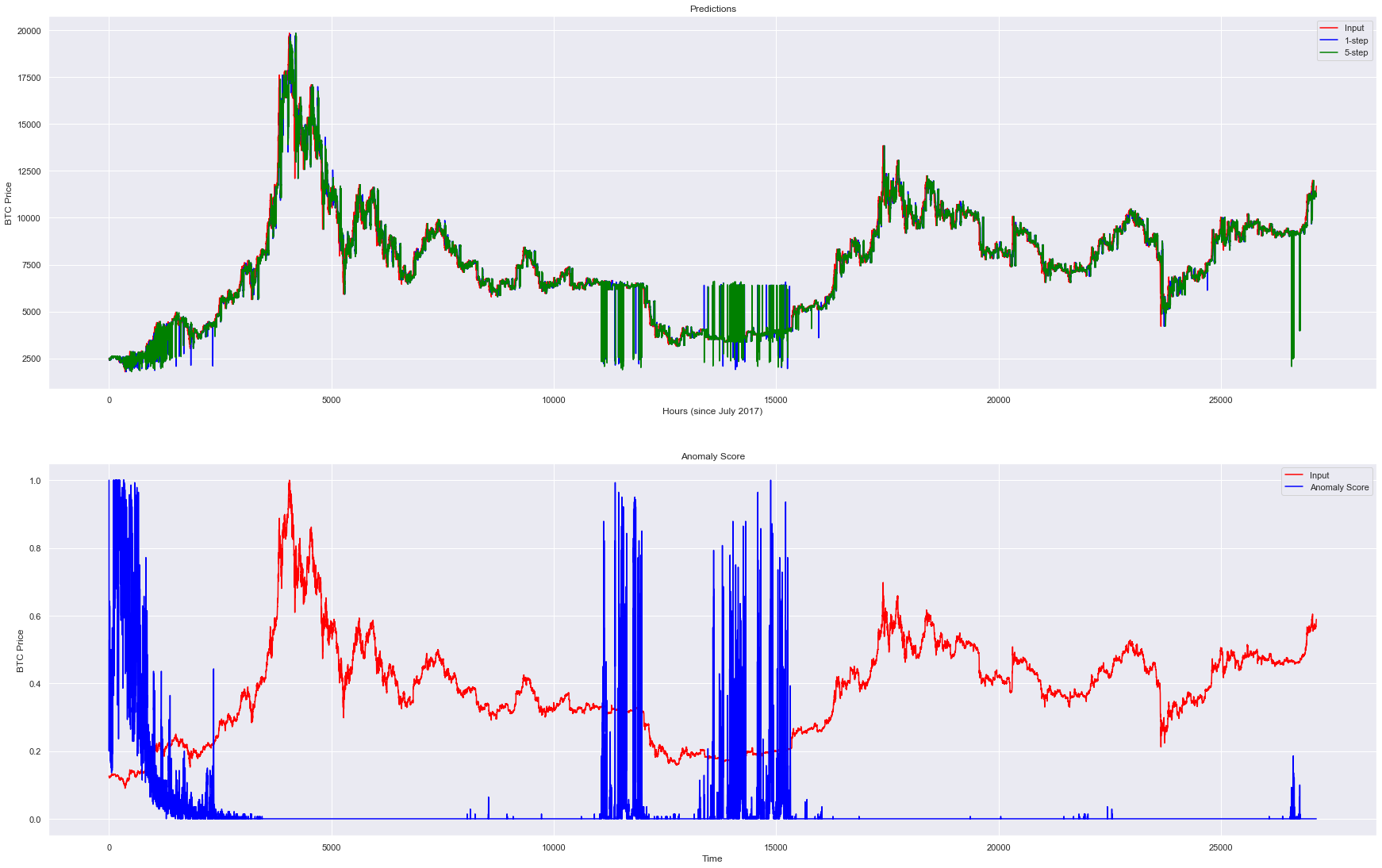

After training on 27,000 hours of price data, our accuracy didn’t seem too bad:

經過27,000小時的價格數據訓練后,我們的準確性似乎還不錯:

Root Mean Squared Error for 1-step, 5-step predictions:

{1: 2.8976319767388525, 5: 2.8978454708090684}Considering the units are anywhere from ~4,000 to 10,000, this seemed a little too good to be true. I graphed it to find out:

考慮到單位在從4,000到10,000的范圍內,這似乎有點不真實。 我將其繪制圖形以找出:

It takes ~2000 hours to start outputting any decent predictions — you can see 1-step and 5-step blue/green lines jumping up and down in the very beginning.

開始輸出任何體面的預測大約需要2000個小時-一開始,您會看到1步和5步藍/綠線上下跳躍。

What’s quite interesting is the huge anomaly predictions around the 12,000–15,000 hour regions. In terms of price spikes, here’s nothing huge going on while it’s predicting anomalous next-hour values.

有趣的是,在12,000–15,000小時區域周圍的巨大異常預測。 就價格飆升而言,在預測下一小時的價值異常時 ,沒有什么大不了的。

But after the first of the two big anomaly spikes, there’s a relatively sharp crash— from $7,000 to a bottom of under $3,000.

但是,在兩次大的異常峰值中的第一個之后,出現了一個相對急劇的崩潰-從7,000美元跌至底部3,000美元以下。

This is the machine learning equivalent of walking your dog in the woods at night, when suddenly it starts whining and growling, looking off into the darkness. You don’t sense anything and keep walking. Out of the corner of your eye you see two bright red eyes staring at you, so you run like hell and wish you’d listened to the dog sooner.

這是機器學習的等效方法,它相當于在晚上dog狗到樹林里,突然之間它開始抱怨和咆哮,直視黑暗。 您什么都沒感覺到并且繼續走。 從您的眼角可以看到兩只鮮紅的眼睛凝視著您,所以您像地獄般奔跑,希望您早日聽見那只狗。

We’re predicting closing_price for each hour, but the model’s also learning from the high, low, currency volume, and the recent patterns and relationships of each. It picked up on some relatively strange activity — perhaps those stretches had anomalous fluctuations in high-low span or rapid micro-deviations in price.

我們正在預測每小時的closing_price ,但是該模型還在從高,低,貨幣數量以及每個貨幣的近期模式和關系中學習。 它開始了一些相對奇怪的活動-也許這些延伸段的高低跨度出現了異常波動,或者價格出現了微小的快速波動。

It also predicted anomalous activity for a while before the prices started to recover, although it’s a little less clear-cut. It could be said that sudden drops in price tend to change people’s speculative trading behavior, so anomalies would be likely occurrences. In that case, I wonder what caused the pre-crash anomaly readings.

該公司還預測,在價格開始回升之前的一段時間內會出現異常活動,盡管這一點不太明確。 可以說,價格突然下跌往往會改變人們的投機交易行為,因此很可能會出現異常情況。 在那種情況下,我想知道是什么原因導致了崩潰前的異常讀數。

我們沒有在看什么 (What We’re Not Looking At)

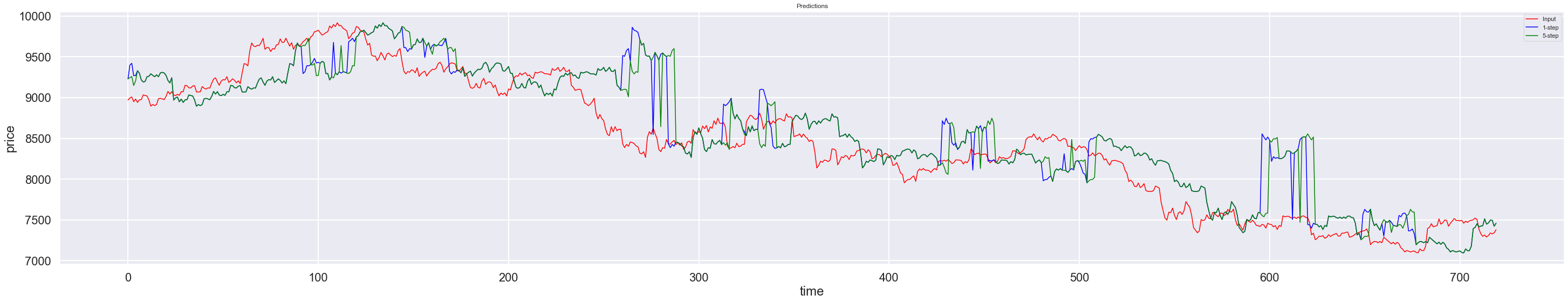

We had some interesting ideas from the large-scale graph. But if the goal is predict prices for trading, we need to look at the day-to-day use case. Here’s May 2018:

我們從大型圖表中獲得了一些有趣的想法。 但是,如果目標是預測交易價格,則我們需要查看日常用例。 這是2018年5月:

The 5-step and 1-step predictions don’t follow the input that well, to be honest. It’s normally within a couple hundred dollars, but that’s not a very reassuring metric when you’re g?a?m?b?l?i?n?g? investing. There’s far too many deviations for my adorable model to compete with Intelletic’s price predictions. But why?

老實說,五步預測和一步預測并沒有很好地遵循輸入。 通常在幾百美元以內,但是當您進行大量投資時,這并不是一個令人放心的指標。 我的可愛模型有太多差異,無法與Intelletic的價格預測競爭。 但為什么?

The biggest weakness of this model is that it’s trying to put together a puzzle without all of the pieces. We only encoded Bitcoin price data, but the price of the most popular cryptocurrency is a result of many diverse factors — public confidence, private investors, whatever clickbait article was published, whether the president/Musk tweeted about crypto, etc.

該模型的最大缺點是,它試圖在沒有所有碎片的情況下拼湊一個難題。 我們僅對比特幣價格數據進行編碼,但是最受歡迎的加密貨幣的價格是多種因素共同作用的結果-公眾信心,私人投資者,發表的任何點擊誘餌文章,總統/馬斯克是否發表過有關加密貨幣的推文等。

A decent metric to look at might be the prices of other cryptocurrencies, however. Many coins tend to swing in accordance with each other.

然而,一個不錯的指標可能是其他加密貨幣的價格。 許多硬幣傾向于相互擺動。

What is certain is that there’s much more room to encode more complex data into SDR input. Increasing the input size and model complexity by ~30% changed runtime from 7 to 10 minutes. This is largely due to the ease of bitwise comparisons between SDRs & the synaptic configuration of Temporal Memory systems; the exploding-runtime architecture complexity problem doesn’t apply in quite the same way.

可以肯定的是,還有更多的空間可以將更復雜的數據編碼為SDR輸入。 輸入大小和模型復雜度增加約30%,將運行時間從7分鐘更改為10分鐘。 這主要是由于SDR之間的按位比較容易以及時間記憶系統的突觸配置所致。 爆炸式運行時體系結構復雜性問題不適用于完全相同的方式。

So what have we learned?

所以我們學了什么?

It’s near impossible to get close to 100% accuracy with predicting markets like these, but some companies manage to get high enough certainty to make significant gains over time. That means the process works, and it’s worth pushing to improve it further.

預測這樣的市場幾乎不可能達到100%的準確性,但是有些公司設法獲得足夠高的確定性,以隨著時間的流逝取得可觀的收益。 這意味著該流程有效,值得進一步改進它。

Don’t underestimate the complexity of publicly-traded commodities, for a start. Train on more than 3 years of data, certainly.

首先,請不要低估公開交易商品的復雜性。 當然,要訓練3年以上的數據。

But when in doubt, find more data. If your model is already at a certain point of complexity & you’re not getting the results you want, you’re either not looking at the whole picture (data) and/or not asking the right questions.

但是,如果有疑問,請查找更多數據。 如果您的模型已經處于某個復雜的點上,并且您沒有得到想要的結果,那么您要么沒有查看整個圖片(數據)和/或沒有提出正確的問題。

翻譯自: https://medium.com/swlh/applying-temporal-memory-networks-to-crypto-prediction-24f924c3a014

數據存儲加密和傳輸加密

本文來自互聯網用戶投稿,該文觀點僅代表作者本人,不代表本站立場。本站僅提供信息存儲空間服務,不擁有所有權,不承擔相關法律責任。 如若轉載,請注明出處:http://www.pswp.cn/news/389200.shtml 繁體地址,請注明出處:http://hk.pswp.cn/news/389200.shtml 英文地址,請注明出處:http://en.pswp.cn/news/389200.shtml

如若內容造成侵權/違法違規/事實不符,請聯系多彩編程網進行投訴反饋email:809451989@qq.com,一經查實,立即刪除!

)