Building a Highly Available Redis Cluster on k8s

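- 1. Deploy the Redis service

- Create the ConfigMap

- Create Redis

- Expose Redis outside the k8s cluster

- 2. Create the Redis cluster

- Automatically create the Redis cluster

- Manually create the Redis cluster

- Verify the cluster state

- 3. Cluster functional tests

- Load testing

- Failover testing

- 4. Install a management client

- Edit the resource manifest

- Deploy RedisInsight

- Initialize the console

- Console overview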

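In this hands-on environment, NFS provides persistent storage for the k8s cluster (alternatively, create PVs and PVCs by hand). All Redis cluster resources are deployed in the namespace chengke.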

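1. Deploy the Redis service

Create the ConfigMap

Redis offers two persistence modes: RDB (point-in-time snapshots) and AOF (an append-only log of write commands).

- Create the Redis configuration file (appendonly yes turns on AOF mode)

- Create the resource

- Verify the resource

- Create the Redis configuration file

Create the manifest file: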

[root@master ~]# cd 14

[root@master 14]# mkdir 1

[root@master 14]# cd 1

[root@master 1]# vim redis-cluster-cm.yaml

[root@master 1]# cat redis-cluster-cm.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: redis-cluster-config
data:
  redis-config: |
    appendonly yes
    protected-mode no
    dir /data
    port 6379
    cluster-enabled yes
    cluster-config-file /data/nodes.conf
    cluster-node-timeout 5000
    masterauth Chengke2025
    requirepass Chengke2025

- Create the resource

[root@master 1]# kubectl apply -f redis-cluster-cm.yaml

configmap/redis-cluster-config created

- Verify the resource

[root@master 1]# kubectl get cm

NAME DATA AGE

kube-root-ca.crt 1 23d

redis-cluster-config 1 26s

Create Redis

Redis is deployed with a StatefulSet, which requires two resources: the StatefulSet itself and a headless Service.
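The headless Service is also what gives each StatefulSet Pod a stable DNS name of the form `<pod>.<service>.<namespace>.svc.<cluster-domain>`. The sketch below only prints those names. It assumes the namespace `chengke` mentioned above and the default cluster domain `cluster.local`, and either may differ in your cluster. The function name `pod_dns_names` is hypothetical. The Redis nodes in this setup announce Pod IPs, not these names.

```shell
# Print the stable per-Pod DNS names a headless Service gives StatefulSet Pods.
# Assumptions (adjust as needed): namespace "chengke", cluster domain "cluster.local".
pod_dns_names() {
  local sts=$1 svc=$2 replicas=$3
  local ns=${4:-chengke} domain=${5:-cluster.local}
  local i=0
  # StatefulSet ordinals run from 0 to replicas-1
  while [ "$i" -lt "$replicas" ]; do
    echo "${sts}-${i}.${svc}.${ns}.svc.${domain}"
    i=$((i + 1))
  done
}
```

For example, `pod_dns_names redis-cluster redis-headless 6` lists the six names for the manifest below, starting with `redis-cluster-0.redis-headless.chengke.svc.cluster.local`.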

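- Create the manifest file

- Create the resources

- Verify the resources

Prerequisite: make sure the following are already in place and running (the nfs-client StorageClass and its provisioner Pod):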

[root@master 1]# kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 8d

[root@master 1]# kubectl get pod -n nfs-storageclass

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-cdf554dd7-bm678 1/1 Running 0 134m

- Create the manifest file

[root@master 1]# cat redis-cluster-sts.yaml

apiVersion: v1
kind: Service
metadata:
  name: redis-headless
  labels:
    app.kubernetes.io/name: redis-cluster
spec:
  ports:
  - name: redis-6379
    protocol: TCP
    port: 6379
    targetPort: 6379
  selector:
    app.kubernetes.io/name: redis-cluster
  clusterIP: None
  type: ClusterIP
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: redis-cluster
  labels:
    app.kubernetes.io/name: redis-cluster
spec:
  serviceName: redis-headless
  replicas: 6
  selector:
    matchLabels:
      app.kubernetes.io/name: redis-cluster
  template:
    metadata:
      labels:
        app.kubernetes.io/name: redis-cluster
    spec:
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app.kubernetes.io/name
                  operator: In
                  values:
                  - redis-cluster
              topologyKey: kubernetes.io/hostname
      containers:
      - name: redis
        image: redis:8.0.0
        imagePullPolicy: IfNotPresent
        command:
        - "redis-server"
        args:
        - "/etc/redis/redis.conf"
        - "--protected-mode"
        - "no"
        - "--cluster-announce-ip"
        - "$(POD_IP)"
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        ports:
        - name: redis-6379
          containerPort: 6379
          protocol: TCP
        volumeMounts:
        - name: config
          mountPath: /etc/redis
        - name: redis-cluster-data
          mountPath: /data
        resources:
          requests:
            cpu: 50m
            memory: 500Mi
          limits:
            cpu: "2"
            memory: 4Gi
      volumes:
      - name: config
        configMap:
          name: redis-cluster-config
          items:
          - key: redis-config
            path: redis.conf
  volumeClaimTemplates:
  - metadata:
      name: redis-cluster-data
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: nfs-client
      resources:
        requests:
          storage: 5Gi

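Note: every node needs the redis:8.0.0 image available, and node1 and node2 must be up and running.

POD_IP is the key setting. Kubernetes expands $(POD_IP) in args from the POD_IP environment variable (filled from status.podIP), so each node announces its current Pod IP through --cluster-announce-ip. Without it, when a running Pod restarts and gets a new IP, the cluster state cannot resynchronize automatically.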
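One way to confirm what a node actually announces is to read the `myself` entry of `CLUSTER NODES`, whose second field is `ip:port@cport`. The helper below is a hypothetical sketch that handles IPv4 only. It reads that output on stdin and prints the IP of the `myself` line. You can compare that value with the Pod IP reported by `kubectl get pod redis-cluster-0 -o jsonpath='{.status.podIP}'`.

```shell
# Read CLUSTER NODES output on stdin and print the IP the local node announces.
# IPv4 only: the address field looks like 10.224.104.42:6379@16379.
announced_ip() {
  tr -d '\r' |    # drop carriage returns that "kubectl exec -t" may add
    awk '$3 ~ /(^|,)myself(,|$)/ { split($2, addr, ":"); print addr[1]; exit }'
}
```

Usage, once the cluster exists: `kubectl exec redis-cluster-0 -- redis-cli -a Chengke2025 cluster nodes 2>/dev/null | announced_ip`.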

- Create the resources

[root@master 1]# kubectl apply -f redis-cluster-sts.yaml

service/redis-headless created

statefulset.apps/redis-cluster created

- Verify the resources

[root@master 1]# kubectl get svc,pod,sts

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 7d1h

service/redis-headless ClusterIP None <none> 6379/TCP 49s

NAME READY STATUS RESTARTS AGE

pod/redis-cluster-0 1/1 Running 0 29s

pod/redis-cluster-1 1/1 Running 0 27s

pod/redis-cluster-2 1/1 Running 0 26s

pod/redis-cluster-3 1/1 Running 0 24s

pod/redis-cluster-4 1/1 Running 0 23s

pod/redis-cluster-5 1/1 Running 0 21s

NAME READY AGE

statefulset.apps/redis-cluster 6/6 49s

Expose Redis outside the k8s cluster

- Write the manifest

- Create the resource (the Service)

- Write the manifest (create the manifest file redis-cluster-svc-external.yaml)

A NodePort Service publishes Redis outside the Kubernetes cluster, on the fixed port 31379.
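A nodePort value has to fall inside the API server's `--service-node-port-range`, which defaults to 30000-32767, and 31379 is inside that range. The hypothetical check below only validates the number. One caveat applies: Redis Cluster clients are redirected (MOVED/ASK) to the addresses the nodes announce, which here are Pod IPs. A cluster-aware client outside the Pod network may therefore fail after the first redirect, even though port 31379 itself is reachable. The benchmarks later in this article run inside a Pod, where Pod IPs are routable.

```shell
# Succeed if $1 is a valid NodePort; MIN/MAX default to the standard 30000-32767
# and can be passed explicitly if the API server uses a custom range.
valid_nodeport() {
  local port=$1 min=${2:-30000} max=${3:-32767}
  case $port in
    ''|*[!0-9]*) return 1 ;;   # reject empty or non-numeric input
  esac
  [ "$port" -ge "$min" ] && [ "$port" -le "$max" ]
}
```

For example, `valid_nodeport 31379 && echo ok` prints `ok`, while `valid_nodeport 6379` fails.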

[root@master 1]# vim redis-cluster-svc-external.yaml

kind: Service
apiVersion: v1
metadata:
  name: redis-cluster-external
  labels:
    app: redis-cluster-external
spec:
  ports:
  - protocol: TCP
    port: 6379
    targetPort: 6379
    nodePort: 31379
  selector:
    app.kubernetes.io/name: redis-cluster
  type: NodePort

- Create the resource (the Service)

[root@master 1]# kubectl apply -f redis-cluster-svc-external.yaml

service/redis-cluster-external created

- Verify the resource

Check the created Service:

[root@master 1]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 7d1h

redis-cluster-external NodePort 10.0.63.134 <none> 6379:31379/TCP 23s

redis-headless ClusterIP None <none> 6379/TCP 3m33s

[root@master 1]# kubectl get endpointslice

NAME ADDRESSTYPE PORTS ENDPOINTS AGE

kubernetes IPv4 6443 192.168.86.11 24d

redis-cluster-external-dbl7h IPv4 6379 10.224.166.144,10.224.104.45,10.224.104.47 + 3 more... 31s

redis-headless-dgzdc IPv4 6379 10.224.104.42,10.224.166.135,10.224.104.47 + 3 more... 3m41s

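2. Create the Redis cluster

Creating the Redis Pods does not form a Redis cluster by itself. The cluster has to be initialized with a command run by hand. There are two ways to do this: automatic, where redis-cli assigns masters and replicas itself, and manual. Pick one; the automatic method is recommended.

Automatically create the Redis cluster

Create a cluster of 3 masters and 3 slaves automatically (you will be asked to type yes once). The command is: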

kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster create --cluster-replicas 1 $(kubectl get pods -l app.kubernetes.io/name=redis-cluster -o jsonpath='{range.items[*]} {.status.podIP}:6379 {end}')

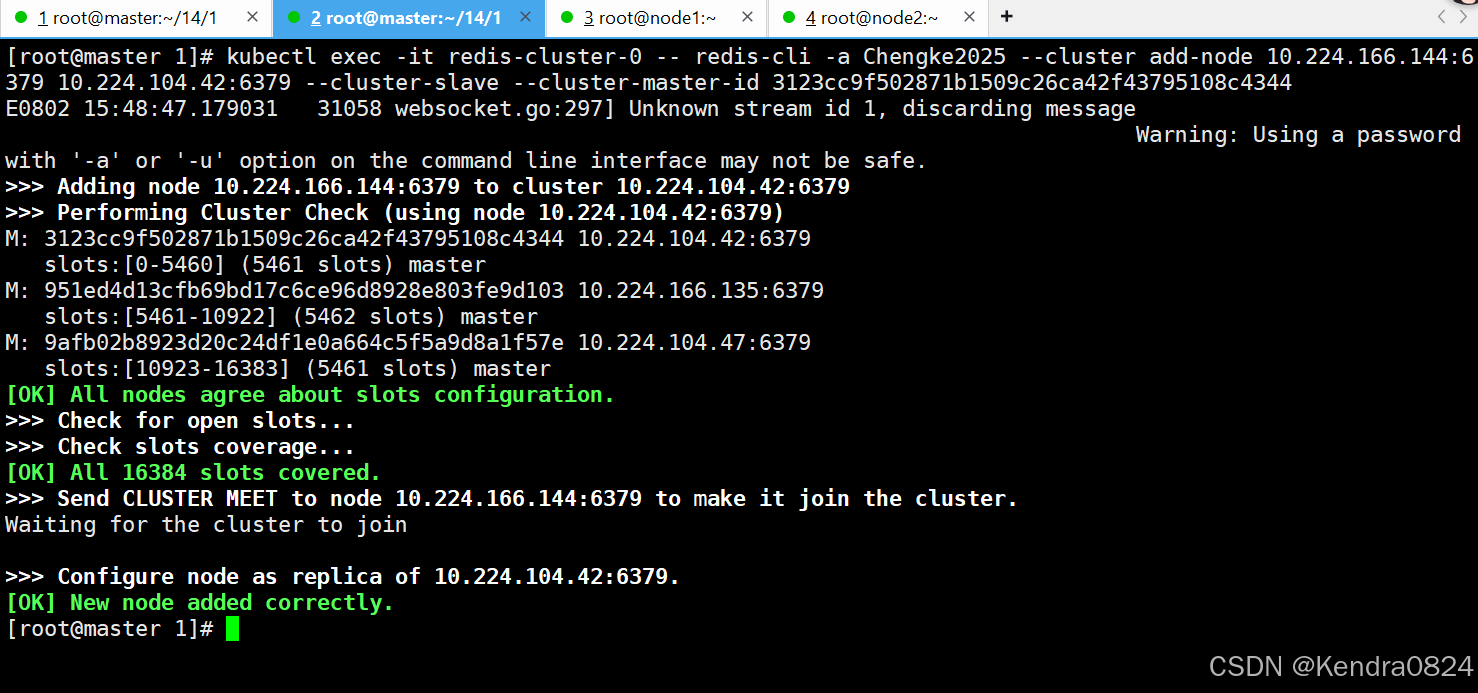
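With `--cluster-replicas 1`, redis-cli computes the number of masters as nodes / (replicas + 1) using integer division. The 6 Pods here therefore become 3 masters and 3 replicas. redis-cli also refuses to create a cluster with fewer than 3 masters. The hypothetical `expected_masters` helper below does only that arithmetic, so you can sanity-check the Pod count before running the command.

```shell
# Predict how many masters "redis-cli --cluster create --cluster-replicas R"
# builds from N nodes: N / (R + 1), integer division. Fails below 3 masters.
expected_masters() {
  local nodes=$1 replicas=$2 masters
  masters=$((nodes / (replicas + 1)))
  if [ "$masters" -lt 3 ]; then
    echo "error: $nodes node(s) with $replicas replica(s) each give $masters master(s); Redis Cluster needs at least 3" >&2
    return 1
  fi
  echo "$masters"
}
```

`expected_masters 6 1` prints `3`. With 7 Pods the answer is still 3, and redis-cli attaches the leftover node as an extra replica.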

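Manually create the Redis cluster

Manually build a cluster of 3 masters and 3 slaves. Six Redis Pods were created in total, and the master -> slave mapping is 0->3, 1->4, 2->5 (redis-cluster-3 replicates redis-cluster-0, and so on).

- Look up the IPs assigned to the Redis Pods

- Create a cluster of 3 master nodes

- Add a slave to each master (three groups)

Because the commands get long, the Pod IPs are looked up by hand and filled into the commands during configuration.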
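The three `add-node` commands used later differ only in IPs and master IDs, so a script can also print them from those values. This is a hypothetical helper, not part of redis-cli. It takes the six Pod IPs in ordinal order plus the three master IDs that `--cluster create` reports. It then follows the 0->3, 1->4, 2->5 mapping and only prints the commands for review. The password defaults to Chengke2025 unless an assumed `REDIS_PASSWORD` variable is set.

```shell
# Print the add-node commands for the 0->3, 1->4, 2->5 master/slave layout.
# Args: IP0 IP1 IP2 IP3 IP4 IP5 MASTER_ID0 MASTER_ID1 MASTER_ID2 (prints only).
print_add_node_cmds() {
  if [ "$#" -ne 9 ]; then
    echo "usage: print_add_node_cmds IP0 IP1 IP2 IP3 IP4 IP5 ID0 ID1 ID2" >&2
    return 1
  fi
  local pw=${REDIS_PASSWORD:-Chengke2025}
  local ip0=$1 ip1=$2 ip2=$3 ip3=$4 ip4=$5 ip5=$6 id0=$7 id1=$8 id2=$9
  local group
  # Each group is "slave_ip master_ip master_id"; Pod N+3 replicates Pod N.
  for group in "$ip3 $ip0 $id0" "$ip4 $ip1 $id1" "$ip5 $ip2 $id2"; do
    set -- $group   # intentional word splitting into $1 $2 $3
    echo "kubectl exec -it redis-cluster-0 -- redis-cli -a $pw --cluster add-node $1:6379 $2:6379 --cluster-slave --cluster-master-id $3"
  done
}
```

Applied to the IPs and IDs of this walkthrough, the first printed line matches the group 1 command used below.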

- Look up the IPs assigned to the Redis Pods

[root@master 1]# kubectl get pod -o wide | grep redis

redis-cluster-0 1/1 Running 0 9m23s 10.224.104.42 node2 <none> <none>

redis-cluster-1 1/1 Running 0 9m21s 10.224.166.135 node1 <none> <none>

redis-cluster-2 1/1 Running 0 9m20s 10.224.104.47 node2 <none> <none>

redis-cluster-3 1/1 Running 0 9m18s 10.224.166.144 node1 <none> <none>

redis-cluster-4 1/1 Running 0 9m17s 10.224.104.45 node2 <none> <none>

redis-cluster-5 1/1 Running 0 9m15s 10.224.166.130 node1 <none> <none>

[root@master 1]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-0 1/1 Running 0 9m33s 10.224.104.42 node2 <none> <none>

redis-cluster-1 1/1 Running 0 9m31s 10.224.166.135 node1 <none> <none>

redis-cluster-2 1/1 Running 0 9m30s 10.224.104.47 node2 <none> <none>

redis-cluster-3 1/1 Running 0 9m28s 10.224.166.144 node1 <none> <none>

redis-cluster-4 1/1 Running 0 9m27s 10.224.104.45 node2 <none> <none>

redis-cluster-5 1/1 Running 0 9m25s 10.224.166.130 node1 <none> <none>
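The IPs above can also be extracted from `kubectl get pod -o wide` with a small filter. In this sketch (the function name is hypothetical), the filter takes the first IPv4-looking field instead of a fixed column. The RESTARTS column can read like `1 (5m ago)`, which shifts the columns. The filter then sorts the Pods by StatefulSet ordinal.

```shell
# Read "kubectl get pod -o wide" output on stdin and print "NAME IP" for the
# redis-cluster Pods, sorted by ordinal. Pods without an IP yet are skipped.
redis_pod_ips() {
  awk '$1 ~ /^redis-cluster-[0-9]+$/ {
         for (i = 2; i <= NF; i++)
           if ($i ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/) { print $1, $i; break }
       }' | sort -t- -k3,3n
}
```

Usage: `kubectl get pod -o wide | redis_pod_ips`.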

- Create a cluster of 3 master nodes
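redis-cli splits the 16384 hash slots as evenly as it can across the masters, which is why the middle master in the output below gets 5462 slots. The sketch below reproduces that split with integer arithmetic. The last slot of master i is round((i+1) * 16384 / N - 1), and the final master always ends at 16383. redis-cli itself computes this in floating point, so treat the boundaries for other master counts as approximate. The function name is hypothetical.

```shell
# Approximate redis-cli's even split of 16384 hash slots across N masters.
slot_ranges() {
  local n=$1 i=0 first=0 last num
  while [ "$i" -lt "$n" ]; do
    if [ "$i" -eq $((n - 1)) ]; then
      last=16383                              # final master ends at the last slot
    else
      num=$(( (i + 1) * 16384 - n ))          # numerator of (i+1)*16384/n - 1
      last=$(( (2 * num + n) / (2 * n) ))     # integer round-half-up of num/n
    fi
    echo "Master[$i] -> Slots $first - $last"
    first=$((last + 1))
    i=$((i + 1))
  done
}
```

`slot_ranges 3` prints the same three ranges that `--cluster create` reports below.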

# In the command below, the three IP addresses belong to redis-cluster-0, redis-cluster-1 and redis-cluster-2; you will be asked to type yes once
kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster create 10.224.104.42:6379 10.224.166.135:6379 10.224.104.47:6379

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster create 10.224.104.42:6379 10.224.166.135:6379 10.224.104.47:6379

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Performing hash slots allocation on 3 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

M: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379
   slots:[0-5460] (5461 slots) master

M: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
   slots:[5461-10922] (5462 slots) master

M: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
   slots:[10923-16383] (5461 slots) master

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

.

>>> Performing Cluster Check (using node 10.224.104.42:6379)

M: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379
   slots:[0-5460] (5461 slots) master

M: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
   slots:[5461-10922] (5462 slots) master

M: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
   slots:[10923-16383] (5461 slots) master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

- Add a slave to each master (three groups)

# Group 1: redis0 -> redis3. Give the slave's IP:port first, then the master's IP:port
kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster add-node 10.224.166.144:6379 10.224.104.42:6379 --cluster-slave --cluster-master-id 3123cc9f502871b1509c26ca42f43795108c4344

# Parameters
# 10.224.104.42:6379   IP address of a master already in the cluster (any master will do; usually redis-cluster-0's IP)
# 10.224.166.144:6379  IP address of the new node to add as a slave of a master
# --cluster-master-id  ID of the master the new slave should replicate; if omitted, redis-cli picks one of the masters with the fewest replicas at random

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster add-node 10.224.166.144:6379 10.224.104.42:6379 --cluster-slave --cluster-master-id 3123cc9f502871b1509c26ca42f43795108c4344

E0802 15:48:47.179031 31058 websocket.go:297] Unknown stream id 1, discarding message

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Adding node 10.224.166.144:6379 to cluster 10.224.104.42:6379

>>> Performing Cluster Check (using node 10.224.104.42:6379)

M: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379
   slots:[0-5460] (5461 slots) master

M: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
   slots:[5461-10922] (5462 slots) master

M: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
   slots:[10923-16383] (5461 slots) master

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.224.166.144:6379 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 10.224.104.42:6379.

[OK] New node added correctly.

Then configure the other two groups in the same way:

# Group 2: redis1 -> redis4
kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster add-node 10.224.104.45:6379 10.224.166.135:6379 --cluster-slave --cluster-master-id 951ed4d13cfb69bd17c6ce96d8928e803fe9d103

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster add-node 10.224.104.45:6379 10.224.166.135:6379 --cluster-slave --cluster-master-id 951ed4d13cfb69bd17c6ce96d8928e803fe9d103

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Adding node 10.224.104.45:6379 to cluster 10.224.166.135:6379

>>> Performing Cluster Check (using node 10.224.166.135:6379)

M: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
   slots:[5461-10922] (5462 slots) master

S: bcfd42fad721d0b6925588f8f596fe5612d46be5 10.224.166.144:6379
   slots: (0 slots) slave
   replicates 3123cc9f502871b1509c26ca42f43795108c4344

M: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
   slots:[10923-16383] (5461 slots) master

M: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.224.104.45:6379 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 10.224.166.135:6379.

[OK] New node added correctly.

# Group 3: redis2 -> redis5
kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster add-node 10.224.166.130:6379 10.224.104.47:6379 --cluster-slave --cluster-master-id 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster add-node 10.224.166.130:6379 10.224.104.47:6379 --cluster-slave --cluster-master-id 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Adding node 10.224.166.130:6379 to cluster 10.224.104.47:6379

>>> Performing Cluster Check (using node 10.224.104.47:6379)

M: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
   slots:[10923-16383] (5461 slots) master

M: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)

S: bcfd42fad721d0b6925588f8f596fe5612d46be5 10.224.166.144:6379
   slots: (0 slots) slave
   replicates 3123cc9f502871b1509c26ca42f43795108c4344

S: 9cbec45dcd271f17a532985883c05a8ab8daf60a 10.224.104.45:6379
   slots: (0 slots) slave
   replicates 951ed4d13cfb69bd17c6ce96d8928e803fe9d103

M: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.224.166.130:6379 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 10.224.104.47:6379.

[OK] New node added correctly.

Verify the cluster state
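The commands below check the state by eye. To script the same check, for example in a CI job, a hypothetical `cluster_healthy` helper can read `cluster info` output on stdin. It succeeds only when `cluster_state` is `ok` and all 16384 slots are reported ok.

```shell
# Read CLUSTER INFO output on stdin; exit 0 only if the cluster state is ok
# and cluster_slots_ok equals 16384, otherwise exit 1.
cluster_healthy() {
  tr -d '\r' | awk -F: '
    $1 == "cluster_state"    { state = $2 }
    $1 == "cluster_slots_ok" { ok = $2 + 0 }
    END { exit (state == "ok" && ok == 16384) ? 0 : 1 }'
}
```

Usage: `kubectl exec redis-cluster-0 -- redis-cli -a Chengke2025 cluster info 2>/dev/null | cluster_healthy && echo "cluster healthy"`.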

kubectl exec -it redis-cluster-0 -- redis-cli -p 6379 -a Chengke2025 cluster info

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-cli -p 6379 -a Chengke2025 cluster info

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:3

cluster_my_epoch:1

cluster_stats_messages_ping_sent:345

cluster_stats_messages_pong_sent:354

cluster_stats_messages_sent:699

cluster_stats_messages_ping_received:351

cluster_stats_messages_pong_received:345

cluster_stats_messages_meet_received:3

cluster_stats_messages_received:699

total_cluster_links_buffer_limit_exceeded:0

# Or

kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster check $(kubectl get pods -l app.kubernetes.io/name=redis-cluster -o jsonpath='{range.items[0]}{.status.podIP}:6379{end}')

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster check $(kubectl get pods -l app.kubernetes.io/name=redis-cluster -o jsonpath='{range.items[0]}{.status.podIP}:6379{end}')

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

10.224.104.42:6379 (3123cc9f...) -> 0 keys | 5461 slots | 1 slaves.

10.224.166.135:6379 (951ed4d1...) -> 0 keys | 5462 slots | 1 slaves.

10.224.104.47:6379 (9afb02b8...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 10.224.104.42:6379)

M: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)

M: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)

S: d2b90b963280b055eaf72541cefd0d95c107640f 10.224.166.130:6379
   slots: (0 slots) slave
   replicates 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e

M: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)

S: 9cbec45dcd271f17a532985883c05a8ab8daf60a 10.224.104.45:6379
   slots: (0 slots) slave
   replicates 951ed4d13cfb69bd17c6ce96d8928e803fe9d103

S: bcfd42fad721d0b6925588f8f596fe5612d46be5 10.224.166.144:6379
   slots: (0 slots) slave
   replicates 3123cc9f502871b1509c26ca42f43795108c4344

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

3. Cluster functional tests

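Load testing

Use redis-benchmark, the benchmarking tool bundled with Redis, to check that the Redis cluster is usable and to get a rough measure of its performance.

- Test the set scenario

- ping

- get

- Test the set scenario

Use the set command to send 100,000 requests, each carrying one key-value pair with a randomly generated key and a 100-byte value, from 20 concurrent clients (-n 100000 -c 20 -d 100).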
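To compare runs side by side, redis-benchmark also has a `--csv` output mode. The sketch below assumes the column order that recent redis-benchmark releases print in that mode: `test`, `rps`, `avg_latency_ms`, `min_latency_ms`, `p50_latency_ms`, `p95_latency_ms`, `p99_latency_ms`, `max_latency_ms`. Check your version's header line before relying on it. The `bench_summary` name is hypothetical. Lines with fewer than eight comma-separated fields, such as the cluster banner, are skipped.

```shell
# Read "redis-benchmark --csv" output on stdin and print "TEST RPS P99_MS".
# Assumes the 8-column layout: test,rps,avg,min,p50,p95,p99,max.
bench_summary() {
  tr -d '\r"' |   # strip carriage returns and CSV quotes
    awk -F, 'NF >= 8 && $1 != "test" { print $1, $2, $7 }'
}
```

A possible usage: `kubectl exec redis-cluster-0 -- redis-benchmark -h 192.168.86.11 -p 31379 -a Chengke2025 -t set,get -n 100000 -c 20 -d 100 --cluster --csv | bench_summary`.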

kubectl exec -it redis-cluster-0 -- redis-benchmark -h 192.168.86.11 -p 31379 -a Chengke2025 -t set -n 100000 -c 20 -d 100 --cluster

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-benchmark -h 192.168.86.11 -p 31379 -a Chengke2025 -t set -n 100000 -c 20 -d 100 --cluster

Cluster has 3 master nodes:

Master 0: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
Master 1: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
Master 2: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379

====== SET ======
  100000 requests completed in 1.50 seconds
  20 parallel clients
  100 bytes payload
  keep alive: 1
  cluster mode: yes (3 masters)
  node [0] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  node [1] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  node [2] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  multi-thread: yes
  threads: 3

Latency by percentile distribution:

0.000% <= 0.015 milliseconds (cumulative count 1)

50.000% <= 0.175 milliseconds (cumulative count 52274)

75.000% <= 0.303 milliseconds (cumulative count 75564)

87.500% <= 0.455 milliseconds (cumulative count 87874)

93.750% <= 0.607 milliseconds (cumulative count 93833)

96.875% <= 0.783 milliseconds (cumulative count 96951)

98.438% <= 1.007 milliseconds (cumulative count 98445)

99.219% <= 1.335 milliseconds (cumulative count 99222)

99.609% <= 1.759 milliseconds (cumulative count 99611)

99.805% <= 2.495 milliseconds (cumulative count 99806)

99.902% <= 3.319 milliseconds (cumulative count 99903)

99.951% <= 3.743 milliseconds (cumulative count 99952)

99.976% <= 4.671 milliseconds (cumulative count 99977)

99.988% <= 5.735 milliseconds (cumulative count 99988)

99.994% <= 9.023 milliseconds (cumulative count 99994)

99.997% <= 9.087 milliseconds (cumulative count 99997)

99.998% <= 9.167 milliseconds (cumulative count 99999)

99.999% <= 9.215 milliseconds (cumulative count 100000)

100.000% <= 9.215 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

11.894% <= 0.103 milliseconds (cumulative count 11894)

61.107% <= 0.207 milliseconds (cumulative count 61107)

75.564% <= 0.303 milliseconds (cumulative count 75564)

84.816% <= 0.407 milliseconds (cumulative count 84816)

90.304% <= 0.503 milliseconds (cumulative count 90304)

93.833% <= 0.607 milliseconds (cumulative count 93833)

95.855% <= 0.703 milliseconds (cumulative count 95855)

97.198% <= 0.807 milliseconds (cumulative count 97198)

97.935% <= 0.903 milliseconds (cumulative count 97935)

98.445% <= 1.007 milliseconds (cumulative count 98445)

98.778% <= 1.103 milliseconds (cumulative count 98778)

99.021% <= 1.207 milliseconds (cumulative count 99021)

99.167% <= 1.303 milliseconds (cumulative count 99167)

99.322% <= 1.407 milliseconds (cumulative count 99322)

99.433% <= 1.503 milliseconds (cumulative count 99433)

99.518% <= 1.607 milliseconds (cumulative count 99518)

99.583% <= 1.703 milliseconds (cumulative count 99583)

99.630% <= 1.807 milliseconds (cumulative count 99630)

99.675% <= 1.903 milliseconds (cumulative count 99675)

99.716% <= 2.007 milliseconds (cumulative count 99716)

99.730% <= 2.103 milliseconds (cumulative count 99730)

99.872% <= 3.103 milliseconds (cumulative count 99872)

99.955% <= 4.103 milliseconds (cumulative count 99955)

99.987% <= 5.103 milliseconds (cumulative count 99987)

99.993% <= 6.103 milliseconds (cumulative count 99993)

99.997% <= 9.103 milliseconds (cumulative count 99997)

100.000% <= 10.103 milliseconds (cumulative count 100000)

Summary:
  throughput summary: 66533.60 requests per second
  latency summary (msec):
          avg       min       p50       p95       p99       max
        0.255     0.008     0.175     0.663     1.199     9.215

- ping

kubectl exec -it redis-cluster-0 -- redis-benchmark -h 192.168.86.11 -p 31379 -a Chengke2025 -t ping -n 100000 -c 20 -d 100 --cluster

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-benchmark -h 192.168.86.11 -p 31379 -a Chengke2025 -t ping -n 100000 -c 20 -d 100 --cluster

Cluster has 3 master nodes:

Master 0: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
Master 1: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
Master 2: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379

====== PING_INLINE ======
  100000 requests completed in 1.00 seconds
  20 parallel clients
  100 bytes payload
  keep alive: 1
  cluster mode: yes (3 masters)
  node [0] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  node [1] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  node [2] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  multi-thread: yes
  threads: 3

Latency by percentile distribution:

0.000% <= 0.015 milliseconds (cumulative count 574)

50.000% <= 0.087 milliseconds (cumulative count 51254)

75.000% <= 0.183 milliseconds (cumulative count 75182)

87.500% <= 0.287 milliseconds (cumulative count 87876)

93.750% <= 0.383 milliseconds (cumulative count 93941)

96.875% <= 0.487 milliseconds (cumulative count 96964)

98.438% <= 0.615 milliseconds (cumulative count 98438)

99.219% <= 0.783 milliseconds (cumulative count 99222)

99.609% <= 0.959 milliseconds (cumulative count 99614)

99.805% <= 1.167 milliseconds (cumulative count 99805)

99.902% <= 1.519 milliseconds (cumulative count 99904)

99.951% <= 1.871 milliseconds (cumulative count 99952)

99.976% <= 2.135 milliseconds (cumulative count 99977)

99.988% <= 2.319 milliseconds (cumulative count 99988)

99.994% <= 2.591 milliseconds (cumulative count 99994)

99.997% <= 4.903 milliseconds (cumulative count 99997)

99.998% <= 4.935 milliseconds (cumulative count 99999)

99.999% <= 4.943 milliseconds (cumulative count 100000)

100.000% <= 4.943 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

57.666% <= 0.103 milliseconds (cumulative count 57666)

78.729% <= 0.207 milliseconds (cumulative count 78729)

89.225% <= 0.303 milliseconds (cumulative count 89225)

94.861% <= 0.407 milliseconds (cumulative count 94861)

97.219% <= 0.503 milliseconds (cumulative count 97219)

98.377% <= 0.607 milliseconds (cumulative count 98377)

98.919% <= 0.703 milliseconds (cumulative count 98919)

99.296% <= 0.807 milliseconds (cumulative count 99296)

99.512% <= 0.903 milliseconds (cumulative count 99512)

99.684% <= 1.007 milliseconds (cumulative count 99684)

99.778% <= 1.103 milliseconds (cumulative count 99778)

99.820% <= 1.207 milliseconds (cumulative count 99820)

99.844% <= 1.303 milliseconds (cumulative count 99844)

99.868% <= 1.407 milliseconds (cumulative count 99868)

99.899% <= 1.503 milliseconds (cumulative count 99899)

99.926% <= 1.607 milliseconds (cumulative count 99926)

99.939% <= 1.703 milliseconds (cumulative count 99939)

99.944% <= 1.807 milliseconds (cumulative count 99944)

99.955% <= 1.903 milliseconds (cumulative count 99955)

99.969% <= 2.007 milliseconds (cumulative count 99969)

99.975% <= 2.103 milliseconds (cumulative count 99975)

99.996% <= 3.103 milliseconds (cumulative count 99996)

100.000% <= 5.103 milliseconds (cumulative count 100000)

Summary:
  throughput summary: 99900.09 requests per second
  latency summary (msec):
          avg       min       p50       p95       p99       max
        0.140     0.008     0.087     0.415     0.727     4.943

====== PING_MBULK ======
  100000 requests completed in 1.00 seconds
  20 parallel clients
  100 bytes payload
  keep alive: 1
  cluster mode: yes (3 masters)
  node [0] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  node [1] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  node [2] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  multi-thread: yes
  threads: 3

Latency by percentile distribution:

0.000% <= 0.015 milliseconds (cumulative count 532)

50.000% <= 0.063 milliseconds (cumulative count 52347)

75.000% <= 0.151 milliseconds (cumulative count 75855)

87.500% <= 0.255 milliseconds (cumulative count 87863)

93.750% <= 0.351 milliseconds (cumulative count 94118)

96.875% <= 0.439 milliseconds (cumulative count 96957)

98.438% <= 0.543 milliseconds (cumulative count 98485)

99.219% <= 0.671 milliseconds (cumulative count 99225)

99.609% <= 0.823 milliseconds (cumulative count 99617)

99.805% <= 0.999 milliseconds (cumulative count 99808)

99.902% <= 1.247 milliseconds (cumulative count 99904)

99.951% <= 1.743 milliseconds (cumulative count 99952)

99.976% <= 2.663 milliseconds (cumulative count 99976)

99.988% <= 4.959 milliseconds (cumulative count 99988)

99.994% <= 5.023 milliseconds (cumulative count 99996)

99.997% <= 5.055 milliseconds (cumulative count 99997)

99.998% <= 5.111 milliseconds (cumulative count 99999)

99.999% <= 5.199 milliseconds (cumulative count 100000)

100.000% <= 5.199 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

67.607% <= 0.103 milliseconds (cumulative count 67607)

82.977% <= 0.207 milliseconds (cumulative count 82977)

91.576% <= 0.303 milliseconds (cumulative count 91576)

96.133% <= 0.407 milliseconds (cumulative count 96133)

98.036% <= 0.503 milliseconds (cumulative count 98036)

98.953% <= 0.607 milliseconds (cumulative count 98953)

99.322% <= 0.703 milliseconds (cumulative count 99322)

99.593% <= 0.807 milliseconds (cumulative count 99593)

99.726% <= 0.903 milliseconds (cumulative count 99726)

99.813% <= 1.007 milliseconds (cumulative count 99813)

99.869% <= 1.103 milliseconds (cumulative count 99869)

99.897% <= 1.207 milliseconds (cumulative count 99897)

99.913% <= 1.303 milliseconds (cumulative count 99913)

99.929% <= 1.407 milliseconds (cumulative count 99929)

99.942% <= 1.503 milliseconds (cumulative count 99942)

99.946% <= 1.607 milliseconds (cumulative count 99946)

99.951% <= 1.703 milliseconds (cumulative count 99951)

99.953% <= 1.807 milliseconds (cumulative count 99953)

99.955% <= 1.903 milliseconds (cumulative count 99955)

99.959% <= 2.007 milliseconds (cumulative count 99959)

99.982% <= 3.103 milliseconds (cumulative count 99982)

99.986% <= 4.103 milliseconds (cumulative count 99986)

99.998% <= 5.103 milliseconds (cumulative count 99998)

100.000% <= 6.103 milliseconds (cumulative count 100000)

Summary:
  throughput summary: 99800.40 requests per second
  latency summary (msec):
          avg       min       p50       p95       p99       max
        0.117     0.008     0.063     0.375     0.615     5.199

- get

kubectl exec -it redis-cluster-0 -- redis-benchmark -h 192.168.86.11 -p 31379 -a Chengke2025 -t get -n 100000 -c 20 -d 100 --cluster

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-benchmark -h 192.168.86.11 -p 31379 -a Chengke2025 -t get -n 100000 -c 20 -d 100 --cluster

Cluster has 3 master nodes:

Master 0: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
Master 1: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
Master 2: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379

====== GET ======
  100000 requests completed in 1.00 seconds
  20 parallel clients
  100 bytes payload
  keep alive: 1
  cluster mode: yes (3 masters)
  node [0] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  node [1] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  node [2] configuration:
    save: 3600 1 300 100 60 10000
    appendonly: yes
  multi-thread: yes
  threads: 3

Latency by percentile distribution:

0.000% <= 0.015 milliseconds (cumulative count 211)

50.000% <= 0.095 milliseconds (cumulative count 52867)

75.000% <= 0.175 milliseconds (cumulative count 75366)

87.500% <= 0.271 milliseconds (cumulative count 87592)

93.750% <= 0.367 milliseconds (cumulative count 93946)

96.875% <= 0.463 milliseconds (cumulative count 96897)

98.438% <= 0.583 milliseconds (cumulative count 98462)

99.219% <= 0.751 milliseconds (cumulative count 99242)

99.609% <= 0.927 milliseconds (cumulative count 99613)

99.805% <= 1.159 milliseconds (cumulative count 99805)

99.902% <= 1.439 milliseconds (cumulative count 99904)

99.951% <= 1.719 milliseconds (cumulative count 99952)

99.976% <= 2.503 milliseconds (cumulative count 99976)

99.988% <= 2.703 milliseconds (cumulative count 99988)

99.994% <= 3.071 milliseconds (cumulative count 99994)

99.997% <= 3.135 milliseconds (cumulative count 99997)

99.998% <= 3.191 milliseconds (cumulative count 99999)

99.999% <= 5.999 milliseconds (cumulative count 100000)

100.000% <= 5.999 milliseconds (cumulative count 100000)

Cumulative distribution of latencies:

56.336% <= 0.103 milliseconds (cumulative count 56336)

80.115% <= 0.207 milliseconds (cumulative count 80115)

90.259% <= 0.303 milliseconds (cumulative count 90259)

95.459% <= 0.407 milliseconds (cumulative count 95459)

97.567% <= 0.503 milliseconds (cumulative count 97567)

98.640% <= 0.607 milliseconds (cumulative count 98640)

99.095% <= 0.703 milliseconds (cumulative count 99095)

99.373% <= 0.807 milliseconds (cumulative count 99373)

99.568% <= 0.903 milliseconds (cumulative count 99568)

99.705% <= 1.007 milliseconds (cumulative count 99705)

99.774% <= 1.103 milliseconds (cumulative count 99774)

99.821% <= 1.207 milliseconds (cumulative count 99821)

99.856% <= 1.303 milliseconds (cumulative count 99856)

99.895% <= 1.407 milliseconds (cumulative count 99895)

99.914% <= 1.503 milliseconds (cumulative count 99914)

99.940% <= 1.607 milliseconds (cumulative count 99940)

99.950% <= 1.703 milliseconds (cumulative count 99950)

99.959% <= 1.807 milliseconds (cumulative count 99959)

99.960% <= 1.903 milliseconds (cumulative count 99960)

99.971% <= 2.007 milliseconds (cumulative count 99971)

99.996% <= 3.103 milliseconds (cumulative count 99996)

99.999% <= 4.103 milliseconds (cumulative count 99999)

100.000% <= 6.103 milliseconds (cumulative count 100000)

Summary:
  throughput summary: 99601.60 requests per second
  latency summary (msec):
          avg       min       p50       p95       p99       max
        0.138     0.008     0.095     0.399     0.679     5.999

Failover testing

- Check the cluster state before the test (using one master/slave group as the example)

kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster check $(kubectl get pods -l app.kubernetes.io/name=redis-cluster -o jsonpath='{range.items[0]}{.status.podIP}:6379{end}')

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster check $(kubectl get pods -l app.kubernetes.io/name=redis-cluster -o jsonpath='{range.items[0]}{.status.podIP}:6379{end}')

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

10.224.104.42:6379 (3123cc9f...) -> 5460 keys | 5461 slots | 1 slaves.

10.224.166.135:6379 (951ed4d1...) -> 5212 keys | 5462 slots | 1 slaves.

10.224.104.47:6379 (9afb02b8...) -> 5458 keys | 5461 slots | 1 slaves.

[OK] 16130 keys in 3 masters.

0.98 keys per slot on average.

>>> Performing Cluster Check (using node 10.224.104.42:6379)

M: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)

M: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)

S: d2b90b963280b055eaf72541cefd0d95c107640f 10.224.166.130:6379
   slots: (0 slots) slave
   replicates 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e

M: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)

S: 9cbec45dcd271f17a532985883c05a8ab8daf60a 10.224.104.45:6379
   slots: (0 slots) slave
   replicates 951ed4d13cfb69bd17c6ce96d8928e803fe9d103

S: bcfd42fad721d0b6925588f8f596fe5612d46be5 10.224.166.144:6379
   slots: (0 slots) slave
   replicates 3123cc9f502871b1509c26ca42f43795108c4344

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

- Test scenario 1: manually delete the slave of one master and check whether the slave Pod is recreated automatically and rejoins its original master
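You can read from `CLUSTER NODES` whether the recreated slave went back to its original master, because for a replica the fourth field is its master's node ID. `nodes.conf` is stored on the Pod's PVC (`/data`), so the recreated Pod should come back with the same node ID even though its IP changes. The helper below is a hypothetical sketch. It prints the master ID for the node at a given IP, or `-` if that node is itself a master.

```shell
# Read CLUSTER NODES output on stdin and print the master ID replicated by the
# node whose address starts with IP $1 ("-" means the node is a master).
master_of() {
  tr -d '\r' | awk -v ip="$1" '{ split($2, addr, ":") } addr[1] == ip { print $4; exit }'
}
```

For example, after the restart, `kubectl exec redis-cluster-0 -- redis-cli -a Chengke2025 cluster nodes 2>/dev/null | master_of 10.224.166.182` should print `3123cc9f502871b1509c26ca42f43795108c4344` if redis-cluster-3 rejoined redis-cluster-0.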

Delete the slave and compare the cluster state before and after:

kubectl get pod -o wide

[root@master 1]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-0 1/1 Running 0 20m 10.224.104.42 node2 <none> <none>

redis-cluster-1 1/1 Running 0 20m 10.224.166.135 node1 <none> <none>

redis-cluster-2 1/1 Running 0 20m 10.224.104.47 node2 <none> <none>

redis-cluster-3 1/1 Running 0 20m 10.224.166.144 node1 <none> <none>

redis-cluster-4 1/1 Running 0 20m 10.224.104.45 node2 <none> <none>

redis-cluster-5 1/1 Running 0 20m 10.224.166.130 node1 <none> <none>

# Delete the slave

kubectl delete pod redis-cluster-3

[root@master 1]# kubectl delete pod redis-cluster-3

pod "redis-cluster-3" deleted

[root@master 1]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-0 1/1 Running 0 21m 10.224.104.42 node2 <none> <none>

redis-cluster-1 1/1 Running 0 20m 10.224.166.135 node1 <none> <none>

redis-cluster-2 1/1 Running 0 20m 10.224.104.47 node2 <none> <none>

redis-cluster-3 0/1 ContainerCreating 0 2s <none> node1 <none> <none>

redis-cluster-4 1/1 Running 0 20m 10.224.104.45 node2 <none> <none>

redis-cluster-5 1/1 Running 0 20m 10.224.166.130 node1 <none> <none>

[root@master 1]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-0 1/1 Running 0 21m 10.224.104.42 node2 <none> <none>

redis-cluster-1 1/1 Running 0 21m 10.224.166.135 node1 <none> <none>

redis-cluster-2 1/1 Running 0 21m 10.224.104.47 node2 <none> <none>

redis-cluster-3 1/1 Running 0 6s 10.224.166.182 node1 <none> <none>

redis-cluster-4 1/1 Running 0 20m 10.224.104.45 node2 <none> <none>

redis-cluster-5 1/1 Running 0 20m 10.224.166.130 node1 <none> <none>

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster check $(kubectl get pods -l app.kubernetes.io/name=redis-cluster -o jsonpath='{range.items[0]}{.status.podIP}:6379{end}')

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

10.224.104.42:6379 (3123cc9f...) -> 5460 keys | 5461 slots | 1 slaves.

10.224.166.135:6379 (951ed4d1...) -> 5212 keys | 5462 slots | 1 slaves.

10.224.104.47:6379 (9afb02b8...) -> 5458 keys | 5461 slots | 1 slaves.

[OK] 16130 keys in 3 masters.

0.98 keys per slot on average.

>>> Performing Cluster Check (using node 10.224.104.42:6379)

M: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)

M: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)

S: d2b90b963280b055eaf72541cefd0d95c107640f 10.224.166.130:6379
   slots: (0 slots) slave
   replicates 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e

M: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.47:6379
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)

S: 9cbec45dcd271f17a532985883c05a8ab8daf60a 10.224.104.45:6379
   slots: (0 slots) slave
   replicates 951ed4d13cfb69bd17c6ce96d8928e803fe9d103

S: bcfd42fad721d0b6925588f8f596fe5612d46be5 10.224.166.182:6379
   slots: (0 slots) slave
   replicates 3123cc9f502871b1509c26ca42f43795108c4344

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

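[OK] All 16384 slots covered.

Result: the Slave's original IP was 10.224.166.144. After deletion the Pod was recreated automatically, its IP changed to 10.224.166.182, and it rejoined its original Master (3123cc9f..., 10.224.104.42). The node ID bcfd42fad721... is unchanged because the cluster state file /data/nodes.conf is stored on the Pod's PersistentVolumeClaim and survives the Pod being recreated.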
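The same check output can be reduced to a table that maps each replica to its master. That makes the before/after comparison in this scenario mechanical: only the address of bcfd42fa should change. Below is a minimal sketch. `replica_map` is a hypothetical helper that reads the full `redis-cli --cluster check` output, where each `S:` line is followed by a `replicates <master-id>` line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "<slave-id> <slave-addr> -> <master-id>" for each
# replica found in `redis-cli --cluster check` output stored in file $1.
# IDs are shortened to 8 characters, as in the summary lines of the check.
replica_map() {
  awk '
    $1 == "S:" { slave = substr($2, 1, 8); addr = $3; next }
    $1 == "replicates" && slave != "" {
      print slave, addr, "->", substr($2, 1, 8)
      slave = ""
    }
  ' "$1" | LC_ALL=C sort   # stable order so two snapshots can be diffed
}
```

Save `replica_map` output before and after deleting the Pod, then `diff` the two files. For this scenario, the diff should show a single changed line, with `10.224.166.144:6379` becoming `10.224.166.182:6379`.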

- Test scenario 2: Manually delete a Master and observe whether the Master Pod is automatically recreated and becomes a Master again.

Delete the Master, then check the cluster state:

[root@master 1]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-0 1/1 Running 0 22m 10.224.104.42 node2 <none> <none>

redis-cluster-1 1/1 Running 0 22m 10.224.166.135 node1 <none> <none>

redis-cluster-2 1/1 Running 0 22m 10.224.104.47 node2 <none> <none>

redis-cluster-3 1/1 Running 0 90s 10.224.166.182 node1 <none> <none>

redis-cluster-4 1/1 Running 0 22m 10.224.104.45 node2 <none> <none>

redis-cluster-5 1/1 Running 0 22m 10.224.166.130 node1 <none> <none>

# Delete the Master

[root@master 1]# kubectl delete pod redis-cluster-2

pod "redis-cluster-2" deleted

[root@master 1]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis-cluster-0 1/1 Running 0 22m 10.224.104.42 node2 <none> <none>

redis-cluster-1 1/1 Running 0 22m 10.224.166.135 node1 <none> <none>

redis-cluster-2 1/1 Running 0 6s 10.224.104.2 node2 <none> <none>

redis-cluster-3 1/1 Running 0 118s 10.224.166.182 node1 <none> <none>

redis-cluster-4 1/1 Running 0 22m 10.224.104.45 node2 <none> <none>

redis-cluster-5 1/1 Running 0 22m 10.224.166.130 node1 <none> <none>

[root@master 1]# kubectl exec -it redis-cluster-0 -- redis-cli -a Chengke2025 --cluster check $(kubectl get pods -l app.kubernetes.io/name=redis-cluster -o jsonpath='{range.items[0]}{.status.podIP}:6379{end}')

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

10.224.104.42:6379 (3123cc9f...) -> 5460 keys | 5461 slots | 1 slaves.

10.224.166.135:6379 (951ed4d1...) -> 5212 keys | 5462 slots | 1 slaves.

10.224.104.2:6379 (9afb02b8...) -> 5458 keys | 5461 slots | 1 slaves.

[OK] 16130 keys in 3 masters.

0.98 keys per slot on average.

>>> Performing Cluster Check (using node 10.224.104.42:6379)

M: 3123cc9f502871b1509c26ca42f43795108c4344 10.224.104.42:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)

M: 951ed4d13cfb69bd17c6ce96d8928e803fe9d103 10.224.166.135:6379
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)

S: d2b90b963280b055eaf72541cefd0d95c107640f 10.224.166.130:6379
   slots: (0 slots) slave
   replicates 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e

M: 9afb02b8923d20c24df1e0a664c5f5a9d8a1f57e 10.224.104.2:6379
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)

S: 9cbec45dcd271f17a532985883c05a8ab8daf60a 10.224.104.45:6379
   slots: (0 slots) slave
   replicates 951ed4d13cfb69bd17c6ce96d8928e803fe9d103

S: bcfd42fad721d0b6925588f8f596fe5612d46be5 10.224.166.182:6379
   slots: (0 slots) slave
   replicates 3123cc9f502871b1509c26ca42f43795108c4344

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

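Result: the Master's original IP was 10.224.104.47. After deletion the Pod was recreated automatically, its IP changed to 10.224.104.2, and it is a Master again (node ID 9afb02b8... still owns slots 10923-16383). This outcome likely depends on timing, since the recreated Pod was already running a few seconds after deletion (AGE 6s). If a Master stays unreachable for longer than cluster-node-timeout (5000 ms in the ConfigMap), the cluster marks it as failed and promotes its Slave (d2b90b96..., 10.224.166.130). The recreated Pod then rejoins as a Slave of the new Master.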
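Instead of re-running the check by hand after each deletion, you can poll it until both `[OK]` lines appear. Below is a minimal sketch. `wait_cluster_ok` is hypothetical. The polled command is passed as arguments, so it can be any form of the `redis-cli --cluster check` call used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a cluster-check command up to <attempts> times,
# <interval> seconds apart, until its output reports a healthy cluster.
# Usage: wait_cluster_ok <attempts> <interval_seconds> <command...>
wait_cluster_ok() {
  local attempts=$1 interval=$2
  shift 2
  local i out
  for ((i = 1; i <= attempts; i++)); do
    out=$("$@" 2>/dev/null)   # stderr dropped: hides the "-a" password warning
    if grep -qF '[OK] All nodes agree about slots configuration.' <<<"$out" &&
       grep -qF '[OK] All 16384 slots covered.' <<<"$out"; then
      echo "cluster healthy after $i attempt(s)"
      return 0
    fi
    sleep "$interval"
  done
  echo "cluster not healthy after $attempts attempt(s)" >&2
  return 1
}
```

For example: `wait_cluster_ok 30 2 kubectl exec redis-cluster-0 -- redis-cli -a Chengke2025 --cluster check 10.224.104.42:6379`. The `[OK]` lines alone do not reveal whether a failover happened. To detect one, compare the Master node IDs before and after.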

4. Install a management client

RedisInsight is the official graphical tool provided by Redis.

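RedisInsight provides no login authentication by default, so it is a security risk in environments with strict security requirements; use it with caution. In production, the command-line tools are recommended instead.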

Edit the resource manifests

- Create the resource manifest

- Create the external access Service

- Create the manifest file redisinsight-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: redisinsight
  labels:
    app.kubernetes.io/name: redisinsight
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: redisinsight
  template:
    metadata:
      labels:
        app.kubernetes.io/name: redisinsight
    spec:
      containers:
        - name: redisinsight
          # Pin the tag that is pre-pulled on the nodes; an untagged image means :latest
          image: redislabs/redisinsight:2.60
          imagePullPolicy: IfNotPresent
          ports:
            - name: redisinsight
              containerPort: 5540
              protocol: TCP
          resources:
            limits:
              cpu: '2'
              memory: 4Gi
            requests:
              cpu: 100m
              memory: 500Mi

Pull the image on the worker nodes (shown here for node1):

[root@node1 ~]# docker pull docker.io/redislabs/redisinsight:2.60

2.60: Pulling from redislabs/redisinsight

ec99f8b99825: Already exists

826542d541ab: Already exists

dffcc26d5732: Already exists

db472a6f05b5: Already exists

17e2cb71ec43: Pull complete

325fd90e4460: Pull complete

1fa3aae28619: Pull complete

8b6e450d580b: Pull complete

7c313c1d0eac: Pull complete

b6136ebb7018: Pull complete

79ef91675e49: Pull complete

Digest: sha256:ef29a4cae9ce79b659734f9e3e77db3a5f89876601c89b1ee8fdf18d2f92bb9d

Status: Downloaded newer image for redislabs/redisinsight:2.60

docker.io/redislabs/redisinsight:2.60
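Pre-pulling on the nodes only saves a download if the kubelet may reuse the local copy. When `imagePullPolicy` is omitted, Kubernetes derives it from the image reference:

- an untagged or `:latest` image defaults to `Always`;
- any other tag, or a digest, defaults to `IfNotPresent`.

An untagged `redislabs/redisinsight` would therefore be resolved as `:latest` from the registry rather than using the local `2.60` image. Below is a minimal sketch of that rule; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the Kubernetes defaulting rule for a container
# whose imagePullPolicy is omitted: digest -> IfNotPresent,
# no tag or ":latest" -> Always, any other tag -> IfNotPresent.
default_pull_policy() {
  local image=$1 name tag
  if [[ $image == *"@"* ]]; then
    echo IfNotPresent              # pinned by digest, e.g. redis@sha256:...
    return
  fi
  name=${image##*/}                # drop registry host:port and repository path
  if [[ $name == *:* ]]; then
    tag=${name##*:}
  else
    tag=latest                     # an untagged image means :latest
  fi
  if [[ $tag == latest ]]; then
    echo Always
  else
    echo IfNotPresent
  fi
}
```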

- Create the external access Service

Publish RedisInsight outside the Kubernetes cluster with a NodePort Service on port 31380. Using the vi editor, create the manifest file redisinsight-svc-external.yaml with the following content:

kind: Service
apiVersion: v1
metadata:
  name: redisinsight-external
  labels:
    app: redisinsight-external
spec:
  ports:
    - name: redisinsight
      protocol: TCP
      port: 5540
      targetPort: 5540
      nodePort: 31380
  selector:
    app.kubernetes.io/name: redisinsight
  type: NodePort
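The `nodePort` value must fall inside the API server's `--service-node-port-range`, which defaults to 30000-32767, and must not already be used by another Service. Both 31380 here and 31379 on `redis-cluster-external` qualify. Below is a small hypothetical helper for checking a port before writing it into a manifest. It checks only the range, not whether the port is already taken.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if port $1 lies inside the NodePort range given
# as "low-high" in $2 (defaults to the kube-apiserver default 30000-32767).
nodeport_in_range() {
  local port=$1 range=${2:-30000-32767}
  local lo=${range%-*} hi=${range#*-}
  [[ $port =~ ^[0-9]+$ ]] && (( port >= lo && port <= hi ))
}
```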

Deploy RedisInsight

- Create the resources

- Verify the resources

- Create the RedisInsight resources

[root@master 1]# kubectl apply -f redisinsight-deploy.yaml -f redisinsight-svc-external.yaml

deployment.apps/redisinsight created

service/redisinsight-external created

- Check the Deployment, Pod and Service that were created

[root@master 1]# kubectl get pod -o wide | grep redisinsight

redisinsight-c88d4774c-hwd7w 1/1 Running 0 27s 10.224.104.16 node2 <none> <none>

[root@master 1]# kubectl get pod,deploy,svc

NAME READY STATUS RESTARTS AGE

pod/redis-cluster-0 1/1 Running 1 (32m ago) 87m

pod/redis-cluster-1 1/1 Running 1 (32m ago) 87m

pod/redis-cluster-2 1/1 Running 1 (32m ago) 64m

pod/redis-cluster-3 1/1 Running 1 (32m ago) 66m

pod/redis-cluster-4 1/1 Running 1 (32m ago) 87m

pod/redis-cluster-5 1/1 Running 1 (32m ago) 87m

pod/redisinsight-c88d4774c-hwd7w 1/1 Running 0 33s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/redisinsight 1/1 1 1 33s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.0.0.1 <none> 443/TCP 7d2h

service/redis-cluster-external NodePort 10.0.63.134 <none> 6379:31379/TCP 84m

service/redis-headless ClusterIP None <none> 6379/TCP 87m

service/redisinsight-external NodePort 10.14.245.72 <none> 5540:31380/TCP 33s

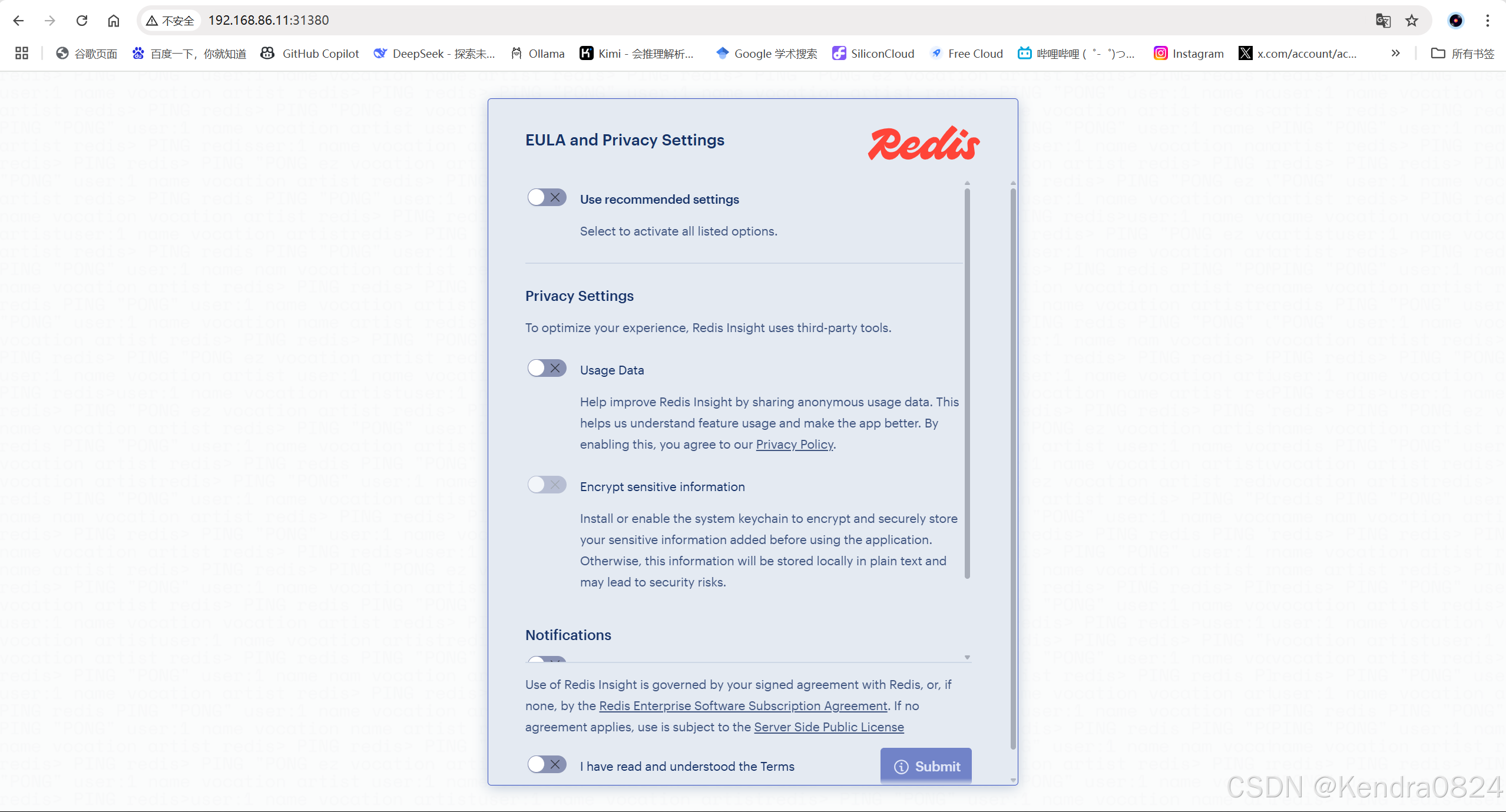

Console initialization

Open the RedisInsight console through the NodePort: http://192.168.86.11:31380 (192.168.86.11 is the IP of the master host).
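The URL can also be derived rather than typed. A NodePort is opened on every node, so any node IP works when combined with the node port from the `PORT(S)` column (`5540:31380/TCP` in the listing above). Below is a minimal sketch; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: extract the node port from a PORT(S) value such as
# "5540:31380/TCP" (for multi-port Services, the first entry is used).
nodeport_from_ports() {
  local ports=$1
  ports=${ports%%/*}                 # "5540:31380"
  [[ $ports == *:* ]] || return 1    # ClusterIP-only entries have no node port
  echo "${ports#*:}"
}

# Hypothetical helper: build the console URL from a node IP and a PORT(S) value.
console_url() {
  local node_ip=$1 port
  port=$(nodeport_from_ports "$2") || return 1
  echo "http://${node_ip}:${port}"
}
```

On a live cluster, `kubectl get svc redisinsight-external -o jsonpath='{.spec.ports[0].nodePort}'` returns the node port directly.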

On the default settings page, check only the last option and click Submit.

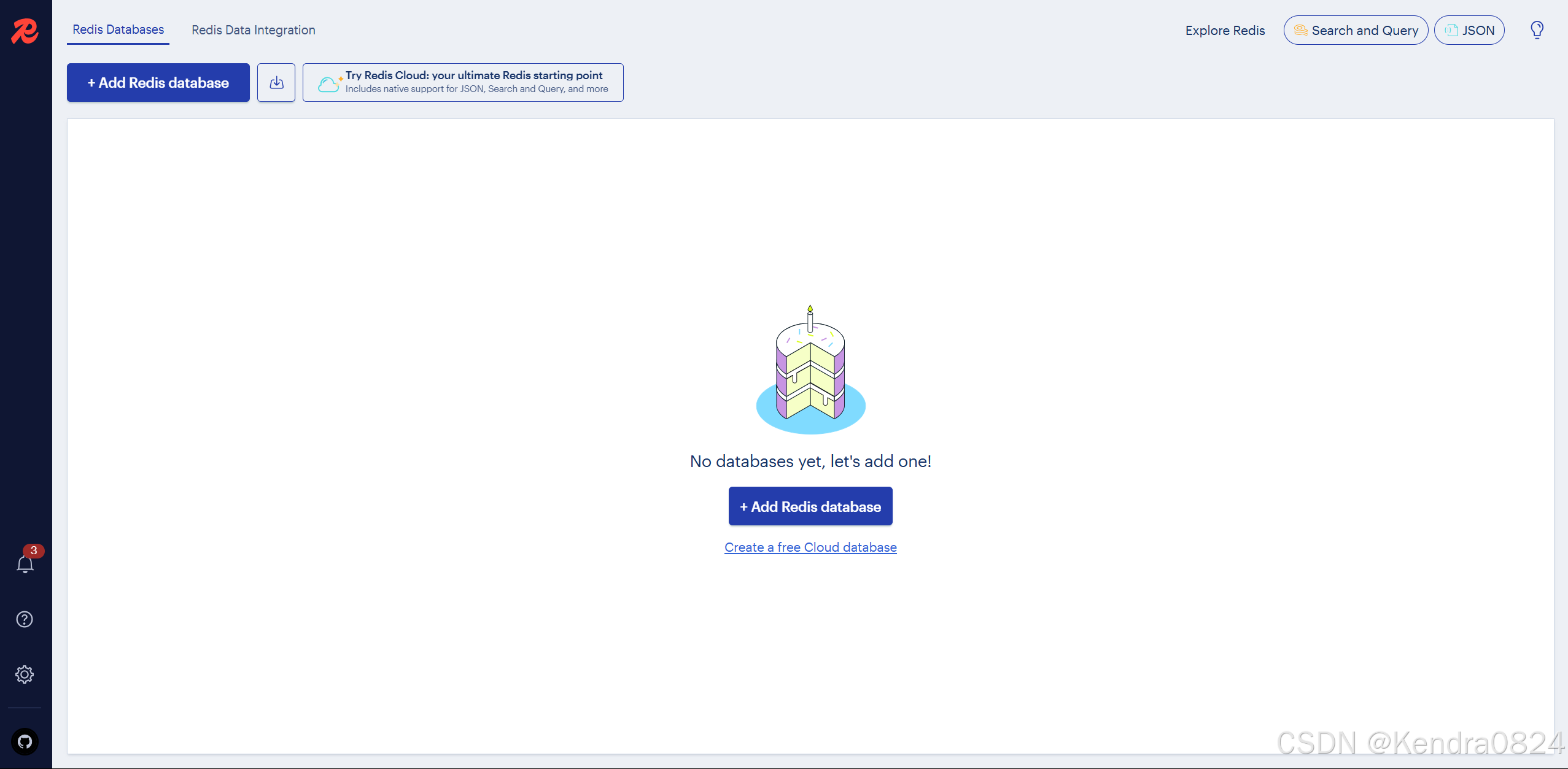

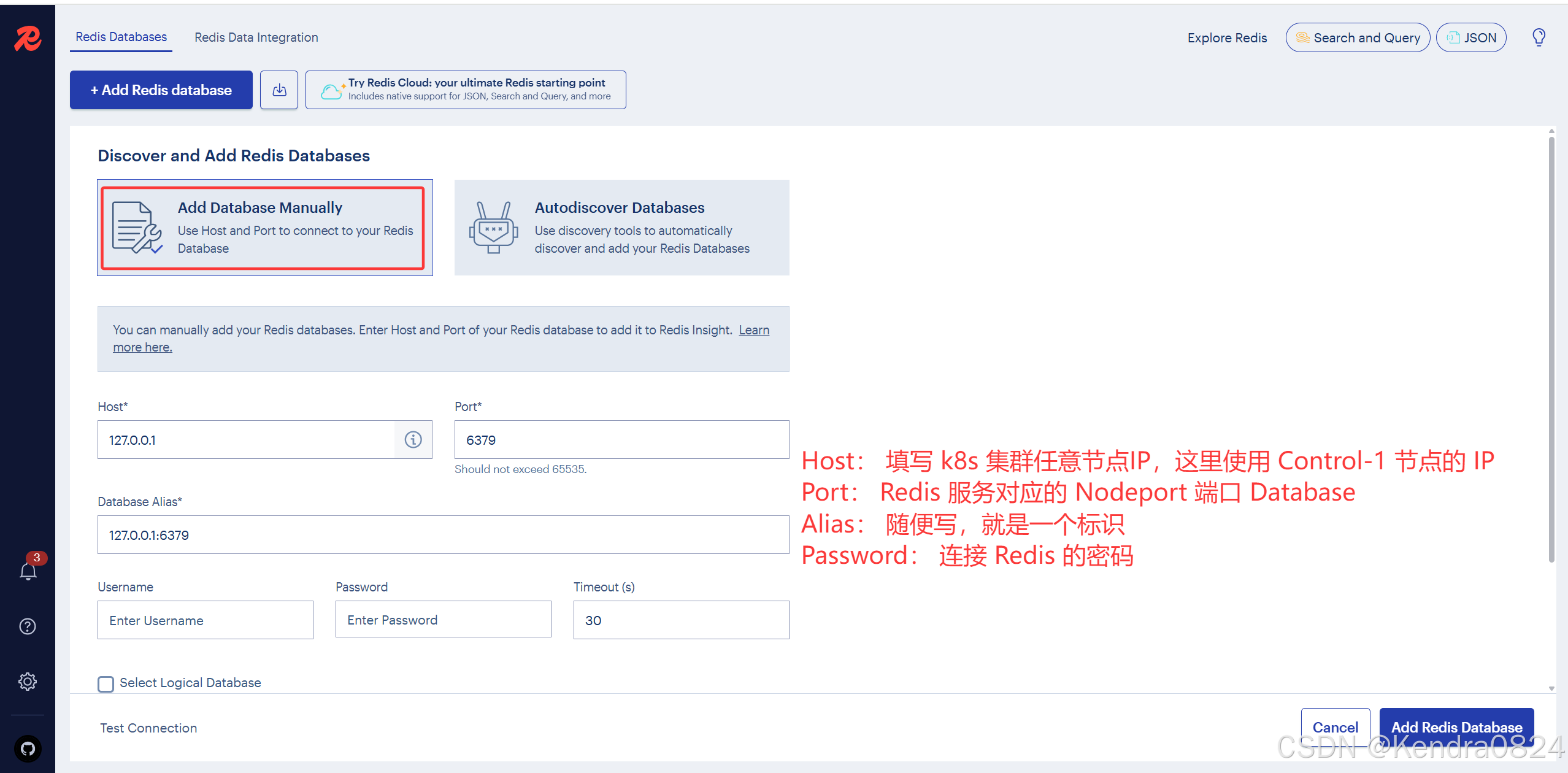

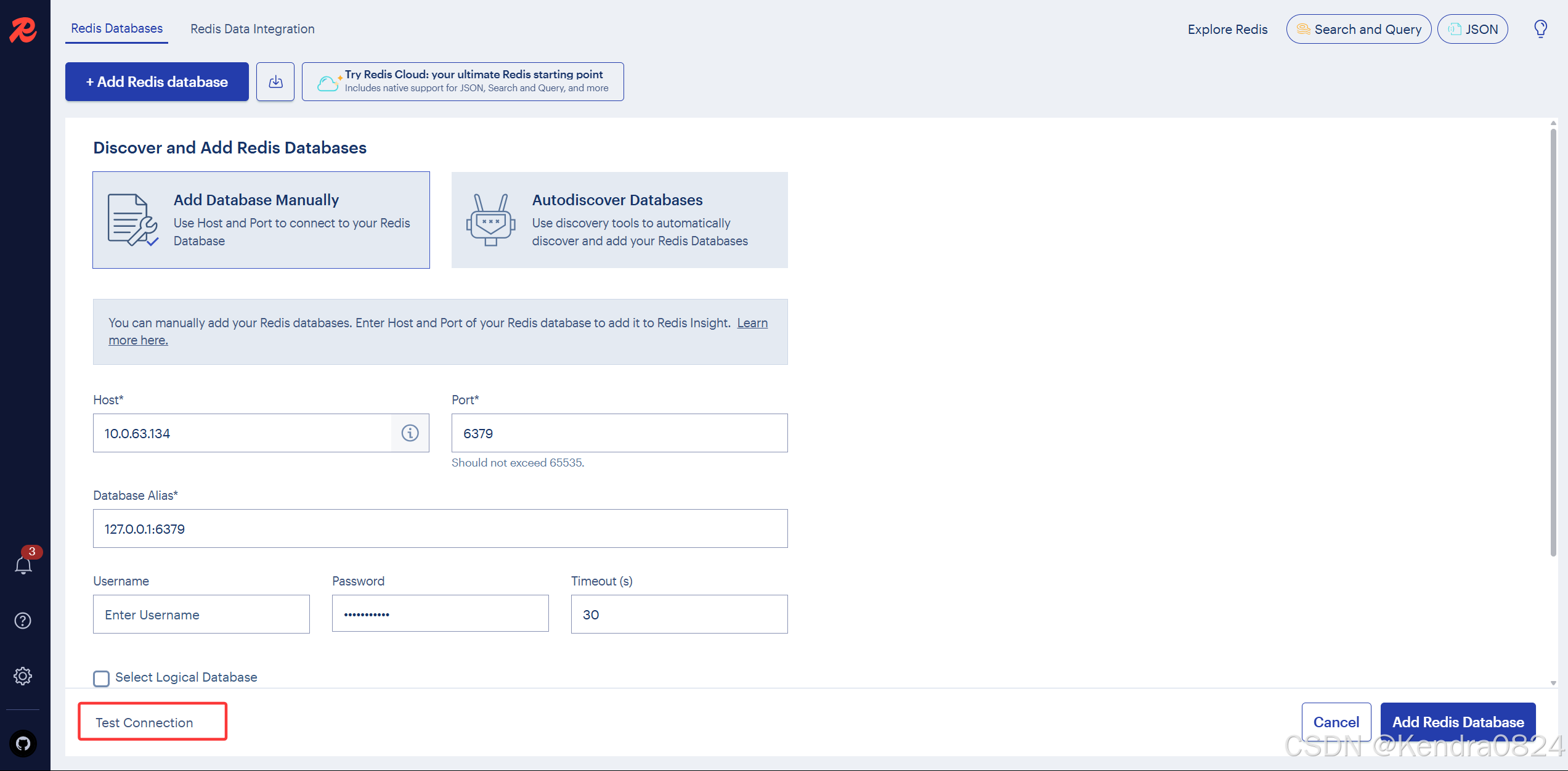

Add a Redis database: click "Add Redis database".

Choose "Add Database Manually" and fill in the fields as prompted:

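- Host: the IP of any node in the k8s cluster; the IP of the Control-1 node is used here

- Port: the NodePort of the Redis Service service/redis-cluster-external, which is 31379 here (6379:31379/TCP)

- Database Alias: any name you like; it is only a label

- Password: the password used to connect to Redis (Chengke2025, as set by requirepass in the ConfigMap)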

Click "Test Connection" to verify that Redis can be reached. Once the test succeeds, click "Add Redis Database".
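Since the command line is the recommended tool in production, the same connectivity check can be made with `redis-cli`. `CLUSTER INFO` reports `cluster_state:ok` once all 16384 slots are served. Below is a minimal sketch that evaluates that output read from stdin; `cluster_info_ok` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read CLUSTER INFO output on stdin and succeed only if
# cluster_state is ok and all 16384 slots are assigned.
cluster_info_ok() {
  awk -F: '
    { sub(/\r$/, "") }                     # strip CR from CRLF line endings
    $1 == "cluster_state"          { state = $2 }
    $1 == "cluster_slots_assigned" { assigned = $2 + 0 }
    END { if (state == "ok" && assigned == 16384) exit 0; exit 1 }
  '
}
```

For example: `redis-cli -h 192.168.86.11 -p 31379 -a Chengke2025 cluster info | cluster_info_ok && echo healthy`.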

Console overview

On the Redis Databases list page, click the newly added database to open its management page:

- Workbench (for running Redis management commands)

- Analytics

- Pub-Sub
