First, convert the YOLOv8/YOLOv11 `.pt` weights file to an ONNX file:

from ultralytics import YOLO

model = YOLO("best.pt")

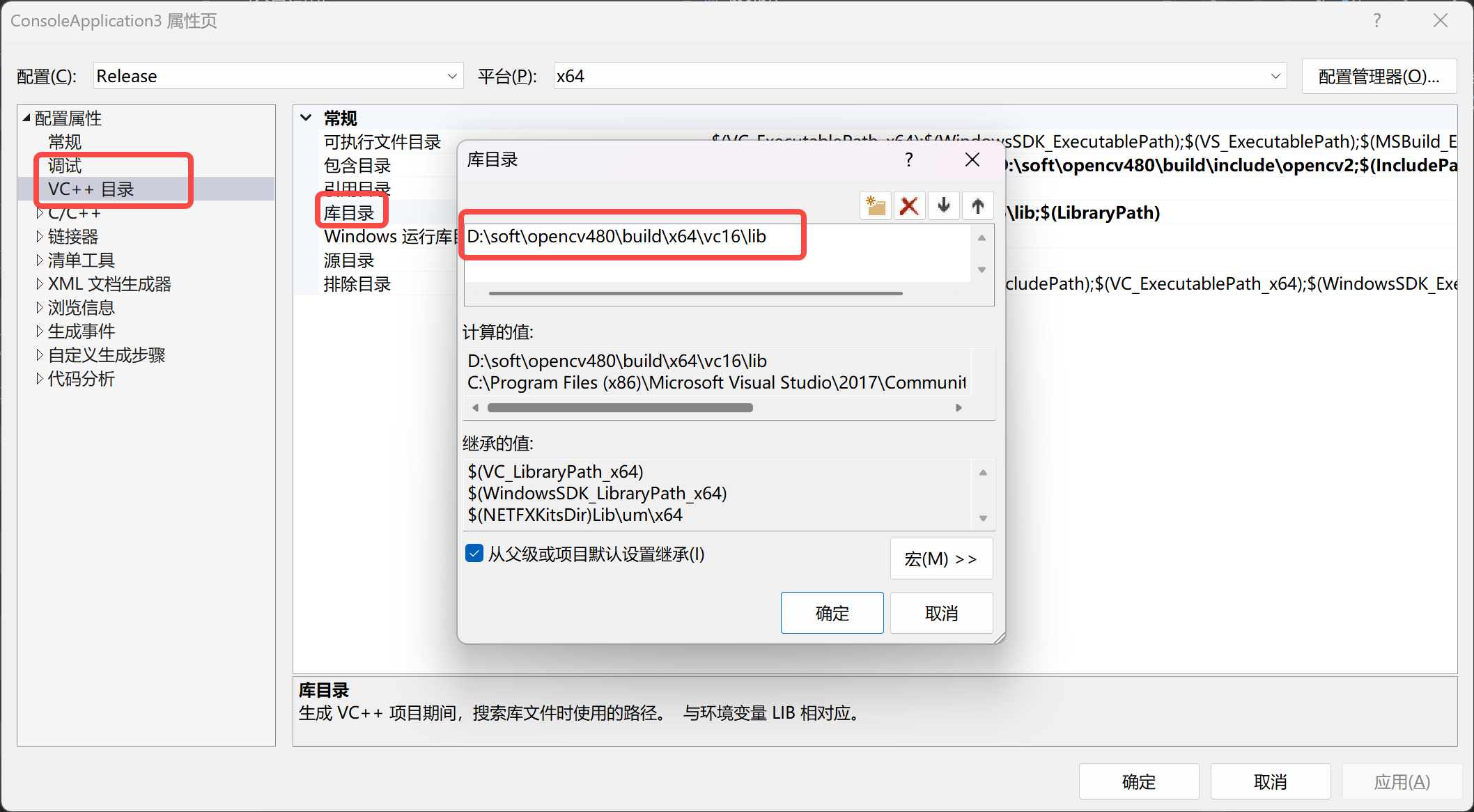

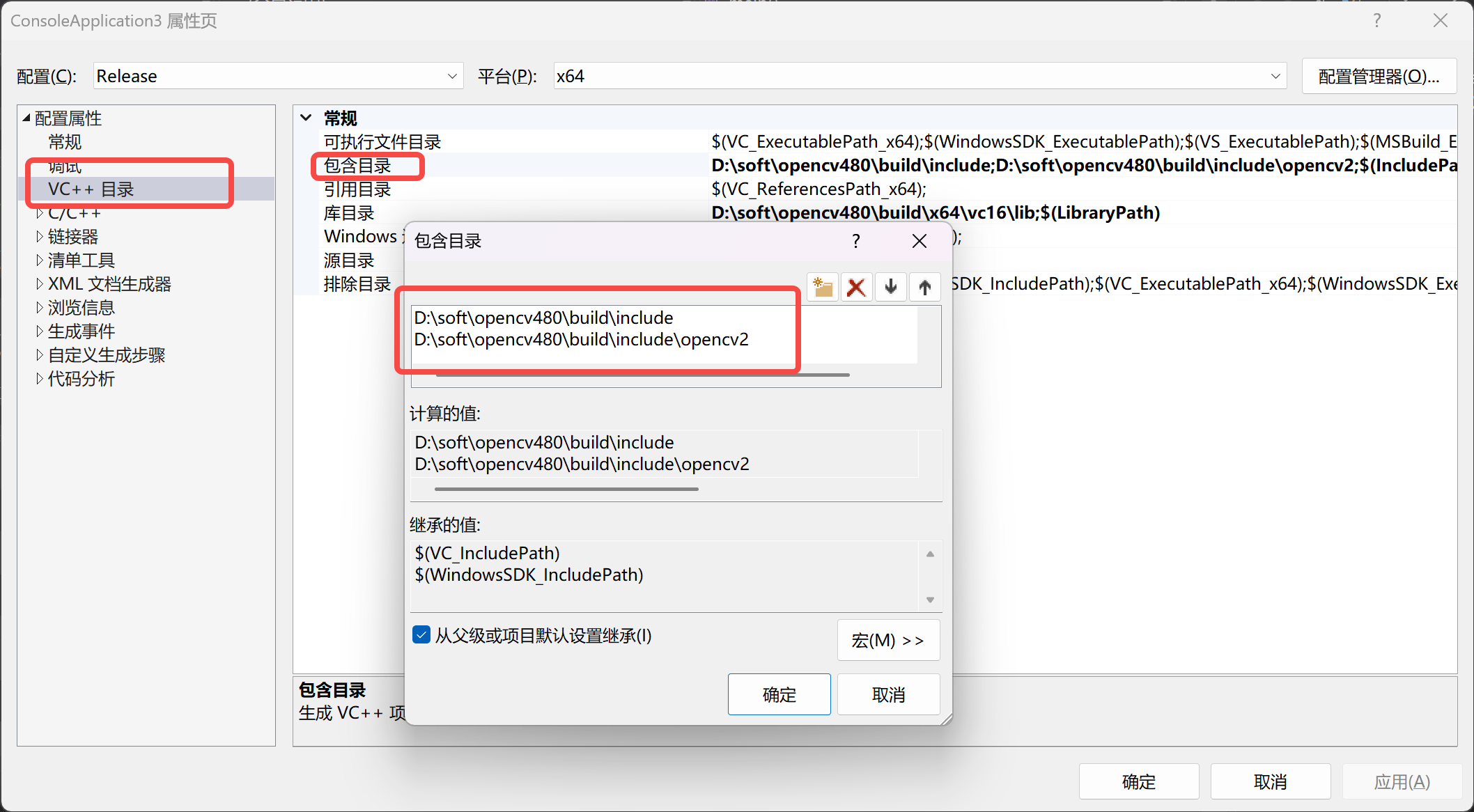

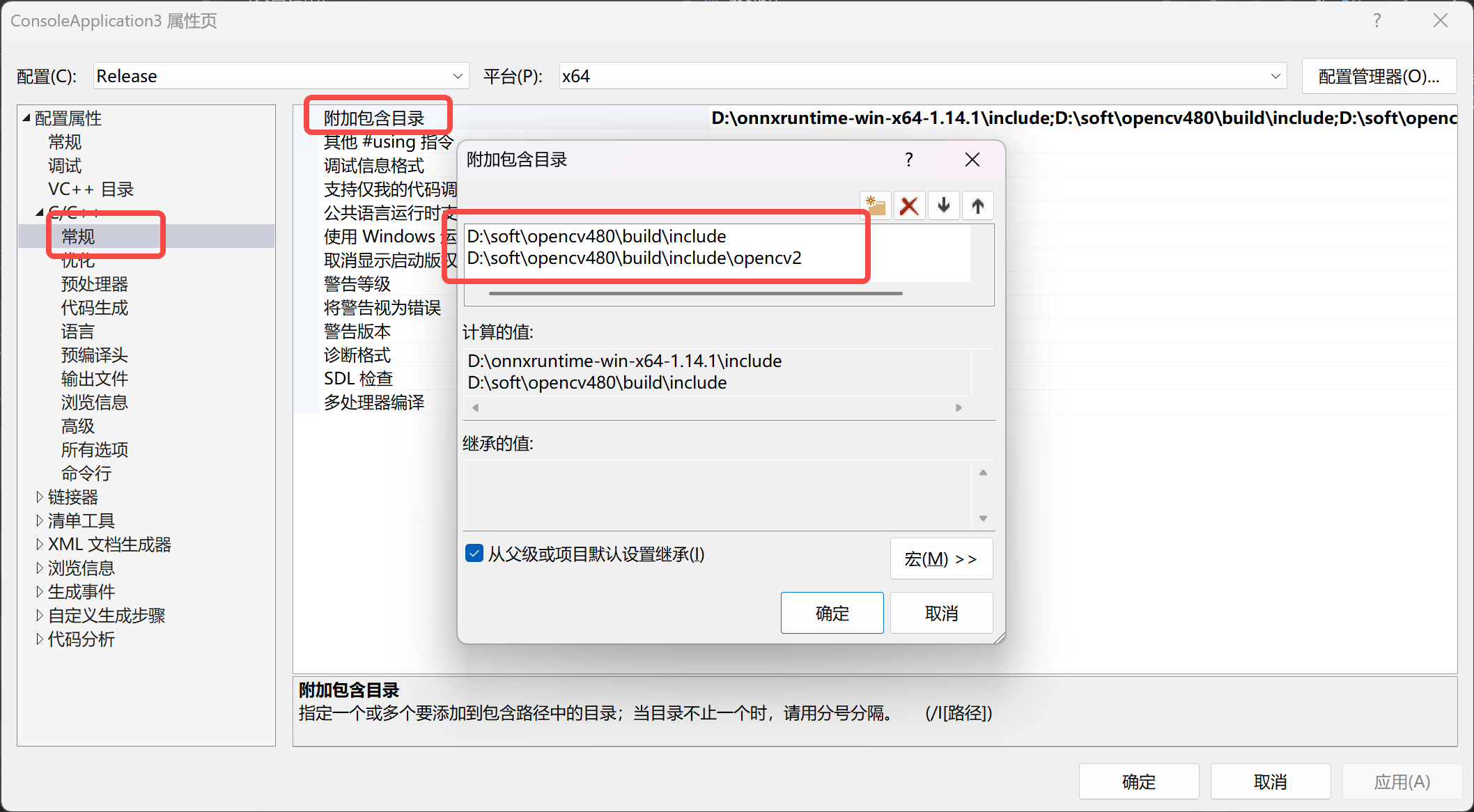

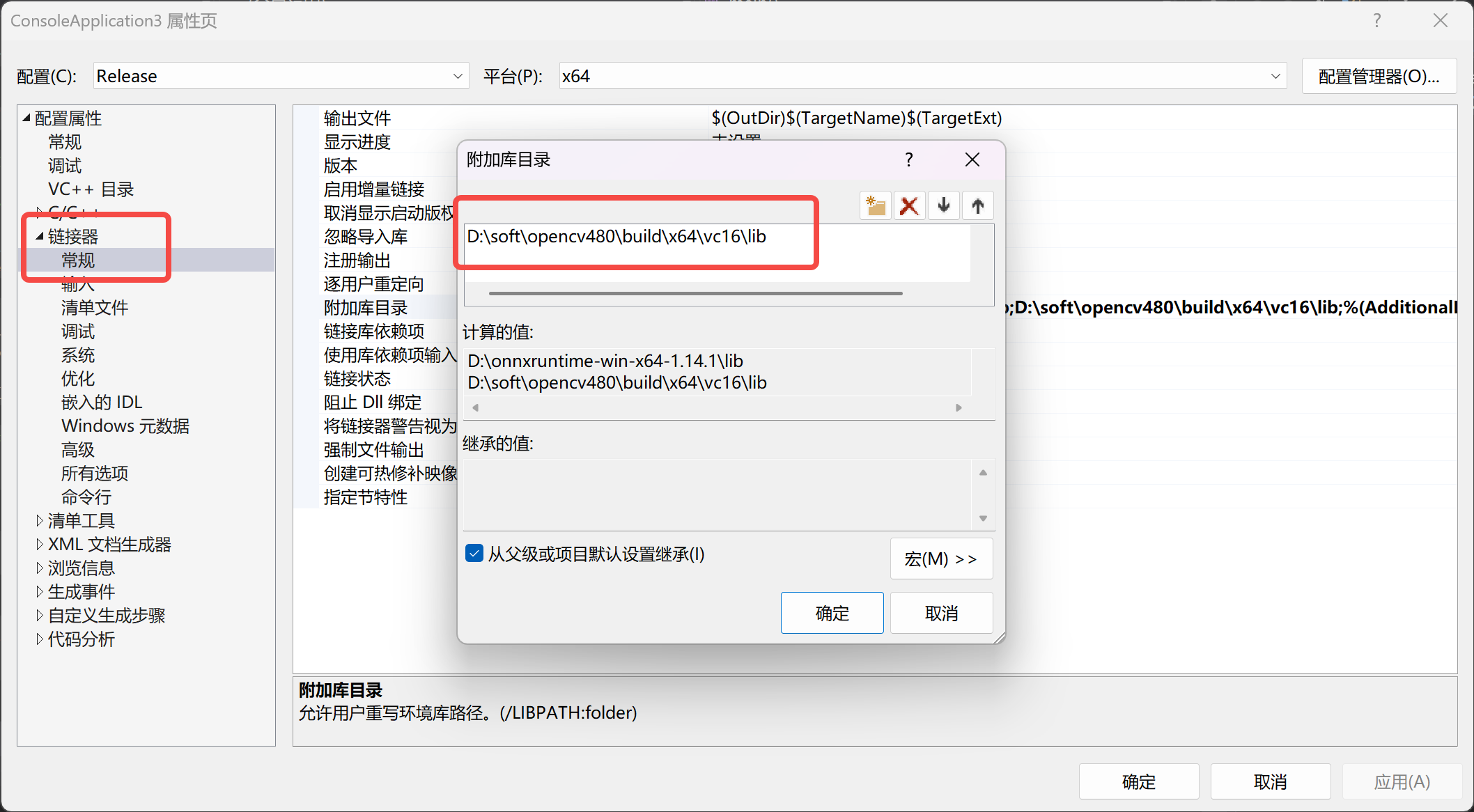

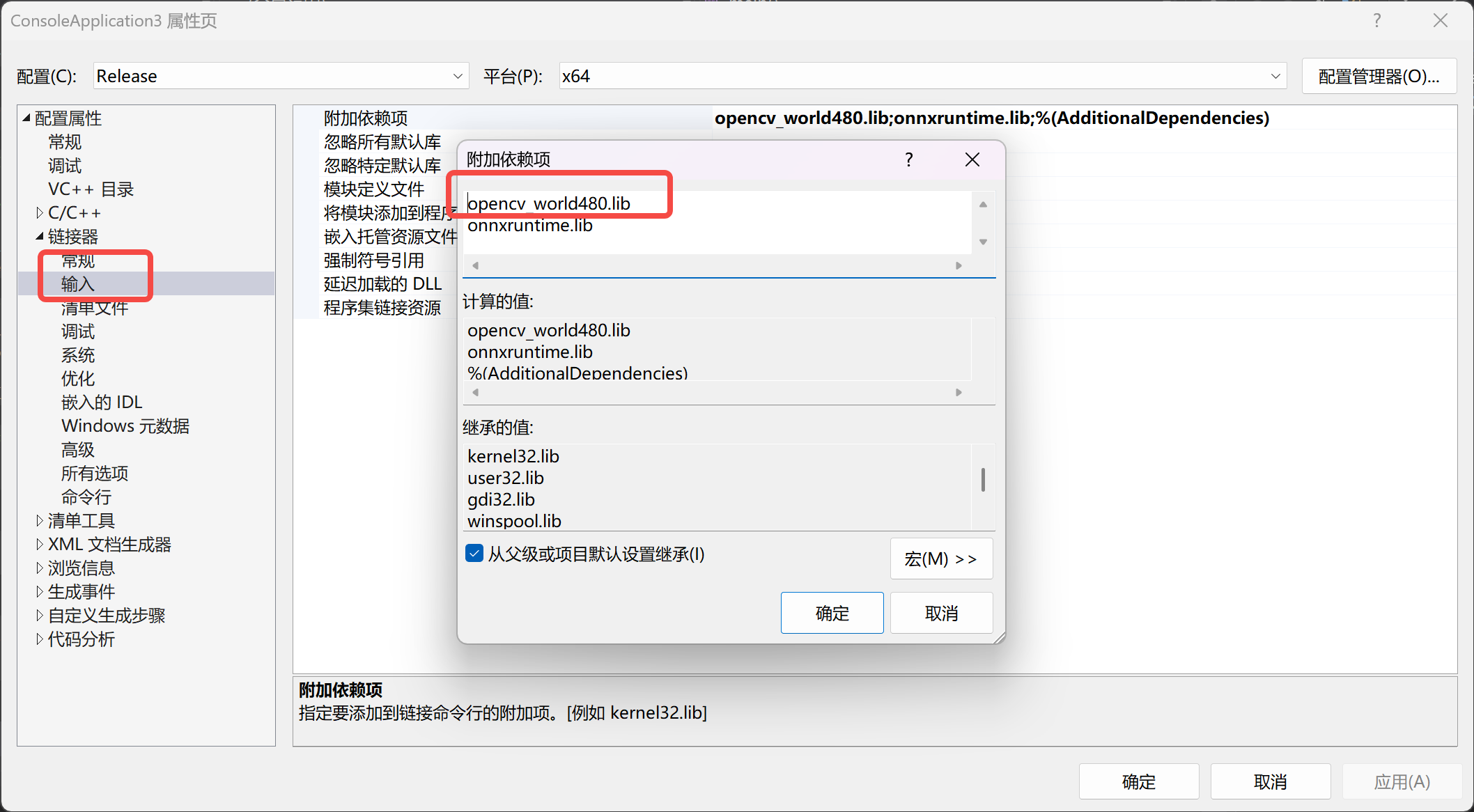

model.export(format="onnx",opset=11,dynamic=False)本次C++工具使用vs2017,需要下載OpenCV包:https://opencv.org/releases/,下在windows包即可,本次代碼opencv4.7.0和opencv4.8.0均正常運行,下載好后跟著下面的步驟進行配置。
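Because the model is exported with `dynamic=False`, the ONNX graph has a fixed input shape (assumed here to be 640x640, the Ultralytics default `imgsz`) and one detection output of shape `[1, 4 + nc, 8400]`. With the 4 classes used in the C++ code that is `[1, 8, 8400]`, the layout `postprocess()` checks for. The 8400 is one prediction per grid cell on the three detection feature maps. A small standard-C++ sketch of that arithmetic, assuming the usual strides 8, 16 and 32 (`countAnchors` and `featuresPerPrediction` are illustrative names, not library functions):

```cpp
#include <cassert>
#include <vector>

// Number of prediction rows produced for a square input of side `imgsz`,
// assuming one prediction per grid cell on feature maps of the given strides.
inline int countAnchors(int imgsz, const std::vector<int>& strides = {8, 16, 32}) {
    int total = 0;
    for (int s : strides) {
        assert(imgsz % s == 0);   // input side must be a multiple of every stride
        int cells = imgsz / s;    // grid side length at this stride
        total += cells * cells;   // one prediction per grid cell
    }
    return total;
}

// Values per prediction: 4 box values (cx, cy, w, h) plus one score per class.
inline int featuresPerPrediction(int numClasses) {
    return 4 + numClasses;
}
```

For example, `countAnchors(640)` gives 6400 + 1600 + 400 = 8400 and `featuresPerPrediction(4)` gives 8. If you export with a different `imgsz`, `inputWidth`/`inputHeight` in the C++ constructor must be changed to match, since they are hard-coded to 640.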

#include <opencv2/opencv.hpp>

#include <opencv2/dnn.hpp>

#include <iostream>

#include <vector>

#include <string>

#include <random>

#include <chrono>

#include <fstream>using namespace cv;

using namespace cv::dnn;

using namespace std;

using namespace chrono;class YOLO {

private:float confidenceThreshold;float iouThreshold;Net net;int inputHeight;int inputWidth;vector<string> classes;vector<Scalar> colors;// 初始化類別void initClasses() {classes = { "black", "cue", "solid", "stripe" };//填入你需要的類別}// 生成隨機顏色void initColors() {random_device rd;mt19937 gen(rd());uniform_int_distribution<int> dist(0, 255);for (size_t i = 0; i < classes.size(); ++i) {colors.push_back(Scalar(dist(gen), dist(gen), dist(gen)));}}public:// 構造函數YOLO(const string& onnxModelPath, float confThreshold = 0.5f, float iouThreshold = 0.5f): confidenceThreshold(confThreshold), iouThreshold(iouThreshold),inputHeight(640), inputWidth(640) { //默認640,640try {// 加載模型net = readNetFromONNX(onnxModelPath);if (net.empty()) {throw runtime_error("無法加載ONNX模型: " + onnxModelPath);}// 設置計算后端和目標設備net.setPreferableBackend(DNN_BACKEND_OPENCV);net.setPreferableTarget(DNN_TARGET_CPU);// 初始化類別和顏色initClasses();initColors();// 打印網絡信息vector<String> layerNames = net.getLayerNames();vector<String> outputNames = net.getUnconnectedOutLayersNames();cout << "模型加載成功!" 
<< endl;cout << "輸入尺寸: " << inputWidth << "x" << inputHeight << endl;cout << "網絡層數: " << layerNames.size() << endl;cout << "輸出層數: " << outputNames.size() << endl;for (size_t i = 0; i < outputNames.size(); i++) {cout << "輸出層[" << i << "]: " << outputNames[i] << endl;}}catch (const Exception& e) {cerr << "初始化YOLOv8失敗: " << e.what() << endl;throw;}}// 預處理圖像Mat preprocess(const Mat& image) {Mat blob;// 創建blob,BGR->RGB,歸一化到[0,1]blobFromImage(image, blob, 1.0 / 255.0, Size(inputWidth, inputHeight), Scalar(), true, false, CV_32F);return blob;}// 輸出張量信息用于調試void printTensorInfo(const Mat& tensor, const string& name) {cout << name << " 信息:" << endl;cout << " 維度: " << tensor.dims << endl;cout << " 形狀: [";for (int i = 0; i < tensor.dims; i++) {cout << tensor.size[i];if (i < tensor.dims - 1) cout << ", ";}cout << "]" << endl;cout << " 類型: " << tensor.type() << endl;cout << " 總元素數: " << tensor.total() << endl;}// 后處理void postprocess(const Mat& image, const vector<Mat>& outputs,vector<Rect>& boxes, vector<float>& confidences, vector<int>& classIds) {boxes.clear();confidences.clear();classIds.clear();if (outputs.empty()) {cerr << "錯誤: 模型輸出為空" << endl;return;}int imageHeight = image.rows;int imageWidth = image.cols;// 打印所有輸出的信息for (size_t i = 0; i < outputs.size(); i++) {printTensorInfo(outputs[i], "輸出[" + to_string(i) + "]");}// 獲取第一個輸出Mat output = outputs[0];// 確保輸出是浮點型if (output.type() != CV_32F) {output.convertTo(output, CV_32F);}int numClasses = classes.size();int numDetections = 0;int featuresPerDetection = 0;// 處理不同維度的輸出Mat processedOutput;if (output.dims == 3) {// 3維輸出: [batch, features, detections] 或 [batch, detections, features]int dim1 = output.size[1];int dim2 = output.size[2];cout << "處理3維輸出: [" << output.size[0] << ", " << dim1 << ", " << dim2 << "]" << endl;// 判斷格式if (dim1 == numClasses + 4) {// 格式: [1, 8, 8400] -> 轉換為 [8400, 8]numDetections = dim2;featuresPerDetection = dim1;processedOutput = Mat::zeros(numDetections, featuresPerDetection, CV_32F);// 手動轉置數據for (int 
i = 0; i < numDetections; i++) {for (int j = 0; j < featuresPerDetection; j++) {// 安全地訪問3D張量數據const float* data = output.ptr<float>(0);int index = j * numDetections + i;processedOutput.at<float>(i, j) = data[index];}}}else if (dim2 == numClasses + 4) {// 格式: [1, 8400, 8] -> 直接重塑為 [8400, 8]numDetections = dim1;featuresPerDetection = dim2;// 創建2D視圖processedOutput = Mat(numDetections, featuresPerDetection, CV_32F,(void*)output.ptr<float>(0));}else {cerr << "無法識別的3D輸出格式" << endl;return;}}else if (output.dims == 2) {// 2維輸出: [detections, features]cout << "處理2維輸出: [" << output.size[0] << ", " << output.size[1] << "]" << endl;numDetections = output.size[0];featuresPerDetection = output.size[1];processedOutput = output;}else {cerr << "不支持的輸出維度: " << output.dims << endl;return;}cout << "處理格式: " << numDetections << " 個檢測, 每個 " << featuresPerDetection << " 個特征" << endl;// 檢查特征數量是否正確if (featuresPerDetection != numClasses + 4) {cerr << "警告: 特征數量(" << featuresPerDetection << ")與期望值(" << numClasses + 4 << ")不匹配" << endl;}float x_factor = float(imageWidth) / float(inputWidth);float y_factor = float(imageHeight) / float(inputHeight);// 處理每個檢測for (int i = 0; i < numDetections; ++i) {const float* detection = processedOutput.ptr<float>(i);// 前4個值是邊界框坐標 [cx, cy, w, h]float cx = detection[0];float cy = detection[1];float w = detection[2];float h = detection[3];// 找到最高分的類別float maxScore = 0;int classId = -1;int availableClasses = min(numClasses, featuresPerDetection - 4);for (int j = 0; j < availableClasses; ++j) {float score = detection[4 + j];if (score > maxScore) {maxScore = score;classId = j;}}// 過濾低置信度if (maxScore > confidenceThreshold && classId >= 0 && classId < numClasses) {// 轉換坐標:中心點坐標轉換為左上角坐標float x1 = (cx - w / 2) * x_factor;float y1 = (cy - h / 2) * y_factor;float width = w * x_factor;float height = h * y_factor;// 確保邊界框在圖像范圍內x1 = max(0.0f, x1);y1 = max(0.0f, y1);width = min(width, float(imageWidth) - x1);height = min(height, float(imageHeight) - y1);if (width > 0 && height 
> 0) {boxes.push_back(Rect(int(x1), int(y1), int(width), int(height)));confidences.push_back(maxScore);classIds.push_back(classId);}}}cout << "NMS前檢測到 " << boxes.size() << " 個候選框" << endl;// 非極大值抑制vector<int> indices;if (!boxes.empty()) {NMSBoxes(boxes, confidences, confidenceThreshold, iouThreshold, indices);}// 應用NMS結果vector<Rect> tempBoxes;vector<float> tempConfidences;vector<int> tempClassIds;for (int i : indices) {tempBoxes.push_back(boxes[i]);tempConfidences.push_back(confidences[i]);tempClassIds.push_back(classIds[i]);}boxes = tempBoxes;confidences = tempConfidences;classIds = tempClassIds;cout << "NMS后保留 " << boxes.size() << " 個檢測框" << endl;}// 繪制檢測結果void drawDetections(Mat& image, const vector<Rect>& boxes,const vector<float>& confidences, const vector<int>& classIds) {for (size_t i = 0; i < boxes.size(); ++i) {Rect box = boxes[i];int classId = classIds[i];if (classId >= 0 && classId < colors.size()) {Scalar color = colors[classId];// 繪制邊界框rectangle(image, box, color, 2);// 繪制類別和置信度string label = classes[classId] + ": " +to_string(int(confidences[i] * 100)) + "%";// 計算文本尺寸int baseline;Size textSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseline);// 繪制文本背景rectangle(image,Point(box.x, box.y - textSize.height - 10),Point(box.x + textSize.width, box.y),color, FILLED);// 繪制文本putText(image, label, Point(box.x, box.y - 5),FONT_HERSHEY_SIMPLEX, 0.5, Scalar(255, 255, 255), 1);}}}// 執行檢測 void detect(Mat& image, Mat& resultImage,vector<Rect>& boxes, vector<float>& confidences, vector<int>& classIds) {try {// 預處理cout << "開始預處理..." << endl;Mat blob = preprocess(image);cout << "預處理完成: [" << blob.size[0] << ", " << blob.size[1]<< ", " << blob.size[2] << ", " << blob.size[3] << "]" << endl;// 設置輸入net.setInput(blob);// 方法1: 使用簡單的forward()方法cout << "開始推理(方法1)..." 
<< endl;auto start = high_resolution_clock::now();try {Mat output = net.forward();auto end = high_resolution_clock::now();vector<Mat> outputs;outputs.push_back(output);// 計算推理時間duration<double> inferenceTime = end - start;cout << "推理完成,耗時: " << inferenceTime.count() * 1000 << " 毫秒" << endl;// 后處理cout << "開始后處理..." << endl;postprocess(image, outputs, boxes, confidences, classIds);}catch (const Exception& e1) {cout << "方法1失敗: " << e1.what() << endl;// 方法2: 使用指定輸出層名稱的forward()方法cout << "嘗試方法2..." << endl;try {vector<String> outputNames = net.getUnconnectedOutLayersNames();if (!outputNames.empty()) {cout << "使用輸出層: " << outputNames[0] << endl;start = high_resolution_clock::now();vector<Mat> outputs;net.forward(outputs, outputNames);auto end = high_resolution_clock::now();duration<double> inferenceTime = end - start;cout << "推理完成,耗時: " << inferenceTime.count() * 1000 << " 毫秒" << endl;postprocess(image, outputs, boxes, confidences, classIds);}else {throw runtime_error("無法獲取輸出層名稱");}}catch (const Exception& e2) {cout << "方法2也失敗: " << e2.what() << endl;// 方法3: 使用所有輸出層cout << "嘗試方法3..." << endl;vector<int> outLayerIds = net.getUnconnectedOutLayers();vector<String> layerNames = net.getLayerNames();vector<String> outLayerNames;for (int id : outLayerIds) {outLayerNames.push_back(layerNames[id - 1]);}start = high_resolution_clock::now();vector<Mat> outputs;net.forward(outputs, outLayerNames);auto end = high_resolution_clock::now();duration<double> inferenceTime = end - start;cout << "推理完成,耗時: " << inferenceTime.count() * 1000 << " 毫秒" << endl;postprocess(image, outputs, boxes, confidences, classIds);}}// 繪制結果resultImage = image.clone();drawDetections(resultImage, boxes, confidences, classIds);cout << "最終檢測到 " << boxes.size() << " 個目標" << endl;}catch (const Exception& e) {cerr << "檢測過程中出錯: " << e.what() << endl;resultImage = image.clone();}}

};int main() {try {// 模型和圖像路徑string onnxModelPath = "yolov8.onnx";//填入你需要的onnx權重文件string imagePath = "test.jpg";//測試圖片// 檢查文件是否存在ifstream modelFile(onnxModelPath);if (!modelFile.good()) {cerr << "錯誤: 找不到模型文件 " << onnxModelPath << endl;return -1;}// 初始化YOLOv8模型cout << "初始化YOLOv8模型..." << endl;YOLO yolo(onnxModelPath, 0.5f, 0.4f);// 讀取圖像Mat image = imread(imagePath);if (image.empty()) {cerr << "無法讀取圖像: " << imagePath << endl;return -1;}cout << "圖像尺寸: " << image.cols << "x" << image.rows << endl;// 執行檢測Mat resultImage;vector<Rect> boxes;vector<float> confidences;vector<int> classIds;yolo.detect(image, resultImage, boxes, confidences, classIds);// 顯示結果if (!resultImage.empty()) {imshow("YOLOv8 Detection", resultImage);cout << "按任意鍵繼續..." << endl;waitKey(0);// 保存結果imwrite("result.jpg", resultImage);cout << "檢測結果已保存為 result.jpg" << endl;}destroyAllWindows();return 0;}catch (const exception& e) {cerr << "程序異常: " << e.what() << endl;return -1;}

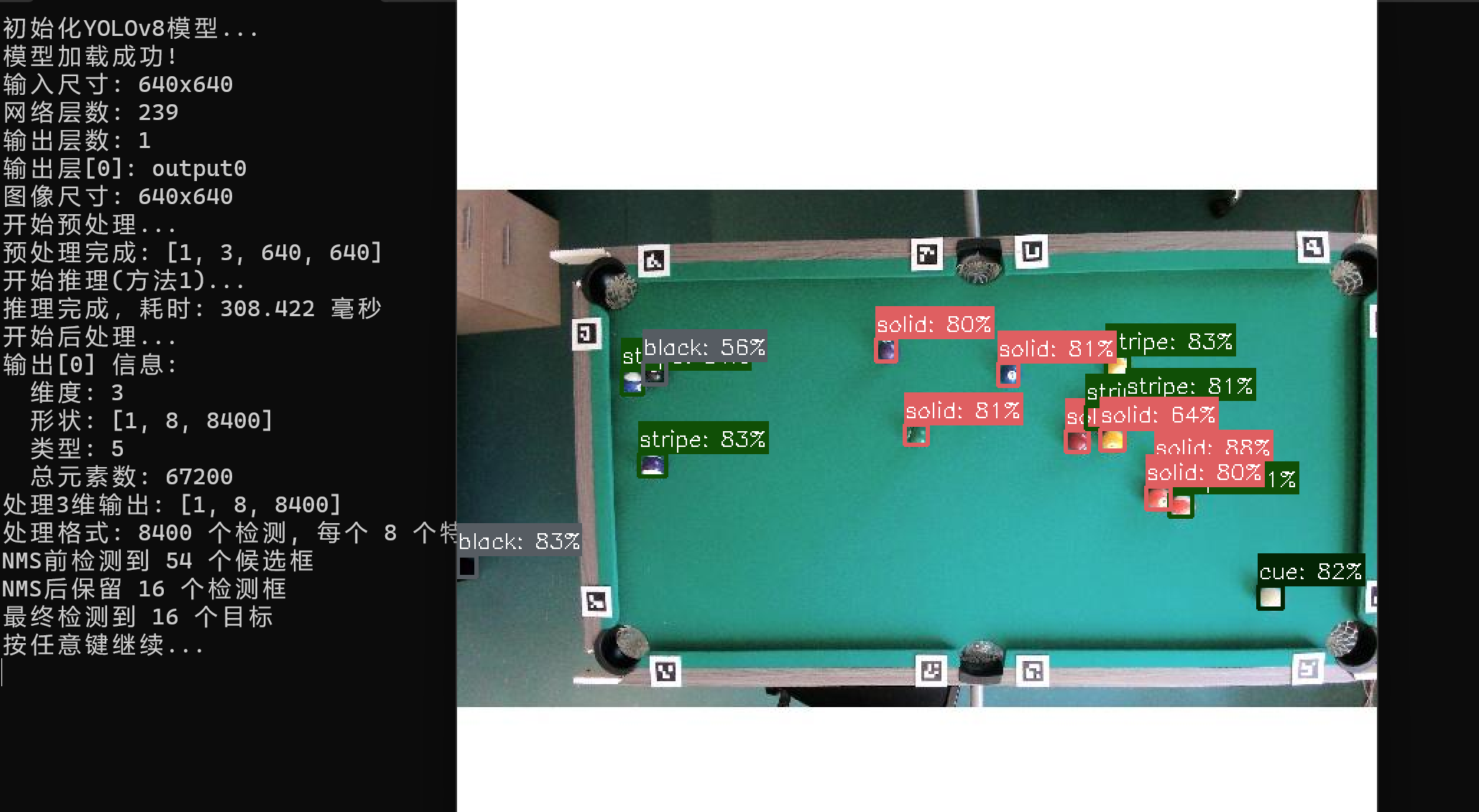

}運行結果:
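The core of `postprocess()` is reading the `[1, 4 + nc, N]` tensor column by column: feature `f` of prediction `i` sits at offset `f * N + i`, the highest class score is used as the confidence, and the center-format box is rescaled by the ratio of image size to network input size. The sketch below reproduces only that logic with `std::vector`, so it can be checked without OpenCV. `Detection` and `decode()` are hypothetical names used for illustration, and clipping to the image border is omitted.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical plain struct standing in for cv::Rect + score + class id.
struct Detection {
    float x, y, w, h;  // top-left corner and size, in original-image pixels
    float score;       // best class score
    int classId;       // index of the best class
};

// Decode a raw output stored row-major as [features, numPreds]
// (the [1, 4 + nc, N] tensor with the batch dimension dropped).
std::vector<Detection> decode(const std::vector<float>& data, int numClasses, int numPreds,
                              float xFactor, float yFactor, float confThreshold) {
    const int features = 4 + numClasses;
    assert(data.size() == static_cast<std::size_t>(features) * numPreds);
    std::vector<Detection> out;
    for (int i = 0; i < numPreds; ++i) {
        // Feature f of prediction i lives at data[f * numPreds + i].
        auto at = [&](int f) { return data[static_cast<std::size_t>(f) * numPreds + i]; };
        // Pick the class with the highest score.
        int best = -1;
        float bestScore = 0.0f;
        for (int c = 0; c < numClasses; ++c) {
            float s = at(4 + c);
            if (s > bestScore) { bestScore = s; best = c; }
        }
        if (best < 0 || bestScore <= confThreshold) continue;  // drop low-confidence rows
        // (cx, cy, w, h) in network pixels -> top-left box in image pixels.
        float cx = at(0), cy = at(1), w = at(2), h = at(3);
        out.push_back({(cx - w / 2) * xFactor, (cy - h / 2) * yFactor,
                       w * xFactor, h * yFactor, bestScore, best});
    }
    return out;
}
```

For a 1280x320 image and a 640x640 input, for instance, `xFactor` would be 2.0 and `yFactor` 0.5, matching `x_factor`/`y_factor` in the code above. Because the image is stretched rather than letterboxed, the two factors are applied independently.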

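`NMSBoxes` in the code above performs class-agnostic non-maximum suppression: boxes are visited in descending score order, and a box is dropped if its IoU with an already kept box exceeds the IoU threshold. A minimal greedy version in plain C++ is sketched below to show the mechanics. It illustrates the standard algorithm and is not OpenCV's implementation (which also accepts extra parameters such as `eta` and `top_k`); `Box`, `iou()` and `greedyNms()` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <vector>

// Axis-aligned box in top-left/size form, like cv::Rect.
struct Box { float x, y, w, h; };

// Intersection-over-union of two boxes.
inline float iou(const Box& a, const Box& b) {
    float ix = std::max(0.0f, std::min(a.x + a.w, b.x + b.w) - std::max(a.x, b.x));
    float iy = std::max(0.0f, std::min(a.y + a.h, b.y + b.h) - std::max(a.y, b.y));
    float inter = ix * iy;
    float uni = a.w * a.h + b.w * b.h - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy class-agnostic NMS: returns indices of kept boxes, highest score first.
std::vector<int> greedyNms(const std::vector<Box>& boxes, const std::vector<float>& scores,
                           float scoreThreshold, float iouThreshold) {
    assert(boxes.size() == scores.size());
    std::vector<int> order(boxes.size());
    std::iota(order.begin(), order.end(), 0);
    // Visit boxes from highest to lowest score.
    std::stable_sort(order.begin(), order.end(),
                     [&](int a, int b) { return scores[a] > scores[b]; });
    std::vector<int> keep;
    for (int idx : order) {
        if (scores[idx] <= scoreThreshold) continue;  // below the score threshold
        bool suppressed = false;
        for (int k : keep) {
            if (iou(boxes[idx], boxes[k]) > iouThreshold) { suppressed = true; break; }
        }
        if (!suppressed) keep.push_back(idx);
    }
    return keep;
}
```

Running the same function separately for each class id would give per-class suppression instead, so that, for example, overlapping "solid" and "stripe" boxes would no longer suppress each other.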