概述

已完成

- 基礎 Text2SQL 功能實現

實現用戶輸入自然語言問題后,系統能夠自動生成 SQL 并執行返回結果。 - 用戶交互優化

支持用戶通過補充信息對查詢進行調整,提升易用性。 - 模糊時間處理機制

對“最近”“近期”等模糊時間關鍵詞進行補全或引導,提升時間表達準確性。 - 預定義高頻問題與回答

對一些可預見的問題設置高質量回復,提高響應質量和效率。 - 高質量測試集

- 引入高質量的測試集來測試Text2SQL的效果與準確率

后續優化

- 構建高質量知識庫

針對數據結構、字段定義、樣例問題等,持續完善知識庫,確保 SQL 生成的準確率。 - 引入持續學習機制

通過用戶反饋和歷史數據,不斷優化模型能力與表現。 - 智能體節點,自動糾錯

- 避免現在一次性生成SQL,多次生成糾錯,提高質量

- 多路徑打分機制

- 讓模型從多個角度(或不同 Prompt/Few-shot)生成多個 SQL 候選

- 再讓模型自己“評估哪一個最優”,從而選出最靠譜的一條執行

- 用戶意圖識別,問題泛化(系統級別)

- 對專業的業務知識、以及縮寫的理解:

例如:查詢四大行信息、四大銀行信息,其中四大行和四大銀行都是簡稱,需要轉化為數據庫存儲的內容,需要做映射

- 對專業的業務知識、以及縮寫的理解:

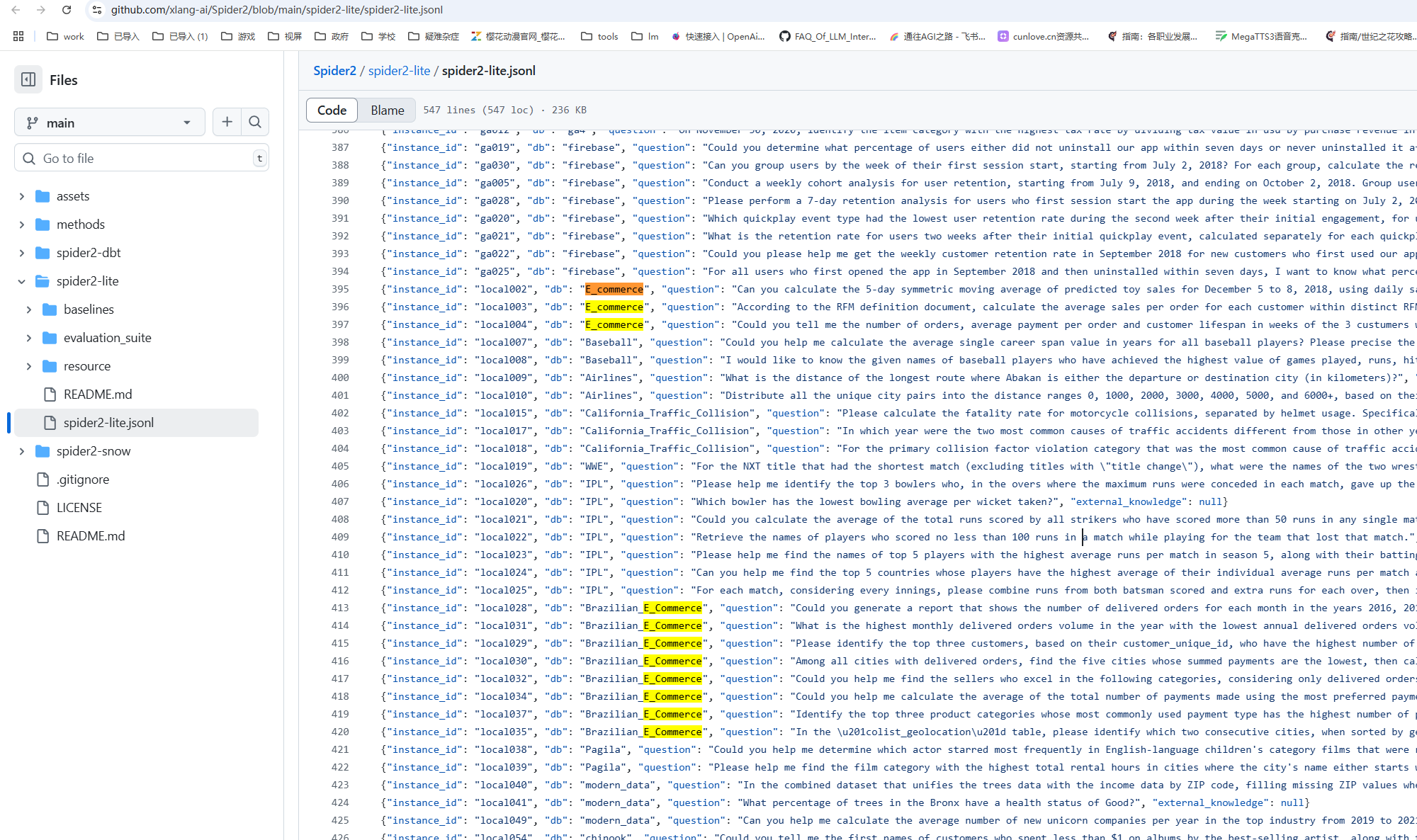

spider驗證集準備

spider驗證集github開源地址:https://github.com/xlang-ai/Spider2/blob/main/spider2-lite/spider2-lite.jsonl

- Download local database, unzip and put all the

<font style="color:rgb(31, 35, 40);background-color:rgba(129, 139, 152, 0.12);">.sqlite</font>files into directory<font style="color:rgb(31, 35, 40);background-color:rgba(129, 139, 152, 0.12);">spider2-lite/resource/databases/spider2-localdb</font>. - 寫python腳本,將.sqlite文件的內容存儲到pgsql,本次驗證集使用brazilian_E_Commerce數據庫作為測試數據庫,因此只創建和初始化brazilian_E_Commerce數據庫

創建表和數據庫

CREATE TABLE olist_customers ( customer_id TEXT, customer_unique_id TEXT, customer_zip_code_prefix BIGINT, customer_city TEXT, customer_state TEXT );CREATE TABLE olist_sellers ( seller_id TEXT, seller_zip_code_prefix BIGINT, seller_city TEXT, seller_state TEXT );CREATE TABLE olist_order_reviews ( review_id TEXT, order_id TEXT, review_score BIGINT, review_comment_title TEXT, review_comment_message TEXT, review_creation_date TEXT, review_answer_timestamp TEXT );CREATE TABLE olist_order_items ( order_id TEXT, order_item_id BIGINT, product_id TEXT, seller_id TEXT, shipping_limit_date TEXT, price FLOAT, freight_value FLOAT );CREATE TABLE olist_products ( product_id TEXT, product_category_name TEXT, product_name_lenght FLOAT, product_description_lenght FLOAT, product_photos_qty FLOAT, product_weight_g FLOAT, product_length_cm FLOAT, product_height_cm FLOAT, product_width_cm FLOAT );CREATE TABLE olist_geolocation ( geolocation_zip_code_prefix BIGINT, geolocation_lat FLOAT, geolocation_lng FLOAT, geolocation_city TEXT, geolocation_state TEXT );CREATE TABLE product_category_name_translation ( product_category_name TEXT, product_category_name_english TEXT );CREATE TABLE olist_orders ( order_id TEXT, customer_id TEXT, order_status TEXT, order_purchase_timestamp TEXT, order_approved_at TEXT, order_delivered_carrier_date TEXT, order_delivered_customer_date TEXT, order_estimated_delivery_date TEXT );CREATE TABLE olist_order_payments ( order_id TEXT, payment_sequential BIGINT, payment_type TEXT, payment_installments BIGINT, payment_value FLOAT );CREATE TABLE olist_products_dataset ( index BIGINT, product_id TEXT, product_category_name TEXT, product_name_lenght FLOAT, product_description_lenght FLOAT, product_photos_qty FLOAT, product_weight_g FLOAT, product_length_cm FLOAT, product_height_cm FLOAT, product_width_cm FLOAT );

python腳本將sqlite數據存儲到pgsql

import sqlite3

import psycopg2

from psycopg2.extensions import ISOLATION_LEVEL_AUTOCOMMITSQLITE_FILE = "Brazilian_E_Commerce.sqlite"

PG_HOST = "127.0.0.1"

PG_PORT = 5432

PG_USER = "postgres"

PG_PASSWORD = "difyai123456"

PG_DB_NAME = "brazilian_e_commerce"

BATCH_SIZE = 1000 # 每批插入多少行def create_postgres_database():conn = psycopg2.connect(dbname='postgres', user=PG_USER, password=PG_PASSWORD,host=PG_HOST, port=PG_PORT)conn.set_isolation_level(ISOLATION_LEVEL_AUTOCOMMIT)cur = conn.cursor()cur.execute(f"SELECT 1 FROM pg_database WHERE datname = '{PG_DB_NAME}';")if not cur.fetchone():cur.execute(f"CREATE DATABASE {PG_DB_NAME};")print(f" 數據庫 {PG_DB_NAME} 已創建")else:print(f"數據庫 {PG_DB_NAME} 已存在")cur.close()conn.close()# ---------- 連接 SQLite 和 PostgreSQL ----------

sqlite_conn = sqlite3.connect(SQLITE_FILE)

sqlite_cursor = sqlite_conn.cursor()create_postgres_database()pg_conn = psycopg2.connect(dbname=PG_DB_NAME, user=PG_USER, password=PG_PASSWORD,host=PG_HOST, port=PG_PORT

)

pg_cursor = pg_conn.cursor()# ---------- 獲取 SQLite 所有表 ----------

sqlite_cursor.execute("SELECT name FROM sqlite_master WHERE type='table';")

tables = [row[0] for row in sqlite_cursor.fetchall()]# ---------- 遍歷所有表 ----------

for table in tables:print(f"\n 正在遷移表:{table}")# 獲取字段信息sqlite_cursor.execute(f"PRAGMA table_info({table})")columns_info = sqlite_cursor.fetchall()col_names = [col[1] for col in columns_info]placeholders = ','.join(['%s'] * len(col_names))insert_sql = f'INSERT INTO "{table}" ({", ".join(col_names)}) VALUES ({placeholders})'# 檢查目標表是否存在pg_cursor.execute("SELECT EXISTS (SELECT FROM information_schema.tables WHERE table_schema = 'public' AND table_name = %s);",(table,))exists = pg_cursor.fetchone()[0]if not exists:print(f" PostgreSQL 中未找到表 {table},請先手動建表。")continue# 分批讀取和寫入sqlite_cursor.execute(f'SELECT * FROM "{table}"')total_inserted = 0while True:batch = sqlite_cursor.fetchmany(BATCH_SIZE)if not batch:breakpg_cursor.executemany(insert_sql, batch)pg_conn.commit()total_inserted += len(batch)print(f"已導入 {total_inserted} 行...", end="\r")print(f"表 {table} 導入完成,共 {total_inserted} 行")# ---------- 清理資源 ----------

sqlite_cursor.close()

sqlite_conn.close()

pg_cursor.close()

pg_conn.close()print("\n🎉 所有表已成功遷移到 PostgreSQL!")- 關于spider問題的說明:

示例問題存儲地址

示例sql存儲地址

測試問題

{"instance_id": "local028", "db": "Brazilian_E_Commerce", "question": "Could you generate a report that shows the number of delivered orders for each month in the years 2016, 2017, and 2018? Each column represents a year, and each row represents a month", "external_knowledge": null}

{"instance_id": "local031", "db": "Brazilian_E_Commerce", "question": "What is the highest monthly delivered orders volume in the year with the lowest annual delivered orders volume among 2016, 2017, and 2018?", "external_knowledge": null}

{"instance_id": "local029", "db": "Brazilian_E_Commerce", "question": "Please identify the top three customers, based on their customer_unique_id, who have the highest number of delivered orders, and provide the average payment value, city, and state for each of these customers.", "external_knowledge": null}

{"instance_id": "local030", "db": "Brazilian_E_Commerce", "question": "Among all cities with delivered orders, find the five cities whose summed payments are the lowest, then calculate the average of their total payments and the average of their total delivered order counts.", "external_knowledge": null}

{"instance_id": "local032", "db": "Brazilian_E_Commerce", "question": "Could you help me find the sellers who excel in the following categories, considering only delivered orders: the seller with the highest number of distinct customer unique IDs, the seller with the highest profit (calculated as price minus freight value), the seller with the highest number of distinct orders, and the seller with the most 5-star ratings? For each category, please provide the seller ID and the corresponding value, labeling each row with a description of the achievement.", "external_knowledge": null}

{"instance_id": "local034", "db": "Brazilian_E_Commerce", "question": "Could you help me calculate the average of the total number of payments made using the most preferred payment method for each product category, where the most preferred payment method in a category is the one with the highest number of payments?", "external_knowledge": null}

{"instance_id": "local037", "db": "Brazilian_E_Commerce", "question": "Identify the top three product categories whose most commonly used payment type has the highest number of payments across all categories, and specify the number of payments made in each category using that payment type.", "external_knowledge": null}

{"instance_id": "local035", "db": "Brazilian_E_Commerce", "question": "In the \u201colist_geolocation\u201d table, please identify which two consecutive cities, when sorted by geolocation_state, geolocation_city, geolocation_zip_code_prefix, geolocation_lat, and geolocation_lng, have the greatest distance between them based on the difference in distance computed between each city and its immediate predecessor in that ordering.", "external_knowledge": "spherical_law.md"}

local028(通過,基本符合)

Could you generate a report that shows the number of delivered orders for each month in the years 2016, 2017, and 2018? Each column represents a year, and each row represents a month

# 生成SQL

SELECTTO_CHAR( TO_DATE( order_delivered_customer_date, 'YYYY-MM-DD' ), 'Month' ) AS MONTH,COUNT ( CASE WHEN TO_DATE( order_delivered_customer_date, 'YYYY-MM-DD' ) BETWEEN '2016-01-01' AND '2016-12-31' THEN order_id END ) AS year_2016,COUNT ( CASE WHEN TO_DATE( order_delivered_customer_date, 'YYYY-MM-DD' ) BETWEEN '2017-01-01' AND '2017-12-31' THEN order_id END ) AS year_2017,COUNT ( CASE WHEN TO_DATE( order_delivered_customer_date, 'YYYY-MM-DD' ) BETWEEN '2018-01-01' AND '2018-12-31' THEN order_id END ) AS year_2018

FROMolist_orders

WHEREorder_status = 'delivered' AND order_delivered_customer_date IS NOT NULL AND TO_DATE( order_delivered_customer_date, 'YYYY-MM-DD' ) BETWEEN '2016-01-01' AND '2018-12-31'

GROUP BYTO_CHAR( TO_DATE( order_delivered_customer_date, 'YYYY-MM-DD' ), 'Month' )

ORDER BYTO_DATE( TO_CHAR( TO_DATE( order_delivered_customer_date, 'YYYY-MM-DD' ), 'Month' ), 'Month' );

分析

TO_CHAR(..., 'Month') 的問題

- 返回的是字符串,比如

'January '(注意:固定長度,右側填充空格); - 導致排序不準:字符串

'April '會比'February '排前面。

優化版本

SELECTTO_CHAR(order_delivered_customer_date, 'MM') AS month_num,TO_CHAR(order_delivered_customer_date, 'Month') AS month_name,COUNT(CASE WHEN EXTRACT(YEAR FROM order_delivered_customer_date) = 2016 THEN order_id END) AS year_2016,COUNT(CASE WHEN EXTRACT(YEAR FROM order_delivered_customer_date) = 2017 THEN order_id END) AS year_2017,COUNT(CASE WHEN EXTRACT(YEAR FROM order_delivered_customer_date) = 2018 THEN order_id END) AS year_2018

FROM olist_orders

WHERE order_status = 'delivered'AND order_delivered_customer_date BETWEEN '2016-01-01' AND '2018-12-31'

GROUP BY month_num, month_name

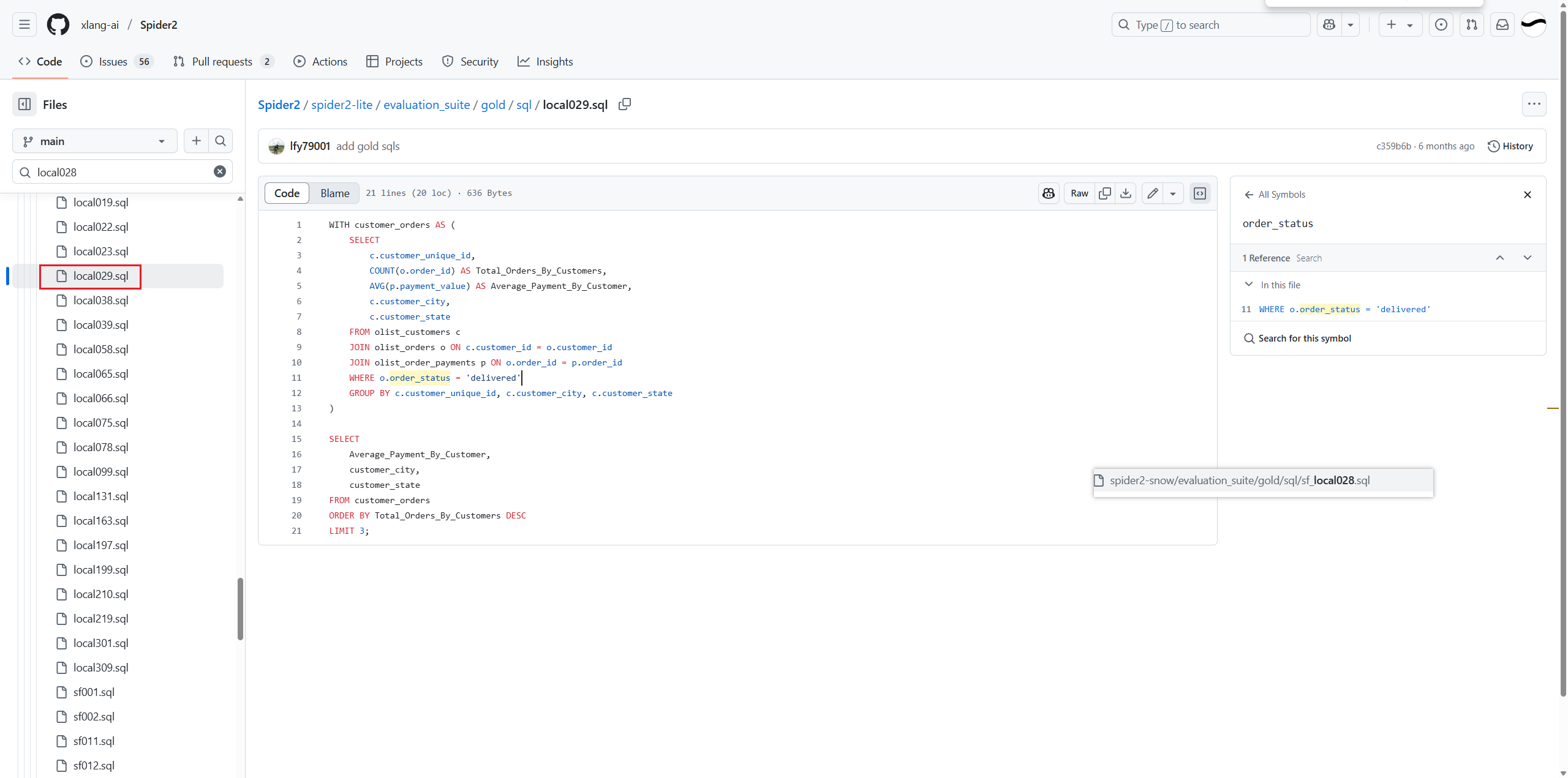

ORDER BY month_num;local029(通過-完全符合)

Please identify the top three customers, based on their customer_unique_id, who have the highest number of delivered orders, and provide the average payment value, city, and state for each of these customers.

# 預期SQL

WITH customer_orders AS (SELECTc.customer_unique_id,COUNT(o.order_id) AS Total_Orders_By_Customers,AVG(p.payment_value) AS Average_Payment_By_Customer,c.customer_city,c.customer_stateFROM olist_customers cJOIN olist_orders o ON c.customer_id = o.customer_idJOIN olist_order_payments p ON o.order_id = p.order_idWHERE o.order_status = 'delivered'GROUP BY c.customer_unique_id, c.customer_city, c.customer_state

)SELECT Average_Payment_By_Customer,customer_city,customer_state

FROM customer_orders

ORDER BY Total_Orders_By_Customers DESC

LIMIT 3;# 模型生成SQL

SELECT C.customer_unique_id,COUNT ( o.order_id ) AS delivered_orders_count,ROUND( AVG ( CAST ( P.payment_value AS NUMERIC ) ), 2 ) AS avg_payment_value,C.customer_city,C.customer_state

FROMolist_customersC JOIN olist_orders o ON C.customer_id = o.customer_idJOIN olist_order_payments P ON o.order_id = P.order_id

WHEREo.order_status = 'delivered'

GROUP BYC.customer_unique_id,C.customer_city,C.customer_state

ORDER BYdelivered_orders_count DESC LIMIT 3;

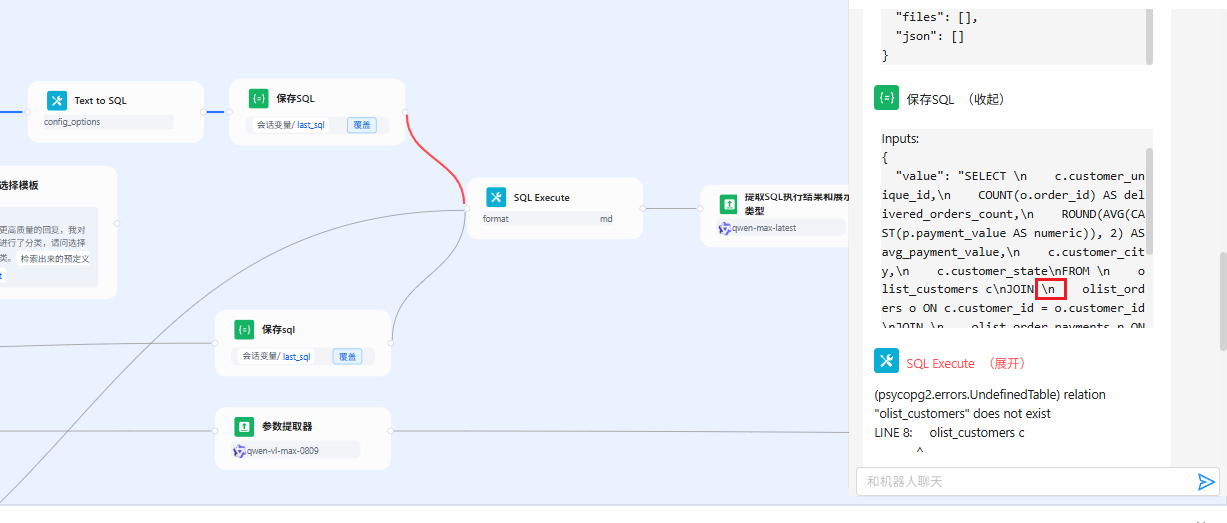

錯誤部分:

生成\n導致SQL執行出錯

local030(通過-完全符合-但是流程有小瑕疵)

Among all cities with delivered orders, find the five cities whose summed payments are the lowest, then calculate the average of their total payments and the average of their total delivered order counts.

輸出結果:有點問題,這和我寫的Prompt有點出入,看來在text2sql這個節點還是改為智能體能夠循環糾錯比較好。

Here’s the PostgreSQL query to find the five cities with the lowest summed payments for delivered orders, then calculate the averages of their total payments and delivered order counts:\n\nSELECT \n AVG(total_payment) AS avg_total_payment,\n AVG(order_count) AS avg_order_count\nFROM (\n SELECT \n c.customer_city,\n SUM(p.payment_value) AS total_payment,\n COUNT(DISTINCT o.order_id) AS order_count\n FROM olist_orders o\n JOIN olist_order_payments p ON o.order_id = p.order_id\n JOIN olist_customers c ON o.customer_id = c.customer_id\n WHERE o.order_status = ‘delivered’\n GROUP BY c.customer_city\n ORDER BY total_payment ASC\n LIMIT 5\n) AS lowest_payment_cities;\n\nNote: I noticed a potential typo in the condition - I used ‘delivered’ as the order_status value based on the context, but please verify if this is the correct status value for delivered orders in your dataset. If it should be ‘delivered’ or another value, please adjust accordingly.

# 拆解出來的SQL如下

SELECT AVG( total_payment ) AS avg_total_payment,AVG ( order_count ) AS avg_order_count

FROM(SELECT C.customer_city,SUM ( P.payment_value ) AS total_payment,COUNT ( DISTINCT o.order_id ) AS order_count FROMolist_orders oJOIN olist_order_payments P ON o.order_id = P.order_idJOIN olist_customers C ON o.customer_id = C.customer_id WHEREo.order_status = 'delivered' GROUP BYC.customer_city ORDER BYtotal_payment ASC LIMIT 5 ) AS lowest_payment_cities;

分析

需要再text2sql的時候顯示指出不允許生成解釋信息的提示

local031(語法報錯-已解決)

What is the highest monthly delivered orders volume in the year with the lowest annual delivered orders volume among 2016, 2017, and 2018?

WITH annual_orders AS (SELECT EXTRACT( YEAR FROM CAST ( order_delivered_customer_date AS DATE ) ) AS YEAR,COUNT ( * ) AS annual_count FROMolist_orders WHEREorder_status = 'delivered' AND EXTRACT ( YEAR FROM CAST ( order_delivered_customer_date AS DATE ) ) IN ( 2016, 2017, 2018 ) GROUP BYEXTRACT ( YEAR FROM CAST ( order_delivered_customer_date AS DATE ) ) SELECT EXTRACT( MONTH FROM CAST ( o.order_delivered_customer_date AS DATE ) ) AS MONTH,COUNT ( * ) AS monthly_count FROMolist_orders o WHEREorder_status = 'delivered' AND EXTRACT ( YEAR FROM CAST ( o.order_delivered_customer_date AS DATE ) ) = ( SELECT YEAR FROM annual_orders ORDER BY annual_count ASC LIMIT 1 ) GROUP BYEXTRACT ( MONTH FROM CAST ( o.order_delivered_customer_date AS DATE ) ) ORDER BYmonthly_count DESC LIMIT 1;

解決方案:增加一個校驗LLM

## 角色定義:

你是一個 SQL 質量審查助手,擅長根據用戶提出的自然語言問題,判斷其對應 SQL 語句是否準確實現了意圖,并在不吻合時對SQL進行修改,修改成吻合用戶意圖的SQL, 最終輸出為可執行SQL, 不需要任何解釋。你使用的SQL引擎是 環境變量 / sql_engine_type ;## 輸入內容:

自然語言問題(用戶需求): 會話變量 / last_question ;

用戶提供的 SQL 語句: Text to SQL / text ;

? 任務目標

你需要:

1. 判斷 SQL 是否完整且準確地實現了自然語言問題中的要求;

2. 找出不匹配的部分或潛在缺陷(如缺失的篩選條件、錯誤的聚合邏輯、多字段誤用等);

3. 如果發現問題,提供一份優化后的可直接執行的 SQL ,不需要任何解釋;

4. 如果沒有問題,則原樣輸出SQL;

5. 性能優化,如果有多余的join可以嘗試去除,優化SQL執行效率;

WITH YearlyDeliveries AS (SELECT EXTRACT( YEAR FROM order_delivered_customer_date :: TIMESTAMP ) AS YEAR,COUNT ( * ) AS annual_delivery_count FROMolist_orders WHEREorder_status = 'delivered' AND order_delivered_customer_date IS NOT NULL AND EXTRACT ( YEAR FROM order_delivered_customer_date :: TIMESTAMP ) IN ( 2016, 2017, 2018 ) GROUP BYYEAR ),LowestYear AS ( SELECT YEAR FROM YearlyDeliveries ORDER BY annual_delivery_count ASC LIMIT 1 ),MonthlyDeliveries AS (SELECT EXTRACT( YEAR FROM order_delivered_customer_date :: TIMESTAMP ) AS YEAR,EXTRACT ( MONTH FROM order_delivered_customer_date :: TIMESTAMP ) AS MONTH,COUNT ( * ) AS monthly_delivery_count FROMolist_orders WHEREorder_status = 'delivered' AND order_delivered_customer_date IS NOT NULL AND EXTRACT ( YEAR FROM order_delivered_customer_date :: TIMESTAMP ) = ( SELECT YEAR FROM LowestYear ) GROUP BYYEAR,MONTH ) SELECT MAX( monthly_delivery_count ) AS highest_monthly_delivery_count

FROMMonthlyDeliveries;

local032(語法錯誤-已解決)

Could you help me find the sellers who excel in the following categories, considering only delivered orders: the seller with the highest number of distinct customer unique IDs, the seller with the highest profit (calculated as price minus freight value), the seller with the highest number of distinct orders, and the seller with the most 5-star ratings? For each category, please provide the seller ID and the corresponding value, labeling each row with a description of the achievement.

生成結果:

Here’s the PostgreSQL query to find the top sellers in each requested category for delivered orders:\n\nSELECT \n ‘Seller with most distinct customers’ AS achievement,\n seller_id,\n COUNT(DISTINCT customer_id) AS value\nFROM olist_order_items oi\nJOIN olist_orders o ON oi.order_id = o.order_id\nWHERE o.order_status = ‘delivered’\nGROUP BY seller_id\nORDER BY value DESC\nLIMIT 1\n\nUNION ALL\n\nSELECT \n ‘Seller with highest profit’ AS achievement,\n seller_id,\n SUM(price - freight_value) AS value\nFROM olist_order_items oi\nJOIN olist_orders o ON oi.order_id = o.order_id\nWHERE o.order_status = ‘delivered’\nGROUP BY seller_id\nORDER BY value DESC\nLIMIT 1\n\nUNION ALL\n\nSELECT \n ‘Seller with most distinct orders’ AS achievement,\n seller_id,\n COUNT(DISTINCT oi.order_id) AS value\nFROM olist_order_items oi\nJOIN olist_orders o ON oi.order_id = o.order_id\nWHERE o.order_status = ‘delivered’\nGROUP BY seller_id\nORDER BY value DESC\nLIMIT 1\n\nUNION ALL\n\nSELECT \n ‘Seller with most 5-star ratings’ AS achievement,\n seller_id,\n COUNT(*) AS value\nFROM olist_order_items oi\nJOIN olist_orders o ON oi.order_id = o.order_id\nJOIN olist_order_reviews r ON o.order_id = r.order_id\nWHERE o.order_status = ‘delivered’ AND r.review_score = 5\nGROUP BY seller_id\nORDER BY value DESC\nLIMIT 1;\n\nNote: I noticed the schema didn’t include the olist_order_reviews table which would be needed for the 5-star ratings query. You would need to provide that table’s schema for that part of the query to work. The other three queries will work with the provided schema.

SELECT'Seller with most distinct customers' AS achievement,seller_id,COUNT ( DISTINCT customer_id ) AS

VALUEFROMolist_order_items oiJOIN olist_orders o ON oi.order_id = o.order_id

WHEREo.order_status = 'delivered'

GROUP BYseller_id

ORDER BYVALUE

DESC LIMIT 1 UNION ALL

SELECT'Seller with highest profit' AS achievement,seller_id,SUM ( price - freight_value ) AS

VALUEFROMolist_order_items oiJOIN olist_orders o ON oi.order_id = o.order_id

WHEREo.order_status = 'delivered'

GROUP BYseller_id

ORDER BYVALUE

DESC LIMIT 1 UNION ALL

SELECT'Seller with most distinct orders' AS achievement,seller_id,COUNT ( DISTINCT oi.order_id ) AS

VALUEFROMolist_order_items oiJOIN olist_orders o ON oi.order_id = o.order_id

WHEREo.order_status = 'delivered'

GROUP BYseller_id

ORDER BYVALUE

DESC LIMIT 1 UNION ALL

SELECT'Seller with most 5-star ratings' AS achievement,seller_id,COUNT ( * ) AS

VALUEFROMolist_order_items oiJOIN olist_orders o ON oi.order_id = o.order_idJOIN olist_order_reviews r ON o.order_id = r.order_id

WHEREo.order_status = 'delivered' AND r.review_score = 5

GROUP BYseller_id

ORDER BYVALUE

DESC LIMIT 1

解決方案:校驗LLM

WITH distinct_customers AS (SELECTseller_id,COUNT ( DISTINCT customer_unique_id ) AS VALUEFROMolist_order_items oiJOIN olist_orders o ON oi.order_id = o.order_idJOIN olist_customers C ON o.customer_id = C.customer_id WHEREo.order_status = 'delivered' GROUP BYseller_id ORDER BYCOUNT ( DISTINCT customer_unique_id ) DESC LIMIT 1 ),highest_profit AS (SELECTseller_id,SUM ( price - freight_value ) AS VALUEFROMolist_order_items oiJOIN olist_orders o ON oi.order_id = o.order_id WHEREo.order_status = 'delivered' GROUP BYseller_id ORDER BYSUM ( price - freight_value ) DESC LIMIT 1 ),distinct_orders AS (SELECTseller_id,COUNT ( DISTINCT oi.order_id ) AS VALUEFROMolist_order_items oiJOIN olist_orders o ON oi.order_id = o.order_id WHEREo.order_status = 'delivered' GROUP BYseller_id ORDER BYCOUNT ( DISTINCT oi.order_id ) DESC LIMIT 1 ),most_5_star_ratings AS (SELECToi.seller_id,COUNT ( * ) AS VALUEFROMolist_order_items oiJOIN olist_orders o ON oi.order_id = o.order_idJOIN olist_order_reviews r ON o.order_id = r.order_id WHEREo.order_status = 'delivered' AND r.review_score = 5 GROUP BYoi.seller_id ORDER BYCOUNT ( * ) DESC LIMIT 1 ) SELECT'Highest number of distinct customers' AS achievement,seller_id,VALUEFROMdistinct_customers UNION ALL

SELECT'Highest profit' AS achievement,seller_id,VALUEFROMhighest_profit UNION ALL

SELECT'Highest number of distinct orders' AS achievement,seller_id,VALUEFROMdistinct_orders UNION ALL

SELECT'Most 5-star ratings' AS achievement,seller_id,VALUEFROMmost_5_star_ratings;

local034(語法報錯-邏輯錯誤)

Could you help me calculate the average of the total number of payments made using the most preferred payment method for each product category, where the most preferred payment method in a category is the one with the highest number of payments?

你能幫我計算每個產品類別中最常用的支付方式的支付總次數的平均值嗎?

其中,“最常用的支付方式”指的是在該類別中支付次數最多的那種支付方式。

SELECT的P應該是payment_counts表

然后最終查詢出來的AVG并不是真正的AVG

SELECT P.product_category_name,AVG ( payment_counts.total_payments ) AS avg_total_payments

FROM(SELECTop.payment_type,P.product_category_name,COUNT ( * ) AS total_payments,RANK ( ) OVER ( PARTITION BY P.product_category_name ORDER BY COUNT ( * ) DESC ) AS payment_rank FROMolist_order_payments opJOIN olist_orders o ON op.order_id = o.order_idJOIN olist_products_dataset P ON o.order_id = P.product_id GROUP BYop.payment_type,P.product_category_name ) AS payment_counts

WHEREpayment_counts.payment_rank = 1

GROUP BYP.product_category_name LIMIT 5;

解決方案:增加一個校驗LLM

SUM(op.payment_sequential):這個字段是支付的序列號,不是支付金額也不是支付次數。應該是使用 COUNT(*) 來統計支付次數。

SELECT AVG( total_payments ) AS avg_payments

FROM(SELECT P.product_category_name,SUM ( op.payment_sequential ) AS total_payments FROMolist_order_payments opJOIN olist_order_items oi ON op.order_id = oi.order_idJOIN olist_products_dataset P ON oi.product_id = P.product_idJOIN (SELECT P.product_category_name,op.payment_type,COUNT ( * ) AS payment_count,RANK ( ) OVER ( PARTITION BY P.product_category_name ORDER BY COUNT ( * ) DESC ) AS RANK FROMolist_order_payments opJOIN olist_order_items oi ON op.order_id = oi.order_idJOIN olist_products_dataset P ON oi.product_id = P.product_id GROUP BYP.product_category_name,op.payment_type ) preferred ON P.product_category_name = preferred.product_category_name AND op.payment_type = preferred.payment_type AND preferred.RANK = 1 GROUP BYP.product_category_name ) category_totals;

local035(通過-完全符合)

In the \u201colist_geolocation\u201d table, please identify which two consecutive cities, when sorted by geolocation_state, geolocation_city, geolocation_zip_code_prefix, geolocation_lat, and geolocation_lng, have the greatest distance between them based on the difference in distance computed between each city and its immediate predecessor in that ordering.

輸出內容:

WITH ordered_locations AS (SELECTgeolocation_city,geolocation_lat,geolocation_lng,LAG ( geolocation_city ) OVER ( ORDER BY geolocation_state, geolocation_city, geolocation_zip_code_prefix, geolocation_lat, geolocation_lng ) AS prev_city,LAG ( geolocation_lat ) OVER ( ORDER BY geolocation_state, geolocation_city, geolocation_zip_code_prefix, geolocation_lat, geolocation_lng ) AS prev_lat,LAG ( geolocation_lng ) OVER ( ORDER BY geolocation_state, geolocation_city, geolocation_zip_code_prefix, geolocation_lat, geolocation_lng ) AS prev_lng FROMolist_geolocation ),distance_calculations AS (SELECTgeolocation_city,prev_city,SQRT ( POWER ( geolocation_lat - prev_lat, 2 ) + POWER ( geolocation_lng - prev_lng, 2 ) ) AS distance FROMordered_locations WHEREprev_city IS NOT NULL ) SELECTprev_city AS city1,geolocation_city AS city2,distance

FROMdistance_calculations

ORDER BYdistance DESC LIMIT 1;

local037(邏輯不匹配)

Identify the top three product categories whose most commonly used payment type has the highest number of payments across all categories, and specify the number of payments made in each category using that payment type.

輸出SQL

SELECT P.product_category_name,pop.payment_type,COUNT ( * ) AS payment_count

FROMolist_order_payments popJOIN olist_order_items ooi ON pop.order_id = ooi.order_idJOIN olist_products_dataset P ON ooi.product_id = P.product_id

GROUP BYP.product_category_name,pop.payment_type

ORDER BYpayment_count DESC LIMIT 3;

分析

需求;

- 每個 product category 內,先找出最常用的 payment_type;

- 然后在所有 category 的“最常用 payment_type”中,選出支付次數最多的前 3 個 category;

- 最終輸出:這些 category 的名稱、它們各自最常用的 payment_type,以及該支付方式的支付次數。

輸出的SQL:

- 統計 每個產品類別、每種支付方式的支付次數;

- 然后選出全表中支付次數最多的前 3 個“產品類別 + 支付方式”組合。

正確的SQL應該為

WITH category_payment_counts AS (SELECT p.product_category_name,pop.payment_type,COUNT(*) AS payment_countFROMolist_order_payments popJOIN olist_order_items ooi ON pop.order_id = ooi.order_idJOIN olist_products_dataset p ON ooi.product_id = p.product_idGROUP BY p.product_category_name, pop.payment_type

),

most_used_payment_per_category AS (SELECT DISTINCT ON (product_category_name)product_category_name,payment_type,payment_countFROM category_payment_countsORDER BY product_category_name, payment_count DESC

)

SELECT product_category_name,payment_type,payment_count

FROM most_used_payment_per_category

ORDER BY payment_count DESC

LIMIT 3;總結

在本輪 Text2SQL 能力驗證中,選取了 8 個具有代表性的問題進行測試,涵蓋了常見的數據統計、聚合查詢、過濾條件、多表關聯等場景。

測試結果:

準確生成 SQL 的問題數量:6 個

生成錯誤或不符合預期的問題數量:2 個

準確率:75%

錯誤案例分析:

出錯的兩個問題均為邏輯較復雜或歧義較大的自然語言表達,主要問題包括:

問題語義不夠清晰,例如涉及多個隱含條件,模型在缺乏上下文的情況下難以準確理解用戶意圖。

推理鏈條較長,需要多步轉換或子查詢邏輯,超出了當前 LLM 在單步推理中的穩定能力范圍。

初步結論:

當前 Text2SQL 系統在常規查詢任務中具備較強的生成能力,尤其是在表結構清晰、問題意圖明確的情況下表現良好。

對于復雜語義、多層條件嵌套的問題,仍需進一步增強模型的“問題理解”和“邏輯推理”能力。

已經能夠勝任比較簡單的應用場景

)

![[自動化Adapt] 錄制引擎 | iframe 穿透 | NTP | AIOSQLite | 數據分片](http://pic.xiahunao.cn/[自動化Adapt] 錄制引擎 | iframe 穿透 | NTP | AIOSQLite | 數據分片)

)