打開anaconda prompt

conda activate pytorchpip install -i https://pypi.tuna.tsinghua.edu.cn/simple transformers datasets tokenizers

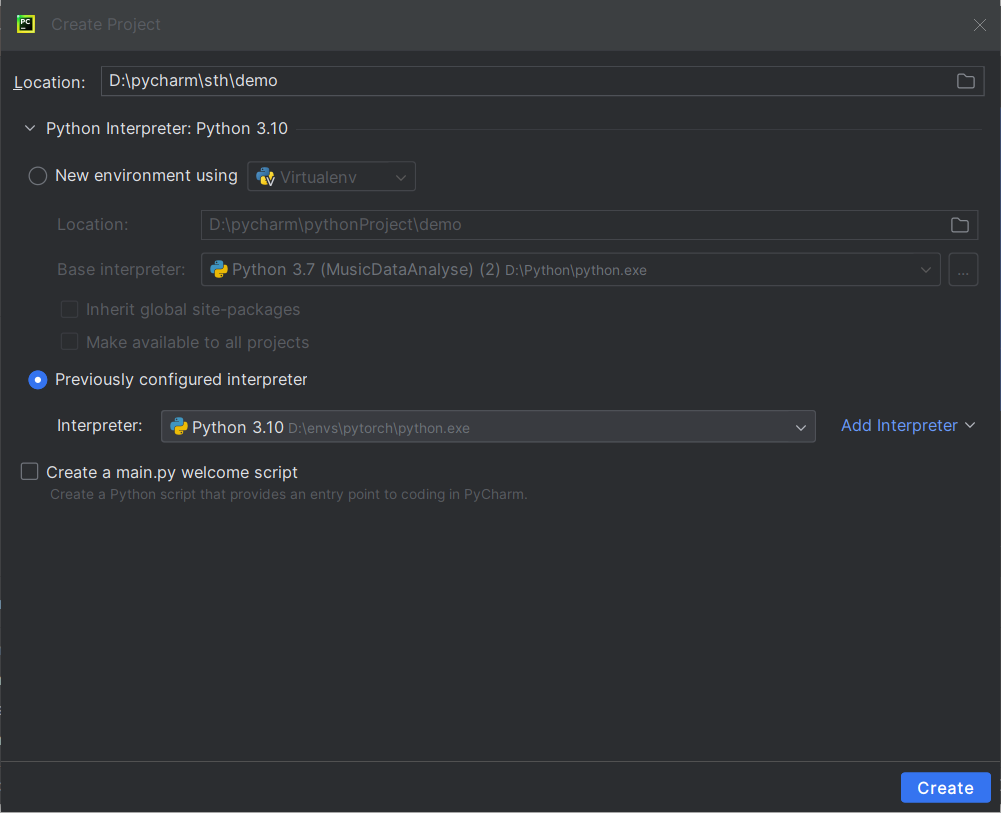

pycharm

?找到pytorch下的python.exe

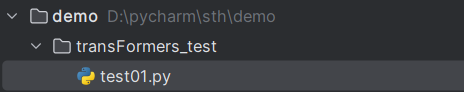

#將模型下載到本地調用 from transformers import AutoModelForCausalLM,AutoTokenizer#將模型和分詞工具下載到本地,并指定保存路徑 model_name = "uer/gpt2-chinese-cluecorpussmall" cache_dir = "model/uer/gpt2-chinese-cluecorpussmall"#下載模型 AutoModelForCausalLM.from_pretrained(model_name, cache_dir=cache_dir) #下載分詞工具 AutoTokenizer.from_pretrained(model_name,cache_dir=cache_dir)print(f"模型分詞器已下載到:{cache_dir}")

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline# 設置具體包含config.json的目錄 model_dir = r"D:\pycharm\sth\demo\transFormers_test\model\uer\gpt2-chinese-cluecorpussmall\models--uer--gpt2-chinese-cluecorpussmall\snapshots\c2c0249d8a2731f269414cc3b22dff021f8e07a3"# 將模型和分詞工具下載到本地,并指定保存路徑 model = AutoModelForCausalLM.from_pretrained(model_dir) tokenizer = AutoTokenizer.from_pretrained(model_dir)# 使用模型和分詞器創建生成文本的pipeline generator = pipeline("text-generation", model=model, tokenizer=tokenizer,device="cuda")# 生成內容 # output = generator("你好,我是一款語言模型,",max_length=50,num_return_sequences=1)output = generator("你好,我是一款語言模型,",num_return_sequences=1, # 設置返回多少個獨立的生成序列max_length=50,truncation=True,# 生成文本以適應文本最大長度temperature=0.7,# 控制文本生成的隨機性,值越高,生成多樣性越好top_k=50, # 限制模型在每一步生成時僅從概率最高的k個詞中隨機選擇下一個詞top_p=0.9, # 進一步限制模型生成時的詞匯選擇范圍,選擇一組概率累計達到p的詞匯,模型只會從這個概率集合中采樣clean_up_tokenization_spaces=True # 設置生成文本分詞時的空格是否保留 ) print(output)

from datasets import load_dataset, load_from_disk# # 在線加載 dataset = load_dataset("lansinuote/ChnSentiCorp", cache_dir="E:/DeepLearning/data") # # 保存為可本地加載的格式 save_path = "E:/DeepLearning/data/my_chn_senti_corp" # dataset.save_to_disk(save_path) # 從本地加載 loaded_dataset = load_from_disk(save_path) print(loaded_dataset)test_data = dataset["test"] print(test_data) for data in test_data:print(data)

直方圖均衡化,模板匹配,霍夫變換,圖像亮度變換,形態學變換)

)

)