1 Introduction to Kubernetes and Deployment Methods

1.1 Evolution of Application Deployment

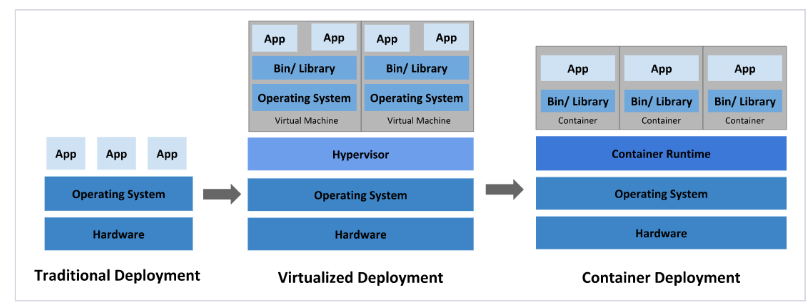

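Application deployment has gone through three main stages:

Traditional deployment: in the early days of the Internet, applications were deployed directly on physical machines.

- Pros: simple; no other technology is involved
- Cons: there is no way to set resource boundaries for applications, so compute resources are hard to allocate sensibly and applications easily interfere with one another

Virtualized deployment: several virtual machines run on one physical machine, and each VM is an independent environment.

- Pros: application environments do not affect each other, which provides a degree of security
- Cons: every VM adds a full operating system, wasting some resources

Containerized deployment: similar to virtualization, but containers share the host's operating system.

Containerized deployment brings a lot of convenience, but it also raises some problems, for example:

- If a container crashes, how can another container be started immediately to replace it?
- When concurrent traffic grows, how can the number of containers be scaled out horizontally?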

1.2 容器編排應用

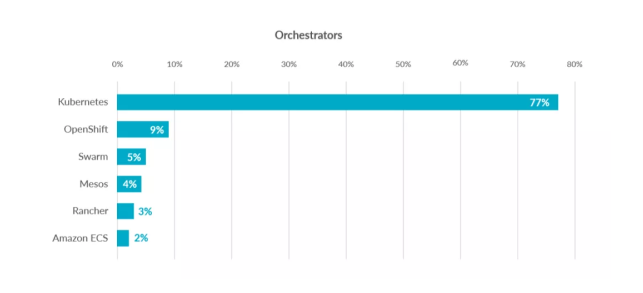

為了解決這些容器編排問題,就產生了一些容器編排的軟件:

-

Swarm:Docker自己的容器編排工具

-

Mesos:Apache的一個資源統一管控的工具,需要和Marathon結合使用

-

Kubernetes:Google開源的的容器編排工具

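1.3 Introduction to Kubernetes

- While Docker was growing rapidly as an advanced container engine, Google had already been using container technology internally for many years.
- Its Borg system runs and manages thousands upon thousands of containerized applications.
- Kubernetes grew out of Borg: it distills the best of Borg's design ideas and absorbs the experience and lessons learned from running Borg.
- Kubernetes abstracts compute resources at a higher level, composing containers in fine-grained ways to deliver the final application service to users.

At its core, Kubernetes is a cluster of servers: it runs specific programs on every node of the cluster to manage that node's containers. Its goal is to automate resource management, and it mainly provides the following features:

- Self-healing: if a container crashes, a replacement is started automatically, usually within seconds
- Autoscaling: the number of running containers in the cluster can be adjusted automatically as needed
- Service discovery: a service can find the services it depends on automatically
- Load balancing: if a service runs several containers, requests are load-balanced across them automatically
- Rollback: if a newly released version turns out to be faulty, you can roll back to the previous version immediately
- Storage orchestration: storage volumes can be created automatically according to a container's needs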

1.4 K8S的設計架構

1.4.1 K8S各個組件用途

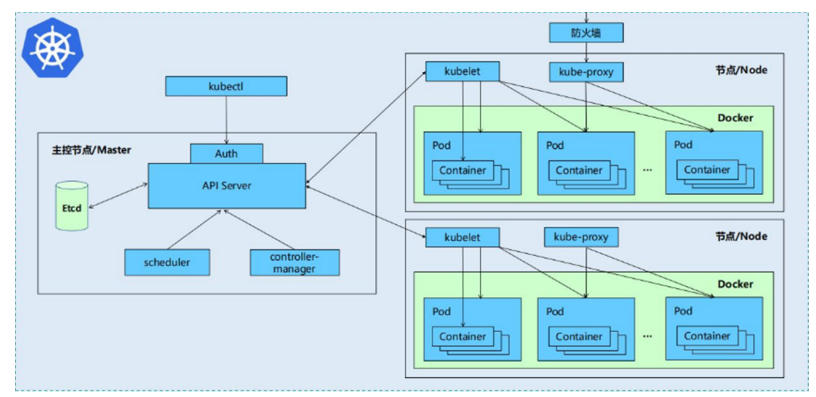

一個kubernetes集群主要是由控制節點(master)、工作節點(node)構成,每個節點上都會安裝不同的組件

1 master:集群的控制平面,負責集群的決策

-

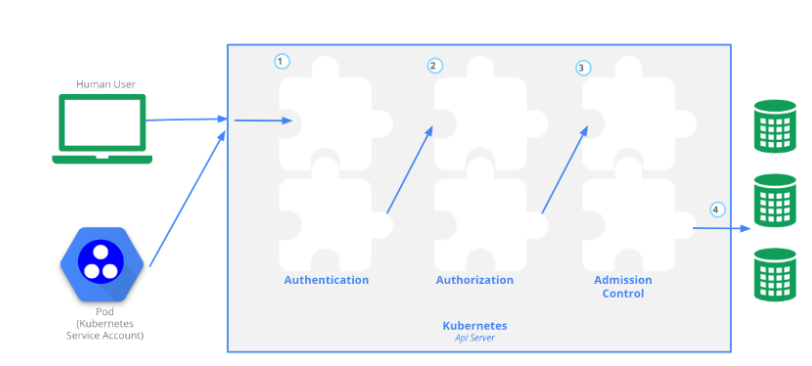

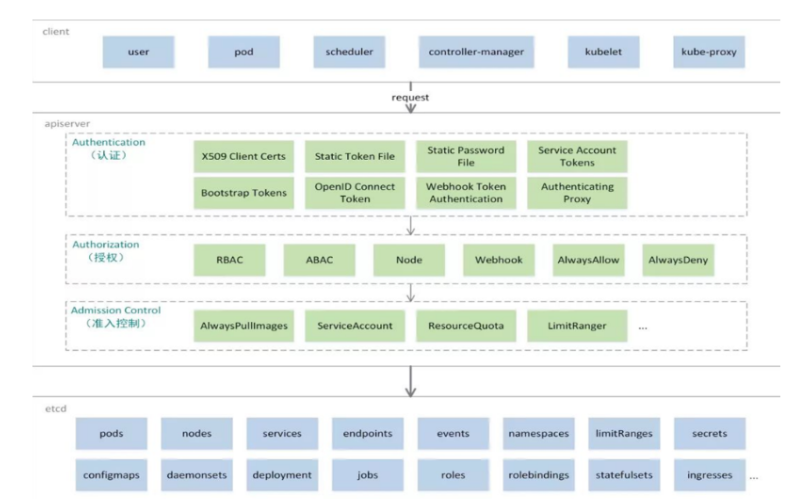

ApiServer : 資源操作的唯一入口,接收用戶輸入的命令,提供認證、授權、API注冊和發現等機制

-

Scheduler : 負責集群資源調度,按照預定的調度策略將Pod調度到相應的node節點上

-

ControllerManager : 負責維護集群的狀態,比如程序部署安排、故障檢測、自動擴展、滾動更新等

-

Etcd :負責存儲集群中各種資源對象的信息
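The Scheduler's job can be pictured as two phases: filter out the nodes that cannot fit the Pod, then score the remaining nodes and pick the best one. The shell sketch below is only a toy model of that idea; the input format (`<node> <free_cpu_millicores> <free_mem_mib>`), the scoring rule (most leftover resources wins), and the `pick_node` name are assumptions for illustration, not kube-scheduler's real plugins.

```shell
#!/bin/sh
# Toy filter-then-score node picker (illustration only, not kube-scheduler).
# stdin: one line per node, "<name> <free_cpu_millicores> <free_mem_mib>"
# args:  <requested_cpu_millicores> <requested_mem_mib>
pick_node() {
  awk -v c="$1" -v m="$2" '
    # Filter: drop nodes that cannot fit the request.
    $2 >= c && $3 >= m {
      # Score: prefer the node with the most resources left over (toy rule).
      score = ($2 - c) + ($3 - m)
      if (best == "" || score > top) { best = $1; top = score }
    }
    END {
      if (best == "") exit 1   # no feasible node: the Pod would stay Pending
      print best
    }'
}

# node1 is filtered out (not enough CPU), so node2 is chosen.
printf 'node1 500 1024\nnode2 1500 2048\n' | pick_node 1000 512
```

A non-zero exit when no node fits mirrors what you see in a real cluster: the Pod stays Pending until some node can satisfy its requests.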

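2 node: the cluster's data plane, providing the runtime environment for containers

- kubelet: maintains the container lifecycle, and also manages volumes (CSI) and networking (CNI)
- Container runtime: manages images and actually runs Pods and containers (CRI)
- kube-proxy: provides in-cluster service discovery and load balancing for Services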

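1.4.2 How the K8S Components Interact

Take running a web service as an example:

- After the Kubernetes environment starts, both master and node components store their information in the etcd database
- The request to install the web service is first sent to the apiServer on the master node
- The apiServer calls the scheduler to decide which node the service should be installed on. The scheduler reads each node's information from etcd, selects a node with its algorithm, and reports the result to the apiServer
- The apiServer calls the controller-manager to have the chosen node install the web service
- When the kubelet receives the instruction, it notifies docker, which starts a pod for the web service
- To access the web service, kube-proxy proxies traffic to the pod

This is a simplified picture: in practice the scheduler, controller-manager, and kubelet watch the apiServer for changes rather than being called by it, and only the apiServer reads and writes etcd.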

1.4.3 K8S 的 常用名詞感念

-

Master:集群控制節點,每個集群需要至少一個master節點負責集群的管控

-

Node:工作負載節點,由master分配容器到這些node工作節點上,然后node節點上的

-

Pod:kubernetes的最小控制單元,容器都是運行在pod中的,一個pod中可以有1個或者多個容器

-

Controller:控制器,通過它來實現對pod的管理,比如啟動pod、停止pod、伸縮pod的數量等等

-

Service:pod對外服務的統一入口,下面可以維護者同一類的多個pod

-

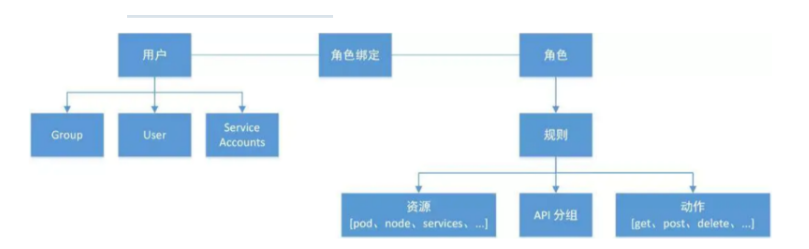

Label:標簽,用于對pod進行分類,同一類pod會擁有相同的標簽

-

NameSpace:命名空間,用來隔離pod的運行環

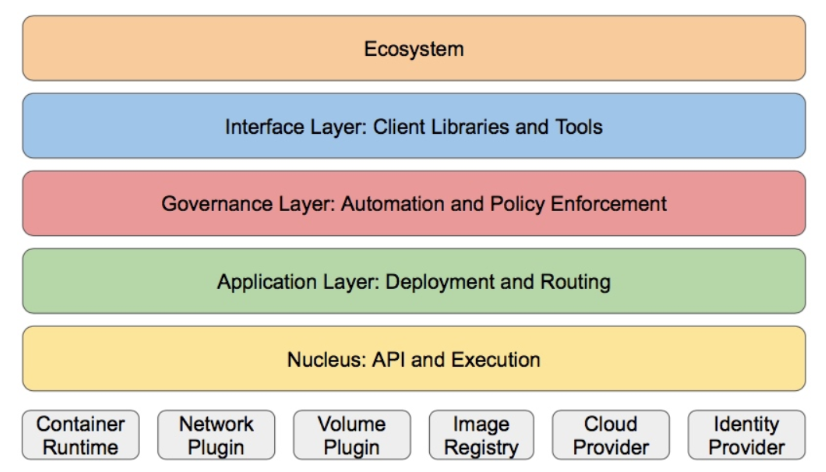
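Labels matter because controllers and Services find their Pods through label selectors. Below is a minimal sketch of equality-based matching, assuming kubectl's comma-separated `key=value` syntax: a Pod is selected only if every pair in the selector appears among its labels. `matches_selector` is a hypothetical helper, and set-based selectors (`in`, `notin`, `exists`) are deliberately left out.

```shell
#!/bin/sh
# Equality-based label selector check (sketch; set-based operators not handled).
# $1: the Pod's labels, e.g. "app=webcluster,pod-template-hash=7c584f774b"
# $2: the selector,     e.g. "app=webcluster"
# The body runs in a subshell so the IFS change does not leak to the caller.
matches_selector() (
  labels=",$1,"
  IFS=','
  for pair in $2; do
    case $labels in
      *",$pair,"*) ;;   # this key=value pair is present
      *) exit 1 ;;      # any missing pair means the Pod is not selected
    esac
  done
  exit 0
)

matches_selector "app=webcluster,pod-template-hash=7c584f774b" "app=webcluster" && echo selected
matches_selector "pod-template-hash=7c584f774b" "app=webcluster" || echo "not selected"
```

This is the reason behind the label demo in 3.2.5: once the app label is removed from one Pod, that Pod no longer matches the Deployment's selector, so a replacement is created.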

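1.4.4 K8S Layered Architecture

- Core layer: Kubernetes's most essential functionality; externally it exposes APIs for building higher-level applications, and internally it provides a plugin-based application execution environment
- Application layer: deployment (stateless applications, stateful applications, batch jobs, clustered applications, etc.) and routing (service discovery, DNS resolution, etc.)
- Management layer: system metrics (infrastructure, container, and network metrics), automation (auto-scaling, dynamic provisioning, etc.), and policy management (RBAC, Quota, PSP, NetworkPolicy, etc.; PSP was removed in v1.25)
- Interface layer: the kubectl command-line tool, client SDKs, and cluster federation
- Ecosystem: the large container-cluster management and scheduling ecosystem above the interface layer, which falls into two categories:
  - Outside Kubernetes: logging, monitoring, configuration management, CI, CD, Workflow, FaaS, OTS applications, ChatOps, etc.
  - Inside Kubernetes: CRI, CNI, CSI, image registries, Cloud Provider, configuration and management of the cluster itself, etc.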

二 K8S集群環境搭建

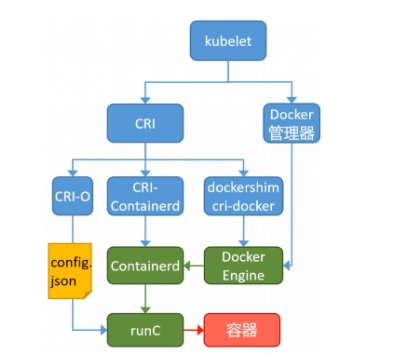

2.1 k8s中容器的管理方式

K8S 集群創建方式有3種:

centainerd

默認情況下,K8S在創建集群時使用的方式

docker

Docker使用的普記錄最高,雖然K8S在1.24版本后已經費力了kubelet對docker的支持,但時可以借助cri-docker方式來實現集群創建

cri-o

CRI-O的方式是Kubernetes創建容器最直接的一種方式,在創建集群的時候,需要借助于cri-o插件的方式來實現Kubernetes集群的創建。

注意“docker 和cri-o 這兩種方式要對kubelet程序的啟動參數進行設置
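In practice, pointing kubelet at a runtime mostly means telling kubeadm and kubelet which CRI socket to use. The helper below maps each runtime to the default socket path listed in the kubeadm documentation; `cri_socket_for` is a hypothetical name, and the paths should be verified on your own hosts. Installing docker-ce also brings in containerd, so a host can end up with two CRI sockets, in which case kubeadm asks you to choose one; that is most likely why every kubeadm command in this guide passes `--cri-socket`.

```shell
#!/bin/sh
# Map a container runtime name to the CRI socket kubeadm/kubelet should use.
# Paths are the commonly documented defaults; verify them on your hosts.
cri_socket_for() {
  case $1 in
    containerd) echo "unix:///var/run/containerd/containerd.sock" ;;
    docker)     echo "unix:///var/run/cri-dockerd.sock" ;;   # Docker via cri-dockerd
    cri-o|crio) echo "unix:///var/run/crio/crio.sock" ;;
    *)          echo "unknown runtime: $1" >&2; return 1 ;;
  esac
}

# The socket used throughout this guide.
cri_socket_for docker
```

For example, `kubeadm init --cri-socket="$(cri_socket_for docker)" ...` is equivalent to the literal `--cri-socket=unix:///var/run/cri-dockerd.sock` used later.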

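2.2 K8S Cluster Deployment

2.2.1 Environment Overview

Official K8S website (Chinese edition): Kubernetes

| Hostname | IP | Role |
|---|---|---|
| harbor | 192.168.121.200 | Harbor registry |
| master | 192.168.121.100 | master, K8S control-plane node |
| node1 | 192.168.121.10 | worker, K8S worker node |
| node2 | 192.168.121.20 | worker, K8S worker node |

- Disable SELinux and the firewall on all nodes
- Synchronize time and hostname resolution on all nodes
- Install docker-ce on all nodes
- Disable swap on all nodes, and make sure to comment out the swap entry in /etc/fstab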
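A minimal sketch of the SELinux and firewall step, assuming RHEL-family hosts running firewalld. It follows the kubeadm installation guide, which sets SELinux to permissive rather than fully disabling it. The live-system commands are shown as comments; `selinux_permissive_config` is a hypothetical helper that only rewrites the config file so the setting survives a reboot.

```shell
#!/bin/sh
# Run on every node (as root) before installing Kubernetes:
#   setenforce 0                        # permissive until the next reboot
#   systemctl disable --now firewalld   # stop firewalld and keep it off
# Persist the SELinux mode by editing the config file (GNU sed -i).
selinux_permissive_config() {
  sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' "${1:-/etc/selinux/config}"
}

# On a real host: selinux_permissive_config   (edits /etc/selinux/config)
```

The anchored pattern touches only the `SELINUX=` line, leaving `SELINUXTYPE=` alone.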

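2.2.2 Cluster Environment Initialization

2.2.2.1 Configure Time Synchronization

When configuring time synchronization for Kubernetes (or any distributed system), one host is usually chosen as the internal time server (NTP server). That host first syncs with public or upstream NTP servers, and the other cluster nodes then act as clients that sync with this internal server, keeping time across the whole cluster consistent, reliable, and efficient.

Here I use harbor as the server.

(1) Server configuration

Install chrony for time synchronization:

[root@harbor ~]# yum install chrony

Edit the configuration file to allow the other hosts to sync their time with the server: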

[root@harbor ~]# cat /etc/chrony.conf

# Allow NTP client access from local network.

allow 192.168.121.0/24

[root@harbor ~]# systemctl restart chronyd

(2) Client configuration

Install chrony on all client nodes:

[root@master+node1+node2 ~]#yum install chrony

Edit the configuration file:

[root@master+node1+node2 ~]# cat /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (https://www.pool.ntp.org/join.html).

#pool 2.rhel.pool.ntp.org iburst

server 192.168.121.200 iburst

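Restart chronyd so it picks up the new server, then list the time sources chrony is using on this host and their sync status:

[root@master+node1+node2 ~]# systemctl restart chronyd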

[root@master+node1+node2 ~]# chronyc sources

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* 192.168.121.200 3 6 377 14 -54us[ -100us] +/- 17ms
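To check the sync from a script rather than by eye: the line marked `^*` in `chronyc sources` is the source chronyd is currently synchronized to. A minimal sketch, assuming the column layout shown above; `chrony_selected_is` is a hypothetical helper.

```shell
#!/bin/sh
# Succeed only if chronyd's currently selected source ("^*") is the expected server.
# stdin: output of `chronyc sources`; $1: expected server name or IP.
chrony_selected_is() {
  awk -v want="$1" '$1 == "^*" && $2 == want { found = 1 } END { exit !found }'
}

# On a client node:
#   chronyc sources | chrony_selected_is 192.168.121.200 && echo "synced to harbor"
```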

2.2.2.2 Disable swap and configure local hostname resolution on all nodes

~]# systemctl mask swap.target

~]# swapoff -a

~]# vim /etc/fstab

# /etc/fstab

# Created by anaconda on Sun Feb 19 17:38:40 2023

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/rhel-root / xfs defaults 0 0

UUID=ddb06c77-c9da-4e92-afd7-53cd76e6a94a /boot xfs defaults 0 0

#/dev/mapper/rhel-swap swap swap defaults 0 0

/dev/cdrom /media iso9660 defaults 0 0

~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.121.200 reg.timingy.org # your Harbor registry domain

192.168.121.100 master

192.168.121.10 node1

192.168.121.20 node2
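Instead of editing /etc/fstab by hand, the swap entry can be commented out with sed. A sketch assuming GNU sed and the usual whitespace-separated fstab layout; `comment_swap_entries` is a hypothetical helper that comments out every active line whose filesystem type (third field) is swap, and running it twice does no harm.

```shell
#!/bin/sh
# Comment out active swap entries in an fstab-format file (default /etc/fstab).
# Only lines that do not already start with "#" and whose 3rd field is "swap" change.
comment_swap_entries() {
  sed -i -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' \
    "${1:-/etc/fstab}"
}

# On a real node: swapoff -a && comment_swap_entries
```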

2.2.2.3 Install docker on all nodes

~]# vim /etc/yum.repos.d/docker.repo

[docker]

name=docker

baseurl=https://mirrors.aliyun.com/docker-ce/linux/rhel/9/x86_64/stable/

gpgcheck=0

~]# dnf install docker-ce -y

~]# cat /lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --iptables=true

# --iptables=true is a Docker daemon option that lets Docker manage the host's iptables rules automatically; port mapping and container networking rely on it. It is enabled by default, so leave it alone, or features such as port forwarding may stop working.

~]# systemctl enable --now docker

2.2.2.4 Set up the Harbor registry with encrypted (HTTPS) transport

[root@harbor packages]# ll

total 3621832

-rw-r--r-- 1 root root 131209386 Aug 23 2024 1panel-v1.10.13-lts-linux-amd64.tar.gz

-rw-r--r-- 1 root root 4505600 Aug 26 2024 busybox-latest.tar.gz

-rw-r--r-- 1 root root 211699200 Aug 26 2024 centos-7.tar.gz

-rw-r--r-- 1 root root 22456832 Aug 26 2024 debian11.tar.gz

-rw-r--r-- 1 root root 693103681 Aug 26 2024 docker-images.tar.gz

-rw-r--r-- 1 root root 57175040 Aug 26 2024 game2048.tar.gz

-rw-r--r-- 1 root root 102946304 Aug 26 2024 haproxy-2.3.tar.gz

-rw-r--r-- 1 root root 738797440 Aug 17 2024 harbor-offline-installer-v2.5.4.tgz

-rw-r--r-- 1 root root 207404032 Aug 26 2024 mario.tar.gz

-rw-r--r-- 1 root root 519596032 Aug 26 2024 mysql-5.7.tar.gz

-rw-r--r-- 1 root root 146568704 Aug 26 2024 nginx-1.23.tar.gz

-rw-r--r-- 1 root root 191849472 Aug 26 2024 nginx-latest.tar.gz

-rw-r--r-- 1 root root 574838784 Aug 26 2024 phpmyadmin-latest.tar.gz

-rw-r--r-- 1 root root 26009088 Aug 17 2024 registry.tag.gz

drwxr-xr-x 2 root root 277 Aug 23 2024 rpm

-rw-r--r-- 1 root root 80572416 Aug 26 2024 ubuntu-latest.tar.gz

# Extract the Harbor private-registry offline installer

[root@harbor packages]# tar zxf harbor-offline-installer-v2.5.4.tgz

[root@harbor packages]# mkdir -p /data/certs

# Generate a self-signed HTTPS certificate (timingy.org.crt) valid for 365 days and its private key (timingy.org.key) for the domain reg.timingy.org. The key is unencrypted (-nodes) 4096-bit RSA, and the certificate is signed with SHA-256.

[root@harbor packages]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout /data/certs/timingy.org.key -addext "subjectAltName = DNS:reg.timingy.org" -x509 -days 365 -out /data/certs/timingy.org.crt

Common Name (eg, your name or your server's hostname) []:reg.timingy.org # the domain must be entered exactly

[root@harbor packages]# cd harbor

[root@harbor harbor]# ls

common.sh harbor.v2.5.4.tar.gz harbor.yml.tmpl install.sh LICENSE prepare

[root@harbor harbor]# cp harbor.yml.tmpl harbor.yml # copy the template to harbor.yml

harbor.yml is Harbor's core configuration file: editing it defines Harbor's access domain, whether HTTPS is enabled, the admin password, the data storage location, and other key settings. After editing it, run ./install.sh to install and deploy Harbor from that configuration.

[root@harbor harbor]# vim harbor.yml

hostname: reg.timingy.org # Harbor registry domain

https: # HTTPS settings
  # https port for harbor, default is 443
  port: 443
  certificate: /data/certs/timingy.org.crt # certificate (public key) location
  private_key: /data/certs/timingy.org.key # private key location

harbor_admin_password: 123 # password of the Harbor admin user

# --with-chartmuseum tells the Harbor offline installer to also install and enable the ChartMuseum component, which stores and manages Helm Charts (Kubernetes application packages)

[root@harbor harbor]# ./install.sh --with-chartmuseum

# Install the registry's certificate on every Docker host in the cluster so they trust it; this avoids certificate verification errors over HTTPS and keeps image pulls working

[root@harbor ~]# for i in 100 200 10 20

> do

> ssh -l root 192.168.121.$i mkdir -p /etc/docker/certs.d/reg.timingy.org

> scp /data/certs/timingy.org.crt root@192.168.121.$i:/etc/docker/certs.d/reg.timingy.org/ca.crt

> done#設置搭建的harbor倉庫為docker默認倉庫(所有主機)

~]# vim /etc/docker/daemon.json

{"registry-mirrors":["https://reg.timingy.org"]

}#重啟docker讓配置生效

~]# systemctl restart docker.service#查看docker信息

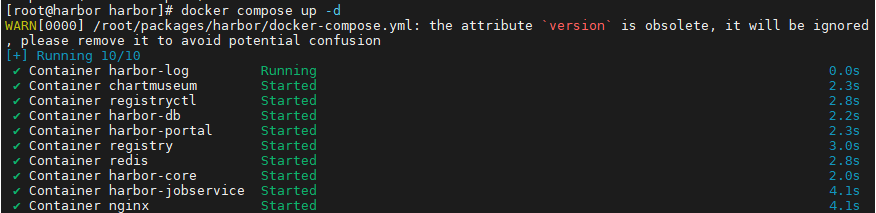

~]# docker infoRegistry Mirrors:https://reg.timingy.org/Harbor 倉庫的啟動本質上就是通過?

docker-compose按照你配置的參數(源自 harbor.yml)來拉起一組 Docker 容器,組成完整的 Harbor 服務。?當你執行 Harbor 的安裝腳本:

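./install.sh

1. Read your configuration: harbor.yml

The harbor.yml file you edited earlier is Harbor's core configuration file and defines:

- Harbor's access domain (hostname)
- whether HTTPS is enabled, plus the certificate and private key paths
- the data storage directory (data_volume)
- whether ChartMuseum (for storing Helm Charts) is enabled
- the admin password, and so on

It determines how Harbor runs: which domain it is reached at, whether traffic is encrypted, where data is stored, and so on.

2. Generate the docker-compose configuration and load the Docker images

Based on the settings in harbor.yml, the install.sh script:

- generates a docker-compose.yml file (through the bundled prepare script; for this installer it ends up in the Harbor install directory next to harbor.yml)
- relies on a Docker image for each service Harbor needs (portal/UI, Registry, database, Redis, ChartMuseum, etc.)
- with the offline installer, these images are already bundled in the package (harbor.v2.5.4.tar.gz), so nothing has to be downloaded
- loads these images into the local Docker environment with docker load

3. Call docker-compose to start the services

Finally, install.sh runs a command like the following (or equivalent internal logic) to start Harbor:

docker-compose up -d

Using the generated docker-compose.yml, this starts the containers Harbor needs in the background. Together they make up a complete Harbor private registry service that supports image management, user permissions, Helm Chart storage, and more.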

在harbor安裝目錄下啟用harbor倉庫

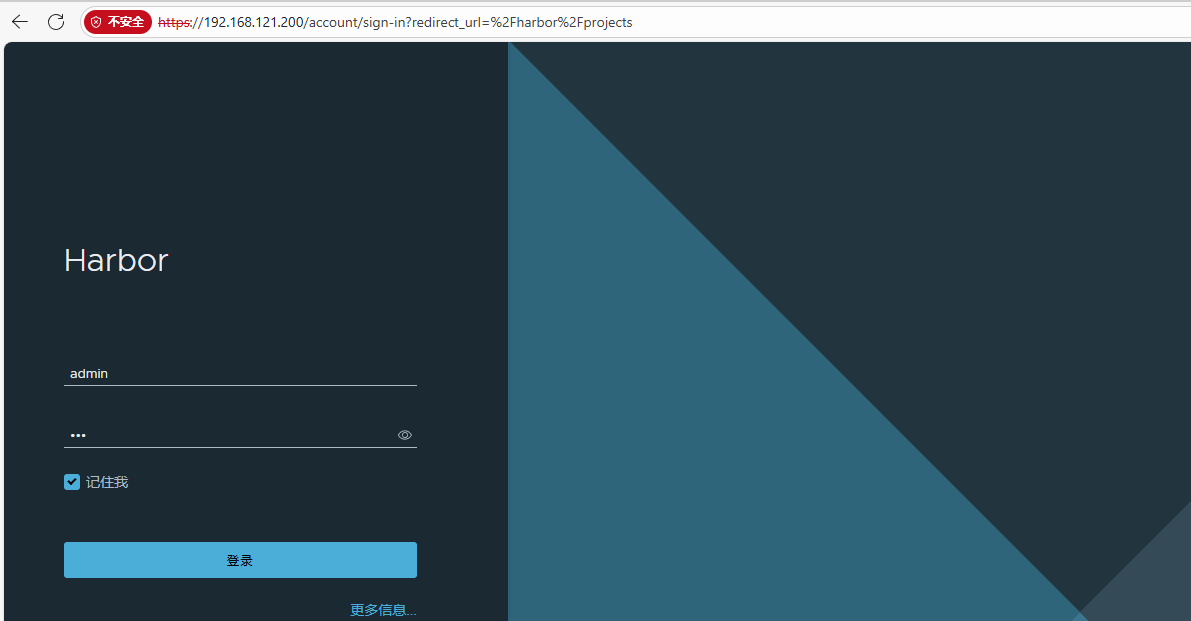

訪問測試

點擊高級-->繼續訪問

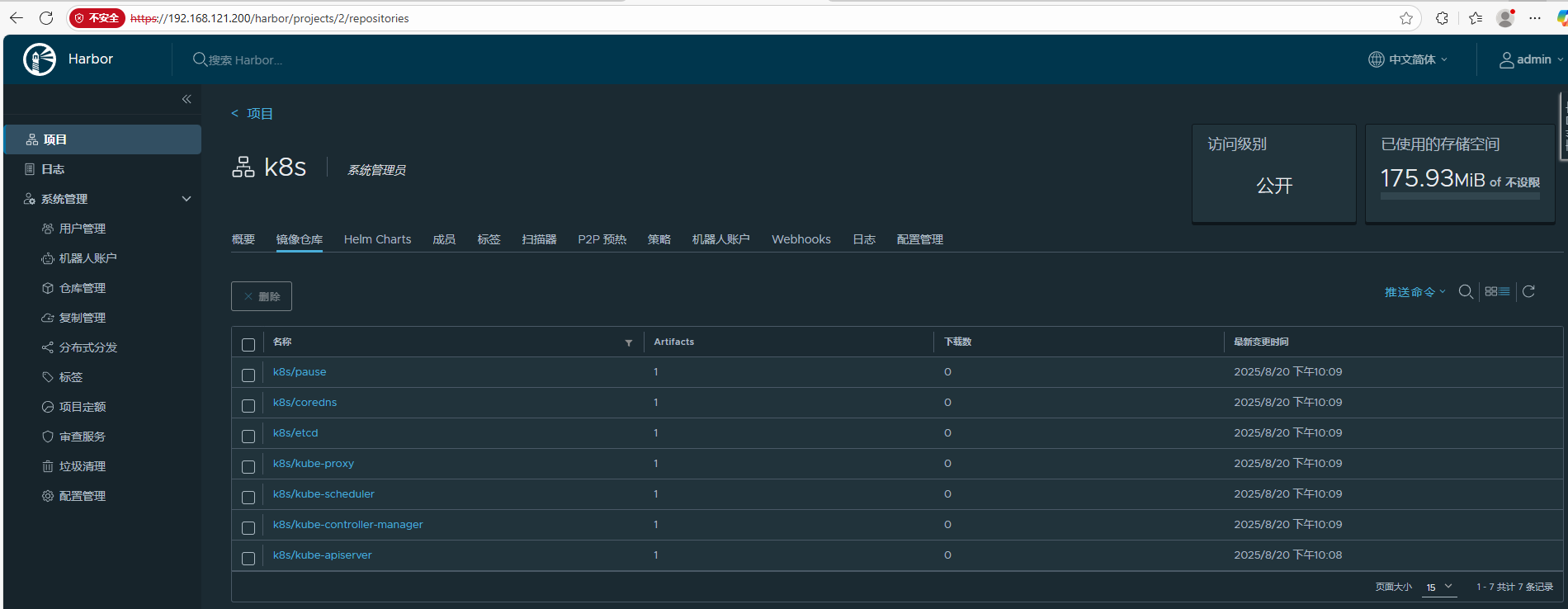

登錄后創建公開項目k8s用于k8s集群搭建

2.2.2.5 Install the K8S deployment tools

# Set up the package repository: add the K8S repo

~]# vim /etc/yum.repos.d/k8s.repo

[k8s]

name=k8s

baseurl=https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.30/rpm

gpgcheck=0

# Install the packages

~]# dnf install kubelet-1.30.0 kubeadm-1.30.0 kubectl-1.30.0 -y

2.2.2.6 Enable kubectl command completion

[root@k8s-master ~]# dnf install bash-completion -y

[root@k8s-master ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

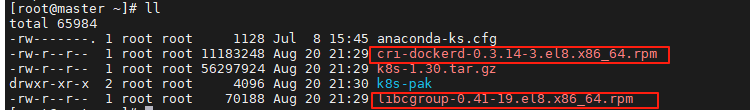

[root@k8s-master ~]# source ~/.bashrc

2.2.2.7 Install cri-docker on all nodes

K8S removed dockershim starting with v1.24, so the cri-dockerd plugin is required to use Docker

Download: https://github.com/Mirantis/cri-dockerd

Install the Docker adapter plugin and its dependency (so K8S can use Docker containers):

[all nodes ~]# dnf install libcgroup-0.41-19.el8.x86_64.rpm \
> cri-dockerd-0.3.14-3.el8.x86_64.rpm -y

[all nodes ~]# cat /lib/systemd/system/cri-docker.service

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

# specify the network plugin name and the base (pause) container image

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --pod-infra-container-image=reg.timingy.org/k8s/pause:3.9

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

[all nodes ~]# systemctl daemon-reload

[all nodes ~]# systemctl enable --now cri-docker

[all nodes ~]# ll /var/run/cri-dockerd.sock

srw-rw---- 1 root docker 0 Aug 20 21:44 /var/run/cri-dockerd.sock # cri-dockerd's socket file
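On the worker nodes (step 4 of the checklist in 2.2.2.11), the same two flags have to be added to cri-dockerd's ExecStart line. A sketch that does it with sed, assuming GNU sed and a single `ExecStart=/usr/bin/cri-dockerd ...` line; `add_cri_dockerd_flags` is a hypothetical helper, and it skips the edit when `--network-plugin=cni` is already present so it can be re-run safely.

```shell
#!/bin/sh
# Append the CNI and pause-image flags to cri-dockerd's ExecStart line (once).
# $1: unit file  (default /lib/systemd/system/cri-docker.service)
# $2: pause image (default reg.timingy.org/k8s/pause:3.9)
add_cri_dockerd_flags() {
  unit=${1:-/lib/systemd/system/cri-docker.service}
  pause_image=${2:-reg.timingy.org/k8s/pause:3.9}
  # Already configured: nothing to do.
  grep -q -- '--network-plugin=cni' "$unit" && return 0
  sed -i "s|^\(ExecStart=/usr/bin/cri-dockerd .*\)\$|\1 --network-plugin=cni --pod-infra-container-image=$pause_image|" "$unit"
}

# Afterwards: systemctl daemon-reload && systemctl restart cri-docker
```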

2.2.2.8 Pull the images K8S needs on the master node

Method 1: pull online

# Pull the images the K8S cluster needs

[root@k8s-master ~]# kubeadm config images pull \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.30.0 \

--cri-socket=unix:///var/run/cri-dockerd.sock

# Push the images to the Harbor registry

[root@k8s-master ~]# docker images | awk '/google/{ print $1":"$2}' \
| awk -F "/" '{system("docker tag "$0" reg.timingy.org/k8s/"$3)}'

[root@k8s-master ~]# docker images | awk '/k8s/{system("docker push "$1":"$2)}'

Method 2: offline import

[root@master k8s-img]# ll

total 650320

-rw-r--r-- 1 root root 84103168 Aug 20 21:55 flannel-0.25.5.tag.gz

-rw-r--r-- 1 root root 581815296 Aug 20 21:55 k8s_docker_images-1.30.tar

-rw-r--r-- 1 root root 4406 Aug 20 21:55 kube-flannel.yml

# Load the images

[root@master k8s-img]# docker load -i k8s_docker_images-1.30.tar

3d6fa0469044: Loading layer 327.7kB/327.7kB

49626df344c9: Loading layer 40.96kB/40.96kB

945d17be9a3e: Loading layer 2.396MB/2.396MB

4d049f83d9cf: Loading layer 1.536kB/1.536kB

af5aa97ebe6c: Loading layer 2.56kB/2.56kB

ac805962e479: Loading layer 2.56kB/2.56kB

bbb6cacb8c82: Loading layer 2.56kB/2.56kB

2a92d6ac9e4f: Loading layer 1.536kB/1.536kB

1a73b54f556b: Loading layer 10.24kB/10.24kB

f4aee9e53c42: Loading layer 3.072kB/3.072kB

b336e209998f: Loading layer 238.6kB/238.6kB

06ddf169d3f3: Loading layer 1.69MB/1.69MB

c0cb02961a3c: Loading layer 112.9MB/112.9MB

Loaded image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0

7b631378e22a: Loading layer 107.4MB/107.4MB

Loaded image: registry.aliyuncs.com/google_containers/kube-controller-manager:v1.30.0

62baa24e327e: Loading layer 58.3MB/58.3MB

Loaded image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.30.0

3113ebfbe4c2: Loading layer 28.35MB/28.35MB

f76f3fb0cfaa: Loading layer 57.58MB/57.58MB

Loaded image: registry.aliyuncs.com/google_containers/kube-proxy:v1.30.0

e023e0e48e6e: Loading layer 327.7kB/327.7kB

6fbdf253bbc2: Loading layer 51.2kB/51.2kB

7bea6b893187: Loading layer 3.205MB/3.205MB

ff5700ec5418: Loading layer 10.24kB/10.24kB

d52f02c6501c: Loading layer 10.24kB/10.24kB

e624a5370eca: Loading layer 10.24kB/10.24kB

1a73b54f556b: Loading layer 10.24kB/10.24kB

d2d7ec0f6756: Loading layer 10.24kB/10.24kB

4cb10dd2545b: Loading layer 225.3kB/225.3kB

aec96fc6d10e: Loading layer 217.1kB/217.1kB

545a68d51bc4: Loading layer 57.16MB/57.16MB

Loaded image: registry.aliyuncs.com/google_containers/coredns:v1.11.1

e3e5579ddd43: Loading layer 746kB/746kB

Loaded image: registry.aliyuncs.com/google_containers/pause:3.9

54ad2ec71039: Loading layer 327.7kB/327.7kB

6fbdf253bbc2: Loading layer 51.2kB/51.2kB

accc3e6808c0: Loading layer 3.205MB/3.205MB

ff5700ec5418: Loading layer 10.24kB/10.24kB

d52f02c6501c: Loading layer 10.24kB/10.24kB

e624a5370eca: Loading layer 10.24kB/10.24kB

1a73b54f556b: Loading layer 10.24kB/10.24kB

d2d7ec0f6756: Loading layer 10.24kB/10.24kB

4cb10dd2545b: Loading layer 225.3kB/225.3kB

a9f9fc6d48ba: Loading layer 2.343MB/2.343MB

b48a138a7d6b: Loading layer 124.2MB/124.2MB

b4b40553581c: Loading layer 20.36MB/20.36MB

Loaded image: registry.aliyuncs.com/google_containers/etcd:3.5.12-0

# Tag the images

[root@master k8s-img]# docker images | awk '/google/{print $1":"$2}' | awk -F / '{system("docker tag "$0" reg.timingy.org/k8s/"$3)}'

[root@master k8s-img]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

reg.timingy.org/k8s/kube-apiserver v1.30.0 c42f13656d0b 16 months ago 117MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.30.0 c42f13656d0b 16 months ago 117MB

reg.timingy.org/k8s/kube-controller-manager v1.30.0 c7aad43836fa 16 months ago 111MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.30.0 c7aad43836fa 16 months ago 111MB

reg.timingy.org/k8s/kube-scheduler v1.30.0 259c8277fcbb 16 months ago 62MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.30.0 259c8277fcbb 16 months ago 62MB

reg.timingy.org/k8s/kube-proxy v1.30.0 a0bf559e280c 16 months ago 84.7MB

# Push the images

[root@master k8s-img]# docker images | awk '/timingy/{system("docker push " $1":"$2)}'
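The awk pipelines above rebuild each image name as `reg.timingy.org/k8s/<name:tag>`. The same mapping written as small shell functions, which may be easier to read and reuse; `harbor_ref` and `retag_and_push` are hypothetical names, and `retag_and_push` assumes you are already logged in to Harbor with `docker login`.

```shell
#!/bin/sh
# Rewrite an upstream image reference into the private Harbor project,
# keeping only the final "name:tag" component.
# $1: source image, $2: target registry/project (default reg.timingy.org/k8s)
harbor_ref() {
  src=$1
  project=${2:-reg.timingy.org/k8s}
  printf '%s/%s\n' "$project" "${src##*/}"
}

# Tag and push every google_containers image found in the local Docker cache.
retag_and_push() {
  docker images --format '{{.Repository}}:{{.Tag}}' \
    | grep 'google_containers' \
    | while read -r src; do
        dst=$(harbor_ref "$src")
        docker tag "$src" "$dst" && docker push "$dst"
      done
}

harbor_ref registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0
```

Passing a second argument reuses the mapping for other projects, e.g. `harbor_ref docker.io/flannel/flannel:v0.25.5 reg.timingy.org/flannel` for the flannel images below.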

2.2.2.9 Cluster Initialization

# Run the init command

[root@master k8s-img]# kubeadm init --pod-network-cidr=10.244.0.0/16 \

> --image-repository reg.timingy.org/k8s \

> --kubernetes-version v1.30.0 \

> --cri-socket=unix:///var/run/cri-dockerd.sock

# Point the KUBECONFIG variable at the cluster's admin configuration file

[root@master ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

[root@master k8s-img]# source ~/.bash_profile

# The node is not Ready yet because no network plugin is installed, so some pods (CoreDNS) are not running

[root@master k8s-img]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady control-plane 3m v1.30.0

[root@master k8s-img]# kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-7c677d6c78-7n96p 0/1 Pending 0 3m2s

kube-system coredns-7c677d6c78-jp6c5 0/1 Pending 0 3m2s

kube-system etcd-master 1/1 Running 0 3m16s

kube-system kube-apiserver-master 1/1 Running 0 3m18s

kube-system kube-controller-manager-master 1/1 Running 0 3m16s

kube-system kube-proxy-rjzl9 1/1 Running 0 3m2s

kube-system kube-scheduler-master 1/1 Running 0 3m16s
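The `--pod-network-cidr=10.244.0.0/16` passed to kubeadm init is also flannel's default network, and flannel carves it into one subnet per node, /24 by default (its `SubnetLen` setting). This is also why the pod scheduled on node1 later gets an address such as 10.244.1.5. The arithmetic below is a quick sanity check; `pod_cidr_capacity` is a hypothetical helper that counts raw addresses, a few of which (network, gateway, broadcast) are not usable by Pods.

```shell
#!/bin/sh
# How a cluster Pod CIDR splits into per-node subnets.
# $1: cluster prefix length (16 for 10.244.0.0/16)
# $2: per-node prefix length (flannel's default SubnetLen is 24)
pod_cidr_capacity() {
  cluster_prefix=$1
  node_prefix=${2:-24}
  subnets=$(( 1 << (node_prefix - cluster_prefix) ))
  addrs=$(( 1 << (32 - node_prefix) ))
  echo "$subnets node subnets of $addrs addresses each"
}

pod_cidr_capacity 16   # -> 256 node subnets of 256 addresses each
```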

Note:

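If you lose the cluster join token at this stage, you can generate a new one:

[root@master ~]# kubeadm token create --print-join-command

kubeadm join 192.168.121.100:6443 --token slx36w.np3pg2xzfhtj8hsr \
  --discovery-token-ca-cert-hash sha256:29389ead6392e0bb1f68adb025e3a6817c9936a26f9140f8a166528e521addb3 --cri-socket=unix:///var/run/cri-dockerd.sock

The printed command does not include the CRI socket; append --cri-socket=unix:///var/run/cri-dockerd.sock, as above, when running it on a node.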

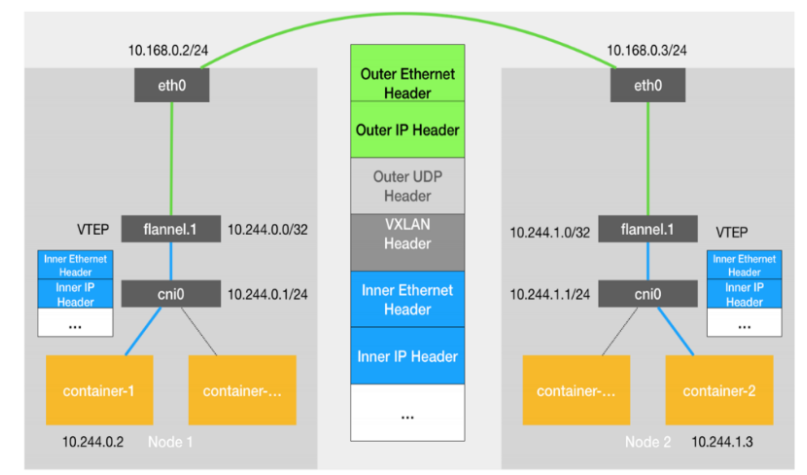

2.2.2.10 Install the flannel network plugin

Official site: https://github.com/flannel-io/flannel

# Download flannel's YAML manifest

[root@k8s-master ~]# wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

# Pull the images:

[root@k8s-master ~]# docker pull docker.io/flannel/flannel:v0.25.5

[root@k8s-master ~]# docker pull docker.io/flannel/flannel-cni-plugin:v1.5.1-flannel1

# Note: create a public project named flannel in Harbor first

[root@master k8s-img]# docker tag flannel/flannel:v0.25.5 reg.timingy.org/flannel/flannel:v0.25.5

[root@master k8s-img]# docker tag flannel/flannel-cni-plugin:v1.5.1-flannel1 reg.timingy.org/flannel/flannel-cni-plugin:v1.5.1-flannel1

# Push

[root@master k8s-img]# docker push reg.timingy.org/flannel/flannel:v0.25.5

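[root@master k8s-img]# docker push reg.timingy.org/flannel/flannel-cni-plugin:v1.5.1-flannel1

# Edit the manifest's image references. The official manifest uses docker.io; just delete that prefix, and Docker will pull from its default registry (the configured mirror), i.e. our Harbor registry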

[root@master k8s-img]# vim kube-flannel.yml

image: flannel/flannel:v0.25.5

image: flannel/flannel-cni-plugin:v1.5.1-flannel1

image: flannel/flannel:v0.25.5

[root@master k8s-img]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

serviceaccount/flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

# Check the pods

[root@master k8s-img]# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-jqz8p 1/1 Running 0 15s

kube-system coredns-7c677d6c78-7n96p 1/1 Running 0 18m

kube-system coredns-7c677d6c78-jp6c5 1/1 Running 0 18m

kube-system etcd-master 1/1 Running 0 18m

kube-system kube-apiserver-master 1/1 Running 0 18m

kube-system kube-controller-manager-master 1/1 Running 0 18m

kube-system kube-proxy-rjzl9 1/1 Running 0 18m

kube-system kube-scheduler-master 1/1 Running 0 18m

# Check whether the node is Ready

[root@master k8s-img]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready control-plane 18m v1.30.0

2.2.2.11 Adding worker nodes

On every worker node:

1 Make sure everything below is in place

2 Disable swap

3 Install:

- kubelet-1.30.0
- kubeadm-1.30.0
- kubectl-1.30.0
- docker-ce
- cri-dockerd

4 Edit the cri-dockerd unit file and add:

- --network-plugin=cni
- --pod-infra-container-image=reg.timingy.org/k8s/pause:3.9

5 Start the services:

- kubelet.service
- cri-docker.service

Once all of the above is confirmed, the node can join the cluster

[root@master k8s-img]# kubeadm token create --print-join-command

kubeadm join 192.168.121.100:6443 --token p3kfyl.ljipmtsklr21r9ah --discovery-token-ca-cert-hash sha256:e01d3ac26e5c7b3100487dae6e14ce16e49f183b1b35f18cacd2be8006177293

[root@node1 ~]# kubeadm join 192.168.121.100:6443 --token p3kfyl.ljipmtsklr21r9ah --discovery-token-ca-cert-hash sha256:e01d3ac26e5c7b3100487dae6e14ce16e49f183b1b35f18cacd2be8006177293 --cri-socket=unix:///var/run/cri-dockerd.sock

[root@node2 ~]# kubeadm join 192.168.121.100:6443 --token p3kfyl.ljipmtsklr21r9ah --discovery-token-ca-cert-hash sha256:e01d3ac26e5c7b3100487dae6e14ce16e49f183b1b35f18cacd2be8006177293 --cri-socket=unix:///var/run/cri-dockerd.sock

[preflight] Running pre-flight checks
	[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-check] Waiting for a healthy kubelet. This can take up to 4m0s

[kubelet-check] The kubelet is healthy after 2.50498435s

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

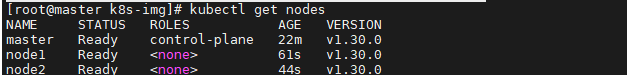

On the master, check the status of all nodes

Note:

If every node's STATUS is Ready, congratulations: your Kubernetes cluster is up and running!
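Rather than scanning the STATUS column by eye, the check can be scripted. `kubectl wait` can block until every node reports the Ready condition; the awk filter is a hypothetical helper (`not_ready_nodes`) that lists nodes whose STATUS is anything other than plain Ready (so `Ready,SchedulingDisabled` is flagged as well), reading `kubectl get nodes` output on stdin.

```shell
#!/bin/sh
# Block (up to 5 minutes) until all nodes report Ready (run on the master):
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
# Or list the nodes that are not plain "Ready" from `kubectl get nodes` output:
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'   # skip the header line
}

# Usage: kubectl get nodes | not_ready_nodes   (prints nothing when all are Ready)
```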

Test the cluster

[root@harbor ~]# cd packages/

[root@harbor packages]# ll

total 3621832

-rw-r--r-- 1 root root 131209386 Aug 23 2024 1panel-v1.10.13-lts-linux-amd64.tar.gz

-rw-r--r-- 1 root root 4505600 Aug 26 2024 busybox-latest.tar.gz

-rw-r--r-- 1 root root 211699200 Aug 26 2024 centos-7.tar.gz

-rw-r--r-- 1 root root 22456832 Aug 26 2024 debian11.tar.gz

-rw-r--r-- 1 root root 693103681 Aug 26 2024 docker-images.tar.gz

-rw-r--r-- 1 root root 57175040 Aug 26 2024 game2048.tar.gz

-rw-r--r-- 1 root root 102946304 Aug 26 2024 haproxy-2.3.tar.gz

drwxr-xr-x 3 root root 180 Aug 20 20:06 harbor

-rw-r--r-- 1 root root 738797440 Aug 17 2024 harbor-offline-installer-v2.5.4.tgz

-rw-r--r-- 1 root root 207404032 Aug 26 2024 mario.tar.gz

-rw-r--r-- 1 root root 519596032 Aug 26 2024 mysql-5.7.tar.gz

-rw-r--r-- 1 root root 146568704 Aug 26 2024 nginx-1.23.tar.gz

-rw-r--r-- 1 root root 191849472 Aug 26 2024 nginx-latest.tar.gz

-rw-r--r-- 1 root root 574838784 Aug 26 2024 phpmyadmin-latest.tar.gz

-rw-r--r-- 1 root root 26009088 Aug 17 2024 registry.tag.gz

drwxr-xr-x 2 root root 277 Aug 23 2024 rpm

-rw-r--r-- 1 root root 80572416 Aug 26 2024 ubuntu-latest.tar.gz

# Load the archive as an image

[root@harbor packages]# docker load -i nginx-latest.tar.gz

# Tag and push

[root@harbor packages]# docker tag nginx:latest reg.timingy.org/library/nginx:latest

[root@harbor packages]# docker push reg.timingy.org/library/nginx:latest#建立一個pod

[root@master k8s-img]# kubectl run test --image=nginx

pod/test created#查看pod狀態[root@master k8s-img]# kubectl get pods

NAME READY STATUS RESTARTS AGE

test 1/1 Running 0 35s#刪除pod

[root@master k8s-img]# kubectl delete pod test

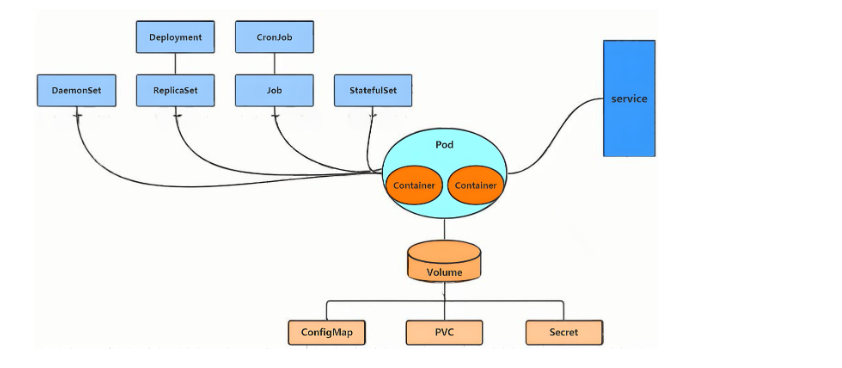

pod "test" deleted三 kubernetes 中的資源

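3.1 Resource Management Overview

- In Kubernetes, everything is abstracted as a resource, and users manage Kubernetes by operating on resources.
- Kubernetes is essentially a cluster system in which users can deploy all kinds of services.
- Deploying a service really means running containers in the Kubernetes cluster and running the specified program in them.
- The smallest unit Kubernetes manages is the Pod, not the container, so containers can only be placed in Pods.
- Kubernetes usually does not manage Pods directly either; it manages them through Pod controllers.
- Access to the services running in Pods is provided by the Kubernetes Service resource.
- Persisting the data of programs in Pods is handled by the various storage systems Kubernetes provides.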

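3.2 Resource Management Methods

- Imperative object management: operate on Kubernetes resources directly with commands

  kubectl run nginx-pod --image=nginx:latest --port=80

- Imperative object configuration: operate on Kubernetes resources with commands plus configuration files

  kubectl create/patch -f nginx-pod.yaml

- Declarative object configuration: operate on Kubernetes resources with the apply command and configuration files

  kubectl apply -f nginx-pod.yaml

| Type | Suitable for | Pros | Cons |
|---|---|---|---|
| Imperative object management | Testing | Simple | Operates only on live objects; no auditing or tracking |
| Imperative object configuration | Development | Auditable and trackable | Large projects mean many configuration files and tedious operations |
| Declarative object configuration | Development | Can operate on whole directories | Hard to debug when something unexpected happens |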
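The configuration-file methods above refer to an nginx-pod.yaml that is not shown. A minimal sketch of what it might contain, roughly equivalent to `kubectl run nginx-pod --image=nginx:latest --port=80` (kubectl run also labels the Pod `run=<name>`, as the nginx pod in the label demo shows). The file content is an assumption, and `write_nginx_pod_manifest` is a hypothetical helper so the same file can feed either `kubectl create -f` or `kubectl apply -f`.

```shell
#!/bin/sh
# Write a minimal Pod manifest to $1 (default nginx-pod.yaml).
write_nginx_pod_manifest() {
  cat > "${1:-nginx-pod.yaml}" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    run: nginx-pod
spec:
  containers:
  - name: nginx-pod
    image: nginx:latest
    ports:
    - containerPort: 80
EOF
}

# Usage:
#   write_nginx_pod_manifest
#   kubectl create -f nginx-pod.yaml    # imperative object configuration
#   kubectl apply -f nginx-pod.yaml     # declarative object configuration
```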

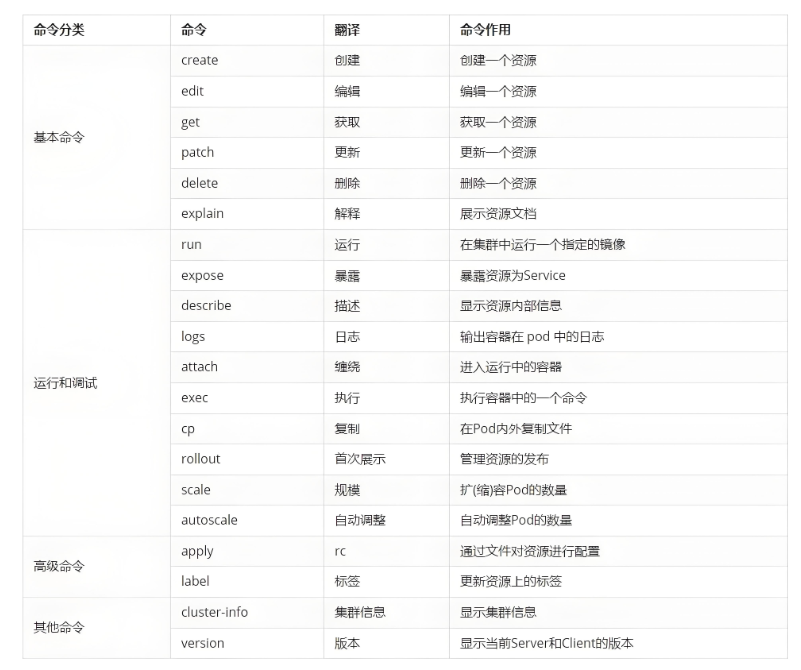

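3.2.1 Imperative Object Management

kubectl is the command-line tool for Kubernetes clusters; with it you can manage the cluster itself and install and deploy containerized applications on it.

The kubectl syntax is:

kubectl [command] [type] [name] [flags]

- command: the operation to perform on the resource, e.g. create, get, delete
- type: the resource type, e.g. deployment, pod, service
- name: the resource name (case-sensitive)
- flags: additional optional parameters

# List all pods
kubectl get pod

# View a specific pod
kubectl get pod pod_name

# View a specific pod, showing the result in YAML format
kubectl get pod pod_name -o yaml

3.2.2 Resource Types

Everything in Kubernetes is abstracted as a resource; list the resource types available in the cluster with:

kubectl api-resources

Common resource types

Common kubectl operations

3.2.3 Basic Command Examples

Detailed kubectl reference: Kubectl Reference Docs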

[root@master ~]# kubectl version

Client Version: v1.30.0

Kustomize Version: v5.0.4-0.20230601165947-6ce0bf390ce3

Server Version: v1.30.0

# Show cluster information

[root@master ~]# kubectl cluster-info

Kubernetes control plane is running at https://192.168.121.100:6443

CoreDNS is running at https://192.168.121.100:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

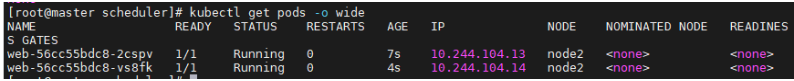

# Create a Deployment controller named webcluster running 2 pods

[root@master ~]# kubectl create deployment webcluster --image nginx --replicas 2

# View the controller

[root@master ~]# kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

webcluster 2/2 2 2 22s


# Show help for a resource

[root@master ~]# kubectl explain deployment

GROUP: apps

KIND: Deployment

VERSION: v1

DESCRIPTION:
    Deployment enables declarative updates for Pods and ReplicaSets.

FIELDS:
  apiVersion <string>
    APIVersion defines the versioned schema of this representation of an object.
    Servers should convert recognized schemas to the latest internal value, and
    may reject unrecognized values. More info:
    https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#resources

  kind <string>
    Kind is a string value representing the REST resource this object
    represents. Servers may infer this from the endpoint the client submits
    requests to. Cannot be updated. In CamelCase. More info:
    https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#types-kinds

  metadata <ObjectMeta>
    Standard object's metadata. More info:
    https://git.k8s.io/community/contributors/devel/sig-architecture/api-conventions.md#metadata

  spec <DeploymentSpec>
    Specification of the desired behavior of the Deployment.

  status <DeploymentStatus>
    Most recently observed status of the Deployment.

# Show help for the controller's spec fields

[root@master ~]# kubectl explain deployment.spec

GROUP: apps

KIND: Deployment

VERSION: v1

FIELD: spec <DeploymentSpec>

DESCRIPTION:
    Specification of the desired behavior of the Deployment.
    DeploymentSpec is the specification of the desired behavior of the
    Deployment.

FIELDS:
  minReadySeconds <integer>
    Minimum number of seconds for which a newly created pod should be ready
    without any of its container crashing, for it to be considered available.
    Defaults to 0 (pod will be considered available as soon as it is ready)

  paused <boolean>
    Indicates that the deployment is paused.

  progressDeadlineSeconds <integer>
    The maximum time in seconds for a deployment to make progress before it is
    considered to be failed. The deployment controller will continue to process
    failed deployments and a condition with a ProgressDeadlineExceeded reason
    will be surfaced in the deployment status. Note that progress will not be
    estimated during the time a deployment is paused. Defaults to 600s.

  replicas <integer>
    Number of desired pods. This is a pointer to distinguish between explicit
    zero and not specified. Defaults to 1.

  revisionHistoryLimit <integer>
    The number of old ReplicaSets to retain to allow rollback. This is a pointer
    to distinguish between explicit zero and not specified. Defaults to 10.

  selector <LabelSelector> -required-
    Label selector for pods. Existing ReplicaSets whose pods are selected by
    this will be the ones affected by this deployment. It must match the pod
    template's labels.

  strategy <DeploymentStrategy>
    The deployment strategy to use to replace existing pods with new ones.

  template <PodTemplateSpec> -required-
    Template describes the pods that will be created. The only allowed
    template.spec.restartPolicy value is "Always".

# Edit the controller's configuration

[root@master ~]# kubectl edit deployments.apps webcluster

@@@@ content omitted @@@@@@

spec:
  progressDeadlineSeconds: 600
  replicas: 3 # change the number of pods to 3

@@@@ content omitted @@@@@@

# View the controller

[root@master ~]# kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

webcluster 3/3 3 3 4m56s

# Change the controller's configuration with a patch

[root@master ~]# kubectl patch deployments.apps webcluster -p '{"spec":{"replicas":4}}'

deployment.apps/webcluster patched

[root@master ~]# kubectl get deployments.apps webcluster

NAME READY UP-TO-DATE AVAILABLE AGE

webcluster 4/4 4 4 7m6s

# Delete the resource

[root@master ~]# kubectl delete deployments.apps webcluster

deployment.apps "webcluster" deleted

[root@master ~]# kubectl get deployments.apps

No resources found in default namespace.

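3.2.4 Run and Debug Command Examples

# Copy a file into a pod (assumes a pod named nginx exists, e.g. created with kubectl run nginx --image nginx)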

[root@master ~]# kubectl cp anaconda-ks.cfg nginx:/

[root@master ~]# kubectl exec -it pods/nginx -- /bin/bash

root@nginx:/# ls

anaconda-ks.cfg boot docker-entrypoint.d etc lib media opt root sbin sys usr

bin dev docker-entrypoint.sh home lib64 mnt proc run srv tmp var

# Copy a file from the pod to the local host

[root@master ~]# kubectl cp nginx:/anaconda-ks.cfg ./

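tar: Removing leading `/' from member names

3.2.5 Advanced Command Examples

# Generate a YAML template with a command

[root@master ~]# kubectl create deployment webcluster --image nginx --dry-run=client -o yaml > webcluster.yml

# Create resources from the YAML file

(after deleting the fields that are not needed)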

[root@master podsManager]# cat webcluster.yml

apiVersion: apps/v1

kind: Deployment

metadata:
  labels:
    app: webcluster
  name: webcluster
spec:
  replicas: 1
  selector:
    matchLabels:
      app: webcluster
  template:
    metadata:
      labels:
        app: webcluster
    spec:
      containers:
      - image: nginx
        name: nginx

# Define and create the Kubernetes resource from the YAML file

[root@master podsManager]# kubectl apply -f webcluster.yml

deployment.apps/webcluster created

# View the controller

[root@master podsManager]# kubectl get deployments.apps

NAME READY UP-TO-DATE AVAILABLE AGE

webcluster 1/1 1 1 6s

# Delete the resource

[root@master podsManager]# kubectl delete -f webcluster.yml

deployment.apps "webcluster" deleted

# Manage resource labels

[root@master podsManager]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx 1/1 Running 0 102s run=nginx

[root@master podsManager]# kubectl label pods nginx app=xxy

pod/nginx labeled

[root@master podsManager]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx 1/1 Running 0 2m47s app=xxy,run=nginx

# Change a label

[root@master podsManager]# kubectl label pods nginx app=webcluster --overwrite

pod/nginx labeled

[root@master podsManager]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx 1/1 Running 0 5m55s app=webcluster,run=nginx

# Remove a label: first create a Deployment with 2 replicas whose selector is app=webcluster

[root@master podsManager]# cat webcluster.yml

apiVersion: apps/v1

kind: Deployment

metadata:
  labels:
    app: webcluster
  name: webcluster
spec:
  replicas: 2
  selector:
    matchLabels:
      app: webcluster
  template:
    metadata:
      labels:
        app: webcluster
    spec:
      containers:
      - image: nginx
        name: nginx

[root@master podsManager]# kubectl apply -f webcluster.yml

deployment.apps/webcluster created

[root@master podsManager]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

webcluster-7c584f774b-7ncbj 1/1 Running 0 15s app=webcluster,pod-template-hash=7c584f774b

webcluster-7c584f774b-gxktm 1/1 Running 0 15s app=webcluster,pod-template-hash=7c584f774b

# Remove the app label from one pod

[root@master podsManager]# kubectl label pods webcluster-7c584f774b-7ncbj app-

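pod/webcluster-7c584f774b-7ncbj unlabeled

# The controller starts a new pod: the unlabeled pod no longer matches the app=webcluster selector, so the Deployment creates a replacement to get back to 2 matching pods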

[root@master podsManager]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

webcluster-7c584f774b-52bbq 1/1 Running 0 26s app=webcluster,pod-template-hash=7c584f774b

webcluster-7c584f774b-7ncbj 1/1 Running 0 4m28s pod-template-hash=7c584f774b

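webcluster-7c584f774b-gxktm 1/1 Running 0 4m28s app=webcluster,pod-template-hash=7c584f774b

4 Pod

4.1 What Is a Pod

- A Pod is the smallest deployable unit of computing that you can create and manage in Kubernetes.
- A Pod represents a process running in the cluster, and each pod has its own unique IP address.
- A pod is like a pea pod: it holds one or more containers (usually docker containers).
- The containers in a pod share the IPC, Network, and UTS namespaces.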
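Sharing the Network namespace means containers in the same Pod can reach each other on localhost. A minimal sketch of a two-container Pod that demonstrates this, assuming the nginx and busybox images are reachable through the Harbor mirror (busybox would need to be loaded and pushed the same way as nginx); the Pod name `shared-net-demo` and the `write_shared_net_pod` helper are hypothetical.

```shell
#!/bin/sh
# Write a two-container Pod manifest: "client" fetches the nginx page over localhost,
# which works only because both containers share the Pod's network namespace.
write_shared_net_pod() {
  cat > "${1:-shared-net-demo.yaml}" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: shared-net-demo
spec:
  containers:
  - name: web
    image: nginx
  - name: client
    image: busybox
    command: ["sh", "-c", "sleep 5; wget -qO- http://localhost:80 | head -n 4; sleep 3600"]
EOF
}

# Usage:
#   write_shared_net_pod && kubectl apply -f shared-net-demo.yaml
#   kubectl logs shared-net-demo -c client   # should show the start of the nginx welcome page
```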

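4.1.1 Creating standalone pods (not recommended for production)

Pros:

High flexibility:

- You can precisely control every Pod setting, including the container image, resource limits, environment variables, commands, and arguments, to meet specific application needs.

Convenient for learning and debugging:

- Creating Pods by hand is very helpful for learning how Kubernetes works, giving you a deep understanding of Pod structure and configuration. When debugging, you can observe and adjust Pod settings more directly.

Suited to special scenarios:

- In some special cases, such as one-off tasks, quick proofs of concept, or specific configurations in resource-constrained environments, creating Pods manually can be an effective approach.

Cons:

Hard to manage:

- Creating and maintaining a large number of Pods by hand becomes tedious and time-consuming, and automatic scaling and failure recovery are hard to achieve.

Lack of advanced features:

- You do not automatically get Kubernetes's advanced features such as automated deployment, rolling updates, and service discovery, which can make deploying and managing applications inefficient.

# List all pods (in the current namespace)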

[root@master podsManager]# kubectl get pods

No resources found in default namespace.

# Create a pod named timingy

[root@master podsManager]# kubectl run timingy --image nginx

pod/timingy created

[root@master podsManager]# kubectl get pods

NAME READY STATUS RESTARTS AGE

timingy 1/1 Running 0 5s#顯示pod的較為詳細的信息

[root@master podsManager]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

timingy 1/1 Running 0 14s 10.244.1.5 node1 <none> <none>

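4.1.2 Managing Pods with a controller (recommended)

High availability and reliability:

- Automatic failure recovery: if a Pod fails or is deleted, the controller automatically creates a new Pod to maintain the desired replica count. This keeps the application available and reduces service interruptions caused by the failure of a single Pod.
- Health checks and self-healing: you can configure health checks for the Pods (such as liveness and readiness probes). If a Pod is unhealthy, Kubernetes takes appropriate action, such as restarting its container or deleting and recreating the Pod, to keep the application running normally.

Scalability:

- Easy scaling: you can increase or decrease the number of Pods with a simple command or configuration change to meet different workloads. For example, scale out quickly during peak traffic to handle more requests, and scale in during quiet periods to save resources.
- Horizontal Pod Autoscaling (HPA): automatically adjusts the number of Pods based on metrics (such as CPU utilization, memory usage, or application-specific metrics), enabling dynamic resource allocation and cost optimization.

Version management and updates:

- Rolling updates: controllers such as Deployment can perform rolling updates that gradually replace old Pods with new ones, keeping the application available throughout the update. You can control the pace and strategy of the update to reduce the impact on users.
- Rollback: if an update causes problems, you can easily roll back to the previous stable version to keep the application stable and reliable.

Declarative configuration:

- Concise configuration: deployment requirements are defined in declarative YAML or JSON files, which are easy to understand, maintain, and version-control, and make team collaboration easier.
- Desired-state management: you only define the desired state of the application (such as the replica count and container image), and the controller automatically brings the actual state in line with it. You do not have to create and delete each Pod by hand.

Service discovery and load balancing:

- Automatic registration and discovery: a Kubernetes Service automatically discovers the Pods managed by a controller and routes traffic to them, so you do not need to configure a load balancer by hand.
- Traffic distribution: requests can be distributed across Pods according to different policies (such as round robin or random selection), improving performance and availability.

Consistency across environments:

- Consistent deployment: the same controllers and configuration can be used in development, testing, and production, so the application behaves the same in every environment. This reduces deployment differences and errors and makes development and operations more efficient.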

Example:

# Create a controller, which automatically runs a Pod

[root@master ~]# kubectl create deployment timingy --image nginx

deployment.apps/timingy created

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

timingy-5bb68ff8f9-swfjk 1/1 Running 0 22s

# Scale timingy out

[root@master ~]# kubectl scale deployment timingy --replicas 6

deployment.apps/timingy scaled

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

timingy-5bb68ff8f9-8gc4z 1/1 Running 0 4m15s

timingy-5bb68ff8f9-hvn2j 1/1 Running 0 4m15s

timingy-5bb68ff8f9-mr48h 1/1 Running 0 4m15s

timingy-5bb68ff8f9-nsf4g 1/1 Running 0 4m15s

timingy-5bb68ff8f9-pnmk2 1/1 Running 0 4m15s

timingy-5bb68ff8f9-swfjk 1/1 Running 0 5m20s

# Scale timingy in

[root@master ~]# kubectl scale deployment timingy --replicas 2

deployment.apps/timingy scaled

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

timingy-5bb68ff8f9-hvn2j 1/1 Running 0 5m5s

timingy-5bb68ff8f9-swfjk 1/1 Running 0 6m10s

4.1.3 Updating the application version

# Create Pods with a controller

[root@master ~]# kubectl create deployment timingy --image myapp:v1 --replicas 2

deployment.apps/timingy created

# Expose the port

[root@master ~]# kubectl expose deployment timingy --port 80 --target-port 80

service/timingy exposed

# Access the Service

[root@master ~]# curl 10.107.166.185

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@master ~]# curl 10.107.166.185

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

# View the revision history

[root@master ~]# kubectl rollout history deployment timingy

deployment.apps/timingy

REVISION CHANGE-CAUSE

1 <none>

# Update the controller's image version (container myapp to myapp:v2)

[root@master ~]# kubectl set image deployments/timingy myapp=myapp:v2

deployment.apps/timingy image updated

# View the revision history again

[root@master ~]# kubectl rollout history deployment timingy

deployment.apps/timingy

REVISION CHANGE-CAUSE

1 <none>

2 <none>

# Test access

[root@master ~]# curl 10.107.166.185

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

# Roll back to revision 1

[root@master ~]# kubectl rollout undo deployment timingy --to-revision 1

deployment.apps/timingy rolled back

[root@master ~]# curl 10.107.166.185

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

# That said, it is better to change the image version in the YAML file.

4.1.4 Deploying applications with YAML files

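4.1.4.1 Advantages of deploying applications with YAML files

Declarative configuration:

- Clear expression of the desired state: the application's deployment requirements, including replica count, container configuration, and network settings, are described declaratively. The configuration is easy to understand and maintain, and the application's expected state is easy to see.
- Repeatability and version control: configuration files can be version-controlled, which keeps deployments consistent across environments. You can easily roll back to an earlier version or reuse the same configuration in different environments.
- Team collaboration: configuration files are easy to share, review, and modify within a team, which improves the reliability and stability of deployments.

Flexibility and extensibility:

- Rich configuration options: YAML files can configure all kinds of Kubernetes resources in detail, such as Deployment, Service, ConfigMap, and Secret, and can be highly customized for an application's needs.
- Composition and extension: the configuration of several resources can be combined in one or more YAML files to build complex application architectures, and resources can easily be added or modified as requirements change.

Tool integration:

- CI/CD integration: YAML files can be integrated with continuous integration and continuous deployment (CI/CD) tools to automate deployment. For example, a code commit can automatically trigger a pipeline that uses the configuration files to deploy the application to different environments.
- Command-line support: the Kubernetes command-line tool kubectl supports YAML files well, making it easy to apply, update, and delete configuration. Other tools can also validate and analyze YAML files to make sure they are correct and secure.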

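4.1.4.2 Resource manifest parameters

| Parameter | Type | Description |
|---|---|---|
| apiVersion | String | Kubernetes API version; for a Pod this is v1. List the available versions with kubectl api-versions |
| kind | String | Type of resource the YAML file defines, e.g. Pod |
| metadata | Object | Metadata object; the key is always metadata |
| metadata.name | String | Name of the object, chosen by us, e.g. the Pod name |
| metadata.namespace | String | Namespace of the object, chosen by us |
| spec | Object | Detailed definition of the object; the key is always spec |
| spec.containers[] | List | List of containers in the spec |
| spec.containers[].name | String | Container name |
| spec.containers[].image | String | Image to use |
| spec.containers[].imagePullPolicy | String | Image pull policy, one of: (1) Always: always try to pull the image; (2) IfNotPresent: use the local image if present, otherwise pull; (3) Never: only use the local image |
| spec.containers[].command[] | List | Command run when the container starts; if not set, the command built into the image is used |
| spec.containers[].args[] | List | Arguments for the container command; several can be given |
| spec.containers[].workingDir | String | Working directory of the container |
| spec.containers[].volumeMounts[] | List | Volume mounts inside the container |
| spec.containers[].volumeMounts[].name | String | Name of the volume to mount |
| spec.containers[].volumeMounts[].mountPath | String | Path at which the volume is mounted in the container |
| spec.containers[].volumeMounts[].readOnly | Boolean | Read/write mode of the mount, true or false; the default is read-write (false) |
| spec.containers[].ports[] | List | Ports used by the container |
| spec.containers[].ports[].name | String | Port name |
| spec.containers[].ports[].containerPort | Integer | Port number the container listens on |
| spec.containers[].ports[].hostPort | Integer | Port to open on the container's host; not set by default. When set, one host cannot run two replicas of the container, because their host ports would conflict |
| spec.containers[].ports[].protocol | String | Port protocol, TCP or UDP; default TCP |
| spec.containers[].env[] | List | Environment variables to set before the container runs |
| spec.containers[].env[].name | String | Environment variable name |
| spec.containers[].env[].value | String | Environment variable value |
| spec.containers[].resources | Object | Resource limits and requests for the container |
| spec.containers[].resources.limits | Object | Upper limits on the resources the container may use at run time |
| spec.containers[].resources.limits.cpu | String | CPU limit in cores; 1 = 1000m |
| spec.containers[].resources.limits.memory | String | Memory limit, e.g. in Mi or Gi |
| spec.containers[].resources.requests | Object | Resource requests used when scheduling and starting the container |
| spec.containers[].resources.requests.cpu | String | CPU request in cores; the amount reserved for the container when it starts |
| spec.containers[].resources.requests.memory | String | Memory request, e.g. in Mi or Gi; the amount reserved for the container when it starts |
| spec.restartPolicy | String | Pod restart policy; default Always. (1) Always: whenever a container terminates, however it terminated, the kubelet restarts it. (2) OnFailure: the kubelet restarts the container only if it exits with a non-zero code; a container that exits normally (code 0) is not restarted. (3) Never: after the container terminates, the kubelet reports the exit code to the control plane and does not restart it |
| spec.nodeSelector | Object | Node label filter, given as key: value pairs |
| spec.imagePullSecrets | List | Secrets used when pulling images, given as entries of the form name: secret-name |
| spec.hostNetwork | Boolean | Whether to use the host network; default false. true means the Pod uses the host's network instead of the container network bridge, so a second replica listening on the same ports cannot start on the same host |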
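The units in the table can be checked with a quick calculation: a CPU value of `500m` is half a core, and `M` and `Mi` are different memory units (powers of 1000 versus powers of 1024). The Python sketch below is a simplified parser, assumed for illustration, that handles only a few suffixes (`m`, `k`/`M`/`G`, `Ki`/`Mi`/`Gi`); the function name `parse_quantity` is hypothetical, and Kubernetes itself accepts more quantity forms than this.

```python
from fractions import Fraction

# Multipliers for a few common suffixes (simplified; not the full Kubernetes grammar).
SUFFIXES = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3,   # binary units (memory)
    "k": 1000, "M": 1000**2, "G": 1000**3,       # decimal units (memory)
    "m": Fraction(1, 1000),                        # milli, e.g. CPU "500m"
}

def parse_quantity(text: str) -> Fraction:
    """Return a quantity string as an exact number of base units (cores or bytes)."""
    # Check two-letter suffixes first so "Mi" is not mistaken for "M".
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if text.endswith(suffix):
            return Fraction(text[: -len(suffix)]) * SUFFIXES[suffix]
    return Fraction(text)  # plain number, e.g. CPU "1" or "0.5"

print(parse_quantity("500m"))                           # 1/2 (half a core)
print(parse_quantity("1") == parse_quantity("1000m"))   # True
print(parse_quantity("100M"), parse_quantity("100Mi"))  # 100000000 104857600
```

Exact fractions are used so that values like `500m` compare equal to `0.5` without floating-point rounding.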

4.1.4.3 Getting help on resource fields

kubectl explain pod.spec.containers

4.1.4.4 Examples

4.1.4.4.1 Example 1: Running a simple single-container Pod

[root@k8s-master ~]# vim pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: timing        # Pod label
  name: timinglee      # Pod name
spec:
  containers:
  - image: myapp:v1    # container image
    name: timinglee    # container name

4.1.4.4.2 Example 2: Running a Pod with multiple containers

Note: containers running in the same Pod share resources, so they can also interfere with each other when they use the same resource, such as a port.

# An example of port interference:

[root@k8s-master ~]# vim pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: timing
  name: timinglee
spec:
  containers:
  - image: nginx:latest
    name: web1
  - image: nginx:latest
    name: web2

[root@k8s-master ~]# kubectl apply -f pod.yml

pod/timinglee created

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

timinglee 1/2 Error 1 (14s ago) 18s

# View the logs of the web2 container

[root@k8s-master ~]# kubectl logs timinglee web2

2024/08/31 12:43:20 [emerg] 1#1: bind() to [::]:80 failed (98: Address already in use)

nginx: [emerg] bind() to [::]:80 failed (98: Address already in use)

2024/08/31 12:43:20 [notice] 1#1: try again to bind() after 500ms

2024/08/31 12:43:20 [emerg] 1#1: still could not bind()

nginx: [emerg] still could not bind()

Note: when running multiple containers in one Pod, make sure they cannot interfere with each other.

[root@k8s-master ~]# vim pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: timing
  name: timinglee
spec:
  containers:
  - image: nginx:latest
    name: web1
  - image: busybox:latest
    name: busybox
    command: ["/bin/sh","-c","sleep 1000000"]

[root@k8s-master ~]# kubectl apply -f pod.yml

pod/timinglee created

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

timinglee 2/2 Running 0 19s

4.1.4.4.3 Example 3: Understanding network sharing inside a Pod

Containers in the same Pod share one network:

[root@master podsManager]# cat pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: timinglee
  name: test
spec:
  containers:
  - image: myapp:v1
    name: myapp1
  - image: busyboxplus:latest
    name: busyboxplus
    command: ["/bin/sh","-c","sleep 1000000"]

[root@master podsManager]# kubectl apply -f pod.yml

pod/test created

[root@master podsManager]# kubectl get pods

NAME READY STATUS RESTARTS AGE

test 2/2 Running 0 18s

[root@master podsManager]# kubectl exec test -c busyboxplus -- curl -s localhost

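Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

The busyboxplus container reaches the myapp web server through localhost, which shows that the containers in one Pod share the same network.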
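Sharing one network namespace behaves like two processes on one host: one can reach the other over `localhost`, and only one of them can listen on a given port, which is why the two nginx containers in Example 2 clashed on port 80. The Python sketch below reproduces both effects with plain `socket` calls on the local machine; it only illustrates ordinary socket behavior and involves no Kubernetes API.

```python
import errno
import socket

# "Container 1": a server listening on localhost (port 0 lets the OS pick a free port).
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
port = server.getsockname()[1]

# "Container 2": a client reaches the server through localhost,
# like the busyboxplus container running `curl localhost` in Example 3.
client = socket.create_connection(("127.0.0.1", port))
conn, _ = server.accept()
client.sendall(b"ping")
received = conn.recv(4)

# A second server on the same port fails, like web2 in Example 2
# ("bind() ... failed (98: Address already in use)").
clash = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
try:
    clash.bind(("127.0.0.1", port))
    bind_error = None
except OSError as exc:
    bind_error = exc.errno
finally:
    for s in (clash, client, conn, server):
        s.close()

print(received, bind_error == errno.EADDRINUSE)
```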

4.1.4.4.4 Example 4: Port mapping

[root@master podsManager]# cat 1-pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: timinglee
  name: test
spec:
  containers:
  - image: myapp:v1
    name: myapp1
    ports:
    - name: http
      containerPort: 80
      hostPort: 80        # map the port onto the real NIC IP of the node the Pod is scheduled to
      protocol: TCP

[root@master podsManager]# kubectl apply -f 1-pod.yml

pod/test created

# Test

[root@master podsManager]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test 1/1 Running 0 69s 10.244.104.48 node2 <none> <none>

[root@master podsManager]# curl node2

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

4.1.4.4.5 Example 5: Setting environment variables

[root@master podsManager]# cat 2-pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: timinglee
  name: test
spec:
  containers:
  - image: busybox:latest
    name: busybox
    command: ["/bin/sh","-c","echo $NAME;sleep 3000000"]
    env:
    - name: NAME
      value: timinglee

[root@master podsManager]# kubectl apply -f 2-pod.yml

pod/test created

[root@master podsManager]# kubectl logs pods/test busybox

timinglee

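4.1.4.4.6 Example 6: Resource limits

Resource settings determine a Pod's QoS class, which sets its priority when node resources run short (for example, which Pods are evicted first). From highest to lowest: Guaranteed > Burstable > BestEffort.

QoS stands for Quality of Service.

| Resource settings | QoS class |
|---|---|
| No requests or limits set | BestEffort |
| Requests or limits set, but requests and limits differ | Burstable |
| Requests and limits set and equal for CPU and memory in every container | Guaranteed |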
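The table can be expressed as a small function. This is a simplified Python model, not Kubernetes code: `qos_class` is a hypothetical name, each container is assumed to be a dict with optional `requests` and `limits` maps, and quantities are compared as plain strings (so `0.5` and `500m` would count as different). It does follow the real rule that an unset request defaults to its limit, so a container with only limits still counts as Guaranteed.

```python
def effective_requests(container: dict) -> dict:
    """Requests, where an unset request defaults to the container's limit."""
    requests = dict(container.get("requests", {}))
    for resource, value in container.get("limits", {}).items():
        requests.setdefault(resource, value)
    return requests

def qos_class(containers: list) -> str:
    """Classify a Pod from its containers' requests/limits (simplified model)."""
    # BestEffort: no container sets any request or limit.
    if all(not c.get("requests") and not c.get("limits") for c in containers):
        return "BestEffort"
    # Guaranteed: every container has CPU and memory limits equal to its requests.
    for c in containers:
        limits, requests = c.get("limits", {}), effective_requests(c)
        for resource in ("cpu", "memory"):
            if resource not in limits or requests.get(resource) != limits[resource]:
                return "Burstable"
    return "Guaranteed"

# The container from Example 6: requests equal limits for both CPU and memory.
example6 = {"requests": {"cpu": "500m", "memory": "100M"},
            "limits": {"cpu": "500m", "memory": "100M"}}
print(qos_class([example6]))                       # Guaranteed
print(qos_class([{"requests": {"cpu": "100m"}}]))  # Burstable
print(qos_class([{}]))                             # BestEffort
```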

[root@k8s-master ~]# vim pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: timinglee
  name: test
spec:
  containers:
  - image: myapp:v1
    name: myapp
    resources:
      limits:          # maximum resources the Pod may use
        cpu: 500m
        memory: 100M
      requests:        # resources the Pod expects to use; must not exceed limits
        cpu: 500m
        memory: 100M

[root@k8s-master ~]# kubectl apply -f pod.yml

pod/test created

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

test 1/1 Running 0 3s

[root@k8s-master ~]# kubectl describe pods test

Limits:
  cpu:     500m
  memory:  100M
Requests:
  cpu:     500m
  memory:  100M

QoS Class: Guaranteed

4.1.4.4.7 Example 7: Container restart management

[root@k8s-master ~]# vim pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: timinglee
  name: test
spec:
  restartPolicy: Always
  containers:
  - image: myapp:v1
    name: myapp

[root@k8s-master ~]# kubectl apply -f pod.yml

pod/test created

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test 1/1 Running 0 6s 10.244.2.3 k8s-node2 <none> <none>

# On k8s-node2, remove the Pod's container directly; with restartPolicy: Always, the kubelet starts it again

[root@k8s-node2 ~]# docker rm -f ccac1d64ea81

4.1.4.4.8 Example 8: Choosing the node to run on

[root@k8s-master ~]# vim pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: timinglee
  name: test
spec:
  nodeSelector:
    kubernetes.io/hostname: k8s-node1
  restartPolicy: Always
  containers:
  - image: myapp:v1
    name: myapp

[root@k8s-master ~]# kubectl apply -f pod.yml

pod/test created

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test 1/1 Running 0 21s 10.244.1.5 k8s-node1 <none> <none>

4.1.4.4.9 Example 9: Sharing the host network

[root@k8s-master ~]# vim pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: timinglee
  name: test
spec:
  hostNetwork: true     # share the host's network
  restartPolicy: Always
  containers:
  - image: busybox:latest
    name: busybox
    command: ["/bin/sh","-c","sleep 100000"]

[root@k8s-master ~]# kubectl apply -f pod.yml

pod/test created

[root@k8s-master ~]# kubectl exec -it pods/test -c busybox -- /bin/sh

/ # ifconfig

cni0      Link encap:Ethernet  HWaddr E6:D4:AA:81:12:B4
          inet addr:10.244.2.1  Bcast:10.244.2.255  Mask:255.255.255.0
          inet6 addr: fe80::e4d4:aaff:fe81:12b4/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1450  Metric:1
          RX packets:6259 errors:0 dropped:0 overruns:0 frame:0
          TX packets:6495 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:506704 (494.8 KiB)  TX bytes:625439 (610.7 KiB)

docker0   Link encap:Ethernet  HWaddr 02:42:99:4A:30:DC
          inet addr:172.17.0.1  Bcast:172.17.255.255  Mask:255.255.0.0
          UP BROADCAST MULTICAST  MTU:1500  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

eth0      Link encap:Ethernet  HWaddr 00:0C:29:6A:A8:61
          inet addr:172.25.254.20  Bcast:172.25.254.255  Mask:255.255.255.0
          inet6 addr: fe80::8ff3:f39c:dc0c:1f0e/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:27858 errors:0 dropped:0 overruns:0 frame:0
          TX packets:14454 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:26591259 (25.3 MiB)  TX bytes:1756895 (1.6 MiB)

flannel.1 Link encap:Ethernet  HWaddr EA:36:60:20:12:05
          inet addr:10.244.2.0  Bcast:0.0.0.0  Mask:255.255.255.255
          inet6 addr: fe80::e836:60ff:fe20:1205/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1450  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:40 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:163 errors:0 dropped:0 overruns:0 frame:0
          TX packets:163 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:13630 (13.3 KiB)  TX bytes:13630 (13.3 KiB)

veth9a516531 Link encap:Ethernet  HWaddr 7A:92:08:90:DE:B2
          inet6 addr: fe80::7892:8ff:fe90:deb2/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1450  Metric:1
          RX packets:6236 errors:0 dropped:0 overruns:0 frame:0
          TX packets:6476 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:592532 (578.6 KiB)  TX bytes:622765 (608.1 KiB)

/ # exit

By default, every Kubernetes Pod has its own network namespace (its own IP address, network interfaces, and ports), isolated from the network of the host (the server running Kubernetes). With hostNetwork: true, the Pod gives up its own network and uses the host's network namespace directly: the containers in the Pod and the host share the same interfaces, IP addresses, and ports. That is why ifconfig above lists the node's own interfaces, such as eth0 (172.25.254.20), cni0, and flannel.1.

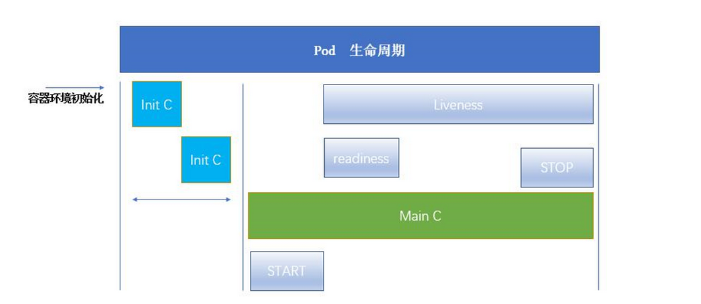

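4.2 Pod lifecycle

4.2.1 Init containers

Official documentation: Pod | Kubernetes

- A Pod can contain multiple containers in which the application runs, and it can also have one or more init containers that start before the application containers.
- Init containers are very similar to regular containers, except for two points:
  - They always run to completion.
  - Init containers do not support readiness probes, because they must finish before the Pod can become ready. Each init container must succeed before the next one can run.
- If an init container fails, Kubernetes keeps restarting it until it succeeds. However, if the Pod's restartPolicy is Never, it is not restarted and the Pod is treated as failed.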

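4.2.1.1 What init containers are for

- Init containers can contain utilities or custom setup code that are not present in the application image.
- Init containers can run these tools safely, so the tools do not make the application image less secure.
- The creators and deployers of the application image can work independently, without having to build a single combined application image together.
- Init containers can run with a different view of the filesystem than the application containers in the Pod, so they can be given access to Secrets that the application containers cannot access.
- Because init containers must finish before the application containers start, they provide a mechanism to block or delay application container startup until a set of preconditions is met. Once the preconditions are met, all application containers in the Pod start in parallel.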

4.2.1.2 Init container example

[root@k8s-master ~]# vim pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    name: initpod
  name: initpod
spec:
  containers:
  - image: myapp:v1
    name: myapp
  initContainers:
  - name: init-myservice
    image: busybox
    command: ["sh","-c","until test -e /testfile;do echo wating for myservice; sleep 2;done"]

[root@k8s-master ~]# kubectl apply -f pod.yml

pod/initpod created

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

initpod 0/1 Init:0/1 0 3s

[root@k8s-master ~]# kubectl logs pods/initpod init-myservice

wating for myservice

wating for myservice

wating for myservice

wating for myservice

wating for myservice

wating for myservice

# Create /testfile inside the init container so its wait loop ends

[root@k8s-master ~]# kubectl exec pods/initpod -c init-myservice -- /bin/sh -c "touch /testfile"

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

initpod 1/1 Running 0 62s

4.2.2 Probes

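A probe is a periodic diagnostic that the kubelet performs on a container, using one of three handlers:

- ExecAction: runs a specified command inside the container. The diagnostic succeeds if the command exits with status code 0.
- TCPSocketAction: performs a TCP check against the container's IP address on a specified port. The diagnostic succeeds if the port is open.
- HTTPGetAction: performs an HTTP GET request against the container's IP address on a specified port and path. The diagnostic succeeds if the response status code is greater than or equal to 200 and less than 400.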
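The three success rules can be written down directly. These Python helpers are an illustrative sketch with hypothetical names that apply the same criteria locally; they are not how the kubelet is implemented, and real probes also honor settings such as `timeoutSeconds` and `periodSeconds`.

```python
import socket
import subprocess
import sys

def exec_probe(cmd: list) -> bool:
    """ExecAction rule: success if the command exits with status 0."""
    return subprocess.run(cmd, check=False).returncode == 0

def tcp_probe(host: str, port: int, timeout: float = 1.0) -> bool:
    """TCPSocketAction rule: success if a TCP connection can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False

def http_status_ok(status: int) -> bool:
    """HTTPGetAction rule: success if 200 <= status < 400."""
    return 200 <= status < 400

print(exec_probe([sys.executable, "-c", "raise SystemExit(0)"]))  # True
print(http_status_ok(404))  # False: the readiness example later fails with 404
```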

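Each probe has one of three results:

- Success: the container passed the diagnostic.
- Failure: the container failed the diagnostic.
- Unknown: the diagnostic itself failed, so no action is taken.

The kubelet can optionally run three kinds of probes on a container and react to their results:

- livenessProbe: indicates whether the container is running. If the liveness probe fails, the kubelet kills the container, and the container is subject to its restart policy. If a container does not provide a liveness probe, the default state is Success.
- readinessProbe: indicates whether the container is ready to serve requests. If the readiness probe fails, the endpoints controller removes the Pod's IP address from the endpoints of all Services that match the Pod. The readiness state before the initial delay defaults to Failure. If a container does not provide a readiness probe, the default state is Success.
- startupProbe: indicates whether the application in the container has started. If a startup probe is provided, all other probes are disabled until it succeeds. If the startup probe fails, the kubelet kills the container, and the container is restarted according to its restart policy. If a container does not provide a startup probe, the default state is Success.

Differences between ReadinessProbe and LivenessProbe:

- When a ReadinessProbe fails, the Pod's IP:Port is removed from the corresponding Endpoints list.
- When a LivenessProbe fails, the container is killed, and the Pod's restart policy determines what happens next.

Differences between StartupProbe and ReadinessProbe/LivenessProbe:

- If all three probes are configured, the StartupProbe runs first and the other two are disabled until the Pod meets the StartupProbe's conditions; then the other two start. If the StartupProbe's conditions are not met, the container is restarted according to the rules.
- The other two probes keep probing, as configured, from container start until the container dies, whereas the StartupProbe stops once it has succeeded after the container starts.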

4.2.2.1 Probe examples

4.2.2.1.1 Liveness probe example:

[root@k8s-master ~]# vim pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    name: liveness
  name: liveness
spec:
  containers:
  - image: myapp:v1
    name: myapp
    livenessProbe:
      tcpSocket:               # check that the port is open
        port: 8080
      initialDelaySeconds: 3   # seconds to wait after the container starts before probing; default 0
      periodSeconds: 1         # interval between probes; default 10s
      timeoutSeconds: 1        # how long to wait for a probe response; default 1s

# Test (myapp listens on port 80, so probing port 8080 fails and the container keeps being restarted):

[root@k8s-master ~]# kubectl apply -f pod.yml

pod/liveness created

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

liveness 0/1 CrashLoopBackOff 2 (7s ago) 22s

[root@k8s-master ~]# kubectl describe pods

Warning Unhealthy 1s (x9 over 13s) kubelet Liveness probe failed: dial tcp 10.244.2.6:8080: connect: connection refused

4.2.2.1.2 Readiness probe example:

[root@k8s-master ~]# vim pod.yml

apiVersion: v1
kind: Pod
metadata:
  labels:
    name: readiness
  name: readiness
spec:
  containers:
  - image: myapp:v1
    name: myapp
    readinessProbe:
      httpGet:
        path: /test.html
        port: 80
      initialDelaySeconds: 1
      periodSeconds: 3
      timeoutSeconds: 1

# Test (/test.html does not exist yet, so the probe gets a 404):

[root@k8s-master ~]# kubectl expose pod readiness --port 80 --target-port 80

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

readiness 0/1 Running 0 5m25s

[root@k8s-master ~]# kubectl describe pods readiness

Warning Unhealthy 26s (x66 over 5m43s) kubelet Readiness probe failed: HTTP probe failed with statuscode: 404

[root@k8s-master ~]# kubectl describe services readiness

Name: readiness

Namespace: default

Labels: name=readiness

Annotations: <none>

Selector: name=readiness

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.100.171.244

IPs: 10.100.171.244

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: # empty: the readiness probe has not passed, so the Pod is not exposed

Session Affinity: None

Events: <none>

# Create the page the probe checks for

[root@k8s-master ~]# kubectl exec pods/readiness -c myapp -- /bin/sh -c "echo test > /usr/share/nginx/html/test.html"

[root@k8s-master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

readiness 1/1 Running 0 7m49s

[root@k8s-master ~]# kubectl describe services readiness

Name: readiness

Namespace: default

Labels: name=readiness

Annotations: <none>

Selector: name=readiness

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.100.171.244

IPs: 10.100.171.244

Port: <unset> 80/TCP

TargetPort: 80/TCP

Endpoints: 10.244.2.8:80 # the probe now passes, so the Pod is added as an endpoint

Session Affinity: None

Events: <none>
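The effect seen in this example, an empty `Endpoints` line until the probe passes, can be modeled in a few lines. The Python below is a toy model with hypothetical names (`Pod`, `endpoints`), not the real endpoints controller: a Pod's `IP:port` is listed only if the Pod matches the Service selector and is ready. The IP address is taken from the output above.

```python
from dataclasses import dataclass

@dataclass
class Pod:
    labels: dict
    ip: str
    ready: bool

def endpoints(selector: dict, pods: list, port: int) -> list:
    """IP:port of every Pod that matches the selector and is ready."""
    return [f"{p.ip}:{port}" for p in pods
            if p.ready and all(p.labels.get(k) == v for k, v in selector.items())]

pod = Pod(labels={"name": "readiness"}, ip="10.244.2.8", ready=False)
print(endpoints({"name": "readiness"}, [pod], 80))  # [] while the probe fails
pod.ready = True                                    # test.html created, probe passes
print(endpoints({"name": "readiness"}, [pod], 80))  # ['10.244.2.8:80']
```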

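5 Controllers

5.1 What is a controller

Official documentation:

Workload Management | Kubernetes

A controller is another way to manage Pods:

- Standalone Pod: not recreated after the Pod exits or shuts down unexpectedly.
- Controller-managed Pods: for as long as the controller exists, it maintains the desired number of Pod replicas.

A Pod controller is a management layer for Pods. With a Pod controller, you only tell it how many Pods of what kind you want; it creates Pods that meet those conditions and keeps every Pod in the desired target state. If a Pod fails while running, the controller rearranges Pods according to the specified policy.

When a controller is created, its desired state is stored in etcd through the API server. The controller (running in kube-controller-manager) watches that desired state through the API server, compares it with the current state of the Pods, and immediately acts to reconcile any difference.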

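5.2 Common controller types

| Controller | Purpose |
|---|---|
| Replication Controller | The original Pod controller; deprecated and replaced by ReplicaSet |
| ReplicaSet | Ensures that a specified number of Pod replicas are running at any time |
| Deployment | Provides declarative updates for Pods and ReplicaSets |
| DaemonSet | Ensures that all (or selected) nodes run one copy of a Pod |
| StatefulSet | The workload API object used to manage stateful applications |
| Job | Runs batch tasks that execute once, ensuring that one or more Pods of the task terminate successfully |
| CronJob | Creates Jobs on a time-based schedule |
| HPA (Horizontal Pod Autoscaler) | Automatically adjusts the number of Pods in a workload based on resource utilization, scaling Pods horizontally |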

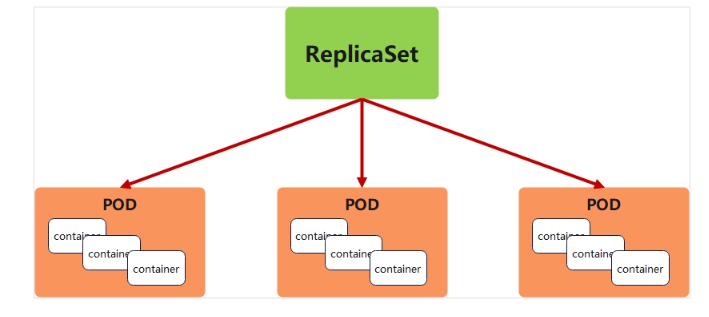

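5.3 ReplicaSet controller

5.3.1 ReplicaSet features

- ReplicaSet is the next-generation Replication Controller and is the officially recommended one.
- The only difference between ReplicaSet and Replication Controller is selector support: ReplicaSet supports the newer set-based selector requirements.
- A ReplicaSet ensures that a specified number of Pod replicas are running at any time.
- Although ReplicaSets can be used on their own, today they are mainly used by Deployments as the mechanism for orchestrating Pod creation, deletion, and updates.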
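To illustrate the difference, the Python sketch below matches a Pod's labels against both equality-based `matchLabels` and set-based `matchExpressions` with the operators `In`, `NotIn`, `Exists` and `DoesNotExist`. The function `matches` is a hypothetical helper that mirrors the documented semantics in simplified form; all requirements must hold at once, and unknown operators are not handled.

```python
from typing import Optional

def matches(labels: dict, match_labels: Optional[dict] = None,
            match_expressions: Optional[list] = None) -> bool:
    """True if `labels` satisfy every matchLabels entry and every expression."""
    for key, value in (match_labels or {}).items():      # equality-based
        if labels.get(key) != value:
            return False
    for expr in match_expressions or []:                  # set-based
        key, op = expr["key"], expr["operator"]
        values = expr.get("values", [])
        if op == "In" and labels.get(key) not in values:
            return False
        if op == "NotIn" and key in labels and labels[key] in values:
            return False
        if op == "Exists" and key not in labels:
            return False
        if op == "DoesNotExist" and key in labels:
            return False
    return True

in_expr = [{"key": "app", "operator": "In", "values": ["myapp", "nginx"]}]
print(matches({"app": "nginx"}, match_expressions=in_expr))      # True
print(matches({"app": "timinglee"}, match_expressions=in_expr))  # False
```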

5.3.2 ReplicaSet parameters

| Parameter | Field type | Description |
|---|---|---|
| spec | Object | Detailed definition of the object; the key is always spec |
| spec.replicas | Integer | Number of Pods to maintain |
| spec.selector | Object | Label query over Pods; the Pods it matches are counted against replicas |
| spec.selector.matchLabels | Object (map) | Label names and values the selector matches, given as key: value |
| spec.template | Object | Description of the Pods to create, such as labels and container information |
| spec.template.metadata | Object | Pod metadata |
| spec.template.metadata.labels | Object (map) | Pod labels |
| spec.template.spec | Object | Detailed Pod definition |
| spec.template.spec.containers | List | List of containers in the Pod |
| spec.template.spec.containers.name | String | Container name |
| spec.template.spec.containers.image | String | Container image |

# Generate a YAML file (a Deployment skeleton; change kind to ReplicaSet while editing)

[root@k8s-master ~]# kubectl create deployment replicaset --image myapp:v1 --dry-run=client -o yaml > replicaset.yml

[root@k8s-master ~]# vim replicaset.yml

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: replicaset       # ReplicaSet name; must be lowercase, uppercase letters cause an error
spec:
  replicas: 2            # maintain 2 Pods
  selector:              # how Pods are matched
    matchLabels:         # match by labels
      app: myapp         # match Pods labeled app=myapp
  template:              # template used to create Pod replicas when there are too few
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - image: myapp:v1
        name: myapp

[root@k8s-master ~]# kubectl apply -f replicaset.yml

replicaset.apps/replicaset created

[root@k8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

replicaset-l4xnr 1/1 Running 0 96s app=myapp

replicaset-t2s5p 1/1 Running 0 96s app=myapp

# A ReplicaSet matches Pods by label: change the label of one Pod

[root@k8s-master ~]# kubectl label pod replicaset-l4xnr app=timinglee --overwrite

pod/replicaset-l4xnr labeled

[root@k8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

replicaset-gd5fh 1/1 Running 0 2s app=myapp #新開啟的pod

replicaset-l4xnr 1/1 Running 0 3m19s app=timinglee

replicaset-t2s5p 1/1 Running 0 3m19s app=myapp

# Restore the label

[root@k8s2 pod]# kubectl label pod replicaset-example-q2sq9 app=nginx --overwrite

[root@k8s2 pod]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

replicaset-example-q2sq9 1/1 Running 0 3m14s app=nginx

replicaset-example-th24v 1/1 Running 0 3m14s app=nginx

replicaset-example-w7zpw 1/1 Running 0 3m14s app=nginx

# The ReplicaSet keeps the replica count under control, so Pods self-heal

[root@k8s-master ~]# kubectl delete pods replicaset-t2s5p

pod "replicaset-t2s5p" deleted

[root@k8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

replicaset-l4xnr 1/1 Running 0 5m43s app=myapp

replicaset-nxmr9 1/1 Running 0 15s app=myapp

Clean up resources:

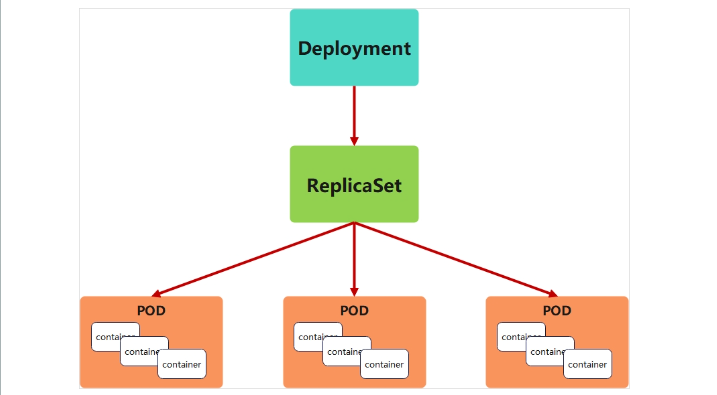

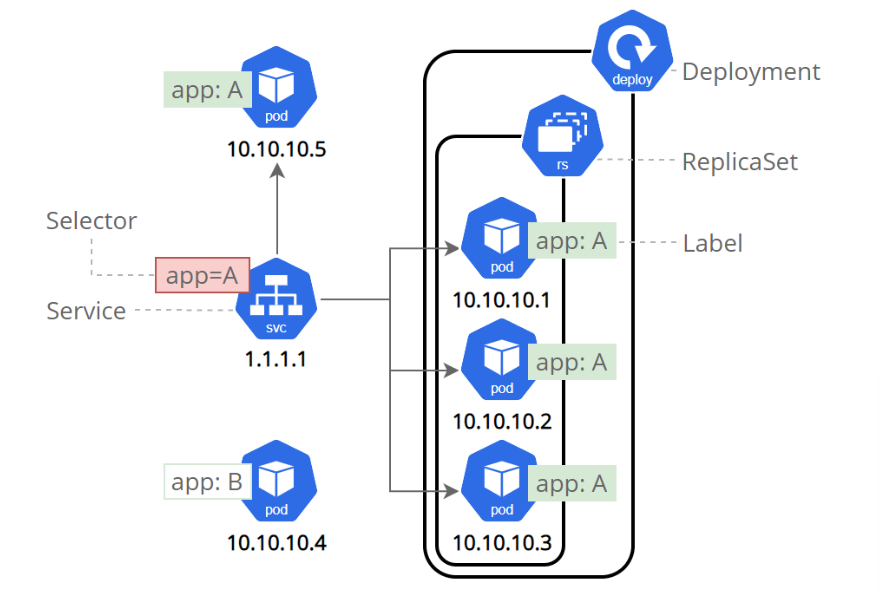

[root@k8s2 pod]# kubectl delete -f rs-example.yml

5.4 Deployment controller

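5.4.1 Deployment controller features

- To better solve service orchestration problems, Kubernetes introduced the Deployment controller in version 1.2.
- A Deployment does not manage Pods directly; it manages them indirectly through ReplicaSets.
- The Deployment manages ReplicaSets, and each ReplicaSet manages Pods.
- A Deployment provides a declarative way to define Pods and ReplicaSets.
- Within a Deployment, each ReplicaSet corresponds to one version.

Typical use cases:

- Creating Pods and ReplicaSets
- Rolling updates and rollbacks
- Scaling out and in
- Pausing and resuming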

5.4.2 Deployment controller example

# Generate a YAML file

[root@k8s-master ~]# kubectl create deployment deployment --image myapp:v1 --dry-run=client -o yaml > deployment.yml

[root@k8s-master ~]# vim deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment
spec:
  replicas: 4
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - image: myapp:v1
        name: myapp

# Create the Pods

[root@k8s-master ~]# kubectl apply -f deployment.yml

deployment.apps/deployment created

# View Pod information

[root@k8s-master ~]# kubectl get pods --show-labels

NAME READY STATUS RESTARTS AGE LABELS

deployment-5d886954d4-2ckqw 1/1 Running 0 23s app=myapp,pod-template-hash=5d886954d4

deployment-5d886954d4-m8gpd 1/1 Running 0 23s app=myapp,pod-template-hash=5d886954d4

deployment-5d886954d4-s7pws 1/1 Running 0 23s app=myapp,pod-template-hash=5d886954d4

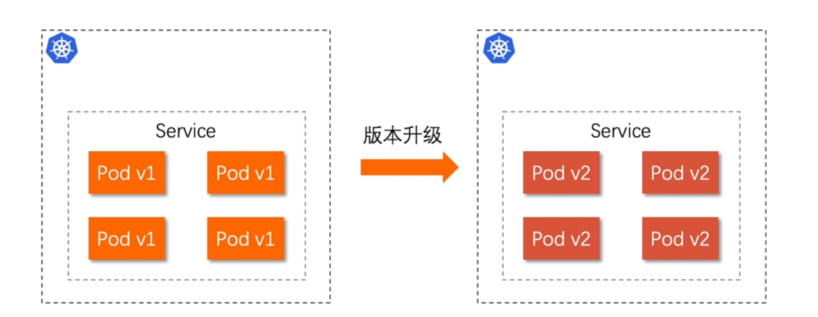

deployment-5d886954d4-wqnvv 1/1 Running 0 23s app=myapp,pod-template-hash=5d886954d45.4.2.1 版本迭代

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-5d886954d4-2ckqw 1/1 Running 0 2m40s 10.244.2.14 k8s-node2 <none> <none>

deployment-5d886954d4-m8gpd 1/1 Running 0 2m40s 10.244.1.17 k8s-node1 <none> <none>

deployment-5d886954d4-s7pws 1/1 Running 0 2m40s 10.244.1.16 k8s-node1 <none> <none>

deployment-5d886954d4-wqnvv 1/1 Running 0 2m40s 10.244.2.15 k8s-node2 <none> <none>

# The Pods run version v1

[root@k8s-master ~]# curl 10.244.2.14

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

[root@k8s-master ~]# kubectl describe deployments.apps deployment

Name: deployment

Namespace: default

CreationTimestamp: Sun, 01 Sep 2024 23:19:10 +0800

Labels: <none>

Annotations: deployment.kubernetes.io/revision: 1

Selector: app=myapp

Replicas: 4 desired | 4 updated | 4 total | 4 available | 0 unavailable

StrategyType: RollingUpdate

MinReadySeconds: 0

RollingUpdateStrategy: 25% max unavailable, 25% max surge # by default, about 25% of the Pods are replaced at a time

# Update the container version

[root@k8s-master ~]# vim deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment
spec:
  minReadySeconds: 5     # a new Pod must stay ready for 5 seconds before it counts as available
  replicas: 4
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - image: myapp:v2  # update to version 2
        name: myapp

[root@k8s-master ~]# kubectl apply -f deployment.yml

# Watch the update

[root@k8s-master ~]# watch -n1 kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE

deployment-5d886954d4-8kb28 1/1 Running 0 48s

deployment-5d886954d4-8s4h8 1/1 Running 0 49s

deployment-5d886954d4-rclkp 1/1 Running 0 50s

deployment-5d886954d4-tt2hz 1/1 Running 0 50s

deployment-7f4786db9c-g796x 0/1 Pending 0 0s

# Check the result of the update

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-7f4786db9c-967fk 1/1 Running 0 10s 10.244.1.26 k8s-node1 <none> <none>

deployment-7f4786db9c-cvb9k 1/1 Running 0 10s 10.244.2.24 k8s-node2 <none> <none>

deployment-7f4786db9c-kgss4 1/1 Running 0 9s 10.244.1.27 k8s-node1 <none> <none>

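deployment-7f4786db9c-qts8c 1/1 Running 0 9s 10.244.2.25 k8s-node2 <none> <none>

[root@k8s-master ~]# curl 10.244.1.26

Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>

Note:

An update creates a new ReplicaSet for the new version. The new ReplicaSet creates new Pods while the old ReplicaSet is scaled down; the old ReplicaSet is kept with 0 replicas so that it can be reused for a rollback.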
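The `25% max unavailable, 25% max surge` line in the describe output can be turned into concrete Pod counts. In Kubernetes, a percentage maxSurge is rounded up and a percentage maxUnavailable is rounded down; the Python helper below (`rollout_bounds`, a hypothetical name) applies that rule to return the largest number of Pods allowed during an update and the smallest number that must stay available.

```python
def resolve(value, replicas: int, round_up: bool) -> int:
    """Turn an int or a percentage string such as "25%" into a Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])       # exact integer arithmetic
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)

def rollout_bounds(replicas: int, max_surge="25%", max_unavailable="25%"):
    """Return (max Pods that may exist, min Pods that must stay available)."""
    surge = resolve(max_surge, replicas, round_up=True)               # rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # rounds down
    return replicas + surge, replicas - unavailable

print(rollout_bounds(4))                                  # (5, 3) with the 25% defaults
print(rollout_bounds(4, max_surge=1, max_unavailable=0))  # (5, 4)
```

With `maxSurge: 1` and `maxUnavailable: 0`, all four old Pods stay available while at most one extra Pod is added, so the update proceeds one Pod at a time.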

5.4.2.2 Version rollback

[root@k8s-master ~]# vim deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment
spec:
  replicas: 4
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - image: myapp:v1   # roll back to the previous version
        name: myapp

[root@k8s-master ~]# kubectl apply -f deployment.yml

deployment.apps/deployment configured

# Check the rollback

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-5d886954d4-dr74h 1/1 Running 0 8s 10.244.2.26 k8s-node2 <none> <none>

deployment-5d886954d4-thpf9 1/1 Running 0 7s 10.244.1.29 k8s-node1 <none> <none>

deployment-5d886954d4-vmwl9 1/1 Running 0 8s 10.244.1.28 k8s-node1 <none> <none>

deployment-5d886954d4-wprpd 1/1 Running 0 6s 10.244.2.27 k8s-node2 <none> <none>

[root@k8s-master ~]# curl 10.244.2.26

Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>

5.4.2.3 Rolling update strategy

[root@k8s-master ~]# vim deployment.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment
spec:
  minReadySeconds: 5       # how long a new Pod must be ready before it counts as available; paces the update
  replicas: 4
  strategy:                # update strategy
    rollingUpdate:
      maxSurge: 1          # at most 1 Pod above the desired count
      maxUnavailable: 0    # at most 0 Pods below the desired count
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - image: myapp:v1
        name: myapp

[root@k8s-master ~]# kubectl apply -f deployment.yml

5.4.2.4 Pausing and resuming

In a real production environment, a change may involve more than one edit, and applying each edit immediately triggers its own rollout.

What we want is to trigger a single rollout once all the edits are done.

Pause the rollout to avoid triggering unnecessary production updates:

[root@k8s2 pod]# kubectl rollout pause deployment deployment-example

[root@k8s2 pod]# vim deployment-example.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment-example
spec:
  minReadySeconds: 5
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 0
  replicas: 6
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: nginx
        resources:
          limits:
            cpu: 0.5
            memory: 200Mi
          requests:
            cpu: 0.5
            memory: 200Mi

[root@k8s2 pod]# kubectl apply -f deployment-example.yaml

# Changing the replica count still takes effect while paused

[root@k8s-master ~]# kubectl describe pods deployment-7f4786db9c-8jw22

Name: deployment-7f4786db9c-8jw22

Namespace: default

Priority: 0

Service Account: default

Node: k8s-node1/172.25.254.10

Start Time: Mon, 02 Sep 2024 00:27:20 +0800

Labels:           app=myapp
                  pod-template-hash=7f4786db9c

Annotations: <none>

Status: Running

IP: 10.244.1.31

IPs:
  IP: 10.244.1.31

Controlled By: ReplicaSet/deployment-7f4786db9c

Containers:
  myapp:
    Container ID:   docker://01ad7216e0a8c2674bf17adcc9b071e9bfb951eb294cafa2b8482bb8b4940c1d
    Image:          myapp:v2
    Image ID:       docker-pullable://myapp@sha256:5f4afc8302ade316fc47c99ee1d41f8ba94dbe7e3e7747dd87215a15429b9102
    Port:           <none>
    Host Port:      <none>
    State:          Running
      Started:      Mon, 02 Sep 2024 00:27:21 +0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-mfjjp (ro)
Conditions:
  Type                        Status
  PodReadyToStartContainers   True
  Initialized                 True
  Ready                       True
  ContainersReady             True
  PodScheduled                True
Volumes:
  kube-api-access-mfjjp:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type    Reason     Age    From               Message
  ----    ------     ----   ----               -------
  Normal  Scheduled  6m22s  default-scheduler  Successfully assigned default/deployment-7f4786db9c-8jw22 to k8s-node1
  Normal  Pulled     6m22s  kubelet            Container image "myapp:v2" already present on machine
  Normal  Created    6m21s  kubelet            Created container myapp
  Normal  Started    6m21s  kubelet            Started container myapp

# However, the image and resource changes did not trigger a rollout

[root@k8s2 pod]# kubectl rollout history deployment deployment-example

deployment.apps/deployment-example

REVISION CHANGE-CAUSE

3 <none>

4 <none>

# After resuming, the rollout is triggered

[root@k8s2 pod]# kubectl rollout resume deployment deployment-example

[root@k8s2 pod]# kubectl rollout history deployment deployment-example

deployment.apps/deployment-example

REVISION CHANGE-CAUSE

3 <none>

4 <none>

5 <none>#回收

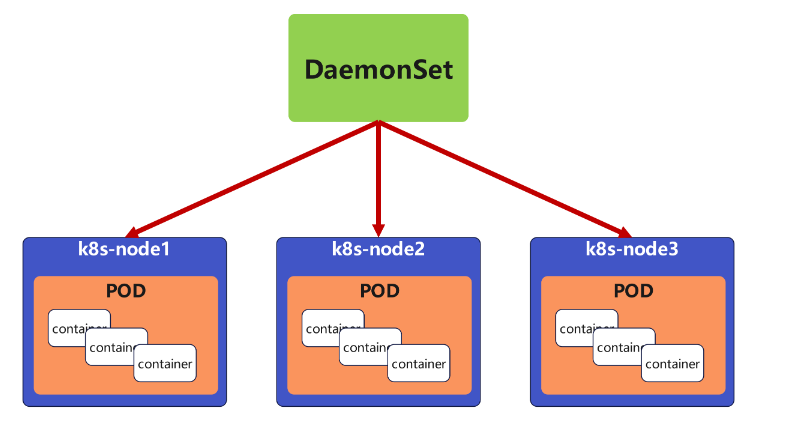

[root@k8s2 pod]# kubectl delete -f deployment-example.yaml5.5 daemonset控制器

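5.5.1 DaemonSet features

A DaemonSet ensures that all (or some) nodes run one copy of a Pod. When a node joins the cluster, a Pod is added to it; when a node is removed from the cluster, its Pod is garbage-collected. Deleting a DaemonSet deletes all the Pods it created.

Typical uses of a DaemonSet:

- Running a cluster storage daemon on every node, such as glusterd or ceph.
- Running a log collection daemon on every node, such as fluentd or logstash.
- Running a monitoring daemon on every node, such as Prometheus Node Exporter or the zabbix agent.
- In a simple setup, one DaemonSet covering all nodes is used for each type of daemon.
- A slightly more complex setup uses several DaemonSets for a single type of daemon, with different flags and different memory and CPU requirements for different hardware types.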
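Placement can be pictured as one Pod per node, skipping nodes whose taints the Pod does not tolerate. The Python sketch below is a toy model (hypothetical names `tolerates` and `daemonset_nodes`) that only considers NoSchedule and NoExecute taints and ignores nodeSelector and affinity; the `node-role.kubernetes.io/control-plane` taint on k8s-master is an assumption about a kubeadm-style cluster. It shows why a toleration like the one in the 5.5.2 example lets a copy also run on the control-plane node.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Does one toleration match one taint? (simplified matching rules)"""
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    if toleration.get("operator", "Equal") == "Exists":
        # Exists with no key tolerates every taint with a matching effect.
        return not toleration.get("key") or toleration["key"] == taint["key"]
    return (toleration.get("key") == taint["key"]
            and toleration.get("value") == taint.get("value"))

def daemonset_nodes(nodes: dict, tolerations: list) -> list:
    """Nodes that get a DaemonSet Pod: every hard taint must be tolerated."""
    placed = []
    for node, taints in nodes.items():
        hard = [t for t in taints if t["effect"] in ("NoSchedule", "NoExecute")]
        if all(any(tolerates(tol, t) for tol in tolerations) for t in hard):
            placed.append(node)
    return placed

cluster = {
    "k8s-master": [{"key": "node-role.kubernetes.io/control-plane", "effect": "NoSchedule"}],
    "k8s-node1": [],
    "k8s-node2": [],
}
print(daemonset_nodes(cluster, []))  # ['k8s-node1', 'k8s-node2']
print(daemonset_nodes(cluster, [{"operator": "Exists", "effect": "NoSchedule"}]))
# ['k8s-master', 'k8s-node1', 'k8s-node2']
```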

5.5.2 daemonset 示例

[root@k8s2 pod]# cat daemonset-example.yml

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: daemonset-example
spec:
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      tolerations:          # tolerate tainted nodes
      - effect: NoSchedule
        operator: Exists
      containers:
      - name: nginx
        image: nginx

[root@k8s-master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

daemonset-87h6s 1/1 Running 0 47s 10.244.0.8 k8s-master <none> <none>

daemonset-n4vs4 1/1 Running 0 47s 10.244.2.38 k8s-node2 <none> <none>

daemonset-vhxmq 1/1 Running 0 47s 10.244.1.40 k8s-node1 <none> <none>

# Clean up

[root@k8s2 pod]# kubectl delete -f daemonset-example.yml

5.6 Job controller

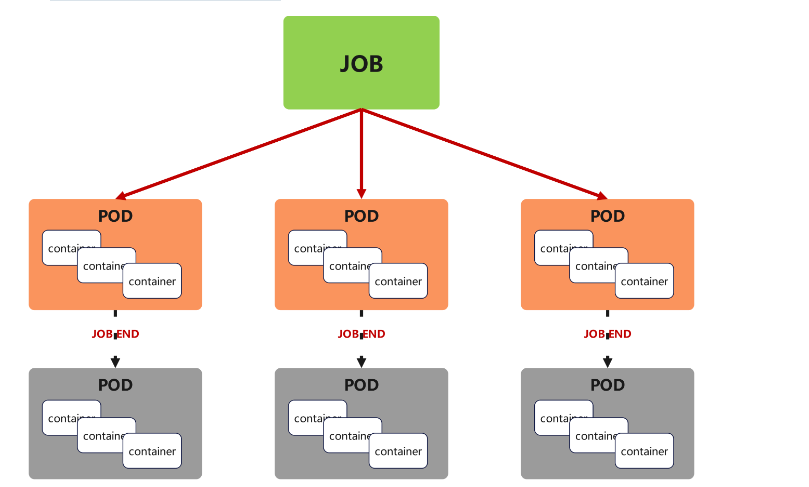

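5.6.1 What the Job controller does

A Job handles batch processing (a specified number of tasks in one go) of short-lived, one-off tasks (each task runs once and then ends).

Characteristics of a Job:

- When a Pod created by the Job finishes successfully, the Job adds it to its count of successfully completed Pods.
- When the number of successfully completed Pods reaches the specified count (`completions`), the Job is complete.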
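The counting rule fits in a few lines. The Python sketch below is a hypothetical, simplified model and not the Job controller's code. It makes these assumptions:

- Pods run in synchronous rounds of at most `parallelism`.
- Each Pod's outcome comes from a supplied list.
- The Job is treated as failed once the failure count exceeds `backoffLimit`.
- The exponential back-off delay between retries is ignored.

```python
from typing import Iterable


def run_job(completions: int, parallelism: int, backoff_limit: int,
            outcomes: Iterable[bool]) -> dict:
    """Simulate Job bookkeeping; `outcomes` yields True for a successful Pod."""
    results = iter(outcomes)
    succeeded = failed = rounds = 0
    while succeeded < completions:
        # Never start more Pods than there are completions still missing.
        batch = min(parallelism, completions - succeeded)
        ok = sum(next(results) for _ in range(batch))
        succeeded += ok
        failed += batch - ok
        rounds += 1
        if failed > backoff_limit:
            return {"status": "Failed", "succeeded": succeeded,
                    "failed": failed, "rounds": rounds}
    return {"status": "Complete", "succeeded": succeeded,
            "failed": failed, "rounds": rounds}


if __name__ == "__main__":
    # The values of the 5.6.2 example: completions=6, parallelism=2, backoffLimit=4.
    print(run_job(6, 2, 4, [True] * 6))   # three rounds of two Pods each
```

With every Pod succeeding, the six completions finish in three rounds of two Pods. A failed Pod adds a round, because its slot has to be filled again.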

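5.6.2 Job controller example:

```
[root@k8s2 pod]# vim job.yml
apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  completions: 6          # 6 Pods must complete successfully in total
  parallelism: 2          # run at most 2 Pods at a time
  template:
    spec:
      containers:
      - name: pi
        image: perl:5.34.0
        command: ["perl", "-Mbignum=bpi", "-wle", "print bpi(2000)"]   # compute π to 2000 digits
      restartPolicy: Never   # do not restart the container after it exits
  backoffLimit: 4         # retry failed Pods up to 4 times before marking the Job failed

[root@k8s2 pod]# kubectl apply -f job.yml
```

Note: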

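About the restartPolicy setting:

- With OnFailure, when a Pod fails the Job restarts its container instead of creating a new Pod, and the failed count does not change.
- With Never, when a Pod fails the Job creates a new Pod. The failed Pod is neither removed nor restarted, and the failed count increases by 1.
- Always is not allowed in a Job's Pod template, and the API server rejects it. A container that restarted forever would keep re-running the task and the Job would never finish.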

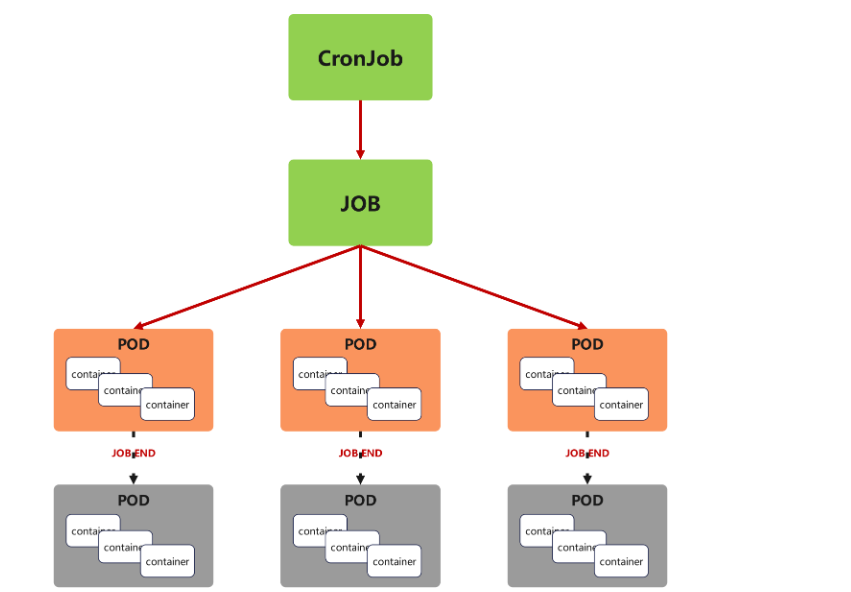
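The bookkeeping in the note above can be condensed into a small function. This is a hypothetical sketch that encodes the note for one task that fails a given number of times and then succeeds; it is not Kubernetes code. The note leaves out one detail: with `OnFailure`, container restarts still count toward `backoffLimit`, even though the Pod itself is not marked failed.

```python
def pod_accounting(restart_policy: str, failures_before_success: int) -> dict:
    """Pods created, container restarts and failed count for one task."""
    if restart_policy == "Never":
        # Each failure leaves a failed Pod behind and a new Pod is created.
        return {"pods_created": failures_before_success + 1,
                "container_restarts": 0,
                "failed": failures_before_success}
    if restart_policy == "OnFailure":
        # The same Pod is kept and its container is restarted in place.
        return {"pods_created": 1,
                "container_restarts": failures_before_success,
                "failed": 0}
    # "Always" (or any other value) is rejected for Job Pod templates.
    raise ValueError(f"unsupported restartPolicy for a Job: {restart_policy!r}")


if __name__ == "__main__":
    for policy in ("Never", "OnFailure"):
        print(policy, pod_accounting(policy, 2))
```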

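5.7 CronJob controller

5.7.1 What the CronJob controller does

- A CronJob creates Jobs on a time-based schedule.
- The CronJob controller manages Job objects and, through them, Pods.
- A CronJob decides when it runs and how it repeats with a schedule in the same format as periodic (cron) jobs on Linux.
- A CronJob can run a Job at specific points in time, repeatedly.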
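CronJob schedules use the standard five cron fields: minute, hour, day of month, month and day of week. The `* * * * *` schedule in the following example therefore fires once every minute. The Python sketch below is a simplified, hypothetical matcher for illustrating the format, not the controller's parser. It handles `*`, numbers, ranges, steps and comma lists, but not names such as `MON`, the value 7 for Sunday, or macros such as `@hourly`.

```python
from datetime import datetime, timedelta

# (min, max) of each field: minute, hour, day of month, month, day of week (0 = Sunday)
FIELD_BOUNDS = [(0, 59), (0, 23), (1, 31), (1, 12), (0, 6)]


def field_matches(expr: str, value: int, lo: int, hi: int) -> bool:
    """Match one field; supports *, n, a-b, */s, a-b/s, n/s and comma lists."""
    for part in expr.split(","):
        base, _, step = part.partition("/")
        step_n = int(step) if step else 1
        if base == "*":
            start, end = lo, hi
        elif "-" in base:
            start, end = (int(x) for x in base.split("-"))
        else:
            start = int(base)
            end = hi if step else start      # "n/s" runs from n up to the maximum
        if start <= value <= end and (value - start) % step_n == 0:
            return True
    return False


def matches(schedule: str, t: datetime) -> bool:
    """True if the minute-aligned time t matches a five-field cron schedule."""
    fields = schedule.split()
    if len(fields) != 5:
        raise ValueError(f"expected 5 fields, got {schedule!r}")
    values = [t.minute, t.hour, t.day, t.month, (t.weekday() + 1) % 7]
    ok = [field_matches(f, v, lo, hi)
          for f, v, (lo, hi) in zip(fields, values, FIELD_BOUNDS)]
    if not (ok[0] and ok[1] and ok[3]):
        return False
    # Classic cron rule: when both day fields are restricted, either may match.
    if fields[2] != "*" and fields[4] != "*":
        return ok[2] or ok[4]
    return ok[2] and ok[4]


def next_runs(schedule: str, after: datetime, count: int) -> list:
    """The next `count` minute-aligned times after `after` that match `schedule`."""
    runs = []
    if count <= 0:
        return runs
    t = after.replace(second=0, microsecond=0) + timedelta(minutes=1)
    for _ in range(4 * 366 * 24 * 60):      # give up after roughly four years
        if matches(schedule, t):
            runs.append(t)
            if len(runs) == count:
                return runs
        t += timedelta(minutes=1)
    raise ValueError(f"schedule {schedule!r} matched fewer than {count} times")


if __name__ == "__main__":
    for run in next_runs("* * * * *", datetime(2024, 9, 4, 13, 44, 30), 3):
        print(run)
```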

5.7.2 CronJob controller example

```
[root@k8s2 pod]# vim cronjob.yml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: hello
spec:
  schedule: "* * * * *"          # run every minute
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            imagePullPolicy: IfNotPresent
            command:
            - /bin/sh
            - -c
            - date; echo Hello from the Kubernetes cluster
          restartPolicy: OnFailure

[root@k8s2 pod]# kubectl apply -f cronjob.yml
```

6 Microservices

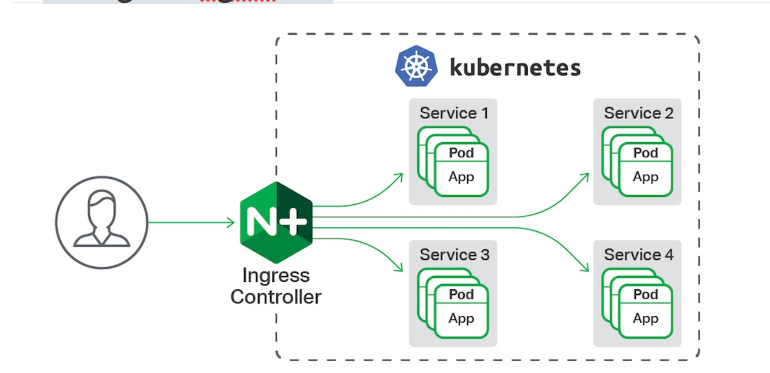

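6.1 What is a microservice

Controllers run the cluster's workloads, but how does an application become reachable? It has to be exposed through a microservice (a Kubernetes Service) before it can be accessed.

- A Service is the interface through which a group of Pods providing the same service is exposed.
- A Service gives an application service discovery and load balancing.
- By default a Service only load-balances at layer 4 (TCP/UDP) and has no layer-7 (HTTP) features; layer-7 routing can be added with an Ingress.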
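Service discovery here rests on label selectors. A Service's endpoints are the Ready Pods whose labels match its selector, and connections are spread across those endpoints. The Python sketch below models only the matching step. Pods are plain dictionaries, which is a hypothetical representation; the control plane actually records endpoints in EndpointSlice objects. The IPs are borrowed from the `ipvsadm -Ln` output later in this section.

```python
def select_endpoints(selector: dict, pods: list, target_port: int) -> list:
    """Return "ip:port" endpoints of Ready Pods whose labels contain the selector."""
    if not selector:
        # A Service without a selector gets no automatically managed endpoints.
        return []
    return [f"{pod['ip']}:{target_port}"
            for pod in pods
            if pod.get("ready", False)
            and all(pod["labels"].get(k) == v for k, v in selector.items())]


if __name__ == "__main__":
    pods = [
        {"ip": "10.244.1.17", "labels": {"app": "timinglee"}, "ready": True},
        {"ip": "10.244.2.13", "labels": {"app": "timinglee"}, "ready": True},
        {"ip": "10.244.2.14", "labels": {"app": "timinglee"}, "ready": False},
        {"ip": "10.244.1.40", "labels": {"app": "nginx"}, "ready": True},
    ]
    print(select_endpoints({"app": "timinglee"}, pods, 80))
```

The Pod that is not Ready is skipped. This skipping is what gives a Service its basic health checking.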

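6.2 Service types

| Service type | Description |
|---|---|
| ClusterIP | The default. Kubernetes automatically assigns the Service a virtual IP that can only be reached from inside the cluster |
| NodePort | Exposes the Service on a port of every Node; requests to any NodeIP:nodePort are routed to the ClusterIP |
| LoadBalancer | Builds on NodePort: a cloud provider creates an external load balancer that forwards requests to NodeIP:NodePort. This mode requires a cloud provider |
| ExternalName | Forwards the Service to a given domain name through a DNS CNAME record (set with spec.externalName) |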

Example:

```
# Generate the controller (Deployment) manifest and create the controller
[root@k8s-master ~]# kubectl create deployment timinglee --image myapp:v1 --replicas 2 --dry-run=client -o yaml > timinglee.yaml

# Generate the Service YAML and append it to the existing file
[root@k8s-master ~]# kubectl expose deployment timinglee --port 80 --target-port 80 --dry-run=client -o yaml >> timinglee.yaml

[root@k8s-master ~]# vim timinglee.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: timinglee
  name: timinglee
spec:
  replicas: 2
  selector:
    matchLabels:
      app: timinglee
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: timinglee
    spec:
      containers:
      - image: myapp:v1
        name: myapp
# Separate different resources with ---
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee
  name: timinglee
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: timinglee

[root@k8s-master ~]# kubectl apply -f timinglee.yaml
deployment.apps/timinglee created
service/timinglee created

[root@k8s-master ~]# kubectl get services
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   19h
timinglee    ClusterIP   10.99.127.134   <none>        80/TCP    16s
```

By default, kube-proxy implements Service load balancing with iptables rules.
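In iptables mode, each Service gets a `KUBE-SVC-*` chain, like the `KUBE-SVC-I7WXYK76FWYNTTGM` rule shown below, with one rule per endpoint. For n endpoints, the i-th rule (counting from 0) uses the `statistic` match in random mode with probability 1/(n-i), and the last rule always matches. The Python sketch below is a model, not kube-proxy code. It checks that this chain of probabilities sends each endpoint the same share of new connections.

```python
import random
from fractions import Fraction


def rule_probabilities(n: int) -> list:
    """Match probability of each of the n endpoint rules: 1/n, 1/(n-1), ..., 1."""
    return [Fraction(1, n - i) for i in range(n)]


def endpoint_shares(n: int) -> list:
    """Overall share of new connections that ends up at each endpoint."""
    shares, remaining = [], Fraction(1)
    for p in rule_probabilities(n):
        shares.append(remaining * p)   # reach this rule, then match it
        remaining *= 1 - p             # otherwise fall through to the next rule
    return shares


def pick_endpoint(endpoints: list, rng=random) -> str:
    """Walk the rules in order, as netfilter does for a new connection."""
    for endpoint, p in zip(endpoints, rule_probabilities(len(endpoints))):
        if rng.random() < p:
            return endpoint
    raise ValueError("no endpoints")


if __name__ == "__main__":
    print(rule_probabilities(3))   # [Fraction(1, 3), Fraction(1, 2), Fraction(1, 1)]
    print(endpoint_shares(3))      # [Fraction(1, 3), Fraction(1, 3), Fraction(1, 3)]
```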

```
[root@k8s-master ~]# kubectl get services -o wide
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE    SELECTOR
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP   19h    <none>
timinglee    ClusterIP   10.99.127.134   <none>        80/TCP    119s   app=timinglee    # cluster-internal IP
```

The corresponding rules can be seen in the firewall (the iptables NAT table):

```
[root@k8s-master ~]# iptables -t nat -nL
KUBE-SVC-I7WXYK76FWYNTTGM  6  --  0.0.0.0/0  10.99.127.134  /* default/timinglee cluster IP */ tcp dpt:80
```

6.3 IPVS mode

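- By default, a Service is implemented by the kube-proxy component together with iptables.
- To handle Services with iptables, kube-proxy has to maintain a large number of iptables rules on every host. With many Pods, these rules are refreshed constantly, which consumes a lot of CPU.
- Services in IPVS mode allow a Kubernetes cluster to scale to many more Pods.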
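The scaling difference can be shown with a deliberately simplified Python comparison. Netfilter evaluates the rules of a chain one after another, while IPVS finds a virtual service through a hash table. The "checked" counts are illustrative, not measurements. The other cost mentioned above, rewriting the full rule set on every change, is not modelled, and the 5000-Service cluster is hypothetical.

```python
def iptables_lookup(rules: list, dst: str):
    """Walk (destination, target) rules in order, like one netfilter chain."""
    for checked, (match_dst, target) in enumerate(rules, start=1):
        if match_dst == dst:
            return target, checked
    return None, len(rules)


def ipvs_lookup(virtual_services: dict, dst: str):
    """IPVS keeps virtual services in a hash table: counted as one lookup."""
    return virtual_services.get(dst), 1


if __name__ == "__main__":
    # Hypothetical cluster with 5000 Services, one rule each.
    services = [(f"10.96.{i // 250}.{i % 250 + 1}:80", f"svc-{i}") for i in range(5000)]
    last = services[-1][0]
    print(iptables_lookup(services, last))    # ('svc-4999', 5000)
    print(ipvs_lookup(dict(services), last))  # ('svc-4999', 1)
```

The linear walk grows with the number of Services, while the keyed lookup does not. This gap is the property behind the claim that IPVS mode supports larger clusters.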

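6.3.1 Configuring IPVS mode

1 Install ipvsadm on all nodes

```
[root@k8s-all-nodes pod]# yum install ipvsadm -y
```

2 Edit the kube-proxy configuration on the master node

```
[root@k8s-master ~]# kubectl -n kube-system edit cm kube-proxy
metricsBindAddress: ""
mode: "ipvs"            # make kube-proxy use IPVS mode
nftables:
```

3 Restart the kube-proxy Pods. A running Pod keeps the configuration it loaded at startup, so editing the ConfigMap does not affect Pods that are already running, and they must be restarted.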

```
[root@k8s-master ~]# kubectl -n kube-system get pods | awk '/kube-proxy/{system("kubectl -n kube-system delete pods "$1)}'
[root@k8s-master ~]# ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.96.0.1:443 rr
  -> 172.25.254.100:6443          Masq    1      0          0
TCP  10.96.0.10:53 rr
  -> 10.244.0.2:53                Masq    1      0          0
  -> 10.244.0.3:53                Masq    1      0          0
TCP  10.96.0.10:9153 rr
  -> 10.244.0.2:9153              Masq    1      0          0
  -> 10.244.0.3:9153              Masq    1      0          0
TCP  10.97.59.25:80 rr
  -> 10.244.1.17:80               Masq    1      0          0
  -> 10.244.2.13:80               Masq    1      0          0
UDP  10.96.0.10:53 rr
  -> 10.244.0.2:53                Masq    1      0          0
  -> 10.244.0.3:53                Masq    1      0          0
```

Note:

After switching to IPVS mode, kube-proxy adds a virtual network interface, kube-ipvs0, on the host and assigns every Service IP to it:

```
[root@k8s-master ~]# ip a | tail
    inet6 fe80::c4fb:e9ff:feee:7d32/64 scope link
       valid_lft forever preferred_lft forever
8: kube-ipvs0: <BROADCAST,NOARP> mtu 1500 qdisc noop state DOWN group default
    link/ether fe:9f:c8:5d:a6:c8 brd ff:ff:ff:ff:ff:ff
    inet 10.96.0.10/32 scope global kube-ipvs0
       valid_lft forever preferred_lft forever
    inet 10.96.0.1/32 scope global kube-ipvs0
       valid_lft forever preferred_lft forever
    inet 10.97.59.25/32 scope global kube-ipvs0
       valid_lft forever preferred_lft forever
```
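The `rr` in the `ipvsadm -Ln` listing is the round-robin scheduler: each new connection to a virtual address goes to the next real server in turn. Below is a minimal Python model. The `VirtualServer` class is hypothetical, and real IPVS also tracks weights and per-connection state.

```python
from itertools import cycle


class VirtualServer:
    """One IPVS virtual service using the 'rr' (round-robin) scheduler."""

    def __init__(self, address: str, real_servers: list):
        if not real_servers:
            raise ValueError("a virtual service needs at least one real server")
        self.address = address
        self._next = cycle(real_servers)   # hand out real servers in turn

    def schedule(self) -> str:
        """Real server for the next new connection."""
        return next(self._next)


if __name__ == "__main__":
    # Addresses taken from the ipvsadm -Ln output of this section.
    vs = VirtualServer("10.97.59.25:80", ["10.244.1.17:80", "10.244.2.13:80"])
    print([vs.schedule() for _ in range(4)])
```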

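6.4 Service types in detail

6.4.1 ClusterIP

Characteristics:

A ClusterIP Service can only be reached from inside the cluster. It provides health checking (only Ready Pods receive traffic) and automatic discovery for the Pods in the cluster.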
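Every Service gets a DNS name of the form `<service>.<namespace>.svc.<cluster domain>`, which is the name queried with `dig` in this section. Inside a Pod a short name such as `timinglee-service` also resolves, for two reasons. The Pod's `/etc/resolv.conf` normally carries the search list `<namespace>.svc.cluster.local svc.cluster.local cluster.local`, and it sets `options ndots:5`. `dig`, by contrast, queries the name literally. The Python sketch below models this lookup order. It assumes the default `cluster.local` domain and glibc-style search behaviour, and the function names are hypothetical.

```python
def service_fqdn(service: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """A Service's DNS name: <service>.<namespace>.svc.<cluster domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def resolution_order(name: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local", ndots: int = 5) -> list:
    """Candidate names a glibc-style resolver in a Pod tries, in order."""
    if name.endswith("."):
        return [name]                      # already absolute: no search list
    search = [f"{namespace}.svc.{cluster_domain}",
              f"svc.{cluster_domain}", cluster_domain]
    expanded = [f"{name}.{domain}" for domain in search]
    # Names with fewer than `ndots` dots go through the search list first.
    if name.count(".") < ndots:
        return expanded + [name]
    return [name] + expanded


if __name__ == "__main__":
    print(service_fqdn("timinglee"))
    print(resolution_order("timinglee-service")[0])
```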

Example:

```
[root@k8s2 service]# vim myapp.yml
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee
  name: timinglee
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: timinglee
  type: ClusterIP
```

After the Service is created, the cluster DNS resolves its name:

```
[root@k8s-master ~]# dig timinglee.default.svc.cluster.local @10.96.0.10

; <<>> DiG 9.16.23-RH <<>> timinglee.default.svc.cluster.local @10.96.0.10
;; global options: +cmd
;; Got answer:
;; WARNING: .local is reserved for Multicast DNS
;; You are currently testing what happens when an mDNS query is leaked to DNS
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 27827
;; flags: qr aa rd; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
; COOKIE: 057d9ff344fe9a3a (echoed)
;; QUESTION SECTION:
;timinglee.default.svc.cluster.local. IN A

;; ANSWER SECTION:
timinglee.default.svc.cluster.local. 30 IN A 10.97.59.25

;; Query time: 8 msec
;; SERVER: 10.96.0.10#53(10.96.0.10)
;; WHEN: Wed Sep 04 13:44:30 CST 2024
;; MSG SIZE  rcvd: 127
```

6.4.2 Headless: a special ClusterIP mode

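Headless Service

A headless Service is not allocated a Cluster IP. kube-proxy does not handle it, and the platform does not load-balance or route it. Inside the cluster, DNS resolves the Service name directly to the IPs of the backing Pods, so all scheduling is done by DNS alone.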
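With no virtual IP, the DNS answer itself is the load-balancing mechanism. A query for the Service name returns one A record per ready Pod, and the client picks among them. The Python sketch below reproduces the per-Pod names that appear in the `nslookup` test output further down, such as `10-244-2-16.timinglee-service.default.svc.cluster.local`. It models those records under the default `cluster.local` domain and is not CoreDNS code; `headless_records` is a hypothetical helper.

```python
def headless_records(service: str, namespace: str, pod_ips: list,
                     cluster_domain: str = "cluster.local"):
    """A records for a headless Service plus the per-Pod names seen in nslookup."""
    fqdn = f"{service}.{namespace}.svc.{cluster_domain}"
    a_records = [(fqdn, ip) for ip in pod_ips]        # one A record per ready Pod
    # Pod name: its IP with dots replaced by dashes, under the Service name.
    pod_names = {ip: f"{ip.replace('.', '-')}.{fqdn}" for ip in pod_ips}
    return a_records, pod_names


if __name__ == "__main__":
    records, names = headless_records("timinglee-service", "default",
                                      ["10.244.2.16", "10.244.1.22"])
    for _, ip in records:
        print(ip, names[ip])
```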

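```
[root@k8s-master ~]# vim timinglee.yaml
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee
  name: timinglee
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: timinglee
  type: ClusterIP
  clusterIP: None          # headless: no cluster IP is allocated
```

The cluster IP of an existing Service cannot be changed in place, so delete the old objects and apply the file again:

```
[root@k8s-master ~]# kubectl delete -f timinglee.yaml
[root@k8s-master ~]# kubectl apply -f timinglee.yaml
deployment.apps/timinglee created
```

Test: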

```
[root@k8s-master ~]# kubectl get services timinglee
NAME        TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
timinglee   ClusterIP   None         <none>        80/TCP    6s

[root@k8s-master ~]# dig timinglee.default.svc.cluster.local @10.96.0.10

; <<>> DiG 9.16.23-RH <<>> timinglee.default.svc.cluster.local @10.96.0.10
;; global options: +cmd
;; Got answer:
;; WARNING: .local is reserved for Multicast DNS
;; You are currently testing what happens when an mDNS query is leaked to DNS
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 51527
;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
; COOKIE: 81f9c97b3f28b3b9 (echoed)
;; QUESTION SECTION:
;timinglee.default.svc.cluster.local. IN A

;; ANSWER SECTION:
timinglee.default.svc.cluster.local. 20 IN A 10.244.2.14    # resolves directly to the Pods
timinglee.default.svc.cluster.local. 20 IN A 10.244.1.18

;; Query time: 0 msec
;; SERVER: 10.96.0.10#53(10.96.0.10)
;; WHEN: Wed Sep 04 13:58:23 CST 2024
;; MSG SIZE  rcvd: 178
```

Start a busyboxplus Pod to test:

```
[root@k8s-master ~]# kubectl run test --image busyboxplus -it
If you don't see a command prompt, try pressing enter.
/ # nslookup timinglee-service
Server:    10.96.0.10
Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name:      timinglee-service
Address 1: 10.244.2.16 10-244-2-16.timinglee-service.default.svc.cluster.local
Address 2: 10.244.2.17 10-244-2-17.timinglee-service.default.svc.cluster.local
Address 3: 10.244.1.22 10-244-1-22.timinglee-service.default.svc.cluster.local
Address 4: 10.244.1.21 10-244-1-21.timinglee-service.default.svc.cluster.local
/ # curl timinglee-service
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
/ # curl timinglee-service/hostname.html
timinglee-c56f584cf-b8t6m
```

6.4.3 NodePort

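NodePort exposes a port on the nodes (through IPVS in this cluster), so that external hosts can reach the application in the Pods at `<external IP of the master node>:<port>`. The port is opened on every node, so any node's IP works.

The access path is: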

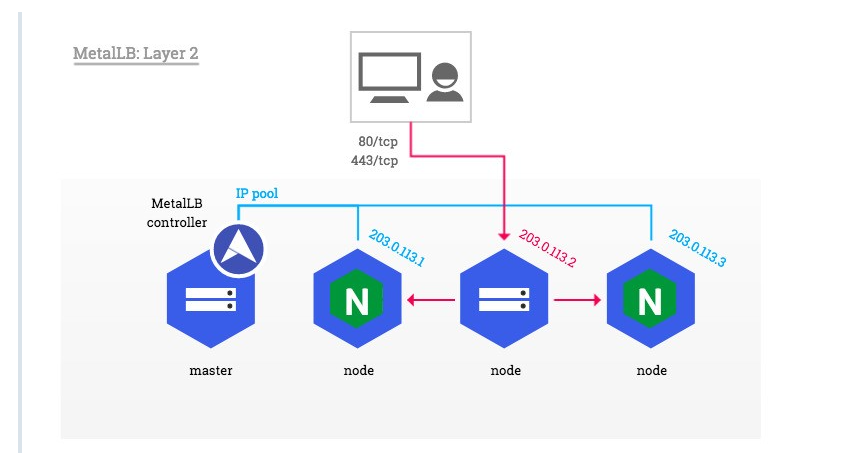

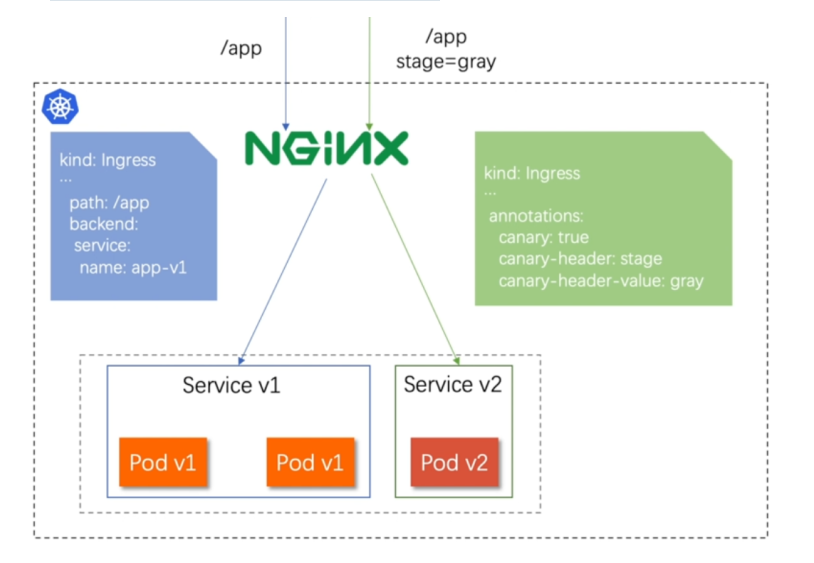

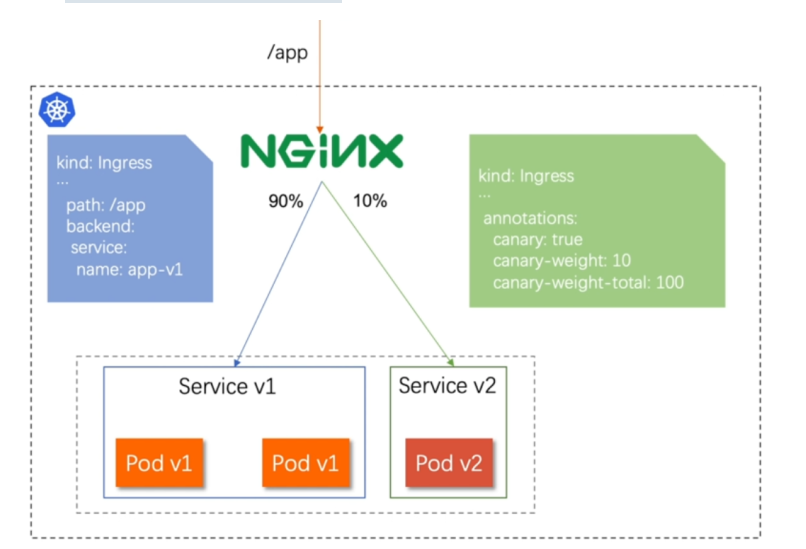
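Conceptually, a NodePort Service is a ClusterIP Service plus a port opened on every node. Traffic to any node's IP at the nodePort is forwarded to the Service and then to one of its Pods. When `nodePort` is not set, one is allocated from the API server's `--service-node-port-range`, which defaults to 30000-32767. The Python sketch below models both steps. The class and function names are hypothetical, and the Cluster IP, Pod IP and node IP are borrowed from the IPVS output earlier in this section.

```python
from dataclasses import dataclass

# Default value of the API server's --service-node-port-range (30000-32767).
NODE_PORT_RANGE = range(30000, 32768)


def allocate_node_port(used: set, requested=None) -> int:
    """Use the requested port if valid and free, else the lowest free port.

    The real allocator picks a free port at random; lowest-first is used
    here only to keep the example deterministic.
    """
    if requested is not None:
        if requested not in NODE_PORT_RANGE or requested in used:
            raise ValueError(f"nodePort {requested} is outside the range or in use")
        return requested
    for port in NODE_PORT_RANGE:
        if port not in used:
            return port
    raise RuntimeError("nodePort range exhausted")


@dataclass
class NodePortService:
    cluster_ip: str
    port: int          # spec.ports[].port
    target_port: int   # spec.ports[].targetPort
    node_port: int     # spec.ports[].nodePort

    def path(self, node_ip: str, pod_ip: str) -> list:
        """Conceptual hops: NodeIP:nodePort -> ClusterIP:port -> PodIP:targetPort."""
        return [f"{node_ip}:{self.node_port}",
                f"{self.cluster_ip}:{self.port}",
                f"{pod_ip}:{self.target_port}"]


if __name__ == "__main__":
    svc = NodePortService("10.97.59.25", 80, 80, allocate_node_port(set()))
    print(svc.path("172.25.254.100", "10.244.1.17"))
```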


Example:

```
[root@k8s-master ~]# vim timinglee.yaml
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: timinglee-service
  name: timinglee-service
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: timinglee
  type: NodePort

[root@k8s-master ~]# kubectl apply -f timinglee.yaml
deployment.apps/timinglee created
service/timinglee-service created
```

[root@k8s-master ~]# kubectl get services timinglee-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE