Official link:

NLP From Scratch: Generating Names with a Character-Level RNN — PyTorch Tutorials 2.0.1+cu117 documentation

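Generating Names with a Character-Level RNN

This is the second of three tutorials on "NLP From Scratch". In the first tutorial we used an RNN to classify names into their language of origin. This time we turn it around and generate names from languages.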

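> python sample.py Russian RUS
Rovakov
Uantov
Shavakov

> python sample.py German GER
Gerren
Ereng
Rosher

> python sample.py Spanish SPA
Salla
Parer
Allan

> python sample.py Chinese CHI
Chan
Hang
Iun

We are still hand-crafting a small RNN with a few linear layers. The big difference is that instead of predicting a category after reading in all the letters of a name, we input a category and output one letter at a time. Recurrently predicting characters to form language (this could also be done with words or other higher-order constructs) is often referred to as a "language model".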

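Recommended Reading:

I assume you have at least installed PyTorch, know Python, and understand Tensors:

- PyTorch installation instructions

- Deep Learning with PyTorch: A 60 Minute Blitz to get started with PyTorch in general

- Learning PyTorch with Examples for a wide and deep overview

- PyTorch for Former Torch Users if you are a former Lua Torch user

It would also be useful to know about RNNs and how they work:

- The Unreasonable Effectiveness of Recurrent Neural Networks shows a bunch of real-life examples

- Understanding LSTM Networks is about LSTMs specifically but is also informative about RNNs in general

I also suggest the previous tutorial, NLP From Scratch: Classifying Names with a Character-Level RNN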

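Preparing the Data

Download the data from https://download.pytorch.org/tutorial/data.zip and extract it to the current directory.

See the previous tutorial for more detail on this process. In short, there are a bunch of plain text files data/names/[Language].txt with one name per line. We split each file into lines, convert Unicode to ASCII, and end up with a dictionary {language: [names ...]}.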
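The whole loading step can be tried without downloading anything. The sketch below is a minimal, self-contained version of the same pipeline, assuming a throwaway directory created with tempfile instead of the real data/names folder; the helper names unicode_to_ascii and build_category_lines and the two sample files are hypothetical stand-ins for the tutorial's own code and data:

```python
import glob
import os
import string
import tempfile
import unicodedata

all_letters = string.ascii_letters + " .,;'-"

def unicode_to_ascii(s):
    # NFD splits an accented letter into base letter + combining mark
    # (category "Mn"); the marks and any character outside all_letters are dropped.
    return ''.join(
        c for c in unicodedata.normalize('NFD', s)
        if unicodedata.category(c) != 'Mn' and c in all_letters
    )

def build_category_lines(root):
    # One file per language: <root>/<Language>.txt with one name per line.
    category_lines = {}
    for filename in sorted(glob.glob(os.path.join(root, '*.txt'))):
        category = os.path.splitext(os.path.basename(filename))[0]
        with open(filename, encoding='utf-8') as f:
            category_lines[category] = [unicode_to_ascii(line.strip()) for line in f]
    return category_lines

# Hypothetical sample data written to a temporary directory.
with tempfile.TemporaryDirectory() as root:
    sample_files = {'Irish': "O'Néàl\nMurphy\n", 'Polish': 'Ślusàrski\n'}
    for language, text in sample_files.items():
        with open(os.path.join(root, language + '.txt'), 'w', encoding='utf-8') as f:
            f.write(text)
    result = build_category_lines(root)

print(result)  # {'Irish': ["O'Neal", 'Murphy'], 'Polish': ['Slusarski']}
```

The file name (minus .txt) becomes the dictionary key, which is why the real dataset's categories are exactly the language file names.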

from io import open
import glob
import os
import unicodedata
import string

all_letters = string.ascii_letters + " .,;'-"
n_letters = len(all_letters) + 1  # Plus EOS marker

def findFiles(path): return glob.glob(path)

# Turn a Unicode string to plain ASCII, thanks to https://stackoverflow.com/a/518232/2809427
def unicodeToAscii(s):
    return ''.join(
        c for c in unicodedata.normalize('NFD', s)
        if unicodedata.category(c) != 'Mn'
        and c in all_letters
    )

# Read a file and split into lines
def readLines(filename):
    with open(filename, encoding='utf-8') as some_file:
        return [unicodeToAscii(line.strip()) for line in some_file]

# Build the category_lines dictionary, a list of lines per category
category_lines = {}
all_categories = []
for filename in findFiles('data/names/*.txt'):
    category = os.path.splitext(os.path.basename(filename))[0]
    all_categories.append(category)
    lines = readLines(filename)
    category_lines[category] = lines

n_categories = len(all_categories)

if n_categories == 0:
    raise RuntimeError('Data not found. Make sure that you downloaded data '
                       'from https://download.pytorch.org/tutorial/data.zip and extract it to '
                       'the current directory.')

print('# categories:', n_categories, all_categories)
print(unicodeToAscii("O'Néàl"))

Output

# categories: 18 ['Arabic', 'Chinese', 'Czech', 'Dutch', 'English', 'French', 'German', 'Greek', 'Irish', 'Italian', 'Japanese', 'Korean', 'Polish', 'Portuguese', 'Russian', 'Scottish', 'Spanish', 'Vietnamese']

O'Neal

Creating the Network

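This network extends the previous tutorial's RNN with an extra argument for the category tensor, which is concatenated along with the others. The category tensor is a one-hot vector, just like the letter input.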
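Because the category, the current letter, and the hidden state are concatenated side by side, the linear layers that read them need an input width equal to the sum of the three sizes. A quick check of that arithmetic, assuming the values used elsewhere in this tutorial (18 categories found in data/names and a hidden size of 128); this is plain Python, not a call into PyTorch:

```python
import string

# Assumed sizes, taken from this tutorial's setup.
all_letters = string.ascii_letters + " .,;'-"
n_letters = len(all_letters) + 1   # 52 letters + 6 punctuation marks + 1 EOS
n_categories = 18                  # number of language files in data/names
hidden_size = 128                  # value passed to RNN(...) in the training section

# forward() concatenates three one-row tensors along dim 1, so the layers
# reading the combined vector (i2h and i2o) need this many input features.
combined_width = n_categories + n_letters + hidden_size

print(n_letters, combined_width)  # 59 205
```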

We interpret the output as the probability of the next letter. When sampling, the most likely output letter is used as the next input letter.
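Since the network ends in nn.LogSoftmax, its outputs are log-probabilities: exponentiating them gives a distribution that sums to 1, and "the most likely letter" is simply the index of the largest value. A plain-Python sketch of both steps, using made-up scores for a hypothetical 3-letter alphabet:

```python
import math

def log_softmax(scores):
    # Numerically stable log-softmax: subtract the max before exponentiating.
    m = max(scores)
    log_sum = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_sum for s in scores]

def greedy_pick(log_probs):
    # Index of the most likely next letter (what output.topk(1) returns in sample()).
    return max(range(len(log_probs)), key=lambda i: log_probs[i])

scores = [1.0, 3.0, 0.5]            # hypothetical raw outputs
log_probs = log_softmax(scores)
probs = [math.exp(lp) for lp in log_probs]
print(greedy_pick(log_probs), round(sum(probs), 6))  # 1 1.0
```

Taking the log before the loss is what lets the training section pair this layer with nn.NLLLoss.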

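I added a second linear layer, o2o (applied after combining hidden and output), to give the network more capacity to work with. There is also a dropout layer, which randomly zeros parts of its input with a given probability (here 0.1) and is usually used to fuzz inputs to prevent overfitting. Here we use it toward the end of the network to purposely add some chaos and increase sampling variety.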
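To make the dropout behavior concrete, here is a plain-Python sketch of what nn.Dropout does in training mode: each element is zeroed with probability p, and the survivors are scaled by 1 / (1 - p) so each element's expected value is unchanged. The function name dropout and the fixed seed are illustrative choices, not part of the tutorial:

```python
import random

def dropout(values, p, rng):
    # Zero each element with probability p; scale the rest by 1 / (1 - p).
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else v * scale for v in values]

rng = random.Random(0)              # fixed seed so the run is repeatable
values = [1.0] * 10
out = dropout(values, 0.1, rng)
print(out)
```

Note that the tutorial never calls rnn.eval(), and torch.no_grad() does not switch dropout off, so the layer stays active while sampling as well; that is what produces the extra variety mentioned above.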

import torch
import torch.nn as nn

class RNN(nn.Module):
    def __init__(self, input_size, hidden_size, output_size):
        super(RNN, self).__init__()
        self.hidden_size = hidden_size

        self.i2h = nn.Linear(n_categories + input_size + hidden_size, hidden_size)
        self.i2o = nn.Linear(n_categories + input_size + hidden_size, output_size)
        self.o2o = nn.Linear(hidden_size + output_size, output_size)
        self.dropout = nn.Dropout(0.1)
        self.softmax = nn.LogSoftmax(dim=1)

    def forward(self, category, input, hidden):
        input_combined = torch.cat((category, input, hidden), 1)
        hidden = self.i2h(input_combined)
        output = self.i2o(input_combined)
        output_combined = torch.cat((hidden, output), 1)
        output = self.o2o(output_combined)
        output = self.dropout(output)
        output = self.softmax(output)
        return output, hidden

    def initHidden(self):
        return torch.zeros(1, self.hidden_size)

Training

Preparing for Training

First of all, helper functions to get random pairs of (category, line):

import random

# Random item from a list
def randomChoice(l):
    return l[random.randint(0, len(l) - 1)]

# Get a random category and random line from that category
def randomTrainingPair():
    category = randomChoice(all_categories)
    line = randomChoice(category_lines[category])
    return category, line

For each timestep (that is, for each letter in a training word) the inputs of the network will be (category, current letter, hidden state) and the outputs will be (next letter, next hidden state). So for each training example we need the category, a set of input letters, and a set of output/target letters.

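Since we predict the next letter from the current letter at each timestep, the letter pairs are groups of consecutive letters from the line. For example, for "ABCD<EOS>" we would create ("A", "B"), ("B", "C"), ("C", "D"), ("D", "EOS").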
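The pairing rule fits in a few lines of plain Python. This sketch uses strings instead of tensors, and the name letter_pairs and the '<EOS>' marker string are illustrative only:

```python
EOS = '<EOS>'

def letter_pairs(line):
    # Pair each letter with the letter after it; the last letter is
    # paired with the end-of-string marker.
    targets = list(line[1:]) + [EOS]
    return list(zip(line, targets))

print(letter_pairs('ABCD'))
# [('A', 'B'), ('B', 'C'), ('C', 'D'), ('D', '<EOS>')]
```

The inputs are therefore the line itself and the targets are the line shifted left by one, which is exactly the split inputTensor and targetTensor make.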

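The category tensor is a one-hot tensor of size <1 x n_categories>. When training, we feed it to the network at every timestep. This is a design choice: it could instead have been included as part of the initial hidden state, or handled with some other strategy.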
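To see which positions the one-hot encodings light up, here is a list-based sketch mirroring categoryTensor, inputTensor, and targetTensor. Plain lists stand in for torch tensors, and the function names and the three-language category list are hypothetical:

```python
import string

all_letters = string.ascii_letters + " .,;'-"
n_letters = len(all_letters) + 1                    # last index is reserved for EOS
all_categories = ['Chinese', 'German', 'Russian']   # hypothetical subset

def one_hot(index, size):
    # A single row with a 1 at `index`, like a <1 x n_categories> tensor.
    row = [0] * size
    row[index] = 1
    return [row]

def input_indexes(line):
    # Positions of the 1s in inputTensor(line): first to last letter, no EOS.
    return [all_letters.find(c) for c in line]

def target_indexes(line):
    # Values of targetTensor(line): second letter to the end, then EOS.
    return [all_letters.find(c) for c in line[1:]] + [n_letters - 1]

print(one_hot(all_categories.index('German'), len(all_categories)))  # [[0, 1, 0]]
print(input_indexes('Abe'))   # [26, 1, 4]
print(target_indexes('Abe'))  # [1, 4, 58]
```

string.ascii_letters lists lowercase before uppercase, which is why 'A' lands at index 26.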

# One-hot vector for category
def categoryTensor(category):
    li = all_categories.index(category)
    tensor = torch.zeros(1, n_categories)
    tensor[0][li] = 1
    return tensor

# One-hot matrix of first to last letters (not including EOS) for input
def inputTensor(line):
    tensor = torch.zeros(len(line), 1, n_letters)
    for li in range(len(line)):
        letter = line[li]
        tensor[li][0][all_letters.find(letter)] = 1
    return tensor

# LongTensor of second letter to end (EOS) for target
def targetTensor(line):
    letter_indexes = [all_letters.find(line[li]) for li in range(1, len(line))]
    letter_indexes.append(n_letters - 1)  # EOS
    return torch.LongTensor(letter_indexes)

For convenience during training, we make a randomTrainingExample function that fetches a random (category, line) pair and turns it into the required (category, input, target) tensors.

# Make category, input, and target tensors from a random category, line pair
def randomTrainingExample():
    category, line = randomTrainingPair()
    category_tensor = categoryTensor(category)
    input_line_tensor = inputTensor(line)
    target_line_tensor = targetTensor(line)
    return category_tensor, input_line_tensor, target_line_tensor

Training the Network

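In contrast to classification, where only the last output is used, we make a prediction at every step, so we compute a loss at every step.

Autograd lets you simply sum the losses from every step and call backward() once at the end.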
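Numerically, each per-step NLLLoss is the negative log-probability the model assigned to the correct next letter, and the sequence loss is their sum; train() then divides that sum by the sequence length when reporting. A plain-Python sketch with made-up probabilities for a hypothetical 2-step sequence (in PyTorch, autograd would differentiate the summed tensor in one backward() call):

```python
import math

def sequence_nll(step_log_probs, targets):
    # Per-step NLL is -log p(target); the sequence loss is the sum over steps.
    return -sum(lp[t] for lp, t in zip(step_log_probs, targets))

# Hypothetical log-probabilities over a 3-symbol vocabulary.
step_log_probs = [
    [math.log(0.7), math.log(0.2), math.log(0.1)],
    [math.log(0.1), math.log(0.1), math.log(0.8)],
]
targets = [0, 2]
total = sequence_nll(step_log_probs, targets)
average = total / len(targets)   # what train() returns as its reported loss
print(total, average)
```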

criterion = nn.NLLLoss()

learning_rate = 0.0005

def train(category_tensor, input_line_tensor, target_line_tensor):
    # Shape (L,) -> (L, 1) so target_line_tensor[i] matches the batch size of 1
    target_line_tensor.unsqueeze_(-1)
    hidden = rnn.initHidden()

    rnn.zero_grad()

    loss = torch.Tensor([0])  # you can also just simply use ``loss = 0``

    for i in range(input_line_tensor.size(0)):
        output, hidden = rnn(category_tensor, input_line_tensor[i], hidden)
        l = criterion(output, target_line_tensor[i])
        loss += l

    loss.backward()

    # Plain SGD step: p <- p - learning_rate * grad
    for p in rnn.parameters():
        p.data.add_(p.grad.data, alpha=-learning_rate)

    return output, loss.item() / input_line_tensor.size(0)

To keep track of how long training takes, I am adding a timeSince(timestamp) function that returns a human-readable string:

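import time
import math

def timeSince(since):
    now = time.time()
    s = now - since
    m = math.floor(s / 60)
    s -= m * 60
    return '%dm %ds' % (m, s)

Training is business as usual: call train a bunch of times and wait a few minutes, printing the current time and loss every print_every examples, and storing the average loss per plot_every examples in all_losses for plotting later.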
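The plot_every bookkeeping is easy to get off by one, so here is a plain-Python sketch of the same accumulate, average, and reset pattern, run over a hypothetical list of per-iteration losses; bucket_averages is an illustrative name, not part of the tutorial:

```python
def bucket_averages(losses, plot_every):
    # Accumulate each loss; every plot_every iterations store the
    # window average and reset the accumulator.
    all_losses = []
    total_loss = 0.0
    for it, loss in enumerate(losses, start=1):
        total_loss += loss
        if it % plot_every == 0:
            all_losses.append(total_loss / plot_every)
            total_loss = 0.0
    return all_losses

print(bucket_averages([4.0, 2.0, 3.0, 1.0, 5.0], plot_every=2))  # [3.0, 2.0]
```

A trailing partial window (the final 5.0 here) is never recorded, so with n_iters = 100000 and plot_every = 500 the training loop yields exactly 200 points.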

rnn = RNN(n_letters, 128, n_letters)

n_iters = 100000
print_every = 5000
plot_every = 500
all_losses = []
total_loss = 0  # Reset every plot_every iters

start = time.time()

for iter in range(1, n_iters + 1):
    output, loss = train(*randomTrainingExample())
    total_loss += loss

    if iter % print_every == 0:
        print('%s (%d %d%%) %.4f' % (timeSince(start), iter, iter / n_iters * 100, loss))

    if iter % plot_every == 0:
        all_losses.append(total_loss / plot_every)
        total_loss = 0

Output

0m 37s (5000 5%) 3.1506

1m 15s (10000 10%) 2.5070

1m 55s (15000 15%) 3.3047

2m 33s (20000 20%) 2.4247

3m 12s (25000 25%) 2.6406

3m 50s (30000 30%) 2.0266

4m 29s (35000 35%) 2.6520

5m 6s (40000 40%) 2.4261

5m 45s (45000 45%) 2.2302

6m 24s (50000 50%) 1.6496

7m 2s (55000 55%) 2.7101

7m 41s (60000 60%) 2.5396

8m 19s (65000 65%) 2.5978

8m 57s (70000 70%) 1.6029

9m 35s (75000 75%) 0.9634

10m 13s (80000 80%) 3.0950

10m 52s (85000 85%) 2.0512

11m 30s (90000 90%) 2.5302

12m 8s (95000 95%) 3.2365

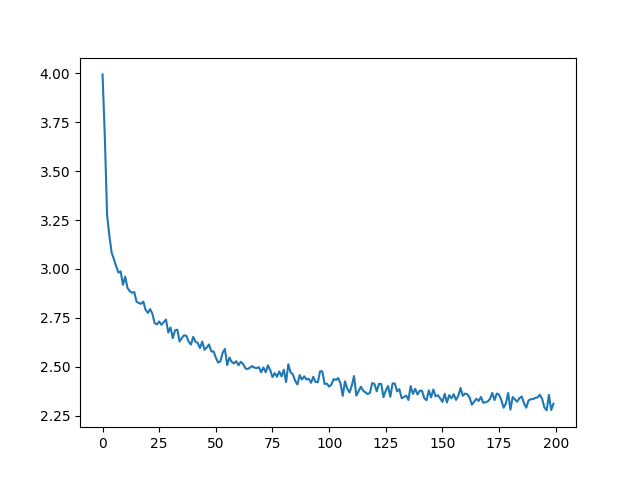

12m 47s (100000 100%) 1.7113繪制損失

繪制all_losses的歷史損失圖顯示了網絡的學習情況:

import matplotlib.pyplot as pltplt.figure()

plt.plot(all_losses)

輸出

[<matplotlib.lines.Line2D object at 0x7fa0159af880>]網絡采樣

To sample, we give the network a letter, ask what the next one is, feed that in as the next letter, and repeat until the EOS token.

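- Create tensors for the input category, the starting letter, and an empty hidden state
- Create a string output_name that begins with the starting letter
- Up to a maximum output length:
  - Feed the current letter to the network
  - Get the next letter from the highest output, along with the next hidden state
  - If the letter is EOS, stop here
  - If it is a regular letter, add it to output_name and continue
- Return the final name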
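The control flow of those steps can be checked without a trained model. In this sketch a fixed lookup table is a hypothetical stand-in for "the network's highest output"; unlike the real RNN it ignores the category and carries no hidden state, so it only illustrates the loop, the EOS stop, and the max_length cap:

```python
EOS = '<EOS>'
max_length = 20

def greedy_sample(next_letter, start_letter):
    # Start from one letter, repeatedly ask for the next one,
    # stop at EOS or after max_length steps.
    output_name = start_letter
    letter = start_letter
    for _ in range(max_length):
        letter = next_letter(letter)
        if letter == EOS:
            break
        output_name += letter
    return output_name

# Hypothetical stand-in for the network: a fixed next-letter table.
table = {'C': 'h', 'h': 'a', 'a': 'n', 'n': EOS}
print(greedy_sample(table.get, 'C'))  # Chan
```

If EOS never comes, the loop stops after max_length letters, so the result is at most 1 + max_length characters long.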

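Rather than having to give it a starting letter, another strategy would have been to include a "start of string" token in training and have the network choose its own starting letter.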
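One way the "start of string" idea could look at the data level, sketched in plain Python: prepend a start token to the inputs so the first real letter also becomes a prediction target. This is not what the tutorial's code does; the '<SOS>' token name is hypothetical, and a real implementation would also need to grow n_letters by one to give the token its own one-hot slot:

```python
SOS, EOS = '<SOS>', '<EOS>'

def pairs_with_start_token(line):
    # Inputs start with SOS and targets end with EOS, so the model learns
    # to predict the first letter from SOS alone.
    inputs = [SOS] + list(line)
    targets = list(line) + [EOS]
    return list(zip(inputs, targets))

print(pairs_with_start_token('Han'))
# [('<SOS>', 'H'), ('H', 'a'), ('a', 'n'), ('n', '<EOS>')]
```

At sampling time the network would then be fed SOS as its first input and pick the opening letter itself.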

max_length = 20

# Sample from a category and starting letter
def sample(category, start_letter='A'):
    with torch.no_grad():  # no need to track history in sampling
        category_tensor = categoryTensor(category)
        input = inputTensor(start_letter)
        hidden = rnn.initHidden()

        output_name = start_letter

        for i in range(max_length):
            output, hidden = rnn(category_tensor, input[0], hidden)
            topv, topi = output.topk(1)
            topi = topi[0][0].item()  # plain Python int index of the most likely letter
            if topi == n_letters - 1:
                break
            else:
                letter = all_letters[topi]
                output_name += letter
            input = inputTensor(letter)

        return output_name

# Get multiple samples from one category and multiple starting letters
def samples(category, start_letters='ABC'):
    for start_letter in start_letters:
        print(sample(category, start_letter))

samples('Russian', 'RUS')
samples('German', 'GER')
samples('Spanish', 'SPA')
samples('Chinese', 'CHI')

Output

Rovaki

Uarinovev

Shinan

Gerter

Eeren

Roune

Santera

Paneraz

Allan

Chin

Han

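Ion

Exercises

- Try with a different dataset of category -> line, for example:
  - Fictional series -> Character name
  - Part of speech -> Word
  - Country -> City
- Use a "start of sentence" token so that sampling can be done without choosing a start letter
- Get better results with a bigger and/or better-shaped network:
  - Try the nn.LSTM and nn.GRU layers
  - Combine multiple of these RNNs as a higher-level network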