1 Environment preparation

1.1 Host information

| IP | Hostname |
| --- | --- |
| 10.220.43.203 | master |
| 10.220.43.204 | slave |

1.2 System information

$ cat /etc/redhat-release

Alibaba Cloud Linux (Aliyun Linux) release 2.1903 LTS (Hunting Beagle)

2 Deployment preparation

Perform these steps on both the master and the slave host.

2.1 Set the hostname

# master

hostnamectl set-hostname master

# slave

hostnamectl set-hostname slave

2.2 Configure /etc/hosts

$ vim /etc/hosts

# Add the following entries:

10.220.43.203 master

10.220.43.204 slave

# Save, exit, and log in to the host again.

2.3 Network configuration

# Bridge settings (master/node)

$ cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

$ sysctl --system

3 Installation and deployment

Install on both master and slave.

3.1 Install Docker

For a binary installation of Docker, see the CSDN blog post "docker部署及常用命令" (Docker deployment and common commands).

3.2 Configure a Kubernetes yum mirror

Add the Alibaba Cloud YUM repository for Kubernetes, which is a mirror that can be reached from mainland China.

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF

[k8s]

name=k8s

enabled=1

gpgcheck=0

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

EOF

3.3 Install kubeadm/kubelet/kubectl

# Pick whichever version you want to install

$ yum install -y kubelet-1.25.0 kubectl-1.25.0 kubeadm-1.25.0

# kubelet cannot be started yet because it has not been configured; for now, only enable it to start at boot

$ systemctl enable kubelet

3.4 Install a container runtime

If your Kubernetes version is lower than 1.24, you can skip this step.

From 1.24 onwards, Kubernetes can no longer use Docker Engine directly.

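Docker Engine does not implement the CRI, which a container runtime needs in order to work in Kubernetes, so an additional service, cri-dockerd, has to be installed. cri-dockerd is based on the legacy built-in Docker Engine support (dockershim), which was removed from the kubelet in version 1.24.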
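The version rule above can be written as a small shell check. This is a minimal sketch, assuming version strings such as v1.25.0 or 1.25.0 (the format printed by kubeadm version -o short); the function name needs_cri_dockerd is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: is cri-dockerd needed for this Kubernetes version?
# Assumes versions like "v1.25.0" or "1.25.0"; only major.minor are compared.
# Returns 0 (needed) for 1.24 and newer, 1 (not needed) for older versions.
needs_cri_dockerd() {
  local ver major rest minor
  ver=${1#v}            # drop a leading "v": v1.25.0 -> 1.25.0
  major=${ver%%.*}      # 1
  rest=${ver#*.}        # 25.0
  minor=${rest%%.*}     # 25
  if [ "$major" -gt 1 ] || { [ "$major" -eq 1 ] && [ "$minor" -ge 24 ]; }; then
    return 0
  fi
  return 1
}
```

For example: needs_cri_dockerd "$(kubeadm version -o short)" && echo "install cri-dockerd".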

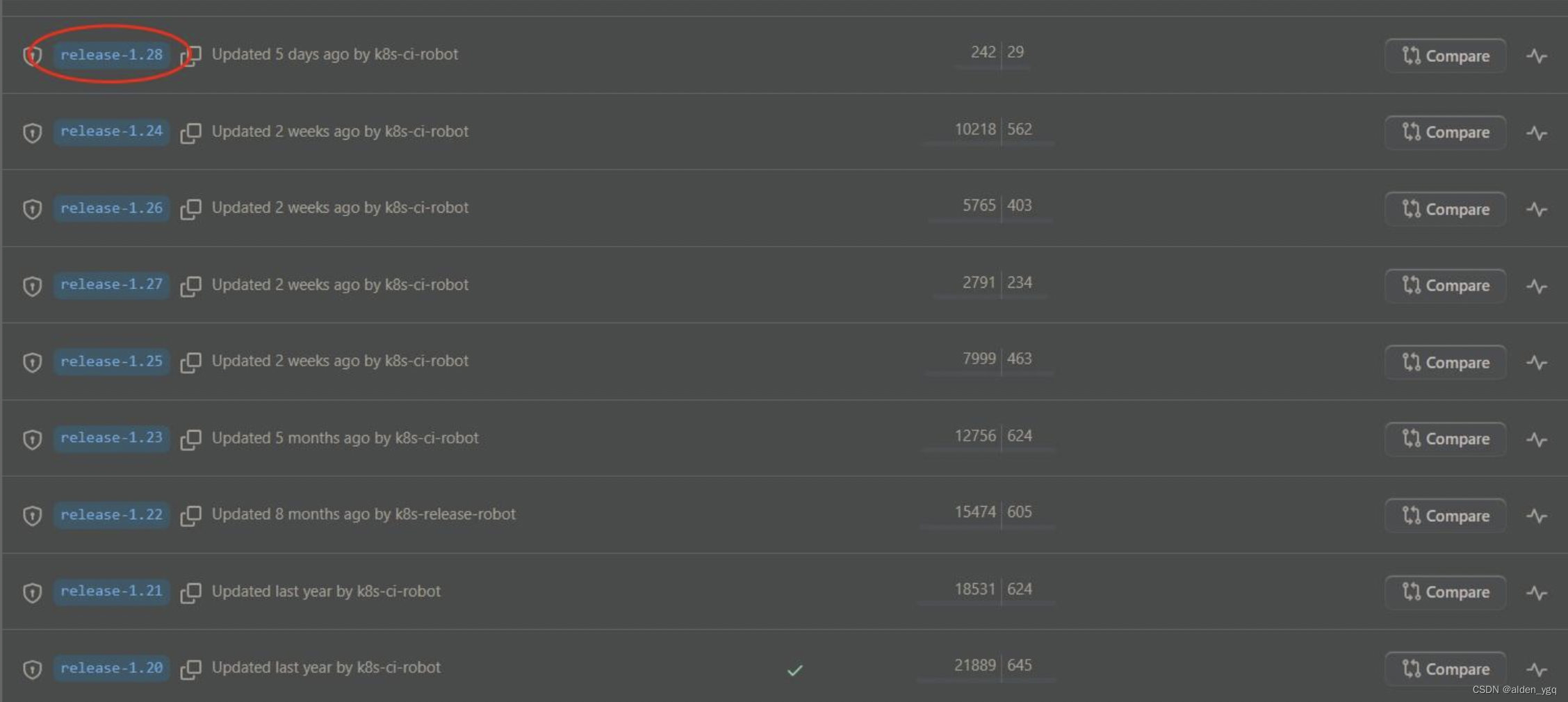

At the time of writing, the latest Kubernetes release is 1.28.x.

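Every node in the cluster needs a container runtime so that Pods can run on it. Recent Kubernetes versions require a runtime that implements the Container Runtime Interface (CRI).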

Common container runtimes used with Kubernetes include:

- containerd

- CRI-O

- Docker Engine

- Mirantis Container Runtime

The steps below use the cri-dockerd adapter to integrate Docker Engine with Kubernetes.

3.4.1 Install cri-dockerd

$ wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.6/cri-dockerd-0.2.6.amd64.tgz

$ tar -xf cri-dockerd-0.2.6.amd64.tgz

$ cp cri-dockerd/cri-dockerd /usr/bin/

$ chmod +x /usr/bin/cri-dockerd

3.4.2 Configure the systemd service

$ cat <<"EOF" > /usr/lib/systemd/system/cri-docker.service

[Unit]

Description=CRI Interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network-online.target firewalld.service docker.service

Wants=network-online.target

Requires=cri-docker.socket

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

StartLimitBurst=3

StartLimitInterval=60s

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TasksMax=infinity

Delegate=yes

KillMode=process

[Install]

WantedBy=multi-user.target

EOF

The key line is ExecStart. Its --pod-infra-container-image flag sets the pause image:

ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8
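The pause tag in --pod-infra-container-image has to match the tag kubeadm expects. For v1.25.0 that is pause:3.8, as the image list below shows. A quick comparison can catch a mismatch after an upgrade. This is a minimal sketch, assuming GNU sed. It takes file paths as arguments, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compare the pause tag configured for cri-dockerd with the tag kubeadm
# expects. Paths are arguments so the check can also run against copies.

# Read image references (one per line) on stdin; print the pause tag, e.g. 3.8.
pause_tag_from_list() {
  sed -n 's#^.*/pause:\([^[:space:]]*\)$#\1#p' | head -n 1
}

# Print the pause tag from --pod-infra-container-image in a systemd unit file.
pause_tag_from_unit() {
  sed -n 's#^.*--pod-infra-container-image=[^[:space:]]*/pause:\([^[:space:]]*\).*$#\1#p' "$1" | head -n 1
}

# $1: unit file, $2: image list file. Return 0 when both tags exist and are equal.
pause_tags_match() {
  local want have
  want=$(pause_tag_from_list < "$2")
  have=$(pause_tag_from_unit "$1")
  [ -n "$want" ] && [ "$want" = "$have" ]
}
```

For example: kubeadm config images list 2>/dev/null > /tmp/images.txt, then pause_tags_match /usr/lib/systemd/system/cri-docker.service /tmp/images.txt && echo "pause tag OK".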

You can look up the pause image version with kubeadm config images list:

$ kubeadm config images list

W1210 17:27:44.009895 31608 version.go:104] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://cdn.dl.k8s.io/release/stable-1.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

W1210 17:27:44.009935 31608 version.go:105] falling back to the local client version: v1.25.0

registry.k8s.io/kube-apiserver:v1.25.0

registry.k8s.io/kube-controller-manager:v1.25.0

registry.k8s.io/kube-scheduler:v1.25.0

registry.k8s.io/kube-proxy:v1.25.0

registry.k8s.io/pause:3.8

registry.k8s.io/etcd:3.5.4-0

registry.k8s.io/coredns/coredns:v1.9.3

3.4.3 Generate the socket file

$ cat <<"EOF" > /usr/lib/systemd/system/cri-docker.socket

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

3.4.4 Start the cri-docker service and enable it at boot

$ systemctl daemon-reload

$ systemctl enable cri-docker

$ systemctl start cri-docker

$ systemctl is-active cri-docker

3.5 Deploy Kubernetes

Run this on the master only. The slave (worker) node does not run kubeadm init.

Initialize the control plane with kubeadm init:

kubeadm init \

--apiserver-advertise-address=10.220.43.203 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.25.0 \

--service-cidr=192.168.0.0/16 \

--pod-network-cidr=172.25.0.0/16 \

--ignore-preflight-errors=all \

--cri-socket unix:///var/run/cri-dockerd.sock

- --apiserver-advertise-address: the IP address of the master node.

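- --pod-network-cidr: the Pod network range. It must match the network configured in the CNI plugin. kube-flannel.yml uses 10.244.0.0/16 by default. With flannel, either pass --pod-network-cidr=10.244.0.0/16 instead of the 172.25.0.0/16 used above, or change the Network value in kube-flannel.yml. With calico, set CALICO_IPV4POOL_CIDR to the same range.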
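The service range, the Pod range and the node addresses must not collide, so it can help to check them before running kubeadm init. This is a minimal sketch in POSIX shell arithmetic and handles IPv4 only. The function names are hypothetical. Node addresses are checked as /32 because this article does not give the node subnet mask.

```shell
#!/usr/bin/env bash
# Sketch: check whether two IPv4 CIDR blocks overlap (IPv4 only).

# Convert a dotted-quad address to a 32-bit integer.
ip_to_int() {
  local a b c d rest
  a=${1%%.*}; rest=${1#*.}
  b=${rest%%.*}; rest=${rest#*.}
  c=${rest%%.*}; d=${rest#*.}
  echo $(( ($a << 24) + ($b << 16) + ($c << 8) + $d ))
}

# Print "<first> <last>" (as integers) for a CIDR such as 10.244.0.0/16.
cidr_range() {
  local addr bits base size first
  addr=${1%/*}
  bits=${1#*/}
  base=$(ip_to_int "$addr")
  size=$(( 1 << (32 - bits) ))
  first=$(( base - base % size ))   # align to the network boundary
  echo "$first $(( first + size - 1 ))"
}

# Return 0 when the two CIDR blocks share at least one address.
cidr_overlap() {
  # $1 $2 = first/last of block A, $3 $4 = first/last of block B
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

For example, cidr_overlap 192.168.0.0/16 172.25.0.0/16 && echo "service and Pod ranges overlap" checks the two ranges used above. cidr_overlap 172.25.0.0/16 10.220.43.203/32 && echo "Pod range contains the master IP" checks the master node.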

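Output. The [WARNING CRI] and [WARNING ImagePull] lines show that cri-dockerd 0.2.6 does not implement the CRI v1 API that the preflight checks use. --ignore-preflight-errors=all lets the initialization continue anyway: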

[init] Using Kubernetes version: v1.25.0

[preflight] Running pre-flight checks

[WARNING CRI]: container runtime is not running: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 runtime API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.RuntimeService"

, error: exit status 1

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/kube-apiserver:v1.25.0: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1

[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/kube-controller-manager:v1.25.0: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1

[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/kube-scheduler:v1.25.0: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1

[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/kube-proxy:v1.25.0: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1

[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/pause:3.8: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1

[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/etcd:3.5.4-0: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1

[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/coredns:v1.9.3: output: time="2023-12-10T17:38:58+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [192.168.0.1 10.220.43.203]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [10.220.43.203 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [10.220.43.203 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 28.001898 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: 3u2q8d.u899qmv8lsm7sxyz

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.220.43.203:6443 --token 3u2q8d.u899qmv8lsm7sxyz \
--discovery-token-ca-cert-hash sha256:d7b2a47417fbff13e11a50ae92aaa0666448a92eb4c8deaaae9e9aa5c0cbc930

Because the cluster is installed with kubeadm init, the command downloads the required Docker images while it runs. The console often appears to hang for a long time at this point. It is usually still pulling images, and you can run docker images to check whether new images are appearing.
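While init appears to hang, you can see which control-plane images are still missing by comparing the list kubeadm wants with what Docker already has. This is a sketch with a hypothetical missing_images function. The two input files are assumed to come from kubeadm config images list and docker images --format. Use the same --image-repository and --kubernetes-version values as in the kubeadm init command above.

```shell
#!/usr/bin/env bash
# Sketch: print images kubeadm needs that Docker does not have yet.
# $1: file with required images, one per line, e.g. from
#     kubeadm config images list --image-repository registry.aliyuncs.com/google_containers \
#         --kubernetes-version v1.25.0 > required.txt
# $2: file with local images, e.g. from
#     docker images --format '{{.Repository}}:{{.Tag}}' > present.txt
missing_images() {
  # -F fixed strings, -x whole-line match, -v keep lines of $1 NOT found in $2.
  # grep exits 1 when it prints nothing; here that just means nothing is missing.
  grep -vxFf "$2" "$1" || true
}
```

An empty result from missing_images required.txt present.txt means every image is already local.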

3.6 Test the kubectl tool

Run on both master and slave.

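After kubeadm init finishes, the console also prints the commands below. Run them as shown. They are at the end of the console output in section 3.5.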

3.6.1 Configure kubeconfig

On the master:

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

$ scp /etc/kubernetes/admin.conf 10.220.43.204:/etc/kubernetes

root@10.220.43.204's password:

admin.conf 100% 5641 19.2MB/s 00:00

On the slave:

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

3.6.2 Set the environment variable

$ vim /etc/profile

# Add the following variable

export KUBECONFIG=/etc/kubernetes/admin.conf

$ source /etc/profile

3.6.3 Test the kubectl command

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master NotReady control-plane 21m v1.25.0 10.220.43.203 <none> Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle) 4.19.91-27.6.al7.x86_64 docker://20.10.21

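The status is usually NotReady at first. This is expected at this point: the node stays NotReady until a Pod network (CNI) plugin is installed in section 3.7, and some components may also still be starting. Check again later and it should change to Ready.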
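To check from a script which nodes are still not Ready, you can filter the STATUS column of kubectl get nodes. This is a minimal sketch; not_ready_nodes is a hypothetical helper. A status such as Ready,SchedulingDisabled (a cordoned node) is treated as Ready.

```shell
#!/usr/bin/env bash
# Sketch: read `kubectl get nodes` output (plain or -o wide) on stdin and print
# the names of nodes whose STATUS is not Ready. "Ready,SchedulingDisabled"
# (a cordoned node) counts as Ready.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}
```

For example: kubectl get nodes | not_ready_nodes. kubectl can also block until the nodes are Ready, for example with kubectl wait --for=condition=Ready nodes --all --timeout=300s.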

3.7 Install a network plugin

The most commonly used CNI network plugins are calico and flannel. They differ as follows:

- flannel does not implement network policies (Kubernetes NetworkPolicy)

- calico supports network policies

3.7.1 Install the flannel Pod CNI network plugin

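Run once, on the master. The manifest applies to the whole cluster, so it does not need to be repeated on the slave.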

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

If the command fails instead with:

The connection to the server raw.githubusercontent.com was refused - did you specify the right host or port?

Cause: hosts outside mainland China, such as raw.githubusercontent.com, cannot be reached from this network.

Fix: map the hostname to a reachable IP address in /etc/hosts.

vim /etc/hosts

# Add the following line to /etc/hosts

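199.232.28.133 raw.githubusercontent.com

Then run the command above again, and the installation should succeed. This IP address worked at the time of writing and may change.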
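Here and in section 2.2, entries are appended to /etc/hosts by hand, and repeating the step leaves duplicate lines. Below is a minimal idempotent sketch. add_hosts_entry is a hypothetical helper. The hosts file path is an optional third argument, so you can try it on a copy first. Writing /etc/hosts itself needs root.

```shell
#!/usr/bin/env bash
# Sketch: append "IP NAME" to a hosts file only if NAME is not mapped yet.
# $3 (optional) is the hosts file; it defaults to /etc/hosts.
add_hosts_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # Look for NAME in any hostname/alias column (fields 2..NF), skipping comments.
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit !found }' "$file"; then
    return 0   # already present: leave the file unchanged
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example: add_hosts_entry 10.220.43.203 master, add_hosts_entry 10.220.43.204 slave, and add_hosts_entry 199.232.28.133 raw.githubusercontent.com.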

3.7.2 Deploy the calico Pod CNI network plugin

Official site: About Calico | Calico Documentation

3.7.2.1 Download calico.yaml

$ curl https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/calico.yaml -O

3.7.2.2 Pull the calico images

$ grep -w image calico.yaml | uniq

image: docker.io/calico/cni:v3.26.1

image: docker.io/calico/node:v3.26.1

image: docker.io/calico/kube-controllers:v3.26.1
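uniq only removes adjacent duplicate lines, so the listing above depends on identical image lines being next to each other in calico.yaml. The sketch below extracts the distinct image names with sort -u and can pass them to docker pull. manifest_images is a hypothetical helper. It assumes one image: entry per line, as in calico.yaml.

```shell
#!/usr/bin/env bash
# Sketch: print every distinct image referenced in a manifest, one per line.
# Assumes one "image: <name>" entry per line, as in calico.yaml; optional
# quotes around the name are stripped. sort -u removes duplicates even when
# they are not adjacent, which uniq alone would not.
manifest_images() {
  awk '$1 == "image:" { print $2 }' "$1" | tr -d "\"'" | sort -u
}
```

For example: manifest_images calico.yaml | xargs -n 1 docker pull.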

$ docker pull docker.io/calico/cni:v3.26.1

$ docker pull docker.io/calico/node:v3.26.1

$ docker pull docker.io/calico/kube-controllers:v3.26.1

3.7.2.3 Change the calico Pod network range

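In calico.yaml, set CALICO_IPV4POOL_CIDR to the same range as the Pod network passed to kubeadm init (--pod-network-cidr=172.25.0.0/16 in section 3.5). The entry is commented out by default. Uncomment it and keep its indentation aligned with the neighbouring entries, otherwise applying the file fails.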
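Instead of editing the file in vim as shown next, you can uncomment and set the entry with sed, which keeps the indentation of the surrounding env entries. This sketch assumes GNU sed and the default commented-out layout of calico.yaml, which is # - name: CALICO_IPV4POOL_CIDR followed by #   value: "192.168.0.0/16". set_calico_cidr is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch (GNU sed): uncomment CALICO_IPV4POOL_CIDR in calico.yaml and set its value.
# Assumes the default layout:
#             # - name: CALICO_IPV4POOL_CIDR
#             #   value: "192.168.0.0/16"
# Removing "# " from both lines keeps them aligned with the other env entries.
# An entry that is already uncommented just gets the new value.
set_calico_cidr() {
  local file=$1 cidr=$2
  sed -i \
    -e 's|^\([[:space:]]*\)# \(- name: CALICO_IPV4POOL_CIDR\)|\1\2|' \
    -e '/- name: CALICO_IPV4POOL_CIDR/{n;s|^\([[:space:]]*\)#   value:|\1  value:|;s|value: ".*"|value: "'"$cidr"'"|}' \
    "$file"
}
```

For example: set_calico_cidr calico.yaml 172.25.0.0/16, then check the result with grep -A1 CALICO_IPV4POOL_CIDR calico.yaml.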

$ vim calico.yaml

            - name: CALICO_IPV4POOL_CIDR
              value: "172.25.0.0/16"

3.7.2.4 Apply calico.yaml

$ kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers configured

serviceaccount/calico-kube-controllers unchanged

serviceaccount/calico-node unchanged

serviceaccount/calico-cni-plugin unchanged

configmap/calico-config unchanged

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org configured

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrole.rbac.authorization.k8s.io/calico-node unchanged

clusterrole.rbac.authorization.k8s.io/calico-cni-plugin unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-node unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin unchanged

3.8 Join the slave node to the master

This step uses the join command printed at the end of the console output in section 3.5 (Deploy Kubernetes):

kubeadm join 10.220.43.203:6443 --token 3u2q8d.u899qmv8lsm7sxyz \
--discovery-token-ca-cert-hash sha256:d7b2a47417fbff13e11a50ae92aaa0666448a92eb4c8deaaae9e9aa5c0cbc930

On the slave, run it with two extra flags:

kubeadm join 10.220.43.203:6443 --token 3u2q8d.u899qmv8lsm7sxyz \
--discovery-token-ca-cert-hash sha256:d7b2a47417fbff13e11a50ae92aaa0666448a92eb4c8deaaae9e9aa5c0cbc930 \
--ignore-preflight-errors=all \
--cri-socket unix:///var/run/cri-dockerd.sock

- --ignore-preflight-errors=all

- --cri-socket unix:///var/run/cri-dockerd.sock

Both flags are required. Without them, the join fails with errors such as:

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR CRI]: container runtime is not running: output: time="2023-08-31T16:42:23+08:00" level=fatal msg="validate service connection: CRI v1 runtime API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.RuntimeService"

, error: exit status 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

Found multiple CRI endpoints on the host. Please define which one do you wish to use by setting the 'criSocket' field in the kubeadm configuration file: unix:///var/run/containerd/containerd.sock, unix:///var/run/cri-dockerd.sock

To see the stack trace of this error execute with --v=5 or higher
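The last error above appears when more than one CRI socket exists on the host. The minimal sketch below lists which of the usual socket paths are present, so you can tell whether --cri-socket is needed. The path list (containerd, CRI-O and cri-dockerd defaults) and the function names are assumptions. The optional argument is a root prefix for trying the check on a test directory.

```shell
#!/usr/bin/env bash
# Sketch: list the well-known CRI sockets that exist on this host.
# The path list (containerd, CRI-O, cri-dockerd defaults) is an assumption.
# $1 (optional) is a root prefix, e.g. a test directory; empty means "/".
cri_sockets() {
  local root=${1:-} s
  for s in /var/run/containerd/containerd.sock \
           /var/run/crio/crio.sock \
           /var/run/cri-dockerd.sock; do
    # -e (exists) rather than -S (is a socket) so placeholder files also count
    if [ -e "$root$s" ]; then
      echo "unix://$s"
    fi
  done
}

# Return 0 when exactly one CRI socket exists. Judging by the error message
# above, kubeadm should then pick it without an explicit --cri-socket.
single_cri_socket() {
  [ "$(cri_sockets "${1:-}" | wc -l)" -eq 1 ]
}
```

In the situation shown above, cri_sockets would print both the containerd and the cri-dockerd socket. That is why --cri-socket unix:///var/run/cri-dockerd.sock has to be given.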

3.9 Verification

On the master node:

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready control-plane 49m v1.25.0 10.220.43.203 <none> Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle) 4.19.91-27.6.al7.x86_64 docker://20.10.21

slave Ready <none> 10m v1.25.0 10.220.43.204 <none> Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle) 4.19.91-27.6.al7.x86_64 docker://20.10.21

On the slave node:

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready control-plane 50m v1.25.0 10.220.43.203 <none> Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle) 4.19.91-27.6.al7.x86_64 docker://20.10.21

slave Ready <none> 11m v1.25.0 10.220.43.204 <none> Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle) 4.19.91-27.6.al7.x86_64 docker://20.10.21

4 Common usage questions

4.1 The kubeadm join command was not saved after kubeadm init. How do I get it again?

# Generate a new token; --print-join-command prints the complete kubeadm join command

kubeadm token create --print-join-command

# The following command lists existing tokens

kubeadm token list

4.2 kubeadm join failed on a node. How do I join again?

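# If the failed attempt left state behind on the node, reset it first (run on the node)

kubeadm reset --cri-socket unix:///var/run/cri-dockerd.sock

# Then generate a new token on the master; this prints a complete join command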

kubeadm token create --print-join-command

# The following command lists existing tokens

kubeadm token list

4.3 Restart kubelet

systemctl daemon-reload

systemctl restart kubelet

4.4 Query system components

# List nodes

kubectl get nodes

# List pods. You usually need to pass -n (the namespace); without it, kubectl behaves as if -n default were given

kubectl get pods -n kube-system

5 Troubleshooting

5.1 kubeadm init errors

[root@k8s centos]# kubeadm init

I1205 06:44:01.459391 12097 version.go:94] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

I1205 06:44:01.459549 12097 version.go:95] falling back to the local client version: v1.13.0

[init] Using Kubernetes version: v1.13.0

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING Hostname]: hostname "k8s.novalocal" could not be reached

[WARNING Hostname]: hostname "k8s.novalocal": lookup k8s.novalocal on 10.32.148.99:53: no such host

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

5.1.1 Network settings problem

5.1.1.1 Error message

/proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

5.1.1.2 Solution

$ echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

5.1.2 Enable docker

5.1.2.1 Error message

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

5.1.2.2 Solution

$ systemctl enable docker.service

5.1.3 Hostname problem

5.1.3.1 Error message

[WARNING Hostname]: hostname "slave" could not be reached

[WARNING Hostname]: hostname "slave": lookup slave on 10.32.148.99:53: no such host

5.1.3.2 Solution

1) Change the hostname

$ hostnamectl set-hostname slave

2) Update /etc/hostname

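$ echo slave > /etc/hostname

3) Make sure the hostname also resolves, for example through the /etc/hosts entries from section 2.2. The warning above comes from the name lookup failing.

5.1.4 Enable kubelet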

5.1.4.1 Error message

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

5.1.4.2 Solution

$ systemctl enable kubelet.service

6 Configure tab completion for kubectl commands

$ kubectl --help | grep bash

completion      Output shell completion code for the specified shell (bash or zsh)

Add source <(kubectl completion bash) to /etc/profile and apply the change:

$ cat /etc/profile | head -2

# /etc/profile

source <(kubectl completion bash)

$ source /etc/profile

Verify that kubectl commands now autocomplete:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ops-master-1 Ready control-plane,master 33m v1.21.0

ops-worker-1 Ready <none> 30m v1.21.0

ops-worker-2 Ready <none> 30m v1.21.0

# Note: the bash-completion package (bash-completion-2.1-6.el7.noarch here) must be installed, otherwise commands are not completed

$ rpm -qa | grep bash

bash-completion-2.1-6.el7.noarch

bash-4.2.46-30.el7.x86_64

bash-doc-4.2.46-30.el7.x86_64
