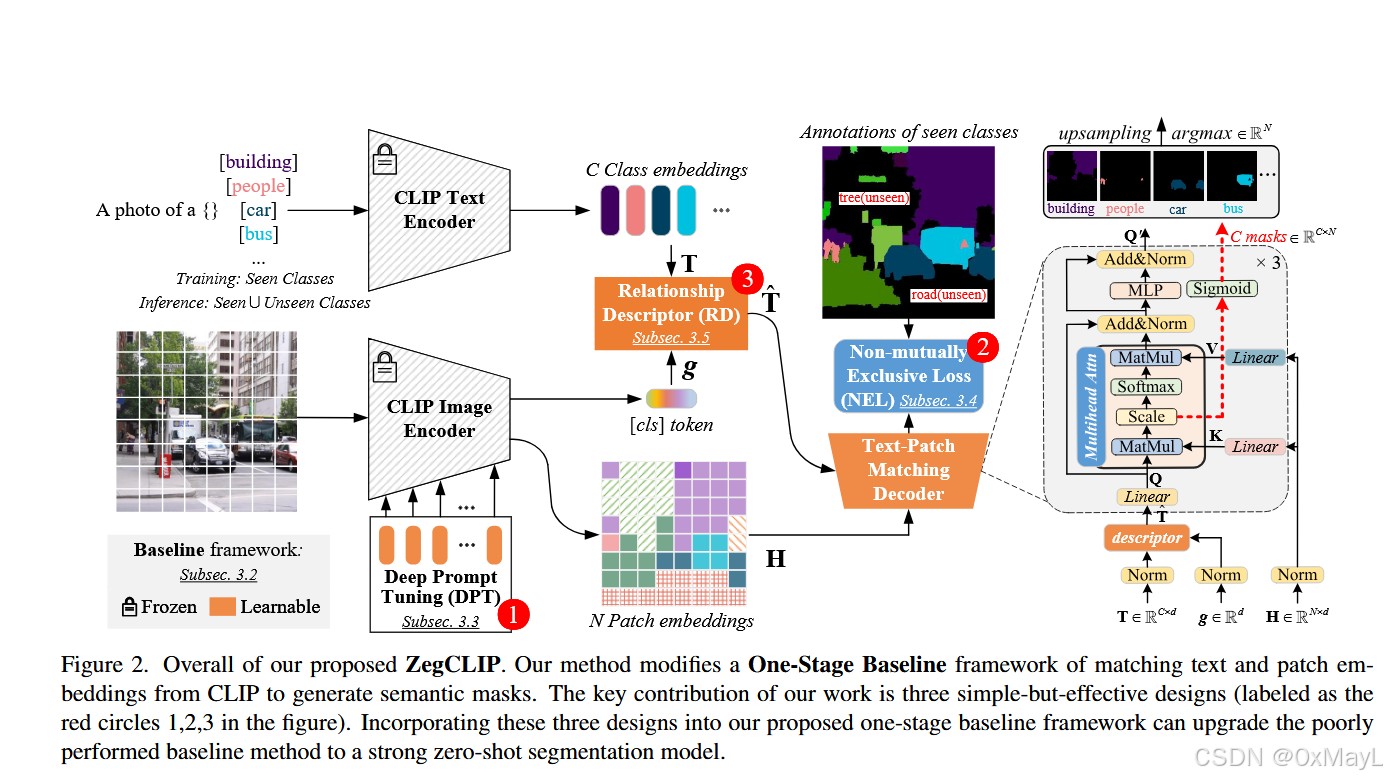

Zegclip

- 獲取圖像的特殊編碼:使用prompt tuning的技術,目的是減少過擬合和計算量。

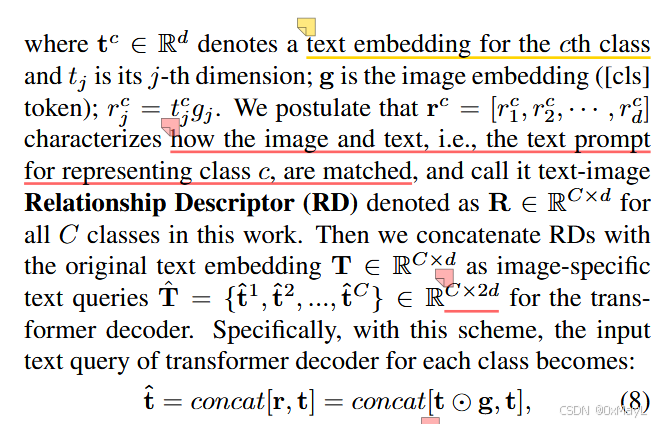

- 調整文本編碼:使用RD關系描述符,將每一個文本對應的[cls] token和圖像對應的[cls] token作哈密頓積,最后文本[cls]token

形式化任務

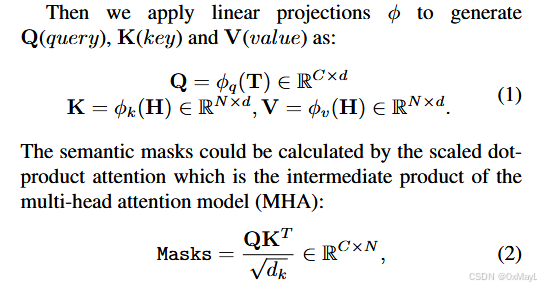

- 文本的[cls] token和每一個patch token進行一一匹配,這一點是通過交叉注意力實現的,通過

argmax操作得到最后的分割結果

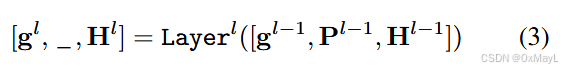

圖像編碼:prompt tuning

- P作為prompt token

文本編碼:RD關系描述符

While being quite intuitive, we find this design could lead to severe overfitting. We postulate that this is because the matching capability between the text query and image patterns is only trained on the seen-class datasets.

Non-mutually Exclusive Loss (NEL)

“the class space will be different from the training scenario, making the logit of an unseen class poorly calibrated with the other unseen classes.” (Zhou 等, 2023, p. 5) (pdf) 🔤類空間將與訓練場景不同,使得看不見的類的 logit 與其他看不見的類的校準很差。🔤

- 動機:unseen class相比seen class的概率很差,不適合進行softmax

inductive和transductive訓練設置

- inductive:訓練只用seen類,完全不了解unseen class的name,完全不知道unseen class的標注信息,測試時預測seen類和unseen類

- transductive:訓練分為兩個階段,全程都知道seen和unseen class的name,但是unseen class的標注信息完全不知道。第一個階段只在seen class上訓練,然后預測unseen class的標注信息,生成偽標簽。第二個階段使用unseen class的為標簽和seen class的ground truth進行訓練,測試與inductive一致。

“In the “transductive” setting, we train our ZegCLIP model on seen classes in the first half of training iterations and then apply self-training via generating pseudo labels in the rest of iterations.” (Zhou 等, 2023, p. 6) (pdf) 🔤在“轉導”設置中,我們在訓練迭代的前半部分在看到的類上訓練我們的 ZegCLIP 模型,然后在其余迭代中通過生成偽標簽來應用自訓練。🔤

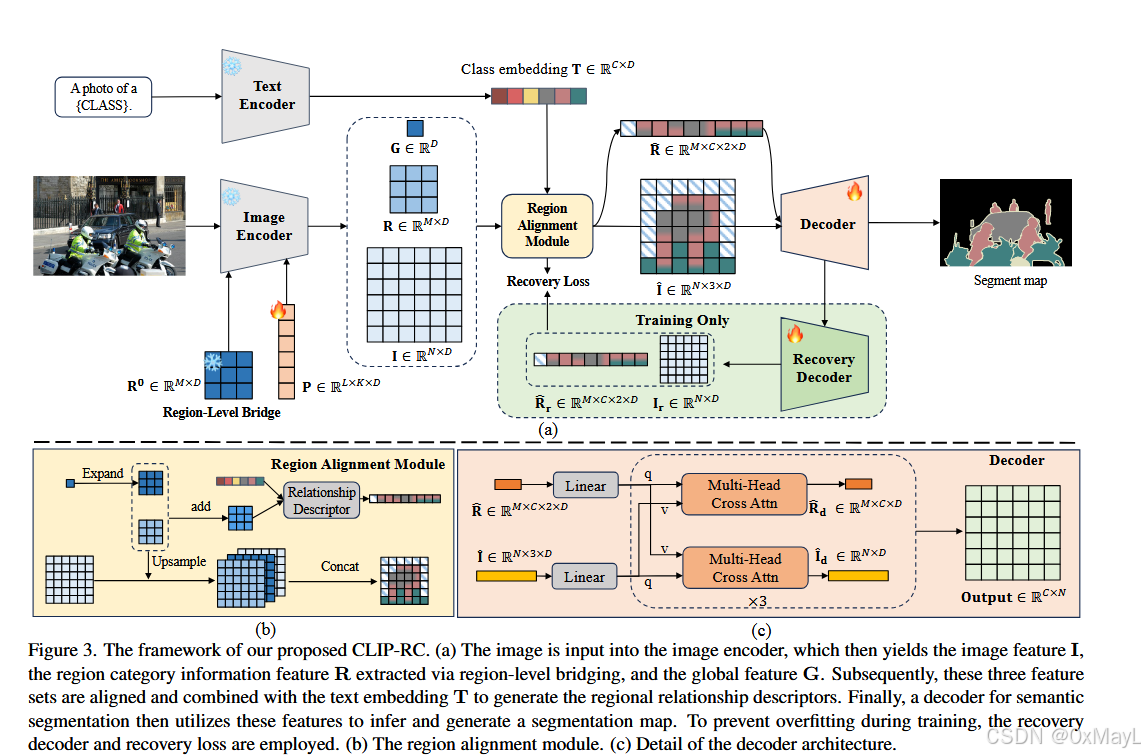

CLIP-RC

- RLB:VIT的特殊編碼

- RAM:Text encoder的特殊編碼+對齊

- 損失函數:Recovery Decoder With Recovery Loss

RLB

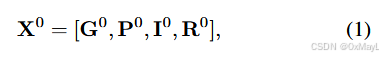

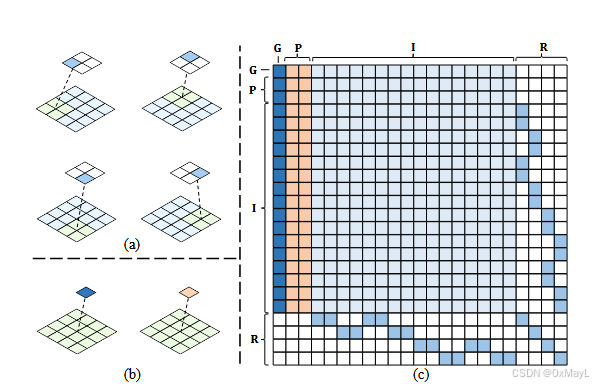

- VIT的輸入結構

- VIT的輸出結構

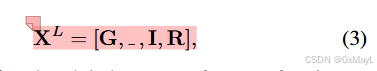

- G是圖像token(1,D),P是prompt token(K,D),I是patch token(N,D),R是作者引入的region token(M,D)。

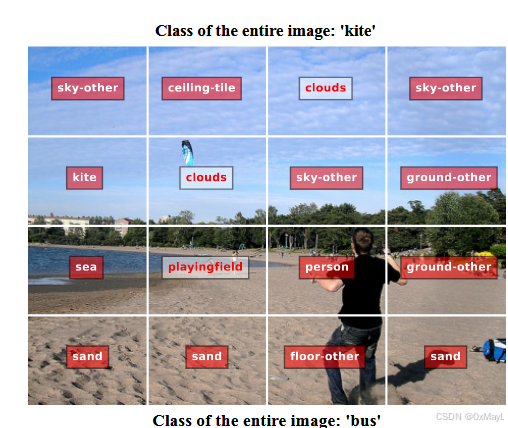

R的理解和掩碼設計

-

作者認為每一個R中的token對應了NMNM\frac{\sqrt{N}\sqrt{M}}{\sqrt{N}\sqrt{M}}N?M?N?M??個patches

-

例子:假設N=4,M=2,圖像中2x2的區域對應一個R的token

-

多了個掩碼矩陣,一個R的token對應這些patch,其他的patch不需要參與計算,所以說有個掩碼矩陣

-

輸出結果正常拋棄prompt token。

RLB

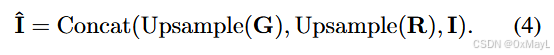

對齊圖像編碼

- image特征對齊為:(N,3D)

區域描述符(特殊編碼text encoder)

- 得到特殊編碼:(M,C,2D)

Decoder頭

- 先把I^\hat{I}I^和R^\hat{R}R^進行線性層映射到D維度(N,D)和(M,C,D)

- 正常交叉注意力

- 得到I和R形狀不變

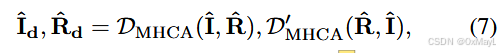

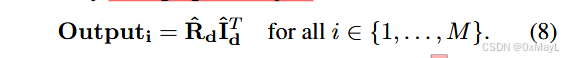

where, DMHCA and D′ MHCA denotes the decoder for semantic segmentation with multi-head cross attention, and ?Id ∈ RN×D and ?Rd ∈ RM×C×D are the image features and region-specific text queries respectively, used for segmentation. The segmentation map Output ∈ RC×N is obtained by averaging the outputs:

- Output:(M,C,D),然后對M維度平均得到最后的掩碼矩陣。

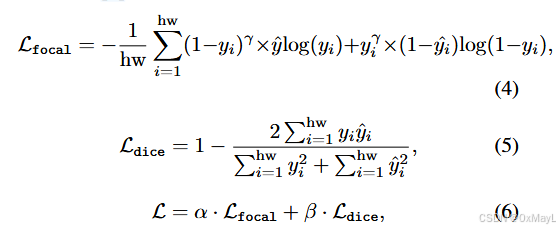

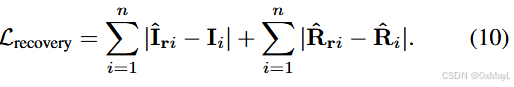

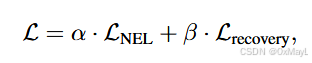

損失函數

- NLS+Recovery Loss

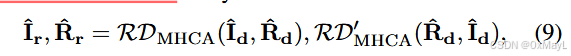

- 完全一模一樣架構的decoder(輔助頭)

Then, during training, a recovery decoder recovers the features extracted by the decoder into features with strong generalization. The network architecture of the recovery decoder is completely identical to that of the semantic segmentation decoder. They are recovered as follows:

- 這里的I指的是原始CLIP提取的圖像特征,已經被凍結,R指的是關系描述符,也就是文本特征。

)

)

)