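This article walks step by step through bringing up a production-ready Apache DolphinScheduler 3.2.2 cluster in a K8s + Rancher environment. It is organized around three pain points: image acceleration, dependency localization, and storage persistence, and each configuration change is explained in detail.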

Environment preparation

1. Software preparation

2. Environment planning

Deployment

1. Download the apache-dolphinscheduler source from the official site

[root@master ~]# mkdir /opt/dolphinscheduler

[root@master ~]# cd /opt/dolphinscheduler

[root@master dolphinscheduler]# curl -LO https://dlcdn.apache.org/dolphinscheduler/3.2.2/apache-dolphinscheduler-3.2.2-src.tar.gz

[root@master dolphinscheduler]# tar -xzvf ./apache-dolphinscheduler-3.2.2-src.tar.gz

2. Change the chart's repository address

(1) Edit the Chart.yaml configuration file

For every dependency, change repository: https://raw.githubusercontent.com/bitnami/charts/archive-full-index/bitnami to repository: https://raw.gitmirror.com/bitnami/charts/archive-full-index/bitnami

[root@master dolphinscheduler]# cd /opt/dolphinscheduler/apache-dolphinscheduler-3.2.2-src/deploy/kubernetes/dolphinscheduler

[root@master dolphinscheduler]# vim Chart.yaml

# Application charts are a collection of templates that can be packaged into versioned archives
# to be deployed.
#
# Library charts provide useful utilities or functions for the chart developer. They're included as
# a dependency of application charts to inject those utilities and functions into the rendering
# pipeline. Library charts do not define any templates and therefore cannot be deployed.
type: application

# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
version: 3.3.0-alpha

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application.
appVersion: 3.3.0-alpha

dependencies:
- name: postgresql
  version: 10.3.18
  # Due to a change in the Bitnami repo, https://charts.bitnami.com/bitnami was truncated only
  # containing entries for the latest 6 months (from January 2022 on).
  # This URL: https://raw.githubusercontent.com/bitnami/charts/archive-full-index/bitnami
  # contains the full 'index.yaml'.
  # See detail here: https://github.com/bitnami/charts/issues/10833
  #repository: https://raw.githubusercontent.com/bitnami/charts/archive-full-index/bitnami
  repository: https://raw.gitmirror.com/bitnami/charts/archive-full-index/bitnami
  condition: postgresql.enabled
- name: zookeeper
  version: 6.5.3
  # Same as above.
  #repository: https://raw.githubusercontent.com/bitnami/charts/archive-full-index/bitnami
  repository: https://raw.gitmirror.com/bitnami/charts/archive-full-index/bitnami
  condition: zookeeper.enabled
- name: mysql
  version: 9.4.1
  #repository: https://raw.githubusercontent.com/bitnami/charts/archive-full-index/bitnami
  repository: https://raw.gitmirror.com/bitnami/charts/archive-full-index/bitnami
  condition: mysql.enabled
- name: minio
  version: 11.10.13
  #repository: https://raw.githubusercontent.com/bitnami/charts/archive-full-index/bitnami
  repository: https://raw.gitmirror.com/bitnami/charts/archive-full-index/bitnami
  condition: minio.enabled
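The repository swap in Chart.yaml can also be scripted instead of edited by hand in vim. The following is a minimal sketch, assuming every dependency uses exactly the upstream URL shown above; the helper name `switch_chart_repo` is hypothetical and not part of the chart. It rewrites only active `repository:` lines, leaves commented-out lines untouched, and keeps a `.bak` copy of the original file.

```shell
#!/usr/bin/env bash
# URLs taken from the Chart.yaml edit described above.
OLD_REPO='https://raw.githubusercontent.com/bitnami/charts/archive-full-index/bitnami'
NEW_REPO='https://raw.gitmirror.com/bitnami/charts/archive-full-index/bitnami'

# Hypothetical helper: point every active dependency in a Chart.yaml at the mirror.
switch_chart_repo() {
  local chart_file="$1"
  # Match "repository: <old url>" with any indentation; lines starting with "#" do not match.
  # -i.bak edits in place and leaves the original as <file>.bak.
  sed -i.bak "s|^\([[:space:]]*repository:[[:space:]]*\)${OLD_REPO}\$|\1${NEW_REPO}|" "$chart_file"
}
```

From the chart directory this would be called as `switch_chart_repo Chart.yaml`, after which `grep repository: Chart.yaml` shows which lines changed.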

(2) Run the Helm update

After editing Chart.yaml, run the following commands:

[root@master dolphinscheduler]# helm repo add bitnami-full-index https://raw.gitmirror.com/bitnami/charts/archive-full-index/bitnami

[root@master dolphinscheduler]# helm repo add bitnami https://charts.bitnami.com/bitnami

[root@master dolphinscheduler]# helm dependency update .

(3)、解壓依賴文件

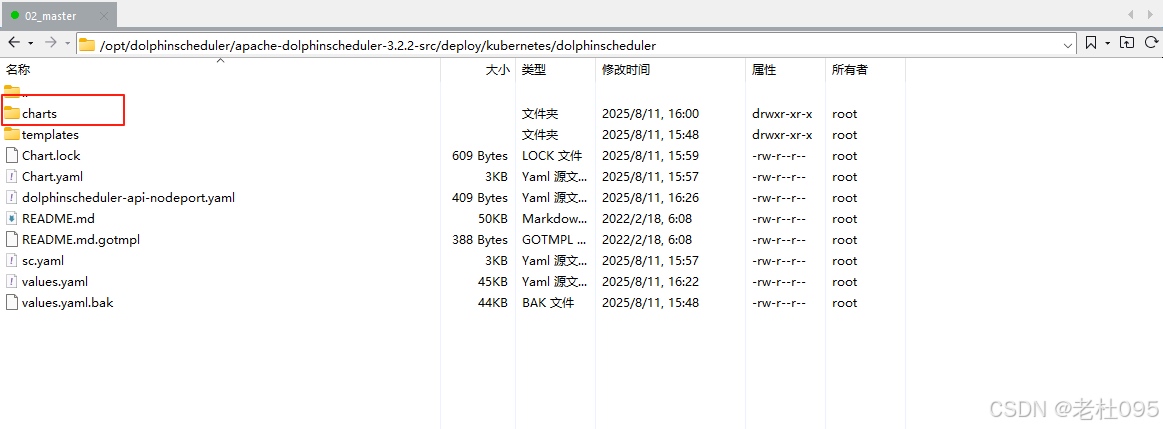

helm更新完成后,在當前目錄會新生成【charts】目錄,該目錄下就是過helm更新下載到本地的依賴文件,如下圖所示:

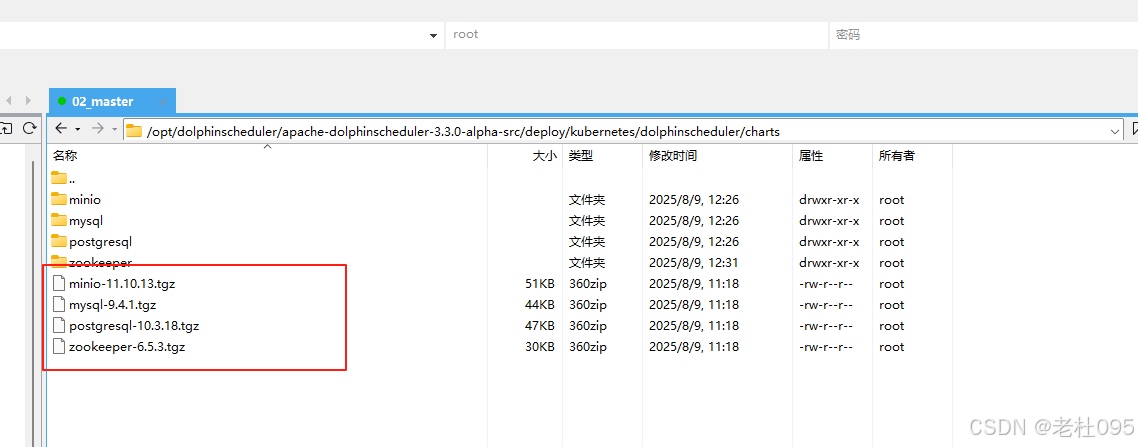

解壓依賴包文件,因為本次安裝除了Mysql都需要同步安裝,所以要解壓minio-11.10.13.tgz,postgresql-10.3.18.tgz,zookeeper-6.5.3.tgz這幾個壓縮包

[root@master dolphinscheduler]# cd charts

[root@master charts]# tar -zxvf minio-11.10.13.tgz

[root@master charts]# tar -zxvf postgresql-10.3.18.tgz

[root@master charts]# tar -zxvf zookeeper-6.5.3.tgz
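The three extractions can be folded into one loop. This is a sketch only; the helper name `extract_charts` is hypothetical, and it assumes the archives sit in the charts directory created by the Helm update.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unpack the named chart archives found in a charts directory.
extract_charts() {
  local charts_dir="$1"; shift
  local archive
  for archive in "$@"; do
    # -C extracts next to the archive, so each chart lands in <charts_dir>/<chart name>/.
    tar -xzf "${charts_dir}/${archive}" -C "$charts_dir" || return 1
  done
}
```

From the chart directory, the call would look like `extract_charts ./charts minio-11.10.13.tgz postgresql-10.3.18.tgz zookeeper-6.5.3.tgz`. The function stops at the first archive that fails to extract.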

3. Modify the DolphinScheduler Helm configuration file

cd /opt/dolphinscheduler/apache-dolphinscheduler-3.2.2-src/deploy/kubernetes/dolphinscheduler

vim values.yaml

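(1) Change the init image and component images

Change the image addresses of busybox and of each DolphinScheduler component. After the change, the section looks like this: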

# -- World time and date for cities in all time zones
timezone: "Asia/Shanghai"

# -- Used to detect whether dolphinscheduler dependent services such as database are ready
initImage:
  # -- Image pull policy. Options: Always, Never, IfNotPresent
  pullPolicy: "IfNotPresent"
  # -- Specify initImage repository
  #busybox: "busybox:1.30.1"
  busybox: "registry.cn-hangzhou.aliyuncs.com/docker_image-ljx/busybox:1.30.1"

image:
  # -- Docker image repository for the DolphinScheduler
  registry: registry.cn-hangzhou.aliyuncs.com/docker_image-ljx
  # -- Docker image version for the DolphinScheduler
  tag: 3.2.2
  # -- Image pull policy. Options: Always, Never, IfNotPresent
  pullPolicy: "IfNotPresent"
  # -- Specify a imagePullSecrets
  pullSecret: ""
  # -- master image
  master: dolphinscheduler-master
  # -- worker image
  worker: dolphinscheduler-worker
  # -- api-server image
  api: dolphinscheduler-api
  # -- alert-server image
  alert: dolphinscheduler-alert-server
  # -- tools image
  tools: dolphinscheduler-tools
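Before installing, it can help to list the full image references these settings produce, so they can be checked against the mirror registry. The sketch below assumes the chart joins the values as `<registry>/<component>:<tag>`; that format is an assumption about the chart templates, and the helper name `list_ds_images` is hypothetical.

```shell
#!/usr/bin/env bash
# Values copied from the image section of values.yaml shown above.
REGISTRY='registry.cn-hangzhou.aliyuncs.com/docker_image-ljx'
TAG='3.2.2'
COMPONENTS='dolphinscheduler-master dolphinscheduler-worker dolphinscheduler-api dolphinscheduler-alert-server dolphinscheduler-tools'

# Hypothetical helper: print one image reference per component.
# Assumed format: <registry>/<component>:<tag>.
list_ds_images() {
  local name
  for name in $COMPONENTS; do
    printf '%s/%s:%s\n' "$REGISTRY" "$name" "$TAG"
  done
}
```

Each printed line can then be passed to whatever pull command the cluster's container runtime provides, to confirm the image exists before Helm tries to schedule it.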

(2)、修改postgresql配置

- 本文安裝依賴的數據庫配置了默認的數據庫postgresql,參見配置

datasource.profile: postgresql,但是values.yaml的默認配置中并沒有配置postgresql鏡像,會導致postgresql鏡像拉取失敗。 - 本文dolphinscheduler依賴于

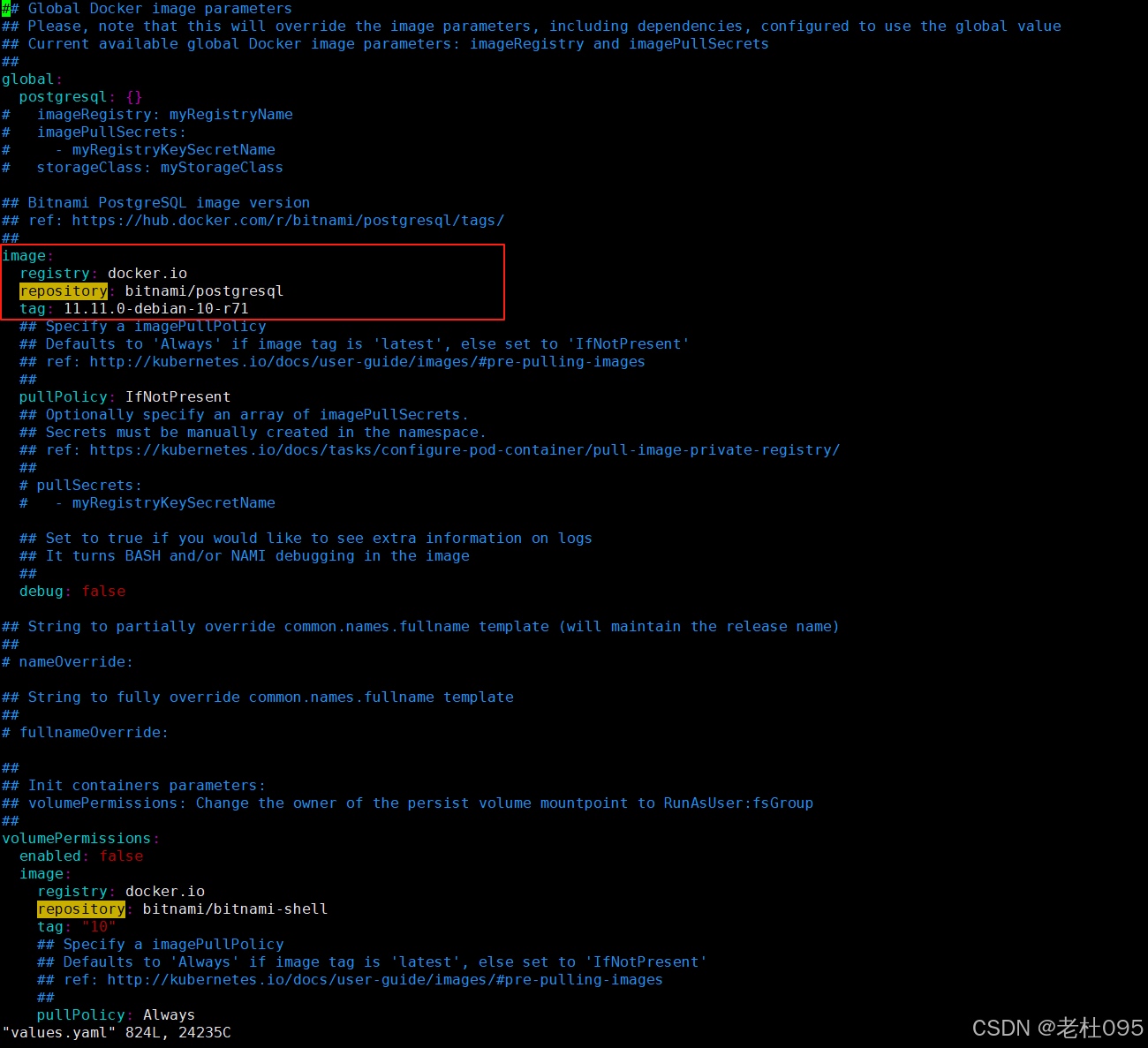

bitnami postgresql的Chart,且已經在上一步 解壓了。獲取鏡像地址的方式只需進入解壓目錄(/opt/dolphinscheduler/apache-dolphinscheduler-3.2.2-src/deploy/kubernetes/dolphinscheduler/charts/postgresql)。

查看postgresql的values.yaml(/opt/dolphinscheduler/apache-dolphinscheduler-3.2.2-src/deploy/kubernetes/dolphinscheduler/charts/postgresql/values.yaml)找到鏡像地址:

image:
  registry: docker.io
  repository: bitnami/postgresql
  tag: 11.11.0-debian-10-r71
  ## Specify a imagePullPolicy
  ## Defaults to 'Always' if image tag is 'latest', else set to 'IfNotPresent'
  ## ref: http://kubernetes.io/docs/user-guide/images/#pre-pulling-images
  ##
  pullPolicy: IfNotPresent
  ## Optionally specify an array of imagePullSecrets.
  ## Secrets must be manually created in the namespace.
  ## ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/
  ##
  # pullSecrets:
  #   - myRegistryKeySecretName
  ## Set to true if you would like to see extra information on logs
  ## It turns BASH and/or NAMI debugging in the image
  ##
  debug: false
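The registry, repository and tag can also be read out of a subchart's values.yaml without opening an editor. This is a minimal sketch, assuming the file has a top-level `image:` key whose fields are indented by two spaces, as in the excerpt above; the helper name `show_image_block` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the top-level "image:" key of a values.yaml together with
# its registry, repository and tag lines, which are the lines copied into the parent chart.
show_image_block() {
  awk '
    /^image:/            { in_block = 1; print; next }  # start of the top-level block
    in_block && /^[^ #]/ { exit }                       # the next top-level key ends it
    in_block && /^  (registry|repository|tag):/ { print }
  ' "$1"
}
```

For example, `show_image_block charts/postgresql/values.yaml` prints only the lines that need to be pasted under the postgresql key of the parent values.yaml. The same call works for the minio and zookeeper subcharts if their files follow the same layout.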

Copy the image settings from the file above, as shown in the figure:

Paste the copied image block under the postgresql key in /opt/dolphinscheduler/apache-dolphinscheduler-3.2.2-src/deploy/kubernetes/dolphinscheduler/values.yaml, as follows:

datasource:
  # -- The profile of datasource
  profile: postgresql

postgresql:
  image:
    registry: docker.io
    repository: bitnami/postgresql
    tag: 11.11.0-debian-10-r71
  # -- If not exists external PostgreSQL, by default, the DolphinScheduler will use a internal PostgreSQL
  enabled: true
  # -- The username for internal PostgreSQL
  postgresqlUsername: "root"
  # -- The password for internal PostgreSQL
  postgresqlPassword: "root"
  # -- The database for internal PostgreSQL
  postgresqlDatabase: "dolphinscheduler"
  # -- The driverClassName for internal PostgreSQL
  driverClassName: "org.postgresql.Driver"
  # -- The params for internal PostgreSQL
  params: "characterEncoding=utf8"
  persistence:
    # -- Set postgresql.persistence.enabled to true to mount a new volume for internal PostgreSQL
    enabled: false
    # -- `PersistentVolumeClaim` size
    size: "20Gi"
    # -- PostgreSQL data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioning
    storageClass: "-"

(3)、修改minio配置

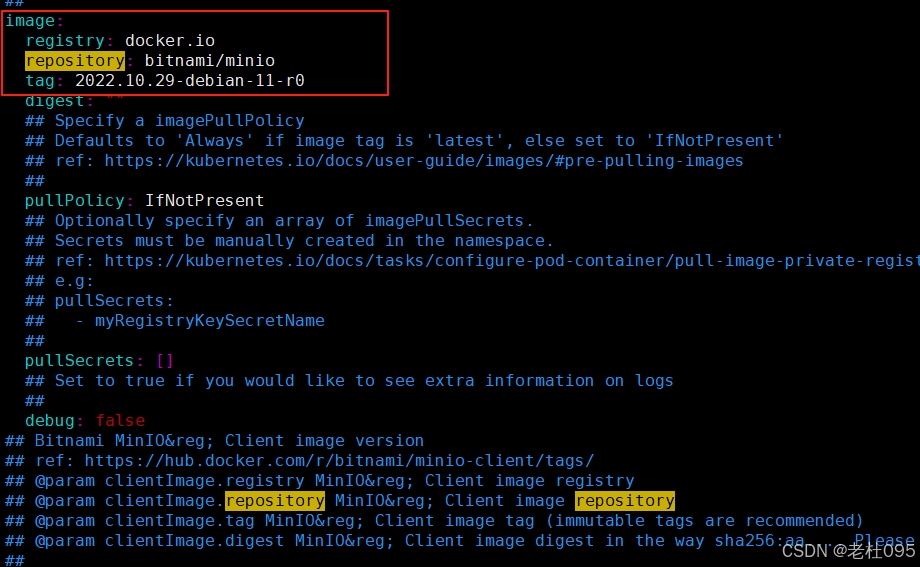

查看minio的values.yaml(/opt/dolphinscheduler/apache-dolphinscheduler-3.2.2-src/deploy/kubernetes/dolphinscheduler/charts/minio/values.yaml)找到鏡像地址:

image:
  registry: docker.io
  repository: bitnami/minio
  tag: 2022.10.29-debian-11-r0
  digest: ""
  ## Specify a imagePullPolicy
  ## Defaults to 'Always' if image tag is 'latest', else set to 'IfNotPresent'
  ## ref: https://kubernetes.io/docs/user-guide/images/#pre-pulling-images
  ##
  pullPolicy: IfNotPresent
  ## Optionally specify an array of imagePullSecrets.
  ## Secrets must be manually created in the namespace.
  ## ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/
  ## e.g:
  ## pullSecrets:
  ##   - myRegistryKeySecretName
  ##
  pullSecrets: []
  ## Set to true if you would like to see extra information on logs
  ##
  debug: false

Copy the image settings from the file above, as shown in the figure:

Paste the copied image block under the minio key in /opt/dolphinscheduler/apache-dolphinscheduler-3.2.2-src/deploy/kubernetes/dolphinscheduler/values.yaml, as follows:

minio:
  image:
    registry: docker.io
    repository: bitnami/minio
    tag: 2022.10.29-debian-11-r0
  # -- Deploy minio and configure it as the default storage for DolphinScheduler, note this is for demo only, not for production.
  enabled: true
  auth:
    # -- minio username
    rootUser: minioadmin
    # -- minio password
    rootPassword: minioadmin
  persistence:
    # -- Set minio.persistence.enabled to true to mount a new volume for internal minio
    enabled: false
  # -- minio default buckets
  defaultBuckets: "dolphinscheduler"

(4)、修改zookeeper配置

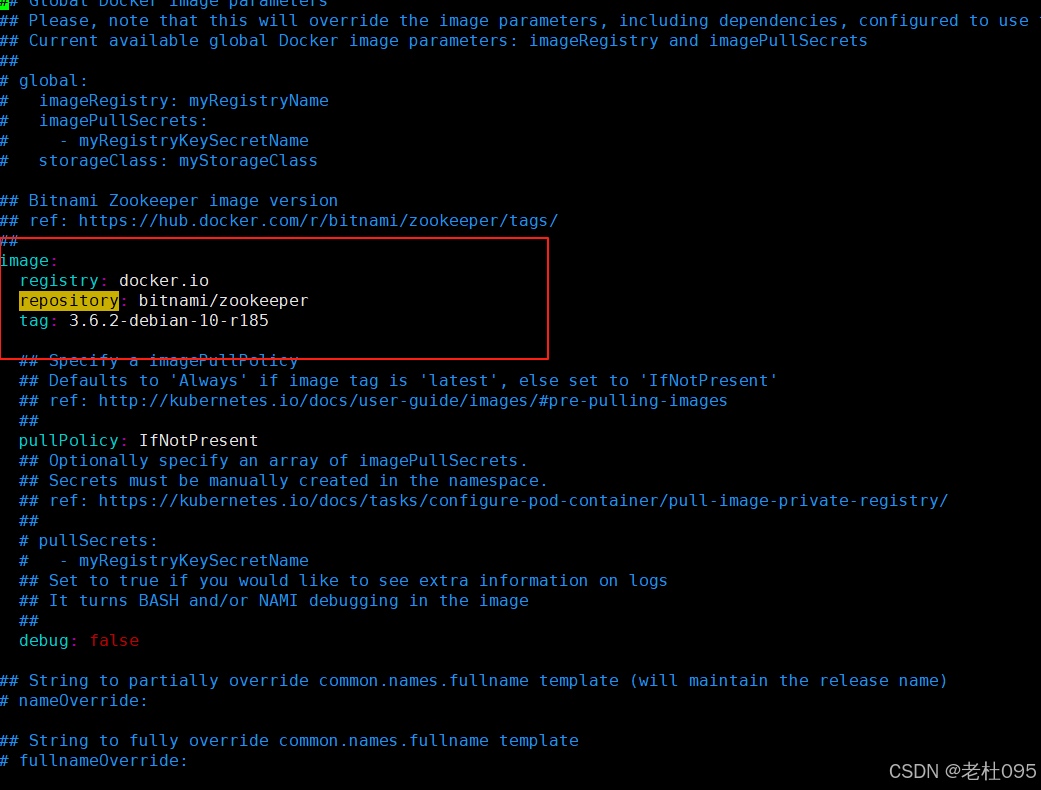

查看zookeeper的values.yaml(/opt/dolphinscheduler/apache-dolphinscheduler-3.2.2-src/deploy/kubernetes/dolphinscheduler/charts/zookeeper/values.yaml)找到鏡像地址:

image:
  registry: docker.io
  repository: bitnami/zookeeper
  tag: 3.6.2-debian-10-r185
  ## Specify a imagePullPolicy
  ## Defaults to 'Always' if image tag is 'latest', else set to 'IfNotPresent'
  ## ref: http://kubernetes.io/docs/user-guide/images/#pre-pulling-images
  ##
  pullPolicy: IfNotPresent
  ## Optionally specify an array of imagePullSecrets.
  ## Secrets must be manually created in the namespace.
  ## ref: https://kubernetes.io/docs/tasks/configure-pod-container/pull-image-private-registry/
  ##
  # pullSecrets:
  #   - myRegistryKeySecretName
  ## Set to true if you would like to see extra information on logs
  ## It turns BASH and/or NAMI debugging in the image
  ##
  debug: false

Copy the image settings from the file above, as shown in the figure:

Paste the copied image block under the zookeeper key in /opt/dolphinscheduler/apache-dolphinscheduler-3.2.2-src/deploy/kubernetes/dolphinscheduler/values.yaml, as follows:

zookeeper:
  image:
    registry: docker.io
    repository: bitnami/zookeeper
    tag: 3.6.2-debian-10-r185
  # -- If not exists external registry, the zookeeper registry will be used by default.
  enabled: true
  service:
    # -- The port of zookeeper
    port: 2181
  # -- A list of comma separated Four Letter Words commands to use
  fourlwCommandsWhitelist: "srvr,ruok,wchs,cons"
  persistence:
    # -- Set `zookeeper.persistence.enabled` to true to mount a new volume for internal ZooKeeper
    enabled: false
    # -- PersistentVolumeClaim size
    size: "20Gi"
    # -- ZooKeeper data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioning
    storageClass: "-"

(5) Modify the master configuration

master:
  # -- Enable or disable the Master component
  enabled: true
  replicas: "1"
  resources:
    limits:
      memory: "4Gi"
      cpu: "4"
    requests:
      memory: "2Gi"
      cpu: "500m"
  persistentVolumeClaim:
    # -- Set `worker.persistentVolumeClaim.enabled` to `true` to enable `persistentVolumeClaim` for `worker`
    enabled: true
    ## dolphinscheduler data volume
    dataPersistentVolume:
      # -- Set `worker.persistentVolumeClaim.dataPersistentVolume.enabled` to `true` to mount a data volume for `worker`
      enabled: true
      # -- `PersistentVolumeClaim` access modes
      accessModes:
      - "ReadWriteOnce"
      # -- `Worker` data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioning
      storageClassName: "-"
      # -- `PersistentVolumeClaim` size
      storage: "20Gi"
  env:
    # -- The jvm options for master server
    JAVA_OPTS: "-Xms1g -Xmx1g -Xmn512m"

(6) Modify the worker configuration

Creating the nfs-storage storage class

The sc.yaml configuration file is as follows:

## Create a storage class
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"  ## whether to keep a backup of the PV's contents when the PV is deleted
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client
  labels:
    app: nfs-client
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client
  template:
    metadata:
      labels:
        app: nfs-client
    spec:
      serviceAccountName: nfs-client
      containers:
        - name: nfs-client
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2
          # resources:
          #   limits:
          #     cpu: 10m
          #   requests:
          #     cpu: 10m
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.255.140  ## set this to your own NFS server address
            - name: NFS_PATH
              value: /data/nfsdata  ## directory exported by the NFS server
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.255.140
            path: /data/nfsdata
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client
subjects:
  - kind: ServiceAccount
    name: nfs-client
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client
  apiGroup: rbac.authorization.k8s.io

Run the commands:

# Apply the manifest

kubectl apply -f sc.yaml

# Check whether the nfs-client-provisioner pod was installed successfully

kubectl get pod -A
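Rather than scanning the full pod list by eye, the check can wait on the provisioner Deployment directly. The sketch below is one way to do that, assuming the names used in sc.yaml (Deployment nfs-client in namespace default, StorageClass nfs-storage); the helper name `wait_for_provisioner` and the 120s default timeout are illustrative choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: block until the provisioner Deployment from sc.yaml is ready,
# then show the StorageClass it serves. Defaults match the names used in sc.yaml.
wait_for_provisioner() {
  local ns="${1:-default}" name="${2:-nfs-client}" timeout="${3:-120s}"
  # rollout status returns non-zero if the Deployment is not ready within the timeout.
  kubectl -n "$ns" rollout status "deployment/${name}" --timeout="$timeout" || return 1
  kubectl get storageclass nfs-storage
}
```

Calling `wait_for_provisioner` with no arguments uses those defaults. A non-zero exit status means the provisioner never became ready, in which case any PVC that references nfs-storage would stay Pending.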

The worker configuration is as follows:

worker:
  # -- Enable or disable the Worker component
  enabled: true
  replicas: "1"
  resources:
    limits:
      memory: "4Gi"
      cpu: "2"
    requests:
      memory: "2Gi"
      cpu: "500m"
  persistentVolumeClaim:
    # -- Set `worker.persistentVolumeClaim.enabled` to `true` to enable `persistentVolumeClaim` for `worker`
    enabled: true
    ## dolphinscheduler data volume
    dataPersistentVolume:
      # -- Set `worker.persistentVolumeClaim.dataPersistentVolume.enabled` to `true` to mount a data volume for `worker`
      enabled: true
      # -- `PersistentVolumeClaim` access modes
      accessModes:
      - "ReadWriteOnce"
      # -- `Worker` data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioning
      storageClassName: "nfs-storage"
      # -- `PersistentVolumeClaim` size
      storage: "20Gi"
  env:
    # -- The jvm options for master server
    JAVA_OPTS: "-Xms1g -Xmx1g -Xmn512m"

(7) Modify the alert configuration

alert:
  # -- Enable or disable the Worker component
  enabled: true
  replicas: "1"
  resources:
    limits:
      memory: "2Gi"
      cpu: "1"
    requests:
      memory: "1Gi"
      cpu: "500m"
  persistentVolumeClaim:
    # -- Set `worker.persistentVolumeClaim.enabled` to `true` to enable `persistentVolumeClaim` for `worker`
    enabled: true
    ## dolphinscheduler data volume
    dataPersistentVolume:
      # -- Set `worker.persistentVolumeClaim.dataPersistentVolume.enabled` to `true` to mount a data volume for `worker`
      enabled: true
      # -- `PersistentVolumeClaim` access modes
      accessModes:
      - "ReadWriteOnce"
      # -- `Worker` data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioning
      storageClassName: "-"
      # -- `PersistentVolumeClaim` size
      storage: "20Gi"
  env:
    # -- The jvm options for master server
    JAVA_OPTS: "-Xms1g -Xmx1g -Xmn512m"

The complete values.yaml configuration file is as follows:

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# Default values for dolphinscheduler-chart.

# This is a YAML-formatted file.

# Declare variables to be passed into your templates.

# -- World time and date for cities in all time zones

timezone: "Asia/Shanghai"

# -- Used to detect whether dolphinscheduler dependent services such as database are ready

initImage:# -- Image pull policy. Options: Always, Never, IfNotPresentpullPolicy: "IfNotPresent"# -- Specify initImage repositorybusybox: "registry.cn-hangzhou.aliyuncs.com/docker_image-ljx/busybox:1.30.1"image:# -- Docker image repository for the DolphinSchedulerregistry: registry.cn-hangzhou.aliyuncs.com/docker_image-ljx# -- Docker image version for the DolphinSchedulertag: 3.2.2# -- Image pull policy. Options: Always, Never, IfNotPresentpullPolicy: "IfNotPresent"# -- Specify a imagePullSecretspullSecret: ""# -- master imagemaster: dolphinscheduler-master# -- worker imageworker: dolphinscheduler-worker# -- api-server imageapi: dolphinscheduler-api# -- alert-server imagealert: dolphinscheduler-alert-server# -- tools imagetools: dolphinscheduler-toolsdatasource:# -- The profile of datasourceprofile: postgresqlpostgresql:image:registry: docker.iorepository: bitnami/postgresqltag: 11.11.0-debian-10-r71# -- If not exists external PostgreSQL, by default, the DolphinScheduler will use a internal PostgreSQLenabled: true# -- The username for internal PostgreSQLpostgresqlUsername: "root"# -- The password for internal PostgreSQLpostgresqlPassword: "root"# -- The database for internal PostgreSQLpostgresqlDatabase: "dolphinscheduler"# -- The driverClassName for internal PostgreSQLdriverClassName: "org.postgresql.Driver"# -- The params for internal PostgreSQLparams: "characterEncoding=utf8"persistence:# -- Set postgresql.persistence.enabled to true to mount a new volume for internal PostgreSQLenabled: false# -- `PersistentVolumeClaim` sizesize: "20Gi"# -- PostgreSQL data persistent volume storage class. 
If set to "-", storageClassName: "", which disables dynamic provisioningstorageClass: "-"mysql:image:registry: docker.iorepository: bitnami/mysqltag: 8.0.31-debian-11-r0# -- If not exists external MySQL, by default, the DolphinScheduler will use a internal MySQLenabled: false# -- mysql driverClassNamedriverClassName: "com.mysql.cj.jdbc.Driver"auth:# -- mysql usernameusername: "ds"# -- mysql passwordpassword: "ds"# -- mysql databasedatabase: "dolphinscheduler"# -- mysql paramsparams: "characterEncoding=utf8"primary:persistence:# -- Set mysql.primary.persistence.enabled to true to mount a new volume for internal MySQLenabled: false# -- `PersistentVolumeClaim` sizesize: "20Gi"# -- MySQL data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioningstorageClass: "-"minio:image:registry: docker.iorepository: bitnami/miniotag: 2022.10.29-debian-11-r0# -- Deploy minio and configure it as the default storage for DolphinScheduler, note this is for demo only, not for production.enabled: trueauth:# -- minio usernamerootUser: minioadmin# -- minio passwordrootPassword: minioadminpersistence:# -- Set minio.persistence.enabled to true to mount a new volume for internal minioenabled: false# -- minio default bucketsdefaultBuckets: "dolphinscheduler"externalDatabase:# -- If exists external database, and set postgresql.enable value to false.# external database will be used, otherwise Dolphinscheduler's internal database will be used.enabled: false# -- The type of external database, supported types: postgresql, mysqltype: "postgresql"# -- The host of external databasehost: "localhost"# -- The port of external databaseport: "5432"# -- The username of external databaseusername: "root"# -- The password of external databasepassword: "root"# -- The database of external databasedatabase: "dolphinscheduler"# -- The params of external databaseparams: "characterEncoding=utf8"# -- The driverClassName of external databasedriverClassName: 
"org.postgresql.Driver"zookeeper:image:registry: docker.iorepository: bitnami/zookeepertag: 3.6.2-debian-10-r185# -- If not exists external registry, the zookeeper registry will be used by default.enabled: trueservice:# -- The port of zookeeperport: 2181# -- A list of comma separated Four Letter Words commands to usefourlwCommandsWhitelist: "srvr,ruok,wchs,cons"persistence:# -- Set `zookeeper.persistence.enabled` to true to mount a new volume for internal ZooKeeperenabled: false# -- PersistentVolumeClaim sizesize: "20Gi"# -- ZooKeeper data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioningstorageClass: "-"registryEtcd:# -- If you want to use Etcd for your registry center, change this value to true. And set zookeeper.enabled to falseenabled: false# -- Etcd endpointsendpoints: ""# -- Etcd namespacenamespace: "dolphinscheduler"# -- Etcd useruser: ""# -- Etcd passWordpassWord: ""# -- Etcd authorityauthority: ""# Please create a new folder: deploy/kubernetes/dolphinscheduler/etcd-certsssl:# -- If your Etcd server has configured with ssl, change this value to true. About certification files you can see [here](https://github.com/etcd-io/jetcd/blob/main/docs/SslConfig.md) for how to convert.enabled: false# -- CertFile file pathcertFile: "etcd-certs/ca.crt"# -- keyCertChainFile file pathkeyCertChainFile: "etcd-certs/client.crt"# -- keyFile file pathkeyFile: "etcd-certs/client.pem"registryJdbc:# -- If you want to use JDbc for your registry center, change this value to true. 
And set zookeeper.enabled and registryEtcd.enabled to falseenabled: false# -- Used to schedule refresh the ephemeral data/ locktermRefreshInterval: 2s# -- Used to calculate the expire timetermExpireTimes: 3hikariConfig:# -- Default use same Dolphinscheduler's database, if you want to use other database please change `enabled` to `true` and change other configsenabled: false# -- Default use same Dolphinscheduler's database if you don't change this value. If you set this value, Registry jdbc's database type will use itdriverClassName: com.mysql.cj.jdbc.Driver# -- Default use same Dolphinscheduler's database if you don't change this value. If you set this value, Registry jdbc's database type will use itjdbcurl: jdbc:mysql://# -- Default use same Dolphinscheduler's database if you don't change this value. If you set this value, Registry jdbc's database type will use itusername: ""# -- Default use same Dolphinscheduler's database if you don't change this value. If you set this value, Registry jdbc's database type will use itpassword: ""## If exists external registry and set zookeeper.enable value to false, the external registry will be used.

externalRegistry:# -- If exists external registry and set `zookeeper.enable` && `registryEtcd.enabled` && `registryJdbc.enabled` to false, specify the external registry plugin nameregistryPluginName: "zookeeper"# -- If exists external registry and set `zookeeper.enable` && `registryEtcd.enabled` && `registryJdbc.enabled` to false, specify the external registry serversregistryServers: "127.0.0.1:2181"security:authentication:# -- Authentication types (supported types: PASSWORD,LDAP,CASDOOR_SSO)type: PASSWORD# IF you set type `LDAP`, below config will be effectiveldap:# -- LDAP urlsurls: ldap://ldap.forumsys.com:389/# -- LDAP base dnbasedn: dc=example,dc=com# -- LDAP usernameusername: cn=read-only-admin,dc=example,dc=com# -- LDAP passwordpassword: passworduser:# -- Admin user account when you log-in with LDAPadmin: read-only-admin# -- LDAP user identity attributeidentityattribute: uid# -- LDAP user email attributeemailattribute: mail# -- action when ldap user is not exist,default value: CREATE. Optional values include(CREATE,DENY)notexistaction: CREATEssl:# -- LDAP ssl switchenable: false# -- LDAP jks file absolute path, do not change this valuetruststore: "/opt/ldapkeystore.jks"# -- LDAP jks file base64 content.# If you use macOS, please run `base64 -b 0 -i /path/to/your.jks`.# If you use Linux, please run `base64 -w 0 /path/to/your.jks`.# If you use Windows, please run `certutil -f -encode /path/to/your.jks`.# Then copy the base64 content to below field in one linejksbase64content: ""# -- LDAP jks passwordtruststorepassword: ""conf:# -- auto restart, if true, all components will be restarted automatically after the common configuration is updated. if false, you need to restart the components manually. 
default is falseauto: false# common configurationcommon:# -- user data local directory path, please make sure the directory exists and have read write permissionsdata.basedir.path: /tmp/dolphinscheduler# -- resource storage type: HDFS, S3, OSS, GCS, ABS, NONEresource.storage.type: S3# -- resource store on HDFS/S3 path, resource file will store to this base path, self configuration, please make sure the directory exists on hdfs and have read write permissions. "/dolphinscheduler" is recommendedresource.storage.upload.base.path: /dolphinscheduler# -- The AWS access key. if resource.storage.type=S3 or use EMR-Task, This configuration is requiredresource.aws.access.key.id: minioadmin# -- The AWS secret access key. if resource.storage.type=S3 or use EMR-Task, This configuration is requiredresource.aws.secret.access.key: minioadmin# -- The AWS Region to use. if resource.storage.type=S3 or use EMR-Task, This configuration is requiredresource.aws.region: ca-central-1# -- The name of the bucket. You need to create them by yourself. Otherwise, the system cannot start. All buckets in Amazon S3 share a single namespace; ensure the bucket is given a unique name.resource.aws.s3.bucket.name: dolphinscheduler# -- You need to set this parameter when private cloud s3. 
If S3 uses public cloud, you only need to set resource.aws.region or set to the endpoint of a public cloud such as S3.cn-north-1.amazonaws.com.cnresource.aws.s3.endpoint: http://minio:9000# -- alibaba cloud access key id, required if you set resource.storage.type=OSSresource.alibaba.cloud.access.key.id: <your-access-key-id># -- alibaba cloud access key secret, required if you set resource.storage.type=OSSresource.alibaba.cloud.access.key.secret: <your-access-key-secret># -- alibaba cloud region, required if you set resource.storage.type=OSSresource.alibaba.cloud.region: cn-hangzhou# -- oss bucket name, required if you set resource.storage.type=OSSresource.alibaba.cloud.oss.bucket.name: dolphinscheduler# -- oss bucket endpoint, required if you set resource.storage.type=OSSresource.alibaba.cloud.oss.endpoint: https://oss-cn-hangzhou.aliyuncs.com# -- azure storage account name, required if you set resource.storage.type=ABSresource.azure.client.id: minioadmin# -- azure storage account key, required if you set resource.storage.type=ABSresource.azure.client.secret: minioadmin# -- azure storage subId, required if you set resource.storage.type=ABSresource.azure.subId: minioadmin# -- azure storage tenantId, required if you set resource.storage.type=ABSresource.azure.tenant.id: minioadmin# -- if resource.storage.type=HDFS, the user must have the permission to create directories under the HDFS root pathresource.hdfs.root.user: hdfs# -- if resource.storage.type=S3, the value like: s3a://dolphinscheduler; if resource.storage.type=HDFS and namenode HA is enabled, you need to copy core-site.xml and hdfs-site.xml to conf dirresource.hdfs.fs.defaultFS: hdfs://mycluster:8020# -- whether to startup kerberoshadoop.security.authentication.startup.state: false# -- java.security.krb5.conf pathjava.security.krb5.conf.path: /opt/krb5.conf# -- login user from keytab usernamelogin.user.keytab.username: hdfs-mycluster@ESZ.COM# -- login user from keytab pathlogin.user.keytab.path: 
/opt/hdfs.headless.keytab
    # -- kerberos expire time, the unit is hour
    kerberos.expire.time: 2
    # -- resourcemanager port, the default value is 8088 if not specified
    resource.manager.httpaddress.port: 8088
    # -- if resourcemanager HA is enabled, please set the HA IPs; if resourcemanager is single, keep this value empty
    yarn.resourcemanager.ha.rm.ids: 192.168.xx.xx,192.168.xx.xx
    # -- if resourcemanager HA is enabled or not use resourcemanager, please keep the default value; If resourcemanager is single, you only need to replace ds1 to actual resourcemanager hostname
    yarn.application.status.address: http://ds1:%s/ws/v1/cluster/apps/%s
    # -- job history status url when application number threshold is reached(default 10000, maybe it was set to 1000)
    yarn.job.history.status.address: http://ds1:19888/ws/v1/history/mapreduce/jobs/%s
    # -- datasource encryption enable
    datasource.encryption.enable: false
    # -- datasource encryption salt
    datasource.encryption.salt: '!@#$%^&*'
    # -- data quality option
    data-quality.jar.dir:
    # -- Whether hive SQL is executed in the same session
    support.hive.oneSession: false
    # -- use sudo or not, if set true, executing user is tenant user and deploy user needs sudo permissions; if set false, executing user is the deploy user and doesn't need sudo permissions
    sudo.enable: true
    # -- development state
    development.state: false
    # -- rpc port
    alert.rpc.port: 50052
    # -- set path of conda.sh
    conda.path: /opt/anaconda3/etc/profile.d/conda.sh
    # -- Task resource limit state
    task.resource.limit.state: false
    # -- mlflow task plugin preset repository
    ml.mlflow.preset_repository: https://github.com/apache/dolphinscheduler-mlflow
    # -- mlflow task plugin preset repository version
    ml.mlflow.preset_repository_version: "main"
    # -- way to collect applicationId: log, aop
    appId.collect: log

common:
  ## Configmap
  configmap:
    # -- The jvm options for dolphinscheduler, suitable for all servers
    DOLPHINSCHEDULER_OPTS: ""
    # -- User data directory path, self configuration, please make sure the directory exists and have read write permissions
    DATA_BASEDIR_PATH: "/tmp/dolphinscheduler"
    # -- Resource store on HDFS/S3 path, please make sure the directory exists on hdfs and have read write permissions
    RESOURCE_UPLOAD_PATH: "/dolphinscheduler"
    # dolphinscheduler env
    # -- Set `HADOOP_HOME` for DolphinScheduler's task environment
    HADOOP_HOME: "/opt/soft/hadoop"
    # -- Set `HADOOP_CONF_DIR` for DolphinScheduler's task environment
    HADOOP_CONF_DIR: "/opt/soft/hadoop/etc/hadoop"
    # -- Set `SPARK_HOME` for DolphinScheduler's task environment
    SPARK_HOME: "/opt/soft/spark"
    # -- Set `PYTHON_LAUNCHER` for DolphinScheduler's task environment
    PYTHON_LAUNCHER: "/usr/bin/python/bin/python3"
    # -- Set `JAVA_HOME` for DolphinScheduler's task environment
    JAVA_HOME: "/opt/java/openjdk"
    # -- Set `HIVE_HOME` for DolphinScheduler's task environment
    HIVE_HOME: "/opt/soft/hive"
    # -- Set `FLINK_HOME` for DolphinScheduler's task environment
    FLINK_HOME: "/opt/soft/flink"
    # -- Set `DATAX_LAUNCHER` for DolphinScheduler's task environment
    DATAX_LAUNCHER: "/opt/soft/datax/bin/datax.py"
  ## Shared storage persistence mounted into api, master and worker, such as Hadoop, Spark, Flink and DataX binary package
  sharedStoragePersistence:
    # -- Set `common.sharedStoragePersistence.enabled` to `true` to mount a shared storage volume for Hadoop, Spark binary and etc
    enabled: false
    # -- The mount path for the shared storage volume
    mountPath: "/opt/soft"
    # -- `PersistentVolumeClaim` access modes, must be `ReadWriteMany`
    accessModes:
    - "ReadWriteMany"
    # -- Shared Storage persistent volume storage class, must support the access mode: ReadWriteMany
    storageClassName: "-"
    # -- `PersistentVolumeClaim` size
    storage: "20Gi"
  ## If RESOURCE_STORAGE_TYPE is HDFS and FS_DEFAULT_FS is file:///, fsFileResourcePersistence should be enabled for resource storage
  fsFileResourcePersistence:
    # -- Set `common.fsFileResourcePersistence.enabled` to `true` to mount a new file resource volume for `api` and `worker`
    enabled: false
    # -- `PersistentVolumeClaim` access modes, must be `ReadWriteMany`
    accessModes:
    - "ReadWriteMany"
    # -- Resource persistent volume storage class, must support the access mode: `ReadWriteMany`
    storageClassName: "-"
    # -- `PersistentVolumeClaim` size
    storage: "20Gi"

master:
  # -- Enable or disable the Master component
  enabled: true
  # -- PodManagementPolicy controls how pods are created during initial scale up, when replacing pods on nodes, or when scaling down.
  podManagementPolicy: "Parallel"
  # -- Replicas is the desired number of replicas of the given Template.
  replicas: "1"
  # -- You can use annotations to attach arbitrary non-identifying metadata to objects.
  # Clients such as tools and libraries can retrieve this metadata.
  annotations: {}
  # -- Affinity is a group of affinity scheduling rules. If specified, the pod's scheduling constraints.
  # More info: [node-affinity](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#node-affinity)
  affinity: {}
  # -- NodeSelector is a selector which must be true for the pod to fit on a node.
  # Selector which must match a node's labels for the pod to be scheduled on that node.
  # More info: [assign-pod-node](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/)
  nodeSelector: {}
  # -- Tolerations are appended (excluding duplicates) to pods running with this RuntimeClass during admission,
  # effectively unioning the set of nodes tolerated by the pod and the RuntimeClass.
  tolerations: []
  # -- Compute Resources required by this container.
  # More info: [manage-resources-containers](https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/)
  #resources: {}
  resources:
    limits:
      memory: "4Gi"
      cpu: "4"
    requests:
      memory: "2Gi"
      cpu: "500m"
  # -- enable configure custom config
  enableCustomizedConfig: false
  # -- configure aligned with https://github.com/apache/dolphinscheduler/blob/dev/dolphinscheduler-master/src/main/resources/application.yaml
  customizedConfig: { }
  #  customizedConfig:
  #    application.yaml: |
  #      profiles:
  #        active: postgresql
  #      banner:
  #        charset: UTF-8
  #      jackson:
  #        time-zone: UTC
  #        date-format: "yyyy-MM-dd HH:mm:ss"
  # -- Periodic probe of container liveness. Container will be restarted if the probe fails.
  # More info: [container-probes](https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes)
  livenessProbe:
    # -- Turn on and off liveness probe
    enabled: true
    # -- Delay before liveness probe is initiated
    initialDelaySeconds: "30"
    # -- How often to perform the probe
    periodSeconds: "30"
    # -- When the probe times out
    timeoutSeconds: "5"
    # -- Minimum consecutive failures for the probe
    failureThreshold: "3"
    # -- Minimum consecutive successes for the probe
    successThreshold: "1"
  # -- Periodic probe of container service readiness. Container will be removed from service endpoints if the probe fails.
  # More info: [container-probes](https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes)
  readinessProbe:
    # -- Turn on and off readiness probe
    enabled: true
    # -- Delay before readiness probe is initiated
    initialDelaySeconds: "30"
    # -- How often to perform the probe
    periodSeconds: "30"
    # -- When the probe times out
    timeoutSeconds: "5"
    # -- Minimum consecutive failures for the probe
    failureThreshold: "3"
    # -- Minimum consecutive successes for the probe
    successThreshold: "1"
  # -- PersistentVolumeClaim represents a reference to a PersistentVolumeClaim in the same namespace.
  # The StatefulSet controller is responsible for mapping network identities to claims in a way that maintains the identity of a pod.
  # Every claim in this list must have at least one matching (by name) volumeMount in one container in the template.
  # A claim in this list takes precedence over any volumes in the template, with the same name.
  persistentVolumeClaim:
    # -- Set `master.persistentVolumeClaim.enabled` to `true` to mount a new volume for `master`
    enabled: false
    # -- `PersistentVolumeClaim` access modes
    accessModes:
    - "ReadWriteOnce"
    # -- `Master` logs data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioning
    storageClassName: "-"
    # -- `PersistentVolumeClaim` size
    storage: "20Gi"
  env:
    # -- The jvm options for master server
    JAVA_OPTS: "-Xms1g -Xmx1g -Xmn512m"
    # -- Master execute thread number to limit process instances
    MASTER_EXEC_THREADS: "100"
    # -- Master execute task number in parallel per process instance
    MASTER_EXEC_TASK_NUM: "20"
    # -- Master dispatch task number per batch
    MASTER_DISPATCH_TASK_NUM: "3"
    # -- Master host selector to select a suitable worker, optional values include Random, RoundRobin, LowerWeight
    MASTER_HOST_SELECTOR: "LowerWeight"
    # -- Master max heartbeat interval
    MASTER_MAX_HEARTBEAT_INTERVAL: "10s"
    # -- Master heartbeat error threshold
    MASTER_HEARTBEAT_ERROR_THRESHOLD: "5"
    # -- Master commit task retry times
    MASTER_TASK_COMMIT_RETRYTIMES: "5"
    # -- master commit task interval, the unit is second
    MASTER_TASK_COMMIT_INTERVAL: "1s"
    # -- master state wheel interval, the unit is second
    MASTER_STATE_WHEEL_INTERVAL: "5s"
    # -- If set true, will open master overload protection
    MASTER_SERVER_LOAD_PROTECTION_ENABLED: false
    # -- Master max system cpu usage, when the master's system cpu usage is smaller then this value, master server can execute workflow.
    MASTER_SERVER_LOAD_PROTECTION_MAX_SYSTEM_CPU_USAGE_PERCENTAGE_THRESHOLDS: 0.7
    # -- Master max jvm cpu usage, when the master's jvm cpu usage is smaller then this value, master server can execute workflow.
    MASTER_SERVER_LOAD_PROTECTION_MAX_JVM_CPU_USAGE_PERCENTAGE_THRESHOLDS: 0.7
    # -- Master max System memory usage , when the master's system memory usage is smaller then this value, master server can execute workflow.
    MASTER_SERVER_LOAD_PROTECTION_MAX_SYSTEM_MEMORY_USAGE_PERCENTAGE_THRESHOLDS: 0.7
    # -- Master max disk usage , when the master's disk usage is smaller then this value, master server can execute workflow.
    MASTER_SERVER_LOAD_PROTECTION_MAX_DISK_USAGE_PERCENTAGE_THRESHOLDS: 0.7
    # -- Master failover interval, the unit is minute
    MASTER_FAILOVER_INTERVAL: "10m"
    # -- Master kill application when handle failover
    MASTER_KILL_APPLICATION_WHEN_HANDLE_FAILOVER: "true"
  service:
    # -- annotations may need to be set when want to scrapy metrics by prometheus but not install prometheus operator
    annotations: {}
    # -- serviceMonitor for prometheus operator
    serviceMonitor:
      # -- Enable or disable master serviceMonitor
      enabled: false
      # -- serviceMonitor.interval interval at which metrics should be scraped
      interval: 15s
      # -- serviceMonitor.path path of the metrics endpoint
      path: /actuator/prometheus
      # -- serviceMonitor.labels ServiceMonitor extra labels
      labels: {}
      # -- serviceMonitor.annotations ServiceMonitor annotations
      annotations: {}

worker:
  # -- Enable or disable the Worker component
  enabled: true
  # -- PodManagementPolicy controls how pods are created during initial scale up, when replacing pods on nodes, or when scaling down.
  podManagementPolicy: "Parallel"
  # -- Replicas is the desired number of replicas of the given Template.
  replicas: "3"
  # -- You can use annotations to attach arbitrary non-identifying metadata to objects.
  # Clients such as tools and libraries can retrieve this metadata.
  annotations: {}
  # -- Affinity is a group of affinity scheduling rules. If specified, the pod's scheduling constraints.
  # More info: [node-affinity](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#node-affinity)
  affinity: {}
  # -- NodeSelector is a selector which must be true for the pod to fit on a node.
  # Selector which must match a node's labels for the pod to be scheduled on that node.
  # More info: [assign-pod-node](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/)
  nodeSelector: {}
  # -- Tolerations are appended (excluding duplicates) to pods running with this RuntimeClass during admission,
  # effectively unioning the set of nodes tolerated by the pod and the RuntimeClass.
  tolerations: [ ]
  # -- Compute Resources required by this container.
  # More info: [manage-resources-containers](https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/)
  #resources: {}
  resources:
    limits:
      memory: "4Gi"
      cpu: "2"
    requests:
      memory: "2Gi"
      cpu: "500m"
  # -- Periodic probe of container liveness. Container will be restarted if the probe fails.
  # More info: [container-probes](https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes)
  # -- enable configure custom config
  enableCustomizedConfig: false
  # -- configure aligned with https://github.com/apache/dolphinscheduler/blob/dev/dolphinscheduler-worker/src/main/resources/application.yaml
  customizedConfig: { }
  #  customizedConfig:
  #    application.yaml: |
  #      banner:
  #        charset: UTF-8
  #      jackson:
  #        time-zone: UTC
  #        date-format: "yyyy-MM-dd HH:mm:ss"
  livenessProbe:
    # -- Turn on and off liveness probe
    enabled: true
    # -- Delay before liveness probe is initiated
    initialDelaySeconds: "30"
    # -- How often to perform the probe
    periodSeconds: "30"
    # -- When the probe times out
    timeoutSeconds: "5"
    # -- Minimum consecutive failures for the probe
    failureThreshold: "3"
    # -- Minimum consecutive successes for the probe
    successThreshold: "1"
  # -- Periodic probe of container service readiness. Container will be removed from service endpoints if the probe fails.
  # More info: [container-probes](https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes)
  readinessProbe:
    # -- Turn on and off readiness probe
    enabled: true
    # -- Delay before readiness probe is initiated
    initialDelaySeconds: "30"
    # -- How often to perform the probe
    periodSeconds: "30"
    # -- When the probe times out
    timeoutSeconds: "5"
    # -- Minimum consecutive failures for the probe
    failureThreshold: "3"
    # -- Minimum consecutive successes for the probe
    successThreshold: "1"
  # -- PersistentVolumeClaim represents a reference to a PersistentVolumeClaim in the same namespace.
  # The StatefulSet controller is responsible for mapping network identities to claims in a way that maintains the identity of a pod.
  # Every claim in this list must have at least one matching (by name) volumeMount in one container in the template.
  # A claim in this list takes precedence over any volumes in the template, with the same name.
  persistentVolumeClaim:
    # -- Set `worker.persistentVolumeClaim.enabled` to `true` to enable `persistentVolumeClaim` for `worker`
    enabled: false
    ## dolphinscheduler data volume
    dataPersistentVolume:
      # -- Set `worker.persistentVolumeClaim.dataPersistentVolume.enabled` to `true` to mount a data volume for `worker`
      enabled: false
      # -- `PersistentVolumeClaim` access modes
      accessModes:
      - "ReadWriteOnce"
      # -- `Worker` data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioning
      storageClassName: "nfs-storage"
      # -- `PersistentVolumeClaim` size
      storage: "20Gi"
    ## dolphinscheduler logs volume
    logsPersistentVolume:
      # -- Set `worker.persistentVolumeClaim.logsPersistentVolume.enabled` to `true` to mount a logs volume for `worker`
      enabled: false
      # -- `PersistentVolumeClaim` access modes
      accessModes:
      - "ReadWriteOnce"
      # -- `Worker` logs data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioning
      storageClassName: "-"
      # -- `PersistentVolumeClaim` size
      storage: "20Gi"
  env:
    # -- If set true, will open worker overload protection
    WORKER_SERVER_LOAD_PROTECTION_ENABLED: false
    # -- Worker max system cpu usage, when the worker's system cpu usage is smaller then this value, worker server can be dispatched tasks.
    WORKER_SERVER_LOAD_PROTECTION_MAX_SYSTEM_CPU_USAGE_PERCENTAGE_THRESHOLDS: 0.7
    # -- Worker max jvm cpu usage, when the worker's jvm cpu usage is smaller then this value, worker server can be dispatched tasks.
    WORKER_SERVER_LOAD_PROTECTION_MAX_JVM_CPU_USAGE_PERCENTAGE_THRESHOLDS: 0.7
    # -- Worker max memory usage , when the worker's memory usage is smaller then this value, worker server can be dispatched tasks.
    WORKER_SERVER_LOAD_PROTECTION_MAX_SYSTEM_MEMORY_USAGE_PERCENTAGE_THRESHOLDS: 0.7
    # -- Worker max disk usage , when the worker's disk usage is smaller then this value, worker server can be dispatched tasks.
    WORKER_SERVER_LOAD_PROTECTION_MAX_DISK_USAGE_PERCENTAGE_THRESHOLDS: 0.7
    # -- Worker execute thread number to limit task instances
    WORKER_EXEC_THREADS: "100"
    # -- Worker heartbeat interval
    WORKER_MAX_HEARTBEAT_INTERVAL: "10s"
    # -- Worker host weight to dispatch tasks
    WORKER_HOST_WEIGHT: "100"
    # -- tenant corresponds to the user of the system, which is used by the worker to submit the job. If system does not have this user, it will be automatically created after the parameter worker.tenant.auto.create is true.
    WORKER_TENANT_CONFIG_AUTO_CREATE_TENANT_ENABLED: true
    # -- Scenes to be used for distributed users. For example, users created by FreeIpa are stored in LDAP. This parameter only applies to Linux, When this parameter is true, worker.tenant.auto.create has no effect and will not automatically create tenants.
    WORKER_TENANT_CONFIG_DISTRIBUTED_TENANT: false
    # -- If set true, will use worker bootstrap user as the tenant to execute task when the tenant is `default`;
    DEFAULT_TENANT_ENABLED: false
  keda:
    # -- Enable or disable the Keda component
    enabled: false
    # -- Keda namespace labels
    namespaceLabels: { }
    # -- How often KEDA polls the DolphinScheduler DB to report new scale requests to the HPA
    pollingInterval: 5
    # -- How many seconds KEDA will wait before scaling to zero.
    # Note that HPA has a separate cooldown period for scale-downs
    cooldownPeriod: 30
    # -- Minimum number of workers created by keda
    minReplicaCount: 0
    # -- Maximum number of workers created by keda
    maxReplicaCount: 3
    # -- Specify HPA related options
    advanced: { }
    #  horizontalPodAutoscalerConfig:
    #    behavior:
    #      scaleDown:
    #        stabilizationWindowSeconds: 300
    #        policies:
    #          - type: Percent
    #            value: 100
    #            periodSeconds: 15
  service:
    # -- annotations may need to be set when want to scrapy metrics by prometheus but not install prometheus operator
    annotations: {}
    # -- serviceMonitor for prometheus operator
    serviceMonitor:
      # -- Enable or disable worker serviceMonitor
      enabled: false
      # -- serviceMonitor.interval interval at which metrics should be scraped
      interval: 15s
      # -- serviceMonitor.path path of the metrics endpoint
      path: /actuator/prometheus
      # -- serviceMonitor.labels ServiceMonitor extra labels
      labels: {}
      # -- serviceMonitor.annotations ServiceMonitor annotations
      annotations: {}

alert:
  # -- Enable or disable the Alert-Server component
  enabled: true
  # -- Number of desired pods. This is a pointer to distinguish between explicit zero and not specified. Defaults to 1.
  replicas: 1
  # -- The deployment strategy to use to replace existing pods with new ones.
  strategy:
    # -- Type of deployment. Can be "Recreate" or "RollingUpdate"
    type: "RollingUpdate"
    rollingUpdate:
      # -- The maximum number of pods that can be scheduled above the desired number of pods
      maxSurge: "25%"
      # -- The maximum number of pods that can be unavailable during the update
      maxUnavailable: "25%"
  # -- You can use annotations to attach arbitrary non-identifying metadata to objects.
  # Clients such as tools and libraries can retrieve this metadata.
  annotations: {}
  # -- Affinity is a group of affinity scheduling rules. If specified, the pod's scheduling constraints.
  # More info: [node-affinity](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#node-affinity)
  affinity: {}
  # -- NodeSelector is a selector which must be true for the pod to fit on a node.
  # Selector which must match a node's labels for the pod to be scheduled on that node.
  # More info: [assign-pod-node](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/)
  nodeSelector: {}
  # -- Tolerations are appended (excluding duplicates) to pods running with this RuntimeClass during admission,
  # effectively unioning the set of nodes tolerated by the pod and the RuntimeClass.
  tolerations: []
  # -- Compute Resources required by this container.
  # More info: [manage-resources-containers](https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/)
  #resources: {}
  resources:
    limits:
      memory: "2Gi"
      cpu: "1"
    requests:
      memory: "1Gi"
      cpu: "500m"
  # -- enable configure custom config
  enableCustomizedConfig: false
  # -- configure aligned with https://github.com/apache/dolphinscheduler/blob/dev/dolphinscheduler-alert/dolphinscheduler-alert-server/src/main/resources/application.yaml
  customizedConfig: { }
  #  customizedConfig:
  #    application.yaml: |
  #      profiles:
  #        active: postgresql
  #      banner:
  #        charset: UTF-8
  #      jackson:
  #        time-zone: UTC
  #        date-format: "yyyy-MM-dd HH:mm:ss"
  # -- Periodic probe of container liveness. Container will be restarted if the probe fails.
  # More info: [container-probes](https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes)
  livenessProbe:
    # -- Turn on and off liveness probe
    enabled: true
    # -- Delay before liveness probe is initiated
    initialDelaySeconds: "30"
    # -- How often to perform the probe
    periodSeconds: "30"
    # -- When the probe times out
    timeoutSeconds: "5"
    # -- Minimum consecutive failures for the probe
    failureThreshold: "3"
    # -- Minimum consecutive successes for the probe
    successThreshold: "1"
  # -- Periodic probe of container service readiness. Container will be removed from service endpoints if the probe fails.
  # More info: [container-probes](https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes)
  readinessProbe:
    # -- Turn on and off readiness probe
    enabled: true
    # -- Delay before readiness probe is initiated
    initialDelaySeconds: "30"
    # -- How often to perform the probe
    periodSeconds: "30"
    # -- When the probe times out
    timeoutSeconds: "5"
    # -- Minimum consecutive failures for the probe
    failureThreshold: "3"
    # -- Minimum consecutive successes for the probe
    successThreshold: "1"
  # -- PersistentVolumeClaim represents a reference to a PersistentVolumeClaim in the same namespace.
  # More info: [persistentvolumeclaims](https://kubernetes.io/docs/concepts/storage/persistent-volumes/#persistentvolumeclaims)
  persistentVolumeClaim:
    # -- Set `alert.persistentVolumeClaim.enabled` to `true` to mount a new volume for `alert`
    enabled: false
    # -- `PersistentVolumeClaim` access modes
    accessModes:
    - "ReadWriteOnce"
    # -- `Alert` logs data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioning
    storageClassName: "-"
    # -- `PersistentVolumeClaim` size
    storage: "20Gi"
  env:
    # -- The jvm options for alert server
    JAVA_OPTS: "-Xms512m -Xmx512m -Xmn256m"
  service:
    # -- annotations may need to be set when want to scrapy metrics by prometheus but not install prometheus operator
    annotations: {}
    # -- serviceMonitor for prometheus operator
    serviceMonitor:
      # -- Enable or disable alert-server serviceMonitor
      enabled: false
      # -- serviceMonitor.interval interval at which metrics should be scraped
      interval: 15s
      # -- serviceMonitor.path path of the metrics endpoint
      path: /actuator/prometheus
      # -- serviceMonitor.labels ServiceMonitor extra labels
      labels: {}
      # -- serviceMonitor.annotations ServiceMonitor annotations
      annotations: {}

api:
  # -- Enable or disable the API-Server component
  enabled: true
  # -- Number of desired pods. This is a pointer to distinguish between explicit zero and not specified. Defaults to 1.
  replicas: "1"
  # -- The deployment strategy to use to replace existing pods with new ones.
  strategy:
    # -- Type of deployment. Can be "Recreate" or "RollingUpdate"
    type: "RollingUpdate"
    rollingUpdate:
      # -- The maximum number of pods that can be scheduled above the desired number of pods
      maxSurge: "25%"
      # -- The maximum number of pods that can be unavailable during the update
      maxUnavailable: "25%"
  # -- You can use annotations to attach arbitrary non-identifying metadata to objects.
  # Clients such as tools and libraries can retrieve this metadata.
  annotations: {}
  # -- Affinity is a group of affinity scheduling rules. If specified, the pod's scheduling constraints.
  # More info: [node-affinity](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/#node-affinity)
  affinity: { }
  # -- NodeSelector is a selector which must be true for the pod to fit on a node.
  # Selector which must match a node's labels for the pod to be scheduled on that node.
  # More info: [assign-pod-node](https://kubernetes.io/docs/concepts/scheduling-eviction/assign-pod-node/)
  nodeSelector: { }
  # -- Tolerations are appended (excluding duplicates) to pods running with this RuntimeClass during admission,
  # effectively unioning the set of nodes tolerated by the pod and the RuntimeClass.
  tolerations: [ ]
  # -- Compute Resources required by this container.
  # More info: [manage-resources-containers](https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/)
  #resources: {}
  resources:
    limits:
      memory: "2Gi"
      cpu: "4"
    requests:
      memory: "1Gi"
      cpu: "500m"
  # -- enable configure custom config
  enableCustomizedConfig: false
  # -- configure aligned with https://github.com/apache/dolphinscheduler/blob/dev/dolphinscheduler-api/src/main/resources/application.yaml
  customizedConfig: { }
  #  customizedConfig:
  #    application.yaml: |
  #      profiles:
  #        active: postgresql
  #      banner:
  #        charset: UTF-8
  #      jackson:
  #        time-zone: UTC
  #        date-format: "yyyy-MM-dd HH:mm:ss"
  # -- Periodic probe of container liveness. Container will be restarted if the probe fails.
  # More info: [container-probes](https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes)
  livenessProbe:
    # -- Turn on and off liveness probe
    enabled: true
    # -- Delay before liveness probe is initiated
    initialDelaySeconds: "30"
    # -- How often to perform the probe
    periodSeconds: "30"
    # -- When the probe times out
    timeoutSeconds: "5"
    # -- Minimum consecutive failures for the probe
    failureThreshold: "3"
    # -- Minimum consecutive successes for the probe
    successThreshold: "1"
  # -- Periodic probe of container service readiness. Container will be removed from service endpoints if the probe fails.
  # More info: [container-probes](https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle/#container-probes)
  readinessProbe:
    # -- Turn on and off readiness probe
    enabled: true
    # -- Delay before readiness probe is initiated
    initialDelaySeconds: "30"
    # -- How often to perform the probe
    periodSeconds: "30"
    # -- When the probe times out
    timeoutSeconds: "5"
    # -- Minimum consecutive failures for the probe
    failureThreshold: "3"
    # -- Minimum consecutive successes for the probe
    successThreshold: "1"
  # -- PersistentVolumeClaim represents a reference to a PersistentVolumeClaim in the same namespace.
  # More info: [persistentvolumeclaims](https://kubernetes.io/docs/concepts/storage/persistent-volumes/#persistentvolumeclaims)
  persistentVolumeClaim:
    # -- Set `api.persistentVolumeClaim.enabled` to `true` to mount a new volume for `api`
    enabled: false
    # -- `PersistentVolumeClaim` access modes
    accessModes:
    - "ReadWriteOnce"
    # -- `api` logs data persistent volume storage class. If set to "-", storageClassName: "", which disables dynamic provisioning
    storageClassName: "-"
    # -- `PersistentVolumeClaim` size
    storage: "20Gi"
  service:
    # -- type determines how the Service is exposed. Defaults to ClusterIP. Valid options are ExternalName, ClusterIP, NodePort, and LoadBalancer
    type: "ClusterIP"
    # -- clusterIP is the IP address of the service and is usually assigned randomly by the master
    clusterIP: ""
    # -- nodePort is the port on each node on which this api service is exposed when type=NodePort
    nodePort: ""
    # -- pythonNodePort is the port on each node on which this python api service is exposed when type=NodePort
    pythonNodePort: ""
    # -- externalIPs is a list of IP addresses for which nodes in the cluster will also accept traffic for this service
    externalIPs: []
    # -- externalName is the external reference that kubedns or equivalent will return as a CNAME record for this service, requires Type to be ExternalName
    externalName: ""
    # -- loadBalancerIP when service.type is LoadBalancer. LoadBalancer will get created with the IP specified in this field
    loadBalancerIP: ""
    # -- annotations may need to be set when service.type is LoadBalancer
    # service.beta.kubernetes.io/aws-load-balancer-ssl-cert: arn:aws:acm:us-east-1:EXAMPLE_CERT
    annotations: {}
    # -- serviceMonitor for prometheus operator
    serviceMonitor:
      # -- Enable or disable api-server serviceMonitor
      enabled: false
      # -- serviceMonitor.interval interval at which metrics should be scraped
      interval: 15s
      # -- serviceMonitor.path path of the metrics endpoint
      path: /dolphinscheduler/actuator/prometheus
      # -- serviceMonitor.labels ServiceMonitor extra labels
      labels: {}
      # -- serviceMonitor.annotations ServiceMonitor annotations
      annotations: {}
  env:
    # -- The jvm options for api server
    JAVA_OPTS: "-Xms512m -Xmx512m -Xmn256m"
  taskTypeFilter:
    # -- Enable or disable the task type filter.
    # If set to true, the API-Server will return tasks of a specific type set in api.taskTypeFilter.task
    # Note: This feature only filters tasks to return a specific type on the WebUI. However, you can still create any task that DolphinScheduler supports via the API.
    enabled: false
    # -- taskTypeFilter.taskType task type
    # -- ref: [task-type-config.yaml](https://github.com/apache/dolphinscheduler/blob/dev/dolphinscheduler-api/src/main/resources/task-type-config.yaml)
    task: {}
    # example task sets
    #  universal:
    #    - 'SQL'
    #  cloud: []
    #  logic: []
    #  dataIntegration: []
    #  dataQuality: []
    #  machineLearning: []
    #  other: []

ingress:
  # -- Enable ingress
  enabled: true
  # -- Ingress host
  host: "dolphinscheduler.tyzwkj.cn"
  # -- Ingress path
  path: "/dolphinscheduler"
  # -- Ingress annotations
  annotations: {}
  tls:
    # -- Enable ingress tls
    enabled: false
    # -- Ingress tls secret name
    secretName: "dolphinscheduler-tls"

3. Run the installation

(1) Pre-installation check

[root@master dolphinscheduler]# helm template dolphinscheduler -f values.yaml ./

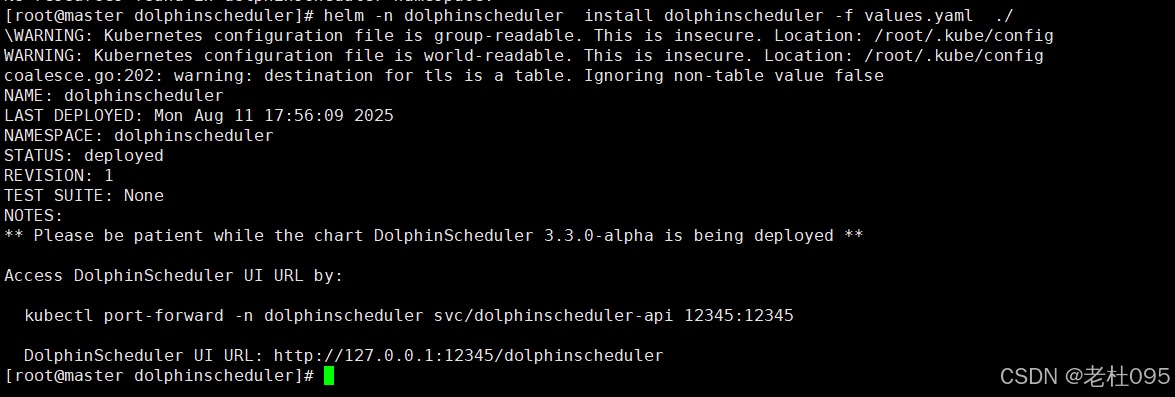
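Beyond reading through the rendered output, a quick per-kind summary of what the chart produces can catch obvious mistakes, such as a missing Ingress when `ingress.enabled` is `true`. The following is a minimal sketch; `count_kinds` is a hypothetical helper name, not part of Helm or the chart, and it only looks at top-level `kind:` lines.

```shell
# Hypothetical helper: summarise rendered manifests (YAML on stdin) by resource kind.
# Prints one "Kind=count" line per kind, sorted alphabetically.
count_kinds() {
  grep -E '^kind:[[:space:]]' | awk '{print $2}' | sort | uniq -c | awk '{print $2 "=" $1}'
}

# Usage, from the chart directory (assumes helm is installed):
#   helm template dolphinscheduler -f values.yaml ./ | count_kinds
```

If the summary lacks a kind you expect, recheck the corresponding `enabled` switch in values.yaml before installing.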

(2) Install

[root@master dolphinscheduler]# kubectl create namespace dolphinscheduler

[root@master dolphinscheduler]# helm -n dolphinscheduler install dolphinscheduler -f values.yaml ./
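If the installation is likely to be re-run, for example after another change to values.yaml, an idempotent variant can be convenient. This is a minimal sketch assuming Helm 3; `install_ds` is a hypothetical wrapper name. With `--create-namespace`, Helm creates the namespace if it does not exist yet, so the separate `kubectl create namespace` step is not needed in this variant.

```shell
# Hypothetical wrapper: install the release if it is absent, upgrade it otherwise.
# Optional arguments: release name, namespace, chart directory, values file.
install_ds() {
  release="${1:-dolphinscheduler}"
  namespace="${2:-dolphinscheduler}"
  chart_dir="${3:-./}"
  values_file="${4:-values.yaml}"
  helm upgrade --install "$release" "$chart_dir" \
    --namespace "$namespace" --create-namespace \
    -f "$values_file"
}

# Usage, from the chart directory:
#   install_ds
```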

(3) Check installation progress

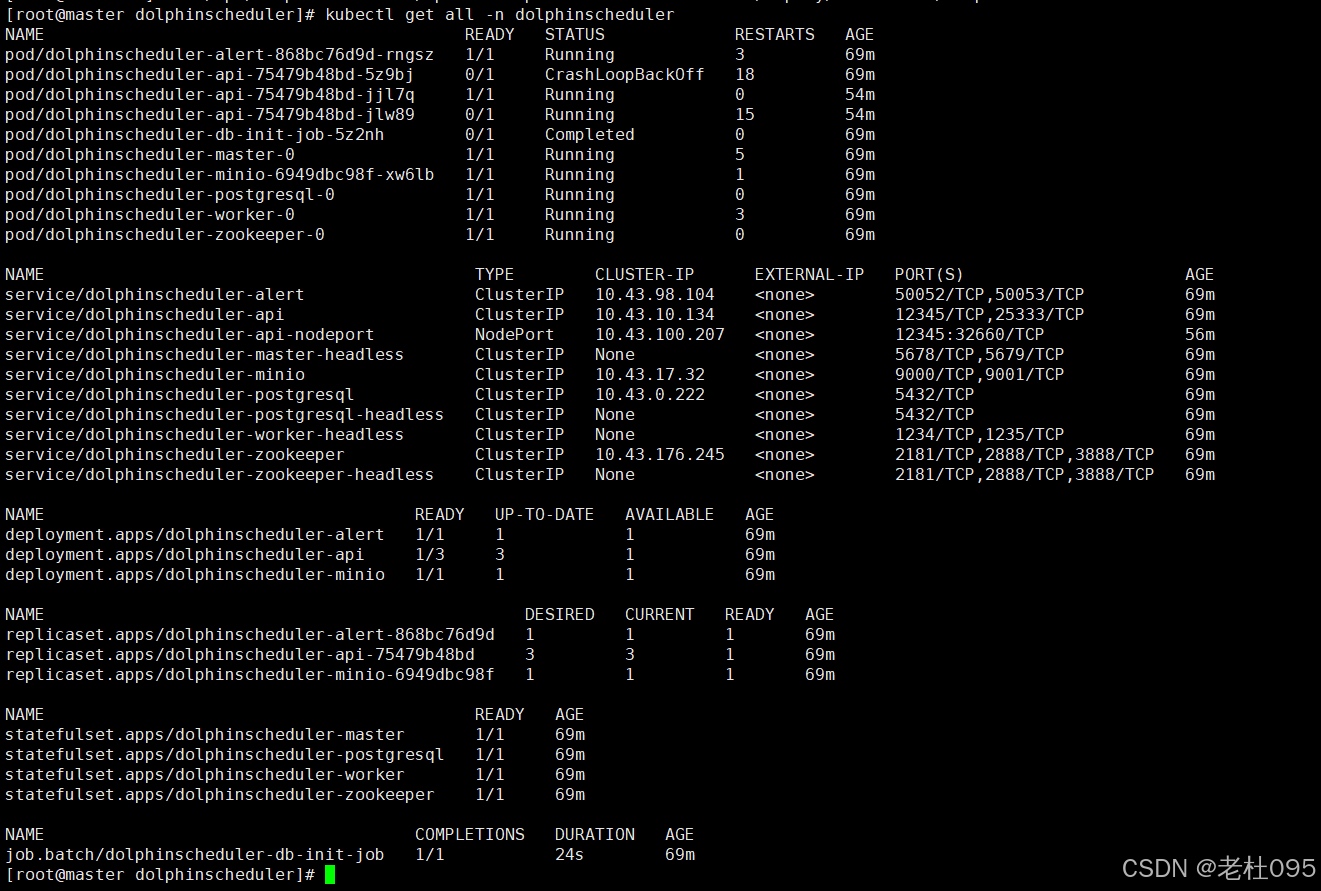

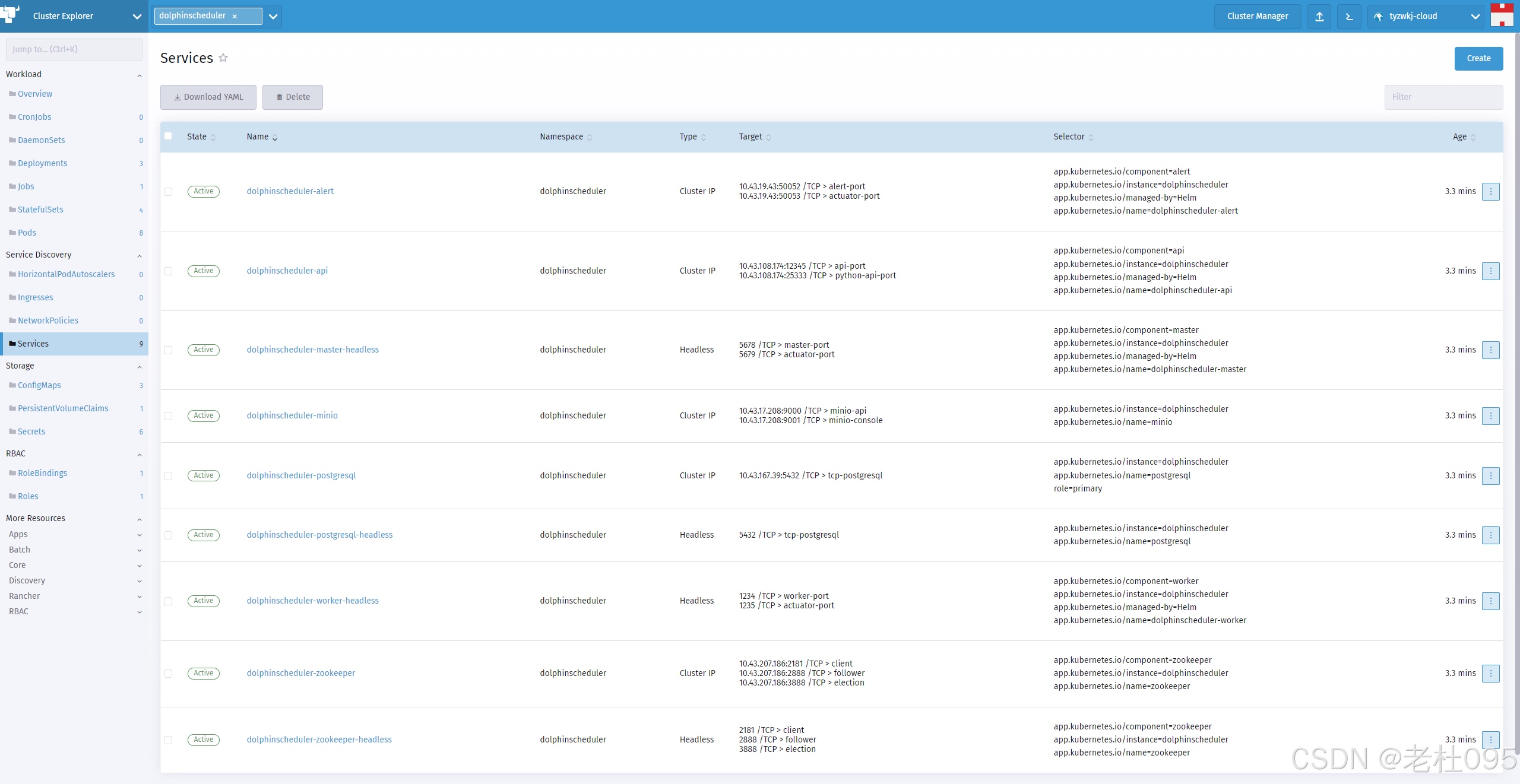

[root@master dolphinscheduler]# kubectl get all -n dolphinscheduler

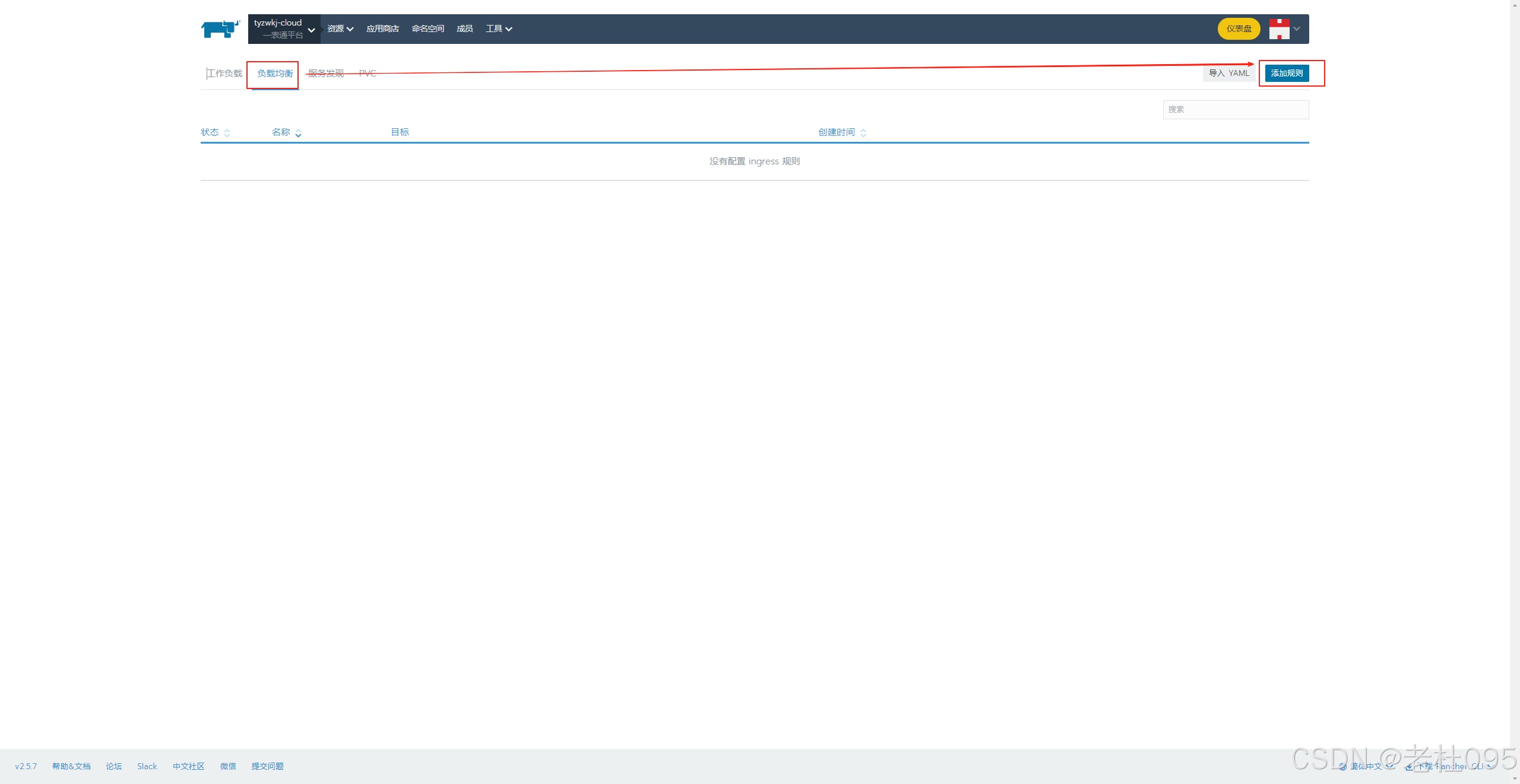

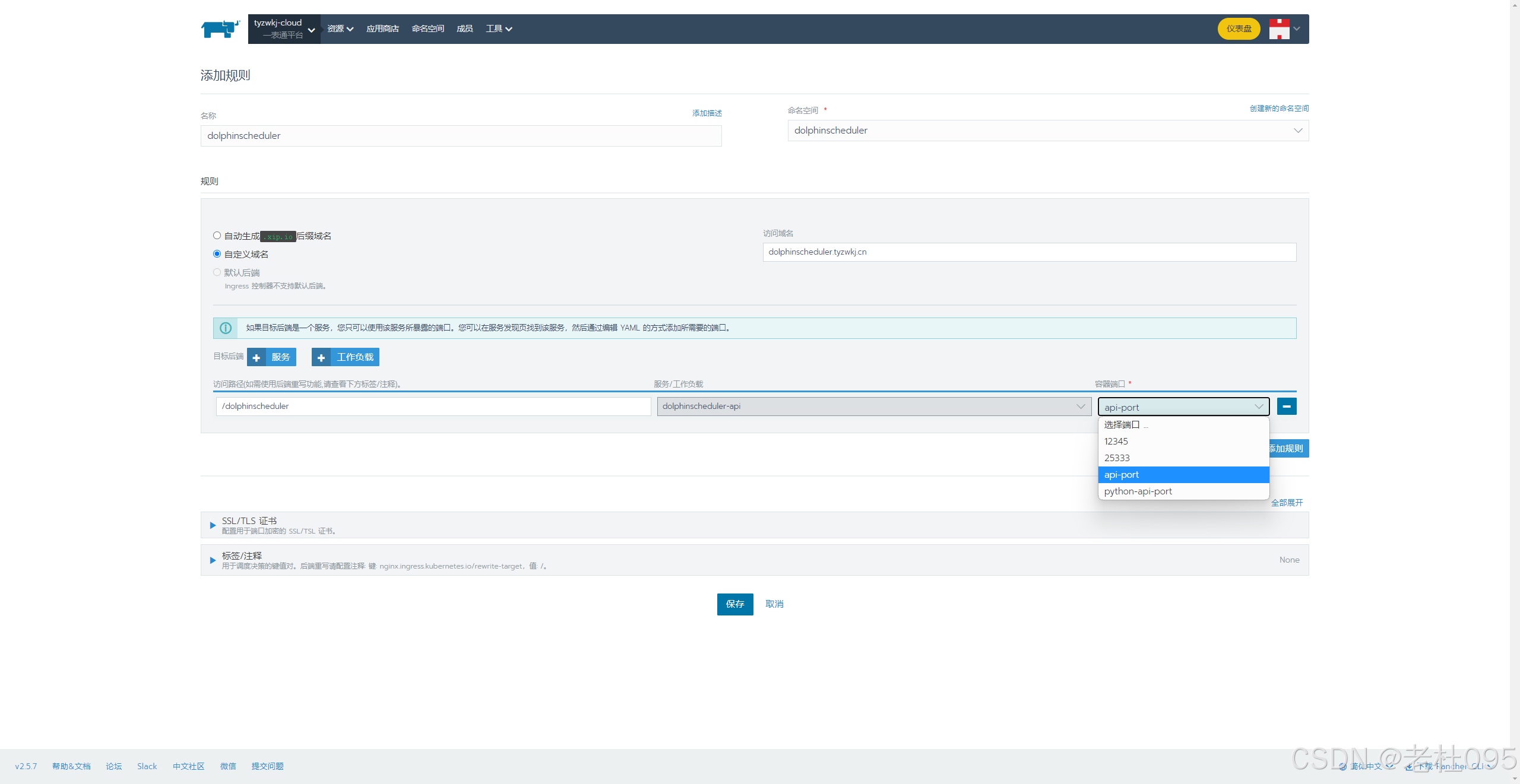

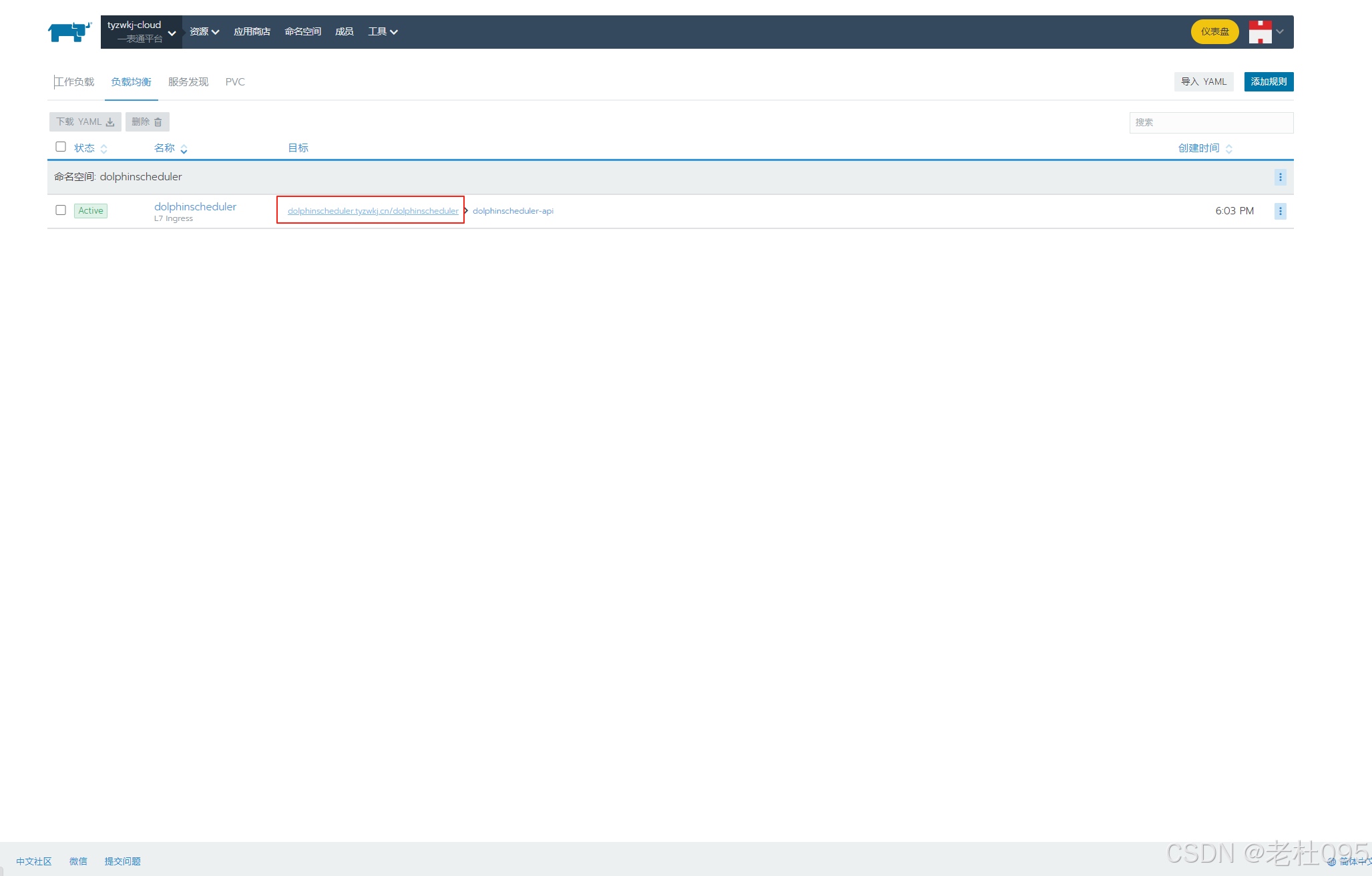

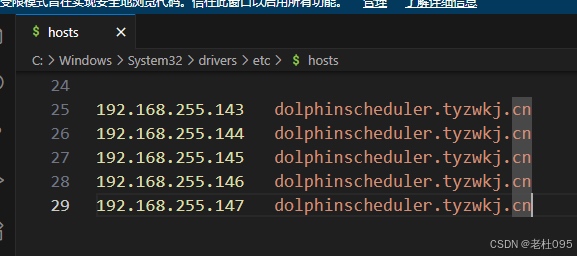
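To avoid scanning the pod list by eye, the output of `kubectl get pods --no-headers` can be filtered down to the pods that are not ready yet. A minimal sketch; `not_ready_pods` is a hypothetical helper, and it assumes the default column layout (NAME, READY, STATUS, ...).

```shell
# Hypothetical helper: read `kubectl get pods --no-headers` output on stdin and
# print the names of pods that are neither Completed nor fully ready.
not_ready_pods() {
  awk '{
    split($2, ready, "/")                       # READY column, e.g. "0/1"
    if ($3 == "Completed") next                 # finished job pods are fine
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}

# Usage:
#   kubectl get pods -n dolphinscheduler --no-headers | not_ready_pods
```

An empty result means every pod is either running with all containers ready or has completed.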

(4) Add an Ingress configuration to make the UI accessible

[Key point]

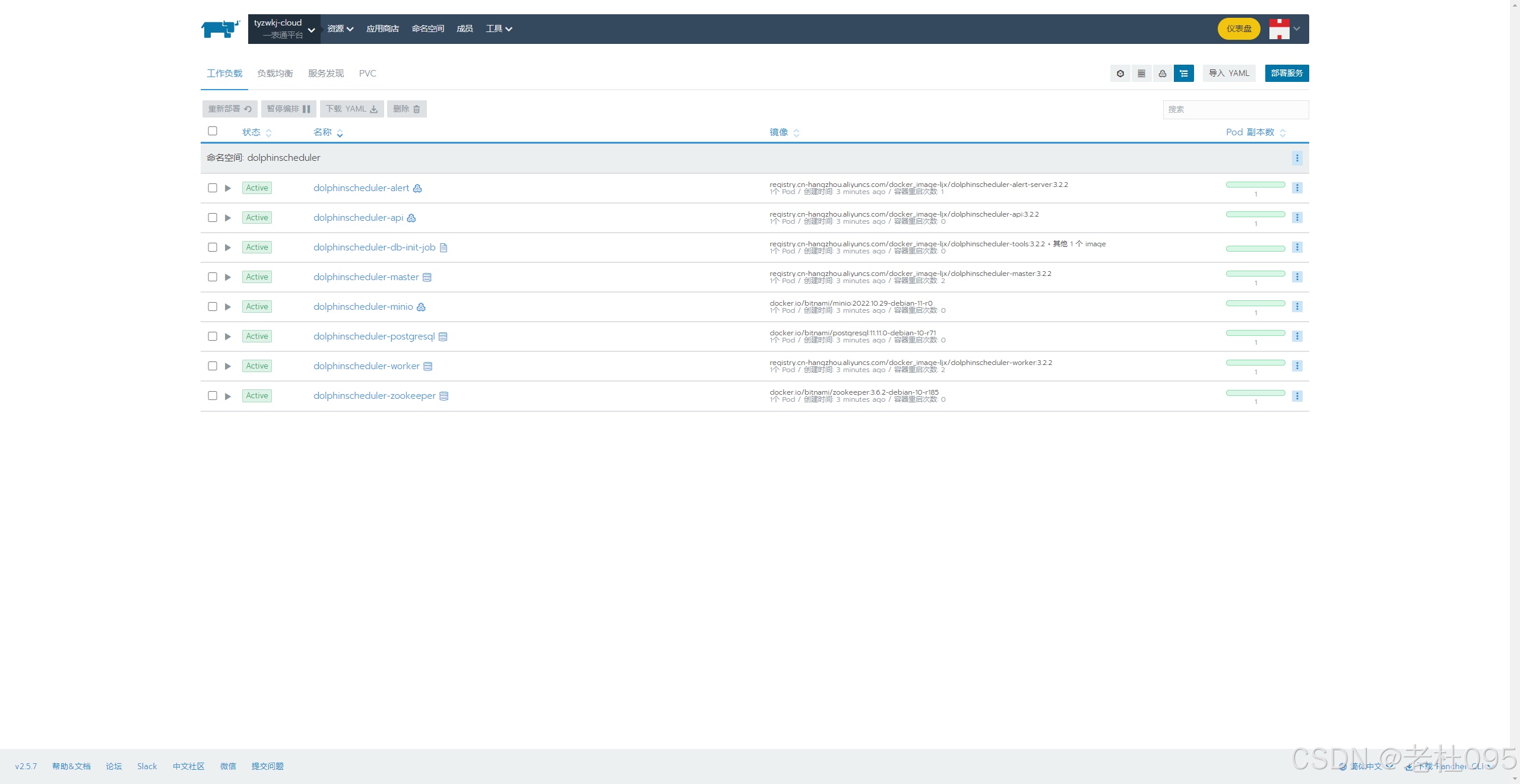
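The screenshot shows the Ingress being configured through the Rancher UI. For readers working from the command line, a roughly equivalent manifest can be sketched as follows. The Ingress name `dolphinscheduler-ui`, the Service name `dolphinscheduler-api` and the port `12345` are assumptions, not values taken from the screenshot; check the actual Service with `kubectl get svc -n dolphinscheduler` before applying anything.

```shell
# Hypothetical helper: print an Ingress manifest that routes host + path to a Service.
# Arguments: host, path, service name, service port.
render_ingress() {
  host="$1"; path="$2"; service="$3"; port="$4"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: dolphinscheduler-ui
  namespace: dolphinscheduler
spec:
  rules:
    - host: ${host}
      http:
        paths:
          - path: ${path}
            pathType: Prefix
            backend:
              service:
                name: ${service}
                port:
                  number: ${port}
EOF
}

# Usage (service name and port are assumptions, verify them first):
#   render_ingress dolphinscheduler.tyzwkj.cn /dolphinscheduler dolphinscheduler-api 12345 \
#     | kubectl apply -f -
```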

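Once this is configured, the DolphinScheduler admin UI is available at http://dolphinscheduler.tyzwkj.cn/dolphinscheduler/ui/. However, the custom domain dolphinscheduler.tyzwkj.cn cannot be resolved automatically yet, so you need to add a mapping for it to your local hosts file before you can access it.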
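The hosts mapping can be added by hand or with a small idempotent helper like the sketch below. `add_hosts_entry` is a hypothetical function, and `192.0.2.10` is a placeholder address; replace it with the IP of a node where your ingress controller is reachable. Writing to `/etc/hosts` normally requires root.

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless the hostname
# is already mapped on a non-comment line. Arguments: ip, hostname, [hosts file].
add_hosts_entry() {
  ip="$1"; host="$2"; file="${3:-/etc/hosts}"
  if awk -v h="$host" '
       $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
       END { exit !found }' "$file" 2>/dev/null; then
    return 0                                   # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$host" >> "$file"
}

# Usage (placeholder IP, run as root):
#   add_hosts_entry 192.0.2.10 dolphinscheduler.tyzwkj.cn
```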

4. Log in

After updating the hosts file, open http://dolphinscheduler.tyzwkj.cn/dolphinscheduler/ui/ in a browser.

Username: admin

Password: dolphinscheduler123

That concludes the walkthrough of deploying an Apache DolphinScheduler cluster on K8s.

Original article: https://blog.csdn.net/dyj095/article/details/150106792
