Example code:

import numpy as np

import matplotlib.pyplot as plt

from sklearn import svm

from sklearn.datasets import make_blobs

from sklearn.model_selection import train_test_split

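from sklearn.metrics import accuracy_score, classification_report

# Configure fonts with Chinese glyph support (only needed when labels contain Chinese text)

plt.rcParams['font.sans-serif'] = ['SimHei', 'Microsoft YaHei', 'KaiTi', 'SimSun']  # fonts shipped with the system

plt.rcParams['axes.unicode_minus'] = False  # render minus signs correctly

# 1. Generate synthetic data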

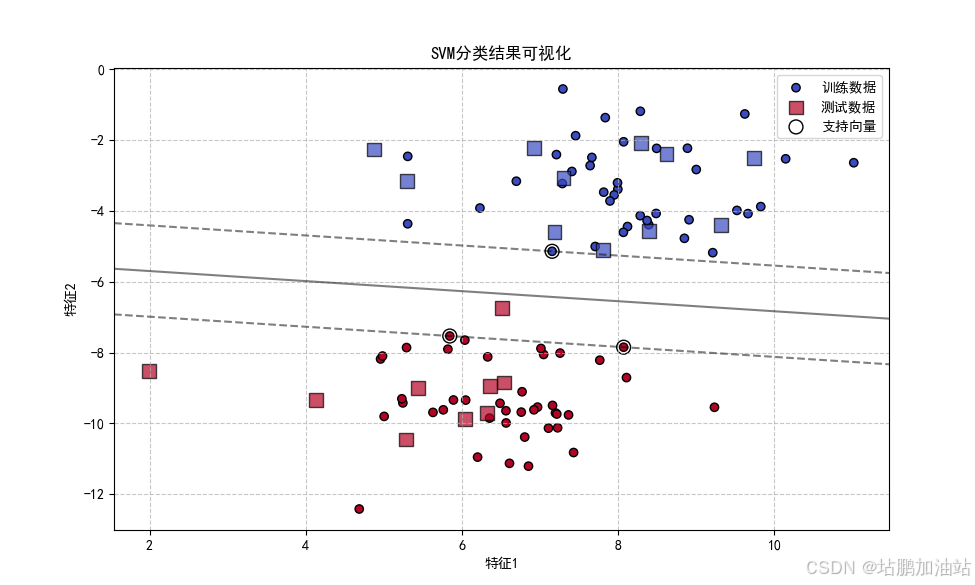

X, y = make_blobs(n_samples=100, centers=2, random_state=6, cluster_std=1.2)

print("X:")

print(X)

print("y:")

print(y)

# 2. Split into training and test sets

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# 3. Create the SVM classifier (linear kernel)

clf = svm.SVC(kernel='linear', C=1.0)

clf.fit(X_train, y_train)

# 4. Predict on the test set

y_pred = clf.predict(X_test)

print(y_pred)

print(y_test)

# 5. Evaluate model performance

print(f"Test accuracy: {accuracy_score(y_test, y_pred):.2f}")

print("\nClassification report:")

print(classification_report(y_test, y_pred))

# 6. Visualize the results

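plt.figure(figsize=(10, 6))

# Plot the training data

plt.scatter(X_train[:, 0], X_train[:, 1], c=y_train, cmap='coolwarm', edgecolors='k', label='Training data')

# Plot the test data (larger square markers)

plt.scatter(X_test[:, 0], X_test[:, 1], c=y_test, cmap='coolwarm', marker='s', s=100, edgecolors='k', alpha=0.7, label='Test data')

# Read the current axis limits so the decision boundary covers the plotted area

ax = plt.gca()

xlim = ax.get_xlim()

ylim = ax.get_ylim()

# Build a grid on which to evaluate the model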

xx = np.linspace(xlim[0], xlim[1], 30)

yy = np.linspace(ylim[0], ylim[1], 30)

YY, XX = np.meshgrid(yy, xx)

xy = np.vstack([XX.ravel(), YY.ravel()]).T

Z = clf.decision_function(xy).reshape(XX.shape)

# Draw the decision boundary (level 0) and the margins (levels -1 and 1)

ax.contour(XX, YY, Z, colors='k', levels=[-1, 0, 1], alpha=0.5, linestyles=['--', '-', '--'])

# Circle the support vectors

ax.scatter(clf.support_vectors_[:, 0], clf.support_vectors_[:, 1], s=100, linewidth=1, facecolors='none', edgecolors='k', label='Support vectors')

plt.title('SVM classification results')

plt.xlabel('Feature 1')

plt.ylabel('Feature 2')

plt.legend()

plt.grid(True, linestyle='--', alpha=0.7)

plt.show()

Visualization results:

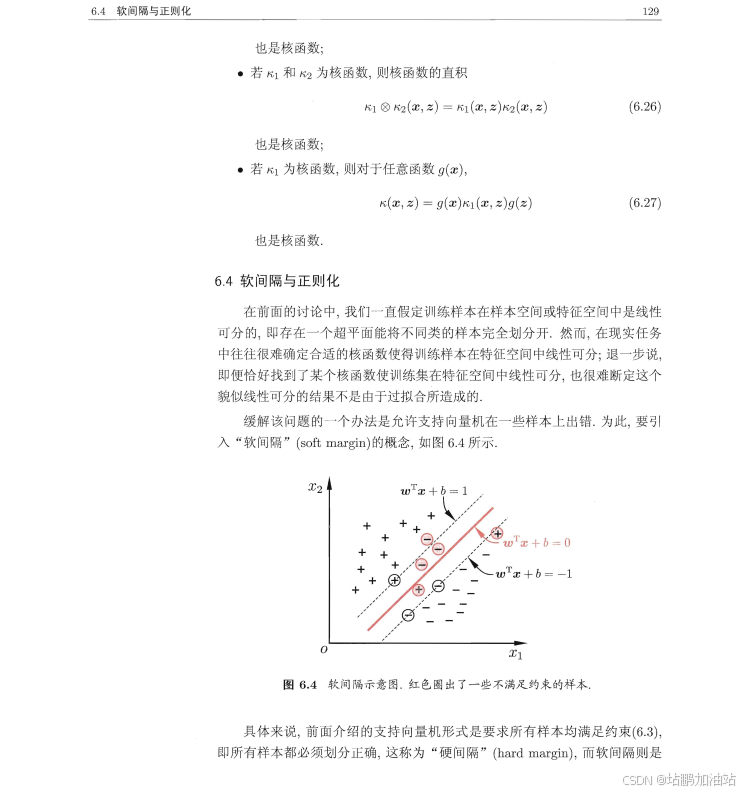

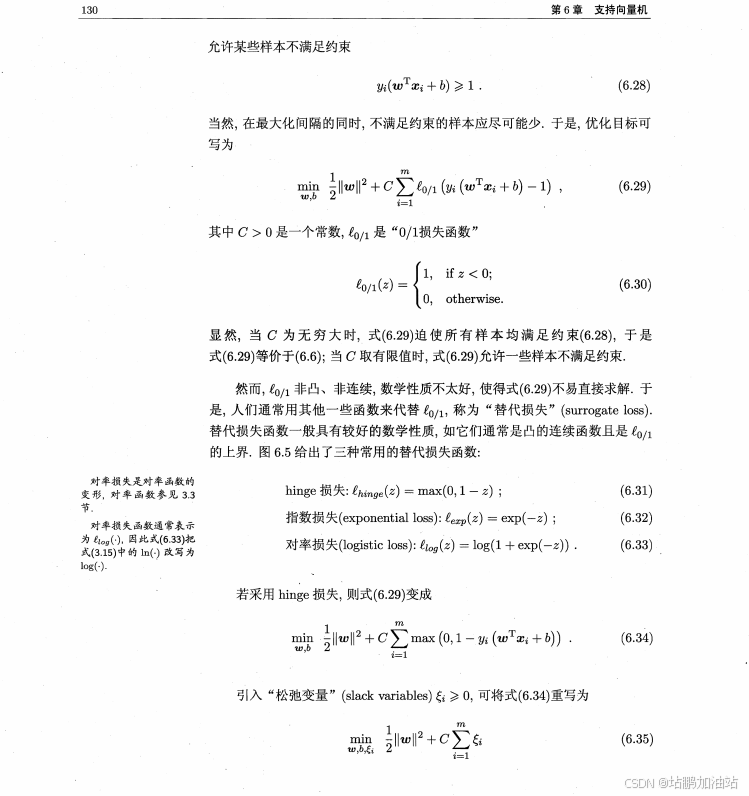

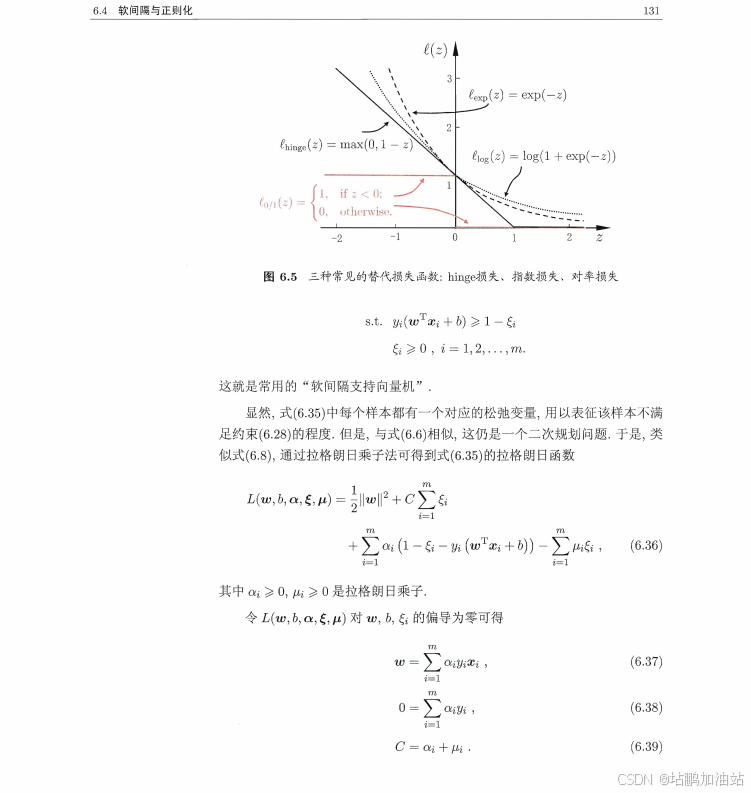

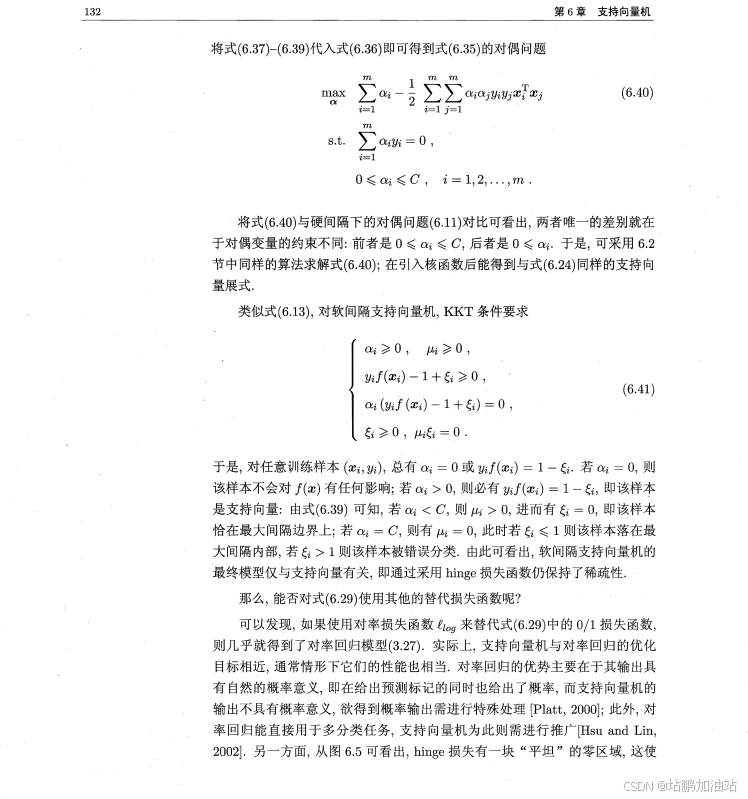
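
The dashed lines in the plot are the margins of the linear SVM. As a supplementary sketch (not part of the original listing), the snippet below rebuilds the same model and reads the hyperplane parameters from the fitted classifier: `coef_` and `intercept_` are scikit-learn attributes available for the linear kernel, while the names `w`, `b`, `margin_width`, `manual_scores`, and `sv_margins` are introduced here purely for illustration. It assumes the same data, split, and `C=1.0` as the example code.

```python
import numpy as np
from sklearn import svm
from sklearn.datasets import make_blobs
from sklearn.model_selection import train_test_split

# Same data, split, and model settings as the example code (assumed unchanged)
X, y = make_blobs(n_samples=100, centers=2, random_state=6, cluster_std=1.2)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)
clf = svm.SVC(kernel='linear', C=1.0).fit(X_train, y_train)

# For a linear kernel the decision function is f(x) = w . x + b
w = clf.coef_[0]        # weight vector, one entry per feature
b = clf.intercept_[0]   # bias term

# Distance between the two margin lines f(x) = -1 and f(x) = +1
margin_width = 2.0 / np.linalg.norm(w)

# Recompute the scores by hand and compare with decision_function
manual_scores = X_test @ w + b
print("manual scores match decision_function:",
      np.allclose(manual_scores, clf.decision_function(X_test)))

# Support vectors lie on or inside the margin: y * f(x) <= 1 up to solver tolerance,
# with labels mapped from {0, 1} to {-1, +1}
sv_labels = 2 * y_train[clf.support_] - 1
sv_margins = sv_labels * clf.decision_function(clf.support_vectors_)

print("w =", w)
print("b =", b)
print("margin width =", margin_width)
print("number of support vectors =", len(clf.support_vectors_))
print("largest y*f(x) among support vectors =", sv_margins.max())
```

A smaller `C` tolerates more margin violations and typically gives a wider margin with more support vectors; rerunning the sketch with a different `C` is a quick way to see that trade-off on this dataset.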

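Source: Zhou Zhihua (周志華), *Machine Learning* (機器學習). If this material infringes any rights, please get in touch and it will be removed.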
