引言

在當今數據驅動的商業環境中,企業業務數據通常存儲在MySQL等關系型數據庫中,但當數據量增長到千萬級甚至更高時,直接在MySQL中進行復雜分析會導致性能瓶頸。本文將詳細介紹如何將MySQL業務數據遷移到大數據平臺,并通過Spark等工具實現高效的離線分析流程。

一、整體架構設計

1.1 技術棧選擇

核心組件:

數據抽取:Sqoop、Flink CDC

數據存儲:HDFS、Hive

計算引擎:Spark、Hive

調度系統:Airflow

可視化:Superset

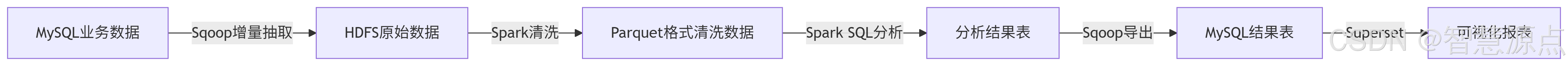

1.2 流程概覽

二、數據抽取實戰

2.1 Sqoop全量導入最佳實踐

#!/bin/bash

# sqoop_full_import.shDB_URL="jdbc:mysql://mysql-host:3306/prod_db"

USERNAME="etl_user"

PASSWORD="secure_password"

TABLE_NAME="orders"

HDFS_PATH="/data/raw/${TABLE_NAME}_$(date +%Y%m%d)"sqoop import \--connect $DB_URL \--username $USERNAME \--password $PASSWORD \--table $TABLE_NAME \--target-dir $HDFS_PATH \--compress \--compression-codec org.apache.hadoop.io.compress.SnappyCodec \--fields-terminated-by '\001' \--null-string '\\N' \--null-non-string '\\N' \--m 8關鍵參數說明:

--compress:啟用壓縮--fields-terminated-by '\001':使用不可見字符作為分隔符--m 8:設置8個并行任務

2.2 增量同步方案對比

| 方案 | 適用場景 | 優缺點 |

|---|---|---|

| Sqoop增量 | T+1批處理 | 簡單但需要維護last-value |

| Flink CDC | 近實時同步 | 復雜但支持精確一次語義 |

| 時間戳觸發器 | 業務系統有更新時間字段 | 依賴業務表設計 |

三、數據清洗與轉換

3.1 Spark清洗標準化流程

import org.apache.spark.sql.*;public class DataCleaningJob {public static void main(String[] args) {// 初始化SparkSessionSparkSession spark = SparkSession.builder().appName("JavaDataCleaning").config("spark.sql.parquet.writeLegacyFormat", "true").getOrCreate();// 1. 讀取原始數據Dataset<Row> rawDF = spark.read().format("parquet").load("/data/raw/orders");// 2. 數據清洗轉換Dataset<Row> cleanedDF = rawDF// 處理空值.na().fill(0.0, new String[]{"discount"}).na().fill(-1, new String[]{"user_id"})// 過濾無效記錄.filter(functions.col("order_amount").gt(0))// 日期轉換.withColumn("order_date", functions.to_date(functions.from_unixtime(functions.col("create_timestamp")), "yyyy-MM-dd"))// 數據脫敏.withColumn("user_name", functions.when(functions.length(functions.col("user_name")).gt(0),functions.expr("mask(user_name)")).otherwise("Anonymous"));// 3. 分區寫入cleanedDF.write().partitionBy("order_date").mode(SaveMode.Overwrite).parquet("/data/cleaned/orders");spark.stop();}

}數據質量檢查工具類

import org.apache.spark.sql.*;public class DataQualityChecker {public static void checkNullValues(Dataset<Row> df) {System.out.println("=== Null Value Check ===");for (String colName : df.columns()) {long nullCount = df.filter(functions.col(colName).isNull()).count();System.out.printf("Column %s has %d null values%n", colName, nullCount);}}public static void checkValueRange(Dataset<Row> df, String colName) {Row stats = df.select(functions.mean(colName).alias("mean"),functions.stddev(colName).alias("stddev")).first();double mean = stats.getDouble(0);double stddev = stats.getDouble(1);double upperBound = mean + 3 * stddev;double lowerBound = mean - 3 * stddev;System.out.printf("Column %s statistics:%n", colName);System.out.printf("Mean: %.2f, StdDev: %.2f%n", mean, stddev);System.out.printf("Normal range: %.2f ~ %.2f%n", lowerBound, upperBound);long outliers = df.filter(functions.col(colName).lt(lowerBound).or(functions.col(colName).gt(upperBound))).count();System.out.printf("Found %d outliers%n", outliers);}

}四、高效存儲策略

4.1 存儲格式對比測試

我們對10GB訂單數據進行了基準測試:

| 格式 | 存儲大小 | 查詢耗時 | 寫入耗時 |

|---|---|---|---|

| Text | 10.0GB | 78s | 65s |

| Parquet | 1.2GB | 12s | 32s |

| ORC | 1.0GB | 9s | 28s |

4.2 分區優化實踐

動態分區配置:

SET hive.exec.dynamic.partition=true;

SET hive.exec.dynamic.partition.mode=nonstrict;

SET hive.exec.max.dynamic.partitions=1000;CREATE TABLE orders_partitioned (order_id BIGINT,user_id INT,amount DECIMAL(10,2)

) PARTITIONED BY (dt STRING, region STRING)

STORED AS PARQUET;五、離線計算模式

5.1 典型分析場景實現

場景1:RFM用戶分群

import org.apache.spark.sql.*;

import org.apache.spark.sql.expressions.Window;

import static org.apache.spark.sql.functions.*;public class RFMAnalysis {public static void main(String[] args) {SparkSession spark = SparkSession.builder().appName("JavaRFMAnalysis").enableHiveSupport().getOrCreate();// 計算RFM基礎指標Dataset<Row> rfmDF = spark.sql("SELECT user_id, " +"DATEDIFF(CURRENT_DATE, MAX(order_date)) AS recency, " +"COUNT(DISTINCT order_id) AS frequency, " +"SUM(amount) AS monetary " +"FROM orders_cleaned " +"WHERE order_date >= DATE_SUB(CURRENT_DATE, 365) " +"GROUP BY user_id");// 使用窗口函數計算分位數WindowSpec recencyWindow = Window.orderBy(col("recency").desc());WindowSpec frequencyWindow = Window.orderBy(col("frequency").desc());WindowSpec monetaryWindow = Window.orderBy(col("monetary").desc());Dataset<Row> result = rfmDF.withColumn("r_score", ntile(5).over(recencyWindow)).withColumn("f_score", ntile(5).over(frequencyWindow)).withColumn("m_score", ntile(5).over(monetaryWindow)).withColumn("rfm", concat(col("r_score"), col("f_score"), col("m_score")));// 保存結果result.write().saveAsTable("user_rfm_analysis");spark.stop();}

}5.2 漏斗分析

import org.apache.spark.sql.*;

import static org.apache.spark.sql.functions.*;public class FunnelAnalysis {public static void main(String[] args) {SparkSession spark = SparkSession.builder().appName("JavaFunnelAnalysis").getOrCreate();String[] stages = {"view", "cart", "payment"};Dataset<Row> funnelDF = null;// 構建漏斗各階段數據集for (int i = 0; i < stages.length; i++) {String stage = stages[i];Dataset<Row> stageDF = spark.table("user_behavior").filter(col("action").equalTo(stage)).groupBy("user_id").agg(countDistinct("session_id").alias(stage + "_count"));if (i == 0) {funnelDF = stageDF;} else {funnelDF = funnelDF.join(stageDF, "user_id", "left_outer");}}// 計算轉化率for (int i = 0; i < stages.length - 1; i++) {String fromStage = stages[i];String toStage = stages[i+1];double conversionRate = funnelDF.filter(col(fromStage + "_count").gt(0)).select(avg(when(col(toStage + "_count").gt(0), 1).otherwise(0))).first().getDouble(0);System.out.printf("Conversion rate from %s to %s: %.2f%%%n", fromStage, toStage, conversionRate * 100);}spark.stop();}

}六、生產環境優化

6.1 數據傾斜處理工具類

import org.apache.spark.sql.*;

import static org.apache.spark.sql.functions.*;public class DataSkewHandler {public static Dataset<Row> handleSkew(Dataset<Row> df, String skewedColumn, Object skewedValue) {// 方法1:加鹽處理Dataset<Row> saltedDF = df.withColumn("salt", when(col(skewedColumn).equalTo(skewedValue), floor(rand().multiply(10))).otherwise(0));return saltedDF.repartition(col("salt"));}public static Dataset<Row> separateProcessing(Dataset<Row> df, String skewedColumn, Object skewedValue) {// 方法2:分離處理Dataset<Row> normalData = df.filter(col(skewedColumn).notEqual(skewedValue));Dataset<Row> skewedData = df.filter(col(skewedColumn).equalTo(skewedValue));// 對skewedData進行特殊處理...// 例如增加并行度skewedData = skewedData.repartition(20);return normalData.union(skewedData);}

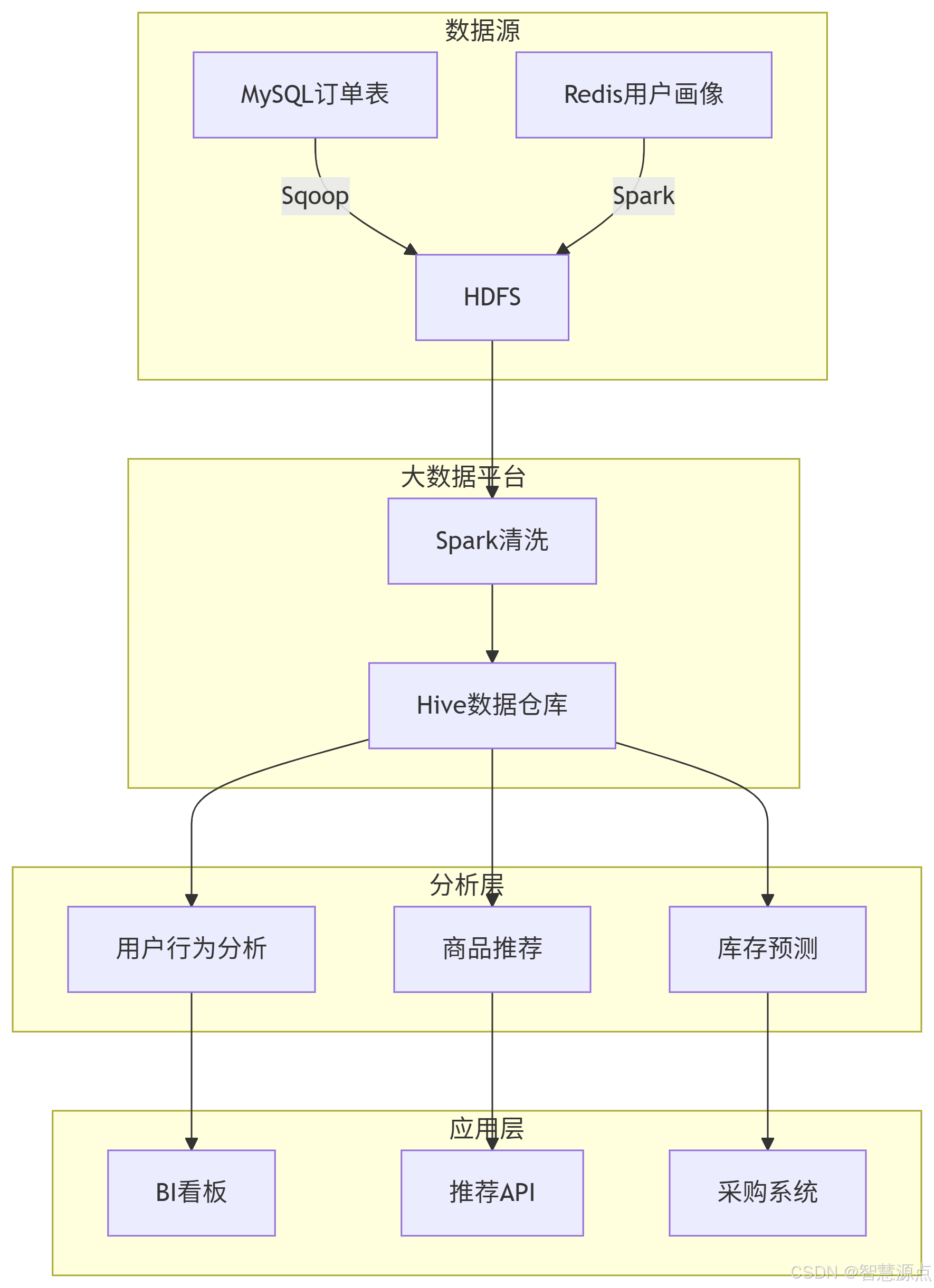

}七、完整案例:電商數據分析平臺

7.1 數據流設計

7.1 電商分析平臺主程序

import org.apache.spark.sql.*;public class ECommerceAnalysisPlatform {public static void main(String[] args) {// 初始化SparkSparkSession spark = SparkSession.builder().appName("ECommerceAnalysis").config("spark.sql.warehouse.dir", "/user/hive/warehouse").enableHiveSupport().getOrCreate();// 1. 數據抽取MySQLToHDFSExporter.exportTable("orders", "/data/raw/orders");// 2. 數據清洗new DataCleaningJob().run(spark);// 3. 分析任務new RFMAnalysis().run(spark);new FunnelAnalysis().run(spark);// 4. 日報生成generateDailyReport(spark);spark.stop();}private static void generateDailyReport(SparkSession spark) {// GMV周同比計算Dataset<Row> reportDF = spark.sql("WITH current_week AS (" +" SELECT SUM(amount) AS gmv, COUNT(DISTINCT user_id) AS uv " +" FROM orders_cleaned " +" WHERE dt BETWEEN DATE_SUB(CURRENT_DATE, 7) AND CURRENT_DATE" +"), last_week AS (" +" SELECT SUM(amount) AS gmv, COUNT(DISTINCT user_id) AS uv " +" FROM orders_cleaned " +" WHERE dt BETWEEN DATE_SUB(CURRENT_DATE, 14) AND DATE_SUB(CURRENT_DATE, 7)" +") " +"SELECT " +" c.gmv AS current_gmv, " +" l.gmv AS last_gmv, " +" (c.gmv - l.gmv) / l.gmv AS gmv_yoy, " +" c.uv AS current_uv, " +" l.uv AS last_uv " +"FROM current_week c CROSS JOIN last_week l");// 保存到MySQLreportDF.write().format("jdbc").option("url", "jdbc:mysql://mysql-host:3306/report_db").option("dbtable", "daily_gmv_report").option("user", "report_user").option("password", "report_password").mode(SaveMode.Overwrite).save();}

}結語

構建完整的大數據離線分析管道需要綜合考慮數據規模、時效性要求和業務需求。本文介紹的技術方案已在多個生產環境驗證,可支持每日億級數據的處理分析。隨著業務發展,可逐步引入實時計算、特征倉庫等更先進的架構組件。

最佳實踐建議:

始終保留原始數據副本

建立完善的數據血緣追蹤

監控關鍵指標:任務耗時、數據質量、資源利用率

定期優化分區和文件大小

![[論文閱讀] 人工智能 + 軟件工程 | LLM協作新突破:用多智能體強化學習實現高效協同——解析MAGRPO算法](http://pic.xiahunao.cn/[論文閱讀] 人工智能 + 軟件工程 | LLM協作新突破:用多智能體強化學習實現高效協同——解析MAGRPO算法)

)

把“可配置查詢”裝進 Mustache)

)

)

:I2C 總線上可以接多少個設備?如何保證數據的準確性?)

如何開發有監督神經網絡系統?)