本實驗基于ELFK已經搭好的情況下 ELK日志分析

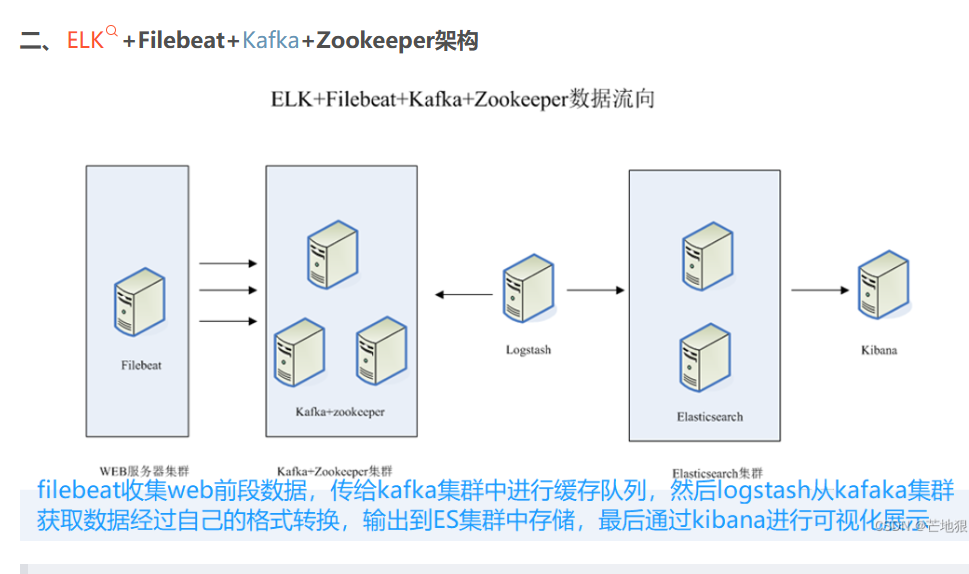

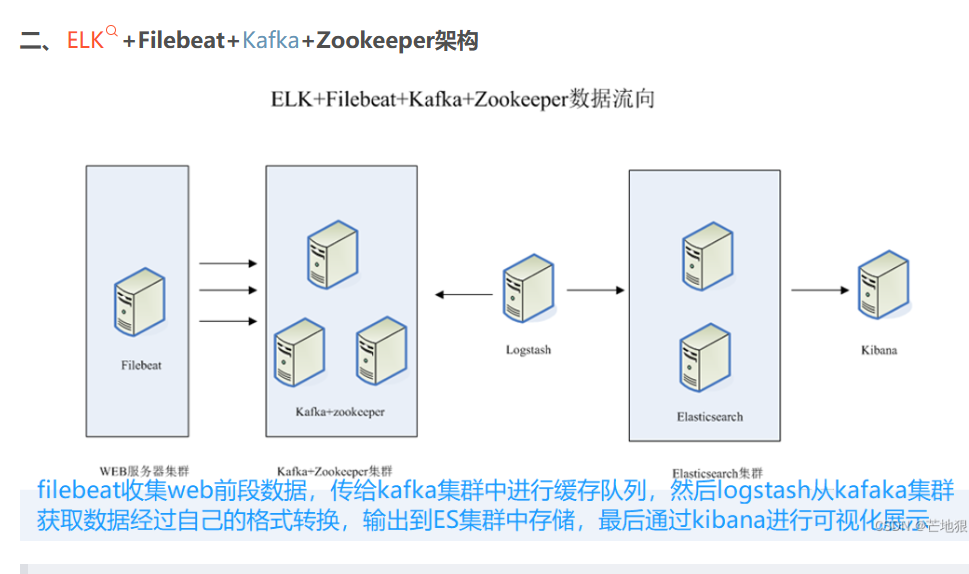

架構解析

第一層、數據采集層

數據采集層位于最左邊的業務服務器集群上,在每個業務服務器上面安裝了filebeat做日志收集,然后把采集到的原始日志發送到Kafka+zookeeper集群上。第二層、消息隊列層

原始日志發送到Kafka+zookeeper集群上后,會進行集中存儲,此時,filbeat是消息的生產者,存儲的消息可以隨時被消費。第三層、數據分析層

Logstash作為消費者,會去Kafka+zookeeper集群節點實時拉取原始日志,然后將獲取到的原始日志根據規則進行分析、清洗、過濾,最后將清洗好的日志轉發至Elasticsearch集群。第四層、數據持久化存儲

Elasticsearch集群在接收到logstash發送過來的數據后,執行寫磁盤,建索引庫等操作,最后將結構化的數據存儲到Elasticsearch集群上。第五層、數據查詢、展示層

Kibana是一個可視化的數據展示平臺,當有數據檢索請求時,它從Elasticsearch集群上讀取數據,然后進行可視化出圖和多維度分析。

搭建ELK+Filebeat+Kafka+Zookeeper

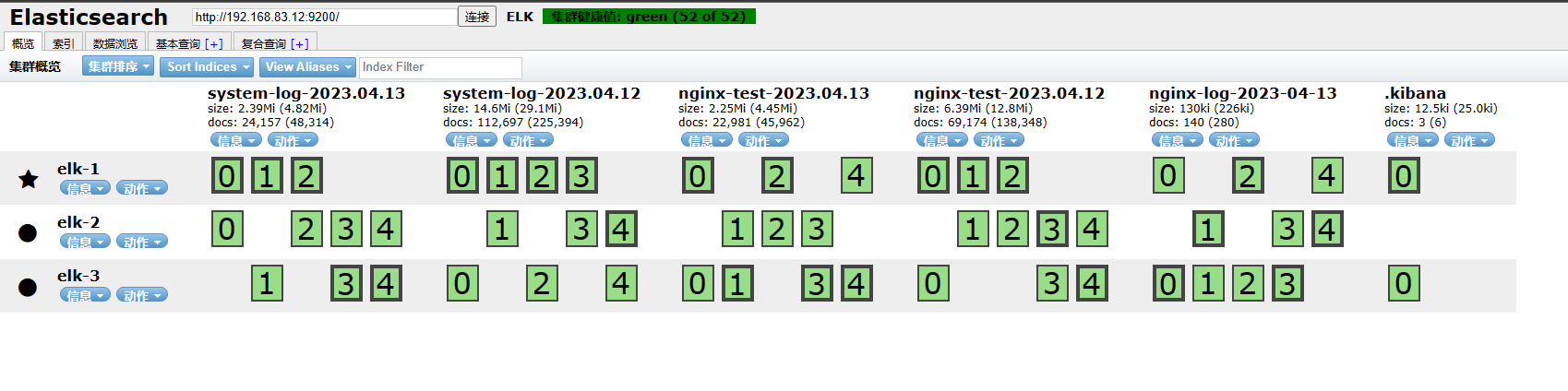

zIP: 所屬集群: 端口:

192.168.83.11 Elasticsearch+Kibana+kafka+zookeeper+nginx反向代理 9100 9200 5601 9092 3288 8080 都可以安裝filebeat

192.168.83.12 Elasticsearch+Logstash+kafka+zookeeper+filebeat+nginx反向代理 9100 9200 9600 9092 3288 隨機 8080

192.168.83.13 Elasticsearch+kafka+zookeeper+nginx反向代理 z 9100 9200 9092 3288

root@elk2 ~]# netstat -antp |grep filebeat

tcp 1 0 192.168.83.12:40348 192.168.83.11:9092 CLOSE_WAIT 6975/filebeat

tcp 0 0 192.168.83.12:51220 192.168.83.12:9092 ESTABLISHED 6975/filebeat

1.3臺機子安裝zookeeper

wget https://dlcdn.apache.org/zookeeper/zookeeper-3.8.0/apache-zookeeper-3.8.0-bin.tar.gz --no-check-certificate

1.1 解壓安裝zookeeper軟件包

cd /opt上傳apache-zookeeper-3.8.0-bin.tar.gz包tar zxf apache-zookeeper-3.8.0-bin.tar.gz 解包

mv apache-zookeeper-3.8.0-bin /usr/local/zookeeper-3.8.0 #將解壓的目錄剪切到/usr/local/

cd /usr/local/zookeeper-3.8.0/conf/

cp zoo_sample.cfg zoo.cfg 備份復制模板配置文件為zoo.cfg

1.2 修改Zookeeper配置配置文件

cd /usr/local/zookeeper-3.8.0/conf #進入zookeeper配置文件匯總

ls 后可以看到zoo_sample.cfg模板配置文件

cp zoo_sample.cfg zoo.cfg 復制模板配置文件為zoo.cfg

mkdir -p /usr/local/zookeeper-3.8.0/data

mkdir -p dataLogDir=/usr/local/zookeeper-3.8.0/1ogs

vim zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/usr/local/zookeeper-3.8.0/data

dataLogDir=/usr/local/zookeeper-3.8.0/1ogs

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# https://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1## Metrics Providers

#

# https://prometheus.io Metrics Exporter

#metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider

#metricsProvider.httpHost=0.0.0.0

#metricsProvider.httpPort=7000

#metricsProvider.exportJvmInfo=true

server.1=192.168.83.11:3188:3288

server.2=192.168.83.12:3188:3288

server.3=192.168.83.13:3188:3288

scp zoo.cfg elk2:/usr/local/zookeeper-3.8.0/conf/zoo.cfg

scp zoo.cfg elk3:/usr/local/zookeeper-3.8.0/conf/zoo.cfg

1.3 設置myid號以及啟動腳本 到這里就不要設置同步了,下面的操作,做好一臺機器一臺機器的配置。

echo 1 >/usr/local/zookeeper-3.8.0/data/myid

# node1上配置echo 2 >/usr/local/zookeeper-3.8.0/data/myid

#node2上配置echo 3 >/usr/local/zookeeper-3.8.0/data/myid

#node3上配置

1.4 兩種啟動zookeeper的方法

cd /usr/local/zookeeper-3.8.0/bin

ls

./zkServer.sh start #啟動 一次性啟動三臺,,才可以看狀態

./zkServer.sh status #查看狀態

[root@elk1 bin]# ./zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper-3.8.0/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower[root@elk2 bin]# ./zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper-3.8.0/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader[root@elk3 bin]# ./zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /usr/local/zookeeper-3.8.0/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

1.5腳本啟動 推薦

第2種啟動3臺節點需要執行的腳本#//配置啟動腳本,腳本在開啟啟動執行的目錄中創建

vim /etc/init.d/zookeeper

#!/bin/bash

#chkconfig:2345 20 90

#description:Zookeeper Service Control Script

ZK_HOME='/usr/local/zookeeper-3.8.0'

case $1 in

start)echo "----------zookeeper啟動----------"$ZK_HOME/bin/zkServer.sh start

;;

stop)echo "---------- zookeeper停止-----------"$ZK_HOME/bin/zkServer.sh stop

;;

restart)echo "---------- zookeeper 重啟------------"$ZK_HOME/bin/zkServer.sh restart

;;

status)echo "---------- zookeeper 狀態------------"$ZK_HOME/bin/zkServer.sh status

;;

*)echo "Usage: $0 {start|stop|restart|status}"

esac

cd /usr/local/zookeeper-3.8.0/bin

在節點1服務操作

chmod +x /etc/init.d/zookeeper

chkconfig --add zookeeper #加入到系統管理

service zookeeper start 啟動服務

service zookeeper status 查看狀態后 是 follower

在節點2服務操作

chmod +x /etc/init.d/zookeeper

chkconfig --add zookeeper #加入到系統管理

service zookeeper start 啟動服務

service zookeeper status 查看狀態后 是 leader 第二臺啟動的,他是leader

在節點3服務操作

chmod +x /etc/init.d/zookeeper

chkconfig --add zookeeper #加入到系統管理

service zookeeper start 啟動服務

service zookeeper status 查看狀態后 是 follower

2. 安裝 kafka(3臺機子都要操作)

#下載kafka

cd /opt

wget http://archive.apache.org/dist/kafka/2.7.1/kafka_2.13-2.7.1.tgz

上傳kafka_2.13-2.7.1.tgz到/opt

tar zxf kafka_2.13-2.7.1.tgz

mv kafka_2.13-2.7.1 /usr/local/kafka

2.2 修改配置文件

cd /usr/local/kafka/config/

cp server.properties server.properties.bak

vim server.properties192.168.83.11配置

broker.id=1

listeners=PLAINTEXT://192.168.83.11:9092

zookeeper.connect=192.168.83.11:2181,192.168.83.12:2181,192.168.83.13:2181

192.168.83.13配置

broker.id=2

listeners=PLAINTEXT://192.168.83.12:9092

zookeeper.connect=192.168.83.11:2181,192.168.83.12:2181,192.168.83.13:21810:2181192.168.83.13配置

broker.id=3

listeners=PLAINTEXT://192.168.83.13:9092

zookeeper.connect=192.168.83.11:2181,192.168.83.12:2181,192.168.83.13:2181

2.3 將相關命令加入到系統環境當中

vim /etc/profile 末行加入

export KAFKA_HOME=/usr/local/kafka

export PATH=$PATH:$KAFKA_HOME/bin

source /etc/profile

[root@elk1 config]# scp /etc/profile elk2:/etc/profile

profile 100% 1888 1.4MB/s 00:00

[root@elk1 config]# scp /etc/profile elk3:/etc/profile

profile

2.3 將相關命令加入到系統環境當中

cd /usr/local/kafka/config/

kafka-server-start.sh -daemon server.properties

netstat -antp | grep 9092

2.4Kafka 命令行操作

創建topickafka-topics.sh --create --zookeeper 192.168.121.10:2181,192.168.121.12:2181,192.168.121.14:2181 --replication-factor 2 --partitions 3 --topic test

–zookeeper:定義 zookeeper 集群服務器地址,如果有多個 IP 地址使用逗號分割,一般使用一個 IP 即可

–replication-factor:定義分區副本數,1 代表單副本,建議為 2

–partitions:定義分區數

–topic:定義 topic 名稱查看當前服務器中的所有 topickafka-topics.sh --list --zookeeper 192.168.121.10:2181,192.168.121.12:2181,192.168.121.14:2181查看某個 topic 的詳情kafka-topics.sh --describe --zookeeper 192.168.121.10:2181,192.168.121.12:2181,192.168.121.14:2181發布消息kafka-console-producer.sh --broker-list 192.168.121.10:9092,192.168.121.12:9092,192.168.121.14:9092 --topic test消費消息kafka-console-consumer.sh --bootstrap-server 192.168.121.10:9092,192.168.121.12:9092,192.168.121.14:9092 --topic test --from-beginning–from-beginning:會把主題中以往所有的數據都讀取出來修改分區數kafka-topics.sh

--zookeeper 192.168.80.10:2181,192.168.80.11:2181,192.168.80.12:2181 --alter --topic test --partitions 6刪除 topickafka-topics.sh

--delete --zookeeper 192.168.80.10:2181,192.168.80.11:2181,192.168.80.12:2181 --topic test

3.配置數據采集層filebeat

3.1 定制日志格式

3.1 定制日志格式

[root@elk2 ~]# vim /etc/nginx/nginx.conf

user nginx;

worker_processes auto;error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;events

{worker_connections 1024;

}http

{include /etc/nginx/mime.types;default_type application/octet-stream;# log_format main2 '$http_host $remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$upstream_addr" $request_time';

# access_log /var/log/nginx/access.log main2;log_format json '{"@timestamp":"$time_iso8601",''"@version":"1",''"client":"$remote_addr",''"url":"$uri",''"status":"$status",''"domain":"$host",''"host":"$server_addr",''"size":$body_bytes_sent,''"responsetime":$request_time,''"referer": "$http_referer",''"ua": "$http_user_agent"''}';access_log /var/log/nginx/access.log json;sendfile on;#tcp_nopush on;keepalive_timeout 65;#gzip on;upstream elasticsearch{zone elasticsearch 64K;server 192.168.83.11:9200;server 192.168.83.12:9200;server 192.168.83.13:9200;}server{listen 8080;server_name localhost;location /{proxy_pass http://elasticsearch;root html;index index.html index.htm;}}include /etc/nginx/conf.d/*.conf;

}

3.2安裝filebeat

[root@elk2 ~]# wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.0.0-x86_64.rpm

[root@elk2 ~]# rpm -ivh filebeat-6.0.0-x86_64.rpm

3.3 修改配置文件filebeat.yml

[root@elk2 ~]# vim /etc/filebeat/filebeat.ymlenabled: truepaths:- /var/log/nginx/*.log

#-------------------------- Elasticsearch output ------------------------------

output.kafka:# Array of hosts to connect to.hosts: ["192.168.83.11:9092","192.168.83.12:9092","192.168.83.13:9092"] #145topic: "nginx-es"

3.4 啟動filebeat

[root@elk2 ~]# systemctl restart filebeat

4、所有組件部署完成之后,開始配置部署

4.1 在kafka上創建一個話題nginx-es

kafka-topics.sh --create --zookeeper 192.168.83.11:2181,192.168.83.12:2181,192.168.83.13:2181 --replication-factor 1 --partitions 1 --topic nginx-es

4.2 修改logstash的配置文件

[root@elk2 ~]# vim /etc/logstash/conf.d/nginxlog.conf

input{

kafka{topics=>"nginx-es"codec=>"json"decorate_events=>truebootstrap_servers=>"192.168.83.11:9092,192.168.83.12:9092,192.168.83.13:9092"}

}

output {elasticsearch {hosts=>["192.168.83.11:9200","192.168.83.12:9200","192.168.83.13:9200"]index=>'nginx-log-%{+YYYY-MM-dd}'}

}

重啟logstash

systemctl restart logstash

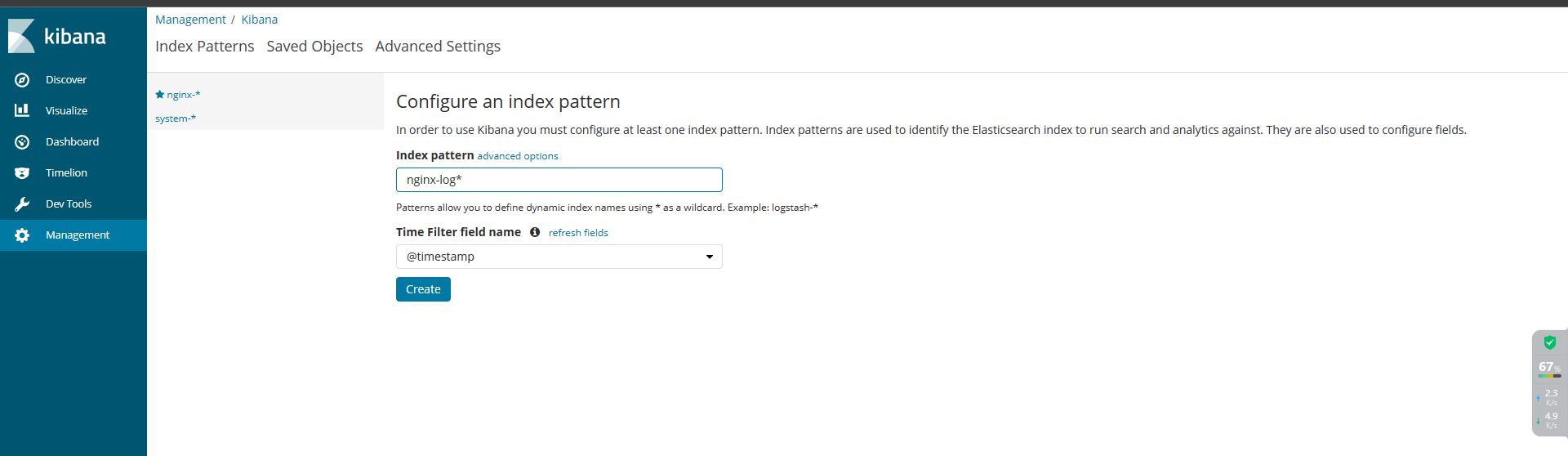

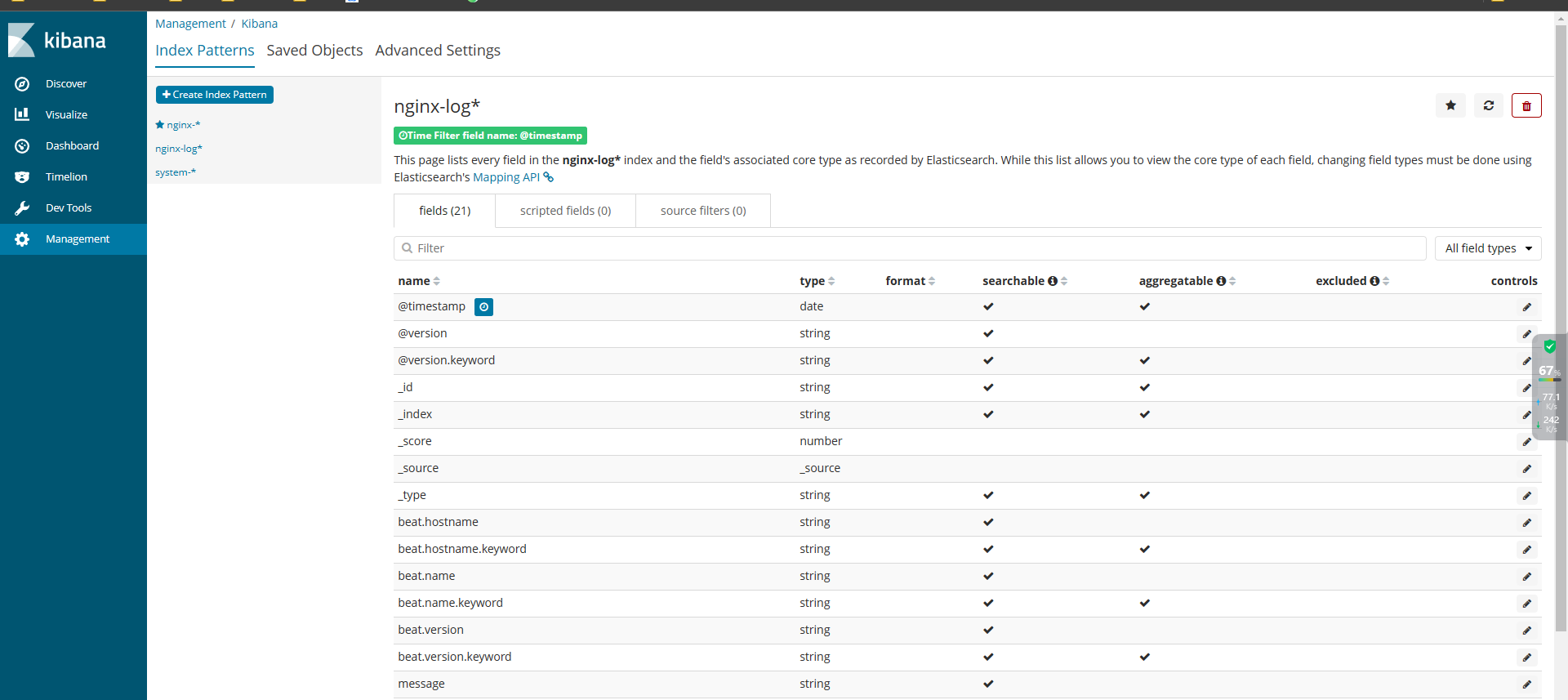

4.3 驗證網頁

】)

CMAKE)

)

![ceph mgr [errno 39] RBD image has snapshots (error deleting image from trash)](http://pic.xiahunao.cn/ceph mgr [errno 39] RBD image has snapshots (error deleting image from trash))