ant-research/scalelsd | DeepWiki

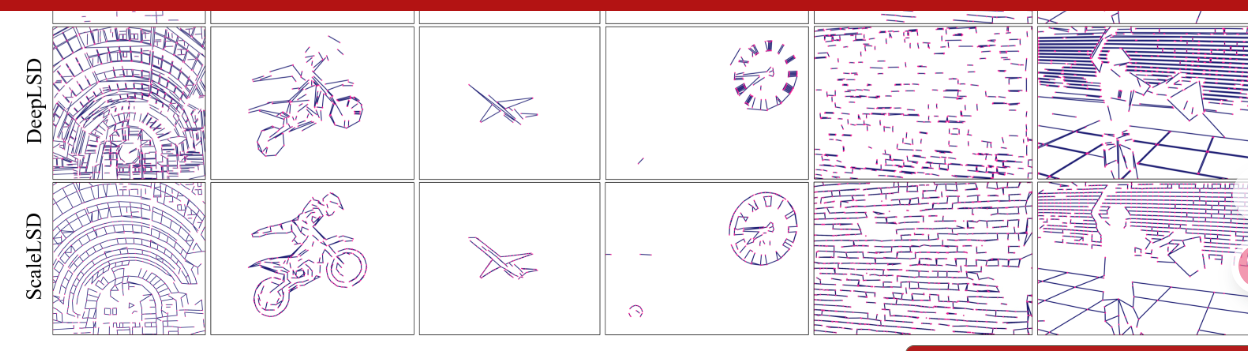

https://arxiv.org/html/2506.09369?_immersive_translate_auto_translate=1

https://gitee.com/njsgcs/scalelsd

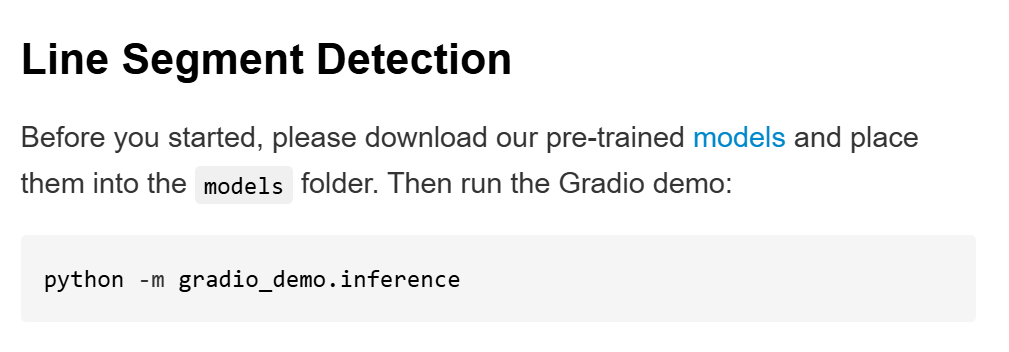

https://github.com/ant-research/scalelsd

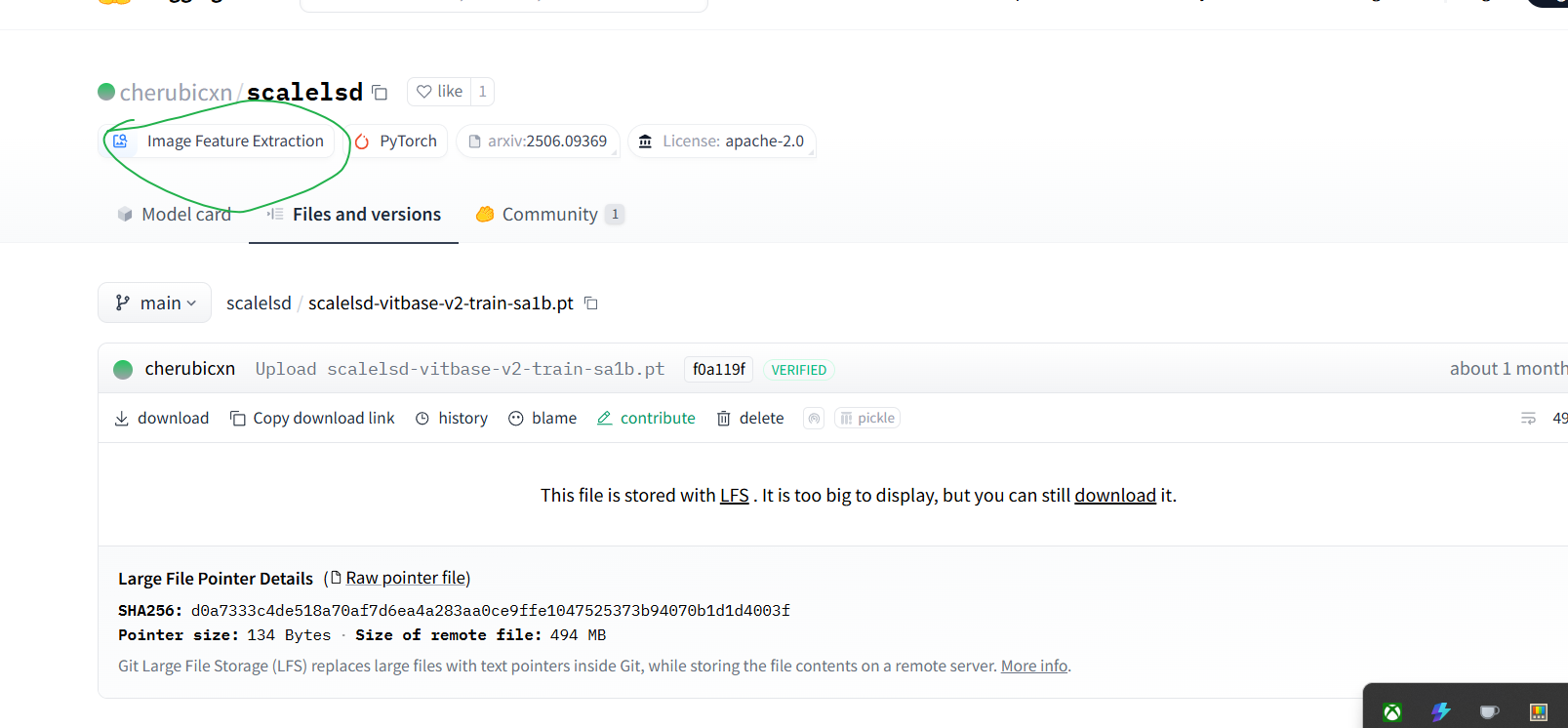

https://huggingface.co/cherubicxn/scalelsd

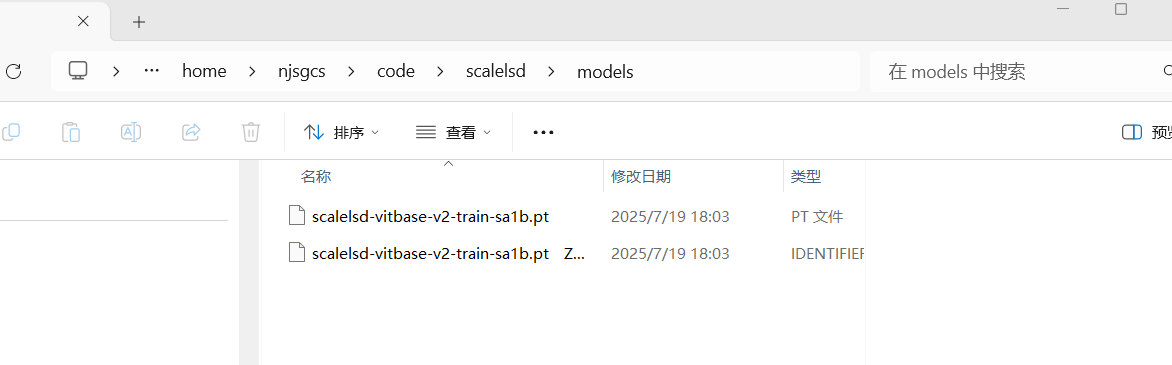

Model links:

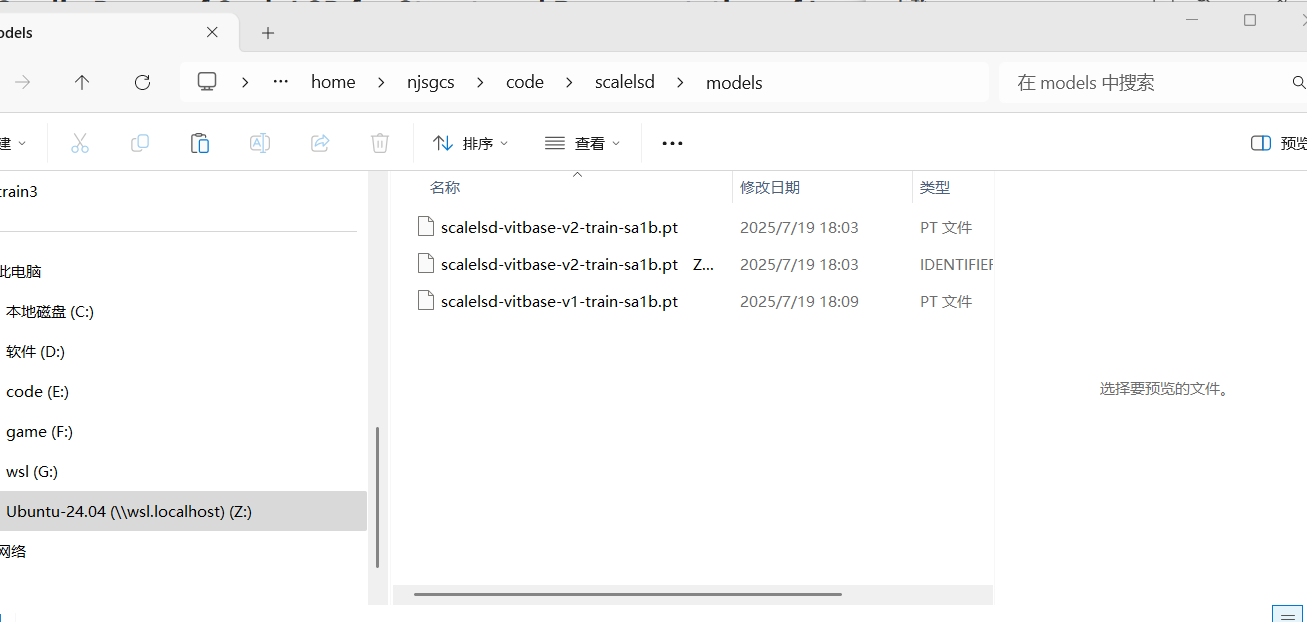

https://cdn-lfs-us-1.hf.co/repos/4b/b7/4bb773266cdc6bd3898cae785776f1dd52f835c2d4fcdf132ca4c487ecac7942/d0a7333c4de518a70af7d6ea4a283aa0ce9ffe1047525373b94070b1d1d4003f?response-content-disposition=attachment%3B+filename*%3DUTF-8%27%27scalelsd-vitbase-v2-train-sa1b.pt%3B+filename%3D%22scalelsd-vitbase-v2-train-sa1b.pt%22%3B&Expires=1752922960&Policy=eyJTdGF0ZW1lbnQiOlt7IkNvbmRpdGlvbiI6eyJEYXRlTGVzc1RoYW4iOnsiQVdTOkVwb2NoVGltZSI6MTc1MjkyMjk2MH19LCJSZXNvdXJjZSI6Imh0dHBzOi8vY2RuLWxmcy11cy0xLmhmLmNvL3JlcG9zLzRiL2I3LzRiYjc3MzI2NmNkYzZiZDM4OThjYWU3ODU3NzZmMWRkNTJmODM1YzJkNGZjZGYxMzJjYTRjNDg3ZWNhYzc5NDIvZDBhNzMzM2M0ZGU1MThhNzBhZjdkNmVhNGEyODNhYTBjZTlmZmUxMDQ3NTI1MzczYjk0MDcwYjFkMWQ0MDAzZj9yZXNwb25zZS1jb250ZW50LWRpc3Bvc2l0aW9uPSoifV19&Signature=fCSnqgEbqmYV7X3VhNo-uEz-ZO6gRvRUqtS4TzrEO2iSJ6U0LU%7EbrVwWwhc69FveoaXgoq7ikmJRWv7MZxomAF5qxPxQIr3Nhor1KyDeEp267ikFN6ASBAA8IcJ67unIWzT4d3CTrbNVh8wT0pn1JsL4gGK-Xl2A0x7%7ErVb9exw-8h7AUGDejkvg5yFbzrRIw6U4QqyuwOFpicMlZXNaPBPnjfyKuQmfdz4UzbZ4C5WeKKoUAa6sxsMTu-GEF4wv%7ETBbjQS1ev5ZQ2%7EYaZxZnICvEwcwdNxSpJPiZuJrrZq95xSOcTaTFORO-1wDT7zXJY9pdFPofzIV2CBv1i6AAQ__&Key-Pair-Id=K24J24Z295AEI9

https://cdn-lfs-us-1.hf.co/repos/4b/b7/4bb773266cdc6bd3898cae785776f1dd52f835c2d4fcdf132ca4c487ecac7942/a7eaabbfc5e79f8fc407251f923f791b39c739042eb585ca52bb85abe64c4ee7?response-content-disposition=attachment%3B+filename*%3DUTF-8%27%27scalelsd-vitbase-v1-train-sa1b.pt%3B+filename%3D%22scalelsd-vitbase-v1-train-sa1b.pt%22%3B&Expires=1752923360&Policy=eyJTdGF0ZW1lbnQiOlt7IkNvbmRpdGlvbiI6eyJEYXRlTGVzc1RoYW4iOnsiQVdTOkVwb2NoVGltZSI6MTc1MjkyMzM2MH19LCJSZXNvdXJjZSI6Imh0dHBzOi8vY2RuLWxmcy11cy0xLmhmLmNvL3JlcG9zLzRiL2I3LzRiYjc3MzI2NmNkYzZiZDM4OThjYWU3ODU3NzZmMWRkNTJmODM1YzJkNGZjZGYxMzJjYTRjNDg3ZWNhYzc5NDIvYTdlYWFiYmZjNWU3OWY4ZmM0MDcyNTFmOTIzZjc5MWIzOWM3MzkwNDJlYjU4NWNhNTJiYjg1YWJlNjRjNGVlNz9yZXNwb25zZS1jb250ZW50LWRpc3Bvc2l0aW9uPSoifV19&Signature=rtwQzCIgS2POke1hHELX2G7I-9MbRqzH51BWuHemwviaTAHyp1HaxOf3e%7EX1bGOld1k57ZR%7EHzeWh6D1PRLpaO-aG%7EgyhmCydrSIgkUzHd9VB2vGdGkoky8l9vP3HpUUkt7ERXCLInynopDd5191g3diZ0WyYy05d69Qel1ld-vhWlBOFrp8BzY%7EzLWnfITlCu0PGSlexlM86P9k0HaWor3vwqUYFJ0b0FVJ7DSrbRKIayP-S4M1fR4amT17tCXNDn3Jb-B2z4Eu1un-YpP67GeiItMuS0RtB4qkjHbJpVFKIQSvP0i08qb0HQuxiiN%7Ez%7EepWWWW1XxraAaWhbFcWA__&Key-Pair-Id=K24J24Z295AEI9
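Both checkpoint links above are signed CDN URLs that carry an `Expires=` query parameter (for example `Expires=1752922960`), so they may stop working after that time. A minimal sketch for checking this before downloading, assuming the parameter is a Unix timestamp in seconds; the helper names `signed_url_expiry` and `is_expired` are hypothetical and are not part of ScaleLSD or Hugging Face tooling:

```python
from datetime import datetime, timezone
from urllib.parse import parse_qs, urlsplit


def signed_url_expiry(url):
    """Return the expiry time encoded in a signed URL, or None if absent.

    Assumption: `Expires` holds a Unix timestamp in seconds, as it appears
    to in the links above.
    """
    query = parse_qs(urlsplit(url).query)
    values = query.get("Expires")
    if not values:
        return None
    return datetime.fromtimestamp(int(values[0]), tz=timezone.utc)


def is_expired(url, now=None):
    """True if the URL has an `Expires` value that is not in the future."""
    expiry = signed_url_expiry(url)
    if expiry is None:
        return False  # no expiry information, so nothing to check
    now = now or datetime.now(timezone.utc)
    return now >= expiry
```

If a link has expired, a fresh one would have to be obtained from the Hugging Face repository page linked earlier.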

python predictor/predict.py -i Drawing_Annotation_Recognition8.v1i.yolov12/test/images/Snipaste_2025-07-12_19-54-54_png.rf.a562ea098219605eff9cb4ce58f09d0e.jpg
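The command above processes a single image. To run it over a whole test folder, one option is a small wrapper that builds one command per image. This is only a sketch: it assumes nothing about `predictor/predict.py` beyond the `-i <image>` flag used above, and the function names and the suffix list are hypothetical.

```python
import subprocess
import sys
from pathlib import Path

# Assumption: only these image types are of interest.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def build_predict_commands(image_dir, script="predictor/predict.py"):
    """Build one `python predictor/predict.py -i <image>` command per image."""
    images = sorted(
        p for p in Path(image_dir).iterdir()
        if p.suffix.lower() in IMAGE_SUFFIXES
    )
    return [[sys.executable, script, "-i", str(p)] for p in images]


def run_all(image_dir):
    """Run the predictor on every image, stopping at the first failure."""
    for cmd in build_predict_commands(image_dir):
        subprocess.run(cmd, check=True)

# Example call, run from the repository root:
# run_all("Drawing_Annotation_Recognition8.v1i.yolov12/test/images")
```

Launching a new process per image reloads the model each time, so this is convenient for a few test images rather than efficient for large batches.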

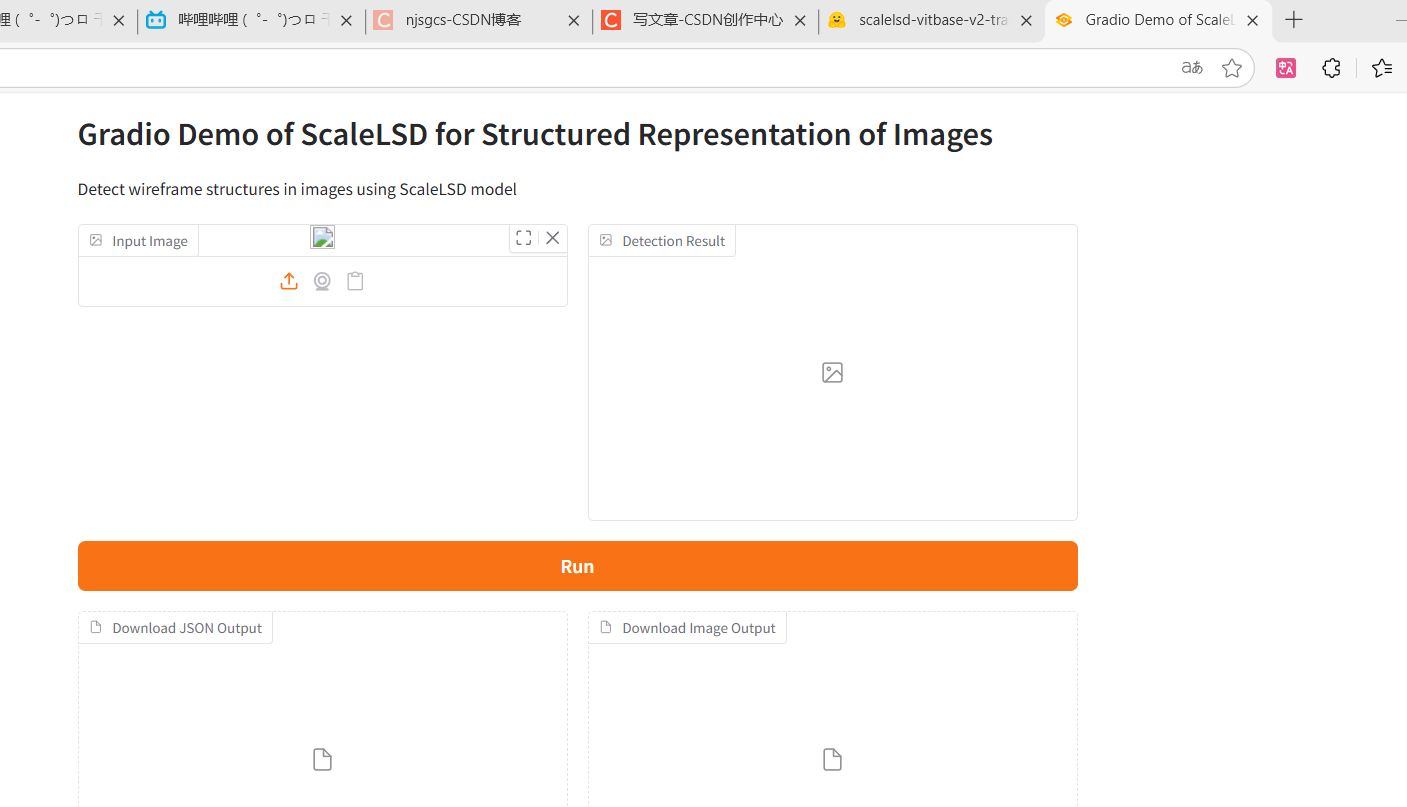

This interface gives me PTSD: the moment I see it, I think of AI image generation.

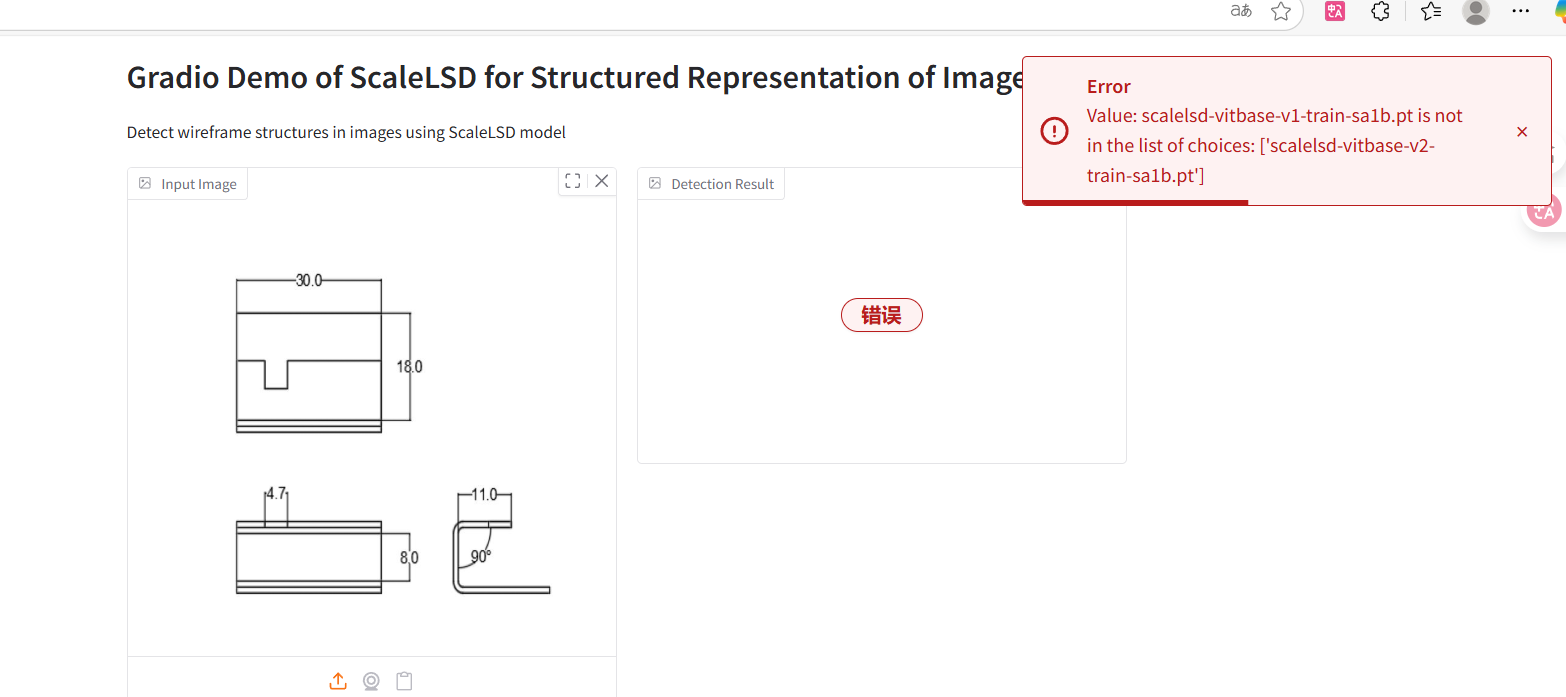

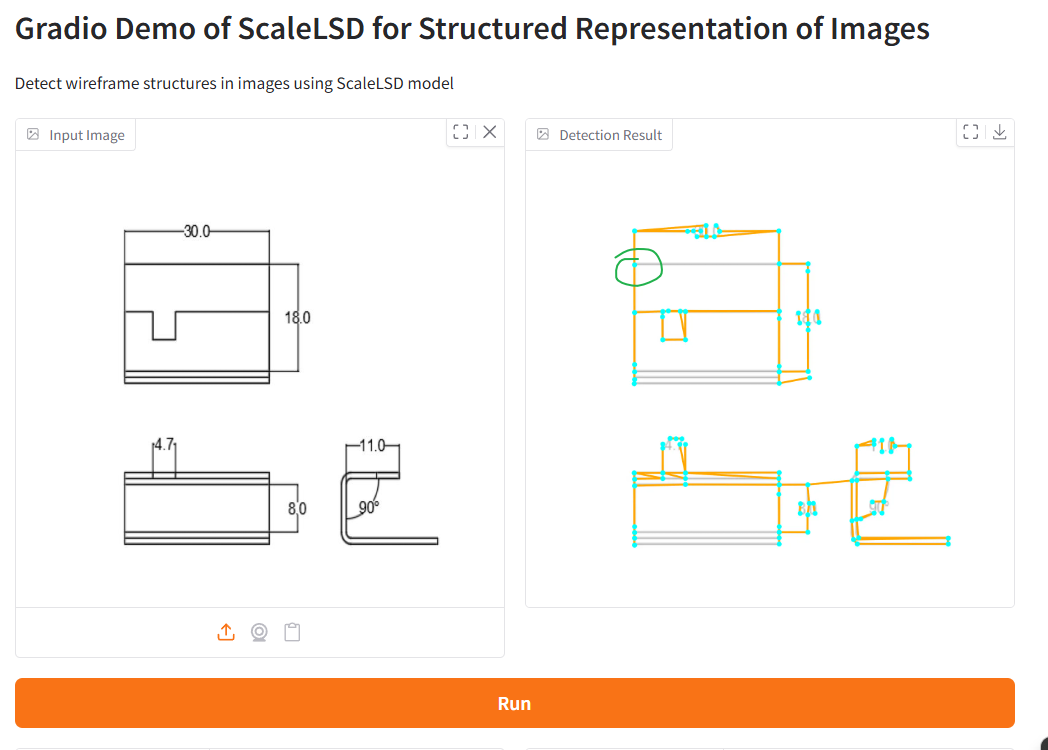

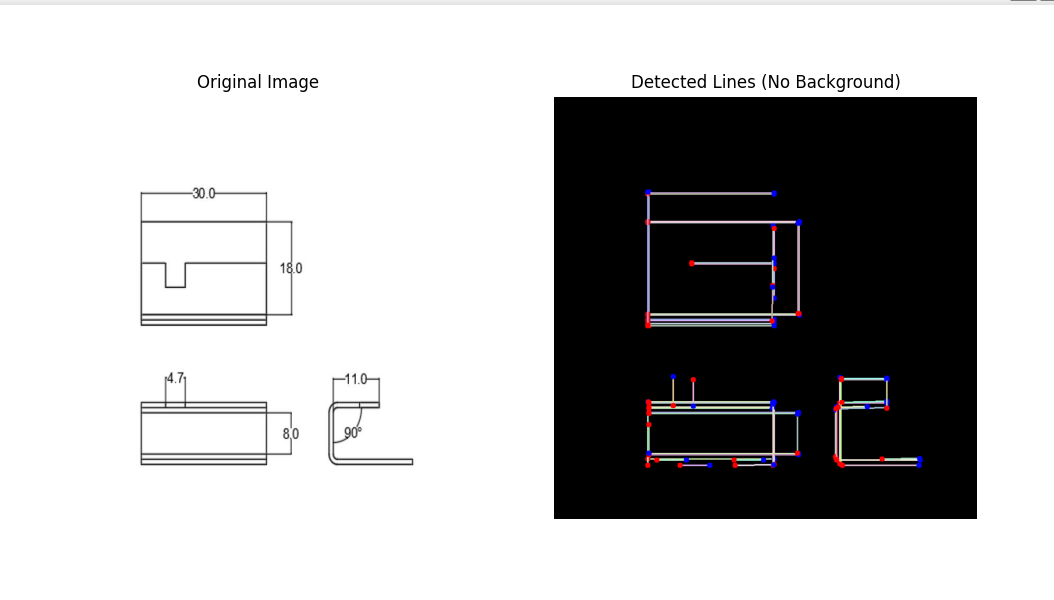

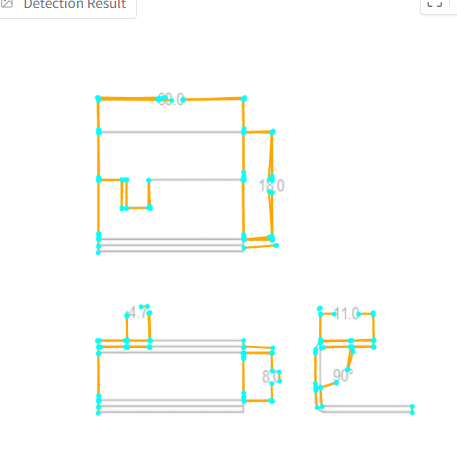

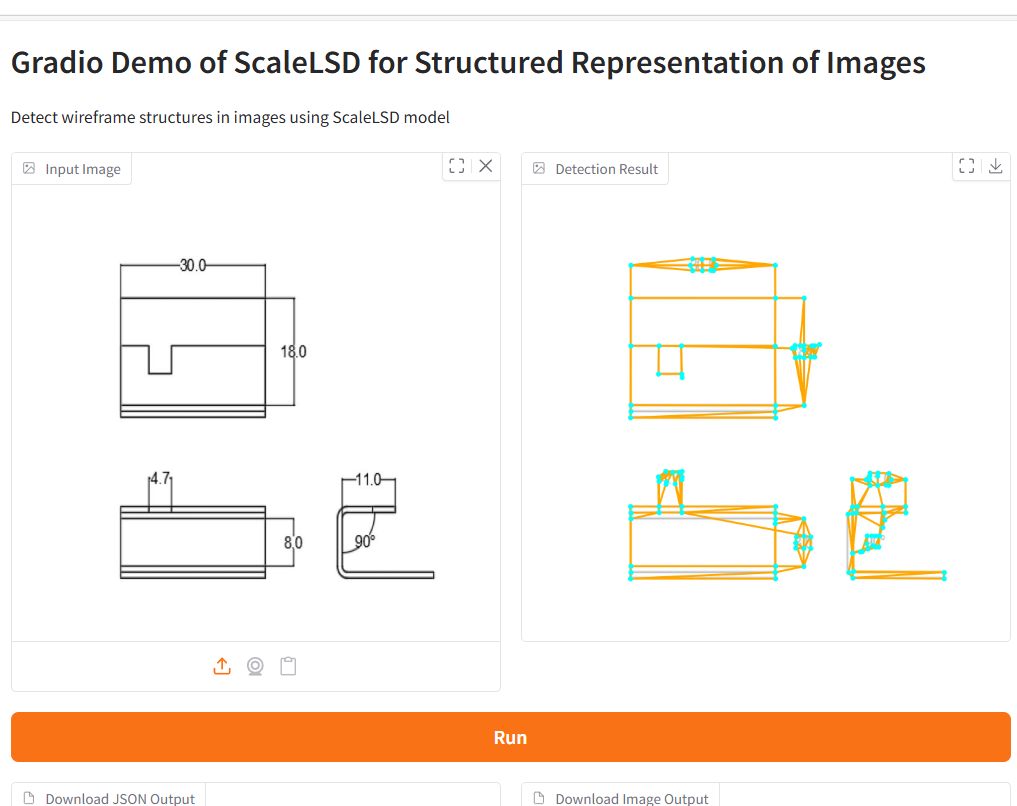

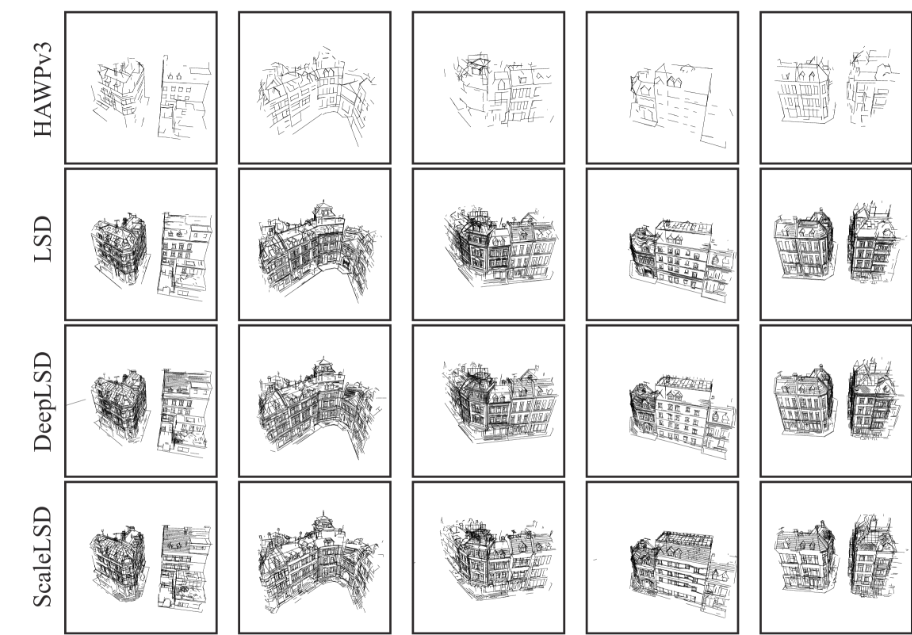

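The result is better than Hough-transform line detection: the model recognizes that this part is split into two segments. However, it also hallucinates lines that are not there and misses some that are.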

The model has an encoder.

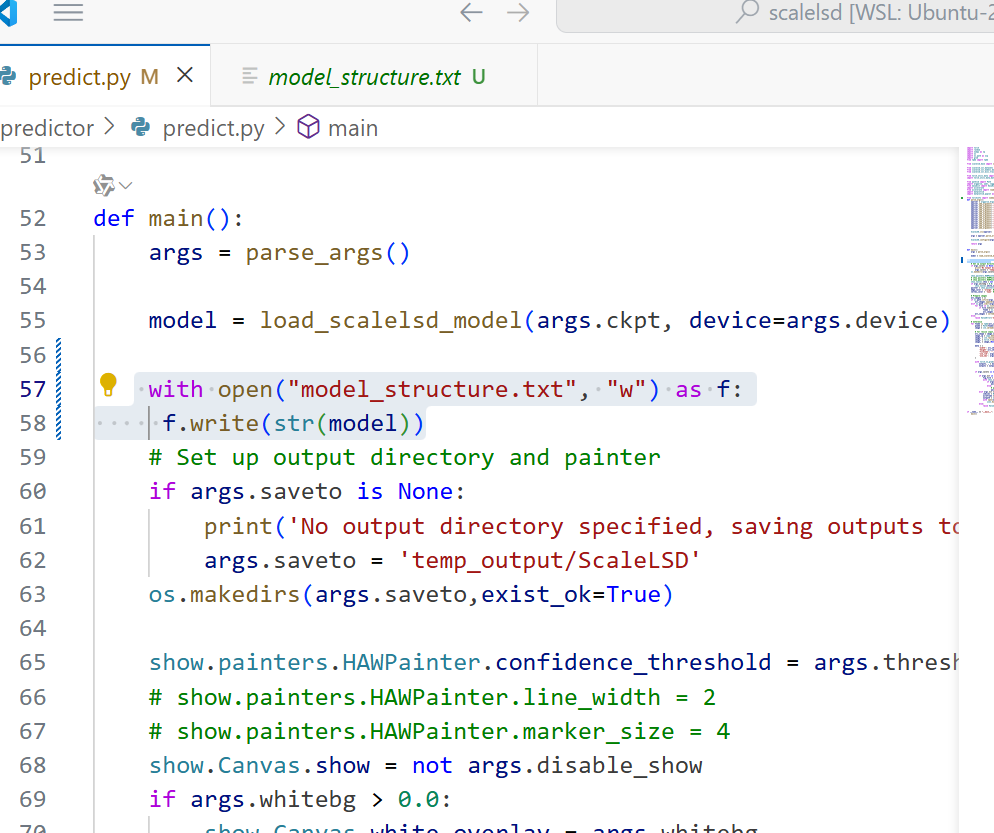

Inspecting this model with torchinfo raises an error. It appears to be a dynamic model: its code contains `if` branches.

with open("model_structure.txt", "w") as f: f.write(str(model))

ScaleLSD((backbone): DPTFieldModel((pretrained): Module((model): VisionTransformer((patch_embed): HybridEmbed((backbone): ResNetV2((stem): Sequential((conv): StdConv2dSame(3, 64, kernel_size=(7, 7), stride=(2, 2), bias=False)(norm): GroupNormAct(32, 64, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(pool): MaxPool2dSame(kernel_size=(3, 3), stride=(2, 2), padding=(0, 0), dilation=(1, 1), ceil_mode=False))(stages): Sequential((0): ResNetStage((blocks): Sequential((0): Bottleneck((downsample): DownsampleConv((conv): StdConv2dSame(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): Identity()))(conv1): StdConv2dSame(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 64, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 64, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(1): Bottleneck((conv1): StdConv2dSame(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 64, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 64, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(2): Bottleneck((conv1): StdConv2dSame(256, 64, kernel_size=(1, 
1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 64, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 64, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))))(1): ResNetStage((blocks): Sequential((0): Bottleneck((downsample): DownsampleConv((conv): StdConv2dSame(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)(norm): GroupNormAct(32, 512, eps=1e-05, affine=True(drop): Identity()(act): Identity()))(conv1): StdConv2dSame(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 128, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(128, 128, kernel_size=(3, 3), stride=(2, 2), bias=False)(norm2): GroupNormAct(32, 128, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 512, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(1): Bottleneck((conv1): StdConv2dSame(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 128, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 128, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 512, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(2): Bottleneck((conv1): StdConv2dSame(512, 
128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 128, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 128, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 512, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(3): Bottleneck((conv1): StdConv2dSame(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 128, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 128, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 512, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))))(2): ResNetStage((blocks): Sequential((0): Bottleneck((downsample): DownsampleConv((conv): StdConv2dSame(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)(norm): GroupNormAct(32, 1024, eps=1e-05, affine=True(drop): Identity()(act): Identity()))(conv1): StdConv2dSame(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(256, 256, kernel_size=(3, 3), stride=(2, 2), bias=False)(norm2): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 1024, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(1): 
Bottleneck((conv1): StdConv2dSame(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 1024, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(2): Bottleneck((conv1): StdConv2dSame(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 1024, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(3): Bottleneck((conv1): StdConv2dSame(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 1024, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(4): Bottleneck((conv1): StdConv2dSame(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): 
ReLU(inplace=True))(conv2): StdConv2dSame(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 1024, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(5): Bottleneck((conv1): StdConv2dSame(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 1024, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(6): Bottleneck((conv1): StdConv2dSame(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 1024, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(7): Bottleneck((conv1): StdConv2dSame(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): 
Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 1024, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True))(8): Bottleneck((conv1): StdConv2dSame(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm1): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv2): StdConv2dSame(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(norm2): GroupNormAct(32, 256, eps=1e-05, affine=True(drop): Identity()(act): ReLU(inplace=True))(conv3): StdConv2dSame(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)(norm3): GroupNormAct(32, 1024, eps=1e-05, affine=True(drop): Identity()(act): Identity())(drop_path): Identity()(act3): ReLU(inplace=True)))))(norm): Identity()(head): ClassifierHead((global_pool): SelectAdaptivePool2d(pool_type=, flatten=Identity())(drop): Dropout(p=0.0, inplace=False)(fc): Identity()(flatten): Identity()))(proj): Conv2d(1024, 768, kernel_size=(1, 1), stride=(1, 1)))(pos_drop): Dropout(p=0.0, inplace=False)(patch_drop): Identity()(norm_pre): Identity()(blocks): Sequential((0): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity())(1): Block((norm1): 
LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity())(2): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity())(3): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, 
out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity())(4): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity())(5): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity())(6): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, 
inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity())(7): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity())(8): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, 
inplace=False))(ls2): Identity()(drop_path2): Identity())(9): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity())(10): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity())(11): Block((norm1): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(attn): Attention((qkv): Linear(in_features=768, out_features=2304, bias=True)(q_norm): Identity()(k_norm): Identity()(attn_drop): Dropout(p=0.0, inplace=False)(norm): Identity()(proj): Linear(in_features=768, out_features=768, bias=True)(proj_drop): Dropout(p=0.0, inplace=False))(ls1): Identity()(drop_path1): Identity()(norm2): LayerNorm((768,), 
eps=1e-06, elementwise_affine=True)(mlp): Mlp((fc1): Linear(in_features=768, out_features=3072, bias=True)(act): GELU(approximate='none')(drop1): Dropout(p=0.0, inplace=False)(norm): Identity()(fc2): Linear(in_features=3072, out_features=768, bias=True)(drop2): Dropout(p=0.0, inplace=False))(ls2): Identity()(drop_path2): Identity()))(norm): LayerNorm((768,), eps=1e-06, elementwise_affine=True)(fc_norm): Identity()(head_drop): Dropout(p=0.0, inplace=False)(head): Linear(in_features=768, out_features=1000, bias=True))(act_postprocess1): Sequential((0): Identity()(1): Identity()(2): Identity())(act_postprocess2): Sequential((0): Identity()(1): Identity()(2): Identity())(act_postprocess3): Sequential((0): ProjectReadout((project): Sequential((0): Linear(in_features=1536, out_features=768, bias=True)(1): GELU(approximate='none')))(1): Transpose()(2): Unflatten(dim=2, unflattened_size=torch.Size([24, 24]))(3): Conv2d(768, 768, kernel_size=(1, 1), stride=(1, 1)))(act_postprocess4): Sequential((0): ProjectReadout((project): Sequential((0): Linear(in_features=1536, out_features=768, bias=True)(1): GELU(approximate='none')))(1): Transpose()(2): Unflatten(dim=2, unflattened_size=torch.Size([24, 24]))(3): Conv2d(768, 768, kernel_size=(1, 1), stride=(1, 1))(4): Conv2d(768, 768, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))))(scratch): Module((layer1_rn): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(layer2_rn): Conv2d(512, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(layer3_rn): Conv2d(768, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(layer4_rn): Conv2d(768, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(refinenet1): FeatureFusionBlock_custom((out_conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))(resConfUnit1): ResidualConvUnit_custom((conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(conv2): Conv2d(256, 256, kernel_size=(3, 
3), stride=(1, 1), padding=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(activation): ReLU()(skip_add): FloatFunctional((activation_post_process): Identity()))(resConfUnit2): ResidualConvUnit_custom((conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(activation): ReLU()(skip_add): FloatFunctional((activation_post_process): Identity()))(skip_add): FloatFunctional((activation_post_process): Identity()))(refinenet2): FeatureFusionBlock_custom((out_conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))(resConfUnit1): ResidualConvUnit_custom((conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(activation): ReLU()(skip_add): FloatFunctional((activation_post_process): Identity()))(resConfUnit2): ResidualConvUnit_custom((conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(activation): ReLU()(skip_add): FloatFunctional((activation_post_process): Identity()))(skip_add): FloatFunctional((activation_post_process): Identity()))(refinenet3): 
FeatureFusionBlock_custom((out_conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))(resConfUnit1): ResidualConvUnit_custom((conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(activation): ReLU()(skip_add): FloatFunctional((activation_post_process): Identity()))(resConfUnit2): ResidualConvUnit_custom((conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(activation): ReLU()(skip_add): FloatFunctional((activation_post_process): Identity()))(skip_add): FloatFunctional((activation_post_process): Identity()))(refinenet4): FeatureFusionBlock_custom((out_conv): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))(resConfUnit1): ResidualConvUnit_custom((conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(activation): ReLU()(skip_add): FloatFunctional((activation_post_process): Identity()))(resConfUnit2): ResidualConvUnit_custom((conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(bn2): 
BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)(activation): ReLU()(skip_add): FloatFunctional((activation_post_process): Identity()))(skip_add): FloatFunctional((activation_post_process): Identity()))(output_conv): Sequential((0): Conv2d(256, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(1): ReLU(inplace=True)(2): MultitaskHead((heads): ModuleList((0): Sequential((0): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(1): ReLU(inplace=True)(2): Conv2d(32, 3, kernel_size=(1, 1), stride=(1, 1)))(1-2): 2 x Sequential((0): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(1): ReLU(inplace=True)(2): Conv2d(32, 1, kernel_size=(1, 1), stride=(1, 1)))(3-4): 2 x Sequential((0): Conv2d(128, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))(1): ReLU(inplace=True)(2): Conv2d(32, 2, kernel_size=(1, 1), stride=(1, 1))))))))(loss): CrossEntropyLoss()(bce_loss): BCEWithLogitsLoss()

)
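The flattened dump above is hard to read by eye. One way to get an overview is to count how often each module class appears in the saved text. The sketch below is only a rough summary, assuming the dump keeps PyTorch's usual `(name): ClassName(` pattern for child modules; the function name `count_module_types` is hypothetical.

```python
import re
from collections import Counter

# Matches child entries such as "(conv1): StdConv2dSame(" and collapsed
# groups such as "(1-2): 2 x Sequential(" in a printed module tree.
CHILD_PATTERN = re.compile(r"\([\w-]+\): (?:(\d+) x )?(\w+)\(")


def count_module_types(dump):
    """Count module class names in the text produced by str(model).

    Limitations: the root module has no "(name): " prefix and is not
    counted, and children nested inside an "N x" group are counted once,
    not N times.
    """
    counts = Counter()
    for repeat, class_name in CHILD_PATTERN.findall(dump):
        counts[class_name] += int(repeat) if repeat else 1
    return counts

# Example usage with the file written earlier:
# text = open("model_structure.txt").read()
# for name, n in count_module_types(text).most_common(10):
#     print(name, n)
```

Because it works on the saved text rather than on the live model, this summary does not require a forward pass.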
