Andrew Ng LLM: Hugging Face (Bilibili)

Contents

0. Hugging Face: finding open-source models for your needs

1. Whisper model: speech recognition

2. blenderbot chatbot

3. Text translation model (translator)

4. BART model summarizer

5. sentence-transformers: sentence similarity

0. Hugging Face: finding open-source models for your needs

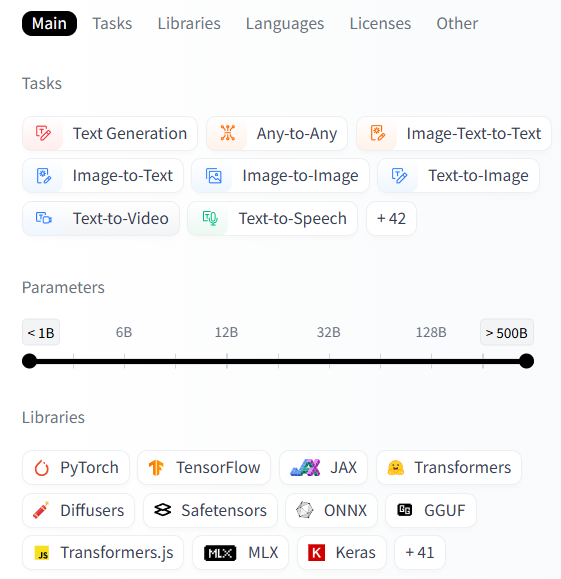

https://huggingface.co/models is where you can look up models on the Hugging Face website.

Filter by task (CV, NLP, multimodal, and so on), language, and other criteria.

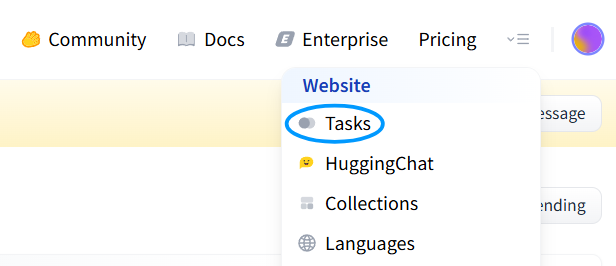

You can also read about the various machine learning tasks under Tasks in the upper-right corner.


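pipeline is a high-level interface from the Hugging Face Transformers library. It lets you quickly call pretrained models for common tasks such as text classification, translation, summarization, question answering, and speech recognition. Import it as follows:

    from transformers import pipeline

The examples below call it several times. On first use the system downloads and saves the model, so it is a good idea to set the HF_HOME environment variable beforehand to the path where you want models stored, for example 'D:\huggingface_cache'.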
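If you would rather not touch the system settings, the variable can also be set from Python. The snippet below is a minimal sketch: `set_hf_home` is a hypothetical helper name, and it assumes the variable is set before `transformers` is first imported, because the cache location is read at import time.

```python
import os


def set_hf_home(cache_dir: str) -> str:
    """Point the Hugging Face cache at cache_dir for this process only."""
    os.environ["HF_HOME"] = cache_dir
    return os.environ["HF_HOME"]


# Example path taken from the note above; replace it with your own directory.
set_hf_home(r"D:\huggingface_cache")

# Import transformers only after HF_HOME has been set:
# from transformers import pipeline
```

This only affects the current Python process; a system-wide environment variable is still the more durable choice.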

1. Whisper model: speech recognition

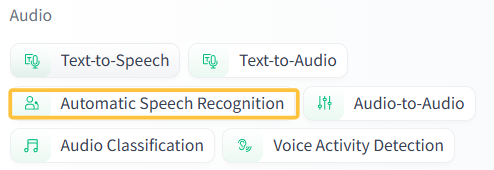

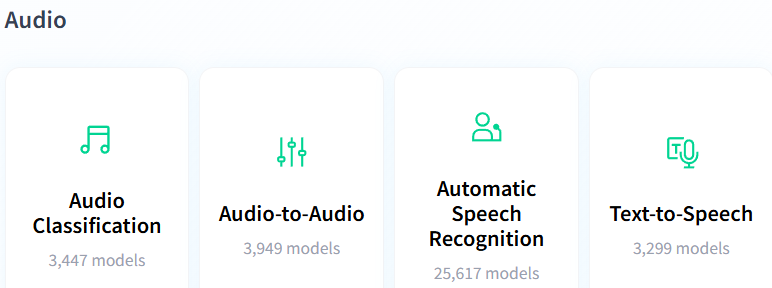

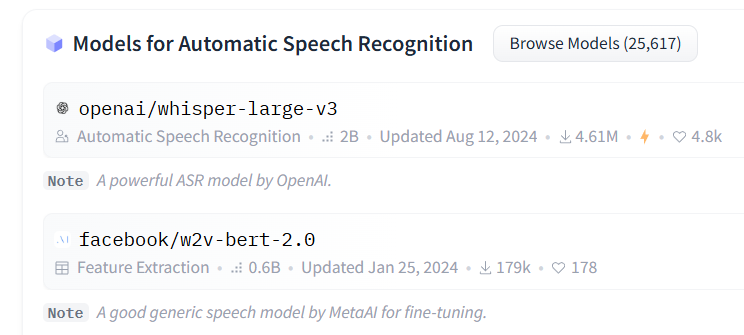

For example, pick the Automatic Speech Recognition task under Tasks.

The right side of the task page lists some models and datasets.

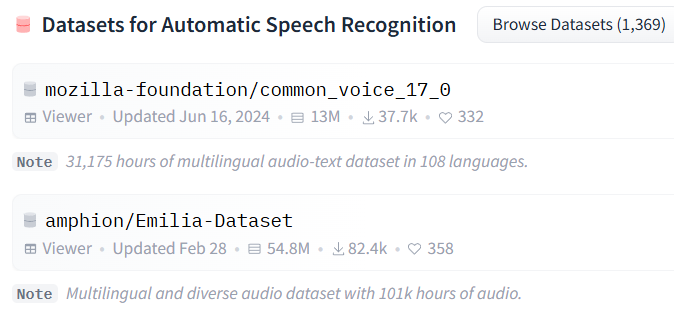

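After choosing the first model, the one from OpenAI, the Use this model button in the upper-right corner shows how to call it.

To read audio files you also need to install ffmpeg. The following is a release build:

https://www.gyan.dev/ffmpeg/builds/ffmpeg-release-essentials.zip

Then add the path of the ffmpeg/bin folder to the PATH system environment variable,

and run ffmpeg -version in cmd to verify that the installation succeeded.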
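The same check can be done from Python before loading the model. This is a small sketch using only the standard library; `ffmpeg_version` is a hypothetical helper name, not part of any Hugging Face API.

```python
import shutil
import subprocess
from typing import Optional


def ffmpeg_version() -> Optional[str]:
    """Return the first line of `ffmpeg -version`, or None if ffmpeg is not on PATH."""
    exe = shutil.which("ffmpeg")
    if exe is None:
        return None
    done = subprocess.run([exe, "-version"], capture_output=True, text=True, check=False)
    lines = done.stdout.splitlines()
    return lines[0] if lines else None


version = ffmpeg_version()
print(version or "ffmpeg not found on PATH")
```

If it prints the not-found message, recheck the PATH entry and open a new terminal so the change takes effect.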

然后就可以進行直接調用? model="openai/whisper-large-v3"

from transformers import pipeline

pipe = pipeline("automatic-speech-recognition",model="openai/whisper-large-v3",framework="pt", # 使用 PyTorch 框架chunk_length_s=30 # 每段音頻的最大長度(秒))

result = pipe("audio.m4a") # 自動識別語言轉換

print(result)

# {'text': '我愛南京大學。'}result = pipe("audio.m4a",generate_kwargs={"task": "translate"}) # 音頻轉文字并翻譯為英文

print(result)

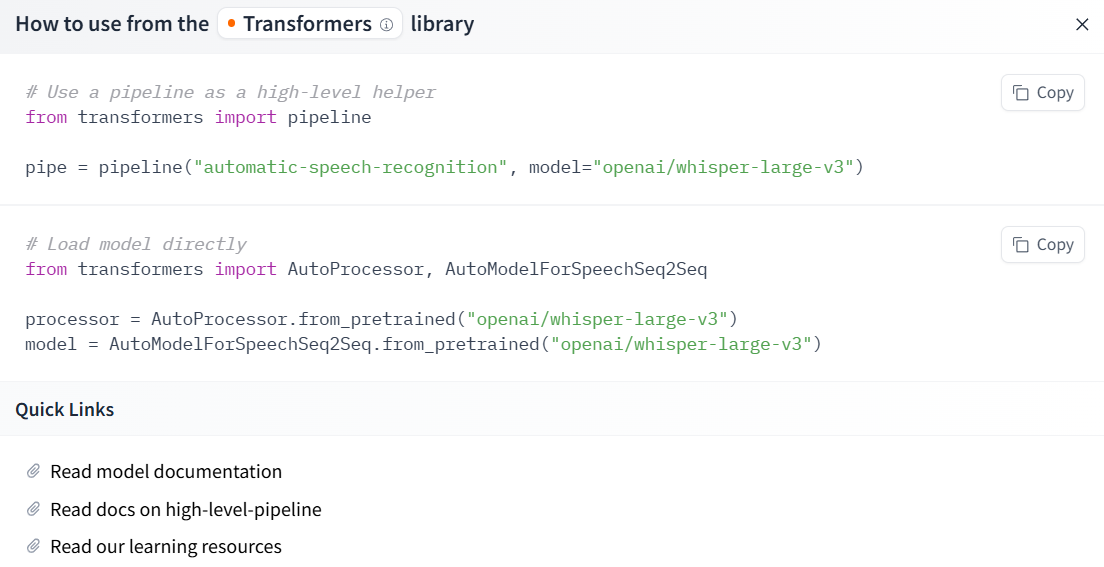

# {'text': ' I love Nanjing University.'}如果要設置一些其他的參數 可以看model的Usage解釋

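For example, inside generate_kwargs, language specifies the source language (it is predicted automatically if omitted), and the translate task translates the output into English.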
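As a rough illustration of how these two options combine, here is a sketch of a helper that assembles the dictionary. `build_generate_kwargs` is a hypothetical name introduced for this example; the "language" and "task" keys are the ones described above, and "chinese" is an assumed example value.

```python
from typing import Optional


def build_generate_kwargs(language: Optional[str] = None, translate: bool = False) -> dict:
    """Assemble generate_kwargs for a Whisper pipeline call.

    language: source language name such as "chinese"; None leaves detection to the model.
    translate: True requests English output; False keeps the spoken language.
    """
    kwargs = {"task": "translate" if translate else "transcribe"}
    if language is not None:
        kwargs["language"] = language
    return kwargs


print(build_generate_kwargs())
# {'task': 'transcribe'}
print(build_generate_kwargs(language="chinese", translate=True))
# {'task': 'translate', 'language': 'chinese'}

# With a loaded pipeline this would be passed as:
# result = pipe("audio.m4a", generate_kwargs=build_generate_kwargs("chinese", True))
```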

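You can also set other parameters, such as the output length, beam search, temperature, the no-speech threshold, and the log-probability threshold:

    generate_kwargs = {
        "max_new_tokens": 448,                            # maximum generation length
        "num_beams": 1,                                   # beam search width
        "condition_on_prev_tokens": False,                # whether to condition on previous tokens
        "temperature": (0.0, 0.2, 0.4, 0.6, 0.8, 1.0),    # temperatures controlling generation diversity
        "logprob_threshold": -1.0,                        # log-probability threshold
        "no_speech_threshold": 0.6,                       # silence threshold
        "return_timestamps": True,                        # return timestamps
    }

    # Call the pipeline
    result = pipe("audio.m4a", generate_kwargs=generate_kwargs)
    print(result)

2. blenderbot chatbot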

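https://huggingface.co/models?other=blenderbot&sort=trending lists some blenderbot models.

https://huggingface.co/facebook/blenderbot-400M-distill

Use the pretrained tokenizer and model: message -> tokenizer encode -> model -> tokenizer decode

At 400M parameters the model is small, so the replies are not very good. A simple call: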

    from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

    # Load the tokenizer and model
    tokenizer = AutoTokenizer.from_pretrained("facebook/blenderbot-400M-distill")
    model = AutoModelForSeq2SeqLM.from_pretrained("facebook/blenderbot-400M-distill")

    # User input
    user_message = "Good morning."

    # Encode the input
    inputs = tokenizer(user_message, return_tensors="pt").to(model.device)

    # Generate a reply
    outputs = model.generate(**inputs, max_new_tokens=40)

    # Decode the output
    print("🤖 Bot:", tokenizer.decode(outputs[0], skip_special_tokens=True))
    # 🤖 Bot: Good morning to you as well. How is your morning going so far? Do you have any plans?

To give the bot memory of the conversation, keep a string, context, that records the earlier dialogue and pass it along as part of the input.
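The context idea can be sketched without loading any model. In the example below, `Conversation` and `fake_generate` are hypothetical names; `fake_generate` is only a stand-in for the tokenizer, `model.generate`, and decode steps, and the "user:" / "bot:" prompt layout is an assumption, not a format the model requires.

```python
from typing import Callable, List, Tuple


class Conversation:
    """Accumulate dialogue turns and rebuild the full prompt on every request."""

    def __init__(self, sep: str = "</s>") -> None:
        self.sep = sep
        self.turns: List[Tuple[str, str]] = []  # (user text, bot reply) pairs

    def prompt(self, user_text: str) -> str:
        # Earlier turns first, then the new user message, ending with "bot:".
        history = "".join(f"user: {u}{self.sep}bot: {b}{self.sep}" for u, b in self.turns)
        return f"{history}user: {user_text}{self.sep}bot:"

    def ask(self, user_text: str, generate: Callable[[str], str]) -> str:
        reply = generate(self.prompt(user_text)).strip()
        self.turns.append((user_text, reply))
        return reply


def fake_generate(prompt: str) -> str:
    # Stand-in for the real model: reports how many user turns it was shown.
    return f"(reply to a prompt with {prompt.count('user:')} user turn(s))"


chat = Conversation()
first = chat.ask("Good morning.", fake_generate)
second = chat.ask("What can you do?", fake_generate)
print(second)
# (reply to a prompt with 2 user turn(s))
```

The second reply sees two user turns because the first exchange was replayed in the prompt, which is all that "memory" means here.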

You can also pass some extra parameters to the tokenizer and to the model:

    from transformers import AutoTokenizer, AutoModelForSeq2SeqLM
    import torch

    # Truncate from the left so the most recent turns survive when the context grows
    tokenizer = AutoTokenizer.from_pretrained(
        "facebook/blenderbot-400M-distill", truncation_side="left"
    )
    model = AutoModelForSeq2SeqLM.from_pretrained("facebook/blenderbot-400M-distill").to(
        "cuda" if torch.cuda.is_available() else "cpu"
    )

    # Turn separator
    eos = tokenizer.eos_token or "</s>"
    context = ""  # global record of the conversation so far

    def chat_once(user_text, max_new_tokens=80):
        global context  # use the global context declared above
        # Build the prompt: one user line, ending with "bot:" so the model continues from there
        context += f"user: {user_text}{eos}bot:"
        inputs = tokenizer(
            context,
            return_tensors="pt",
            truncation=True,
            max_length=128,  # this model accepts at most 128 input positions
        ).to(model.device)
        outputs = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            do_sample=True,
            temperature=0.7,
            top_p=0.9,
            num_beams=1,
            no_repeat_ngram_size=3,
        )
        # Decode the whole output, then take the text after the last "bot:" as the reply
        whole = tokenizer.decode(outputs[0], skip_special_tokens=True)
        reply = whole.split("bot:")[-1].strip()
        # Write this turn's reply back into the context, followed by the separator
        context += f" {reply}{eos}"
        return reply

    print("🤖", chat_once("Good morning."))
    print("🤖", chat_once("What can you do?"))
    print("🤖", chat_once("Recommend a movie for tonight."))
    '''
    🤖 Good morning! I hope you had a good day today. Do you have any plans?
    🤖 I am going to go on a vacation to visit my family! I can't wait!
    🤖 Good morning, what movie are you going to see? I've got plans for this weekend.
    '''

3. Text translation model (translator)

The NLLB-200 Distilled 600M model translates between 200 languages.

https://huggingface.co/facebook/nllb-200-distilled-600M?library=transformers

    from transformers import pipeline
    import torch

    # Load the translation model
    translator = pipeline(
        task="translation",
        model="facebook/nllb-200-distilled-600M",
        torch_dtype=torch.bfloat16,  # if your GPU supports bfloat16
    )

    # Text to translate
    text = """My puppy is adorable. Your kitten is cute. Her panda is friendly. His llama is thoughtful. We all have nice pets!"""

    # Translate: English -> French
    text_translated = translator(
        text,
        src_lang="eng_Latn",  # source language: English
        tgt_lang="fra_Latn",  # target language: French
    )
    print(text_translated)
    # [{'translation_text': 'Mon chiot est adorable, ton chaton est mignon, son panda est ami, sa lamme est attentive, nous avons tous de beaux animaux de compagnie.'}]

    # Translate: English -> Chinese
    text_translated = translator(
        text,
        src_lang="eng_Latn",  # source language: English
        tgt_lang="zho_Hans",  # target language: Chinese
    )
    print(text_translated)
    # [{'translation_text': '我的狗很可愛,你的小貓很可愛,她的熊貓很友好,他的拉馬很有心情.我們都有好物!'}]

4. BART model summarizer

https://huggingface.co/facebook/bart-large-cnn

    from transformers import pipeline
    import torch

    # Create the summarizer with the BART model
    summarizer = pipeline(
        task="summarization",
        model="facebook/bart-large-cnn",
        framework="pt",  # PyTorch only, so TensorFlow is not loaded
        torch_dtype=torch.bfloat16,
    )

    # Text to summarize (an introduction to Nanjing University)
    text = """Nanjing University, located in Nanjing, Jiangsu Province, China,
    is one of the oldest and most prestigious institutions of higher
    learning in China. It traces its history back to 1902 and has played
    a significant role in modern Chinese education. The university is
    known for its strong programs in sciences, engineering, humanities,
    and social sciences. It has a large number of distinguished alumni
    and is recognized as a member of China's Double First-Class initiative.
    The main campuses are located in Gulou and Xianlin, offering a modern
    learning and research environment for both domestic and international students."""

    # Run the summarizer with a length range
    summary = summarizer(
        text,
        min_length=10,
        max_length=80,
    )
    print(summary[0]["summary_text"])
    # Nanjing University is one of the oldest and most prestigious universities in China. It is known for its strong programs in sciences, engineering, and humanities.

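5. sentence-transformers: sentence similarity

https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2

Convert each sentence into an embedding, then measure similarity as the cosine of the angle between the vectors.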
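For reference, cosine similarity is the dot product of two vectors divided by the product of their lengths. The plain-Python sketch below shows the calculation on toy vectors; `cosine_similarity` is a hypothetical helper written for illustration, not the library's implementation.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between vectors a and b."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


print(cosine_similarity([1.0, 0.0], [1.0, 0.0]))  # same direction
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))  # orthogonal
print(cosine_similarity([3.0, 4.0], [4.0, 3.0]))  # somewhere in between
```

Values near 1 mean the two sentences point in almost the same direction in embedding space, and values near 0 mean they are unrelated.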

    from sentence_transformers import SentenceTransformer, util

    # Load the model
    model = SentenceTransformer("all-MiniLM-L6-v2")

    # First set of sentences to encode
    sentences1 = [
        'The cat sits outside',
        'A man is playing guitar',
        'The movies are awesome',
    ]
    embeddings1 = model.encode(sentences1, convert_to_tensor=True)  # encode into vectors
    print(embeddings1)

    # Second set of sentences to encode
    sentences2 = [
        'The dog plays in the garden',
        'A woman watches TV',
        'The new movie is so great',
    ]
    embeddings2 = model.encode(sentences2, convert_to_tensor=True)  # encode into vectors
    print(embeddings2)

    print(util.cos_sim(embeddings1, embeddings2))

6. Zero-Shot Audio Classification

https://huggingface.co/laion/clap-htsat-unfused

7. Text-to-Speech

https://huggingface.co/kakao-enterprise/vits-ljs
