Encoding and Decoding

The problem

For example:

https://www.baidu.com/s?wd=章若楠

https://www.baidu.com/s?wd=%E7%AB%A0%E8%8B%A5%E6%A5%A0

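The string of "gibberish" in the second URL is simply 章若楠 in percent-encoded form.

If we write 章若楠 directly into the URL, we get the error below (a UnicodeEncodeError, because the request line that urllib sends must be pure ASCII):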

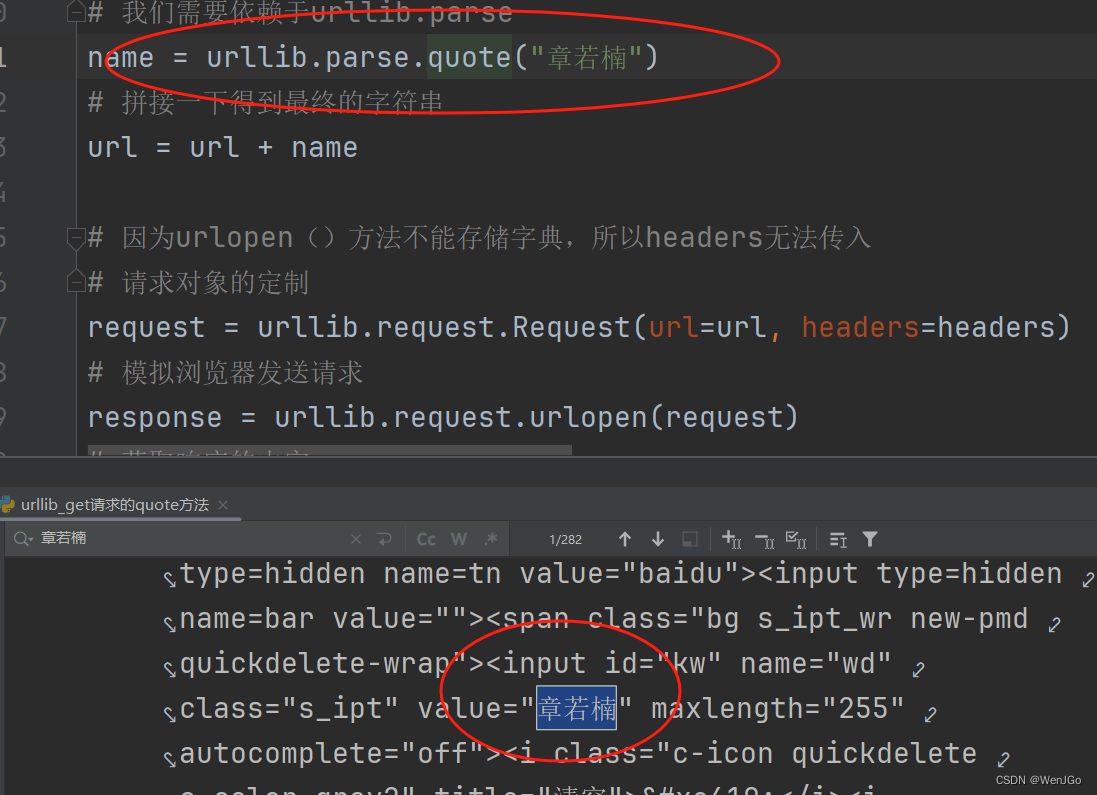

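So for GET requests, we can use the quote() method.

The quote() method for GET requests

urllib.parse.quote("章若楠") converts the Chinese characters in a parameter into percent-encoded UTF-8 bytes (the %E7%AB%A0... form shown above). Strictly speaking, this is URL (percent) encoding, not a "Unicode" encoding.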
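To see what quote() actually produces, the small check below encodes 章若楠, compares the result with the raw UTF-8 bytes of the string, and decodes it back with unquote(). It also reproduces the root cause of the earlier error: the characters cannot be represented in ASCII. This is only an illustration using standard-library calls; nothing here talks to Baidu.

```python
import urllib.parse

name = "章若楠"

# A URL must be ASCII, and these characters are not
try:
    name.encode("ascii")
except UnicodeEncodeError as exc:
    print("not ASCII:", exc.reason)  # not ASCII: ordinal not in range(128)

# quote() encodes the text as UTF-8 bytes, then writes each byte as %XX
encoded = urllib.parse.quote(name)
print(encoded)  # %E7%AB%A0%E8%8B%A5%E6%A5%A0

# The same bytes, shown directly as hex
print(name.encode("utf-8").hex().upper())  # E7ABA0E88BA5E6A5A0

# unquote() reverses the process
print(urllib.parse.unquote(encoded))  # 章若楠
```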

import urllib.request
import urllib.parse

url = "https://www.baidu.com/s?wd="
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"
}

# Convert the three characters 章若楠 into percent-encoded form
# This relies on urllib.parse
name = urllib.parse.quote("章若楠")

# Concatenate to get the final URL
url = url + name

# urlopen() has no parameter for a headers dict, so headers cannot be passed to it directly
# Customize a Request object instead
request = urllib.request.Request(url=url, headers=headers)

# Send the request, pretending to be a browser
response = urllib.request.urlopen(request)

# Read the response body
content = response.read().decode("utf-8")
print(content)

The query now returns results successfully.

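The urlencode() method for GET requests

Use case: multiple parameters

For example, the URL below carries two parameters, 章若楠 and 女. quote() would also work, but you would have to encode each value separately and join the pieces yourself, which is tedious:

url = "https://www.baidu.com/s?wd=章若楠&sex=女"

With urlencode(), it is much easier: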

import urllib.parse

data = {
    "wd": "章若楠",
    "sex": "女",
}
a = urllib.parse.urlencode(data)
print(a)  # wd=%E7%AB%A0%E8%8B%A5%E6%A5%A0&sex=%E5%A5%B3
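The comparison below makes the "tedious" alternative concrete: quoting each value by hand and joining the pairs with "&" gives the same string as urlencode() for these values, and parse_qs() turns the query string back into a dict. One caveat worth knowing: urlencode() uses quote_plus() by default, so for values containing spaces or "/" the two approaches would differ (spaces become "+" and "/" is escaped).

```python
import urllib.parse

data = {"wd": "章若楠", "sex": "女"}

# By hand: quote every value, then join the key=value pairs with "&"
manual = "&".join(f"{key}={urllib.parse.quote(value)}" for key, value in data.items())

# urlencode() does the same in one call
auto = urllib.parse.urlencode(data)

print(manual == auto)  # True
print(auto)  # wd=%E7%AB%A0%E8%8B%A5%E6%A5%A0&sex=%E5%A5%B3

# parse_qs() reverses it; each value becomes a list
print(urllib.parse.parse_qs(auto))  # {'wd': ['章若楠'], 'sex': ['女']}
```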

Complete code example:

import urllib.request
import urllib.parse

url = "https://www.baidu.com/s?"
data = {
    "wd": "章若楠",
    "sex": "女",
    "location": "浙江",
}
new_data = urllib.parse.urlencode(data)

# Full request URL
url = url + new_data
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"
}

# Customize the request object
request = urllib.request.Request(url=url, headers=headers)

# Send the request, pretending to be a browser
response = urllib.request.urlopen(request)

# Get the page source
content = response.read().decode("utf-8")
print(content)

POST request: Baidu Translate

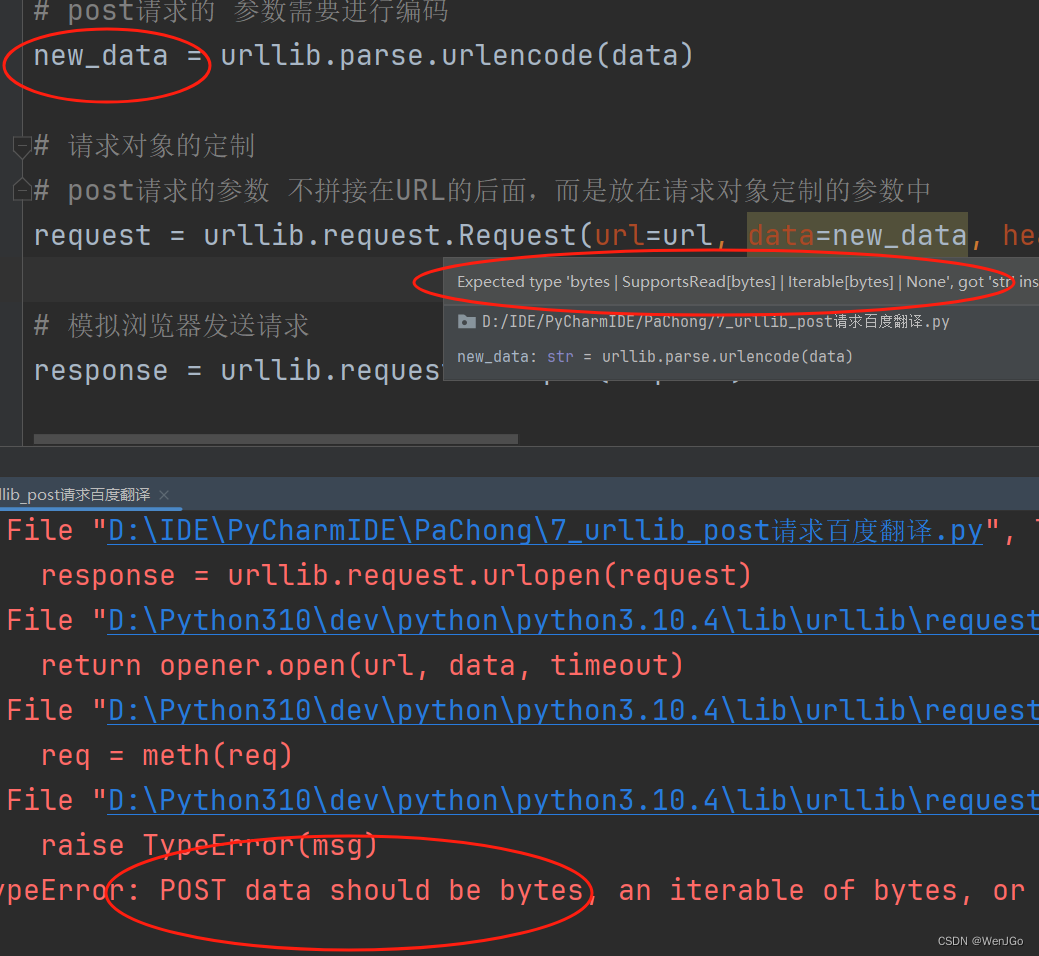

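(1) The POST parameters must be encoded:

new_data = urllib.parse.urlencode(data)

(2) The parameters are not appended to the URL; they go into the data argument when customizing the request object:

request = urllib.request.Request(url=url, data=new_data, headers=headers)

(3) After urlencode(), you must also call encode() to turn the string into bytes. Otherwise urlopen() raises a TypeError saying that POST data should be bytes:

new_data = urllib.parse.urlencode(data).encode("utf-8")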
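Step (3) can be checked without any network access: urllib validates the POST body before it opens a connection, so passing the str from urlencode() fails immediately, while the encoded bytes are accepted and turn the request into a POST. The local URL below is a placeholder assumption; no connection to it is ever made.

```python
import urllib.parse
import urllib.request

# Placeholder URL (assumption): the TypeError below is raised before any connection attempt
url = "http://127.0.0.1:9/sug"
data = {"kw": "spider"}

form = urllib.parse.urlencode(data)  # a str: 'kw=spider'

error = None
try:
    urllib.request.urlopen(urllib.request.Request(url=url, data=form))
except TypeError as exc:
    error = exc
    print("TypeError:", exc)

# Encoding to bytes fixes it; a Request that carries data is sent as POST
body = form.encode("utf-8")
request = urllib.request.Request(url=url, data=body)
print(request.get_method(), request.data)  # POST b'kw=spider'
```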

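Even after encoding the data with encode(), the printed content still looks garbled: the response is JSON text in which the Chinese characters typically appear as \uXXXX escape sequences. To read it, convert content from a string into a JSON object (a Python dict) with json.loads().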
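Why json.loads() "fixes" the garbled text can be shown with a made-up payload. The dict below is hypothetical and only shaped like a translation suggestion; it is not a captured Baidu response. json.dumps() escapes non-ASCII by default, which produces exactly the kind of \uXXXX text described above, and json.loads() turns it back into real characters.

```python
import json

# Hypothetical payload shaped like a suggestion result (NOT a real Baidu response)
payload = {"errno": 0, "data": [{"k": "spider", "v": "n. 蜘蛛"}]}

# ensure_ascii=True (the default) writes non-ASCII characters as \uXXXX escapes
content = json.dumps(payload)
print(content)  # the Chinese shows up only as escape sequences

# Parsing the JSON text restores the real characters
obj = json.loads(content)
print(obj["data"][0]["v"])  # n. 蜘蛛
```

To print the whole object readably, json.dumps(obj, ensure_ascii=False) keeps the Chinese characters as-is.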

The complete code:

import urllib.request
import urllib.parse
import json

# POST request
url = "https://fanyi.baidu.com/sug"
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"
}
data = {
    "kw": "spider",
}

# POST parameters must be encoded (and turned into bytes)
new_data = urllib.parse.urlencode(data).encode("utf-8")

# Customize the request object
# POST parameters are not appended to the URL; they go into the data argument of the request object
request = urllib.request.Request(url=url, data=new_data, headers=headers)

# Send the request, pretending to be a browser
response = urllib.request.urlopen(request)

# Get the response body
content = response.read().decode("utf-8")

# Convert the string into a JSON object
obj = json.loads(content)
print(obj)
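The same POST flow (urlencode, encode, Request with data, json.loads) can be exercised offline against a throwaway local server. In the sketch below, the handler name EchoFormHandler and the /sug path are hypothetical stand-ins for Baidu; the server simply echoes the decoded form and the Content-Type it received. It also shows that urllib sets Content-Type to application/x-www-form-urlencoded on its own when a body is given.

```python
import json
import threading
import urllib.parse
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class EchoFormHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the sug endpoint: echoes the form back as JSON."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        form = urllib.parse.parse_qs(self.rfile.read(length).decode("utf-8"))
        body = json.dumps({"received": form, "content_type": self.headers.get("Content-Type")}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the console quiet


# Port 0 lets the OS pick a free port
server = HTTPServer(("127.0.0.1", 0), EchoFormHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()

url = f"http://127.0.0.1:{server.server_port}/sug"
data = {"kw": "spider"}
new_data = urllib.parse.urlencode(data).encode("utf-8")
request = urllib.request.Request(url=url, data=new_data, headers={"User-Agent": "Mozilla/5.0"})

# Ignore proxy environment variables so the request really reaches the local server
opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
with opener.open(request) as response:
    obj = json.loads(response.read().decode("utf-8"))

server.shutdown()
server.server_close()
print(obj)
```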

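POST request: Baidu Translate detailed translation

Baidu Translate also offers a detailed translation; its location can be seen in the figure below.

After inspecting that request in the browser, we end up with the following code: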

import urllib.request
import urllib.parse
import json

# POST request
url = "https://fanyi.baidu.com/v2transapi?from=en&to=zh"
headers = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36"
}
# Form data copied from the request the browser sent
data = {
    "from": "en",
    "to": "zh",
    "query": "love",
    "transtype": "realtime",
    "simple_means_flag": "3",
    "sign": "198772.518981",
    "token": "cdd52406abbf29bdf0d424e2889d9724",
    "domain": "common",
    "ts": "1709212364268",
}

# POST parameters must be encoded (and turned into bytes)
new_data = urllib.parse.urlencode(data).encode("utf-8")

# Customize the request object
# POST parameters are not appended to the URL; they go into the data argument of the request object
request = urllib.request.Request(url=url, data=new_data, headers=headers)

# Send the request, pretending to be a browser
response = urllib.request.urlopen(request)

# Get the response data
content = response.read().decode("utf-8")

# Convert the string into a JSON object
obj = json.loads(content)
print(obj)

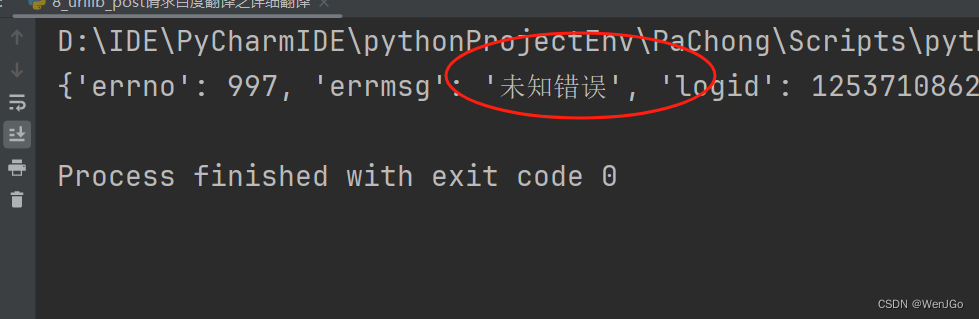

Running it gives the result below.

WTF, what happened? o((⊙﹏⊙))o

Caught by anti-scraping again? o(╥﹏╥)o

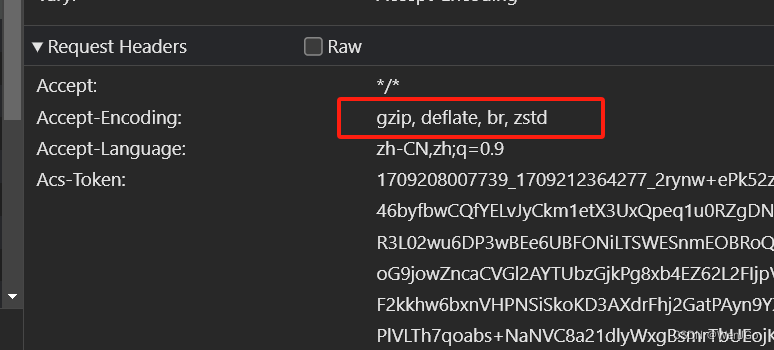

So let's look at the request headers.

A real browser sends all of these headers, while we sent only User-Agent, so we were obviously detected.
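Copying that many headers into a dict by hand is tedious. Below is a minimal sketch of a hypothetical helper, headers_from_devtools, that turns the "Name: value" lines copied from the browser's developer tools into a dict. It assumes one header per line and splits on the first colon only (so values such as URLs and cookies keep their colons). It drops HTTP/2 pseudo-headers like ":authority", and by default skips Content-Length (urllib computes it from the body, and a copied value only fits the original body) and Accept-Encoding (it asks for a compressed response that urllib will not decompress).

```python
def headers_from_devtools(raw, skip=("content-length", "accept-encoding")):
    """Hypothetical helper: convert 'Name: value' lines copied from DevTools into a dict."""
    headers = {}
    for line in raw.strip().splitlines():
        line = line.strip()
        # Skip blank lines and HTTP/2 pseudo-headers such as ':authority'
        if not line or line.startswith(":"):
            continue
        # Split on the first colon only, so values may contain colons
        name, sep, value = line.partition(":")
        if not sep:
            continue  # not a header line (e.g. a request line without a colon)
        name = name.strip()
        if name.lower() in skip:
            continue
        headers[name] = value.strip()
    return headers


raw = """
:authority: fanyi.baidu.com
Accept: */*
Accept-Encoding: gzip, deflate, br, zstd
Content-Length: 152
Origin: https://fanyi.baidu.com
X-Requested-With: XMLHttpRequest
"""
print(headers_from_devtools(raw))
# {'Accept': '*/*', 'Origin': 'https://fanyi.baidu.com', 'X-Requested-With': 'XMLHttpRequest'}
```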

So let's add all of them to headers:

headers = {
    "Accept": "*/*",
    # "Accept-Encoding": "gzip, deflate, br, zstd",
    "Accept-Language": "zh-CN,zh;q=0.9",
    "Acs-Token": "1709208007739_1709212364277_2rynw+ePk52zCeBqFrnpVyboCMK+LPtSWG7fFss9tB46byfbwCQfYELvJyCkm1etX3UxQpeq1u0RZgDNoBMV4TZMgoBePG0jlPUTwV8YiGfTxR3L02wu6DP3wBEe6UBFONiLTSWESnmEOBRoQ3yX7KBs+A8w1QV8BHgguDCGc9Q/foG9jowZncaCVGl2AYTUbzGjkPg8xb4EZ62L2FIjpVZ1oVatDtgSFqtAVEO5W3z7tRVaI0JxFF2kkhw6bxnVHPNSiSkoKD3AXdrFhj2GatPAyn9YXlLw20qoyE+UjZIyRat4xdWkFsdTG/kvPlVLTh7qoabs+NaNVC8a21dlyWxgBsmrTbUEojKiYyaURQG0COiv/u0teilELxPLCo+FwatSE0yD8alqLGXSbi6v/yOOphDWau7zRYMynAEaxaLrQTuOgHfvllflSel+GMBctvdS6RtLdhQb+pIa3Sp1c8C2JvJ/DM/1Th2s+7pdaqE=",
    "Connection": "keep-alive",
    "Content-Length": "152",
    "Content-Type": "application/x-www-form-urlencoded; charset=UTF-8",
    "Cookie": 'BIDUPSID=2DC3FD925EDB9E9310057AAA4313A978; PSTM=1679797623; BAIDUID=2DC3FD925EDB9E939299595287C491C9:FG=1; REALTIME_TRANS_SWITCH=1; FANYI_WORD_SWITCH=1; HISTORY_SWITCH=1; SOUND_SPD_SWITCH=1; SOUND_PREFER_SWITCH=1; MCITY=-75%3A; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; BAIDUID_BFESS=2DC3FD925EDB9E939299595287C491C9:FG=1; ZFY=KUd37zEBYu5HusDOqV1jxs1znlRRBUOop2UvOac44TU:C; RT="z=1&dm=baidu.com&si=8d0cddbe-c90e-4db5-b3a0-3fd3a4f6ea21&ss=lt6jrqb7&sl=3&tt=rei&bcn=https%3A%2F%2Ffclog.baidu.com%2Flog%2Fweirwood%3Ftype%3Dperf&nu=9y8m6cy&cl=6qwh&ld=6pgv&ul=7z34&hd=7z3q"; BA_HECTOR=2k802l8l0l010184242k04a598vrdh1iu0cmp1t; H_PS_PSSID=40009_39661_40206_40211_40215_40222_40246_40274_40294_40289_40286_40317_40080; PSINO=1; delPer=0; APPGUIDE_10_6_9=1; Hm_lvt_64ecd82404c51e03dc91cb9e8c025574=1709210293; Hm_lpvt_64ecd82404c51e03dc91cb9e8c025574=1709210293; ab_sr=1.0.1_MGY0MDFkY2E0MjFjNzAwODk0Yjg1NTk1M2ZmYmUxMjlmMGEyZGRjNTk0MDM4NWE2NmM0ZmQzNzE4NzhhMDBhZWM5M2QxNDEwNzljNjhlNTE1MThhMTg3OWI0NmQ4OTAwOTlhMGExODIxNWM3ZDVmNmJmZTQ1MTIyM2JkNDIzMTRhOWMzYzM2ZTFjZTcyZDQ4MTUxNzBlZjE2NmFmODczYw==',
    "Host": 'fanyi.baidu.com',
    "Origin": 'https://fanyi.baidu.com',
    "Referer": 'https://fanyi.baidu.com/?ext_channel=DuSearch',
    "Sec-Ch-Ua": '"Chromium";v="122", "Not(A:Brand";v="24", "Google Chrome";v="122"',
    'Sec-Ch-Ua-Mobile': '?0',
    'Sec-Ch-Ua-Platform': '"Windows"',
    'Sec-Fetch-Dest': 'empty',
    'Sec-Fetch-Mode': 'cors',
    'Sec-Fetch-Site': 'same-origin',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36',
    'X-Requested-With': 'XMLHttpRequest'
}

Did it work?!!!

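Not yet. What went wrong?

The culprit is the Accept-Encoding header (gzip, deflate, br, zstd). It tells the server we accept a compressed response, so the body that comes back is not plain UTF-8 text, and urllib does not decompress it for us, which makes decode("utf-8") fail. So we must not send this header.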
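The failure is easy to reproduce locally: gzip data starts with the bytes 0x1f 0x8b, and 0x8b cannot start a UTF-8 character. If you ever do need to accept compressed responses, the sketch below shows a hypothetical helper, decode_body, that undoes gzip or deflate with the standard library before decoding. It is an assumption-laden illustration, not something urllib provides; "br" and "zstd" would need third-party packages and are rejected here.

```python
import gzip
import zlib


def decode_body(raw, content_encoding=None, charset="utf-8"):
    """Hypothetical helper: undo gzip/deflate compression, then decode the text."""
    encoding = (content_encoding or "").strip().lower()
    if encoding == "gzip":
        raw = gzip.decompress(raw)
    elif encoding == "deflate":
        try:
            raw = zlib.decompress(raw)  # zlib-wrapped deflate (the usual case)
        except zlib.error:
            raw = zlib.decompress(raw, -zlib.MAX_WBITS)  # raw deflate stream
    elif encoding not in ("", "identity"):
        raise ValueError(f"unsupported Content-Encoding: {content_encoding}")
    return raw.decode(charset)


compressed = gzip.compress('{"msg": "你好"}'.encode("utf-8"))

# Decoding compressed bytes directly fails, just like decode("utf-8") on a gzip response
try:
    compressed.decode("utf-8")
except UnicodeDecodeError as exc:
    print("UnicodeDecodeError:", exc.reason)  # UnicodeDecodeError: invalid start byte

print(decode_body(compressed, "gzip"))  # {"msg": "你好"}
```

With urllib it would be called as decode_body(response.read(), response.headers.get("Content-Encoding")).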

In fact, you can delete about 90% of these headers and keep only Cookie, because here only the cookie is used for verification.

import urllib.request
import urllib.parse
import json

# POST request
url = "https://fanyi.baidu.com/v2transapi?from=en&to=zh"
headers = {
    # "Accept": "*/*",
    # "Accept-Encoding": "gzip, deflate, br, zstd",
    # "Accept-Language": "zh-CN,zh;q=0.9",
    # "Acs-Token": "1709208007739_1709212364277_2rynw+ePk52zCeBqFrnpVyboCMK+LPtSWG7fFss9tB46byfbwCQfYELvJyCkm1etX3UxQpeq1u0RZgDNoBMV4TZMgoBePG0jlPUTwV8YiGfTxR3L02wu6DP3wBEe6UBFONiLTSWESnmEOBRoQ3yX7KBs+A8w1QV8BHgguDCGc9Q/foG9jowZncaCVGl2AYTUbzGjkPg8xb4EZ62L2FIjpVZ1oVatDtgSFqtAVEO5W3z7tRVaI0JxFF2kkhw6bxnVHPNSiSkoKD3AXdrFhj2GatPAyn9YXlLw20qoyE+UjZIyRat4xdWkFsdTG/kvPlVLTh7qoabs+NaNVC8a21dlyWxgBsmrTbUEojKiYyaURQG0COiv/u0teilELxPLCo+FwatSE0yD8alqLGXSbi6v/yOOphDWau7zRYMynAEaxaLrQTuOgHfvllflSel+GMBctvdS6RtLdhQb+pIa3Sp1c8C2JvJ/DM/1Th2s+7pdaqE=",
    # "Connection": "keep-alive",
    # "Content-Length": "152",
    # "Content-Type": "application/x-www-form-urlencoded; charset=UTF-8",
    # Only the cookie is actually checked
    "Cookie": 'BIDUPSID=2DC3FD925EDB9E9310057AAA4313A978; PSTM=1679797623; BAIDUID=2DC3FD925EDB9E939299595287C491C9:FG=1; REALTIME_TRANS_SWITCH=1; FANYI_WORD_SWITCH=1; HISTORY_SWITCH=1; SOUND_SPD_SWITCH=1; SOUND_PREFER_SWITCH=1; MCITY=-75%3A; BDORZ=B490B5EBF6F3CD402E515D22BCDA1598; BAIDUID_BFESS=2DC3FD925EDB9E939299595287C491C9:FG=1; ZFY=KUd37zEBYu5HusDOqV1jxs1znlRRBUOop2UvOac44TU:C; RT="z=1&dm=baidu.com&si=8d0cddbe-c90e-4db5-b3a0-3fd3a4f6ea21&ss=lt6jrqb7&sl=3&tt=rei&bcn=https%3A%2F%2Ffclog.baidu.com%2Flog%2Fweirwood%3Ftype%3Dperf&nu=9y8m6cy&cl=6qwh&ld=6pgv&ul=7z34&hd=7z3q"; BA_HECTOR=2k802l8l0l010184242k04a598vrdh1iu0cmp1t; H_PS_PSSID=40009_39661_40206_40211_40215_40222_40246_40274_40294_40289_40286_40317_40080; PSINO=1; delPer=0; APPGUIDE_10_6_9=1; Hm_lvt_64ecd82404c51e03dc91cb9e8c025574=1709210293; Hm_lpvt_64ecd82404c51e03dc91cb9e8c025574=1709210293; ab_sr=1.0.1_MGY0MDFkY2E0MjFjNzAwODk0Yjg1NTk1M2ZmYmUxMjlmMGEyZGRjNTk0MDM4NWE2NmM0ZmQzNzE4NzhhMDBhZWM5M2QxNDEwNzljNjhlNTE1MThhMTg3OWI0NmQ4OTAwOTlhMGExODIxNWM3ZDVmNmJmZTQ1MTIyM2JkNDIzMTRhOWMzYzM2ZTFjZTcyZDQ4MTUxNzBlZjE2NmFmODczYw==',
    # "Host": 'fanyi.baidu.com',
    # "Origin": 'https://fanyi.baidu.com',
    # "Referer": 'https://fanyi.baidu.com/?ext_channel=DuSearch',
    # "Sec-Ch-Ua": '"Chromium";v="122", "Not(A:Brand";v="24", "Google Chrome";v="122"',
    # 'Sec-Ch-Ua-Mobile': '?0',
    # 'Sec-Ch-Ua-Platform': '"Windows"',
    # 'Sec-Fetch-Dest': 'empty',
    # 'Sec-Fetch-Mode': 'cors',
    # 'Sec-Fetch-Site': 'same-origin',
    # 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0.0.0 Safari/537.36',
    # 'X-Requested-With': 'XMLHttpRequest'
}
data = {
    "from": "en",
    "to": "zh",
    "query": "love",
    "transtype": "realtime",
    "simple_means_flag": "3",
    "sign": "198772.518981",
    "token": "cdd52406abbf29bdf0d424e2889d9724",
    "domain": "common",
    "ts": "1709212364268",
}

# POST parameters must be encoded (and turned into bytes)
new_data = urllib.parse.urlencode(data).encode("utf-8")

# Customize the request object
# POST parameters are not appended to the URL; they go into the data argument of the request object
request = urllib.request.Request(url=url, data=new_data, headers=headers)

# Send the request
response = urllib.request.urlopen(request)

# Get the response data
content = response.read().decode("utf-8")

# Convert the string into a JSON object
obj = json.loads(content)
print(obj)

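This is all the verification Baidu Translate requires; notice that not even the User-Agent is needed. Different sites' anti-scraping mechanisms check different header values, and Baidu Translate only needs the cookie.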
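"Not even the User-Agent is needed" deserves one nuance: when no User-Agent is set, urllib's opener fills in its own default (Python-urllib/3.x) at send time, so the server presumably accepted that default rather than seeing no User-Agent at all. The offline check below builds a cookie-only request (the cookie value is a placeholder) and prints both the headers stored on the Request and the default header the opener would add.

```python
import urllib.request

# Cookie-only headers, as in the final version above; the value is a placeholder
headers = {"Cookie": "BAIDUID=placeholder"}
request = urllib.request.Request("https://fanyi.baidu.com/v2transapi?from=en&to=zh", headers=headers)

# Headers stored on the request (urllib normalizes names with str.capitalize())
print(request.header_items())  # [('Cookie', 'BAIDUID=placeholder')]

# Default headers the opener adds when the request does not set them
opener = urllib.request.build_opener()
print(opener.addheaders)  # e.g. [('User-agent', 'Python-urllib/3.12')], version varies
```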

Summary

Too tired, I'll write the summary later ヾ( ̄▽ ̄)Bye~Bye~
