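Classification Prediction | DBN-SVM in MATLAB: A Deep Belief Network Combined with a Support Vector Machine for Multi-Input Classification Prediction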

目錄

- 分類預測 | MATLAB實現DBN-SVM深度置信網絡結合支持向量機多輸入分類預測

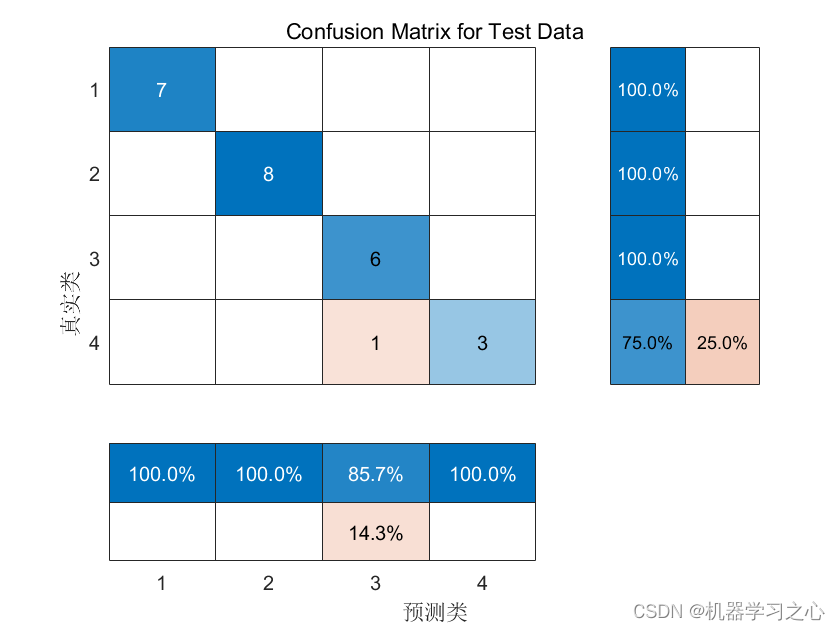

- 預測效果

- 基本介紹

- 程序設計

- 參考資料

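Prediction Results

Overview

1. Classification prediction | DBN-SVM in MATLAB: a deep belief network combined with a support vector machine for multi-input classification prediction.

2. Code note: requires MATLAB 2021 or later.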

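Program Design

- Obtaining the complete program and data, option 1: exchange for a program of equivalent value;

- Obtaining the complete program and data, option 2: send the blogger a private message with the reply "MATLAB實現DBN-SVM深度置信網絡結合支持向量機多輸入分類預測" to receive it.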

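%% Split into training and test sets

P_train = res(1: num_train_s, 1: f_)'; % training inputs, one sample per column

T_train = res(1: num_train_s, f_ + 1: end)'; % training labels

M = size(P_train, 2); % number of training samples

P_test = res(num_train_s + 1: end, 1: f_)'; % test inputs, one sample per column

T_test = res(num_train_s + 1: end, f_ + 1: end)'; % test labels

N = size(P_test, 2); % number of test samples

%% Normalize the data

[p_train, ps_input] = mapminmax(P_train, 0, 1); % scale each input feature to [0, 1]

p_test = mapminmax('apply', P_test, ps_input); % reuse the training-set scaling

[t_train, ps_output] = mapminmax(T_train, 0, 1);

t_test = mapminmax('apply', T_test, ps_output);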

% dbn and opts must already be configured at this point (not shown in this excerpt)

dbn = dbnsetup(dbn, p_train, opts); % build the model

dbn = dbntrain(dbn, p_train, opts); % train the model

%------------------------------------------------------------

%% Transfer the trained weights and add the output layer

nn = dbnunfoldtonn(dbn, num_class);

%% Fine-tune the network with backpropagation

opts.numepochs = 576; % number of fine-tuning epochs

opts.batchsize = M; % samples per fine-tuning batch; M / batchsize must be an integer

nn.activation_function = 'sigm'; % activation function

nn.learningRate = 2.9189; % learning rate

nn.momentum = 0.5; % momentum

nn.scaling_learningRate = 1; % learning-rate scaling factor

[nn, loss, accu] = nntrain(nn, p_train, t_train, opts); % train

%------------------------------------------------------------

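%% Simulate predictions

T_sim1 = nnpredict(nn, p_train);

T_sim2 = nnpredict(nn, p_test );

%% Performance evaluation

error1 = sum((T_sim1' == T_train)) / M * 100 ; % training-set accuracy in percent (despite the variable name)

error2 = sum((T_sim2' == T_test )) / N * 100 ; % test-set accuracy in percent (despite the variable name)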
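The splitting, normalization, and accuracy steps in the listing can be tried on their own without the network. The following is a minimal sketch in base MATLAB, not the author's program: the toy `res` matrix, the value of `num_train_s`, and the stand-in predictions in `T_sim2` are all made-up assumptions, and the hand-written min-max scaling only imitates what `mapminmax` does for the `[0, 1]` range.

```matlab
% Toy data (hypothetical): 6 samples, 2 features, last column is the class label
res = [1.0 10 1;
       2.0 20 1;
       3.0 30 2;
       4.0 40 2;
       2.5 25 1;
       5.0 50 2];

num_train_s = 4;              % number of training samples (assumed value)
f_ = size(res, 2) - 1;        % number of input features

% Split and transpose so that each column is one sample, as in the listing
P_train = res(1:num_train_s, 1:f_)';
T_train = res(1:num_train_s, f_+1:end)';
P_test  = res(num_train_s+1:end, 1:f_)';
T_test  = res(num_train_s+1:end, f_+1:end)';
N = size(P_test, 2);

% Min-max scaling to [0, 1] using training statistics only.
% Note: this sketch divides by zero if a feature is constant in the training set.
x_min = min(P_train, [], 2);
x_max = max(P_train, [], 2);
p_train = (P_train - x_min) ./ (x_max - x_min);
p_test  = (P_test  - x_min) ./ (x_max - x_min);   % test values may fall outside [0, 1]

% Hypothetical predictions standing in for the output of nnpredict (column vector)
T_sim2 = [1; 2];

% Accuracy in percent: share of predictions that equal the true labels
acc_test = sum(T_sim2' == T_test) / N * 100;
fprintf('Test accuracy: %.1f%%\n', acc_test);
```

Because the scaling parameters come from the training set alone, a test sample larger than every training sample maps to a value above 1; the same applies when `mapminmax('apply', ...)` is used with `ps_input`.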

https://blog.csdn.net/kjm13182345320/article/details/131174983

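Copyright notice: This is an original article by CSDN blogger 「機器學習之心」, released under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.

Original link: https://blog.csdn.net/kjm13182345320/article/details/130462492

References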

[1] https://blog.csdn.net/kjm13182345320/article/details/129679476?spm=1001.2014.3001.5501

[2] https://blog.csdn.net/kjm13182345320/article/details/129659229?spm=1001.2014.3001.5501

[3] https://blog.csdn.net/kjm13182345320/article/details/129653829?spm=1001.2014.3001.5501
