[oneAPI] Handwritten Digit Recognition with an LSTM

- Handwritten digit recognition

- Parameters and packages

- Loading the data

- Model

- Training

- Results

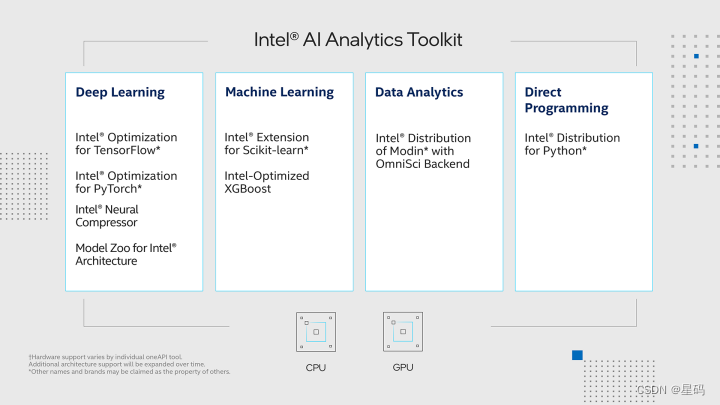

- oneAPI

Competition: https://marketing.csdn.net/p/f3e44fbfe46c465f4d9d6c23e38e0517

Intel® DevCloud for oneAPI: https://devcloud.intel.com/oneapi/get_started/aiAnalyticsToolkitSamples/

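Handwritten digit recognition

This project uses PyTorch together with Intel® Optimization for PyTorch, which extends PyTorch with optimizations that further improve performance on Intel hardware, so the handwritten digit recognition task trains and runs faster and more efficiently.

It uses the MNIST dataset, a collection of handwritten digits stored as grayscale images of 28x28 pixels each. The dataset is split as follows:

- Training set: 60,000 images with labels, used to train the model.

- Test set: 10,000 images with labels, used to evaluate the model's performance.

Each image is labeled with a digit from 0 to 9, indicating which handwritten digit it shows. The dataset is widely used as a benchmark for image classification models, particularly in computer vision.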

Parameters and packages

    import torch
    import torch.nn as nn
    import torchvision
    import torchvision.transforms as transforms
    import intel_extension_for_pytorch as ipex

    # Device configuration: use the Intel GPU ('xpu') when one is available, else the CPU
    device = torch.device('xpu' if hasattr(torch, 'xpu') and torch.xpu.is_available() else 'cpu')

    # Hyper-parameters
    sequence_length = 28  # each image row is one time step
    input_size = 28       # each row holds 28 pixel values
    hidden_size = 128
    num_layers = 2
    num_classes = 10
    batch_size = 100
    num_epochs = 2
    learning_rate = 0.01
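
To make these hyper-parameters concrete, here is a minimal pure-Python sketch (no PyTorch needed) of how one 28x28 image can be viewed as a sequence of 28 time steps with 28 features each, and how many mini-batches one epoch takes with the 60,000 training images. The helper name `image_to_sequence` and the dummy pixel values are illustrative assumptions, not part of the original code.

```python
sequence_length = 28   # number of time steps (image rows)
input_size = 28        # features per time step (pixels in one row)
batch_size = 100
train_images = 60_000  # size of the MNIST training set


def image_to_sequence(flat_pixels, steps, features):
    """Split a flat list of pixels into `steps` rows of `features` values (hypothetical helper)."""
    if len(flat_pixels) != steps * features:
        raise ValueError("pixel count does not match steps * features")
    return [flat_pixels[r * features:(r + 1) * features] for r in range(steps)]


# Dummy image: 784 pixel values standing in for one flattened MNIST digit
flat_image = [i % 256 for i in range(sequence_length * input_size)]
sequence = image_to_sequence(flat_image, sequence_length, input_size)

steps_per_epoch = train_images // batch_size

print(len(sequence), len(sequence[0]))  # rows fed to the LSTM, pixels per row
print(steps_per_epoch)                  # mini-batches per epoch
```

With a batch size of 100, one epoch is 600 mini-batches, which is the `total_step` value that appears in the training log.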

Loading the data

    # MNIST dataset
    train_dataset = torchvision.datasets.MNIST(root='../../data/',
                                               train=True,
                                               transform=transforms.ToTensor(),
                                               download=True)

    test_dataset = torchvision.datasets.MNIST(root='../../data/',
                                              train=False,
                                              transform=transforms.ToTensor())

    # Data loader
    train_loader = torch.utils.data.DataLoader(dataset=train_dataset,
                                               batch_size=batch_size,
                                               shuffle=True)

    test_loader = torch.utils.data.DataLoader(dataset=test_dataset,
                                              batch_size=batch_size,
                                              shuffle=False)

Model

    # Recurrent neural network (many-to-one)
    class RNN(nn.Module):
        def __init__(self, input_size, hidden_size, num_layers, num_classes):
            super(RNN, self).__init__()
            self.hidden_size = hidden_size
            self.num_layers = num_layers
            self.lstm = nn.LSTM(input_size, hidden_size, num_layers, batch_first=True)
            self.fc = nn.Linear(hidden_size, num_classes)

        def forward(self, x):
            # Set initial hidden and cell states
            h0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size).to(device)
            c0 = torch.zeros(self.num_layers, x.size(0), self.hidden_size).to(device)

            # Forward propagate LSTM
            out, _ = self.lstm(x, (h0, c0))  # out: tensor of shape (batch_size, seq_length, hidden_size)

            # Decode the hidden state of the last time step
            out = self.fc(out[:, -1, :])
            return out
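
As a sanity check on the size of this model, the sketch below counts its trainable parameters by hand. It assumes the `nn.LSTM` layout in PyTorch, where each layer holds an input-to-hidden weight, a hidden-to-hidden weight, and two bias vectors, each stacked over the four gates. The function names are illustrative.

```python
def lstm_layer_params(input_size, hidden_size):
    """Parameters of one LSTM layer: 4 gates x (W_ih + W_hh + b_ih + b_hh)."""
    return 4 * (input_size * hidden_size      # input-to-hidden weights
                + hidden_size * hidden_size   # hidden-to-hidden weights
                + 2 * hidden_size)            # two bias vectors


def rnn_total_params(input_size, hidden_size, num_layers, num_classes):
    """Stacked LSTM layers plus the final fully connected layer."""
    total = 0
    for layer in range(num_layers):
        # Only the first layer sees the raw input; later layers see hidden states
        layer_input = input_size if layer == 0 else hidden_size
        total += lstm_layer_params(layer_input, hidden_size)
    total += hidden_size * num_classes + num_classes  # fc weights and bias
    return total


total = rnn_total_params(input_size=28, hidden_size=128, num_layers=2, num_classes=10)
print(total)  # 214282
```

If PyTorch is installed, the same figure can be cross-checked with `sum(p.numel() for p in model.parameters())`.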

Training

    model = RNN(input_size, hidden_size, num_layers, num_classes).to(device)

    # Loss and optimizer
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

    # Apply Intel Extension for PyTorch optimization against the model object and optimizer object.
    model, optimizer = ipex.optimize(model, optimizer=optimizer)

    # Train the model
    total_step = len(train_loader)
    for epoch in range(num_epochs):
        for i, (images, labels) in enumerate(train_loader):
            images = images.reshape(-1, sequence_length, input_size).to(device)
            labels = labels.to(device)

            # Forward pass
            outputs = model(images)
            loss = criterion(outputs, labels)

            # Backward and optimize
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            if (i + 1) % 100 == 0:
                print('Epoch [{}/{}], Step [{}/{}], Loss: {:.4f}'
                      .format(epoch + 1, num_epochs, i + 1, total_step, loss.item()))

    # Test the model
    model.eval()
    with torch.no_grad():
        correct = 0
        total = 0
        for images, labels in test_loader:
            images = images.reshape(-1, sequence_length, input_size).to(device)
            labels = labels.to(device)
            outputs = model(images)
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

        print('Test Accuracy of the model on the 10000 test images: {} %'.format(100 * correct / total))

    # Save the model checkpoint
    torch.save(model.state_dict(), 'model.ckpt')
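
The evaluation step takes the class with the highest score for each image and counts how often it matches the label. A minimal pure-Python sketch of that bookkeeping follows. The scores and labels are made-up values for illustration, and `argmax` is a hypothetical helper standing in for `torch.max(outputs, 1)`.

```python
def argmax(scores):
    """Index of the largest score (the first one wins on ties)."""
    return max(range(len(scores)), key=lambda k: scores[k])


# Made-up model outputs for 4 images over 3 classes, plus their true labels
outputs = [
    [0.1, 2.3, -1.0],
    [1.5, 0.2, 0.3],
    [-0.4, 0.0, 0.9],
    [0.7, 0.6, 0.1],
]
labels = [1, 0, 2, 1]

correct = 0
total = 0
for scores, label in zip(outputs, labels):
    predicted = argmax(scores)
    total += 1
    correct += int(predicted == label)

accuracy = 100 * correct / total
print('Accuracy: {} %'.format(accuracy))  # Accuracy: 75.0 %
```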

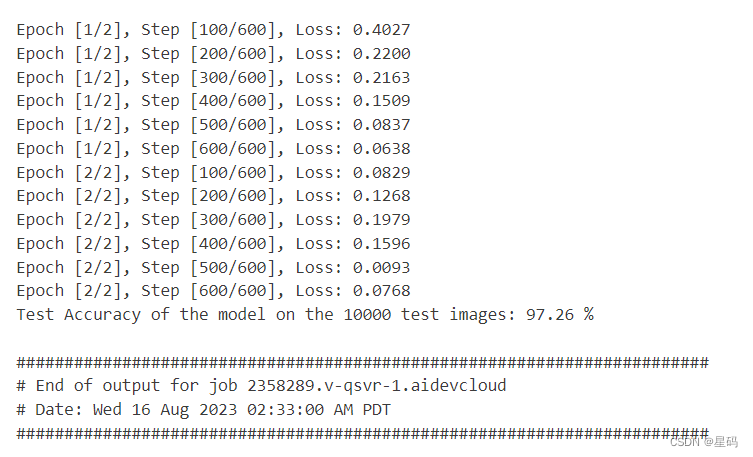

Results

oneAPI

    import intel_extension_for_pytorch as ipex

    # Device configuration: use the Intel GPU ('xpu') when one is available, else the CPU
    device = torch.device('xpu' if hasattr(torch, 'xpu') and torch.xpu.is_available() else 'cpu')

    # Model
    model = RNN(input_size, hidden_size, num_layers, num_classes).to(device)

    # Loss and optimizer
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

    # Apply Intel Extension for PyTorch optimization against the model object and optimizer object.
    model, optimizer = ipex.optimize(model, optimizer=optimizer)
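
Because the Intel GPU backend is optional, it can help to make the device choice degrade gracefully. The sketch below is one way to do that under stated assumptions: the helper name `pick_device` is hypothetical, and it assumes `torch.xpu.is_available()` is present only when the installed PyTorch or Intel Extension for PyTorch build provides XPU support.

```python
def pick_device():
    """Return 'xpu' if an Intel GPU is usable, otherwise 'cpu' (hypothetical helper)."""
    try:
        import torch
    except ImportError:
        return 'cpu'  # PyTorch itself is missing, so there is nothing to accelerate

    try:
        # Assumption: importing the extension registers the XPU backend on builds
        # of PyTorch that do not ship it natively
        import intel_extension_for_pytorch  # noqa: F401
    except Exception:
        pass  # the extension is treated as optional in this sketch

    xpu = getattr(torch, 'xpu', None)
    if xpu is not None and xpu.is_available():
        return 'xpu'
    return 'cpu'


device_name = pick_device()
print(device_name)
```

The result can then be passed on as `device = torch.device(pick_device())`.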
