I. Environment Preparation

Cluster nodes: hadoop11, hadoop12, hadoop13

Prerequisite: ZooKeeper and HDFS are already installed.

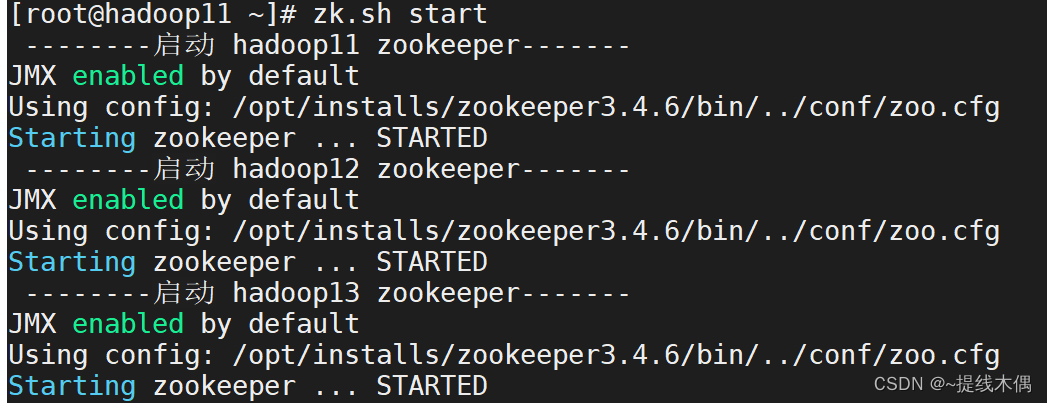

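1. Start ZooKeeper

-- Start ZooKeeper (it must be started on hadoop11, hadoop12 and hadoop13)

xcall.sh zkServer.sh start

-- or

zk.sh start

-- xcall.sh and zk.sh are custom scripts written for this cluster

-- Check the processes

jps

-- A QuorumPeerMain process does not by itself mean that ZooKeeper started successfully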

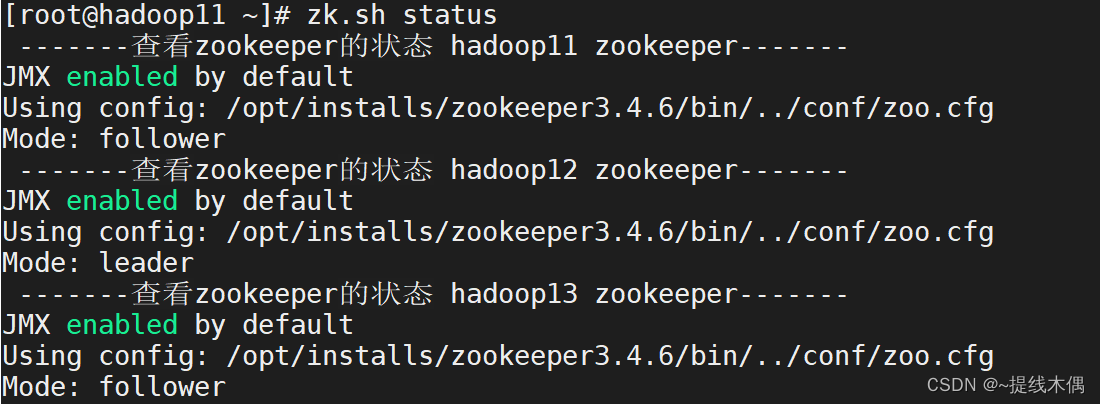

-- Check the ZooKeeper status instead

xcall.sh zkServer.sh status

-- or

zk.sh status

------- ZooKeeper status on hadoop11 -------

JMX enabled by default

Using config: /opt/installs/zookeeper3.4.6/bin/../conf/zoo.cfg

Mode: follower

------- ZooKeeper status on hadoop12 -------

JMX enabled by default

Using config: /opt/installs/zookeeper3.4.6/bin/../conf/zoo.cfg

Mode: leader

------- ZooKeeper status on hadoop13 -------

JMX enabled by default

Using config: /opt/installs/zookeeper3.4.6/bin/../conf/zoo.cfg

Mode: follower

-- ZooKeeper has started successfully only when one node is the leader and the others are followers
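Reading the `Mode:` lines by eye is fine for three nodes, but the rule can also be written down as a small check. The sketch below is a hypothetical Scala helper (the object and function names are made up for illustration) that takes each host's `zkServer.sh status` output and accepts the ensemble only if exactly one node is the leader and every other node is a follower.

```scala
// Hypothetical helper: decides whether a ZooKeeper ensemble looks healthy from the
// "Mode: ..." lines printed by `zkServer.sh status` on each host.
object ZkStatusCheck {

  // Extract the value after "Mode:" from one host's status output, if present.
  def mode(statusOutput: String): Option[String] =
    statusOutput.split("\\r?\\n")
      .map(_.trim)
      .collectFirst { case line if line.startsWith("Mode:") => line.stripPrefix("Mode:").trim }

  // Healthy = every host reports a mode, exactly one is "leader", the rest are "follower".
  def ensembleHealthy(outputs: Map[String, String]): Boolean = {
    val modes = outputs.values.map(mode).toList
    modes.forall(_.isDefined) &&
      modes.count(_.contains("leader")) == 1 &&
      modes.count(_.contains("follower")) == outputs.size - 1
  }

  def main(args: Array[String]): Unit = {
    // Sample output taken from the status check above.
    val cfg = "Using config: /opt/installs/zookeeper3.4.6/bin/../conf/zoo.cfg"
    val outputs = Map(
      "hadoop11" -> s"JMX enabled by default\n$cfg\nMode: follower",
      "hadoop12" -> s"JMX enabled by default\n$cfg\nMode: leader",
      "hadoop13" -> s"JMX enabled by default\n$cfg\nMode: follower"
    )
    println(s"ZooKeeper ensemble healthy: ${ensembleHealthy(outputs)}")
  }
}
```

A host whose output has no `Mode:` line (for example because the server is not running) makes the whole check fail, which matches the rule that QuorumPeerMain alone is not enough.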

2. Start HDFS

[root@hadoop11 ~]# start-dfs.sh

Starting namenodes on [hadoop11 hadoop12]

Last login: Wed Aug 16 09:13:59 CST 2023 from 192.168.182.1 on pts/0

Starting datanodes

Last login: Wed Aug 16 09:36:55 CST 2023 on pts/0

Starting journal nodes [hadoop13 hadoop12 hadoop11]

Last login: Wed Aug 16 09:37:00 CST 2023 on pts/0

Starting ZK Failover Controllers on NN hosts [hadoop11 hadoop12]

Last login: Wed Aug 16 09:37:28 CST 2023 on pts/0

Check the processes with jps:

[root@hadoop11 ~]# xcall.sh jps

------------------------ hadoop11 ---------------------------

10017 DataNode

10689 DFSZKFailoverController

9829 NameNode

12440 Jps

9388 QuorumPeerMain

10428 JournalNode

------------------------ hadoop12 ---------------------------

1795 JournalNode

1572 NameNode

1446 QuorumPeerMain

1654 DataNode

1887 DFSZKFailoverController

1999 Jps

------------------------ hadoop13 ---------------------------

1446 QuorumPeerMain

1767 Jps

1567 DataNode

1679 JournalNode
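The listing above implies a fixed role layout: all three hosts run QuorumPeerMain, DataNode and JournalNode, and hadoop11 and hadoop12 additionally run NameNode and DFSZKFailoverController. A hypothetical Scala sketch (names are illustrative) that compares one host's `jps` output against that layout and reports the daemons that are missing:

```scala
// Hypothetical helper: compares `jps` output against the daemons each host is expected to run.
object JpsCheck {

  // `jps` prints "<pid> <MainClass>" per line; keep only the class names, minus Jps itself.
  def daemons(jpsOutput: String): Set[String] =
    jpsOutput.split("\\r?\\n")
      .map(_.trim.split("\\s+"))
      .collect { case Array(_, name) => name }
      .filter(_ != "Jps")
      .toSet

  // Expected roles, read off the listing above (hadoop13 runs no NameNode/ZKFC).
  val expected: Map[String, Set[String]] = {
    val common = Set("QuorumPeerMain", "DataNode", "JournalNode")
    val nnHost = common ++ Set("NameNode", "DFSZKFailoverController")
    Map("hadoop11" -> nnHost, "hadoop12" -> nnHost, "hadoop13" -> common)
  }

  // Daemons that should be running on `host` but do not appear in its jps output.
  def missing(host: String, jpsOutput: String): Set[String] =
    expected.getOrElse(host, Set.empty[String]) -- daemons(jpsOutput)

  def main(args: Array[String]): Unit = {
    val hadoop13 = "1446 QuorumPeerMain\n1767 Jps\n1567 DataNode\n1679 JournalNode"
    println(s"hadoop13 missing: ${missing("hadoop13", hadoop13)}")
  }
}
```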

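Check the state of the HA NameNodes. One active and one standby NameNode means HDFS started successfully. (You can also check the state in the NameNode web UI at hostname:9870, the default web port in Hadoop 3.x; 8020 is the NameNode RPC port, not the web port.)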

[root@hadoop11 ~]# hdfs haadmin -getAllServiceState

hadoop11:8020 standby

hadoop12:8020 active
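The same rule as a hypothetical Scala helper over the `-getAllServiceState` output, assuming one `address state` pair per line as shown above:

```scala
// Hypothetical helper: checks `hdfs haadmin -getAllServiceState` output for exactly
// one active NameNode and at least one standby.
object HaStateCheck {

  // Each line looks like "hadoop12:8020 active"; returns address -> state.
  def parse(output: String): Map[String, String] =
    output.split("\\r?\\n")
      .map(_.trim.split("\\s+"))
      .collect { case Array(addr, state) => addr -> state.toLowerCase }
      .toMap

  def haHealthy(output: String): Boolean = {
    val states = parse(output).values.toList
    states.count(_ == "active") == 1 && states.contains("standby")
  }

  def main(args: Array[String]): Unit = {
    val output = "hadoop11:8020 standby\nhadoop12:8020 active"
    // Which NameNode is active matters later: clients must talk to that one.
    println(s"active NameNode: ${parse(output).collectFirst { case (a, "active") => a }}")
    println(s"HA healthy: ${haHealthy(output)}")
  }
}
```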

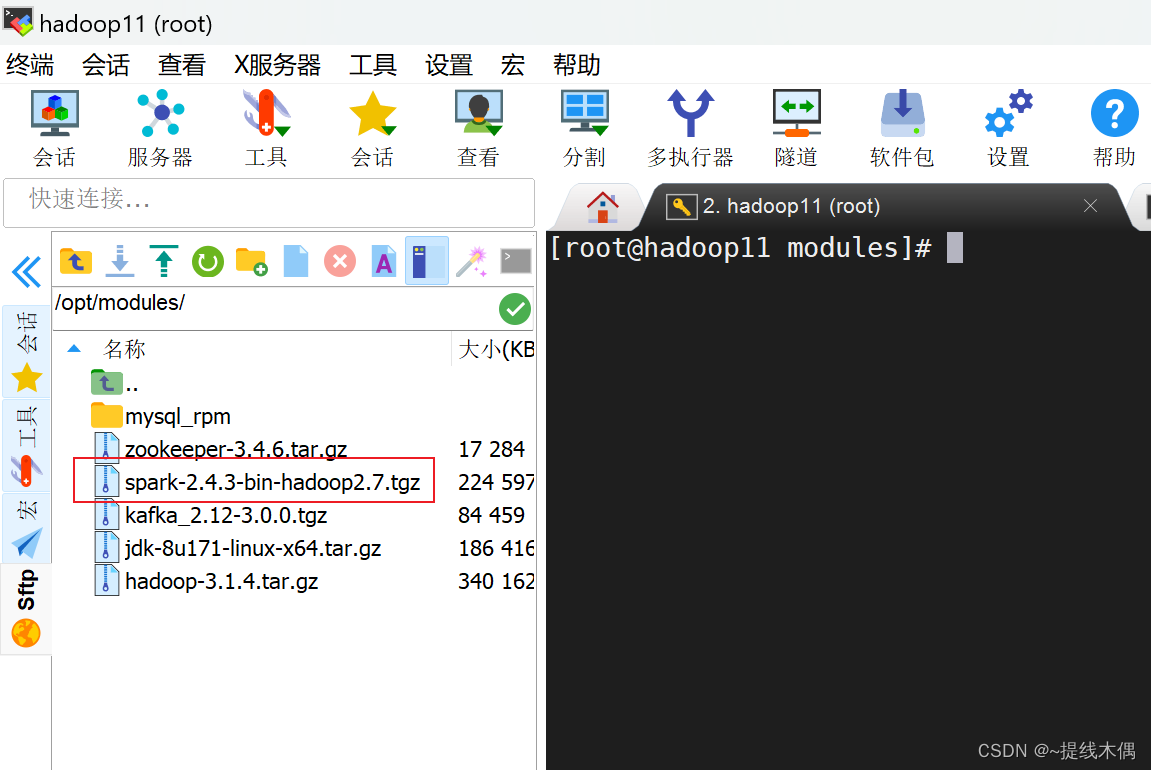

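II. Install Spark

1. Upload the installation package to hadoop11

Upload it to the /opt/modules directory.

This guide uses Spark 2.4.3 (spark-2.4.3-bin-hadoop2.7.tgz).

2. Extract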

[root@hadoop11 modules]# tar -zxf spark-2.4.3-bin-hadoop2.7.tgz -C /opt/installs/

[root@hadoop11 modules]# cd /opt/installs/

[root@hadoop11 installs]# ll

total 4

drwxr-xr-x. 8 root root 198 Jun 21 10:20 flume1.9.0

drwxr-xr-x. 11 1001 1002 173 May 30 19:59 hadoop3.1.4

drwxr-xr-x. 8 10 143 255 Mar 29 2018 jdk1.8

drwxr-xr-x. 3 root root 18 May 30 20:30 journalnode

drwxr-xr-x. 8 root root 117 Aug 3 10:03 kafka3.0

drwxr-xr-x. 13 1000 1000 211 May 1 2019 spark-2.4.3-bin-hadoop2.7

drwxr-xr-x. 11 1000 1000 4096 May 30 06:32 zookeeper3.4.6

3. Rename

[root@hadoop11 installs]# mv spark-2.4.3-bin-hadoop2.7/ spark

[root@hadoop11 installs]# ls

flume1.9.0 hadoop3.1.4 jdk1.8 journalnode kafka3.0 spark zookeeper3.4.6

4. Configure environment variables

vim /etc/profile

-- Add:

export SPARK_HOME=/opt/installs/spark

export PATH=$PATH:$SPARK_HOME/bin

-- Reload the environment variables

source /etc/profile
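`source /etc/profile` only affects the current shell; other shells pick up the change when they next read the file. As a hypothetical sanity check (object and function names are illustrative), this Scala snippet verifies that SPARK_HOME is set and that `$SPARK_HOME/bin` appears on PATH in the environment it runs in:

```scala
import java.io.File

// Hypothetical check mirroring the two export lines added to /etc/profile:
// SPARK_HOME must be set and $SPARK_HOME/bin must be one of the PATH entries.
object SparkEnvCheck {

  def sparkBinOnPath(env: Map[String, String]): Boolean =
    env.get("SPARK_HOME").exists { home =>
      val bin = new File(home, "bin").getPath
      env.getOrElse("PATH", "").split(File.pathSeparator).contains(bin)
    }

  def main(args: Array[String]): Unit =
    println(s"SPARK_HOME/bin on PATH: ${sparkBinOnPath(sys.env)}")
}
```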

5、修改配置文件

(1)conf目錄下的 slaves 和 spark-env.sh

cd /opt/installs/spark/conf/

-- 給文件更名

mv slaves.template slaves

mv spark-env.sh.template spark-env.sh#配置Spark集群節點主機名,在該主機上啟動worker進程

[root@hadoop11 conf]# vim slaves

[root@hadoop11 conf]# tail -3 slaves

hadoop11

hadoop12

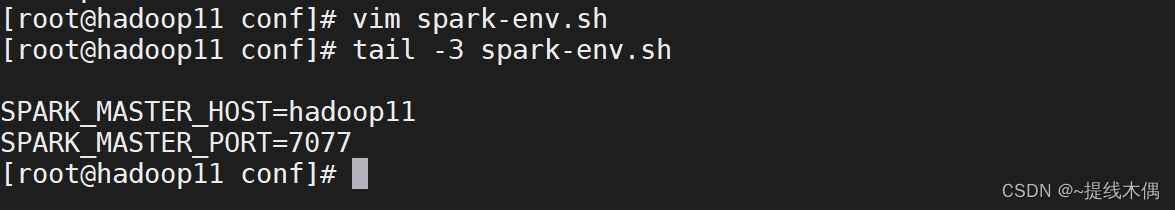

hadoop13#聲明Spark集群中Master的主機名和端口號

[root@hadoop11 conf]# vim spark-env.sh

[root@hadoop11 conf]# tail -3 spark-env.sh

SPARK_MASTER_HOST=hadoop11

SPARK_MASTER_PORT=7077

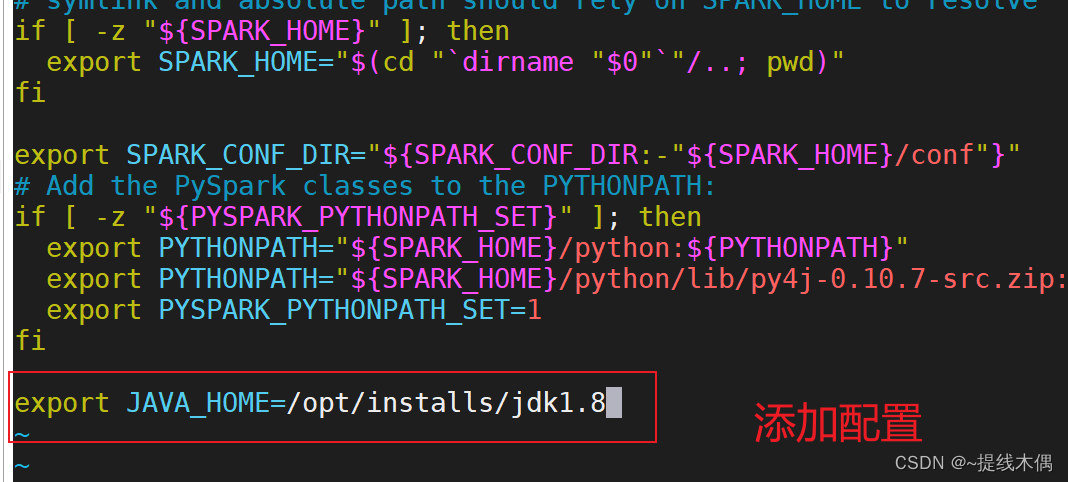
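These two files drive `start-all.sh`: the Master binds to SPARK_MASTER_HOST:SPARK_MASTER_PORT, and a Worker is started on every host listed in slaves. A hypothetical Scala sketch of how the settings map to the master URL used later (`spark://hadoop11:7077`) and to the worker list; skipping blank lines and `#` comments is an assumption about how the host list is read:

```scala
// Hypothetical helper: derives the standalone master URL from the spark-env.sh
// settings and the worker hosts from the slaves file.
object StandaloneConf {

  // 7077 is Spark's default standalone master port.
  def masterUrl(host: String, port: Int = 7077): String = s"spark://$host:$port"

  // One host per line; blank lines and lines starting with '#' are skipped.
  def workers(slavesFile: String): List[String] =
    slavesFile.split("\\r?\\n").toList
      .map(_.trim)
      .filter(line => line.nonEmpty && !line.startsWith("#"))

  def main(args: Array[String]): Unit = {
    println(masterUrl("hadoop11", 7077)) // the URL later passed to setMaster / --master
    println(workers("# worker hosts\nhadoop11\nhadoop12\nhadoop13"))
  }
}
```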

(2) spark-config.sh in the sbin directory

cd /opt/installs/spark/sbin/

vim spark-config.sh

# Append the JAVA_HOME setting at the end

export JAVA_HOME=/opt/installs/jdk1.8

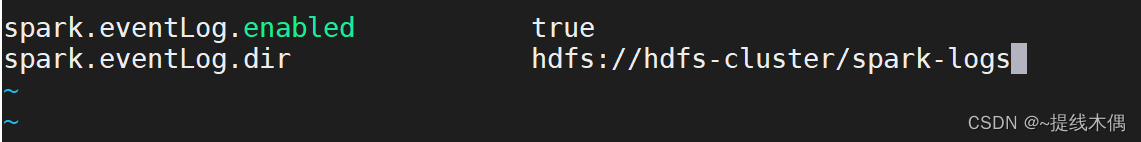

6. Configure the JobHistoryServer

(1) Edit the configuration files

[root@hadoop11 sbin]# hdfs dfs -mkdir /spark-logs

[root@hadoop11 sbin]# cd ../conf/

[root@hadoop11 conf]# mv spark-defaults.conf.template spark-defaults.conf

[root@hadoop11 conf]# vim spark-defaults.conf

[root@hadoop11 conf]# vim spark-env.sh

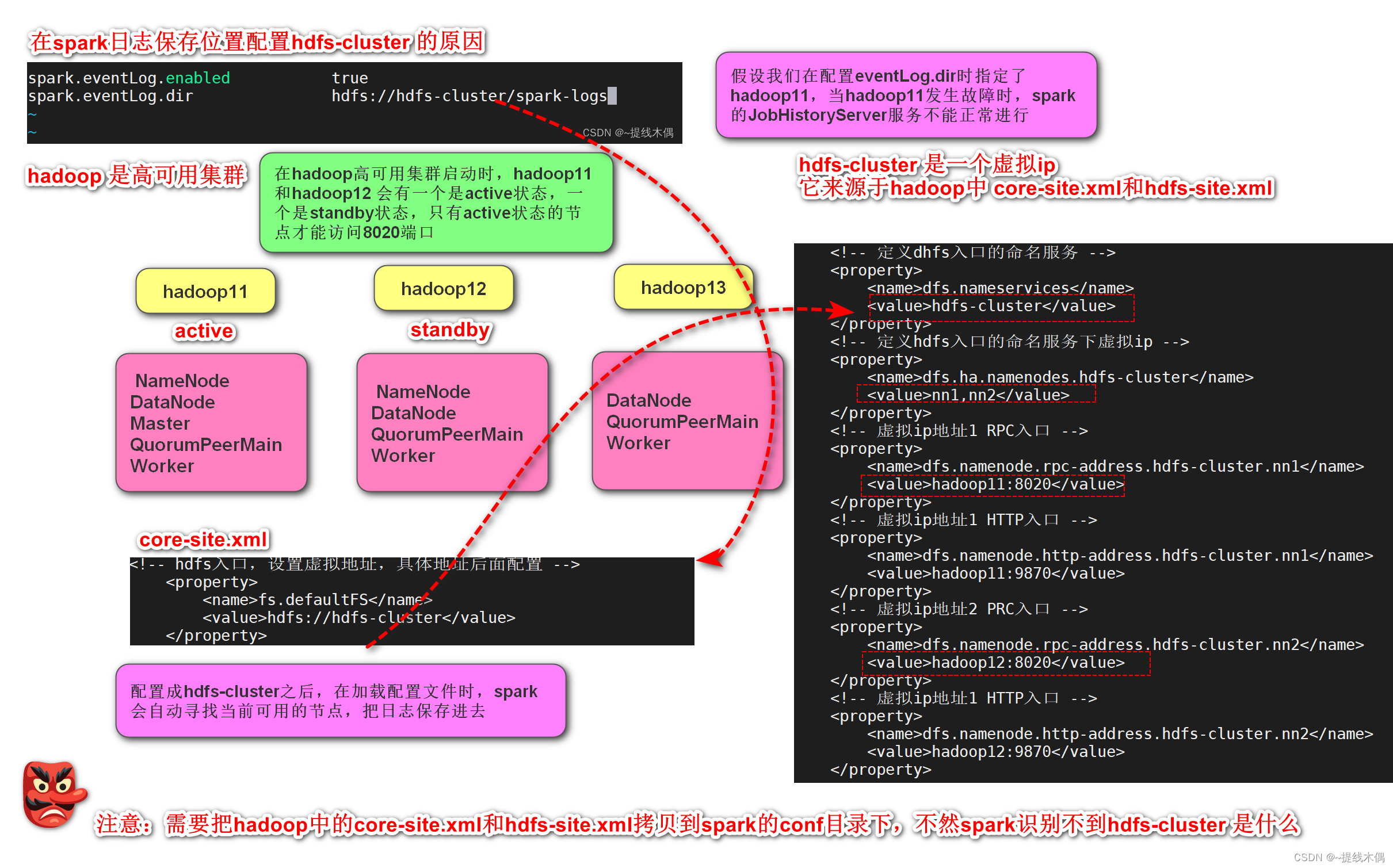

SPARK_HISTORY_OPTS="-Dspark.history.fs.logDirectory=hdfs://hdfs-cluster/spark-logs"

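Why hdfs-cluster is used here:

hdfs-cluster is the logical nameservice of the HA HDFS cluster, not a real host name. Either hadoop11 or hadoop12 may be the active NameNode at any given time, so the client has to find the currently active one dynamically in order to read and write data, and it can only do that when Hadoop's core-site.xml and hdfs-site.xml are available. For the same reason, a Scala program that writes hdfs://hdfs-cluster/... instead of a concrete host name needs these two files copied into its resources directory.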
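To make the explanation concrete: the client resolves the logical name through HA properties in hdfs-site.xml. The hypothetical Scala sketch below models that lookup with a plain Map. The property names (`dfs.ha.namenodes.<nameservice>` and `dfs.namenode.rpc-address.<nameservice>.<id>`) are the standard HDFS HA keys, but the NameNode IDs `nn1`/`nn2` are assumptions; check the actual values in your own hdfs-site.xml.

```scala
// Hypothetical model of how hdfs://hdfs-cluster/... is resolved: the logical name only
// maps to real NameNode addresses through the HA properties in hdfs-site.xml.
object NameserviceResolve {

  // Candidate NameNode RPC addresses for a nameservice, in configured order.
  def candidates(conf: Map[String, String], nameservice: String): List[String] =
    conf.get(s"dfs.ha.namenodes.$nameservice").toList
      .flatMap(_.split(",").map(_.trim))
      .flatMap(id => conf.get(s"dfs.namenode.rpc-address.$nameservice.$id"))

  def main(args: Array[String]): Unit = {
    // Assumed hdfs-site.xml contents; nn1/nn2 are illustrative IDs.
    val hdfsSite = Map(
      "dfs.nameservices" -> "hdfs-cluster",
      "dfs.ha.namenodes.hdfs-cluster" -> "nn1,nn2",
      "dfs.namenode.rpc-address.hdfs-cluster.nn1" -> "hadoop11:8020",
      "dfs.namenode.rpc-address.hdfs-cluster.nn2" -> "hadoop12:8020"
    )
    // The real client's failover proxy provider (dfs.client.failover.proxy.provider.<nameservice>)
    // tries these addresses until it reaches the active NameNode.
    println(candidates(hdfsSite, "hdfs-cluster"))
  }
}
```

Without hdfs-site.xml on the classpath, none of these keys exist, so the client cannot turn hdfs-cluster into a host and fails.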

(2) Copy the Hadoop configuration files into Spark's conf directory

[root@hadoop11 conf]# cp /opt/installs/hadoop3.1.4/etc/hadoop/core-site.xml ./

[root@hadoop11 conf]# cp /opt/installs/hadoop3.1.4/etc/hadoop/hdfs-site.xml ./

[root@hadoop11 conf]# ll

total 44

-rw-r--r--. 1 root root 1289 Aug 16 11:10 core-site.xml

-rw-r--r--. 1 1000 1000 996 May 1 2019 docker.properties.template

-rw-r--r--. 1 1000 1000 1105 May 1 2019 fairscheduler.xml.template

-rw-r--r--. 1 root root 3136 Aug 16 11:10 hdfs-site.xml

-rw-r--r--. 1 1000 1000 2025 May 1 2019 log4j.properties.template

-rw-r--r--. 1 1000 1000 7801 May 1 2019 metrics.properties.template

-rw-r--r--. 1 1000 1000 883 Aug 16 10:47 slaves

-rw-r--r--. 1 1000 1000 1396 Aug 16 11:03 spark-defaults.conf

-rwxr-xr-x. 1 1000 1000 4357 Aug 16 11:05 spark-env.sh

7. Distribute to the cluster

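Distribute Spark to hadoop12 and hadoop13 (run from /opt/installs on hadoop11):

myscp.sh ./spark/ /opt/installs/

-- myscp.sh is a custom script, listed below. It only reads its first argument and copies that path to the same directory on hadoop12 and hadoop13, so the trailing /opt/installs/ is ignored.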

[root@hadoop11 installs]# cat /usr/local/sbin/myscp.sh

#!/bin/bash

# Use pcount to record the number of arguments passed to the script

pcount=$#

if ((pcount == 0))

then

  echo no args;

  exit;

fi

pname=$1

# Get the actual file name fname from the given path pname

fname=`basename $pname`

echo "$fname"

# Get the absolute directory of pname; if it is a symbolic link, cd -P resolves the real path

pdir=`cd -P $(dirname $pname); pwd`

# Get the current user name

user=`whoami`

for((host=12;host<=13;host++))

do

  echo "scp -r $pdir/$fname $user@hadoop$host:$pdir"

  scp -r $pdir/$fname $user@hadoop$host:$pdir

done
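For reference, the loop in myscp.sh expands to one `scp -r` per target host. A hypothetical Scala counterpart (the object name and the `--run` flag are made up for illustration) that builds the same commands, prints them, and only executes them when `--run` is given:

```scala
import scala.sys.process._

// Hypothetical Scala counterpart of myscp.sh: one `scp -r <dir>/<name> <user>@<host>:<dir>`
// per target host, printed first and executed only on request.
object MyScp {

  def commands(user: String, dir: String, name: String, hosts: Seq[String]): Seq[Seq[String]] =
    hosts.map(h => Seq("scp", "-r", s"$dir/$name", s"$user@$h:$dir"))

  def main(args: Array[String]): Unit = {
    val hosts = (12 to 13).map(n => s"hadoop$n") // same range as the bash for-loop
    val cmds = commands(System.getProperty("user.name"), "/opt/installs", "spark", hosts)
    cmds.foreach { cmd =>
      println(cmd.mkString(" "))
      if (args.contains("--run")) {
        val exitCode = cmd.! // runs scp, output goes to the console, returns the exit code
        println(s"exit code: $exitCode")
      }
    }
  }
}
```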

Check that spark now exists on hadoop12 and hadoop13:

hadoop12

[root@hadoop12 ~]# cd /opt/installs/

[root@hadoop12 installs]# ll

total 4

drwxr-xr-x. 11 root root 173 May 30 19:59 hadoop3.1.4

drwxr-xr-x. 8 10 143 255 Mar 29 2018 jdk1.8

drwxr-xr-x. 3 root root 18 May 30 20:30 journalnode

drwxr-xr-x. 8 root root 117 Aug 3 10:06 kafka3.0

drwxr-xr-x. 13 root root 211 Aug 16 11:13 spark

drwxr-xr-x. 11 root root 4096 May 30 06:39 zookeeper3.4.6

hadoop13

[root@hadoop13 ~]# cd /opt/installs/

[root@hadoop13 installs]# ll

total 4

drwxr-xr-x. 11 root root 173 May 30 19:59 hadoop3.1.4

drwxr-xr-x. 8 10 143 255 Mar 29 2018 jdk1.8

drwxr-xr-x. 3 root root 18 May 30 20:30 journalnode

drwxr-xr-x. 8 root root 117 Aug 3 10:06 kafka3.0

drwxr-xr-x. 13 root root 211 Aug 16 11:13 spark

drwxr-xr-x. 11 root root 4096 May 30 06:39 zookeeper3.4.6

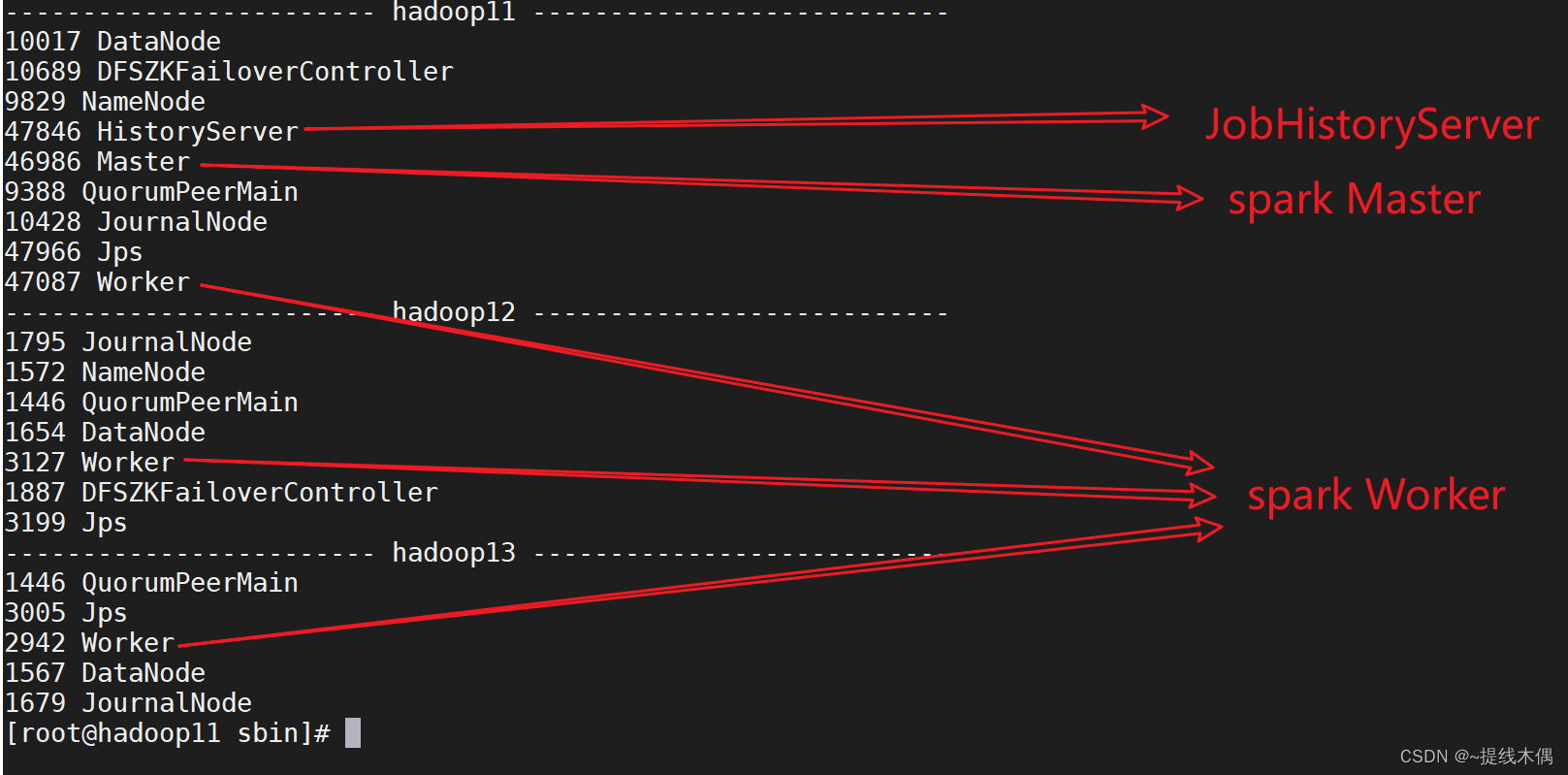

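III. Start Spark

Run these commands on the machine that hosts the Master (hadoop11).

[root@hadoop11 installs]# cd spark/sbin/

# Start the standalone cluster: the Master plus a Worker on every host in slaves. Use ./start-all.sh explicitly, because Hadoop ships a script with the same name.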

[root@hadoop11 sbin]# ./start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /opt/installs/spark/logs/spark-root-org.apache.spark.deploy.master.Master-1-hadoop11.out

hadoop13: starting org.apache.spark.deploy.worker.Worker, logging to /opt/installs/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop13.out

hadoop12: starting org.apache.spark.deploy.worker.Worker, logging to /opt/installs/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop12.out

hadoop11: starting org.apache.spark.deploy.worker.Worker, logging to /opt/installs/spark/logs/spark-root-org.apache.spark.deploy.worker.Worker-1-hadoop11.out

# Start the JobHistoryServer

[root@hadoop11 sbin]# ./start-history-server.sh

starting org.apache.spark.deploy.history.HistoryServer, logging to /opt/installs/spark/logs/spark-root-org.apache.spark.deploy.history.HistoryServer-1-hadoop11.out

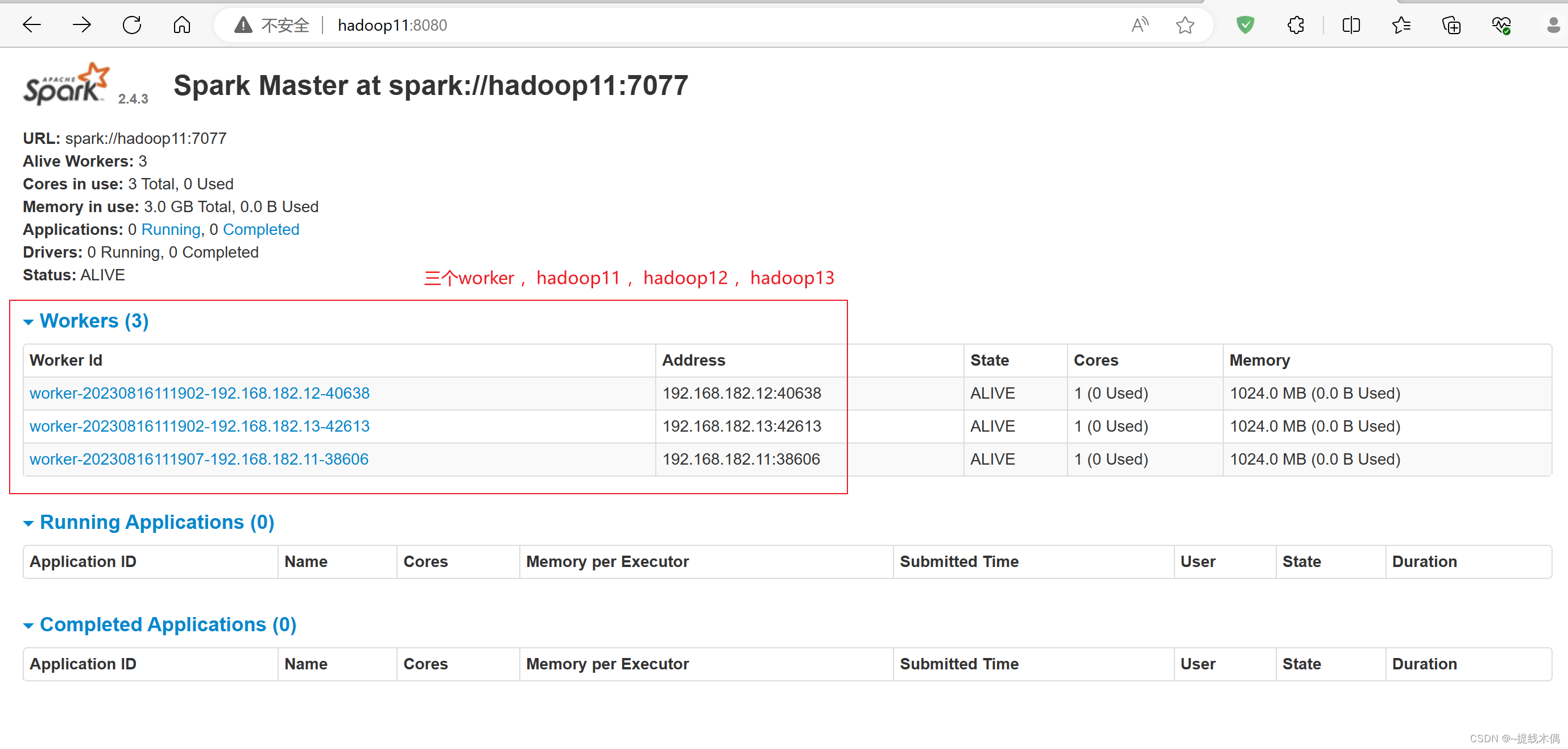

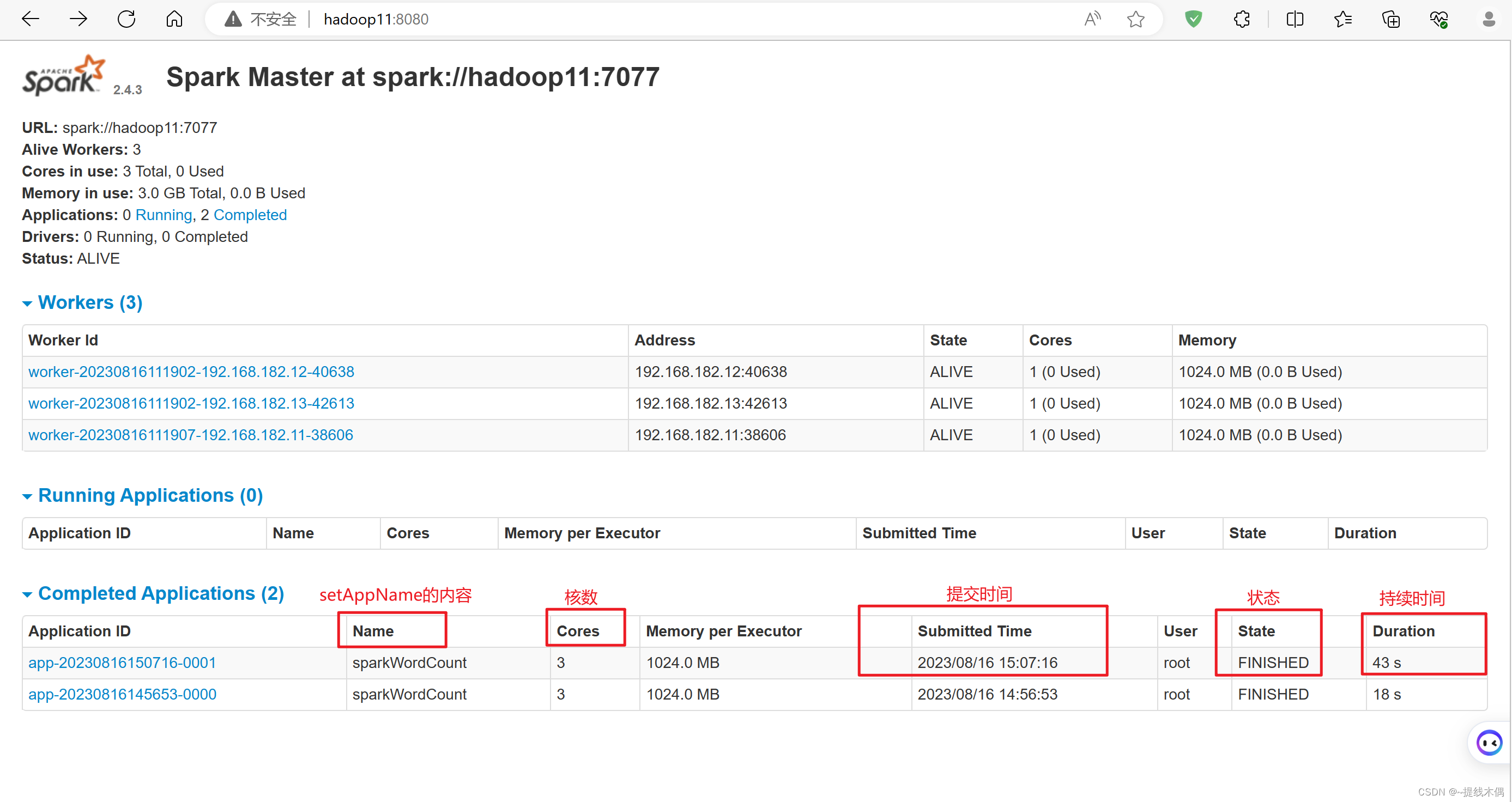

查看 web UI

查看spark的web端

訪問8080端口:

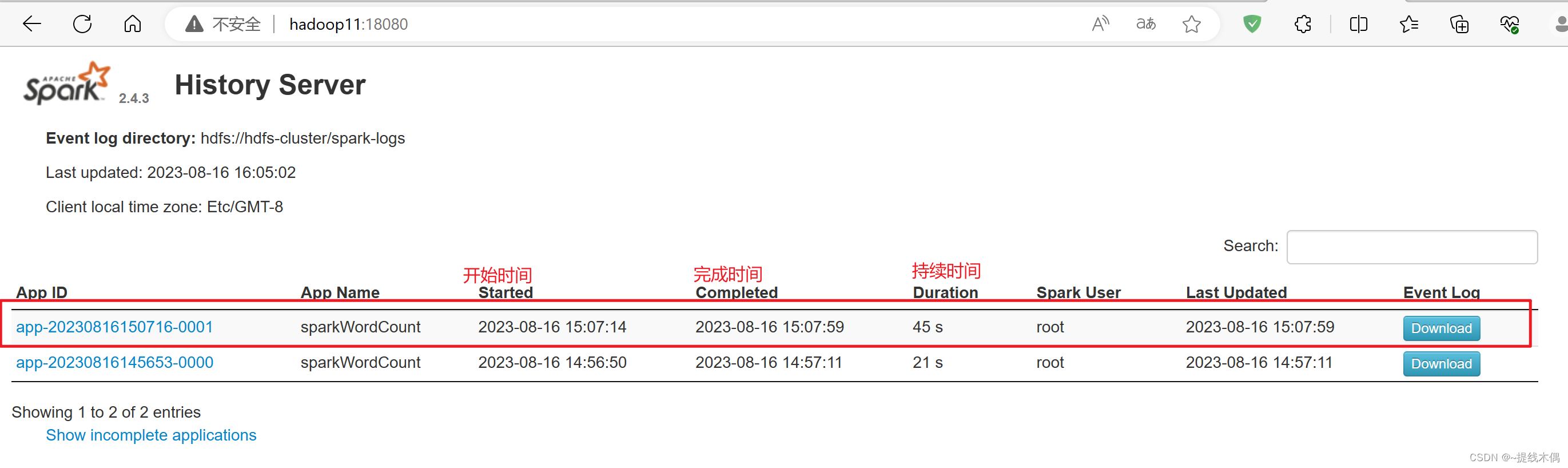

查看歷史服務

訪問18080端口:
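Besides the web page, the History Server exposes a REST API under `/api/v1`. A minimal Scala sketch, assuming the History Server runs on hadoop11 with the default port 18080, that fetches `/api/v1/applications` and prints the raw JSON (object and method names are hypothetical):

```scala
import scala.io.Source

// Hypothetical smoke test for the History Server REST API.
object HistoryApiCheck {

  // 18080 is the History Server's default port.
  def applicationsUrl(host: String, port: Int = 18080): String =
    s"http://$host:$port/api/v1/applications"

  def main(args: Array[String]): Unit = {
    val url = applicationsUrl(args.headOption.getOrElse("hadoop11"))
    val src = Source.fromURL(url, "UTF-8")
    try println(src.mkString) // an empty JSON array means no event logs have been found yet
    finally src.close()
  }
}
```

Pass a different host name as the first argument to query another machine.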

IV. First Use

1. Develop and deploy a Spark program with IntelliJ IDEA

(1) pom.xml

<dependencies>
  <!-- Spark dependency -->
  <dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.11</artifactId>
    <version>2.4.3</version>
  </dependency>
</dependencies>
<build>
  <extensions>
    <extension>
      <groupId>org.apache.maven.wagon</groupId>
      <artifactId>wagon-ssh</artifactId>
      <version>2.8</version>
    </extension>
  </extensions>
  <plugins>
    <plugin>
      <groupId>org.codehaus.mojo</groupId>
      <artifactId>wagon-maven-plugin</artifactId>
      <version>1.0</version>
      <configuration>
        <!-- Location of the local jar to upload -->
        <fromFile>target/${project.build.finalName}.jar</fromFile>
        <!-- Remote copy destination -->
        <url>scp://root:root@hadoop11:/opt/jars</url>
      </configuration>
    </plugin>
    <!-- Compile and package the Scala sources of the Maven project -->
    <plugin>
      <groupId>net.alchim31.maven</groupId>
      <artifactId>scala-maven-plugin</artifactId>
      <version>4.0.1</version>
      <executions>
        <execution>
          <id>scala-compile-first</id>
          <phase>process-resources</phase>
          <goals>
            <goal>add-source</goal>
            <goal>compile</goal>
          </goals>
        </execution>
      </executions>
    </plugin>
  </plugins>
</build>

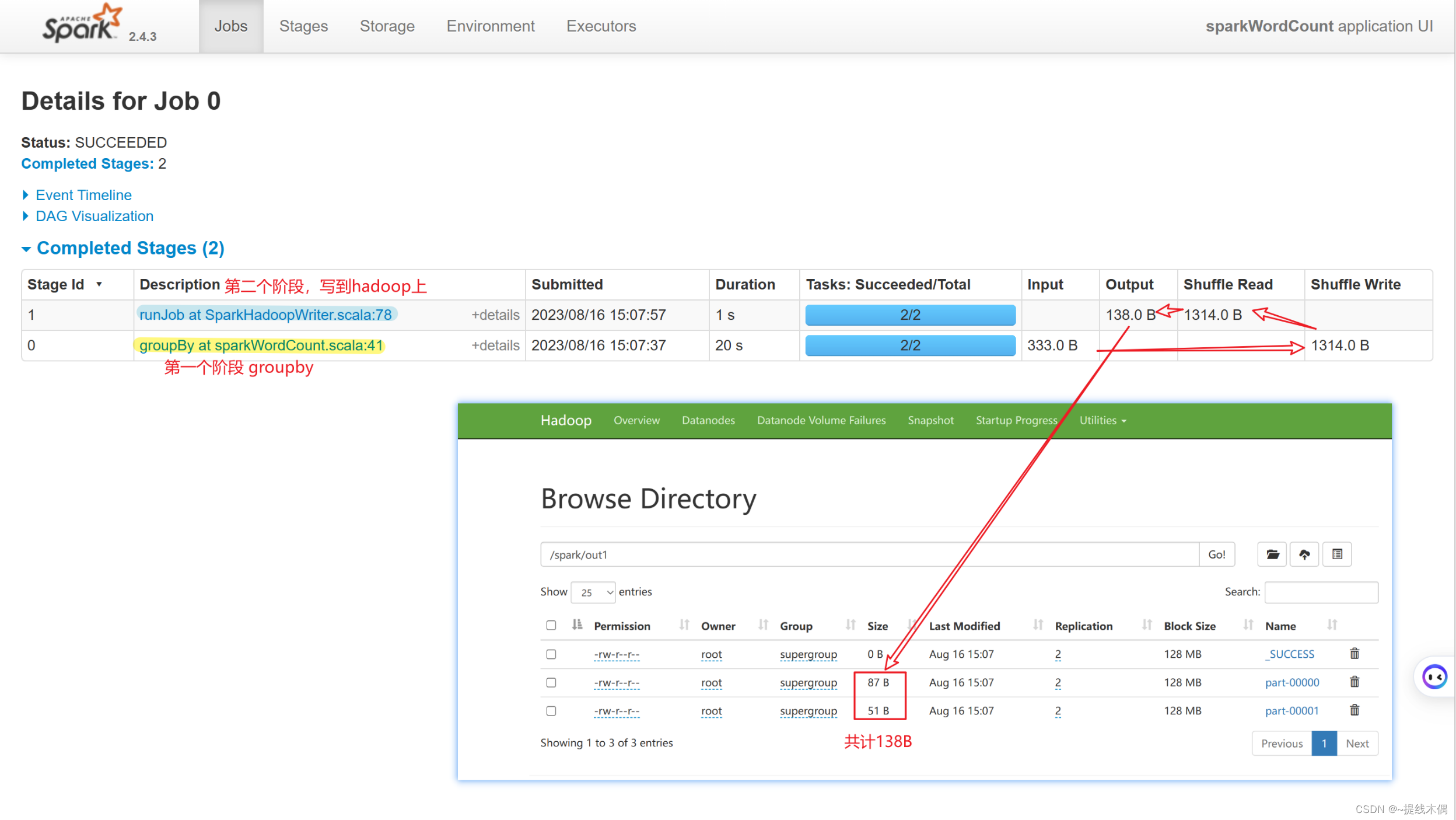

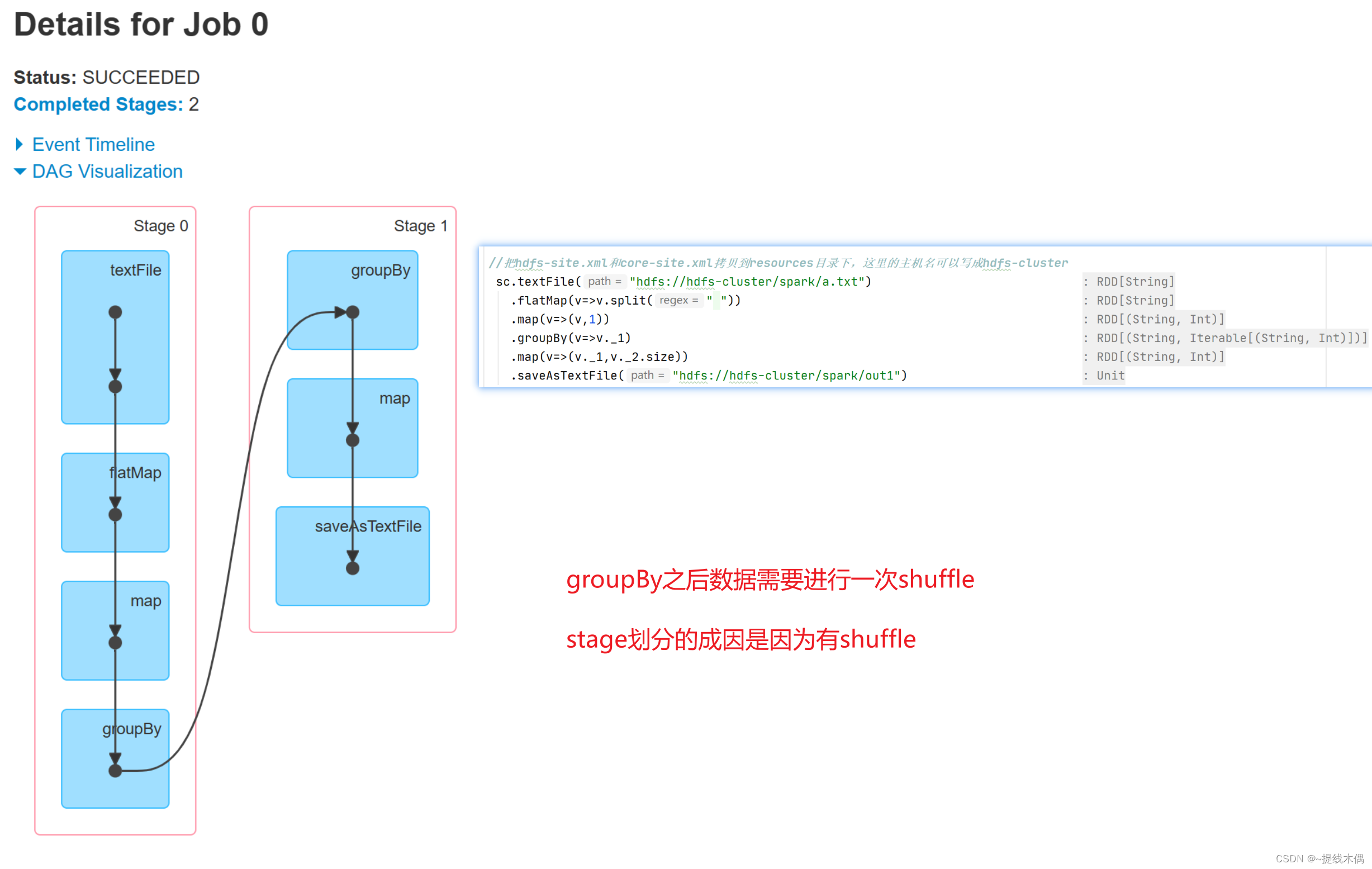

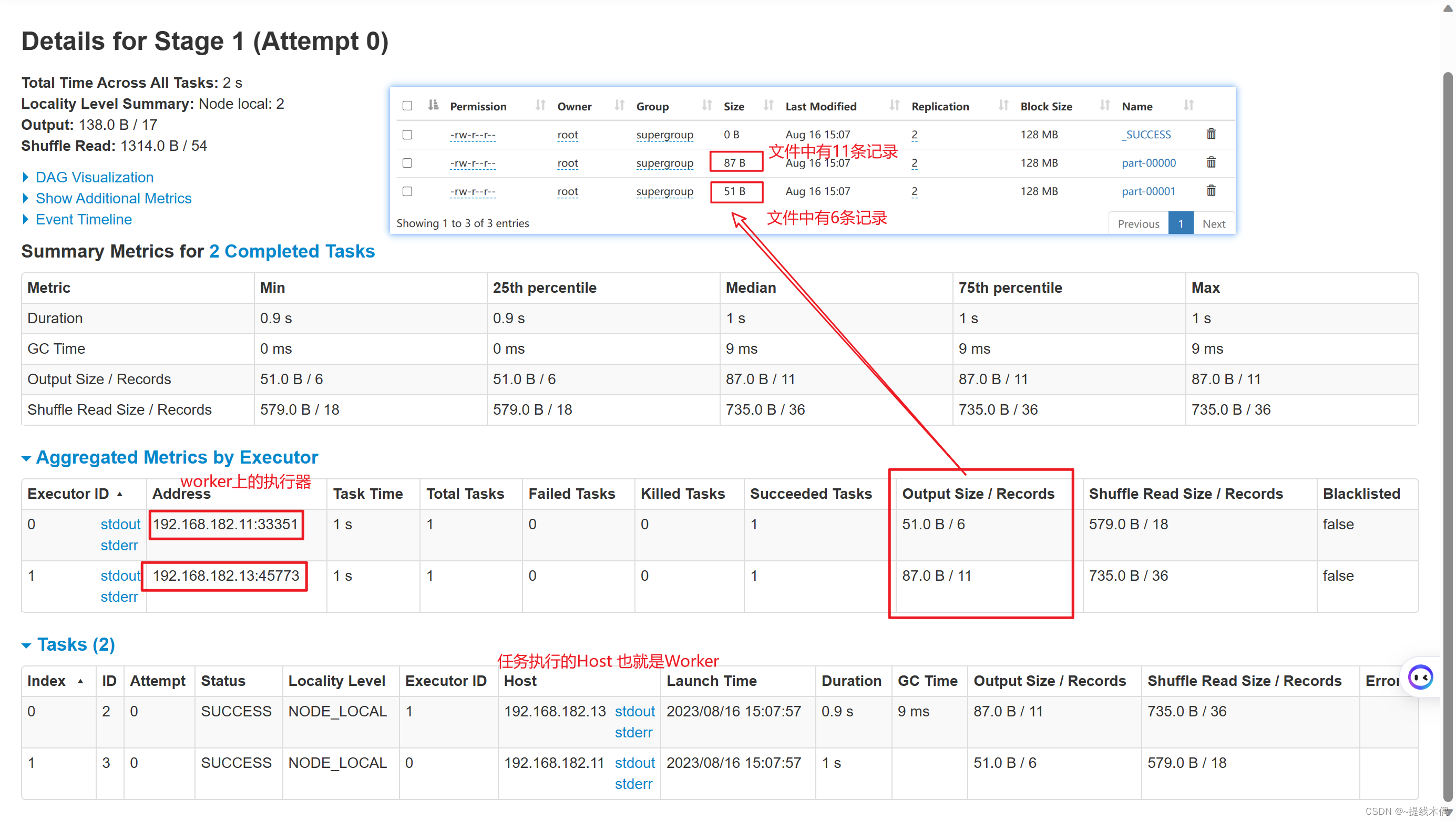

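(2) sparkWordCount.scala

package day1

import org.apache.spark.{SparkConf, SparkContext}

object sparkWordCount {
  def main(args: Array[String]): Unit = {
    // 1. Create the SparkContext
    val conf = new SparkConf().setMaster("spark://hadoop11:7077").setAppName("sparkWordCount")
    val sc = new SparkContext(conf)
    // 2. Word count: split lines into words, count each word, save the result.
    //    hdfs://hadoop11:8020 only works while hadoop11 is the active NameNode,
    //    and the output directory out1 must not exist yet.
    sc.textFile("hdfs://hadoop11:8020/spark/a.txt")
      .flatMap(v => v.split(" "))
      .map(v => (v, 1))
      .groupBy(v => v._1)
      .map(v => (v._1, v._2.size))
      .saveAsTextFile("hdfs://hadoop11:8020/spark/out1")
    /*
    // With hdfs-site.xml and core-site.xml copied into the resources directory,
    // the HA nameservice hdfs-cluster can be used instead of a host name:
    sc.textFile("hdfs://hdfs-cluster/spark/a.txt")
      .flatMap(v => v.split(" "))
      .map(v => (v, 1))
      .groupBy(v => v._1)
      .map(v => (v._1, v._2.size))
      .saveAsTextFile("hdfs://hdfs-cluster/spark/out1")
    */
    // 3. Release resources
    sc.stop()
  }
}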
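Before submitting to the cluster, the transformation chain can be checked locally. This hypothetical sketch applies the same flatMap/map/groupBy steps to an in-memory Seq of lines using plain Scala collections, so it needs no Spark dependency:

```scala
// Hypothetical local check of the word-count logic, using Scala collections instead of an RDD.
object WordCountLogic {

  def wordCount(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))                     // lines -> words
      .map(w => (w, 1))                          // word -> (word, 1)
      .groupBy(_._1)                             // group the pairs by word
      .map { case (w, pairs) => (w, pairs.size) } // count the pairs in each group

  def main(args: Array[String]): Unit =
    wordCount(Seq("hello spark", "hello hdfs")).toSeq.sortBy(_._1).foreach(println)
}
```

On an RDD, `reduceByKey(_ + _)` after the `map` step produces the same counts and combines values within each partition before the shuffle, which is usually cheaper than `groupBy`.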

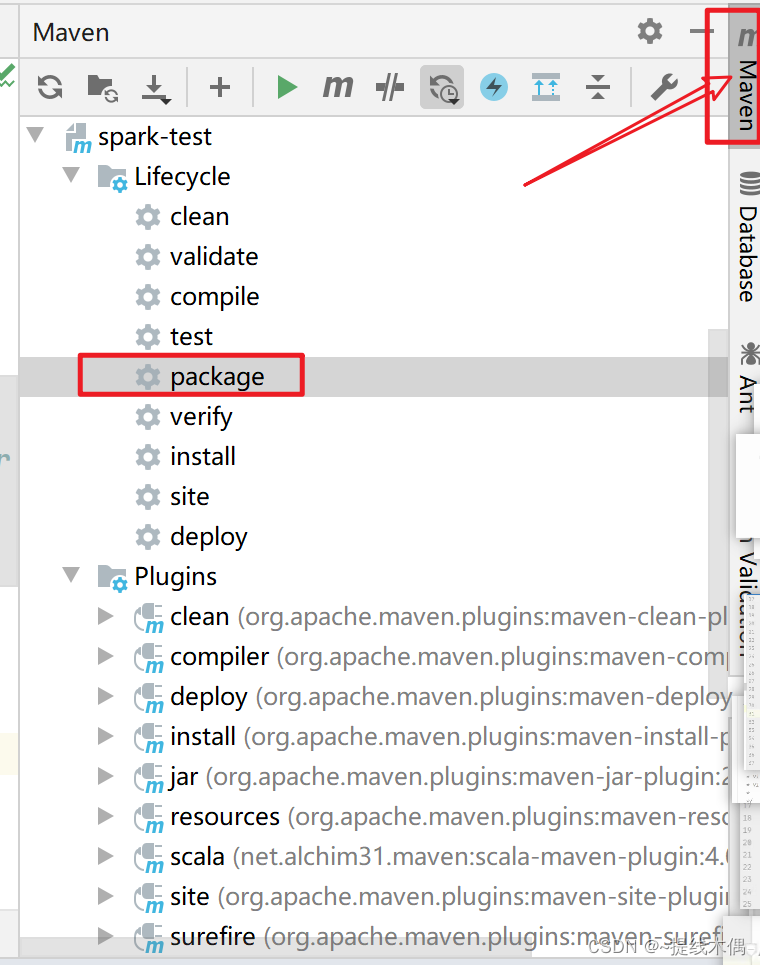

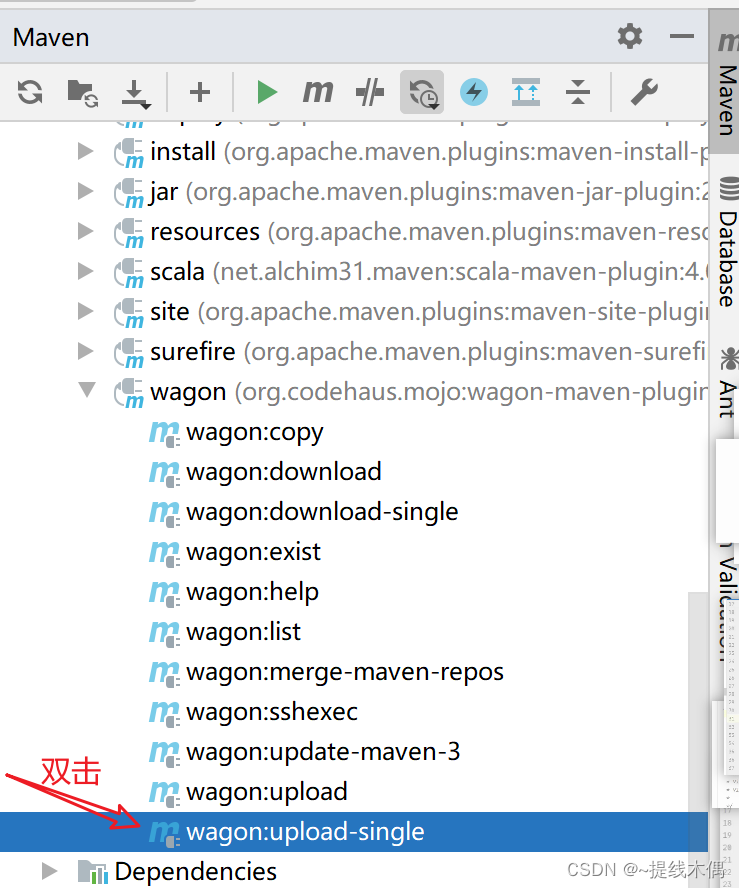

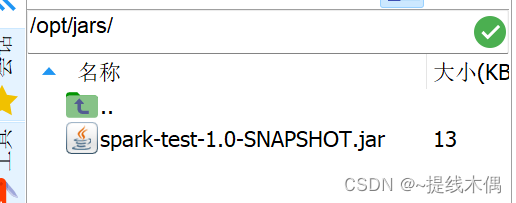

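(3) Package and upload

First create a jars directory under /opt on hadoop11; it is the upload destination (scp://...@hadoop11:/opt/jars) configured in pom.xml.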

[root@hadoop11 hadoop]# cd /opt/

[root@hadoop11 opt]# mkdir jars

[root@hadoop11 opt]# ll

total 0

drwxr-xr-x. 9 root root 127 Aug 16 10:39 installs

drwxr-xr-x. 2 root root 6 Aug 16 14:05 jars

drwxr-xr-x. 3 root root 179 Aug 16 10:33 modules

[root@hadoop11 opt]# cd jars/

(4) Run the jar

spark-submit --master spark://hadoop11:7077 --class day1.sparkWordCount /opt/jars/spark-test-1.0-SNAPSHOT.jar

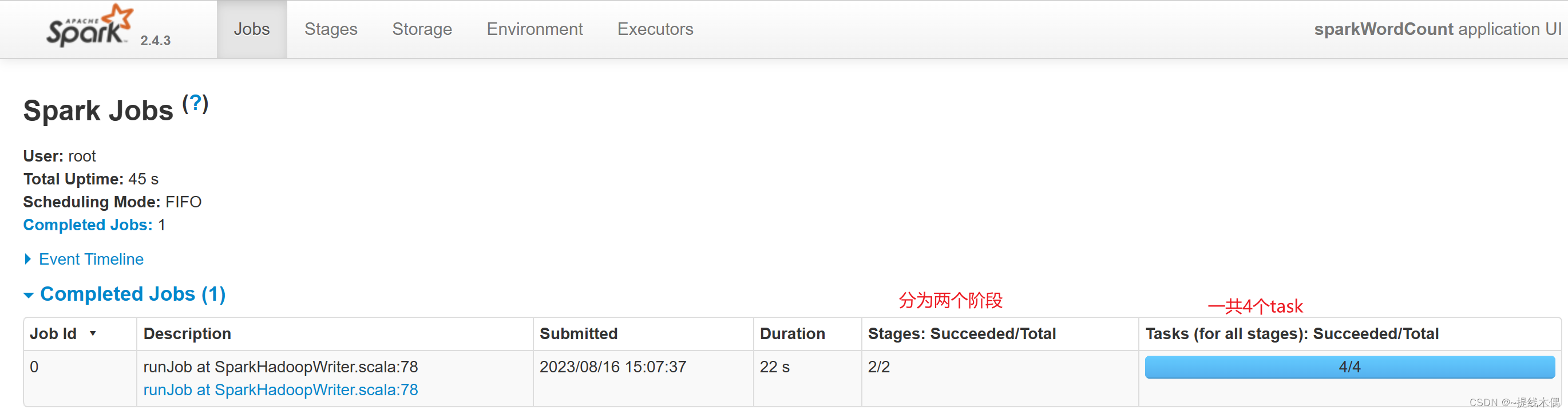

Check port 8080:

Check port 18080:
