The Effectiveness of Feed-Forward Neural Networks in Trend-Based Trading (1)

This is a preliminary showcase of collaborative research by Seouk Jun Kim (Daniel) and Sunmin Lee. You can find our contact details at the bottom of the article.

Note from Towards Data Science’s editors: While we allow independent authors to publish articles in accordance with our rules and guidelines, we do not endorse each author’s contribution. You should not rely on an author’s works without seeking professional advice. See our Reader Terms for details.

With the interest in artificial intelligence on the rise, numerous people have attempted to apply machine learning techniques in predicting the market, especially in the field of high-frequency trading using stock price time series data.

On Medium alone there are dozens of posts on stock price prediction using RNNs, LSTMs, GRUs, feed-forward neural nets, and so on. Predicting the market, however, is no trivial task: most published attempts fall short of the performance that a strategy based on the model's predictions would need in order to succeed. In most posts the outcome is either a perfect prediction (an indicator that the writer has definitely done something wrong) or a dismal result that discourages any further research.

Aldridge and Avellaneda (2019), however, show that there is hope in using neural networks to predict returns. While the paper clearly demonstrates the limitations of a simple neural net, it also shows that through careful selection of the training period and input data, a simple strategy based on neural net predictions could outperform the buy-and-hold strategy.

Our research aims to reproduce the results of Aldridge and Avellaneda (2019) while also taking more time to explore the financial theory behind them. In the end we will use the validated results to introduce more robust prediction models and strategies.

In this article, we discuss our early failures. More specifically, we train a feed-forward neural network on a fixed-window time-series input, explain the hypothesis behind why such training should work, and then explore why that hypothesis fails.

Rationale Behind Stock Price Trend-Based Analysis

Since there are countless Medium posts explaining the effectiveness and limitations of neural nets, we will cut to the chase and discuss why stock price trend-based analysis might work. That is, past stock price data plus a few other data points might hold enough information to provide meaningful predictions.

Pedersen (2015) describes the life cycle of a trend as the following:

- Start of the Trend: Underreaction to Information

- Trend Continuation: Delayed Overreaction

- End of Trend (Reversion to Fundamental Value)

Pedersen (2015) discusses trend-following investing as a strategy for managed futures, but some of the ideas could perhaps be applied to our case here.

Although the strongest form of the efficient market hypothesis states that the market instantaneously incorporates all information, both public and undisclosed, research such as Ho et al. (2019) has shown that people underreact even to publicly available data. Likewise, overreaction caused by the “herding” effect and by events such as margin calls during a market crisis could move the market price away from the asset’s fundamental value.

It is true that many of the market’s inefficiencies have already been exploited, which in turn has made the market more efficient. This is where the reason for using a neural net comes in. As underlined by Aldridge and Avellaneda (2019) and QRAFT (2020), the ability to detect nonlinear patterns in data could be essential to finding financial patterns that have not been detected before. For example, Aldridge and Avellaneda (2019) cautiously attribute the superb performance of a neural net prediction operating on both the SPY’s and the target asset’s stock price data to the ability of neural nets to detect nonlinear patterns, overcoming the limitations of the famous CAPM’s dependence on linearity.

Theoretical Assumption

The hypothesis we held at the start of the research was that a neural network trained on a large amount of stock price data would be able to detect the stock’s deviation from its fundamental value. Although stock price data contains a great deal of noise and bias, we assumed that a large amount of training data would allow the neural net to train properly.

Goal and Acknowledgment of the Difficulty

The goal of the research was to beat the buy-and-hold strategy. As we were focused on uncovering the ability or inability of the neural net to make accurate future price predictions, we restricted the emulated trading strategy to a single asset. In hindsight, this was an incredibly ambitious project: beating the buy-and-hold strategy for one given asset while using only minimal financial data would promise phenomenal returns if expanded to a diverse portfolio. However, Aldridge and Avellaneda (2019) already show that stock data from only two assets (the target asset and the SPY) is sufficient to beat the buy-and-hold strategy with a relatively simple feed-forward neural net. So while this method might not work for every asset, it could work for certain ones.

Pitfalls To Avoid

So before we go on…

There are common pitfalls observable in too many Medium posts on time-series analysis for stock price prediction:

- Standardizing/normalizing the entire dataset at once, instead of fitting the scaler on the training data only

- Training on the whole dataset and then testing on data the model has already seen

- Selecting the input/output incorrectly, thereby producing a seemingly perfect prediction that is in fact just the input with a time lag (in most cases, by training the model to predict stock prices rather than returns)

While our research is not perfect, you can be assured that it avoids the pitfalls mentioned above.

Trading Strategy for Testing

As mentioned above, our test trading strategy does not create a portfolio; we use one asset’s return prediction to make an all-out-buy/sell decision.

- If the return prediction for the next trading period is positive, buy as much of the asset as possible with the available cash.

- If the return prediction for the next trading period is negative, liquidate everything.

In theory, a well-trained neural net will be able to beat the buy-and-hold position with this strategy. The key factors that would enable excellent returns are:

- Keep up with the increasing price of the stock during a bull market.

- Avoid the market crash, and ride the wave when the market picks up again.

We selected such a simple trading strategy for three reasons: first, it makes the performance of the strategy more directly attributable to the prediction model; second, we lack more sophisticated financial knowledge; and third, as a soldier with a 400-dollar monthly income and a university student, we cannot afford the computation power required for backtesting complex portfolio management.

Also note that we assume the risk-free rate is zero and that we have not incorporated transaction costs in our backtests.
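
The all-in/all-out rule above can be sketched in a few lines of Python. This is a minimal illustration and not the code behind the backtests in this article: the function name `backtest_all_in_all_out`, the toy return series, the use of fractional shares, and the choice to stay in cash when the prediction is exactly zero are all assumptions made for the example. In line with the text, it ignores transaction costs and earns nothing on cash.

```python
import numpy as np

def backtest_all_in_all_out(period_returns, predicted_returns):
    """Compound wealth under the all-in / all-out rule (hypothetical helper).

    period_returns[i]    : realized return of the asset over period i
    predicted_returns[i] : the model's predicted return for period i
    Assumes fractional shares, no transaction costs, and zero return on cash.
    """
    period_returns = np.asarray(period_returns, dtype=float)
    predicted_returns = np.asarray(predicted_returns, dtype=float)
    # Hold the asset only when the predicted return is positive
    in_market = predicted_returns > 0
    strategy_returns = np.where(in_market, period_returns, 0.0)
    # Wealth of 1 unit of cash compounded through each period
    wealth = np.cumprod(1.0 + strategy_returns)
    return strategy_returns, wealth

# Toy data (assumption): four periods of realized and predicted returns
realized = [0.02, -0.05, 0.03, -0.01]
predicted = [0.01, -0.02, 0.02, 0.01]

strategy_returns, wealth = backtest_all_in_all_out(realized, predicted)
buy_and_hold = np.cumprod(1.0 + np.asarray(realized))
print("strategy:", wealth[-1], "buy-and-hold:", buy_and_hold[-1])
```

In this toy series the rule sidesteps the one large loss and ends above buy-and-hold; with real predictions nothing guarantees that outcome.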

Training the Fixed-Window Prediction Model

Those familiar with the subject might scoff as they read the specifications of the prediction model discussed here, as it is quite obvious that such a model should not work. However, the failure of this model does provide key insights and helps eliminate false assumptions, so it is worth exploring.

Assumptions

- Training a feed-forward neural net on a fixed 10 to 20 years of asset return data should enable the model to detect notable trends.

- The detected trends can be applied to predict future asset returns.

Data Pre-processing

I used yfinance to collect Yahoo Finance historical data. For ease of understanding, I will introduce three terms that I used in the process.

- Training period: the span of time from which training data is collected

- Training size: the number of trading days in each window from which one input sample (one row of train_x) to the neural net is assembled

- Prediction period: the number of trading days we want to look ahead

For the example here we use a training period of roughly 17 years (trading days between June 30, 1993 and June 30, 2010), a training size of 100 days, and a prediction period of 21 days (that is, we make monthly return predictions). The chosen asset is the SPY ETF. We only consider monthly returns as training data.

Using a pandas DataFrame makes the pre-processing quite trivial. (Note that the description below treats the input and output as NumPy arrays, not tensors.)

1. Shift the closing price by (-1) * prediction_period.

2. Calculate the forward return for each trading day using the shifted closing price.

3. Eliminate trading days with null values, then extract a list of lists of forward returns, each inner list of length training_size. This is our train_x.

4. For each list of returns (each list within train_x), the target return is the monthly forward return of the trading day that lies one prediction_period after the latest date included in the list.

Then use the MinMaxScaler from sklearn and call scaler.fit_transform on the input data.

As you might notice, steps 3 and 4 could be modified so that, instead of forward returns, historical returns [(close_t / close_(t - prediction_period)) - 1] are used to the same effect.
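
The pre-processing steps above can be sketched as follows. This is a sketch under stated assumptions rather than the exact code used for the research: a synthetic random-walk price series stands in for the yfinance download, and the helper name `build_windows` and the column names are hypothetical.

```python
import numpy as np
import pandas as pd

def build_windows(close, training_size=100, prediction_period=21):
    """Turn a closing-price series into (train_x, train_y) NumPy arrays."""
    df = pd.DataFrame({"close": np.asarray(close, dtype=float)})
    # Step 1: shift the closing price by (-1) * prediction_period
    df["future_close"] = df["close"].shift(-prediction_period)
    # Step 2: forward return of each trading day
    df["fwd_return"] = df["future_close"] / df["close"] - 1.0
    # Step 3: drop days without a forward return, then cut fixed-size windows
    fwd = df["fwd_return"].dropna().to_numpy()
    xs, ys = [], []
    last_end = len(fwd) - prediction_period  # the target must still exist
    for end in range(training_size - 1, last_end):
        xs.append(fwd[end - training_size + 1 : end + 1])
        # Step 4: the target is the forward return one prediction_period
        # after the latest day in the window
        ys.append(fwd[end + prediction_period])
    return np.array(xs), np.array(ys)

# Synthetic prices (assumption): a random walk instead of real SPY data
rng = np.random.default_rng(0)
close = 100.0 * np.cumprod(1.0 + rng.normal(0.0, 0.01, size=300))

train_x, train_y = build_windows(close)
print(train_x.shape, train_y.shape)
```

Note that the last forward return in each window ends at the same closing price at which the target return starts, so the target never overlaps the inputs.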

You may also be wondering why we chose to use the daily closing price and not the adjusted closing price. We assumed that the closing price would be ‘safer’ to use than the adjusted closing price, considering the possible look-ahead bias. Others assert that the adjusted closing price is more appropriate when backtesting on returns (not price!); this is something we are continuing to look into. Through feature engineering, we hope to minimize both survivorship bias and look-ahead bias in the near future.

Then what of the test set? We followed the same process detailed above, except that the data was gathered from a period of about 10 years (trading days between July 1, 2010 and July 1, 2020), and that when scaling the test input we used scaler.transform, not scaler.fit_transform, to avoid data leakage.
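
A minimal sketch of the leakage-free scaling described above, assuming scikit-learn's MinMaxScaler with its default settings. The tiny arrays are made-up values standing in for train_x and the test input.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up stand-ins for the real train/test inputs (assumption)
train_x = np.array([[0.00, -0.10],
                    [0.20,  0.10],
                    [0.10,  0.00]])
test_x = np.array([[0.30, -0.20]])

scaler = MinMaxScaler()
# Learn each column's min and max from the training data only
train_scaled = scaler.fit_transform(train_x)
# Reuse the training min/max on the test data; do NOT refit here
test_scaled = scaler.transform(test_x)

print(train_scaled.min(axis=0), train_scaled.max(axis=0))
print(test_scaled)
```

Because the scaler only knows the training range, scaled test values can fall outside [0, 1]. That is expected, and it is the price of not peeking at the test period.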

Model Selection

As is obvious from the title of this article, we experimented with a feed-forward neural network.

To optimize the neural net and gain insight we tried numerous hyperparameters, activation functions, numbers of layers, and so on, but all the models share one feature.

In all models we use the tanh activation function for the final output layer. Because tanh returns a value between -1 and 1 while sigmoid returns only values between 0 and 1, tanh can represent both negative and positive returns and is the better choice for the output layer’s activation function. And since our strategy does not use leverage, there should be no case in which the actual return falls outside the range of tanh.
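
To make the structure concrete, here is a small NumPy sketch of a feed-forward net with one hidden layer, a tanh output, and a mean squared error loss, trained by plain gradient descent. The framework and exact architecture used for the experiments are not specified here, so everything beyond "tanh output layer" is an assumption: the hidden size of 8, the tanh hidden activation, the learning rate, and the toy data are illustrative only.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_params(n_inputs, n_hidden):
    """Small random weights for a one-hidden-layer network."""
    return {
        "W1": rng.normal(0.0, 0.1, (n_inputs, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.1, (n_hidden, 1)),
        "b2": np.zeros(1),
    }

def forward(params, x):
    """Return hidden activations and predictions in the open interval (-1, 1)."""
    hidden = np.tanh(x @ params["W1"] + params["b1"])  # hidden tanh: assumption
    output = np.tanh(hidden @ params["W2"] + params["b2"])  # tanh output layer
    return hidden, output[:, 0]

def train_step(params, x, y, lr=0.1):
    """One full-batch gradient-descent step on the MSE loss; returns the loss
    measured before the update."""
    hidden, pred = forward(params, x)
    loss = float(np.mean((pred - y) ** 2))
    d_pred = 2.0 * (pred - y) / len(y)               # dLoss/dPrediction
    d_z2 = (d_pred * (1.0 - pred ** 2))[:, None]     # back through output tanh
    grads = {"W2": hidden.T @ d_z2, "b2": d_z2.sum(axis=0)}
    d_z1 = (d_z2 @ params["W2"].T) * (1.0 - hidden ** 2)  # back through hidden tanh
    grads["W1"] = x.T @ d_z1
    grads["b1"] = d_z1.sum(axis=0)
    for name, grad in grads.items():
        params[name] -= lr * grad
    return loss

# Toy data (assumption): 64 samples, 5 scaled inputs, small return-like targets
x = rng.uniform(0.0, 1.0, (64, 5))
y = 0.05 * (x[:, 0] - x[:, 1])

params = init_params(n_inputs=5, n_hidden=8)
losses = [train_step(params, x, y) for _ in range(200)]
_, pred = forward(params, x)
print(losses[0], losses[-1])
```

Swapping the output tanh for a sigmoid would confine every prediction to (0, 1), so the network could never predict a negative return.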

Backtesting

One hidden layer, Mean squared error loss function

Aldridge and Avellaneda (2019) show that, quite surprisingly, a simple neural net with a single hidden layer has enough computational power to emulate a crossover moving average strategy. It is less clear, however, whether Aldridge and Avellaneda (2019) maintain that same model structure throughout the paper, and it is only at the end of the paper that we see a successful instance of a prediction model that beats the buy-and-hold strategy.

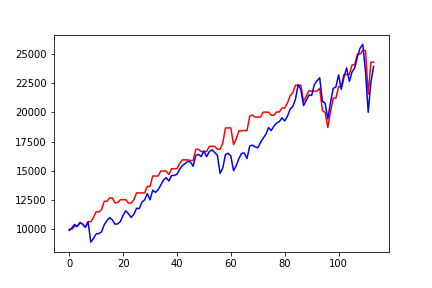

We therefore first tested performance with a neural net with one hidden layer. Quite surprisingly, the first backtest we ran with this model structure showed outstanding results.

A stunning Sharpe ratio of 0.903 (zero risk-free rate), compared to 0.750 (zero risk-free rate) for the buy-and-hold position in the SPY ETF. Impressive, right? What could be wrong?
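
For reference, a Sharpe ratio with a zero risk-free rate can be computed along the following lines. The return frequency and annualization behind the figures above are not specified here, so the `periods_per_year=12` default (monthly returns), the use of the sample standard deviation, and the toy return series are all assumptions.

```python
import numpy as np

def sharpe_ratio(returns, risk_free_rate=0.0, periods_per_year=12):
    """Annualized Sharpe ratio of a series of per-period returns.

    risk_free_rate is per period; it defaults to zero as in the text.
    """
    excess = np.asarray(returns, dtype=float) - risk_free_rate
    # Mean excess return per unit of volatility, scaled to one year
    return float(np.sqrt(periods_per_year) * excess.mean() / excess.std(ddof=1))

# Toy monthly returns (assumption)
monthly_returns = [0.01, 0.03, -0.01, 0.02]
print(sharpe_ratio(monthly_returns))
```

With a zero risk-free rate the ratio reduces to the mean return divided by its standard deviation, times the annualization factor.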

Sadly, there are many problems.

The first problem is that, as excellent as the graph above seems, such results are not readily reproducible. Running the same code several times shows that the graph above is the result of pure luck. Most runs of the code show a poor Sharpe ratio, poor returns, and so on, and at that point, tinkering with the model to achieve a result similar to the one above effectively turns the test set into a validation set, putting the credibility of any result in question.

The second and major problem is that the performance of the model above mainly comes from predicting a positive return and leeching off the buy-and-hold position in the SPY. To put it in numbers, the model trained for the graph above predicts a positive return 78% of the time, and when it predicts a negative return, it is wrong 62% of the time.

In other words, the model gets lucky: the 38% of the time it is right about a negative return, it nails the prediction at critical moments when the asset’s price fluctuation is high, and the rest of the time it simply holds on to the asset.
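
Diagnostics of this kind are easy to compute from the predicted and realized returns. The sketch below uses a hypothetical helper, `direction_diagnostics`, and made-up numbers; it does not reproduce the 78% and 62% figures quoted above.

```python
import numpy as np

def direction_diagnostics(predicted, realized):
    """Summarize how lopsided and how accurate the directional calls are."""
    predicted = np.asarray(predicted, dtype=float)
    realized = np.asarray(realized, dtype=float)
    predicted_up = predicted > 0
    predicted_down = ~predicted_up
    # A negative call counts as wrong when the realized return was positive
    if predicted_down.any():
        negative_calls_wrong = float((realized[predicted_down] > 0).mean())
    else:
        negative_calls_wrong = float("nan")
    return {
        "share_predicted_up": float(predicted_up.mean()),
        "negative_calls_wrong": negative_calls_wrong,
        "directional_accuracy": float((predicted_up == (realized > 0)).mean()),
    }

# Made-up example values (assumption)
predicted = [0.01, 0.02, -0.01, 0.03, -0.02]
realized = [0.02, -0.01, 0.01, 0.01, -0.03]
stats = direction_diagnostics(predicted, realized)
print(stats)
```

Checking these numbers before looking at the equity curve helps distinguish a model with real directional skill from one that mostly rides the asset's own drift.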

The last problem is that the training loss stops decreasing at a fairly high value. This perhaps should have been the first indicator that one hidden layer is not going to do the magic; we took it as a sign that the neural net lacks the capacity to fit the data properly, since otherwise the training loss would keep decreasing.

Multiple hidden layers, Mean squared error loss function

There’s really nothing to be said here. The model prediction is awful.

The model with multiple hidden layers shows more promise than the one with a single hidden layer, in that the predicted returns are not so lopsided toward either the positive or the negative side. That, in turn, just exposes how poor the model is at making useful predictions. Yikes.

Others

- Different training periods

- Custom loss functions

- Different assets

- A collection of data from different assets over the same training period

- Averaging the predictions of several models trained with the same hyperparameters and data to get consistent results

All the efforts above were to no avail and confirmed that a fixed-window prediction model built on a feed-forward neural net cannot beat the buy-and-hold position.

Wait, then are Aldridge and Avellaneda (2019) spreading false news?

No. The terrible performance of our backtests above is caused first and foremost by the deviation of our strategy from the one described by Aldridge and Avellaneda (2019). One such major deviation is the use of a fixed-window prediction model itself. From our reading of the paper, Aldridge and Avellaneda (2019) seem to have used a moving-window prediction model.

In fact, digging into the theoretical background suggests that a fixed-window prediction model is bound to fail at making useful predictions.

Why the Fixed-Window Prediction Model Failed

Lack of data

We had more than 4000 data points as input to the feed-forward neural net in the example above. In traditional machine learning research, this might seem like an ideal amount of data. That is not the case with our project, however.

It is important to consider what pattern we hope our neural net learns. Remember that we mentioned at the beginning of the article, under Rationale Behind Stock Price Trend-Based Analysis, that the neural net should be successful if it can detect the overreaction and underreaction of the market.

What we conclude here is not backed by any evidence, but it seems that the market deviates from the fundamental value of the asset in so many ways that it becomes impossible for the neural net to detect the specific trend-based pattern we are looking for. That is, the market is not as efficient as we thought it would be, which leaves too much noise in the data.

If certain patterns exist predominantly within the training period (June 30, 1993 to June 30, 2010), they could cause overfitting, which would hurt the prediction. Also, note that the pattern we hoped to find can be described as a universal pattern: it should exist within any time frame because, as of yet, people do overreact and underreact to market information.

The effectiveness of the contrarian strategy demonstrated by Khandani and Lo (2007) shows us that even quite recently there existed large inefficiencies in the market that persisted for more than a decade (a Sharpe ratio exceeding 50 was once achievable with a relatively simple strategy!). Taking this into consideration, properly training the neural net could require a century, if not multiple centuries, of raw data to get rid of persistent noise.

The conclusion can be stated as follows: if we want viable feed-forward neural net predictions, we need either immensely more data or more polished and engineered data (unlike the raw data we used here).

Another alternative is to change the trend pattern we are looking for: we stop looking for a universal pattern and instead go for the most recent pattern that we can detect. This is the idea behind moving-window prediction.
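
One way to picture moving-window prediction is as a sequence of train/test splits that slide forward in time, so each model only sees the most recent data. The generator below is a hypothetical sketch: the name `moving_window_splits`, the window lengths, and the step size are assumptions, and it is not the setup of Aldridge and Avellaneda (2019).

```python
def moving_window_splits(n_samples, train_length, test_length):
    """Yield (train_indices, test_indices) pairs that slide forward in time.

    Each split trains on the train_length most recent samples and tests on
    the test_length samples that immediately follow them.
    """
    start = 0
    while start + train_length + test_length <= n_samples:
        train_idx = range(start, start + train_length)
        test_idx = range(start + train_length,
                         start + train_length + test_length)
        yield train_idx, test_idx
        # Move the whole window forward by one test block
        start += test_length

splits = list(moving_window_splits(n_samples=10, train_length=4, test_length=2))
for train_idx, test_idx in splits:
    print(list(train_idx), "->", list(test_idx))
```

In practice a model would be refit on every training window, and with forward-looking targets a gap of one prediction period between training and test samples may be needed to keep the two from overlapping.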

Imbalance in data

Another problem with our selection of time period is that it is slightly more bullish than bearish. By just predicting every future return to be positive, the neural net could achieve a directional prediction accuracy near 56%.

This would not be a problem if it were mechanically possible to achieve a prediction accuracy higher than 56%. But what if the best prediction accuracy we could achieve through this method, when not sticking to one specific directional prediction, is below 56%? Then it makes sense for the neural net to predict every next return to be positive (between 0 and 1), as that might be a straightforward way to achieve the lowest loss.
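
The argument can be illustrated with a small sketch. When positive returns dominate, always predicting "up" already earns the baseline accuracy, and the single constant that minimizes the mean squared error is the mean of the targets, which is positive whenever the average return is positive. The helper names and the toy return series below are assumptions for illustration only.

```python
import numpy as np

def always_up_accuracy(realized):
    """Directional accuracy of predicting a positive return every time."""
    return float((np.asarray(realized, dtype=float) > 0).mean())

def mse_of_constant(realized, constant):
    """Mean squared error of predicting the same constant for every sample."""
    realized = np.asarray(realized, dtype=float)
    return float(np.mean((realized - constant) ** 2))

# Toy returns (assumption): 6 of 9 periods are positive
realized = np.array([0.02, -0.01, 0.01, 0.03, -0.02, 0.01, 0.02, -0.03, 0.01])

baseline = always_up_accuracy(realized)
best_constant = float(realized.mean())  # the MSE-minimizing constant prediction
print(baseline, best_constant)
print(mse_of_constant(realized, best_constant), mse_of_constant(realized, 0.0))
```

A network that finds no usable signal can therefore lower its loss simply by drifting toward a small positive constant, which shows up as an "always buy" model.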

The discussion above is actually irrelevant to most of the experiments undertaken during the research, as in most cases the neural net overfits, meaning it surpasses the 56% accuracy in direction prediction. But the imbalance proved to be an impediment in our earliest efforts, so we mention it for those who might attempt to reproduce our work.

The Ambitiousness of the Task

The more we pause to think about the goal of the task and the small amount of information we used to make strides toward that goal, the more we realize the ambitiousness of the task.

So far, the results of the experiments above tell us that more sophisticated methods are warranted.

So What Next?

The results above are far from discouraging. In a sense, the methods for asset return prediction we described in this article were bound to fail; our actual implementations only confirmed that belief.

As we discussed above, there are alternative methods to get around the problem of lack of data. We are currently implementing a moving-window prediction model with various engineered features and different machine learning models, and are beginning to see improvements.

Please give us feedback if you notice logical fallacies or errors in implementation! Recommendations on papers, posts, courses, etc. are also welcome.

Authors

Seouk Jun Kim

https://www.linkedin.com/in/seouk-jun-kim-a74921184/

Sunmin Lee

https://www.linkedin.com/in/sun-min-lee-3116aa123/

Sources

- Aldridge, Irene E. and Marco Avellaneda. “Neural Networks in Finance: Design and Performance.” (2019).

- Pedersen, Lasse Heje. “Efficiently Inefficient: How Smart Money Invests and Market Prices Are Determined.” (2015).

- Ho, Danny, Yuxuan Huang, and Luiz F. Capretz. “Neural Network Models for Stock Selection Based on Fundamental Analysis.” (2019).

- QRAFT. “AI Asset Management Report. How can AI innovate asset management?” Qraft Technologies. Medium. (2020).

- Khandani, Amir E. and Andrew W. Lo. “What Happened to the Quants in August 2007?” (November 4, 2007).

- yfinance, https://pypi.org/project/yfinance/

Original article: https://towardsdatascience.com/the-effectiveness-of-feed-forward-neural-networks-in-trend-based-trading-1-4074912cf5cd
