Using Differential Privacy to Harness Big Data and Preserve Privacy

The modern world runs on “big data,” the massive data sets used by governments, firms, and academic researchers to conduct analyses, unearth patterns, and drive decision-making. When it comes to data analysis, bigger can be better: The more high-quality data is incorporated, the more robust the analysis will be. Large-scale data analysis is becoming increasingly powerful thanks to machine learning and has a wide range of benefits, such as informing public-health research, reducing traffic, and identifying systemic discrimination in loan applications.

But there’s a downside to big data, as it requires aggregating vast amounts of potentially sensitive personal information. Whether amassing medical records, scraping social media profiles, or tracking banking and credit card transactions, data scientists risk jeopardizing the privacy of the individuals whose records they collect. And once data is stored on a server, it may be stolen, shared, or compromised. “Improper disclosure of such data can have adverse consequences for a data subject’s private information, or even lead to civil liability or bodily harm,” explains data scientist An Nguyen, in his article, “Understanding Differential Privacy.”

Computer scientists have spent years looking for ways to make data more private. But even when they try to de-identify data, for example by removing individuals’ names or other fields from a data set, others can often “connect the dots.” They piece together information from multiple sources to determine a supposedly anonymous individual’s identity (a so-called re-identification or linkage attack).
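
To make the linkage attack concrete, here is a minimal Python sketch built on two invented tables. One is a “de-identified” health table with names removed. The other is a public roster that still carries names. The field names, the choice of ZIP code, birth date and sex as linking fields, and every record are hypothetical. The only point is that a unique match on shared fields re-attaches a name to a supposedly anonymous row.

```python
# Hypothetical "de-identified" records: names removed, other fields kept.
DEIDENTIFIED_HEALTH = [
    {"zip": "94704", "birth_date": "1985-03-12", "sex": "F", "diagnosis": "asthma"},
    {"zip": "94704", "birth_date": "1990-07-01", "sex": "M", "diagnosis": "diabetes"},
    {"zip": "94110", "birth_date": "1985-03-12", "sex": "F", "diagnosis": "migraine"},
]

# Hypothetical public data set that still includes names.
PUBLIC_ROSTER = [
    {"name": "Alice Example", "zip": "94704", "birth_date": "1985-03-12", "sex": "F"},
    {"name": "Bob Example", "zip": "94110", "birth_date": "1972-11-30", "sex": "M"},
]

# Fields present in both tables (assumed for illustration).
LINK_FIELDS = ("zip", "birth_date", "sex")


def link_records(deidentified, public, fields=LINK_FIELDS):
    """Return (name, diagnosis) pairs for rows that match exactly one named person."""
    # Index the public table by the shared fields.
    index: dict[tuple, list[str]] = {}
    for row in public:
        key = tuple(row[f] for f in fields)
        index.setdefault(key, []).append(row["name"])

    reidentified = []
    for row in deidentified:
        names = index.get(tuple(row[f] for f in fields), [])
        if len(names) == 1:  # a unique match re-identifies the row
            reidentified.append((names[0], row["diagnosis"]))
    return reidentified


if __name__ == "__main__":
    print(link_records(DEIDENTIFIED_HEALTH, PUBLIC_ROSTER))
```

With these invented records, only the first health row matches a single roster entry. The sketch therefore attaches the name “Alice Example” to the diagnosis “asthma,” even though the health table never contained a name.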

Fortunately, in recent years, computer scientists have developed a promising new approach to privacy-preserving data analysis known as “differential privacy” that allows researchers to unearth the patterns within a data set — and derive observations about the population as a whole — while obscuring the information about each individual’s records.

The solution: differential privacy

Differential privacy (also known as “epsilon indistinguishability”) was first developed in 2006 by Cynthia Dwork, Frank McSherry, Kobbi Nissim and Adam Smith. In a 2016 lecture, Dwork defined differential privacy as being achieved when “the outcome of any analysis is essentially equally likely, independent of whether any individual joins, or refrains from joining, the dataset.”
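
For reference, here is the standard formal version of this idea. It is the textbook statement, not a quotation from the lecture. A randomized mechanism $\mathcal{M}$ is ε-differentially private if the following holds for every pair of data sets $D$ and $D'$ that differ in one individual’s record, and for every set of possible outputs $S$:

$$
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon}\,\Pr[\mathcal{M}(D') \in S]
$$

The smaller ε is, the closer $e^{\varepsilon}$ is to 1. The output distribution is then nearly the same whether or not any one person’s record is included.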

How is this possible? Differential privacy works by adding a pre-determined amount of randomness, or “noise,” into a computation performed on a data set. As an example, imagine that five people answer “yes” or “no” to a survey question, but before their responses are accepted, each of them has to flip a coin. If they flip heads, they answer the question honestly. If they flip tails, they have to flip the coin again. If the second toss is tails, they respond “yes,” and if it is heads, they respond “no,” regardless of their actual answer to the question.
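
The coin-flip protocol can be simulated in a few lines. The following Python sketch is an illustration only. The function names, the hypothetical population of 100,000 people and the 30% true “yes” rate are assumptions, and Python’s `random` module stands in for the physical coins.

```python
import random


def randomized_response(true_answer: bool, rng: random.Random) -> bool:
    """Two-coin randomized response from the survey example.

    First coin: heads (probability 1/2) -> report the true answer.
    Tails -> flip again: tails reports "yes" (True), heads reports "no" (False).
    """
    if rng.random() < 0.5:       # first coin came up heads
        return true_answer
    return rng.random() < 0.5    # second coin: True ("yes") on tails


def run_survey(true_answers: list[bool], seed: int = 0) -> list[bool]:
    """Apply randomized response independently to every respondent."""
    rng = random.Random(seed)
    return [randomized_response(answer, rng) for answer in true_answers]


if __name__ == "__main__":
    # Hypothetical population: 30,000 of 100,000 people truly answer "yes".
    truth = [i < 30_000 for i in range(100_000)]
    reported = run_survey(truth, seed=42)
    print(f"true 'yes' rate:     {sum(truth) / len(truth):.3f}")
    print(f"reported 'yes' rate: {sum(reported) / len(reported):.3f}")
```

Half of respondents answer honestly and a quarter are forced to “yes.” The reported rate should therefore land near 0.5 × 0.30 + 0.25 = 0.40 rather than 0.30, and no single reported answer reveals that person’s true answer.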

As a result of this process, we would expect a quarter of respondents (0.5 × 0.5: those who flip tails twice) to answer “yes” regardless of their actual answer. Another quarter (tails, then heads) would answer “no” regardless. With sufficient data, the researcher could factor in these probabilities and still estimate the overall population’s response to the original question. Yet every individual in the data set could plausibly deny that their recorded response was their actual answer.
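
The “factoring in” step is a short calculation. Write $p$ for the true fraction of “yes” answers and $\lambda$ for the expected fraction of recorded “yes” answers. A respondent reports “yes” either honestly (probability ½, and only if their true answer is “yes”) or because of two tails (probability ¼). Solving for $p$ gives an unbiased estimate from the observed fraction $\hat{\lambda}$. Comparing the chance of a recorded “yes” for a true “yes” against a true “no” gives the privacy loss ε (epsilon) of this survey:

$$
\begin{aligned}
\lambda &= \tfrac{1}{2}\,p + \tfrac{1}{4}
  \quad\Longrightarrow\quad \hat{p} = 2\hat{\lambda} - \tfrac{1}{2}, \\
\frac{\Pr[\text{report yes} \mid \text{true yes}]}{\Pr[\text{report yes} \mid \text{true no}]}
  &= \frac{3/4}{1/4} = 3 = e^{\varepsilon}
  \quad\Longrightarrow\quad \varepsilon = \ln 3 \approx 1.1 .
\end{aligned}
$$

The same ratio of 3 holds for a recorded “no.” The two-coin survey is therefore ε-differentially private with ε = ln 3 for each respondent.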

Of course, researchers don’t actually toss coins. Instead, they rely on algorithms that similarly alter some of the responses in the data set according to a pre-determined probability. The more responses the algorithm changes, the more privacy is preserved for the individuals in the data set. The trade-off is that as more “noise” is added to the computation (that is, as a greater percentage of responses are changed), the accuracy of the data analysis goes down.
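
The trade-off can be quantified for a generalized version of the survey. The sketch below assumes that each respondent answers honestly with probability `q` and otherwise reports a fair-coin “yes” or “no.” The two-coin example corresponds to `q = 0.5`. The function names and the hypothetical survey of 10,000 people with a 30% true “yes” rate are illustrative.

```python
import math


def epsilon_for(q: float) -> float:
    """Privacy loss when each respondent answers honestly with probability q
    and otherwise reports a fair-coin "yes"/"no".

    P(report yes | true yes) = q + (1 - q)/2 = (1 + q)/2
    P(report yes | true no)  = (1 - q)/2
    epsilon is the log of the worst-case ratio of these probabilities.
    """
    if not 0 < q < 1:
        raise ValueError("q must be strictly between 0 and 1")
    return math.log((1 + q) / (1 - q))


def estimate_true_rate(reported_yes_rate: float, q: float) -> float:
    """Invert E[reported] = q*p + (1 - q)/2 to get an unbiased estimate of p."""
    return (reported_yes_rate - (1 - q) / 2) / q


def standard_error(p: float, q: float, n: int) -> float:
    """Standard error of the estimate of p from n respondents."""
    lam = q * p + (1 - q) / 2          # expected reported "yes" rate
    return math.sqrt(lam * (1 - lam) / n) / q


if __name__ == "__main__":
    # Hypothetical survey: true "yes" rate 30%, 10,000 respondents.
    for q in (0.9, 0.5, 0.1):
        print(f"q={q:.1f}  epsilon={epsilon_for(q):.2f}  "
              f"std. error={standard_error(0.30, q, 10_000):.4f}")
```

Lowering `q` from 0.9 to 0.1 shrinks ε from about 2.94 to about 0.20. It also inflates the standard error roughly tenfold, from about 0.005 to about 0.050. That is the privacy/accuracy trade-off in numbers.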

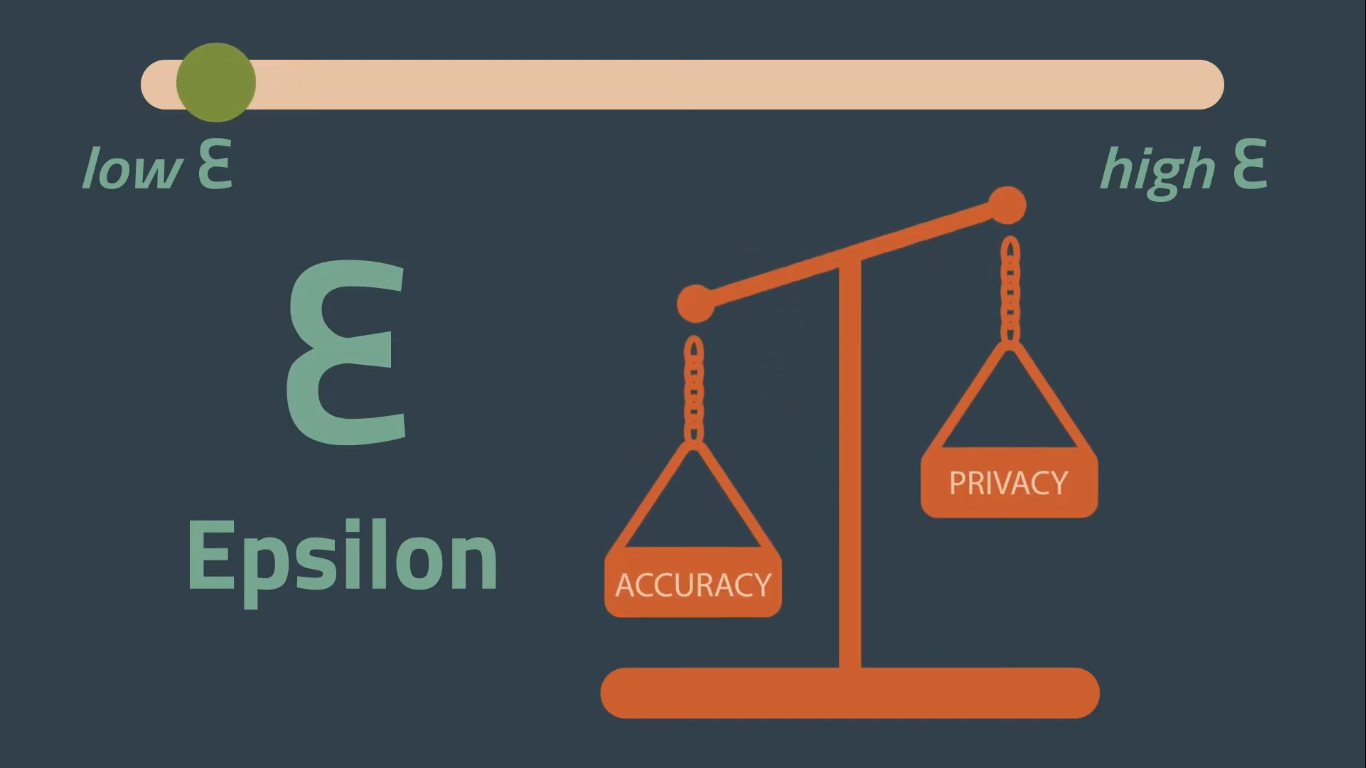

When Dwork and her colleagues first defined differential privacy, they used the Greek symbol ε, or epsilon, to mathematically define the privacy loss associated with the release of data from a data set. This value defines just how much differential privacy is provided by a particular algorithm: The lower the value of epsilon, the more each individual’s privacy is protected. The higher the epsilon, the more accurate the data analysis — but the less privacy is preserved.
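
Randomized response is only one mechanism. For numeric queries, a standard choice in the differential-privacy literature is the Laplace mechanism, in which ε directly sets the noise scale. The Python sketch below assumes a simple counting query, where adding or removing one record changes the answer by at most 1. Noise drawn from a Laplace distribution with scale 1/ε then gives ε-differential privacy. The function names and the example data are hypothetical.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two independent
    exponential draws, each with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records: list[bool], epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query.

    Adding or removing one record changes the count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon yields
    epsilon-differential privacy for this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sum(records) + laplace_noise(1 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical data: 1,000 people, 500 of whom have some attribute.
    has_attribute = [True] * 500 + [False] * 500
    for eps in (0.1, 1.0, 10.0):
        noisy = [round(private_count(has_attribute, eps, rng), 1) for _ in range(3)]
        print(f"epsilon = {eps:>4}: three noisy answers {noisy} (true count 500)")
```

Smaller ε means a wider noise distribution. The average absolute error of Laplace noise equals its scale, 1/ε. It is therefore about 10 at ε = 0.1 and about 0.1 at ε = 10.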

When the data is perturbed (i.e. the “noise” is added) while still on a user’s device, it’s known as local differential privacy. When the noise is added to a computation after the data has been collected, it’s called central differential privacy. With this latter method, the more you query a data set, the more information risks being leaked about the individual records. Therefore, the central model requires constantly searching for new sources of data to maintain high levels of privacy.
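
The observation that more queries leak more information is formalized by the composition property of differential privacy. Under basic sequential composition, answering several queries with privacy losses ε₁, ε₂, … on the same data is at worst (ε₁ + ε₂ + …)-differentially private. A common way to enforce a cap in a central deployment is a privacy budget. The class below is a hypothetical minimal accountant, not the API of any particular library.

```python
class PrivacyBudget:
    """Hypothetical accountant using basic sequential composition:
    queries answered with epsilons e1, e2, ... on the same data cost
    e1 + e2 + ... of privacy loss in total."""

    def __init__(self, total_epsilon: float) -> None:
        if total_epsilon <= 0:
            raise ValueError("total_epsilon must be positive")
        self.total_epsilon = total_epsilon
        self.spent = 0.0

    @property
    def remaining(self) -> float:
        return self.total_epsilon - self.spent

    def charge(self, epsilon: float) -> None:
        """Record one query's cost, refusing it if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if self.spent + epsilon > self.total_epsilon + 1e-12:  # tolerance for float rounding
            raise RuntimeError("privacy budget exhausted; query refused")
        self.spent += epsilon


if __name__ == "__main__":
    budget = PrivacyBudget(total_epsilon=1.0)
    for i in range(5):
        try:
            budget.charge(0.25)
            print(f"query {i + 1} answered, remaining epsilon = {budget.remaining:.2f}")
        except RuntimeError as err:
            print(f"query {i + 1}: {err}")
```

With a total budget of 1.0 and a cost of 0.25 per query, the first four queries are answered and the fifth is refused.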

Either way, a key goal of differential privacy is to ensure that the results of a given query are not substantially affected by the presence (or absence) of any single record. Differential privacy also makes data less attractive to would-be attackers and can help prevent them from connecting personal data across multiple platforms.

Differential privacy in practice

Differential privacy has already gained widespread adoption by governments, firms, and researchers. The U.S. Census, for example, uses it for “disclosure avoidance,” and Apple uses differential privacy to analyze user data ranging from emoji suggestions to Safari crashes. Google has even released an open-source version of a differential privacy library used in many of the company’s core products.

Researchers at UC Berkeley have extended differential privacy to real-world SQL queries using a concept known as “elastic sensitivity,” developed in recent years. The ride-sharing service Uber adopted this approach to study everything from traffic patterns to drivers’ earnings while protecting users’ privacy. It incorporated elastic sensitivity into a system that requires massive amounts of user data to connect riders with drivers. As a result, the company can help protect its users from snooping.

Consider, for example, how elastic sensitivity could protect a high-profile Uber user such as Ivanka Trump. As Andy Greenberg wrote in Wired: “If an Uber business analyst asks how many people are currently hailing cars in midtown Manhattan, perhaps to check whether the supply matches the demand, and Ivanka Trump happens to be requesting an Uber at that moment, the answer wouldn’t reveal much about her in particular. But if a prying analyst starts asking the same question about the block surrounding Trump Tower, for instance, Uber’s elastic sensitivity would add a certain amount of randomness to the result to mask whether Ivanka, specifically, might be leaving the building at that time.”
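
Elastic sensitivity is a technique for bounding how much a SQL query, including one with joins, can change when one user’s data changes. It is not reproduced here. The Python sketch below shows only the simpler case the Manhattan example turns on: a single-table `COUNT(*)` answered with Laplace noise. The `ride_requests` table, its `area` column, the area names and the request counts are all hypothetical. `sqlite3` merely stands in for a production database.

```python
import random
import sqlite3


def build_demo_db(requests_per_area: dict[str, int]) -> sqlite3.Connection:
    """Create an in-memory table of hypothetical ride requests, one row per request."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE ride_requests (area TEXT NOT NULL)")
    rows = [(area,) for area, n in requests_per_area.items() for _ in range(n)]
    conn.executemany("INSERT INTO ride_requests (area) VALUES (?)", rows)
    return conn


def noisy_request_count(conn: sqlite3.Connection, area: str,
                        epsilon: float, rng: random.Random) -> float:
    """COUNT(*) for one area plus Laplace(0, 1/epsilon) noise.

    Assumes each rider contributes at most one row, so the count's
    sensitivity is 1. Queries with joins would need a sensitivity bound
    such as elastic sensitivity, which this sketch does not implement.
    """
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM ride_requests WHERE area = ?", (area,)
    ).fetchone()
    scale = 1 / epsilon
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return count + noise


if __name__ == "__main__":
    db = build_demo_db({"midtown": 5_000, "trump_tower_block": 1})
    rng = random.Random(7)
    for area in ("midtown", "trump_tower_block"):
        print(area, round(noisy_request_count(db, area, epsilon=0.5, rng=rng), 1))
```

The noise scale (here 1/ε = 2) is the same for every query. It barely changes a count of 5,000 but is large relative to a count of 0 or 1, and that is what hides whether one particular rider is in the result.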

Still, for all its benefits, most organizations are not yet using differential privacy. It requires large data sets, it is computationally intensive, and organizations may lack the resources or personnel to deploy it. They also may not want to reveal how much private information they’re using — and potentially leaking.

Another concern is that organizations that use differential privacy may be overstating how much privacy they’re providing. A firm may claim to use differential privacy, but in practice could use such a high epsilon value that the actual privacy provided would be limited.
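
One way to see why a very high ε offers little protection is to translate it back into a coin-flip survey. The hypothetical helper below assumes a survey in which each respondent answers truthfully with probability q and otherwise reports a fair-coin “yes” or “no.” Such a survey has privacy loss ε = ln((1 + q)/(1 − q)), so the largest q allowed for a given ε is tanh(ε/2).

```python
import math


def honesty_probability(epsilon: float) -> float:
    """Largest chance of reporting the true answer that a fair-coin
    randomized-response survey can use while staying epsilon-DP.

    Solves (1 + q) / (1 - q) = e**epsilon for q, i.e. q = tanh(epsilon / 2).
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return math.tanh(epsilon / 2)


if __name__ == "__main__":
    for eps in (0.1, 1.0, math.log(3), 5.0, 10.0):
        print(f"epsilon = {eps:5.2f}: truthful answers {honesty_probability(eps):.2%}")
```

At ε = ln 3 the helper returns about 0.5, the honesty rate of the two-coin example. At ε = 10, more than 99.99% of answers would be truthful. This is why a firm can technically “use differential privacy” with a large ε yet offer each person little deniability.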

To address whether differential privacy is being properly deployed, Dwork, together with UC Berkeley researchers Nitin Kohli and Deirdre Mulligan, has proposed the creation of an “Epsilon Registry” to encourage companies to be more transparent. “Given the importance of these implementation details there is a need for shared learning amongst the differential privacy community,” they wrote in the Journal of Privacy and Confidentiality. “To serve these purposes, we propose the creation of the Epsilon Registry: a publicly available communal body of knowledge about differential privacy implementations that can be used by various stakeholders to drive the identification and adoption of judicious differentially private implementations.”

As a final note, organizations should not rely on differential privacy alone. They should use it as one defense in a broader arsenal, alongside other measures such as encryption and access control. Organizations should also disclose the sources of data they use for their analyses, along with the steps they take to protect that data. Combining such practices with low-epsilon differential privacy will go a long way toward realizing the benefits of “big data” while reducing the leakage of sensitive personal data.

This article was cross-posted by Brookings TechStream. The video was animated by Annalise Kamegawa. The Center for Long-Term Cybersecurity would like to thank Nitin Kohli, PhD student in the UC Berkeley School of Information, and Paul Laskowski, Assistant Adjunct Professor in the UC Berkeley School of Information, for providing their expertise to review this video and article.

Source: https://medium.com/cltc-bulletin/using-differential-privacy-to-harness-big-data-and-preserve-privacy-349d84799862
