A/B Testing

The idea of A/B testing is to present different content to different user groups (variants), gather their reactions and behaviour, and use the results to shape future product or marketing strategies.

A/B testing is a methodology for comparing multiple versions of a feature, page, button, headline, page structure, form, landing page, navigation, pricing, and so on, by showing the different versions to customers or prospective customers and assessing the quality of interaction with some metric (click-through rate, purchases, follow-through on a call to action, etc.).

This is becoming increasingly important in a data-driven world where business decisions need to be backed by facts and numbers.

How to conduct a standard A/B test

- Formulate your hypothesis

- Decide on splitting and evaluation metrics

- Create your control group and test group

- Determine the length of the A/B test

- Conduct the test

- Draw conclusions

1. Formulate your hypothesis

Before conducting an A/B test, state your null hypothesis and alternative hypothesis:

The null hypothesis states that there is no difference between the control and variant groups. The alternative hypothesis states that there is a difference between the control and variant groups.

Imagine a software company that is looking for ways to increase the number of people who pay for its software. As the software is currently set up, users can download and use it free of charge for a 7-day trial. The company wants to change the layout of the homepage, using a red logo instead of a blue logo, to emphasise that a 7-day trial is available.

Here is an example of a hypothesis test:

- Default action: approve the blue logo.

- Alternative action: approve the red logo.

- Null hypothesis: the red logo does not cause at least 10% more license purchases than the blue logo.

- Alternative hypothesis: the red logo does cause at least 10% more license purchases than the blue logo.

It’s important to note that all other variables need to be held constant when performing an A/B test.

2. Deciding on Splitting and Evaluation Metrics

We should consider two things: where and how we should split users into experiment groups when entering the website, and what metrics we will use to track the success or failure of the experimental manipulation. The choice of unit of diversion (the point at which we divide observations into groups) may affect what evaluation metrics we can use.

The control, or ‘A’ group, will see the old homepage, while the experimental, or ‘B’ group, will see the new homepage that emphasises the 7-day trial.

There are three different splitting techniques (units of diversion):

a) Event-based diversion

b) Cookie-based diversion

c) Account-based diversion

An event-based diversion (like a pageview) can provide many observations to draw conclusions from, but if the condition changes on each pageview, then a visitor might get a different experience on each homepage visit. Event-based diversion is much better when the changes aren’t as easily visible to users, to avoid disruption of experience.

In addition, event-based diversion would let us know how many times the download page was accessed from each condition, but can’t go any further in tracking how many actual downloads were generated from each condition.

Account-based diversion can be stable, but it is not suitable in this case. Since visitors only register after reaching the download page, that is too late to introduce the new homepage to people who should be assigned to the experimental condition.

This leaves cookie-based diversion, which looks like the right choice. Cookies also allow us to track each visitor across every page they hit. The downside of cookie-based diversion is that counts can become inconsistent if users enter the site via an incognito window or a different browser, or if their cookies expire or are deleted before they make a download. As a simplification, however, we'll assume that this kind of assignment dilution is small and ignore its potential effects.
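
One common way to implement cookie-based diversion is to hash the cookie ID so that the same visitor always lands in the same group. The sketch below is only an illustration under stated assumptions: the function name `assign_group`, the experiment label, and the 50/50 split are hypothetical and do not come from the original case study.

```python
import hashlib


def assign_group(cookie_id: str, experiment: str = "homepage-logo") -> str:
    """Deterministically map a cookie ID to 'control' or 'experiment'.

    Hypothetical helper: hashing the cookie ID together with an experiment
    label keeps the assignment stable across page views for the same cookie.
    """
    key = f"{experiment}:{cookie_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in the range 0..99
    return "control" if bucket < 50 else "experiment"


# The same cookie always sees the same homepage.
first_visit = assign_group("cookie-12345")
second_visit = assign_group("cookie-12345")

# Across many cookies the split should be close to 50/50.
groups = [assign_group(f"cookie-{i}") for i in range(10_000)]
control_share = groups.count("control") / len(groups)
```

A new cookie (incognito window, different browser, cleared cookies) gets a fresh assignment, which is exactly the dilution effect described above.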

In terms of evaluation metrics, we should prefer the download rate (# downloads / # cookies) and the purchase rate (# licenses / # cookies), both measured relative to the number of cookies.
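
As a minimal sketch of how these two rates could be computed per group, assume each cookie produces one record with a download flag and a purchase flag. The record layout and the sample rows are hypothetical and exist only for illustration.

```python
from collections import Counter

# Hypothetical per-cookie records: (cookie_id, group, downloaded, purchased)
records = [
    ("c1", "control", True, False),
    ("c2", "control", False, False),
    ("c3", "control", True, True),
    ("c4", "control", False, False),
    ("c5", "experiment", True, False),
    ("c6", "experiment", True, True),
    ("c7", "experiment", False, False),
    ("c8", "experiment", True, False),
]


def rates_by_group(rows):
    """Return download rate and purchase rate per group, relative to cookies."""
    cookies, downloads, licenses = Counter(), Counter(), Counter()
    for _cookie_id, group, downloaded, purchased in rows:
        cookies[group] += 1
        downloads[group] += int(downloaded)
        licenses[group] += int(purchased)
    return {
        group: {
            "download_rate": downloads[group] / cookies[group],
            "purchase_rate": licenses[group] / cookies[group],
        }
        for group in cookies
    }


rates = rates_by_group(records)
```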

Product usage statistics like the average time the software was used in the trial period are potentially interesting features, but aren’t directly related to our experiment. Certainly, these statistics might help us dig deeper into the reasons for observed effects after an experiment is complete. But in terms of experiment success, product usage shouldn’t be considered as an evaluation metric.

3. Create your control group and test group

Once you have determined your null and alternative hypotheses, the next step is to create your control and test (variant) groups. There are two important concepts to consider in this step: sampling and sample size.

Sampling: Random sampling is one of the most common sampling techniques. Each member of the population has an equal chance of being chosen. Random sampling is important in hypothesis testing because it eliminates sampling bias, and eliminating bias matters because you want the results of your A/B test to be representative of the entire population rather than of the sample itself.

A problem with A/B tests is that if you haven't defined your target group properly, or you're in the early stages of your product, you may not know a lot about your customers. If you're not sure who they are (try creating some user personas to get started!), you might end up with misleading results. It is important to understand which sampling method suits your use case.

Sample size: It's essential to determine the minimum sample size for your A/B test before conducting it, so that you can eliminate undercoverage bias, that is, bias from sampling too few observations.

4. Length of the A/B test

A calculator like this one can help you determine the length of time you need to get any real significance from your A/B tests.

Historical data show that there are about 3250 unique visitors per day. There are about 520 software downloads per day (a .16 rate) and about 65 licenses purchased each day (a .02 rate). In an ideal case, both the download rate and the license purchase rate should increase with the new homepage; a statistically significant negative change should be a sign not to deploy the homepage change. However, if only one of our metrics shows a statistically significant positive change, we should be happy enough to deploy the new homepage.

Performing both individual tests at a .05 error rate carries the risk of making too many Type I errors, so we apply the Bonferroni correction and run each test at a .025 error rate. For an overall 5% Type I error rate with Bonferroni correction and 80% power, we need 6 days to reliably detect an increase of 50 downloads per day and 21 days to detect an increase of 10 license purchases per day.
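
The day counts above can be approximated with a standard normal-approximation sample-size formula for comparing two proportions. This is a sketch under assumptions, not necessarily the formula the online calculator uses: it assumes a one-sided test at the Bonferroni-corrected level of .025, an even split of traffic between the two groups, and a hypothetical helper named `experiment_size`.

```python
import math
from statistics import NormalDist


def experiment_size(p_null, p_alt, alpha=0.05, power=0.80):
    """Minimum observations per group to detect p_alt against p_null.

    Assumes a one-sided test and a normal approximation to the binomial.
    """
    z_null = NormalDist().inv_cdf(1 - alpha)   # critical value under the null
    z_alt = NormalDist().inv_cdf(1 - power)    # negative for power > 0.5
    sd_null = math.sqrt(2 * p_null * (1 - p_null))
    sd_alt = math.sqrt(p_null * (1 - p_null) + p_alt * (1 - p_alt))
    n = ((z_null * sd_null - z_alt * sd_alt) / (p_alt - p_null)) ** 2
    return math.ceil(n)


DAILY_VISITORS = 3250
ALPHA_PER_TEST = 0.05 / 2  # Bonferroni correction for two metrics


def days_needed(p_null, p_alt):
    """Days of traffic needed when visitors are split evenly into two groups."""
    per_group = experiment_size(p_null, p_alt, alpha=ALPHA_PER_TEST)
    return math.ceil(2 * per_group / DAILY_VISITORS)


download_days = days_needed(520 / 3250, 570 / 3250)  # +50 downloads per day
license_days = days_needed(65 / 3250, 75 / 3250)     # +10 licenses per day
```

Under these assumptions the sketch gives 6 days for the download rate and 21 days for the license purchase rate. A two-sided test at the same level would require more observations, so the choice of one-sided versus two-sided matters here.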

Use the link above for the test-days calculations.

Download rate:

- Estimated existing conversion rate (%): 16%
- Minimum improvement in conversion rate you want to detect (%): 50/520*100 %
- Number of variations/combinations (including control): 2
- Average number of daily visitors: 3250
- Percent visitors included in test: 100%
- Total number of days to run the test: 6 days

License purchase rate:

- Estimated existing conversion rate (%): 2%
- Minimum improvement in conversion rate you want to detect (%): 10/65*100 %
- Number of variations/combinations (including control): 2
- Average number of daily visitors: 3250
- Percent visitors included in test: 100%
- Total number of days to run the test: 21 days

One thing that isn’t accounted for in the base experiment length calculations is that there is going to be a delay between when users download the software and when they actually purchase a license. That is, when we start the experiment, there could be about seven days before a user account associated with a cookie actually comes back to make their purchase. Any purchases observed within the first week might not be attributable to either experimental condition. As a way of accounting for this, we’ll run the experiment for about one week longer to allow those users who come in during the third week a chance to come back and be counted in the license purchases tally.

As for biases, we don't expect users to come back to the homepage regularly. Downloading and license purchasing are actions we expect to occur only once per user, so there's no real 'return rate' to worry about. One possibility, however, is that if more people download the software under the new homepage, the expanded user base is qualitatively different from the people who came to the page under the original homepage. This might cause more homepage hits from people looking for the support pages on the site, causing the number of unique cookies under each condition to differ. If we do see something wrong or out of place in the invariant metric (number of cookies), then this might be an area to explore in further investigations.
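
One way to check the invariant metric is to test whether the number of cookies in each group is consistent with an even split. The sketch below assumes a 50/50 assignment and uses made-up cookie counts; the function name `invariant_check` is hypothetical.

```python
import math
from statistics import NormalDist


def invariant_check(n_control, n_experiment, expected_share=0.5):
    """Two-sided z-test of the control-group share against the expected share."""
    n_total = n_control + n_experiment
    sd = math.sqrt(n_total * expected_share * (1 - expected_share))
    z = (n_control - n_total * expected_share) / sd
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical cookie counts, for illustration only.
z, p_value = invariant_check(10_072, 9_886)
split_looks_balanced = p_value > 0.05
```

A small p-value here would suggest that the assignment itself is skewed, which should be investigated before reading anything into the evaluation metrics.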

5. Conduct the test

Once you conduct your experiment and collect your data, you want to determine if the difference between your control group and variant group is statistically significant. There are a few steps in determining this:

First, set your alpha, the probability of making a Type I error. Typically, alpha is set at 5%, or 0.05.

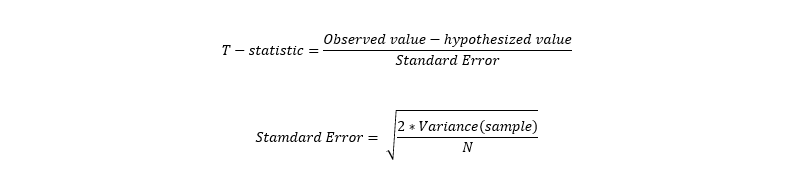

- Second, determine the probability value (p-value) by first calculating the t-statistic using the formula above, or by using a z-score.

- Lastly, compare the p-value to the alpha. If the p-value is greater than the alpha, do not reject the null hypothesis!
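
The steps above can be sketched with a pooled two-proportion z-test. This is an illustrative swap-in that uses the z-score route rather than the t-statistic formula in the image; the conversion counts and the function name `two_proportion_z_test` are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """One-sided test of whether group B converts at a higher rate than A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)  # pooled rate under the null
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 1 - NormalDist().cdf(z)
    return z, p_value


ALPHA = 0.05  # step 1: set alpha

# Step 2: compute the p-value from hypothetical counts
# (160 of 1000 control visitors converted, 200 of 1000 variant visitors).
z, p_value = two_proportion_z_test(160, 1000, 200, 1000)

# Step 3: compare the p-value to alpha.
reject_null = p_value < ALPHA
```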

5.1 Use actual statistics to compare the results

Do not rely on simple one-to-one comparisons of metrics to decide what works and what does not. “Version A yields a 20 percent conversion rate and Version B yields a 22 percent conversion rate, therefore we should switch to Version B!” Please do not do this. Use actual confidence intervals, z-scores, and tests of statistical significance.
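
To see why the 20% versus 22% comparison is not enough on its own, here is a rough sketch of a confidence interval for the difference in conversion rates. The sample sizes are hypothetical, since the example above does not state any, and the interval uses a simple normal approximation.

```python
import math
from statistics import NormalDist


def diff_confidence_interval(p_a, n_a, p_b, n_b, confidence=0.95):
    """Normal-approximation confidence interval for p_b - p_a."""
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    diff = p_b - p_a
    return diff - z_crit * se, diff + z_crit * se


# Same observed rates, two hypothetical sample sizes per version.
small_sample = diff_confidence_interval(0.20, 1_000, 0.22, 1_000)
large_sample = diff_confidence_interval(0.20, 10_000, 0.22, 10_000)

small_sample_includes_zero = small_sample[0] < 0 < small_sample[1]
large_sample_includes_zero = large_sample[0] < 0 < large_sample[1]
```

With 1,000 visitors per version the interval spans zero, so the two-point gap could easily be noise. With 10,000 per version the interval lies entirely above zero. The same headline numbers can therefore support opposite decisions depending on how much data sits behind them.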

5.2 Product Growth

Changing colours and layout may have a marginal impact on your key performance metrics. However, these results tend to be very short-lived. Product growth does not result from changing a button from red to blue; it comes from building a product that people want to use.

Instead of choosing a feature that you think might work, you can use an A/B test to find out what actually works.

5.3 Analyse Data

For the first evaluation metric, download rate, there was an extremely convincing effect. An absolute increase from 0.1612 to 0.1805 results in a z-score of 7.87 (z-score = (0.1805 - 0.1612) / 0.0025, with the standard error shown rounded to 0.0025) and a p-value < .00001, well beyond any standard significance bound. However, the second evaluation metric, license purchasing rate, only shows a small increase from 0.0210 to 0.0213 (following the assumption that only the first 21 days of cookies account for all purchases). This results in a p-value of 0.398 (z = 0.26).
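
The reported p-values can be checked from the z-scores alone. The sketch below assumes one-sided tests (we only care whether the new homepage is better); the helper name `one_sided_p_value` is hypothetical.

```python
from statistics import NormalDist


def one_sided_p_value(z):
    """Probability of a z-score at least this large under the null hypothesis."""
    return 1 - NormalDist().cdf(z)


BONFERRONI_ALPHA = 0.025  # per-test error rate used in this experiment

p_download = one_sided_p_value(7.87)  # download rate
p_license = one_sided_p_value(0.26)   # license purchase rate

download_significant = p_download < BONFERRONI_ALPHA
license_significant = p_license < BONFERRONI_ALPHA
```

Under this assumption, the download rate clears the .025 bound by a wide margin, while the license purchase rate, at roughly 0.40, does not.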

6. Draw Conclusions

Despite the fact that statistical significance wasn’t obtained for the number of licenses purchased, the new homepage appeared to have a strong effect on the number of downloads made. Based on our goals, this seems enough to suggest replacing the old homepage with the new homepage. Establishing whether there was a significant increase in the number of license purchases, either through the rate or the increase in the number of homepage visits, will need to wait for further experiments or data collection.

One inference we might like to make is that the new homepage attracted new users who would not normally try out the program, but that these new users didn’t convert to purchases at the same rate as the existing user base. This is a nice story to tell, but we can’t actually say that with the data as given. In order to make this inference, we would need more detailed information about individual visitors that isn’t available. However, if the software did have the capability of reporting usage statistics, that might be a way of seeing if certain profiles are more likely to purchase a license. This might then open additional ideas for improving revenue.

Translated from: https://towardsdatascience.com/how-to-conduct-a-b-testing-3076074a8458
