learning to link with wikipedia

一、本文目標:

???????? 如何自動識別非結構化文本中提到的主題,并將其鏈接到適當的Wikipedia文章中進行解釋。

?

二、主要借鑒論文:

???? Mihalcea and Csomai----Wikify!: linking documents to encyclopedic knowledge

???????? 第一步:detection(identifying the terms and phrases from which links should be made):

link probabilities:它作為錨的維基百科文章數量,除以提及它的文章數量。

?????? 第二步:disambiguation:從短語和上下文的單詞中提取特征。

??????

???? Medelyan et al.---- Topic Indexing with Wikipedia.

???????? Disambiguation:

Balancing the commonness (or prior probability) of each sense and how the sense relates to its surrounding context.

?

?

?

三、兩大步驟:link disambiguation and link detection

Link disambiguation:

????? Commonness and Relatedness

1.The commonness of a sense is defined by the number of times it is used as a destination in Wikipedia.

?

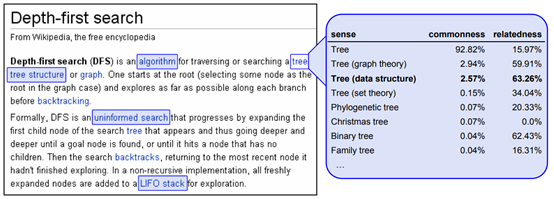

2.Our algorithm identifies these cases by comparing each possible sense with its surrounding context. This is a cyclic problem because these terms may also be ambiguous

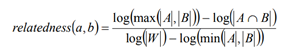

????????

???????? where a and b are the two articles of interest, A and B are the sets of all articles that link to a and b respectively, and W is set of all articles in Wikipedia.

????????

????? Some context terms are better than others

???????? 1.單詞The是明確的,因為它只用于鏈接到文章的語法概念,但是對于消除其他概念的歧義,它沒有任何價值。

?????? link probability 可以解決這個問題。很多文章提到the,但沒有把它作為鏈接使用。

2. 許多上下文術語都是與文檔的中心無關的. 我們可以使用Relatedness的度量方法,通過計算一個術語與所有其他上下文術語的平均語義關聯,來確定該術語與這個中心線程的關系有多密切。

These two variables—link probability and relatedness—are averaged to provide a weight for each context term.

????????

????? Combining the features

圖中,大多關于“樹”是與本文是不相關的,因為該文檔顯然是關于計算機科學的。如果在上下文不明確或混淆的情況下,則應選擇最常用。這在大多數情況下都是正確的。

?????? 引入最后一個feature: context quality

???????? This takes into account the number of terms involved, the extent they relate to each other, and how often they are used as Wikipedia links.

????????

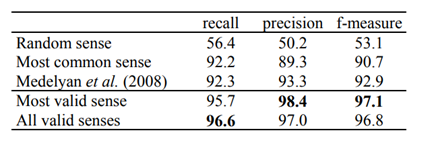

???????? the commonness of each sense,its relatedness to the surrounding context,context quality

這三個feature來訓練一個分類器。

注:這個分類器并不是為每一項選擇最好的詞義,而是獨立考慮每一種候選,并產生它的概率。

????????

?

???????? 訓練階段需要考慮的問題:參數,分類器。

?????????????????? 參數:specifies the minimum probability of senses that are considered by the algorithm.

??????????????????????????? ---- 2%

?????????????????? 分類器:C4.5

?

????????

?

link detection:

link detection首先收集文檔中的所有n-grams,并保留那些概率超過非常低的閾值(這用于丟棄無意義的短語和停止詞)。使用分類器消除所有剩余短語的歧義。

?

?

1.會有幾個鏈接與之相關的情況。就像Democrats and Democratic Party的情況一樣。

? 2.如果分類器發現多個可能的情況,術語可能指向多個候選。例如,民主黨人可以指該黨或任何民主的支持者。

Features of these articles are used to inform the classifier about which topics should and should not be linked:

Link Probability

Mihalcea and Csomai’s link probability to recognize the majority of links

???????? 引入兩個feature: the average and the maximum

???????? the average: expected to be more consistent

???????? the maxinum: be more indicative of links

比如:Democratic Party 比 the party 有更高的鏈接可能性。

Relatedness

此文中,讀者更可能對克林頓、奧巴馬和民主黨感興趣,而不是佛羅里達州或密歇根州。

希望與文檔中心線相關的主題更有可能被鏈接。

引入feature: ?the average relatedness

between each topic and all of the other candidates.

Disambiguation Confidence

使用分類器的結果作為置信度。

引入兩個feature: average and maximum values

Generality

對于讀者來說,為他們不知道的主題提供鏈接要比為那些不需要解釋的主題提供鏈接更有用。

為一個鏈接定義一個generality表示它位于Wikipedia類別樹中的最小深度。

通過從構成Wikipedia組織層次結構根的基本類別開始執行廣度優先搜索來計算。

Location and Spread

? ? ? ? ?三個feature: Frequency ??????? first occurrence??????? last occurrence

???????? 第一次和最后一次出現的距離用于體現文檔討論主題的一致性。????????

?

訓練階段唯一要配置的變量是初始鏈接概率閾值,用于丟棄無意義的短語和停止單詞。

???????? --6.5%

?

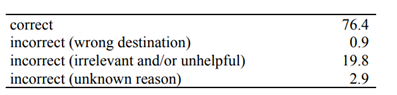

四.WIKIFICATION IN THE WILD

???????? Data: Xinhua News Service, the New York Times, and the Associated Press.

????????

?

????????

?

)

)

)

)

)

)

)