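1. How it works

- Set the minimum number of Pods the HPA adds per scale-up step to the number of availability zones, so that Pods are scaled up in every zone at the same time.

- Set a TopologySpreadConstraints zone-spread rule with maxSkew set to 1, so that Pods are distributed as evenly as possible across availability zones.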
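The interaction between the two settings can be illustrated with a small model. The sketch below is not the Kubernetes scheduler; it assumes a simplified placement rule (each new Pod goes to the zone with the fewest Pods) and hypothetical helper names `skew` and `place_pods`. It only shows why adding Pods in multiples of the zone count keeps the spread even, while adding a single Pod leaves a skew of 1.

```python
def skew(pods_per_zone):
    """Difference between the most loaded and the least loaded zone."""
    counts = list(pods_per_zone.values())
    return max(counts) - min(counts)


def place_pods(pods_per_zone, new_pods):
    """Place each new Pod in the zone that currently has the fewest Pods.

    This is a simplified stand-in for zone spreading, not the real scheduler.
    """
    result = dict(pods_per_zone)
    for _ in range(new_pods):
        # Ties are broken by zone name so the result is deterministic.
        target = min(sorted(result), key=lambda zone: result[zone])
        result[target] += 1
    return result


# Zone names match the node labels used in the experiment.
zones = {"us-east-1a": 1, "us-east-1c": 1}

balanced = place_pods(zones, 2)  # scale up by the number of zones
uneven = place_pods(zones, 1)    # scale up by a single Pod

print(balanced, "skew:", skew(balanced))
print(uneven, "skew:", skew(uneven))
```

With two zones, a step of 2 Pods returns the model to a skew of 0, which is the motivation for tying the HPA step size to the zone count.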

2. Experimental verification

2.1. Prepare a Kind cluster

Prepare the following configuration file and name it kind-cluster.yaml:

    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    nodes:
    - role: control-plane
      image: kindest/node:v1.24.0@sha256:0866296e693efe1fed79d5e6c7af8df71fc73ae45e3679af05342239cdc5bc8e
    - role: worker
      image: kindest/node:v1.24.0@sha256:0866296e693efe1fed79d5e6c7af8df71fc73ae45e3679af05342239cdc5bc8e
      labels:
        topology.kubernetes.io/zone: "us-east-1a"
    - role: worker
      image: kindest/node:v1.24.0@sha256:0866296e693efe1fed79d5e6c7af8df71fc73ae45e3679af05342239cdc5bc8e
      labels:
        topology.kubernetes.io/zone: "us-east-1c"

The configuration above defines a cluster with 2 worker nodes, each labeled with a different availability zone.

Run the following command to create the Kubernetes cluster:

    $ kind create cluster --config kind-cluster.yaml
    Creating cluster "kind" ...
     ✓ Ensuring node image (kindest/node:v1.24.0) 🖼
     ✓ Preparing nodes 📦 📦 📦
     ✓ Writing configuration 📜
     ✓ Starting control-plane 🕹️
     ✓ Installing CNI 🔌
     ✓ Installing StorageClass 💾
     ✓ Joining worker nodes 🚜
    Set kubectl context to "kind-kind"
    You can now use your cluster with:

    kubectl cluster-info --context kind-kind

    Have a question, bug, or feature request? Let us know! https://kind.sigs.k8s.io/#community 🙂

Check that the cluster is running normally:

    $ kubectl get node --show-labels
    NAME                 STATUS   ROLES           AGE    VERSION   LABELS
    kind-control-plane   Ready    control-plane   161m   v1.24.0   beta.kubernetes.io/arch=arm64,beta.kubernetes.io/os=linux,kubernetes.io/arch=arm64,kubernetes.io/hostname=kind-control-plane,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node.kubernetes.io/exclude-from-external-load-balancers=
    kind-worker          Ready    <none>          160m   v1.24.0   beta.kubernetes.io/arch=arm64,beta.kubernetes.io/os=linux,kubernetes.io/arch=arm64,kubernetes.io/hostname=kind-worker,kubernetes.io/os=linux,topology.kubernetes.io/zone=us-east-1a
    kind-worker2         Ready    <none>          160m   v1.24.0   beta.kubernetes.io/arch=arm64,beta.kubernetes.io/os=linux,kubernetes.io/arch=arm64,kubernetes.io/hostname=kind-worker2,kubernetes.io/os=linux,topology.kubernetes.io/zone=us-east-1c

2.2. Install the metrics-server component

The HPA relies on metrics-server to provide monitoring metrics. Install it with the following command:

    $ kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

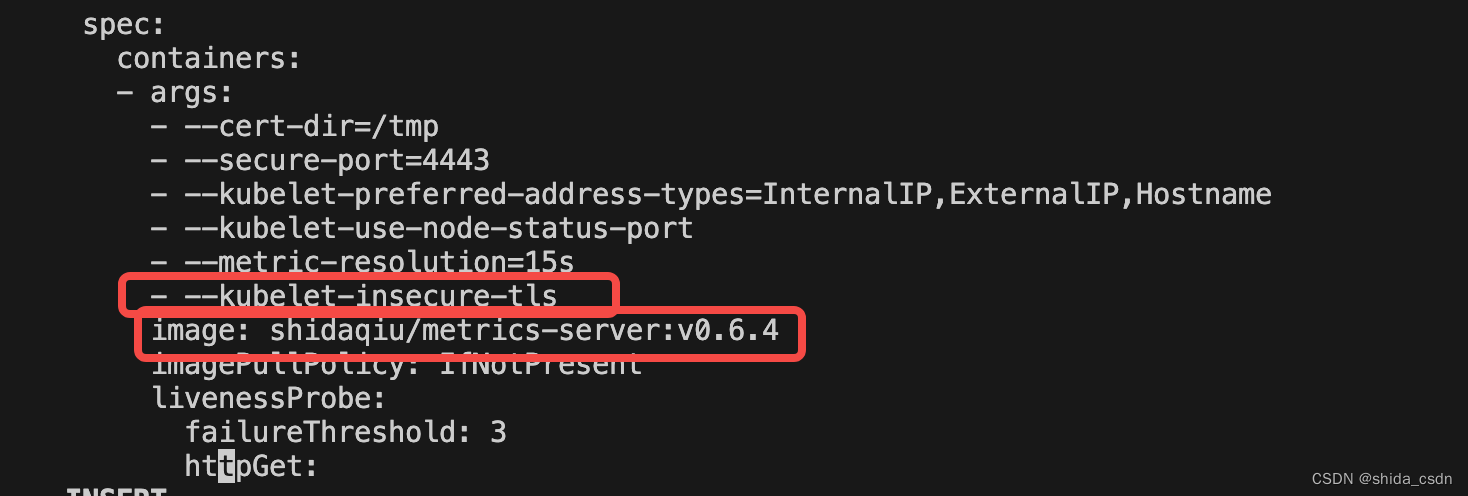

提示: 國內網絡不能直接下載到 registry.k8s.io/metrics-server/metrics-server:v0.6.4 鏡像,可以替換為等同的 shidaqiu/metrics-server:v0.6.4。同時,關閉 tls 安全校驗,如下圖:

Check that the deployed metrics-server is running normally:

    $ kubectl top node
    NAME                 CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
    kind-control-plane   238m         5%     667Mi           8%
    kind-worker          76m          1%     207Mi           2%
    kind-worker2         41m          1%     110Mi           1%

2.3. Deploy the test service

Prepare the following YAML and name it hpa-php-demo.yaml.

Note: the Deployment's topologySpreadConstraints is configured to spread Pods across availability zones!

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: php-web-demo
    spec:
      selector:
        matchLabels:
          run: php-web-demo
      replicas: 1
      template:
        metadata:
          labels:
            run: php-web-demo
        spec:
          topologySpreadConstraints:
          - maxSkew: 1
            # Must match the zone label key set on the worker nodes in kind-cluster.yaml
            topologyKey: topology.kubernetes.io/zone
            whenUnsatisfiable: ScheduleAnyway
            labelSelector:
              matchLabels:
                run: php-web-demo
          containers:
          - name: php-web-demo
            image: shidaqiu/hpademo:latest
            ports:
            - containerPort: 80
            resources:
              limits:
                cpu: 500m
              requests:
                cpu: 200m
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: php-web-demo
      labels:
        run: php-web-demo
    spec:
      ports:
      - port: 80
      selector:
        run: php-web-demo

Deploy the service above:

    $ kubectl apply -f hpa-php-demo.yaml

2.4. Deploy the HPA configuration

Prepare the HPA configuration file and name it hpa-demo.yaml:

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: php-web-demo
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: php-web-demo
      minReplicas: 2
      maxReplicas: 10
      metrics:
      - type: Resource
        resource:
          name: cpu
          target:
            type: Utilization
            averageUtilization: 50
      behavior:
        scaleDown:
          stabilizationWindowSeconds: 300
          policies:
          - type: Percent
            value: 50
            periodSeconds: 15
          - type: Pods
            value: 2
            periodSeconds: 15
        scaleUp:
          stabilizationWindowSeconds: 0
          policies:
          - type: Percent
            value: 100
            periodSeconds: 15
          - type: Pods
            value: 2
            periodSeconds: 15
          selectPolicy: Max

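Deploy the HPA configuration above:

    $ kubectl apply -f hpa-demo.yaml

The HPA above uses scaleUp and scaleDown to define its scale-up and scale-down behavior. Within each 15-second period, a scale-up may double the replica count or add 2 Pods (whichever is larger), and a scale-down may halve the replica count or remove 2 Pods (whichever is larger). The Pods value of 2 matches the number of availability zones in this cluster.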
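The per-period limits implied by these policies can be worked through with a short calculation. This is a simplified sketch, not the HPA controller's code: the function names are hypothetical, the rounding is an assumption of the sketch, and it ignores the stabilization window and any scaling that already happened in the same period. The defaults mirror the values in hpa-demo.yaml.

```python
import math


def scale_up_limit(current, percent=100, pods=2, max_replicas=10):
    """Largest replica count one scale-up step may reach."""
    by_percent = math.ceil(current * (1 + percent / 100))
    by_pods = current + pods
    # selectPolicy: Max picks the policy that allows the larger change.
    return min(max(by_percent, by_pods), max_replicas)


def scale_down_limit(current, percent=50, pods=2, min_replicas=2):
    """Smallest replica count one scale-down step may reach."""
    by_percent = math.floor(current * (1 - percent / 100))
    by_pods = current - pods
    # The larger change is the lower resulting replica count.
    return max(min(by_percent, by_pods), min_replicas)


for replicas in (2, 4, 8):
    print(replicas, "-> up to", scale_up_limit(replicas))
for replicas in (10, 5, 3):
    print(replicas, "-> down to", scale_down_limit(replicas))
```

These are only bounds on a single step; the replica count the HPA actually targets still depends on the observed CPU utilization relative to the 50% target.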

2.5. Verify scale-up

Before scaling up, observe that the two Pods are running in the two zones, one in each:

    $ kubectl get pod -owide
    NAME                           READY   STATUS    RESTARTS   AGE     IP           NODE           NOMINATED NODE   READINESS GATES
    php-web-demo-d6d66c8d5-22tn6   1/1     Running   0          6m57s   10.244.2.3   kind-worker2   <none>           <none>
    php-web-demo-d6d66c8d5-tz8m9   1/1     Running   0          76s     10.244.1.3   kind-worker    <none>           <none>
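When there are more Pods than can be checked by eye, the per-zone count can be derived from the JSON form of the same listing (kubectl get pod -o json). The sketch below is one possible way to do that; the function name `pods_per_zone` is hypothetical, the node-to-zone mapping is copied from the node labels shown in section 2.1, and the sample input is a stand-in reduced to the fields the function reads.

```python
import json
from collections import Counter

# Node-to-zone mapping taken from the node labels of the Kind cluster.
NODE_ZONES = {"kind-worker": "us-east-1a", "kind-worker2": "us-east-1c"}


def pods_per_zone(pod_list_json, node_zones=NODE_ZONES):
    """Count Pods per zone from the text of `kubectl get pod -o json`."""
    pod_list = json.loads(pod_list_json)
    zones = Counter()
    for pod in pod_list.get("items", []):
        node = pod.get("spec", {}).get("nodeName")
        # Pods on unlisted or unscheduled nodes are counted as "unknown".
        zones[node_zones.get(node, "unknown")] += 1
    return dict(zones)


# Stand-in for real kubectl output, built from the two Pods listed above.
sample = json.dumps({"items": [
    {"metadata": {"name": "php-web-demo-d6d66c8d5-22tn6"},
     "spec": {"nodeName": "kind-worker2"}},
    {"metadata": {"name": "php-web-demo-d6d66c8d5-tz8m9"},
     "spec": {"nodeName": "kind-worker"}},
]})

print(pods_per_zone(sample))
```

Against a live cluster, the input would be the captured output of the kubectl command rather than the sample string.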

Apply load to the service:

    $ kubectl run -it --rm load-generator --image=busybox /bin/sh

Once inside the container, run the following script:

    while true; do wget -q -O- http://php-web-demo; done

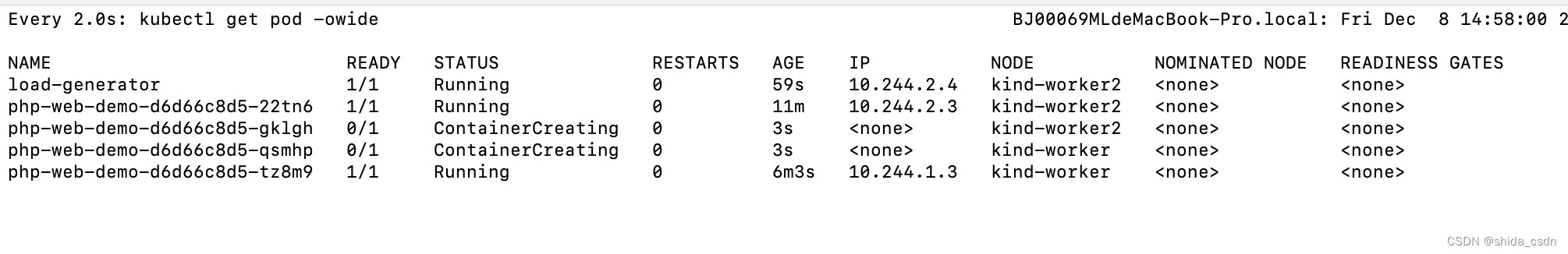

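You can observe that when the Pods scale up, new Pods are created in both availability zones at the same time, achieving synchronized scale-up across zones.

After the load stops, you can observe that the Pods remain spread across the zones as they scale down.

How can we make sure Pods are still evenly spread across multiple availability zones after a scale-down?

One option is to use the rebalancing capability of descheduler; see https://github.com/kubernetes-sigs/descheduler?tab=readme-ov-file#removepodsviolatingtopologyspreadconstraint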
