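Classification accuracy can be improved by building more sophisticated deep learning models. This post implements Chinese text classification with three such models: TextCNN, TextRNN, and TextRCNN.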

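Project repository: zz-zik/NLP-Application-and-Practice on GitHub. The project implements the code from the book 《自然語言處理與應用實戰》 (Natural Language Processing: Applications and Practice) and improves on it. Authors of the book: 韓少云 (Han Shaoyun), 裴廣戰 (Pei Guangzhan), 吳飛 (Wu Fei), et al.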

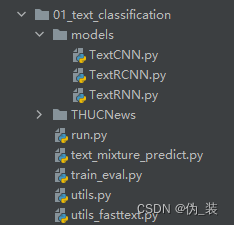

該項目目錄如圖:

Utilities

Writing utils.py

```python
# coding: UTF-8
import os
import time
import pickle as pkl
from datetime import timedelta

import numpy as np
import torch
from tqdm import tqdm

MAX_VOCAB_SIZE = 10000  # maximum vocabulary size
UNK, PAD = '<UNK>', '<PAD>'  # unknown-character token and padding token


def build_vocab(file_path, tokenizer, max_size, min_freq):
    """Count tokens in the first tab-separated column and keep the most frequent ones."""
    vocab_dic = {}
    with open(file_path, 'r', encoding='UTF-8') as f:
        for line in tqdm(f):
            lin = line.strip()
            if not lin:
                continue
            content = lin.split('\t')[0]
            for word in tokenizer(content):
                vocab_dic[word] = vocab_dic.get(word, 0) + 1
    vocab_list = sorted([_ for _ in vocab_dic.items() if _[1] >= min_freq],
                        key=lambda x: x[1], reverse=True)[:max_size]
    vocab_dic = {word_count[0]: idx for idx, word_count in enumerate(vocab_list)}
    # UNK and PAD get the last two ids, so PAD == len(vocab) - 1
    vocab_dic.update({UNK: len(vocab_dic), PAD: len(vocab_dic) + 1})
    return vocab_dic


def build_dataset(config, use_word):
    if use_word:
        tokenizer = lambda x: x.split(' ')  # words separated by spaces, word-level
    else:
        tokenizer = lambda x: [y for y in x]  # char-level
    if os.path.exists(config.vocab_path):
        with open(config.vocab_path, 'rb') as f:
            vocab = pkl.load(f)
    else:
        vocab = build_vocab(config.train_path, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)
        with open(config.vocab_path, 'wb') as f:
            pkl.dump(vocab, f)
    print(f"Vocab size: {len(vocab)}")

    def load_dataset(path, pad_size=32):
        contents = []
        with open(path, 'r', encoding='UTF-8') as f:
            for line in tqdm(f):
                lin = line.strip()
                if not lin:
                    continue
                content, label = lin.split('\t')
                words_line = []
                token = tokenizer(content)
                seq_len = len(token)
                if pad_size:  # pad short texts, truncate long ones
                    if len(token) < pad_size:
                        token.extend([PAD] * (pad_size - len(token)))
                    else:
                        token = token[:pad_size]
                        seq_len = pad_size
                # word to id
                for word in token:
                    words_line.append(vocab.get(word, vocab.get(UNK)))
                contents.append((words_line, int(label), seq_len))
        return contents  # [([...], 0, seq_len), ([...], 1, seq_len), ...]

    train = load_dataset(config.train_path, config.pad_size)
    dev = load_dataset(config.dev_path, config.pad_size)
    test = load_dataset(config.test_path, config.pad_size)
    return vocab, train, dev, test


class DatasetIterater(object):
    def __init__(self, batches, batch_size, device):
        self.batch_size = batch_size
        self.batches = batches
        self.n_batches = len(batches) // batch_size
        # True if the last batch is incomplete (sample count is not a multiple of batch_size)
        self.residue = len(batches) % batch_size != 0
        self.index = 0
        self.device = device

    def _to_tensor(self, datas):
        x = torch.LongTensor([_[0] for _ in datas]).to(self.device)
        y = torch.LongTensor([_[1] for _ in datas]).to(self.device)
        # length before padding (capped at pad_size)
        seq_len = torch.LongTensor([_[2] for _ in datas]).to(self.device)
        return (x, seq_len), y

    def __next__(self):
        if self.residue and self.index == self.n_batches:
            # last, incomplete batch
            batches = self.batches[self.index * self.batch_size: len(self.batches)]
            self.index += 1
            return self._to_tensor(batches)
        elif self.index >= self.n_batches:
            self.index = 0
            raise StopIteration
        else:
            batches = self.batches[self.index * self.batch_size: (self.index + 1) * self.batch_size]
            self.index += 1
            return self._to_tensor(batches)

    def __iter__(self):
        return self

    def __len__(self):
        return self.n_batches + 1 if self.residue else self.n_batches


def build_iterator(dataset, config, predict=False):
    if predict:
        config.batch_size = 1  # predict one text at a time
    return DatasetIterater(dataset, config.batch_size, config.device)


def get_time_dif(start_time):
    """Return the time elapsed since start_time"""
    end_time = time.time()
    time_dif = end_time - start_time
    return timedelta(seconds=int(round(time_dif)))


if __name__ == "__main__":
    '''Extract the pretrained vectors for the characters in the vocabulary'''
    # Change the directories and file names below as needed.
    train_dir = "./THUCNews/data/train.txt"
    vocab_dir = "./THUCNews/data/vocab.pkl"
    pretrain_dir = "./THUCNews/data/sgns.sogou.char"
    emb_dim = 300
    filename_trimmed_dir = "./THUCNews/data/embedding_SougouNews"
    if os.path.exists(vocab_dir):
        with open(vocab_dir, 'rb') as f:
            word_to_id = pkl.load(f)
    else:
        # tokenizer = lambda x: x.split(' ')  # word-level vocab (words in the data are space-separated)
        tokenizer = lambda x: [y for y in x]  # char-level vocab
        word_to_id = build_vocab(train_dir, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)
        with open(vocab_dir, 'wb') as f:
            pkl.dump(word_to_id, f)

    # Characters missing from the pretrained file keep a random vector
    embeddings = np.random.rand(len(word_to_id), emb_dim)
    with open(pretrain_dir, "r", encoding='UTF-8') as f:
        for i, line in enumerate(f):
            # if i == 0:  # skip the first line if it is a header
            #     continue
            lin = line.strip().split(" ")
            if lin[0] in word_to_id:
                idx = word_to_id[lin[0]]
                emb = [float(x) for x in lin[1:emb_dim + 1]]
                embeddings[idx] = np.asarray(emb, dtype='float32')
    np.savez_compressed(filename_trimmed_dir, embeddings=embeddings)
```
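The two core steps of build_dataset, building a frequency-sorted character vocabulary and turning each text into a fixed-length list of ids, can be tried without any data files. The following is a minimal standalone sketch that mirrors the logic of build_vocab and load_dataset above; the helper names build_char_vocab and encode, the toy sentences, and the small max_size and pad_size values are made up for illustration.

```python
UNK, PAD = '<UNK>', '<PAD>'


def build_char_vocab(texts, max_size, min_freq=1):
    """Count characters, keep the most frequent ones, then append UNK and PAD."""
    counts = {}
    for text in texts:
        for ch in text:
            counts[ch] = counts.get(ch, 0) + 1
    kept = sorted((item for item in counts.items() if item[1] >= min_freq),
                  key=lambda item: item[1], reverse=True)[:max_size]
    vocab = {ch: idx for idx, (ch, _) in enumerate(kept)}
    vocab.update({UNK: len(vocab), PAD: len(vocab) + 1})
    return vocab


def encode(text, vocab, pad_size):
    """Pad short texts with PAD, truncate long ones, and map characters to ids."""
    tokens = list(text)
    seq_len = len(tokens)
    if seq_len < pad_size:
        tokens.extend([PAD] * (pad_size - seq_len))
    else:
        tokens = tokens[:pad_size]
        seq_len = pad_size
    ids = [vocab.get(tok, vocab[UNK]) for tok in tokens]
    return ids, seq_len


corpus = ['股票上漲', '股票下跌', '球隊上場']  # toy training texts
vocab = build_char_vocab(corpus, max_size=10)
print(encode('股票大漲了', vocab, pad_size=4))  # ([0, 1, 9, 3], 4): truncated, '大' becomes UNK
print(encode('球隊', vocab, pad_size=4))        # ([6, 7, 10, 10], 2): padded with PAD
```

Because UNK and PAD are appended last, PAD always receives the largest id, which is why the models can use padding_idx=config.n_vocab - 1.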

FastText utilities

Writing utils_fasttext.py

```python
# coding: UTF-8
import os
import time
import pickle as pkl
from datetime import timedelta

import numpy as np
import torch
from tqdm import tqdm

MAX_VOCAB_SIZE = 10000  # maximum vocabulary size
UNK, PAD = '<UNK>', '<PAD>'  # unknown-character token and padding token


def build_vocab(file_path, tokenizer, max_size, min_freq):
    """Count tokens in the first tab-separated column and keep the most frequent ones."""
    vocab_dic = {}
    with open(file_path, 'r', encoding='UTF-8') as f:
        for line in tqdm(f):
            lin = line.strip()
            if not lin:
                continue
            content = lin.split('\t')[0]
            for word in tokenizer(content):
                vocab_dic[word] = vocab_dic.get(word, 0) + 1
    vocab_list = sorted([_ for _ in vocab_dic.items() if _[1] >= min_freq],
                        key=lambda x: x[1], reverse=True)[:max_size]
    vocab_dic = {word_count[0]: idx for idx, word_count in enumerate(vocab_list)}
    vocab_dic.update({UNK: len(vocab_dic), PAD: len(vocab_dic) + 1})
    return vocab_dic


def build_dataset(config, use_word):
    if use_word:
        tokenizer = lambda x: x.split(' ')  # words separated by spaces, word-level
    else:
        tokenizer = lambda x: [y for y in x]  # char-level
    if os.path.exists(config.vocab_path):
        with open(config.vocab_path, 'rb') as f:
            vocab = pkl.load(f)
    else:
        vocab = build_vocab(config.train_path, tokenizer=tokenizer, max_size=MAX_VOCAB_SIZE, min_freq=1)
        with open(config.vocab_path, 'wb') as f:
            pkl.dump(vocab, f)
    print(f"Vocab size: {len(vocab)}")

    # Hashed n-gram ids. As written, each hash uses only the id(s) before position t.
    def biGramHash(sequence, t, buckets):
        t1 = sequence[t - 1] if t - 1 >= 0 else 0
        return (t1 * 14918087) % buckets

    def triGramHash(sequence, t, buckets):
        t1 = sequence[t - 1] if t - 1 >= 0 else 0
        t2 = sequence[t - 2] if t - 2 >= 0 else 0
        return (t2 * 14918087 * 18408749 + t1 * 14918087) % buckets

    def load_dataset(path, pad_size=32):
        contents = []
        with open(path, 'r', encoding='UTF-8') as f:
            for line in tqdm(f):
                lin = line.strip()
                if not lin:
                    continue
                content, label = lin.split('\t')
                words_line = []
                token = tokenizer(content)
                seq_len = len(token)
                if pad_size:  # pad short texts, truncate long ones
                    if len(token) < pad_size:
                        token.extend([PAD] * (pad_size - len(token)))
                    else:
                        token = token[:pad_size]
                        seq_len = pad_size
                # word to id
                for word in token:
                    words_line.append(vocab.get(word, vocab.get(UNK)))
                # fastText n-gram features, hashed into n_gram_vocab buckets
                buckets = config.n_gram_vocab
                bigram = []
                trigram = []
                for i in range(len(words_line)):
                    bigram.append(biGramHash(words_line, i, buckets))
                    trigram.append(triGramHash(words_line, i, buckets))
                contents.append((words_line, int(label), seq_len, bigram, trigram))
        return contents  # [([...], 0, seq_len, bigram, trigram), ...]

    train = load_dataset(config.train_path, config.pad_size)
    dev = load_dataset(config.dev_path, config.pad_size)
    test = load_dataset(config.test_path, config.pad_size)
    return vocab, train, dev, test


class DatasetIterater(object):
    def __init__(self, batches, batch_size, device):
        self.batch_size = batch_size
        self.batches = batches
        self.n_batches = len(batches) // batch_size
        # True if the last batch is incomplete (sample count is not a multiple of batch_size)
        self.residue = len(batches) % batch_size != 0
        self.index = 0
        self.device = device

    def _to_tensor(self, datas):
        # xx = [xxx[2] for xxx in datas]
        # indexx = np.argsort(xx)[::-1]
        # datas = np.array(datas)[indexx]
        x = torch.LongTensor([_[0] for _ in datas]).to(self.device)
        y = torch.LongTensor([_[1] for _ in datas]).to(self.device)
        bigram = torch.LongTensor([_[3] for _ in datas]).to(self.device)
        trigram = torch.LongTensor([_[4] for _ in datas]).to(self.device)
        # length before padding (capped at pad_size)
        seq_len = torch.LongTensor([_[2] for _ in datas]).to(self.device)
        return (x, seq_len, bigram, trigram), y

    def __next__(self):
        if self.residue and self.index == self.n_batches:
            # last, incomplete batch
            batches = self.batches[self.index * self.batch_size: len(self.batches)]
            self.index += 1
            return self._to_tensor(batches)
        elif self.index >= self.n_batches:
            self.index = 0
            raise StopIteration
        else:
            batches = self.batches[self.index * self.batch_size: (self.index + 1) * self.batch_size]
            self.index += 1
            return self._to_tensor(batches)

    def __iter__(self):
        return self

    def __len__(self):
        return self.n_batches + 1 if self.residue else self.n_batches


def build_iterator(dataset, config, predict=False):
    if predict:
        config.batch_size = 1  # predict one text at a time
    return DatasetIterater(dataset, config.batch_size, config.device)


def get_time_dif(start_time):
    """Return the time elapsed since start_time"""
    end_time = time.time()
    time_dif = end_time - start_time
    return timedelta(seconds=int(round(time_dif)))


if __name__ == "__main__":
    '''Extract the pretrained vectors for the characters in the vocabulary'''
    vocab_dir = "./THUCNews/data/vocab.pkl"
    pretrain_dir = "./THUCNews/data/sgns.sogou.char"
    emb_dim = 300
    filename_trimmed_dir = "./THUCNews/data/vocab.embedding.sougou"
    with open(vocab_dir, 'rb') as f:
        word_to_id = pkl.load(f)
    # Characters missing from the pretrained file keep a random vector
    embeddings = np.random.rand(len(word_to_id), emb_dim)
    with open(pretrain_dir, "r", encoding='UTF-8') as f:
        for i, line in enumerate(f):
            # if i == 0:  # skip the first line if it is a header
            #     continue
            lin = line.strip().split(" ")
            if lin[0] in word_to_id:
                idx = word_to_id[lin[0]]
                emb = [float(x) for x in lin[1:emb_dim + 1]]
                embeddings[idx] = np.asarray(emb, dtype='float32')
    np.savez_compressed(filename_trimmed_dir, embeddings=embeddings)
```

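The FastText variant adds hashed bigram and trigram ids on top of the character ids, so that the n-gram features fit into a fixed number of embedding rows (config.n_gram_vocab). The sketch below copies the two hash functions from utils_fasttext.py and applies them to a made-up id sequence; the bucket count 250499 is an assumed value for illustration. As written, each hash mixes only the ids before position t and not the id at t itself, so the first position always maps to bucket 0.

```python
def biGramHash(sequence, t, buckets):
    # Same arithmetic as in utils_fasttext.py: only the id at t - 1 is hashed (0 before the start)
    t1 = sequence[t - 1] if t - 1 >= 0 else 0
    return (t1 * 14918087) % buckets


def triGramHash(sequence, t, buckets):
    # Same arithmetic as in utils_fasttext.py: the ids at t - 1 and t - 2 are combined
    t1 = sequence[t - 1] if t - 1 >= 0 else 0
    t2 = sequence[t - 2] if t - 2 >= 0 else 0
    return (t2 * 14918087 * 18408749 + t1 * 14918087) % buckets


BUCKETS = 250499      # assumed n_gram_vocab value, for illustration only
ids = [5, 17, 42, 7]  # toy character ids after padding

bigram = [biGramHash(ids, i, BUCKETS) for i in range(len(ids))]
trigram = [triGramHash(ids, i, BUCKETS) for i in range(len(ids))]
print(bigram)
print(trigram)
```

At position 1 there is no t - 2 token, so the trigram id equals the bigram id there.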
Training
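The early-stopping rule that train() in train_eval.py applies can be isolated from the model: every 100 batches the dev loss is evaluated, the checkpoint is saved whenever it improves, and training stops once more than require_improvement batches have passed since the last improvement. The sketch below replays only that bookkeeping over a made-up list of dev losses (one per evaluation); the function name, the loss values, and the small require_improvement of 300 are hypothetical.

```python
def replay_early_stopping(dev_losses, eval_every=100, require_improvement=300):
    """Return (best_loss, stop_batch) using the same counters as train().

    dev_losses[k] is a made-up dev loss observed at batch k * eval_every.
    stop_batch is None if the list runs out before early stopping triggers.
    """
    best_loss = float('inf')
    last_improve = 0
    total_batch = 0
    while True:
        if total_batch % eval_every == 0:
            k = total_batch // eval_every
            if k >= len(dev_losses):
                return best_loss, None
            if dev_losses[k] < best_loss:
                best_loss = dev_losses[k]
                last_improve = total_batch  # train() saves a checkpoint here
        total_batch += 1
        if total_batch - last_improve > require_improvement:
            return best_loss, total_batch


print(replay_early_stopping([0.9, 0.6, 0.5, 0.55, 0.52, 0.51, 0.6]))  # (0.5, 501)
print(replay_early_stopping([0.9, 0.8, 0.7]))                        # (0.7, None)
```

In the first run the best dev loss (0.5) is reached at batch 200, and training stops at batch 501, the first point where more than 300 batches have passed without improvement.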

Writing train_eval.py

```python
# coding: UTF-8
import time

import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F
from sklearn import metrics
from torch.utils.tensorboard import SummaryWriter

from utils import get_time_dif


# Weight initialization, xavier by default
def init_network(model, method='xavier', exclude='embedding', seed=123):
    # Note: `seed` is accepted but not used here
    for name, w in model.named_parameters():
        if exclude not in name:
            if 'weight' in name:
                if method == 'xavier':
                    nn.init.xavier_normal_(w)
                elif method == 'kaiming':
                    nn.init.kaiming_normal_(w)
                else:
                    nn.init.normal_(w)
            elif 'bias' in name:
                nn.init.constant_(w, 0)
            else:
                pass


def train(config, model, train_iter, dev_iter, test_iter):
    start_time = time.time()
    model.train()
    optimizer = torch.optim.Adam(model.parameters(), lr=config.learning_rate)

    # Exponential learning-rate decay; after each epoch: lr = gamma * lr
    # scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.9)
    total_batch = 0  # number of batches processed so far
    dev_best_loss = float('inf')
    last_improve = 0  # batch index of the last dev-loss improvement
    flag = False  # set when there has been no improvement for a long time
    writer = SummaryWriter(log_dir=config.log_path + '/' + time.strftime('%m-%d_%H.%M', time.localtime()))
    for epoch in range(config.num_epochs):
        print('Epoch [{}/{}]'.format(epoch + 1, config.num_epochs))
        # scheduler.step()  # learning-rate decay
        for i, (trains, labels) in enumerate(train_iter):
            outputs = model(trains)
            model.zero_grad()
            loss = F.cross_entropy(outputs, labels)
            loss.backward()
            optimizer.step()
            if total_batch % 100 == 0:
                # Every 100 batches, report accuracy on the current training batch and on the dev set
                true = labels.data.cpu()
                predic = torch.max(outputs.data, 1)[1].cpu()
                train_acc = metrics.accuracy_score(true, predic)
                dev_acc, dev_loss = evaluate(config, model, dev_iter)
                if dev_loss < dev_best_loss:
                    dev_best_loss = dev_loss
                    torch.save(model.state_dict(), config.save_path)
                    improve = '*'
                    last_improve = total_batch
                else:
                    improve = ''
                time_dif = get_time_dif(start_time)
                msg = 'Iter: {0:>6}, Train Loss: {1:>5.2}, Train Acc: {2:>6.2%}, Val Loss: {3:>5.2}, ' \
                      'Val Acc: {4:>6.2%}, Time: {5} {6}'
                print(msg.format(total_batch, loss.item(), train_acc, dev_loss, dev_acc, time_dif, improve))
                writer.add_scalar("loss/train", loss.item(), total_batch)
                writer.add_scalar("loss/dev", dev_loss, total_batch)
                writer.add_scalar("acc/train", train_acc, total_batch)
                writer.add_scalar("acc/dev", dev_acc, total_batch)
                model.train()
            total_batch += 1
            if total_batch - last_improve > config.require_improvement:
                # Dev loss has not decreased for more than require_improvement batches: stop training
                print("No optimization for a long time, auto-stopping...")
                flag = True
                break
        if flag:
            break
    writer.close()
    test(config, model, test_iter)


def test(config, model, test_iter):
    model.load_state_dict(torch.load(config.save_path))  # best checkpoint by dev loss
    model.eval()
    start_time = time.time()
    test_acc, test_loss, test_report, test_confusion = evaluate(config, model, test_iter, test=True)
    msg = 'Test Loss: {0:>5.2}, Test Acc: {1:>6.2%}'
    print(msg.format(test_loss, test_acc))
    print("Precision, Recall and F1-Score...")
    print(test_report)
    print("Confusion Matrix...")
    print(test_confusion)
    time_dif = get_time_dif(start_time)
    print("Time usage:", time_dif)


def evaluate(config, model, data_iter, test=False):
    model.eval()
    loss_total = 0.0
    predict_all = np.array([], dtype=int)
    labels_all = np.array([], dtype=int)
    with torch.no_grad():
        for texts, labels in data_iter:
            outputs = model(texts)
            loss = F.cross_entropy(outputs, labels)
            loss_total += loss.item()  # accumulate a Python float, not a tensor
            labels = labels.data.cpu().numpy()
            predic = torch.max(outputs.data, 1)[1].cpu().numpy()
            labels_all = np.append(labels_all, labels)
            predict_all = np.append(predict_all, predic)
    acc = metrics.accuracy_score(labels_all, predict_all)
    if test:
        report = metrics.classification_report(labels_all, predict_all, target_names=config.class_list, digits=4)
        confusion = metrics.confusion_matrix(labels_all, predict_all)
        return acc, loss_total / len(data_iter), report, confusion
    return acc, loss_total / len(data_iter)
```

Testing on new data

Writing text_mixture_predict.py

```python
# coding: utf-8
import argparse
import pickle as pkl
from importlib import import_module

import torch

from utils import build_iterator

parser = argparse.ArgumentParser(description='Chinese Text Classification')
parser.add_argument('--model', type=str, required=True, help='choose a model: TextCNN, TextRNN, TextRCNN')
parser.add_argument('--embedding', default='pre_trained', type=str, help='random or pre_trained')
# store_true instead of type=bool: with type=bool, "--word False" would still be parsed as True
parser.add_argument('--word', action='store_true', help='word-level if given, char-level otherwise')
args = parser.parse_args()

MAX_VOCAB_SIZE = 10000  # maximum vocabulary size
tokenizer = lambda x: [y for y in x]  # char-level
UNK, PAD = '<UNK>', '<PAD>'  # unknown-character token and padding token


def load_dataset(content, vocab, pad_size=32):
    contents = []
    for line in content:
        lin = line.strip()
        if not lin:
            continue
        words_line = []
        token = tokenizer(lin)
        seq_len = len(token)
        if pad_size:  # pad short texts, truncate long ones
            if len(token) < pad_size:
                token.extend([PAD] * (pad_size - len(token)))
            else:
                token = token[:pad_size]
                seq_len = pad_size
        # word to id
        for word in token:
            words_line.append(vocab.get(word, vocab.get(UNK)))
        contents.append((words_line, 0, seq_len))  # dummy label 0; only the prediction is used
    return contents  # [([...], 0, seq_len), ...]


def match_label(pred, config):
    label_list = config.class_list
    return label_list[pred]


def final_predict(config, model, data_iter):
    # Load the checkpoint onto the CPU first, so a GPU-trained model also loads without CUDA
    map_location = lambda storage, loc: storage
    model.load_state_dict(torch.load(config.save_path, map_location=map_location))
    model.eval()
    predict_all = []
    with torch.no_grad():
        for texts, _ in data_iter:
            outputs = model(texts)
            pred = torch.max(outputs.data, 1)[1].cpu().numpy()
            predict_all.extend(match_label(i, config) for i in pred)
    return predict_all


def main(text):
    dataset = 'THUCNews'  # dataset directory
    # Sogou News: embedding_SougouNews.npz, Tencent: embedding_Tencent.npz, random initialization: random
    embedding = 'embedding_SougouNews.npz'
    if args.embedding == 'random':
        embedding = 'random'
    model_name = args.model  # e.g. 'TextRCNN'; TextCNN, TextRNN, FastText, TextRCNN, TextRNN_Att, DPCNN, Transformer
    x = import_module('models.' + model_name)
    config = x.Config(dataset, embedding)
    with open(config.vocab_path, 'rb') as f:
        vocab = pkl.load(f)
    # Pad/truncate to the same length the model was trained with
    content = load_dataset(text, vocab, config.pad_size)
    predict_iter = build_iterator(content, config, predict=True)
    config.n_vocab = len(vocab)
    model = x.Model(config).to(config.device)
    result = final_predict(config, model, predict_iter)
    for i, j in enumerate(result):
        print('text:{}'.format(text[i]), '\t', 'label:{}'.format(j))


if __name__ == '__main__':
    # Sample news texts (kept in Chinese, since the model classifies Chinese text)
    test = [
        '國考28日網上查報名序號查詢后務必牢記報名參加2011年國家公務員的考生,如果您已通過資格審查,那么請于10月28日8:00后,登錄考錄專題網站查詢自己的“關鍵數字”——報名序號。'
        '國家公務員局等部門提醒:報名序號是報考人員報名確認和下載打印準考證等事項的重要依據和關鍵字,請務必牢記。此外,由于年齡在35周歲以上、40周歲以下的應屆畢業碩士研究生和'
        '博士研究生(非在職),不通過網絡進行報名,所以,這類人報名須直接與要報考的招錄機關聯系,通過電話傳真或發送電子郵件等方式報名。',
        '高品質低價格東芝L315雙核本3999元作者:徐彬【北京行情】2月20日東芝SatelliteL300(參數圖片文章評論)采用14.1英寸WXGA寬屏幕設計,配備了IntelPentiumDual-CoreT2390'
        '雙核處理器(1.86GHz主頻/1MB二級緩存/533MHz前端總線)、IntelGM965芯片組、1GBDDR2內存、120GB硬盤、DVD刻錄光驅和IntelGMAX3100集成顯卡。目前,它的經銷商報價為3999元。',
        '國安少帥曾兩度出山救危局他已托起京師一代才俊新浪體育訊隨著聯賽中的連續不勝,衛冕冠軍北京國安的隊員心里到了崩潰的邊緣,俱樂部董事會連夜開會做出了更換主教練洪元碩的決定。'
        '而接替洪元碩的,正是上賽季在李章洙下課風波中同樣下課的國安俱樂部副總魏克興。生于1963年的魏克興球員時代并沒有特別輝煌的履歷,但也絕對稱得上特別:15歲在北京青年隊獲青年'
        '聯賽最佳射手,22歲進入國家隊,著名的5-19一戰中,他是國家隊的替補隊員。',
        '湯盈盈撞人心情未平復眼泛淚光拒談悔意(附圖)新浪娛樂訊湯盈盈日前醉駕撞車傷人被捕,原本要彩排《歡樂滿東華2008》的她因而缺席,直至昨日(12月2日),盈盈繼續要與王君馨、馬'
        '賽、胡定欣等彩排,大批記者在電視城守候,她足足遲了約1小時才到場。全身黑衣打扮的盈盈,神情落寞、木無表情,回答記者問題時更眼泛淚光。盈盈因為遲到,向記者說聲“不好意思”后'
        '便急步入場,其助手坦言盈盈沒什么可以講。后來在《歡樂滿東華2008》監制何小慧陪同下,盈盈接受簡短訪問,她小聲地說:“多謝大家關心,交給警方處理了,不方便講,',
        '甲醇期貨今日掛牌上市繼上半年焦炭、鉛期貨上市后,醞釀已久的甲醇期貨將在今日正式掛牌交易。基準價均為3050元/噸繼上半年焦炭、鉛期貨上市后,醞釀已久的甲醇期貨將在今日正式'
        '掛牌交易。鄭州商品交易所(鄭商所)昨日公布首批甲醇期貨8合約的上市掛牌基準價,均為3050元/噸。據此推算,買賣一手甲醇合約至少需要12200元。業內人士認為,作為國際市場上的'
        '首個甲醇期貨品種,其今日掛牌后可能會因炒新資金追捧而出現沖高走勢,脈沖式行情過后可能有所回落,不過,投資者在上市初期應關注期現價差異常帶來的無風險套利交易機會。',
    ]
    main(test)
```
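A caveat when declaring a boolean command-line option such as --word with type=bool: argparse passes the raw command-line string to bool(), and any non-empty string, including 'False', is truthy. A quick standalone check with the standard library (the two parsers here are throwaway examples, not the script's own parser) shows the difference from action='store_true':

```python
import argparse

# type=bool: argparse calls bool("False"), which is True for any non-empty string
typed = argparse.ArgumentParser()
typed.add_argument('--word', default=False, type=bool)
print(typed.parse_args(['--word', 'False']).word)  # True

# action='store_true': the value is False unless the flag appears on the command line
flag = argparse.ArgumentParser()
flag.add_argument('--word', action='store_true')
print(flag.parse_args([]).word)          # False
print(flag.parse_args(['--word']).word)  # True
```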

TextCNN
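TextCNN slides filters of several heights (filter_sizes = (2, 3, 4)) over the sequence of character embeddings. Each filter spans the full embedding width, so it produces one value per window position, and max-pooling over time keeps the strongest response. The pure-Python sketch below reproduces that computation for a single filter per height on a tiny hand-made input; the embeddings and weights are arbitrary numbers, whereas the real model learns num_filters = 256 filters per height over a 32 x 300 input.

```python
def relu(v):
    return v if v > 0 else 0.0


def conv_max_over_time(embeddings, weights, bias=0.0):
    """One filter of height k = len(weights) spanning the whole embedding width.

    Returns the largest ReLU activation over all valid window positions,
    i.e. the value that max_pool1d(x, x.size(2)) keeps for this filter.
    """
    k = len(weights)
    activations = []
    for start in range(len(embeddings) - k + 1):  # seq_len - k + 1 positions
        total = bias
        for row, w_row in zip(embeddings[start:start + k], weights):
            total += sum(x * w for x, w in zip(row, w_row))
        activations.append(relu(total))
    return max(activations)


# Toy input: 5 tokens with embedding width 2
emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -1.0], [2.0, 0.0]]

# One hand-made filter for each height in filter_sizes = (2, 3, 4)
filters = {
    2: [[1.0, 0.0], [0.0, 1.0]],
    3: [[1.0, 1.0], [0.0, 0.0], [-1.0, 0.0]],
    4: [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5], [0.5, 0.5]],
}

features = [conv_max_over_time(emb, filters[k]) for k in (2, 3, 4)]
print(features)  # [2.0, 0.5, 2.25]: concatenated, one value per filter
```

In the model, the concatenated vector has num_filters * len(filter_sizes) = 768 entries and goes through dropout into the final linear layer.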

```python
# coding: UTF-8
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F


class Config(object):
    """Configuration parameters"""

    def __init__(self, dataset, embedding):
        self.model_name = 'TextCNN'
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        self.predict_path = dataset + '/data/predict.txt'
        # self.class_list = [x.strip() for x in open(dataset + '/data/class.txt', encoding='utf-8').readlines()]  # class names
        # finance, real estate, stocks, education, technology, society, politics, sports, games, entertainment
        self.class_list = ['財經', '房產', '股票', '教育', '科技', '社會', '時政', '體育', '游戲', '娛樂']
        self.vocab_path = dataset + '/data/vocab.pkl'  # vocabulary
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # trained model checkpoint
        self.log_path = dataset + '/log/' + self.model_name
        self.embedding_pretrained = torch.tensor(
            np.load(dataset + '/data/' + embedding)["embeddings"].astype('float32')) \
            if embedding != 'random' else None  # pretrained embeddings
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # device
        self.dropout = 0.5  # dropout rate
        self.require_improvement = 1000  # stop early if no improvement for more than 1000 batches
        self.num_classes = len(self.class_list)  # number of classes
        self.n_vocab = 0  # vocabulary size, set at runtime
        self.num_epochs = 20  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # length every text is padded or truncated to
        self.learning_rate = 1e-3  # learning rate
        self.embed = self.embedding_pretrained.size(1) \
            if self.embedding_pretrained is not None else 300  # character embedding size
        self.filter_sizes = (2, 3, 4)  # convolution kernel heights
        self.num_filters = 256  # number of kernels per height (output channels)


'''Convolutional Neural Networks for Sentence Classification'''


class Model(nn.Module):
    def __init__(self, config):
        super(Model, self).__init__()
        if config.embedding_pretrained is not None:
            self.embedding = nn.Embedding.from_pretrained(config.embedding_pretrained, freeze=False)
        else:
            self.embedding = nn.Embedding(config.n_vocab, config.embed, padding_idx=config.n_vocab - 1)
        self.convs = nn.ModuleList(
            [nn.Conv2d(1, config.num_filters, (k, config.embed)) for k in config.filter_sizes])
        self.dropout = nn.Dropout(config.dropout)
        self.fc = nn.Linear(config.num_filters * len(config.filter_sizes), config.num_classes)

    def conv_and_pool(self, x, conv):
        x = F.relu(conv(x)).squeeze(3)  # [batch, num_filters, seq_len - k + 1]
        x = F.max_pool1d(x, x.size(2)).squeeze(2)  # max over time: [batch, num_filters]
        return x

    def forward(self, x):
        out = self.embedding(x[0])  # x = (token ids, seq_len); [batch, seq_len, embed]
        out = out.unsqueeze(1)  # add a channel axis: [batch, 1, seq_len, embed]
        out = torch.cat([self.conv_and_pool(out, conv) for conv in self.convs], 1)
        out = self.dropout(out)
        out = self.fc(out)
        return out
```

Training results

TextCNN training results

| Test time | Test loss | Test accuracy |
| --- | --- | --- |
| 0:04:23 | 0.3 | 90.58% |

| Class | Precision | Recall | F1-score | Support |
| --- | --- | --- | --- | --- |
| Finance (財經) | 0.9123 | 0.8950 | 0.9036 | 1000 |
| Real estate (房產) | 0.9043 | 0.9360 | 0.9199 | 1000 |
| Stocks (股票) | 0.8812 | 0.8230 | 0.8511 | 1000 |
| Education (教育) | 0.9540 | 0.9530 | 0.9535 | 1000 |
| Technology (科技) | 0.8354 | 0.8880 | 0.8609 | 1000 |
| Society (社會) | 0.8743 | 0.9110 | 0.8923 | 1000 |
| Politics (時政) | 0.8816 | 0.8860 | 0.8838 | 1000 |
| Sports (體育) | 0.9682 | 0.9430 | 0.9554 | 1000 |
| Games (游戲) | 0.9249 | 0.9110 | 0.9179 | 1000 |
| Entertainment (娛樂) | 0.9287 | 0.9120 | 0.9203 | 1000 |
| Accuracy | | | 0.9058 | 10000 |
| Macro avg | 0.9065 | 0.9058 | 0.9059 | 10000 |
| Weighted avg | 0.9065 | 0.9058 | 0.9059 | 10000 |
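The report columns follow the usual definitions: F1 is the harmonic mean of precision and recall, the macro average is the unweighted mean over classes, and the weighted average weights each class by its support. Because every class here has the same support, the two averages coincide, which is why the last two rows are identical. A small check using only numbers from the TextCNN table, starting with the Finance (財經) row:

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def macro_and_weighted(scores, supports):
    """Unweighted mean over classes, and the support-weighted mean."""
    macro = sum(scores) / len(scores)
    weighted = sum(s * n for s, n in zip(scores, supports)) / sum(supports)
    return macro, weighted


# Finance (財經) row of the TextCNN report: P = 0.9123, R = 0.8950
print(round(f1(0.9123, 0.8950), 4))  # 0.9036

# Precision column; with equal supports (1000 per class) both averages are the same
precisions = [0.9123, 0.9043, 0.8812, 0.9540, 0.8354,
              0.8743, 0.8816, 0.9682, 0.9249, 0.9287]
macro, weighted = macro_and_weighted(precisions, [1000] * 10)
print(round(macro, 4), round(weighted, 4))  # 0.9065 0.9065
```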

TextRNN

```python
# coding: UTF-8
import numpy as np
import torch
import torch.nn as nn


class Config(object):
    """Configuration parameters"""

    def __init__(self, dataset, embedding):
        self.model_name = 'TextRNN'
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        # self.class_list = [x.strip() for x in open(dataset + '/data/class.txt', encoding='utf-8').readlines()]
        # Same THUCNews classes, in the same order, as TextCNN (the order must match the label ids in the data)
        self.class_list = ['財經', '房產', '股票', '教育', '科技', '社會', '時政', '體育', '游戲', '娛樂']
        self.vocab_path = dataset + '/data/vocab.pkl'  # vocabulary
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # trained model checkpoint
        self.log_path = dataset + '/log/' + self.model_name
        self.embedding_pretrained = torch.tensor(
            np.load(dataset + '/data/' + embedding)["embeddings"].astype('float32')) \
            if embedding != 'random' else None  # pretrained embeddings
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # device
        self.dropout = 0.5  # dropout rate
        self.require_improvement = 1000  # stop early if no improvement for more than 1000 batches
        self.num_classes = len(self.class_list)  # number of classes
        self.n_vocab = 0  # vocabulary size, set at runtime
        self.num_epochs = 20  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # length every text is padded or truncated to
        self.learning_rate = 1e-3  # learning rate
        # character embedding size; equals the pretrained vector size when one is used
        self.embed = self.embedding_pretrained.size(1) \
            if self.embedding_pretrained is not None else 300
        self.hidden_size = 128  # LSTM hidden size
        self.num_layers = 2  # number of LSTM layers


'''Recurrent Neural Network for Text Classification with Multi-Task Learning'''


class Model(nn.Module):
    def __init__(self, config):
        super(Model, self).__init__()
        if config.embedding_pretrained is not None:
            self.embedding = nn.Embedding.from_pretrained(config.embedding_pretrained, freeze=False)
        else:
            self.embedding = nn.Embedding(config.n_vocab, config.embed, padding_idx=config.n_vocab - 1)
        self.lstm = nn.LSTM(config.embed, config.hidden_size, config.num_layers,
                            bidirectional=True, batch_first=True, dropout=config.dropout)
        self.fc = nn.Linear(config.hidden_size * 2, config.num_classes)

    def forward(self, x):
        x, _ = x
        out = self.embedding(x)  # [batch_size, seq_len, embedding] = [128, 32, 300]
        out, _ = self.lstm(out)
        out = self.fc(out[:, -1, :])  # hidden state at the last time step
        return out

    '''Variable-length RNN: about the same accuracy, even slightly lower...'''
    # def forward(self, x):
    #     x, seq_len = x
    #     out = self.embedding(x)
    #     _, idx_sort = torch.sort(seq_len, dim=0, descending=True)  # sort by length, longest first (indices)
    #     _, idx_unsort = torch.sort(idx_sort)  # indices that restore the original order
    #     out = torch.index_select(out, 0, idx_sort)
    #     seq_len = list(seq_len[idx_sort])
    #     out = nn.utils.rnn.pack_padded_sequence(out, seq_len, batch_first=True)
    #     # [batch_size, seq_len, num_directions * hidden_size]
    #     out, (hn, _) = self.lstm(out)
    #     out = torch.cat((hn[2], hn[3]), -1)
    #     # out, _ = nn.utils.rnn.pad_packed_sequence(out, batch_first=True)
    #     out = out.index_select(0, idx_unsort)
    #     out = self.fc(out)
    #     return out
```
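The commented-out variable-length forward() packs the batch with pack_padded_sequence, which by default expects sequences sorted by decreasing length. It therefore sorts the batch by seq_len and later restores the original order with a second sort: sorting the sort permutation yields its inverse. The same index bookkeeping in plain Python, on made-up lengths (the argsort helper is a hypothetical stand-in for the index output of torch.sort):

```python
def argsort(values, descending=False):
    """Indices that would sort `values` (like the second output of torch.sort)."""
    return sorted(range(len(values)), key=lambda i: values[i], reverse=descending)


seq_len = [12, 32, 5, 20]                     # made-up lengths of a batch of 4 texts
idx_sort = argsort(seq_len, descending=True)  # longest first, as packing expects
idx_unsort = argsort(idx_sort)                # inverse permutation of idx_sort

sorted_batch = [seq_len[i] for i in idx_sort]      # like index_select(out, 0, idx_sort)
restored = [sorted_batch[i] for i in idx_unsort]   # like out.index_select(0, idx_unsort)
print(idx_sort, idx_unsort)  # [1, 3, 0, 2] [2, 0, 3, 1]
print(restored)              # [12, 32, 5, 20]
```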

Training results

TextRNN training results

| Test time | Test loss | Test accuracy |
| --- | --- | --- |
| 0:05:07 | 0.29 | 91.03% |

| Class | Precision | Recall | F1-score | Support |
| --- | --- | --- | --- | --- |
| Finance (財經) | 0.9195 | 0.8790 | 0.8988 | 1000 |
| Real estate (房產) | 0.9181 | 0.9190 | 0.9185 | 1000 |
| Stocks (股票) | 0.8591 | 0.8290 | 0.8438 | 1000 |
| Education (教育) | 0.9349 | 0.9480 | 0.9414 | 1000 |
| Technology (科技) | 0.8642 | 0.8720 | 0.8681 | 1000 |
| Society (社會) | 0.9190 | 0.9080 | 0.9135 | 1000 |
| Politics (時政) | 0.8578 | 0.8990 | 0.8779 | 1000 |
| Sports (體育) | 0.9690 | 0.9690 | 0.9690 | 1000 |
| Games (游戲) | 0.9454 | 0.9350 | 0.9402 | 1000 |
| Entertainment (娛樂) | 0.9175 | 0.9450 | 0.9310 | 1000 |
| Accuracy | | | 0.9103 | 10000 |
| Macro avg | 0.9104 | 0.9103 | 0.9102 | 10000 |
| Weighted avg | 0.9104 | 0.9103 | 0.9102 | 10000 |
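Since the test set has exactly 1000 samples per class, the accuracy equals the unweighted mean of the per-class recalls: each recall is (correct predictions in that class) / 1000, and their mean is (all correct predictions) / 10000. That gives a quick consistency check on a copied report; using the TextRNN recall column above:

```python
# Recall column of the TextRNN report, in table order
recalls = [0.8790, 0.9190, 0.8290, 0.9480, 0.8720,
           0.9080, 0.8990, 0.9690, 0.9350, 0.9450]
support_per_class = 1000  # every class has 1000 test samples

# recall_i = correct_i / 1000, so correct_i can be recovered from each recall
correct = sum(round(r * support_per_class) for r in recalls)
accuracy = correct / (support_per_class * len(recalls))
mean_recall = sum(recalls) / len(recalls)
print(correct, accuracy)      # 9103 0.9103
print(round(mean_recall, 4))  # 0.9103
```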

TextRNN achieved the best result, with a test accuracy of 91.03%, 0.45 percentage points higher than TextCNN (90.58%).

TextRCNN

```python
# coding: UTF-8
import numpy as np
import torch
import torch.nn as nn
import torch.nn.functional as F


class Config(object):
    """Configuration parameters"""

    def __init__(self, dataset, embedding):
        self.model_name = 'TextRCNN'
        self.train_path = dataset + '/data/train.txt'  # training set
        self.dev_path = dataset + '/data/dev.txt'  # validation set
        self.test_path = dataset + '/data/test.txt'  # test set
        with open(dataset + '/data/class.txt', encoding='utf-8') as f:
            self.class_list = [x.strip() for x in f.readlines()]  # class names
        self.vocab_path = dataset + '/data/vocab.pkl'  # vocabulary
        self.save_path = dataset + '/saved_dict/' + self.model_name + '.ckpt'  # trained model checkpoint
        self.log_path = dataset + '/log/' + self.model_name
        self.embedding_pretrained = torch.tensor(
            np.load(dataset + '/data/' + embedding)["embeddings"].astype('float32')) \
            if embedding != 'random' else None  # pretrained embeddings
        self.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # device
        # nn.LSTM requires a value in [0, 1]; with num_layers = 1 it has no effect and PyTorch only warns
        self.dropout = 1.0  # dropout rate
        self.require_improvement = 1000  # stop early if no improvement for more than 1000 batches
        self.num_classes = len(self.class_list)  # number of classes
        self.n_vocab = 0  # vocabulary size, set at runtime
        self.num_epochs = 20  # number of epochs
        self.batch_size = 128  # mini-batch size
        self.pad_size = 32  # length every text is padded or truncated to
        self.learning_rate = 1e-3  # learning rate
        # character embedding size; equals the pretrained vector size when one is used
        self.embed = self.embedding_pretrained.size(1) \
            if self.embedding_pretrained is not None else 300
        self.hidden_size = 256  # LSTM hidden size
        self.num_layers = 1  # number of LSTM layers


'''Recurrent Convolutional Neural Networks for Text Classification'''


class Model(nn.Module):
    def __init__(self, config):
        super(Model, self).__init__()
        if config.embedding_pretrained is not None:
            self.embedding = nn.Embedding.from_pretrained(config.embedding_pretrained, freeze=False)
        else:
            self.embedding = nn.Embedding(config.n_vocab, config.embed, padding_idx=config.n_vocab - 1)
        self.lstm = nn.LSTM(config.embed, config.hidden_size, config.num_layers,
                            bidirectional=True, batch_first=True, dropout=config.dropout)
        self.maxpool = nn.MaxPool1d(config.pad_size)
        self.fc = nn.Linear(config.hidden_size * 2 + config.embed, config.num_classes)

    def forward(self, x):
        x, _ = x
        embed = self.embedding(x)  # [batch_size, seq_len, embed] = [128, 32, 300]
        out, _ = self.lstm(embed)  # [batch_size, seq_len, hidden_size * 2]
        out = torch.cat((embed, out), 2)  # [batch_size, seq_len, hidden_size * 2 + embed]
        out = F.relu(out)
        out = out.permute(0, 2, 1)  # [batch_size, channels, seq_len]
        out = self.maxpool(out).squeeze(2)  # max over time; keeps the batch axis even if batch_size == 1
        out = self.fc(out)
        return out
```
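For every time step, TextRCNN concatenates the character embedding with the BiLSTM output (both directions), applies ReLU, and then max-pools over the whole padded length, giving a vector of size hidden_size * 2 + embed for the classifier. The pure-Python sketch below performs the concat, ReLU, and max-over-time part on tiny made-up vectors, taking the BiLSTM outputs as given; rcnn_pool is a hypothetical helper name. In the PyTorch version the pooled tensor has shape [batch, channels, 1], so squeezing only that last axis (squeeze(2)) keeps the batch axis even when the batch holds a single text, whereas a bare squeeze() would drop it too.

```python
def rcnn_pool(embeddings, lstm_outputs):
    """Concatenate [embedding; BiLSTM output] per step, apply ReLU, then max over time."""
    steps = [e + h for e, h in zip(embeddings, lstm_outputs)]  # list concatenation per time step
    steps = [[max(v, 0.0) for v in step] for step in steps]     # ReLU
    return [max(column) for column in zip(*steps)]              # max over the time axis


# 3 time steps, embed = 2, BiLSTM output = 2 * hidden_size = 4 (made-up numbers)
emb = [[0.1, -0.3], [0.4, 0.2], [-0.5, 0.0]]
lstm = [[0.2, -0.1, 0.0, 0.6], [-0.2, 0.3, 0.1, 0.1], [0.5, 0.0, -0.4, 0.2]]

pooled = rcnn_pool(emb, lstm)
print(len(pooled))  # 6 == embed + 2 * hidden_size
print(pooled)       # [0.4, 0.2, 0.5, 0.3, 0.1, 0.6]
```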

Training results

TextRCNN training results

| Test time | Test loss | Test accuracy |
| --- | --- | --- |
| 0:03:20 | 0.29 | 90.96% |

| Class | Precision | Recall | F1-score | Support |
| --- | --- | --- | --- | --- |
| Finance (財經) | 0.9134 | 0.8970 | 0.9051 | 1000 |
| Real estate (房產) | 0.9051 | 0.9350 | 0.9198 | 1000 |
| Stocks (股票) | 0.8658 | 0.8320 | 0.8485 | 1000 |
| Education (教育) | 0.9295 | 0.9500 | 0.9397 | 1000 |
| Technology (科技) | 0.8352 | 0.8770 | 0.8556 | 1000 |
| Society (社會) | 0.8993 | 0.9290 | 0.9139 | 1000 |
| Politics (時政) | 0.8921 | 0.8680 | 0.8799 | 1000 |
| Sports (體育) | 0.9851 | 0.9670 | 0.9743 | 1000 |
| Games (游戲) | 0.9551 | 0.9140 | 0.9341 | 1000 |
| Entertainment (娛樂) | 0.9233 | 0.9270 | 0.9251 | 1000 |
| Accuracy | | | 0.9096 | 10000 |
| Macro avg | 0.9101 | 0.9096 | 0.9096 | 10000 |
| Weighted avg | 0.9101 | 0.9096 | 0.9096 | 10000 |

TextRCNN reached a test accuracy of 90.96%, close to both TextCNN (90.58%) and TextRNN (91.03%).

| Model | Time | Test accuracy | CPU |
| --- | --- | --- | --- |
| TextCNN | 0:04:23 | 90.58% | Core i5 |
| TextRNN | 0:05:07 | 91.03% | Core i5 |
| TextRCNN | 0:03:20 | 90.96% | Core i5 |
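The times in the table are easier to compare once converted to seconds. The snippet below only reuses the times and test accuracies reported in this post; to_seconds is a hypothetical helper for the H:MM:SS format used above.

```python
def to_seconds(hms):
    """Convert an 'H:MM:SS' string to seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(':'))
    return hours * 3600 + minutes * 60 + seconds


results = {  # model: (time, test accuracy) as reported above
    'TextCNN': ('0:04:23', 0.9058),
    'TextRNN': ('0:05:07', 0.9103),
    'TextRCNN': ('0:03:20', 0.9096),
}
slowest = max(to_seconds(t) for t, _ in results.values())
for model, (t, acc) in results.items():
    secs = to_seconds(t)
    print(f'{model:<9} {secs:>4d} s  {secs / slowest:6.1%} of the slowest  acc {acc:.2%}')
```

With these numbers TextRCNN needs about 65% of TextRNN's time for an accuracy 0.07 percentage points lower.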

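We trained TextCNN, TextRNN, and TextRCNN on the same dataset (on a Core i5 CPU) and compared their time, accuracy, recall, and F1-score to determine which model performs best on this data and hardware. TextRNN gave the best results (91.03% test accuracy) but also took the longest (0:05:07). TextRCNN's accuracy (90.96%) was close to that of the other two models, and it needed the least time (0:03:20).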
