Emulating ffplay playback with FFmpeg + JavaCPP

- 1. [ffplay: a simple media player based on the SDL and FFmpeg libraries](https://ffmpeg.org/ffplay.html)

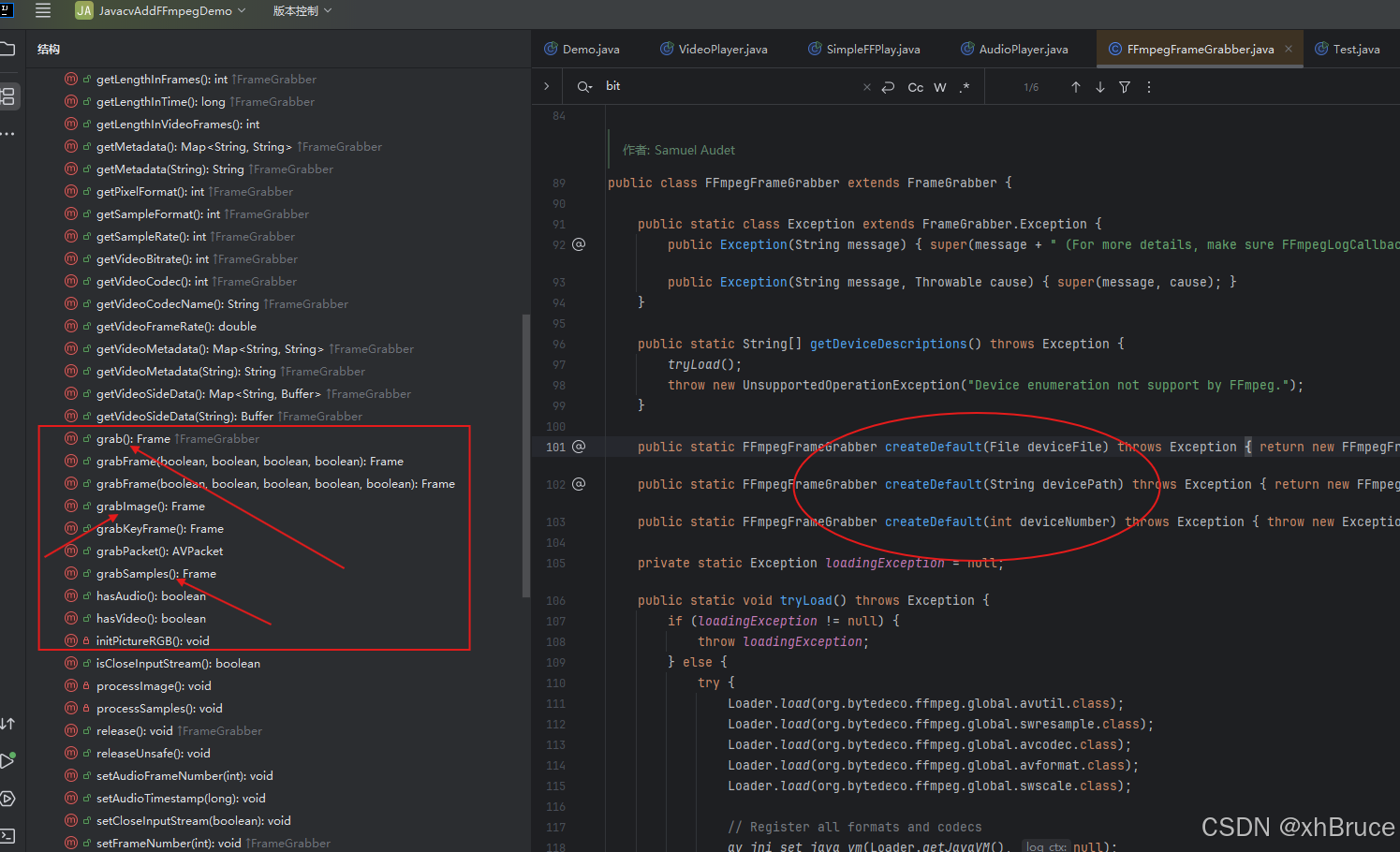

- 2. FFmpeg frame grabber: FFmpegFrameGrabber

- 2.1 grabSamples()

- 2.2 grabImage()

- 2.3 grab(): fetching audio and video frames

Using FFmpeg + JavaCPP + JavaCV

ffmpeg-6.0\fftools\ffplay.c

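1. ffplay: a simple media player based on the SDL and FFmpeg libraries

Source file: ffmpeg-6.0\fftools\ffplay.c. FFplay is a very simple and portable media player built on the FFmpeg libraries and the SDL library. It is mostly used as a testbed for the various FFmpeg APIs.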

parse_options(NULL, argc, argv, options, opt_input_file);

is = stream_open(input_filename, file_iformat);

event_loop(is);

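Inside event_loop, the per-frame refresh is driven by refresh_loop_wait_event(cur_stream, &event);, which in turn calls video_refresh to pick the next frame. On the JavaCV side, the role of fetching frames roughly corresponds to the FFmpeg frame grabber, FFmpegFrameGrabber.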

ffplay -i "G:\視頻\動漫\長安三萬里2023.mp4" -hide_banner -x 800 -y 600

    /* Called from the main */
    int main(int argc, char **argv)
    {
        int flags;
        VideoState *is;

        init_dynload();

        av_log_set_flags(AV_LOG_SKIP_REPEATED);
        parse_loglevel(argc, argv, options);

        /* register all codecs, demux and protocols */
    #if CONFIG_AVDEVICE
        avdevice_register_all();
    #endif
        avformat_network_init();

        signal(SIGINT , sigterm_handler); /* Interrupt (ANSI). */
        signal(SIGTERM, sigterm_handler); /* Termination (ANSI). */

        show_banner(argc, argv, options);

        parse_options(NULL, argc, argv, options, opt_input_file);

        if (!input_filename) {
            show_usage();
            av_log(NULL, AV_LOG_FATAL, "An input file must be specified\n");
            av_log(NULL, AV_LOG_FATAL,
                   "Use -h to get full help or, even better, run 'man %s'\n", program_name);
            exit(1);
        }

        if (display_disable) {
            video_disable = 1;
        }
        flags = SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER;
        if (audio_disable)
            flags &= ~SDL_INIT_AUDIO;
        else {
            /* Try to work around an occasional ALSA buffer underflow issue when the
             * period size is NPOT due to ALSA resampling by forcing the buffer size. */
            if (!SDL_getenv("SDL_AUDIO_ALSA_SET_BUFFER_SIZE"))
                SDL_setenv("SDL_AUDIO_ALSA_SET_BUFFER_SIZE","1", 1);
        }
        if (display_disable)
            flags &= ~SDL_INIT_VIDEO;
        if (SDL_Init (flags)) {
            av_log(NULL, AV_LOG_FATAL, "Could not initialize SDL - %s\n", SDL_GetError());
            av_log(NULL, AV_LOG_FATAL, "(Did you set the DISPLAY variable?)\n");
            exit(1);
        }

        SDL_EventState(SDL_SYSWMEVENT, SDL_IGNORE);
        SDL_EventState(SDL_USEREVENT, SDL_IGNORE);

        if (!display_disable) {
            int flags = SDL_WINDOW_HIDDEN;
            if (alwaysontop)
    #if SDL_VERSION_ATLEAST(2,0,5)
                flags |= SDL_WINDOW_ALWAYS_ON_TOP;
    #else
                av_log(NULL, AV_LOG_WARNING, "Your SDL version doesn't support SDL_WINDOW_ALWAYS_ON_TOP. Feature will be inactive.\n");
    #endif
            if (borderless)
                flags |= SDL_WINDOW_BORDERLESS;
            else
                flags |= SDL_WINDOW_RESIZABLE;

    #ifdef SDL_HINT_VIDEO_X11_NET_WM_BYPASS_COMPOSITOR
            SDL_SetHint(SDL_HINT_VIDEO_X11_NET_WM_BYPASS_COMPOSITOR, "0");
    #endif
            window = SDL_CreateWindow(program_name, SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED, default_width, default_height, flags);
            SDL_SetHint(SDL_HINT_RENDER_SCALE_QUALITY, "linear");
            if (window) {
                renderer = SDL_CreateRenderer(window, -1, SDL_RENDERER_ACCELERATED | SDL_RENDERER_PRESENTVSYNC);
                if (!renderer) {
                    av_log(NULL, AV_LOG_WARNING, "Failed to initialize a hardware accelerated renderer: %s\n", SDL_GetError());
                    renderer = SDL_CreateRenderer(window, -1, 0);
                }
                if (renderer) {
                    if (!SDL_GetRendererInfo(renderer, &renderer_info))
                        av_log(NULL, AV_LOG_VERBOSE, "Initialized %s renderer.\n", renderer_info.name);
                }
            }
            if (!window || !renderer || !renderer_info.num_texture_formats) {
                av_log(NULL, AV_LOG_FATAL, "Failed to create window or renderer: %s", SDL_GetError());
                do_exit(NULL);
            }
        }

        is = stream_open(input_filename, file_iformat);
        if (!is) {
            av_log(NULL, AV_LOG_FATAL, "Failed to initialize VideoState!\n");
            do_exit(NULL);
        }

        event_loop(is);

        /* never returns */

        return 0;
    }

    static void video_refresh(void *opaque, double *remaining_time)
    {
        VideoState *is = opaque;
        double time;

        Frame *sp, *sp2;

        if (!is->paused && get_master_sync_type(is) == AV_SYNC_EXTERNAL_CLOCK && is->realtime)
            check_external_clock_speed(is);

        if (!display_disable && is->show_mode != SHOW_MODE_VIDEO && is->audio_st) {
            time = av_gettime_relative() / 1000000.0;
            if (is->force_refresh || is->last_vis_time + rdftspeed < time) {
                video_display(is);
                is->last_vis_time = time;
            }
            *remaining_time = FFMIN(*remaining_time, is->last_vis_time + rdftspeed - time);
        }

        if (is->video_st) {
    retry:
            if (frame_queue_nb_remaining(&is->pictq) == 0) {
                // nothing to do, no picture to display in the queue
            } else {
                double last_duration, duration, delay;
                Frame *vp, *lastvp;

                /* dequeue the picture */
                lastvp = frame_queue_peek_last(&is->pictq);
                vp = frame_queue_peek(&is->pictq);

                if (vp->serial != is->videoq.serial) {
                    frame_queue_next(&is->pictq);
                    goto retry;
                }

                if (lastvp->serial != vp->serial)
                    is->frame_timer = av_gettime_relative() / 1000000.0;

                if (is->paused)
                    goto display;

                /* compute nominal last_duration */
                last_duration = vp_duration(is, lastvp, vp);
                delay = compute_target_delay(last_duration, is);

                time= av_gettime_relative()/1000000.0;
                if (time < is->frame_timer + delay) {
                    *remaining_time = FFMIN(is->frame_timer + delay - time, *remaining_time);
                    goto display;
                }

                is->frame_timer += delay;
                if (delay > 0 && time - is->frame_timer > AV_SYNC_THRESHOLD_MAX)
                    is->frame_timer = time;

                SDL_LockMutex(is->pictq.mutex);
                if (!isnan(vp->pts))
                    update_video_pts(is, vp->pts, vp->pos, vp->serial);
                SDL_UnlockMutex(is->pictq.mutex);

                if (frame_queue_nb_remaining(&is->pictq) > 1) {
                    Frame *nextvp = frame_queue_peek_next(&is->pictq);
                    duration = vp_duration(is, vp, nextvp);
                    if(!is->step && (framedrop>0 || (framedrop && get_master_sync_type(is) != AV_SYNC_VIDEO_MASTER)) && time > is->frame_timer + duration){
                        is->frame_drops_late++;
                        frame_queue_next(&is->pictq);
                        goto retry;
                    }
                }

                if (is->subtitle_st) {
                    while (frame_queue_nb_remaining(&is->subpq) > 0) {
                        sp = frame_queue_peek(&is->subpq);

                        if (frame_queue_nb_remaining(&is->subpq) > 1)
                            sp2 = frame_queue_peek_next(&is->subpq);
                        else
                            sp2 = NULL;

                        if (sp->serial != is->subtitleq.serial
                                || (is->vidclk.pts > (sp->pts + ((float) sp->sub.end_display_time / 1000)))
                                || (sp2 && is->vidclk.pts > (sp2->pts + ((float) sp2->sub.start_display_time / 1000))))
                        {
                            if (sp->uploaded) {
                                int i;
                                for (i = 0; i < sp->sub.num_rects; i++) {
                                    AVSubtitleRect *sub_rect = sp->sub.rects[i];
                                    uint8_t *pixels;
                                    int pitch, j;

                                    if (!SDL_LockTexture(is->sub_texture, (SDL_Rect *)sub_rect, (void **)&pixels, &pitch)) {
                                        for (j = 0; j < sub_rect->h; j++, pixels += pitch)
                                            memset(pixels, 0, sub_rect->w << 2);
                                        SDL_UnlockTexture(is->sub_texture);
                                    }
                                }
                            }
                            frame_queue_next(&is->subpq);
                        } else {
                            break;
                        }
                    }
                }

                frame_queue_next(&is->pictq);
                is->force_refresh = 1;

                if (is->step && !is->paused)
                    stream_toggle_pause(is);
            }
    display:
            /* display picture */
            if (!display_disable && is->force_refresh && is->show_mode == SHOW_MODE_VIDEO && is->pictq.rindex_shown)
                video_display(is);
        }
        is->force_refresh = 0;
        if (show_status) {
            AVBPrint buf;
            static int64_t last_time;
            int64_t cur_time;
            int aqsize, vqsize, sqsize;
            double av_diff;

            cur_time = av_gettime_relative();
            if (!last_time || (cur_time - last_time) >= 30000) {
                aqsize = 0;
                vqsize = 0;
                sqsize = 0;
                if (is->audio_st)
                    aqsize = is->audioq.size;
                if (is->video_st)
                    vqsize = is->videoq.size;
                if (is->subtitle_st)
                    sqsize = is->subtitleq.size;
                av_diff = 0;
                if (is->audio_st && is->video_st)
                    av_diff = get_clock(&is->audclk) - get_clock(&is->vidclk);
                else if (is->video_st)
                    av_diff = get_master_clock(is) - get_clock(&is->vidclk);
                else if (is->audio_st)
                    av_diff = get_master_clock(is) - get_clock(&is->audclk);

                av_bprint_init(&buf, 0, AV_BPRINT_SIZE_AUTOMATIC);
                av_bprintf(&buf,
                          "%7.2f %s:%7.3f fd=%4d aq=%5dKB vq=%5dKB sq=%5dB f=%"PRId64"/%"PRId64" \r",
                          get_master_clock(is),
                          (is->audio_st && is->video_st) ? "A-V" : (is->video_st ? "M-V" : (is->audio_st ? "M-A" : " ")),
                          av_diff,
                          is->frame_drops_early + is->frame_drops_late,
                          aqsize / 1024,
                          vqsize / 1024,
                          sqsize,
                          is->video_st ? is->viddec.avctx->pts_correction_num_faulty_dts : 0,
                          is->video_st ? is->viddec.avctx->pts_correction_num_faulty_pts : 0);

                if (show_status == 1 && AV_LOG_INFO > av_log_get_level())
                    fprintf(stderr, "%s", buf.str);
                else
                    av_log(NULL, AV_LOG_INFO, "%s", buf.str);

                fflush(stderr);
                av_bprint_finalize(&buf, NULL);

                last_time = cur_time;
            }
        }
    }
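The core timing decision in video_refresh (wait, show, or drop the next frame) can be hard to see in the C listing. The following is a minimal Java sketch of that decision only, under simplifying assumptions: it ignores pause, step mode, serial numbers, subtitles and the framedrop option (it always allows dropping). The class `FrameTimingSketch` and its method names are hypothetical, not part of ffplay or JavaCV; only the 0.1 second threshold is taken from AV_SYNC_THRESHOLD_MAX in ffplay.c.

```java
/** Simplified, hypothetical model of the per-frame timing decision in ffplay's video_refresh(). */
final class FrameTimingSketch {
    enum Action { WAIT, SHOW, DROP }

    /** Same value as AV_SYNC_THRESHOLD_MAX in ffplay.c, in seconds. */
    static final double SYNC_THRESHOLD_MAX = 0.1;

    /** Time (seconds) at which the previously shown frame was due; plays the role of is->frame_timer. */
    double frameTimer;

    FrameTimingSketch(double startTime) {
        this.frameTimer = startTime;
    }

    /**
     * @param now          current clock in seconds
     * @param delay        target delay for the frame being considered
     * @param nextDuration nominal duration of that frame (distance to the following one)
     * @param hasNext      whether another frame is already queued behind it
     */
    Action decide(double now, double delay, double nextDuration, boolean hasNext) {
        if (now < frameTimer + delay) {
            return Action.WAIT;              // too early: keep showing the current picture
        }
        frameTimer += delay;
        if (delay > 0 && now - frameTimer > SYNC_THRESHOLD_MAX) {
            frameTimer = now;                // fell far behind: resynchronise the timer
        }
        if (hasNext && now > frameTimer + nextDuration) {
            return Action.DROP;              // already late for the following frame as well
        }
        return Action.SHOW;
    }

    public static void main(String[] args) {
        FrameTimingSketch timing = new FrameTimingSketch(0.0);
        System.out.println(timing.decide(0.01, 0.04, 0.04, true)); // WAIT
        System.out.println(timing.decide(0.05, 0.04, 0.04, true)); // SHOW
    }
}
```

A Java player built on FFmpegFrameGrabber could apply the same idea to frame timestamps instead of sleeping a fixed interval per frame.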

2. FFmpeg frame grabber: FFmpegFrameGrabber

JavaCV 1.5.12 API

JavaCPP Presets for FFmpeg 7.1.1-1.5.12 API

2.1 grabSamples()

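grabber.grabSamples() returns audio frames only.

Playback goes through javax.sound.sampled, so an AudioFormat with PCM encoding has to be configured to match the grabber's output. Note that the number of bits per sample cannot be read reliably from the stream (bits_per_raw_sample is frequently 0), so the code falls back to 16 bits. That fallback matches the ShortBuffer samples the grabber returns by default, which are 16 bits each.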

    package org.xhbruce.test;

    import org.bytedeco.ffmpeg.avcodec.AVCodecParameters;
    import org.bytedeco.ffmpeg.avformat.AVFormatContext;
    import org.bytedeco.ffmpeg.avformat.AVStream;
    import org.bytedeco.ffmpeg.global.avutil;
    import org.bytedeco.javacv.FFmpegFrameGrabber;
    import org.bytedeco.javacv.Frame;
    import org.xhbruce.Log.XLog;

    import javax.sound.sampled.AudioFormat;
    import javax.sound.sampled.AudioSystem;
    import javax.sound.sampled.DataLine;
    import javax.sound.sampled.SourceDataLine;
    import java.nio.Buffer;
    import java.nio.ByteBuffer;
    import java.nio.ShortBuffer;

    public class AudioPlayer implements Runnable {
        private String filePath;

        public AudioPlayer(String filePath) {
            this.filePath = filePath;
        }

        public void playAudio() throws Exception {
            FFmpegFrameGrabber grabber = new FFmpegFrameGrabber(filePath);
            grabber.start();

            int sampleRate = grabber.getSampleRate();
            int audioChannels = grabber.getAudioChannels();
            int audioBitsPerSample = getAudioBitsPerSample(grabber);
            XLog.d("audioBitsPerSample=" + audioBitsPerSample);
            // The ShortBuffer branch below writes 2 bytes per sample,
            // so any value other than 16 here would not match the data.
            if (audioBitsPerSample <= 0) {
                audioBitsPerSample = 16; // default to 16-bit depth
            }

            // signed PCM, little-endian
            AudioFormat audioFormat = new AudioFormat(
                    (float) sampleRate,
                    audioBitsPerSample,
                    audioChannels,
                    true,
                    false
            );

            DataLine.Info info = new DataLine.Info(SourceDataLine.class, audioFormat);
            SourceDataLine line = (SourceDataLine) AudioSystem.getLine(info);
            line.open(audioFormat);
            line.start();

            Frame frame;
            while ((frame = grabber.grabSamples()) != null) {
                if (frame.samples == null || frame.samples.length == 0) continue;

                Buffer buffer = frame.samples[0];
                byte[] audioBytes;

                if (buffer instanceof ShortBuffer) {
                    ShortBuffer shortBuffer = (ShortBuffer) buffer;
                    audioBytes = new byte[shortBuffer.remaining() * 2]; // Each short is 2 bytes
                    for (int i = 0; i < shortBuffer.remaining(); i++) {
                        short value = shortBuffer.get(i);
                        audioBytes[i * 2] = (byte) (value & 0xFF);            // low byte first
                        audioBytes[i * 2 + 1] = (byte) ((value >> 8) & 0xFF); // then high byte
                    }
                } else if (buffer instanceof ByteBuffer) {
                    ByteBuffer byteBuffer = (ByteBuffer) buffer;
                    audioBytes = new byte[byteBuffer.remaining()];
                    byteBuffer.get(audioBytes);
                } else {
                    throw new IllegalArgumentException("Unsupported buffer type: " + buffer.getClass());
                }

                line.write(audioBytes, 0, audioBytes.length);
            }

            line.drain();
            line.stop();
            line.close();
            grabber.stop();
        }

        private int getAudioBitsPerSample(FFmpegFrameGrabber grabber) {
            AVFormatContext formatContext = grabber.getFormatContext();
            if (formatContext == null) {
                return -1;
            }

            int nbStreams = formatContext.nb_streams();
            for (int i = 0; i < nbStreams; i++) {
                AVStream stream = formatContext.streams(i);
                if (stream.codecpar().codec_type() == avutil.AVMEDIA_TYPE_AUDIO) {
                    AVCodecParameters codecParams = stream.codecpar();
                    return codecParams.bits_per_raw_sample();
                }
            }
            return -1;
        }

        @Override
        public void run() {
            try {
                playAudio();
            } catch (Exception e) {
                throw new RuntimeException(e);
            }
        }

        public static void main(String[] args) {
            // new Thread(new AudioPlayer("G:\\視頻\\動漫\\長安三萬里2023.mp4")).start();
            new Thread(new AudioPlayer("F:\\Music\\Let Me Down Slowly.mp3")).start();
        }
    }
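The two audio-specific steps in the player above, describing the PCM format and turning 16-bit samples into little-endian bytes, can be tried without JavaCV or an audio device. The sketch below uses only the JDK; `PcmSketch` and its method names are hypothetical helpers, and it assumes interleaved signed 16-bit samples, as in the ShortBuffer branch of the listing. The bulk copy through a little-endian view is an alternative to the hand-written byte loop, not what the original code does.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.ShortBuffer;
import javax.sound.sampled.AudioFormat;

/** Hypothetical helpers for 16-bit PCM playback; not part of JavaCV. */
final class PcmSketch {

    /** Signed 16-bit little-endian PCM, matching the byte order produced below. */
    static AudioFormat pcm16(int sampleRate, int channels) {
        return new AudioFormat((float) sampleRate, 16, channels, true, false);
    }

    /** Copies the remaining samples into a little-endian byte array without moving the caller's position. */
    static byte[] toLittleEndianBytes(ShortBuffer samples) {
        ShortBuffer view = samples.duplicate();          // independent position and limit
        byte[] out = new byte[view.remaining() * 2];     // two bytes per 16-bit sample
        ByteBuffer.wrap(out)
                  .order(ByteOrder.LITTLE_ENDIAN)
                  .asShortBuffer()
                  .put(view);                            // bulk copy, low byte first
        return out;
    }

    public static void main(String[] args) {
        AudioFormat format = pcm16(44100, 2);
        // One frame = one sample per channel: 2 channels * 2 bytes
        System.out.println("frame size = " + format.getFrameSize());
        byte[] bytes = toLittleEndianBytes(ShortBuffer.wrap(new short[] {1, -2}));
        System.out.println("bytes = " + bytes.length);
    }
}
```

The frame size is useful when choosing a line buffer size, because SourceDataLine.write expects a whole number of frames.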

2.2 grabImage()

grabImage() returns video frames only.

Each frame is shown as an image in a JLabel inside a JFrame.

    package org.xhbruce.test;

    import org.bytedeco.javacv.FFmpegFrameGrabber;
    import org.bytedeco.javacv.Frame;
    import org.bytedeco.javacv.Java2DFrameConverter;

    import javax.swing.*;
    import java.awt.*;

    public class VideoPlayer extends JFrame implements Runnable {
        private String filePath;
        private JLabel videoLabel;

        public VideoPlayer(String title, String filePath) {
            super(title);
            this.filePath = filePath;
            setSize(800, 600);
            setDefaultCloseOperation(JFrame.EXIT_ON_CLOSE);
            setLocationRelativeTo(null);

            videoLabel = new JLabel();
            getContentPane().add(videoLabel, BorderLayout.CENTER);
        }

        public void playVideo() throws Exception {
            FFmpegFrameGrabber grabber = new FFmpegFrameGrabber(filePath);
            grabber.start();

            int width = grabber.getImageWidth();
            int height = grabber.getImageHeight();

            Java2DFrameConverter converter = new Java2DFrameConverter();

            Frame frame;
            while ((frame = grabber.grabImage()) != null) {
                if (frame.image == null) continue;

                Image image = converter.convert(frame);

                // Scale to fit the window while keeping the aspect ratio
                int windowWidth = getWidth();
                int windowHeight = getHeight();
                float scaleX = (float) width / windowWidth;
                float scaleY = (float) height / windowHeight;
                float maxScale = Math.max(scaleX, scaleY);
                ImageIcon icon = new ImageIcon(image.getScaledInstance(
                        (int) (width / maxScale), (int) (height / maxScale), Image.SCALE_SMOOTH));
                SwingUtilities.invokeLater(() -> videoLabel.setIcon(icon));

                Thread.sleep(40); // control the frame rate (about 25 fps)
            }

            grabber.stop();
        }

        @Override
        public void run() {
            try {
                setVisible(true);
                playVideo();
            } catch (Exception e) {
                throw new RuntimeException(e);
            }
        }

        public static void main(String[] args) {
            String mp4Url = "G:\\視頻\\動漫\\長安三萬里2023.mp4";
            new Thread(new VideoPlayer("JavaCV Video Player", mp4Url)).start();
        }
    }
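The video loop above does two small calculations per frame: fitting the picture into the window and deciding how long to sleep. Both can be isolated and checked with plain JDK code. In the sketch below, `VideoLoopMath` and its methods are hypothetical helpers. The pacing method is a swapped-in alternative to the fixed `Thread.sleep(40)`: it assumes each frame carries a presentation timestamp in microseconds (JavaCV's Frame has a `timestamp` field that is documented that way, but treat that as an assumption to verify for your version).

```java
import java.awt.Dimension;

/** Hypothetical helpers for a simple video loop; not part of JavaCV. */
final class VideoLoopMath {

    /** Largest size with the source aspect ratio that fits inside the box. */
    static Dimension fit(int srcW, int srcH, int boxW, int boxH) {
        if (srcW <= 0 || srcH <= 0 || boxW <= 0 || boxH <= 0) {
            throw new IllegalArgumentException("all dimensions must be positive");
        }
        double scale = Math.min((double) boxW / srcW, (double) boxH / srcH);
        int w = Math.max(1, (int) Math.round(srcW * scale));
        int h = Math.max(1, (int) Math.round(srcH * scale));
        return new Dimension(w, h);
    }

    /**
     * Milliseconds to wait before showing a frame, or 0 if it is already due.
     *
     * @param frameMicros      presentation timestamp of this frame, microseconds
     * @param firstFrameMicros presentation timestamp of the first frame shown
     * @param startNanos       System.nanoTime() captured when the first frame was shown
     * @param nowNanos         current System.nanoTime()
     */
    static long sleepMillis(long frameMicros, long firstFrameMicros, long startNanos, long nowNanos) {
        long dueNanos = startNanos + (frameMicros - firstFrameMicros) * 1_000L;
        return Math.max(0L, (dueNanos - nowNanos) / 1_000_000L);
    }

    public static void main(String[] args) {
        Dimension d = fit(1920, 1080, 800, 600);
        System.out.println(d.width + "x" + d.height); // 800x450

        // A frame stamped 40 ms into the stream, checked 10 ms after playback began
        System.out.println(sleepMillis(40_000L, 0L, 0L, 10_000_000L)); // 30
    }
}
```

Pacing against timestamps keeps the playback speed tied to the media rather than to how long decoding and scaling happen to take on a given machine.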

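2.3 grab(): fetching audio and video frames

This combines the two players above in a single loop over grab(). The result stutters and drifts out of sync, and audio suffers the most: the loop sleeps 40 ms after every frame, audio frames included, and converts and scales each picture on the same thread that feeds the audio line. Handling audio and video on separate threads is recommended.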

    package org.xhbruce.test;

    import org.bytedeco.ffmpeg.avcodec.AVCodecParameters;
    import org.bytedeco.ffmpeg.avformat.AVFormatContext;
    import org.bytedeco.ffmpeg.avformat.AVStream;
    import org.bytedeco.ffmpeg.global.avutil;
    import org.bytedeco.javacv.FFmpegFrameGrabber;
    import org.bytedeco.javacv.Frame;
    import org.bytedeco.javacv.Java2DFrameConverter;
    import org.xhbruce.Log.XLog;

    import javax.sound.sampled.AudioFormat;
    import javax.sound.sampled.AudioSystem;
    import javax.sound.sampled.DataLine;
    import javax.sound.sampled.SourceDataLine;
    import javax.swing.*;
    import java.awt.*;
    import java.awt.image.BufferedImage;
    import java.nio.Buffer;
    import java.nio.ByteBuffer;
    import java.nio.ShortBuffer;

    public class SimpleFFPlay extends JFrame implements Runnable {
        private String filePath;
        private JLabel videoLabel;

        public SimpleFFPlay(String filePath) {
            this.filePath = filePath;
            setTitle("Simple FFPlay - JavaCV");
            setSize(800, 600);
            setDefaultCloseOperation(JFrame.EXIT_ON_CLOSE);
            setLocationRelativeTo(null);

            videoLabel = new JLabel();
            getContentPane().add(videoLabel, BorderLayout.CENTER);
            setVisible(true);
        }

        @Override
        public void run() {
            FFmpegFrameGrabber grabber = null;
            try {
                grabber = FFmpegFrameGrabber.createDefault(filePath);
            } catch (FFmpegFrameGrabber.Exception e) {
                throw new RuntimeException(e);
            }
            Java2DFrameConverter converter = new Java2DFrameConverter();

            try {
                grabber.start();
                //grabber.setFrameRate(30);

                // audio playback setup
                int sampleRate = grabber.getSampleRate();
                int audioChannels = grabber.getAudioChannels();
                int audioBitsPerSample = getAudioBitsPerSample(grabber);
                XLog.d("sampleRate=" + sampleRate + ", audioChannels=" + audioChannels
                        + ", audioBitsPerSample=" + audioBitsPerSample
                        + "; getAudioBitrate=" + grabber.getAudioBitrate());
                if (audioBitsPerSample <= 0) {
                    audioBitsPerSample = 16; // default to 16-bit depth
                }

                // signed PCM, little-endian
                AudioFormat audioFormat = new AudioFormat(
                        (float) sampleRate,
                        audioBitsPerSample/*grabber.getAudioBitsPerSample()*/,
                        audioChannels,
                        true,
                        false
                );

                DataLine.Info info = new DataLine.Info(SourceDataLine.class, audioFormat, 4096);
                SourceDataLine audioLine = (SourceDataLine) AudioSystem.getLine(info);
                //SourceDataLine audioLine = AudioSystem.getSourceDataLine(audioFormat);
                audioLine.open(audioFormat);
                audioLine.start();

                // main loop: read frames
                Frame frame;
                while ((frame = grabber.grab()) != null) {
                    if (frame.image != null) {
                        // video frame
                        BufferedImage image = converter.getBufferedImage(frame);
                        int width = grabber.getImageWidth();
                        int height = grabber.getImageHeight();
                        paintFrame(image, width, height);
                    }
                    if (frame.samples != null && frame.samples.length > 0) {
                        // audio frame
                        Buffer buffer = frame.samples[0];
                        byte[] audioBytes;

                        if (buffer instanceof ShortBuffer) {
                            ShortBuffer shortBuffer = (ShortBuffer) buffer;
                            audioBytes = new byte[shortBuffer.remaining() * 2]; // Each short is 2 bytes
                            for (int i = 0; i < shortBuffer.remaining(); i++) {
                                short value = shortBuffer.get(i);
                                audioBytes[i * 2] = (byte) (value & 0xFF);
                                audioBytes[i * 2 + 1] = (byte) ((value >> 8) & 0xFF);
                            }
                        } else if (buffer instanceof ByteBuffer) {
                            ByteBuffer byteBuffer = (ByteBuffer) buffer;
                            audioBytes = new byte[byteBuffer.remaining()];
                            byteBuffer.get(audioBytes);
                        } else {
                            throw new IllegalArgumentException("Unsupported buffer type: " + buffer.getClass());
                        }

                        audioLine.write(audioBytes, 0, audioBytes.length);
                    }

                    // control the frame rate; note this also delays audio frames
                    Thread.sleep(40);
                }

                audioLine.drain();
                audioLine.stop();
                audioLine.close();
                grabber.stop();
            } catch (Exception e) {
                e.printStackTrace();
            }
        }

        private int getAudioBitsPerSample(FFmpegFrameGrabber grabber) {
            AVFormatContext formatContext = grabber.getFormatContext();
            if (formatContext == null) {
                return -1;
            }

            int nbStreams = formatContext.nb_streams();
            for (int i = 0; i < nbStreams; i++) {
                AVStream stream = formatContext.streams(i);
                if (stream.codecpar().codec_type() == avutil.AVMEDIA_TYPE_AUDIO) {
                    AVCodecParameters codecParams = stream.codecpar();
                    return codecParams.bits_per_raw_sample();
                }
            }
            return -1;
        }

        private void paintFrame(BufferedImage image, int width, int height) {
            int windowWidth = getWidth();
            int windowHeight = getHeight();
            float scaleX = (float) width / windowWidth;
            float scaleY = (float) height / windowHeight;
            float maxScale = Math.max(scaleX, scaleY);
            //XLog.d("width="+width+", height="+height+"; windowWidth="+windowWidth+", windowHeight="+windowHeight+"; scaleX="+scaleX+", "+scaleY);
            ImageIcon icon = new ImageIcon(image.getScaledInstance(
                    (int) (width / maxScale), (int) (height / maxScale), Image.SCALE_SMOOTH));
            SwingUtilities.invokeLater(() -> {
                // setSize(800, 600);
                videoLabel.setIcon(icon);
            });
        }

        public static void main(String[] args) {
            if (args.length < 1) {
                System.out.println("Usage: java SimpleFFPlay <video_file>");
                return;
            }
            new Thread(new SimpleFFPlay(args[0])).start();
        }
    }
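The recommendation to handle audio and video on separate threads can be sketched with two bounded queues: one reader thread routes frames by type, and one consumer per queue plays them at its own pace. This is a minimal sketch using only the JDK, not a drop-in replacement for the player above. `SplitPlaybackSketch`, `Item` and the sink parameters are hypothetical stand-ins; in a real player the reader would call grabber.grab() and the sinks would write to the SourceDataLine and update the JLabel. One assumption to verify before adapting it: the grabber may reuse the Frame object it returns, so a frame would probably need to be copied (for example with Frame.clone()) before being queued.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.function.Consumer;

/** Hypothetical sketch: one reader feeding separate audio and video consumer threads. */
final class SplitPlaybackSketch {

    /** Stand-in for a decoded frame; a real player would carry the frame data here. */
    static final class Item {
        final boolean audio;
        final long timestampMicros;

        Item(boolean audio, long timestampMicros) {
            this.audio = audio;
            this.timestampMicros = timestampMicros;
        }
    }

    /** Sentinel that tells a consumer its queue is finished; compared by identity. */
    private static final Item END = new Item(false, -1L);

    /** Routes items to two consumer threads and returns once both have drained their queues. */
    static void play(Iterable<Item> source, Consumer<Item> audioSink, Consumer<Item> videoSink) {
        BlockingQueue<Item> audioQueue = new ArrayBlockingQueue<>(64);
        BlockingQueue<Item> videoQueue = new ArrayBlockingQueue<>(16);
        Thread audio = new Thread(() -> drain(audioQueue, audioSink), "audio");
        Thread video = new Thread(() -> drain(videoQueue, videoSink), "video");
        audio.start();
        video.start();
        try {
            for (Item item : source) {
                // put() blocks when a queue is full, which throttles the reader
                (item.audio ? audioQueue : videoQueue).put(item);
            }
            audioQueue.put(END);
            videoQueue.put(END);
            audio.join();
            video.join();
        } catch (InterruptedException e) {
            audio.interrupt();
            video.interrupt();
            Thread.currentThread().interrupt();
        }
    }

    private static void drain(BlockingQueue<Item> queue, Consumer<Item> sink) {
        try {
            for (Item item = queue.take(); item != END; item = queue.take()) {
                sink.accept(item);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        List<Item> frames = Arrays.asList(
                new Item(true, 0L), new Item(false, 0L), new Item(true, 23_000L));
        play(frames,
             item -> System.out.println("audio @" + item.timestampMicros),
             item -> System.out.println("video @" + item.timestampMicros));
    }
}
```

With this split, a slow picture conversion only delays the video queue, while the audio thread keeps the line fed. The video consumer would still need to pace itself against the audio clock or frame timestamps to stay in sync.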
