Training a License Plate Recognition Model with PaddlePaddle


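LPRNet (License Plate Recognition via Deep Neural Networks) is a lightweight convolutional neural network designed for end-to-end license plate recognition. It was proposed in 2018 by Sergey Zherzdev and Alexey Gruzdev of the Intel IOTG Computer Vision Group. Its main innovation is dropping the RNN (e.g. BiLSTM) entirely and performing sequence recognition with only a CNN and CTC loss, while keeping high accuracy and real-time speed: the paper reports up to 95% accuracy on Chinese license plates at 3 ms/plate on GPU and 1.3 ms/plate on CPU. Because it is lightweight and end-to-end, LPRNet has become an important building block in intelligent transportation and is widely paired with YOLO-series detectors (e.g. YOLOv5/v8) in detect-then-recognize pipelines to form a complete license plate recognition system.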

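I. LPRNet: Basic Concepts and History

LPRNet is a deep neural network designed specifically for license plate recognition. Its core idea is to read the plate text directly from the raw plate image in an end-to-end manner, with no prior character segmentation and no RNN. A traditional recognition system first locates the plate region with image processing techniques (such as edge detection and threshold segmentation), then segments the characters, and finally recognizes each segmented character. This pipeline works, but a single segmentation error can make the whole recognition fail, and it relies on hand-designed segmentation rules that adapt poorly to complex, changing real-world scenes.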

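The introduction of LPRNet marked an important advance in license plate recognition. In 2018 the Intel team published the paper "LPRNet: License Plate Recognition via Deep Neural Networks", which proposed an end-to-end recognition method that needs no character pre-segmentation. Building on recent progress in deep neural networks, the method performs feature extraction and character sequence prediction in a single model that operates on the cropped plate image, which avoids the segmentation errors of traditional approaches. Plate detection itself is handled by a separate detector. Since its release, LPRNet's small size (only 0.48M parameters) has made it a good fit for embedded devices, and it has been widely combined with YOLO-series detectors (such as YOLOv5, YOLOv7, and YOLOv8) in detect-then-recognize pipelines to form complete license plate recognition systems. After 2022, deployments on embedded devices (such as the BM1684 chip) and cloud platforms (such as SOPHNET) became more common, further extending its real-time recognition to edge computing scenarios.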

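II. LPRNet Network Architecture

LPRNet uses a lightweight CNN architecture whose structure is designed to perform sequence recognition without an RNN. The overall architecture consists of the following key components: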

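1. Spatial Transformer Network (STN, optional module)

The STN module applies a geometric transformation to the input image to correct plate distortion and tilt. It is built around a localization network whose convolutional and fully connected layers output the six parameters of an affine transform, which warps the original image into a view better suited to recognition. The STN noticeably improves robustness to distorted plates, but it is not a required component and can be enabled or omitted depending on the application.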
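To make the role of the six affine parameters concrete, the sketch below applies a 2×3 affine matrix to normalized sampling coordinates in plain Python. The function name `apply_affine` and the example `shear` values are illustrative assumptions, not part of LPRNet; a real STN would predict the parameters with the localization network and then resample the image (typically with bilinear interpolation) at the transformed coordinates.

```python
def apply_affine(theta, points):
    """Map (x, y) points through a 2x3 affine matrix given as six numbers.

    theta = (a, b, tx, c, d, ty) represents [[a, b, tx], [c, d, ty]].
    """
    a, b, tx, c, d, ty = theta
    return [(a * x + b * y + tx, c * x + d * y + ty) for x, y in points]


# Corners of the output sampling grid in normalized [-1, 1] coordinates.
corners = [(-1.0, -1.0), (1.0, -1.0), (-1.0, 1.0), (1.0, 1.0)]

identity = (1.0, 0.0, 0.0, 0.0, 1.0, 0.0)  # leaves the image unchanged
shear = (1.0, 0.2, 0.0, 0.0, 1.0, 0.0)     # hypothetical theta: horizontal shear

print(apply_affine(identity, corners))
print(apply_affine(shear, corners))
```

With the identity parameters the grid is unchanged; with the shear the top and bottom edges are sampled at horizontally shifted positions, which is how a slanted plate can be straightened.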

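2. Lightweight Backbone

The backbone is LPRNet's core feature extractor. Its design is inspired by the Fire module of SqueezeNet and the multi-branch structure of Inception. Concretely, the backbone is a stack of "Small Basic Block" units, each of which contains the following components: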

- 1×1 convolution (channel compression)
- Batch normalization
- ReLU/PReLU activation
- 3×3 convolution (feature extraction)
- Batch normalization
- ReLU/PReLU activation

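By reducing the parameter count and the computational cost, this design extracts features efficiently. Note that the PaddlePaddle implementation in Section IV factorizes the 3×3 convolution into a 3×1 and a 1×3 convolution, adds a 1×1 convolution to expand the channels again, and applies batch normalization after the block rather than inside it. The backbone outputs a sequence of character probabilities whose length depends on the pixel width of the input image; each position holds the predicted probability distribution over characters.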
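The parameter saving can be checked with simple arithmetic. The sketch below assumes the block layout used by the code in this article (1×1 compress to a quarter of the output channels, 3×1, 1×3, then 1×1 expand, all with bias) and compares it with a single plain 3×3 convolution; the helper names are made up for this illustration.

```python
def conv_params(c_in, c_out, k_h, k_w, bias=True):
    """Number of learnable parameters in a 2D convolution."""
    return k_h * k_w * c_in * c_out + (c_out if bias else 0)


def small_basic_block_params(c_in, c_out):
    """1x1 compress -> 3x1 -> 1x3 -> 1x1 expand, with c_out // 4 hidden channels."""
    mid = c_out // 4
    return (conv_params(c_in, mid, 1, 1)
            + conv_params(mid, mid, 3, 1)
            + conv_params(mid, mid, 1, 3)
            + conv_params(mid, c_out, 1, 1))


factorized = small_basic_block_params(64, 128)
plain = conv_params(64, 128, 3, 3)
print(factorized, plain, round(plain / factorized, 1))  # 12512 73856 5.9
```

For a 64-to-128-channel stage, the factorized block needs roughly one sixth of the parameters of a plain 3×3 convolution.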

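3. Wide Convolution Module (1×13 convolution)

At the end of the backbone, LPRNet adds a wide convolution with a 1×13 kernel. This is the key design choice that replaces the traditional RNN. The wide convolution gathers context along the sequence (width) direction and captures dependencies between neighbouring characters, so the network can recognize sequences without recurrence. With a 1×13 kernel, each output position sees a character together with its neighbours, which strengthens the prediction of the character sequence.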
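How the 94×24 input turns into a sequence of 18 positions follows from the usual output-size formula, out = (in + 2·padding − kernel) // stride + 1. The sketch below traces only the layers that change the spatial size in this article's PaddlePaddle implementation (the small basic blocks are padded and keep the size). Note that this implementation writes the long kernel as (13, 1), so it is applied along the height axis; the layer list is transcribed from that code, and the helper names are illustrative.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Output length of a convolution or pooling layer along one axis."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel_h, kernel_w, stride_h, stride_w) for size-changing layers only
layers = [
    ("conv 3x3", 3, 3, 1, 1),
    ("maxpool 3x3, stride (1, 1)", 3, 3, 1, 1),
    ("maxpool 3x3, stride (1, 2)", 3, 3, 1, 2),
    ("maxpool 3x3, stride (1, 2)", 3, 3, 1, 2),
    ("conv 1x4", 1, 4, 1, 1),
    ("conv 13x1", 13, 1, 1, 1),
]


def trace(height, width):
    """Return the (height, width) after every size-changing layer."""
    shapes = [(height, width)]
    for _, k_h, k_w, s_h, s_w in layers:
        height = out_size(height, k_h, s_h)
        width = out_size(width, k_w, s_w)
        shapes.append((height, width))
    return shapes


for shape in trace(24, 94):
    print(shape)
```

The final feature map is 4×18; averaging over the height axis leaves 18 time steps for CTC, which matches `LPRMAXLEN = 18` in the code.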

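4. Global Context Fusion Module

To further strengthen the contextual dependencies in the sequence prediction, LPRNet additionally uses a fully connected layer outside the backbone to extract global context features. These global features are tiled to the required size and concatenated with the local features produced by the backbone. The resulting richer representation is then fed to the character classification head.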
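The PaddlePaddle code in this article realizes the fusion somewhat differently from the description above: instead of a fully connected layer, it average-pools three intermediate feature maps down to the size of the final map and concatenates everything along the channel axis. The sketch below only checks that bookkeeping; the shapes and pooling settings are transcribed from the comments in that code, and the variable names are illustrative.

```python
def pool_out(size, kernel, stride):
    """Output length of an unpadded pooling layer along one axis."""
    return (size - kernel) // stride + 1


# (channels, height, width, (kernel_h, kernel_w), (stride_h, stride_w))
taps = [
    (64, 22, 92, (5, 5), (5, 5)),
    (128, 20, 90, (5, 5), (5, 5)),
    (256, 18, 44, (4, 10), (4, 2)),
]
class_num = 68

aligned = [
    (c, pool_out(h, k[0], s[0]), pool_out(w, k[1], s[1]))
    for c, h, w, k, s in taps
]
aligned.append((class_num, 4, 18))  # final backbone output, already 4 x 18

total_channels = sum(c for c, _, _ in aligned)
print(aligned)
print(total_channels)  # 516
```

All four maps end up at 4×18, so they can be concatenated into 516 channels, which is the `448 + class_num` input width of the 1×1 `container` convolution.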

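5. Character Classification Head and CTC Decoding

The classification head classifies the feature at each position and predicts the likely character (Chinese characters, letters, and digits). The output sequence is trained with the CTC (Connectionist Temporal Classification) loss, which handles the mismatch between the lengths of the input and output sequences. At inference time, the final plate string is decoded from the output sequence with either beam search or a greedy algorithm.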
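Greedy decoding is the simpler of the two options: take the best class at each time step, merge consecutive repeats, and drop blanks. The following is a minimal sketch in plain Python; the tiny four-symbol alphabet and the scores are made up for illustration, with the blank placed last as in this article's `CHARS` list.

```python
def ctc_greedy_decode(step_scores, blank):
    """Greedy CTC decoding: argmax per step, merge repeats, then remove blanks."""
    best = [max(range(len(scores)), key=scores.__getitem__) for scores in step_scores]
    decoded, prev = [], None
    for idx in best:
        if idx != prev and idx != blank:
            decoded.append(idx)
        prev = idx
    return decoded


chars = ["A", "B", "1", "-"]   # hypothetical alphabet; "-" is the blank
blank = len(chars) - 1
scores = [
    [0.9, 0.0, 0.0, 0.1],  # A
    [0.8, 0.1, 0.0, 0.1],  # A (repeat, merged)
    [0.1, 0.0, 0.0, 0.9],  # blank (separates the two A's)
    [0.7, 0.1, 0.1, 0.1],  # A
    [0.0, 0.1, 0.8, 0.1],  # 1
    [0.0, 0.0, 0.6, 0.4],  # 1 (repeat, merged)
]

indices = ctc_greedy_decode(scores, blank)
print("".join(chars[i] for i in indices))  # AA1
```

The blank between the second and third step is what allows the doubled character "AA" to survive the merge.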

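The table below compares the parameter count and compute cost of LPRNet with other mainstream plate recognition models:

| Model | Input size | Parameters | GFLOPs |
|---|---|---|---|
| LPRNet | 94×24 | 0.48M | 0.147 |
| CRNN | 160×32 | 8.35M | 1.06 |
| PlateNet | 168×48 | 1.92M | 1.25 |

As the table shows, LPRNet has far fewer parameters than CRNN and requires much less computation, which makes it particularly suitable for deployment on embedded devices.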

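III. Training Method and Optimization Strategies

LPRNet is trained end to end: raw plate images are fed to the model, and the model outputs the corresponding character sequences. For Chinese plates, the training data mainly comes from the Chinese City Parking Dataset (CCPD), which contains more than 250,000 images covering plates captured under a wide range of difficult conditions, such as blur, tilt, rain, and snow.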

1. Training Parameters

Training parameters differ between implementations, but the core settings are:

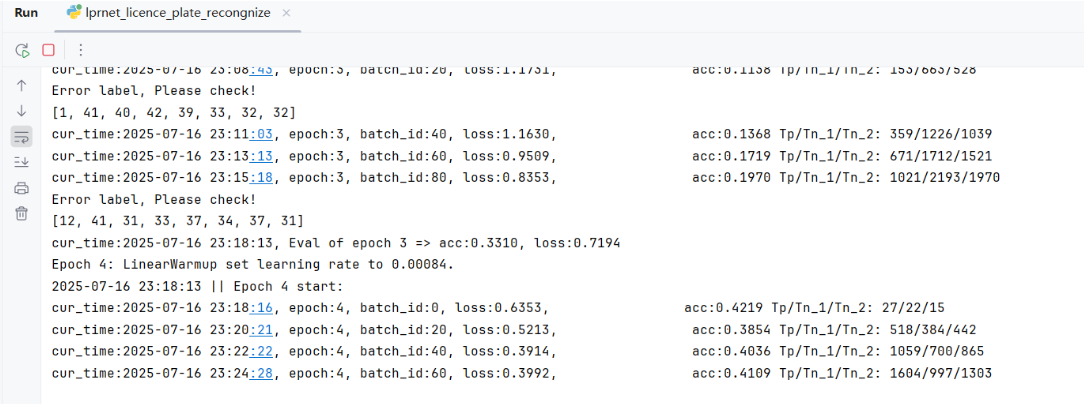

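- Optimizer: the original paper uses Adam with an initial learning rate of 0.001; later improved versions (e.g. source [47]) switch to SGD with an initial learning rate of 0.0008, a batch size of 64, and 300 training epochs.
- Learning rate schedule: dynamic decay (e.g. cosine annealing) combined with warm-up (the rate is raised gradually to 1e-3 over the first 3 epochs).
- Regularization: gradient noise injection (scale 0.008), Dropout, and similar techniques to prevent overfitting.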
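A warm-up followed by cosine annealing can be written as a closed-form function of the epoch. The sketch below uses the 3-epoch warm-up to 1e-3 and the 300 epochs mentioned above; `start_lr` and `eta_min` are assumed values chosen for illustration, and the function is a simplified stand-in rather than the exact behaviour of any framework's scheduler classes.

```python
import math


def lr_at_epoch(epoch, base_lr=1e-3, warmup_epochs=3, total_epochs=300,
                start_lr=1e-4, eta_min=1e-5):
    """Linear warm-up to base_lr, then cosine annealing down to eta_min."""
    if epoch < warmup_epochs:
        # Linear interpolation from start_lr (assumed) to base_lr.
        return start_lr + (base_lr - start_lr) * epoch / warmup_epochs
    t = epoch - warmup_epochs
    t_max = total_epochs - warmup_epochs
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * t / t_max))


for epoch in (0, 1, 2, 3, 150, 300):
    print(epoch, f"{lr_at_epoch(epoch):.6f}")
```

The rate climbs linearly during warm-up, peaks at `base_lr` when warm-up ends, and then decays smoothly, which avoids large early updates while the weights are still unstable.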

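2. Data Augmentation

To improve generalization and robustness, LPRNet training uses several data augmentation techniques:

- Image-level augmentation: rotation, Gaussian noise, motion blur, image cropping, and so on.
- STN preprocessing: affine transforms that simulate plates seen from different viewpoints.
- Random darkening: improves recognition in night-time scenes, raising accuracy from 93.2% to 96.1%.
- GAN-based data generation: balances the character distribution and reduces overfitting caused by insufficient training data.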
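Two of the image-level augmentations above, random darkening and Gaussian noise, are easy to sketch without any imaging library. The example below works on a grayscale image stored as rows of 0-255 integers; the probability, darkening range, and noise level are assumed values for illustration, not settings taken from the paper or from this article's code.

```python
import random


def darken(image, factor):
    """Scale every pixel by factor (0 < factor <= 1) to simulate low light."""
    return [[int(p * factor) for p in row] for row in image]


def random_darken(image, rng, prob=0.5, low=0.3, high=0.8):
    """Darken the image with probability prob, using a random factor."""
    if rng.random() < prob:
        return darken(image, rng.uniform(low, high))
    return image


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise and clamp the result to [0, 255]."""
    return [
        [min(255, max(0, int(round(p + rng.gauss(0.0, sigma))))) for p in row]
        for row in image
    ]


rng = random.Random(0)                     # fixed seed for reproducibility
image = [[0, 64, 128], [192, 255, 32]]     # toy 2x3 grayscale image
augmented = add_gaussian_noise(random_darken(image, rng), sigma=10.0, rng=rng)
print(augmented)
```

In a real pipeline these functions would run on the fly inside the dataset, so each epoch sees a differently perturbed version of every plate.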

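3. CTC Loss Implementation

LPRNet uses the CTC loss to handle the length mismatch between the input and output sequences. The core idea of CTC is to introduce a "blank" class, so that the model can insert blanks into its output to align the input with the target sequence. During training, every predicted sequence that reduces to the target after merging repeated characters and then removing blanks counts as a valid alignment, and the probabilities of all such alignments are summed. This removes the need for per-character position labels and simplifies training.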
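The sum over all valid alignments can be computed efficiently with the CTC forward recursion. The sketch below implements it in plain Python for a single sample and checks it against brute-force enumeration of every path; the probabilities are made up, the function names are illustrative, and a production loss would work in log space for numerical stability.

```python
import math
from itertools import product


def collapse(path, blank):
    """Merge consecutive repeats, then remove blanks."""
    out, prev = [], None
    for s in path:
        if s != prev and s != blank:
            out.append(s)
        prev = s
    return out


def ctc_prob_bruteforce(probs, target, blank):
    """Sum the probability of every path that collapses to target."""
    total = 0.0
    for path in product(range(len(probs[0])), repeat=len(probs)):
        if collapse(path, blank) == list(target):
            p = 1.0
            for t, s in enumerate(path):
                p *= probs[t][s]
            total += p
    return total


def ctc_prob_forward(probs, target, blank):
    """Same quantity via the forward (alpha) recursion."""
    ext = [blank]                      # target interleaved with blanks
    for c in target:
        ext += [c, blank]
    size = len(ext)
    alpha = [0.0] * size
    alpha[0] = probs[0][ext[0]]
    if size > 1:
        alpha[1] = probs[0][ext[1]]
    for t in range(1, len(probs)):
        new = [0.0] * size
        for s in range(size):
            acc = alpha[s]
            if s >= 1:
                acc += alpha[s - 1]
            # Skipping a blank is allowed only between two different labels.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                acc += alpha[s - 2]
            new[s] = acc * probs[t][ext[s]]
        alpha = new
    return alpha[-1] + (alpha[-2] if size > 1 else 0.0)


# Three time steps, classes {0, 1, blank=2}; each row sums to 1.
probs = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.1, 0.2, 0.7]]
p = ctc_prob_forward(probs, [0, 1], blank=2)
print(p, ctc_prob_bruteforce(probs, [0, 1], blank=2))
print("CTC loss:", -math.log(p))
```

The two functions agree, but the recursion costs time proportional to sequence length times target length instead of growing exponentially with the number of time steps.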

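CTC decoding typically uses beam search to find the most probable character sequence in the output. In LPRNet, decoding is also combined with a post-filtering step that matches the candidates against a predefined set of templates derived from national plate rules, which further improves recognition accuracy.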
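The post-filtering step can be sketched as a template check over the decoder's candidates. The regular expression below (one Chinese character, one letter, then five or six letters or digits) is a deliberately simplified, assumed template rather than the full national rule set, and the candidate list stands in for the output of a beam search.

```python
import re

# Assumed, simplified template: province character + letter + 5 or 6 alphanumerics.
PLATE_TEMPLATE = re.compile(r"^[\u4e00-\u9fff][A-Z][A-Z0-9]{5,6}$")


def pick_candidate(candidates):
    """Return the highest-scoring candidate that matches the template.

    candidates is a list of (text, score) pairs. If nothing matches,
    fall back to the top-scoring candidate (or "" for an empty list).
    """
    ranked = sorted(candidates, key=lambda item: item[1], reverse=True)
    for text, _ in ranked:
        if PLATE_TEMPLATE.match(text):
            return text
    return ranked[0][0] if ranked else ""


beams = [("京A8288", 0.41), ("京A82889", 0.38), ("京AB2889", 0.12)]
print(pick_candidate(beams))  # 京A82889
```

Here the top-scoring candidate is one character short, so the filter promotes the second candidate, which has a valid length.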

IV. Code Implementation with PaddlePaddle

# -*- coding: utf-8 -*-
# @Time : 2025/7/14 22:12
# @Author : pblh123@126.com
# @File : paddlepaddle2_6_2_lprnet_licence_plate_recognize.py
# @Describe : LPRNet license plate recognition

import os
import time
from statistics import mean

import cv2
import numpy as np
import paddle
import paddle.vision.transforms as T
from paddle import nn
from paddle.io import Dataset, DataLoader
from PIL import Image

# Character set: 31 province abbreviations, 10 digits, 24 letters, then 'I', 'O'
# and '-'. The last entry ('-') is used as the CTC blank.
# The province characters must match the characters used in your label file names.
CHARS = ['京', '滬', '津', '渝', '冀', '晉', '蒙', '遼', '吉', '黑',
         '蘇', '浙', '皖', '閩', '贛', '魯', '豫', '鄂', '湘', '粵',
         '桂', '瓊', '川', '貴', '云', '藏', '陜', '甘', '青', '寧',
         '新',
         '0', '1', '2', '3', '4', '5', '6', '7', '8', '9',
         'A', 'B', 'C', 'D', 'E', 'F', 'G', 'H', 'J', 'K',
         'L', 'M', 'N', 'P', 'Q', 'R', 'S', 'T', 'U', 'V',
         'W', 'X', 'Y', 'Z', 'I', 'O', '-']
CHARS_DICT = {char: i for i, char in enumerate(CHARS)}

EPOCH = 50
IMGSIZE = (94, 24)
IMGDIR = './datas/rec_filtered_images/data'
TRAINFILE = './datas/rec_filtered_images/train.txt'
VALIDFILE = './datas/rec_filtered_images/valid.txt'
SAVEFOLDER = './runs'
DROPOUT = 0.01
LEARNINGRATE = 0.001
LPRMAXLEN = 18
TRAINBATCHSIZE = 64
EVALBATCHSIZE = 64
# Larger is faster, depending on the CPU. If the DataLoader raises errors,
# lower this value or set it to 0.
NUMWORKERS = 4

WEIGHTDECAY = 0.001


# Data loading
class LprnetDataloader(Dataset):
    """Dataset of plate images; the label is the file name without its extension."""

    def __init__(self, target_path, label_text, transforms=None):
        super().__init__()
        self.transforms = transforms
        self.target_path = target_path
        with open(label_text, 'r', encoding='utf-8', errors='ignore') as f:
            self.data = f.readlines()

    def __getitem__(self, index):
        img_name = self.data[index].strip()
        img_path = os.path.join(self.target_path, img_name)
        # Force three channels so grayscale or RGBA files do not break the network input.
        data = Image.open(img_path).convert('RGB')
        label = []
        img_label = img_name.split('.', 1)[0]
        for c in img_label:
            label.append(CHARS_DICT[c])
        if len(label) == 8:
            if not self.check(label):
                print(label)
                # print(imgname)
                # assert 0, "Error label ^~^!!!"
        if self.transforms is not None:
            data = self.transforms(data)
        data = np.array(data, dtype=np.float32)
        np_label = np.array(label, dtype=np.int64)
        return data, np_label, len(np_label)

    def __len__(self):
        return len(self.data)

    def check(self, label):
        # An 8-character label is expected to carry 'D' or 'F' at index 2 or at the end.
        if label[2] == CHARS_DICT['D'] or label[2] == CHARS_DICT['F'] \
                or label[-1] == CHARS_DICT['D'] or label[-1] == CHARS_DICT['F']:
            return True
        else:
            print("Error label, Please check!")
            return False


def collate_fn(batch):
    # Images are assumed to share one size already, so only the labels need padding.
    batch_size = len(batch)
    # Length of the longest label in the batch.
    max_label_length = max(len(sample[1]) for sample in batch)
    # Zero-filled array that the labels are copied into (this is the padding).
    labels = np.zeros((batch_size, max_label_length), dtype='int64')
    label_lens = []
    img_list = []
    for x in range(batch_size):
        tensor, target, label_length = batch[x]
        img_list.append(tensor)
        labels[x, :label_length] = target[:]
        label_lens.append(len(target))
    label_lens = paddle.to_tensor(label_lens, dtype='int64')  # required by CTC loss
    imgs = paddle.to_tensor(np.stack(img_list), dtype='float32')
    labels = paddle.to_tensor(labels, dtype='int32')  # CTC loss only accepts int32 labels
    return imgs, labels, label_lens


# LPRNet network

# Network structure
class small_basic_block(nn.Layer):
    def __init__(self, ch_in, ch_out):
        super(small_basic_block, self).__init__()
        self.block = nn.Sequential(
            nn.Conv2D(ch_in, ch_out // 4, kernel_size=1),
            nn.ReLU(),
            nn.Conv2D(ch_out // 4, ch_out // 4, kernel_size=(3, 1), padding=(1, 0)),
            nn.ReLU(),
            nn.Conv2D(ch_out // 4, ch_out // 4, kernel_size=(1, 3), padding=(0, 1)),
            nn.ReLU(),
            nn.Conv2D(ch_out // 4, ch_out, kernel_size=1),
        )

    def forward(self, x):
        return self.block(x)


class maxpool_3d(nn.Layer):
    """Emulates a 3D max pool over (channel, height, width) with two 2D pools."""

    def __init__(self, kernel_size, stride):
        super(maxpool_3d, self).__init__()
        assert len(kernel_size) == 3 and len(stride) == 3
        kernel_size2d1 = kernel_size[-2:]
        stride2d1 = stride[-2:]
        kernel_size2d2 = (1, kernel_size[0])
        stride2d2 = (1, stride[0])
        self.maxpool1 = nn.MaxPool2D(kernel_size=kernel_size2d1, stride=stride2d1)
        self.maxpool2 = nn.MaxPool2D(kernel_size=kernel_size2d2, stride=stride2d2)

    def forward(self, x):
        x = self.maxpool1(x)           # pool over height and width
        x = x.transpose((0, 3, 2, 1))  # move channels to the last axis
        x = self.maxpool2(x)           # pool (subsample) over channels
        x = x.transpose((0, 3, 2, 1))
        return x


class LPRNet(nn.Layer):
    def __init__(self, lpr_max_len, class_num, dropout_rate):
        super(LPRNet, self).__init__()
        self.lpr_max_len = lpr_max_len
        self.class_num = class_num
        self.backbone = nn.Sequential(
            nn.Conv2D(in_channels=3, out_channels=64, kernel_size=3, stride=1),  # 0 [bs,3,24,94] -> [bs,64,22,92]
            nn.BatchNorm2D(num_features=64),                                     # 1 -> [bs,64,22,92]
            nn.ReLU(),                                                           # 2 -> [bs,64,22,92]
            maxpool_3d(kernel_size=(1, 3, 3), stride=(1, 1, 1)),                 # 3 -> [bs,64,20,90]
            small_basic_block(ch_in=64, ch_out=128),                             # 4 -> [bs,128,20,90]
            nn.BatchNorm2D(num_features=128),                                    # 5 -> [bs,128,20,90]
            nn.ReLU(),                                                           # 6 -> [bs,128,20,90]
            maxpool_3d(kernel_size=(1, 3, 3), stride=(2, 1, 2)),                 # 7 -> [bs,64,18,44]
            small_basic_block(ch_in=64, ch_out=256),                             # 8 -> [bs,256,18,44]
            nn.BatchNorm2D(num_features=256),                                    # 9 -> [bs,256,18,44]
            nn.ReLU(),                                                           # 10 -> [bs,256,18,44]
            small_basic_block(ch_in=256, ch_out=256),                            # 11 -> [bs,256,18,44]
            nn.BatchNorm2D(num_features=256),                                    # 12 -> [bs,256,18,44]
            nn.ReLU(),                                                           # 13 -> [bs,256,18,44]
            maxpool_3d(kernel_size=(1, 3, 3), stride=(4, 1, 2)),                 # 14 -> [bs,64,16,21]
            nn.Dropout(dropout_rate),                                            # 15 -> [bs,64,16,21]
            nn.Conv2D(in_channels=64, out_channels=256, kernel_size=(1, 4), stride=1),  # 16 -> [bs,256,16,18]
            nn.BatchNorm2D(num_features=256),                                    # 17 -> [bs,256,16,18]
            nn.ReLU(),                                                           # 18 -> [bs,256,16,18]
            nn.Dropout(dropout_rate),                                            # 19 -> [bs,256,16,18]
            nn.Conv2D(in_channels=256, out_channels=class_num, kernel_size=(13, 1), stride=1),  # 20 (class_num=68) -> [bs,68,4,18]
            nn.BatchNorm2D(num_features=class_num),                              # 21 -> [bs,68,4,18]
            nn.ReLU(),                                                           # 22 -> [bs,68,4,18]
        )
        self.container = nn.Sequential(
            nn.Conv2D(in_channels=448 + self.class_num, out_channels=self.class_num,
                      kernel_size=(1, 1), stride=(1, 1)),
        )

    def forward(self, x):
        keep_features = list()
        for i, layer in enumerate(self.backbone.children()):
            x = layer(x)
            if i in [2, 6, 13, 22]:
                keep_features.append(x)

        global_context = list()
        # keep_features: [bs,64,22,92] [bs,128,20,90] [bs,256,18,44] [bs,68,4,18]
        for i, f in enumerate(keep_features):
            if i in [0, 1]:
                # [bs,64,22,92] -> [bs,64,4,18]
                # [bs,128,20,90] -> [bs,128,4,18]
                f = nn.AvgPool2D(kernel_size=5, stride=5)(f)
            if i in [2]:
                # [bs,256,18,44] -> [bs,256,4,18]
                f = nn.AvgPool2D(kernel_size=(4, 10), stride=(4, 2))(f)
            f_pow = paddle.pow(f, 2)  # square every element
            # Per-sample mean of the squared activations.
            f_mean = paddle.mean(f_pow, axis=[1, 2, 3], keepdim=True)
            f = paddle.divide(f, f_mean)  # scale every element by that mean
            global_context.append(f)

        x = paddle.concat(global_context, 1)  # [bs,516,4,18]
        x = self.container(x)                 # head -> [bs,68,4,18]
        logits = paddle.mean(x, axis=2)       # -> [bs,68,18]: 68 classes, 18 time steps
        return logits


def init_weight(model):
    """Re-initialize the weight and bias of every Conv2D and BatchNorm2D sublayer.

    named_sublayers() already walks the whole model, so call this once as
    init_weight(model).
    """
    for name, layer in model.named_sublayers():
        if isinstance(layer, nn.Conv2D):
            weight_attr = nn.initializer.KaimingNormal()
        elif isinstance(layer, nn.BatchNorm2D):
            weight_attr = nn.initializer.XavierUniform()
        else:
            continue
        bias_attr = nn.initializer.Constant(0.)
        layer.weight = paddle.create_parameter(layer.weight.shape, attr=weight_attr, dtype='float32')
        layer.bias = paddle.create_parameter(layer.bias.shape, attr=bias_attr, dtype='float32')


def greedy_decode(pred_label):
    """Greedy CTC decoding of per-step class indices: merge repeats, drop blanks."""
    blank = len(CHARS) - 1
    no_repeat_blank_label = []
    pre_c = pred_label[0]
    if pre_c != blank:  # not a blank
        no_repeat_blank_label.append(pre_c)
    for c in pred_label:
        if (pre_c == c) or (c == blank):
            if c == blank:
                pre_c = c
            continue
        no_repeat_blank_label.append(c)
        pre_c = c
    return no_repeat_blank_label


class ACC:
    """Sequence-level accuracy: Tp = correct, Tn_1 = wrong length, Tn_2 = wrong characters."""

    def __init__(self):
        self.Tp = 0
        self.Tn_1 = 0
        self.Tn_2 = 0
        self.acc = 0

    def batch_update(self, batch_label, label_lengths, pred):
        # Convert once to NumPy instead of indexing tensors element by element.
        batch_label = batch_label.numpy()
        label_lengths = label_lengths.numpy()
        pred = pred.detach().numpy()  # [bs, class_num, T]
        for i, label in enumerate(batch_label):
            length = int(label_lengths[i])
            label = label[:length]
            preb_label = np.argmax(pred[i], axis=0)  # best class per time step
            decoded = greedy_decode(preb_label)
            if len(label) != len(decoded):
                self.Tn_1 += 1
            elif (np.asarray(label) == np.asarray(decoded)).all():
                self.Tp += 1
            else:
                self.Tn_2 += 1
        self.acc = self.Tp * 1.0 / (self.Tp + self.Tn_1 + self.Tn_2)

    def clear(self):
        self.Tp = 0
        self.Tn_1 = 0
        self.Tn_2 = 0
        self.acc = 0


def load_pretrained(model, path=None):
    """Load pretrained weights from path + '.pdparams'.

    :param model: network to load the weights into
    :param path: checkpoint path without the '.pdparams' suffix
    """
    print('params loading...')
    if not (os.path.isdir(path) or os.path.exists(path + '.pdparams')):
        raise ValueError("Model pretrain path {} does not exists.".format(path))
    param_state_dict = paddle.load(path + ".pdparams")
    model.set_state_dict(param_state_dict)
    print(f'load {path + ".pdparams"} success...')


def train_model():
    """Train the model."""
    # Image preprocessing
    train_transforms = T.Compose([
        T.ColorJitter(0.2, 0.2, 0.2),
        T.ToTensor(data_format='CHW'),
        # ToTensor has already scaled the image to [0, 1].
        T.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5], data_format='CHW'),
    ])
    eval_transforms = T.Compose([
        T.ToTensor(data_format='CHW'),
        T.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5], data_format='CHW'),
    ])

    # Data loading
    train_data_set = LprnetDataloader(IMGDIR, TRAINFILE, train_transforms)
    eval_data_set = LprnetDataloader(IMGDIR, VALIDFILE, eval_transforms)
    train_loader = DataLoader(train_data_set, batch_size=TRAINBATCHSIZE, shuffle=True,
                              num_workers=NUMWORKERS, drop_last=True, collate_fn=collate_fn)
    eval_loader = DataLoader(eval_data_set, batch_size=EVALBATCHSIZE, shuffle=False,
                             num_workers=NUMWORKERS, drop_last=False, collate_fn=collate_fn)

    # Loss: the last class ('-') is the CTC blank.
    loss_func = nn.CTCLoss(blank=len(CHARS) - 1)

    # LPRNet network; initialize the weights for a first training run.
    model = LPRNet(LPRMAXLEN, len(CHARS), DROPOUT)
    init_weight(model)

    # Optimizer
    def make_optimizer(base_lr, parameters=None):
        momentum = 0.9
        weight_decay = WEIGHTDECAY
        scheduler = paddle.optimizer.lr.CosineAnnealingDecay(
            learning_rate=base_lr, eta_min=0.01 * base_lr, T_max=EPOCH, verbose=True)
        # Weights are unstable at the start of a first training run, so add warm-up.
        scheduler = paddle.optimizer.lr.LinearWarmup(
            learning_rate=scheduler, warmup_steps=5,
            start_lr=base_lr / 5, end_lr=base_lr, verbose=True)
        optimizer = paddle.optimizer.Momentum(
            learning_rate=scheduler,
            weight_decay=paddle.regularizer.L2Decay(weight_decay),
            momentum=momentum,
            parameters=parameters)
        return optimizer, scheduler

    optim, scheduler = make_optimizer(LEARNINGRATE, parameters=model.parameters())

    # Accuracy trackers
    acc_train = ACC()
    acc_eval = ACC()

    # Early stopping
    patience = 5           # stop after 5 consecutive epochs without improvement
    best_acc = 0.1         # initial best accuracy
    no_improve_epochs = 0  # epochs since the last improvement

    os.makedirs(SAVEFOLDER, exist_ok=True)

    # Training loop
    for epoch in range(EPOCH):
        str_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
        print(f'{str_time} || Epoch {epoch} start:')
        model.train()
        for batch_id, bath_data in enumerate(train_loader):
            img_data, label_data, label_lens = bath_data
            predict = model(img_data)
            logits = paddle.transpose(predict, (2, 0, 1))  # for CTC loss: T x N x C
            # One input length per sample in this batch (the last batch may be smaller).
            input_length = paddle.full([img_data.shape[0]], LPRMAXLEN, dtype='int64')
            loss = loss_func(logits, label_data, input_length, label_lens)
            acc_train.batch_update(label_data, label_lens, predict)
            if batch_id % 20 == 0:
                cur_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
                print(f'cur_time:{cur_time}, epoch:{epoch}, batch_id:{batch_id}, '
                      f'loss:{loss.item():.4f}, acc:{acc_train.acc:.4f} '
                      f'Tp/Tn_1/Tn_2: {acc_train.Tp}/{acc_train.Tn_1}/{acc_train.Tn_2}')
            loss.backward()
            optim.step()
            optim.clear_grad()
        acc_train.clear()

        # Periodic checkpoint
        if epoch and epoch % 20 == 0:
            paddle.save(model.state_dict(), os.path.join(SAVEFOLDER, f'lprnet_{epoch}_2.pdparams'))
            paddle.save(optim.state_dict(), os.path.join(SAVEFOLDER, f'lprnet_{epoch}_2.pdopt'))
            cur_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
            print(f'cur_time:{cur_time}, Saved log epoch-{epoch}')

        # Evaluation
        with paddle.no_grad():
            model.eval()
            loss_list = []
            for batch_id, bath_data in enumerate(eval_loader):
                img_data, label_data, label_lens = bath_data
                predict = model(img_data)
                logits = paddle.transpose(predict, (2, 0, 1))
                input_length = paddle.full([img_data.shape[0]], LPRMAXLEN, dtype='int64')
                loss = loss_func(logits, label_data, input_length, label_lens)
                acc_eval.batch_update(label_data, label_lens, predict)
                loss_list.append(loss.item())
            cur_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
            print(f'cur_time:{cur_time}, Eval of epoch {epoch} => '
                  f'acc:{acc_eval.acc:.4f}, loss:{mean(loss_list):.4f}')

        # Save the best model and update the early-stopping counter in one place,
        # before the accuracy tracker is cleared.
        if acc_eval.acc > best_acc:
            # Originally suffixed "2"; renamed to "mymodel" to tell the files apart.
            paddle.save(model.state_dict(), os.path.join(SAVEFOLDER, 'lprnet_best_chosen_mymodel.pdparams'))
            paddle.save(optim.state_dict(), os.path.join(SAVEFOLDER, 'lprnet_best_chosen_mymodel.pdopt'))
            best_acc = acc_eval.acc
            no_improve_epochs = 0
            cur_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
            print(f'cur_time:{cur_time}, Saved best model of epoch{epoch}, '
                  f'acc {acc_eval.acc:.4f}, save path "{SAVEFOLDER}"')
        else:
            no_improve_epochs += 1
        acc_eval.clear()

        # Early stopping check
        if no_improve_epochs >= patience:
            cur_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
            print(f'\ncur_time:{cur_time}, Early stopping triggered at epoch {epoch}!')
            print(f'cur_time:{cur_time}, No improvement in {patience} consecutive epochs.')
            break

        # Learning rate decay
        scheduler.step()


def eval():
    """Evaluate the model."""
    # Image preprocessing
    eval_transforms = T.Compose([
        T.ToTensor(data_format='CHW'),
        # ToTensor has already scaled the image to [0, 1].
        T.Normalize([0.5, 0.5, 0.5], [0.5, 0.5, 0.5], data_format='CHW'),
    ])

    # Data loading
    eval_data_set = LprnetDataloader(IMGDIR, VALIDFILE, eval_transforms)
    eval_loader = DataLoader(eval_data_set, batch_size=EVALBATCHSIZE, shuffle=False,
                             num_workers=NUMWORKERS, drop_last=False, collate_fn=collate_fn)

    # Loss
    loss_func = nn.CTCLoss(blank=len(CHARS) - 1)

    # LPRNet network with trained weights; point this at your own checkpoint.
    model = LPRNet(LPRMAXLEN, len(CHARS), DROPOUT)
    load_pretrained(model, 'runs/lprnet_best_chosen_mymodel')

    acc_eval = ACC()

    # Validation
    with paddle.no_grad():
        model.eval()
        loss_list = []
        for batch_id, bath_data in enumerate(eval_loader):
            img_data, label_data, label_lens = bath_data
            predict = model(img_data)
            logits = paddle.transpose(predict, (2, 0, 1))
            # One input length per sample in this batch (the last batch may be smaller).
            input_length = paddle.full([img_data.shape[0]], LPRMAXLEN, dtype='int64')
            loss = loss_func(logits, label_data, input_length, label_lens)
            acc_eval.batch_update(label_data, label_lens, predict)
            loss_list.append(loss.item())
        cur_time = time.strftime("%Y-%m-%d %H:%M:%S", time.localtime())
        print(f'cur_time:{cur_time}, Eval from {VALIDFILE} => '
              f'acc:{acc_eval.acc:.4f}, loss:{mean(loss_list):.4f}')
    acc_eval.clear()


def test():
    """Run the model on a single image."""
    # Change this to your own image path.
    img_path = 'datas/rec_filtered_images/data/京A82889.jpg'
    print(img_path)
    img_data = cv2.imread(img_path)
    # Check that the image was actually read.
    if img_data is None:
        print(f"Error: cannot read image {img_path}; check the path and file format")
        return
    try:
        img_data = cv2.cvtColor(img_data, cv2.COLOR_BGR2RGB)
        img_data = cv2.resize(img_data, (94, 24))  # (width, height)
    except Exception as e:
        print(f"Image processing failed: {str(e)}")
        return
    # Use the same preprocessing as eval_transforms: scale to [0, 1], then (x - 0.5) / 0.5.
    img_data = img_data.astype('float32') / 255.0
    img_data = (img_data - 0.5) / 0.5
    img_data = np.transpose(img_data, (2, 0, 1))  # HWC -> CHW
    img_data = np.expand_dims(img_data, 0)        # add the batch dimension
    img_tensor = paddle.to_tensor(img_data, dtype='float32')

    # Load the model and predict; point this at your own checkpoint.
    model = LPRNet(LPRMAXLEN, len(CHARS), dropout_rate=0)
    load_pretrained(model, 'runs/lprnet_best_chosen_mymodel')
    model.eval()  # use the running BatchNorm statistics at inference time
    with paddle.no_grad():
        out_data = model(img_tensor)

    # Post-processing for a single image
    def reprocess(pred):
        pred_data = pred.numpy()[0]
        pred_label = np.argmax(pred_data, axis=0)
        print(pred_label)
        return ''.join(CHARS[i] for i in greedy_decode(pred_label))

    rep_result = reprocess(out_data)
    print(rep_result)


def export_model():
    """Export the model to ONNX."""
    model = LPRNet(LPRMAXLEN, len(CHARS), dropout_rate=0)
    # Point this at your own checkpoint.
    load_pretrained(model, 'runs/lprnet_best_chosen_mymodel')
    model.eval()
    # Change this to your own output path (a file prefix, not a directory).
    save_path = 'save_onnx_chosen_mymodel/lprnet'
    # Create the parent directory if it does not exist.
    os.makedirs(os.path.dirname(save_path), exist_ok=True)
    x_spec = paddle.static.InputSpec([1, 3, 24, 94], 'float32', 'image')
    paddle.onnx.export(model, save_path, input_spec=[x_spec], opset_version=11)


def main():
    # Train the model
    train_model()
    # Evaluate the model
    eval()
    # Test the model on one image
    test()
    # Export the model
    export_model()


if __name__ == '__main__':
    main()
