Long-Document Summarization with Controllable Detail on ModelScope

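The idea for this article comes from a recipe published this year in the OpenAI cookbook, summarizing_long_documents, whose goal is to demonstrate how to summarize a large document with a controllable level of detail.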

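Suppose we want a large language model to summarize a long document (say, 10k tokens or more). Feeding the document to the model directly tends to produce a relatively short summary whose length is not proportional to the length of the document. For example, the summary of a 20k-token document will not be twice as long as the summary of a 10k-token document. This article works around the problem by splitting the document into several parts and summarizing each part separately. After querying the model multiple times, the full summary can be reassembled from the partial ones. By controlling the number of text chunks and their size, we ultimately control the level of detail in the output.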
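The split, summarize, and reassemble idea can be sketched without any model at all. The snippet below is only an illustration: `split_evenly`, `first_sentence`, and `summarize_in_chunks` are hypothetical names that do not appear in the article, and the "summarizer" is a placeholder that keeps the first sentence of each chunk. It shows that the combined summary grows with the number of chunks.

```python
from typing import Callable, List


def split_evenly(sentences: List[str], num_chunks: int) -> List[List[str]]:
    """Split a list of sentences into num_chunks consecutive groups of near-equal size."""
    size, extra = divmod(len(sentences), num_chunks)
    groups, start = [], 0
    for i in range(num_chunks):
        end = start + size + (1 if i < extra else 0)
        groups.append(sentences[start:end])
        start = end
    return groups


def first_sentence(chunk: List[str]) -> str:
    # Placeholder "summarizer" (an assumption for this sketch): keep the first sentence only.
    return chunk[0]


def summarize_in_chunks(
    sentences: List[str],
    num_chunks: int,
    summarize_chunk: Callable[[List[str]], str] = first_sentence,
) -> str:
    """Summarize each group separately, then join the partial summaries."""
    groups = [g for g in split_evenly(sentences, num_chunks) if g]
    return " ".join(summarize_chunk(g) for g in groups)


document = [f"Sentence {i}." for i in range(1, 9)]
print(summarize_in_chunks(document, 1))  # one chunk: a single short summary
print(summarize_in_chunks(document, 4))  # four chunks: four partial summaries joined
```

In the real workflow the placeholder would be replaced by a call to the language model, but the relationship stays the same: more chunks means more partial summaries and therefore a longer final summary.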

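The tools and model used in this article are:

Large language model: Qwen2 in GGUF format

Tool 1: Ollama, which serves the GGUF model behind an OpenAI-compatible API

Tool 2: transformers, using its new ability to load a tokenizer directly from a GGUF file. The tokenizer is used to measure the document length and to split the document.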

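Best practices

Run the Qwen2 model (for details, see 《魔搭社區GGUF模型怎么玩!看這篇就夠了》, the ModelScope community guide to working with GGUF models)

Copy the model path and create a meta file named "ModelFile" with the following contents: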

FROM /mnt/workspace/qwen2-7b-instruct-q5_k_m.gguf

# set the temperature to 0.7 [higher is more creative, lower is more coherent]
PARAMETER temperature 0.7
PARAMETER top_p 0.8
PARAMETER repeat_penalty 1.05

TEMPLATE """{{ if and .First .System }}<|im_start|>system
{{ .System }}<|im_end|>
{{ end }}<|im_start|>user
{{ .Prompt }}<|im_end|>
<|im_start|>assistant
{{ .Response }}"""

# set the system message
SYSTEM """
You are a helpful assistant.
"""

Use the ollama create command to build the custom model, then run it:

ollama create myqwen2 --file ./ModelFile
ollama run myqwen2

Install the dependencies and load the document to summarize

import os
from typing import List, Tuple, Optional

from openai import OpenAI
from transformers import AutoTokenizer
from tqdm import tqdm

# load doc
with open("data/artificial_intelligence_wikipedia.txt", "r") as file:
    artificial_intelligence_wikipedia_text = file.read()

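Load the tokenizer and check the document length

Hugging Face transformers can load the single-file GGUF format, so that GGUF models can be trained or fine-tuned further and then converted back to GGUF for use in the ggml ecosystem. A GGUF file typically contains the configuration attributes, the tokenizer, other metadata, and all the tensors to be loaded into the model. Reference documentation: https://huggingface.co/docs/transformers/gguf

At the time of writing, the supported model architectures are llama, mistral, and qwen2.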

# load encoding and check the length of dataset
encoding = AutoTokenizer.from_pretrained("/mnt/workspace/cherry/", gguf_file="qwen2-7b-instruct-q5_k_m.gguf")
len(encoding.encode(artificial_intelligence_wikipedia_text))

Call the LLM through the OpenAI-compatible API

client = OpenAI(
    base_url='http://127.0.0.1:11434/v1',
    api_key='ollama',  # required, but unused
)


def get_chat_completion(messages, model='myqwen2'):
    response = client.chat.completions.create(
        model=model,
        messages=messages,
        temperature=0,
    )
    return response.choices[0].message.content

Splitting the document

We define a few functions that split a large document into smaller parts.

def tokenize(text: str) -> List[int]:
    # encode() returns token ids, so the result is a list of ints
    return encoding.encode(text)


# This function chunks a text into smaller pieces based on a maximum token count and a delimiter.
def chunk_on_delimiter(input_string: str,
                       max_tokens: int, delimiter: str) -> List[str]:
    chunks = input_string.split(delimiter)
    combined_chunks, _, dropped_chunk_count = combine_chunks_with_no_minimum(
        chunks, max_tokens, chunk_delimiter=delimiter, add_ellipsis_for_overflow=True
    )
    if dropped_chunk_count > 0:
        print(f"warning: {dropped_chunk_count} chunks were dropped due to overflow")
    combined_chunks = [f"{chunk}{delimiter}" for chunk in combined_chunks]
    return combined_chunks


# This function combines text chunks into larger blocks without exceeding a specified token count.
# It returns the combined text blocks, their original indices, and the count of chunks dropped due to overflow.
def combine_chunks_with_no_minimum(
        chunks: List[str],
        max_tokens: int,
        chunk_delimiter="\n\n",
        header: Optional[str] = None,
        add_ellipsis_for_overflow=False,
) -> Tuple[List[str], List[List[int]], int]:
    dropped_chunk_count = 0
    output = []  # list to hold the final combined chunks
    output_indices = []  # list to hold the indices of the final combined chunks
    candidate = (
        [] if header is None else [header]
    )  # list to hold the current combined chunk candidate
    candidate_indices = []
    for chunk_i, chunk in enumerate(chunks):
        chunk_with_header = [chunk] if header is None else [header, chunk]
        if len(tokenize(chunk_delimiter.join(chunk_with_header))) > max_tokens:
            print("warning: chunk overflow")
            if (
                    add_ellipsis_for_overflow
                    and len(tokenize(chunk_delimiter.join(candidate + ["..."]))) <= max_tokens
            ):
                candidate.append("...")
                dropped_chunk_count += 1
            continue  # this case would break downstream assumptions
        # estimate token count with the current chunk added
        extended_candidate_token_count = len(tokenize(chunk_delimiter.join(candidate + [chunk])))
        # If the token count exceeds max_tokens, add the current candidate to output and start a new candidate
        if extended_candidate_token_count > max_tokens:
            output.append(chunk_delimiter.join(candidate))
            output_indices.append(candidate_indices)
            candidate = chunk_with_header  # re-initialize candidate
            candidate_indices = [chunk_i]
        # otherwise keep extending the candidate
        else:
            candidate.append(chunk)
            candidate_indices.append(chunk_i)
    # add the remaining candidate to output if it's not empty
    if (header is not None and len(candidate) > 1) or (header is None and len(candidate) > 0):
        output.append(chunk_delimiter.join(candidate))
        output_indices.append(candidate_indices)
    return output, output_indices, dropped_chunk_count
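To see the greedy packing behaviour in isolation, here is a simplified sketch that runs without a model file. It is not the article's function: `pack_pieces` and `count_words` are hypothetical names, one whitespace-separated word stands in for one token, and header and ellipsis handling are left out.

```python
from typing import Callable, List


def count_words(text: str) -> int:
    # Stand-in for a real tokenizer (an assumption): one "token" per whitespace-separated word.
    return len(text.split())


def pack_pieces(
    pieces: List[str],
    max_tokens: int,
    delimiter: str = "\n",
    count: Callable[[str], int] = count_words,
) -> List[str]:
    """Greedily merge consecutive pieces while the merged text stays within max_tokens."""
    blocks: List[str] = []
    current: List[str] = []
    for piece in pieces:
        if count(piece) > max_tokens:
            continue  # a single oversized piece is skipped, like the "dropped chunk" case
        if current and count(delimiter.join(current + [piece])) > max_tokens:
            blocks.append(delimiter.join(current))  # close the current block
            current = [piece]                       # start a new one with this piece
        else:
            current.append(piece)
    if current:
        blocks.append(delimiter.join(current))
    return blocks


paragraphs = ["one two three", "four five", "six seven eight nine", "ten"]
blocks = pack_pieces(paragraphs, max_tokens=5)
print(blocks)
```

With a budget of five words, the first two paragraphs fit into one block and the last two into another. Lowering `max_tokens` produces more, smaller blocks, which is the lever the summarizer uses to control detail.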

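The summarization function

Now we can define a utility that summarizes text with a controllable level of detail (note the detail parameter).

The function first determines the number of chunks by interpolating between a minimum and a maximum chunk count according to the detail parameter. It then splits the text into chunks and summarizes each chunk.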
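The interpolation step can be worked through with plain numbers. The sketch below repeats the same arithmetic the summarization function uses, wrapped in a hypothetical helper named `plan_chunks`. The document length of 14,000 tokens and the maximum of 30 chunks are made-up figures for illustration, not measurements from the article.

```python
from typing import Tuple


def plan_chunks(
    document_length: int,
    max_chunks: int,
    detail: float,
    minimum_chunk_size: int = 500,
) -> Tuple[int, int]:
    """Return (num_chunks, chunk_size) for a given detail level between 0 and 1."""
    assert 0 <= detail <= 1
    min_chunks = 1
    # Linear interpolation between min_chunks and max_chunks, truncated to an integer.
    num_chunks = int(min_chunks + detail * (max_chunks - min_chunks))
    # Chunks never get smaller than minimum_chunk_size tokens.
    chunk_size = max(minimum_chunk_size, document_length // num_chunks)
    return num_chunks, chunk_size


for detail in (0, 0.25, 0.5, 1):
    print(detail, plan_chunks(document_length=14000, max_chunks=30, detail=detail))
```

With these assumed figures, detail=0 yields a single 14,000-token chunk, detail=0.25 yields 8 chunks of 1,750 tokens, and detail=1 hits the 500-token floor with 30 chunks.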


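We can now use this utility to generate summaries at different levels of detail. As detail increases from 0 to 1, we get progressively longer summaries of the underlying document. A higher detail value produces a more detailed summary because the utility first splits the document into more chunks. Each chunk is then summarized, and the final summary is the concatenation of all the chunk summaries.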

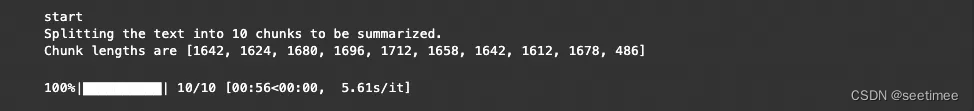

def summarize(text: str,
              detail: float = 0,
              model: str = 'myqwen2',
              additional_instructions: Optional[str] = None,
              minimum_chunk_size: Optional[int] = 500,
              chunk_delimiter: str = "\n",
              summarize_recursively=False,
              verbose=False):
    """
    Summarizes a given text by splitting it into chunks, each of which is summarized individually.
    The level of detail in the summary can be adjusted, and the process can optionally be made recursive.

    Parameters:
    - text (str): The text to be summarized.
    - detail (float, optional): A value between 0 and 1 indicating the desired level of detail in the summary.
      0 leads to a higher level summary, and 1 results in a more detailed summary. Defaults to 0.
    - model (str, optional): The model to use for generating summaries. Defaults to 'myqwen2'.
    - additional_instructions (Optional[str], optional): Additional instructions to provide to the model for customizing summaries.
    - minimum_chunk_size (Optional[int], optional): The minimum size for text chunks. Defaults to 500.
    - chunk_delimiter (str, optional): The delimiter used to split the text into chunks. Defaults to a newline.
    - summarize_recursively (bool, optional): If True, summaries are generated recursively, using previous summaries for context.
    - verbose (bool, optional): If True, prints detailed information about the chunking process.

    Returns:
    - str: The final compiled summary of the text.

    The function first determines the number of chunks by interpolating between a minimum and a maximum chunk count
    based on the `detail` parameter. It then splits the text into chunks and summarizes each chunk. If
    `summarize_recursively` is True, each summary is based on the previous summaries, adding more context to the
    summarization process. The function returns a compiled summary of all chunks.
    """
    # check detail is set correctly
    assert 0 <= detail <= 1

    # interpolate the number of chunks to get the specified level of detail
    max_chunks = len(chunk_on_delimiter(text, minimum_chunk_size, chunk_delimiter))
    min_chunks = 1
    num_chunks = int(min_chunks + detail * (max_chunks - min_chunks))

    # adjust chunk_size based on interpolated number of chunks
    document_length = len(tokenize(text))
    chunk_size = max(minimum_chunk_size, document_length // num_chunks)
    text_chunks = chunk_on_delimiter(text, chunk_size, chunk_delimiter)
    if verbose:
        print(f"Splitting the text into {len(text_chunks)} chunks to be summarized.")
        print(f"Chunk lengths are {[len(tokenize(x)) for x in text_chunks]}")

    # set system message
    system_message_content = "Rewrite this text in summarized form."
    if additional_instructions is not None:
        system_message_content += f"\n\n{additional_instructions}"

    accumulated_summaries = []
    for chunk in tqdm(text_chunks):
        if summarize_recursively and accumulated_summaries:
            # Creating a structured prompt for recursive summarization
            accumulated_summaries_string = '\n\n'.join(accumulated_summaries)
            user_message_content = f"Previous summaries:\n\n{accumulated_summaries_string}\n\nText to summarize next:\n\n{chunk}"
        else:
            # Directly passing the chunk for summarization without recursive context
            user_message_content = chunk

        # Constructing messages based on whether recursive summarization is applied
        messages = [
            {"role": "system", "content": system_message_content},
            {"role": "user", "content": user_message_content}
        ]

        # Request the summary of this chunk from the model
        response = get_chat_completion(messages, model=model)
        accumulated_summaries.append(response)

    # Compile final summary from partial summaries
    final_summary = '\n\n'.join(accumulated_summaries)
    return final_summary

summary_with_detail_0 = summarize(artificial_intelligence_wikipedia_text, detail=0, verbose=True)

summary_with_detail_pt25 = summarize(artificial_intelligence_wikipedia_text, detail=0.25, verbose=True)

The utility also accepts additional instructions.

summary_with_additional_instructions = summarize(artificial_intelligence_wikipedia_text, detail=0.1,
                                                 additional_instructions="Write in point form and focus on numerical data.")

print(summary_with_additional_instructions)

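Finally, note that the utility supports recursive summarization, in which each summary builds on the previous summaries and so adds more context to the process. Enable it by setting the summarize_recursively parameter to True. This is computationally more expensive, but it can improve the consistency and coherence of the combined summary.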

recursive_summary = summarize(artificial_intelligence_wikipedia_text, detail=0.1, summarize_recursively=True)

print(recursive_summary)
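The extra cost of recursive mode comes from the prompt itself: every request after the first carries all earlier summaries. The sketch below isolates that prompt construction in a hypothetical helper named `build_messages`, which is not part of the article's code. It reuses the article's prompt wording and makes no model call, so the growth of the user message can be inspected directly.

```python
from typing import Dict, List


def build_messages(
    chunk: str,
    previous_summaries: List[str],
    summarize_recursively: bool,
    system_prompt: str = "Rewrite this text in summarized form.",
) -> List[Dict[str, str]]:
    """Build the chat messages for one chunk, optionally prepending earlier summaries."""
    if summarize_recursively and previous_summaries:
        joined = "\n\n".join(previous_summaries)
        user_content = (
            f"Previous summaries:\n\n{joined}\n\nText to summarize next:\n\n{chunk}"
        )
    else:
        # First chunk, or non-recursive mode: the chunk is sent on its own.
        user_content = chunk
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_content},
    ]


first = build_messages("chunk A", [], summarize_recursively=True)
second = build_messages("chunk B", ["summary of A"], summarize_recursively=True)
print(len(first[1]["content"]), len(second[1]["content"]))
```

Because the user message grows with each accumulated summary, a long document at a high detail level may approach the model's context window in recursive mode, which is worth checking with the tokenizer before running.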
