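The Transformer is a deep learning model first proposed by Vaswani et al. in the 2017 paper "Attention Is All You Need". It was originally designed for natural language processing (NLP) tasks, but the flexibility of its architecture has made it effective in many other fields as well, such as computer vision and time series analysis. What follows is a detailed introduction to the Transformer model.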

I. Basic Structure

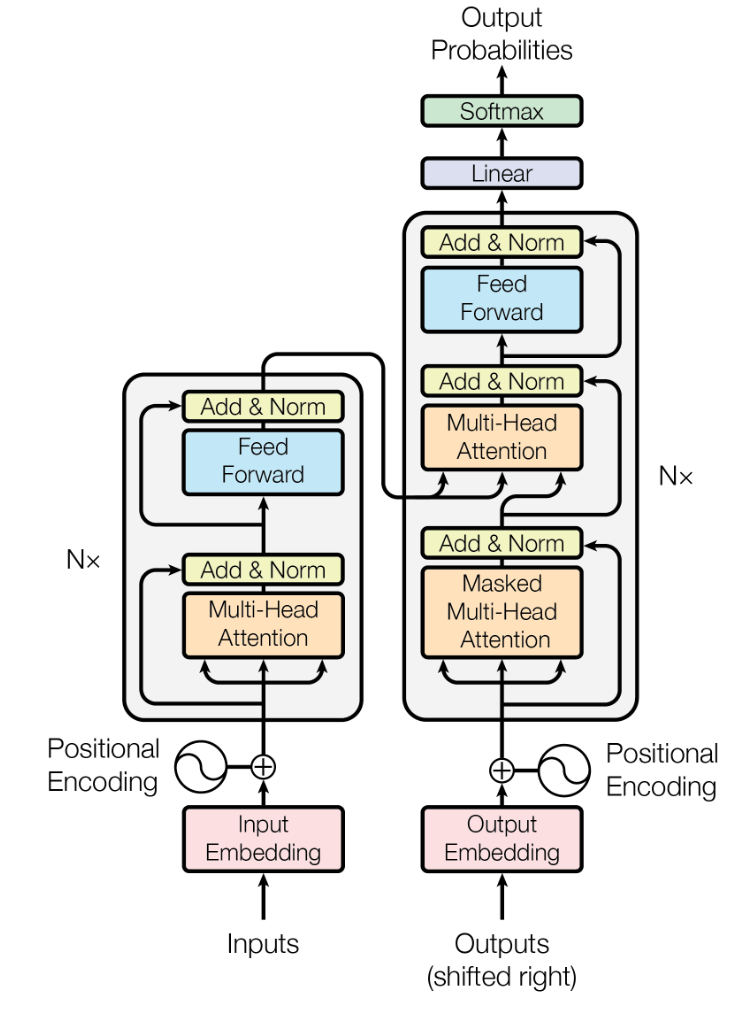

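The Transformer model consists of two main parts: an encoder (Encoder) and a decoder (Decoder).

Encoder

- Input Embedding: converts the input tokens into high-dimensional vector representations.

- Positional Encoding: because the Transformer has neither a recurrent nor a convolutional structure, position information must be added explicitly. Positional encoding tells the model where each token sits in the sequence.

- Multi-Head Self-Attention: self-attention captures dependencies between different positions in the sequence. The multi-head mechanism lets the model attend to different representation subspaces at the same time.

- Feed-Forward Neural Network: two linear transformations with a ReLU activation between them, applied to each position independently.

- Layer Normalization and Residual Connection: each sub-layer's output is added to the sub-layer's input through a residual connection, and the sum is then layer-normalized.

The encoder is a stack of several (typically 6) identical layers, each built from the sub-layers above.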
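The positional encoding described above can be sketched in plain Python. This is a minimal illustration, assuming the sinusoidal scheme from the original paper, in which even dimensions use sin(pos / 10000^(2i/d_model)) and odd dimensions use the matching cosine. The function name and the `max_len` parameter are chosen here for illustration only.

```python
import math


def positional_encoding(max_len, d_model):
    """Return a max_len x d_model table of sinusoidal position encodings."""
    table = []
    for pos in range(max_len):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k + 1) shares one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


pe = positional_encoding(max_len=4, d_model=6)
print(pe[0])  # position 0: every sine term is 0.0, every cosine term is 1.0
```

Each row of the table is added to the embedding of the token at that position, so two identical tokens at different positions end up with different input vectors.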

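Decoder

The decoder has a structure similar to the encoder's, with one additional attention layer that receives the encoder output.

- Input embedding, positional encoding, feed-forward neural network, layer normalization, and residual connections: the same as in the encoder. The decoder also uses multi-head self-attention, but in the masked form described in the next item.

- Masked Multi-Head Self-Attention: prevents the current decoder position from attending to information at future positions.

- Encoder-Decoder Attention: lets the decoder attend to the encoder output, so that the context captured by the encoder can be used to generate the target sequence.

The decoder is likewise a stack of several (typically 6) identical layers.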
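To make the masking idea concrete, here is a small pure-Python sketch. It assumes the common convention that a mask value of 1 means "hide this position" and that hidden scores are pushed toward negative infinity before the softmax. The helper names are illustrative and not taken from any library.

```python
import math


def look_ahead_mask(size):
    """mask[i][j] is 1 when position i must not attend to position j (j > i)."""
    return [[1 if j > i else 0 for j in range(size)] for i in range(size)]


def masked_softmax(scores, mask_row):
    """Softmax over one row of scores, ignoring entries whose mask is 1."""
    # Adding a very large negative number drives masked weights to zero.
    shifted = [s + m * -1e9 for s, m in zip(scores, mask_row)]
    peak = max(shifted)
    exps = [math.exp(s - peak) for s in shifted]
    total = sum(exps)
    return [e / total for e in exps]


mask = look_ahead_mask(3)
# With equal raw scores, each position spreads its attention evenly
# over itself and the positions before it, and gives none to the future.
weights = [masked_softmax([1.0, 1.0, 1.0], row) for row in mask]
for row in weights:
    print(row)
```

The first position can only see itself, the second splits its attention between the first two positions, and only the last position sees the whole sequence.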

II. Detailed Mechanisms

Attention Mechanism

Self-attention is the core of the Transformer. It is computed as follows:

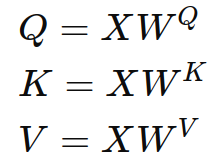

-

計算查詢(Query)、鍵(Key)、值(Value)矩陣:

其中,X 是輸入序列,WQ、WK、WV是可訓練的權重矩陣。 -

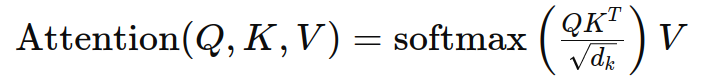

計算注意力分數:

其中:- dk是鍵向量的維度。

- KT 是鍵矩陣的轉置

-

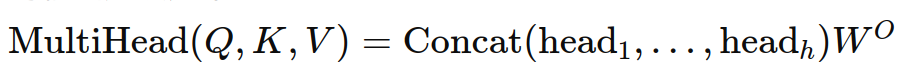

多頭機制:

多頭注意力機制將輸入映射到多個子空間,通過多個注意力頭來捕捉不同的特征。然后將這些頭的輸出連接起來:

其中,每個頭是獨立的注意力機制,WO 是可訓練的線性變換矩陣。
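The attention computation can be traced on a tiny example. The sketch below assumes the standard scaled dot-product form, Attention(Q, K, V) = softmax(Q K^T / sqrt(d_k)) V, and starts from Q, K, and V directly, skipping the projections by W^Q, W^K, and W^V. The list-based helpers are written for illustration only; a real implementation would use a tensor library.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def scaled_dot_product_attention(q, k, v):
    d_k = len(k[0])                   # dimension of the key vectors
    scores = matmul(q, transpose(k))  # Q K^T: one score per (query, key) pair
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


# Two positions whose queries each match one key more strongly.
q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0]]
output, weights = scaled_dot_product_attention(q, k, v)
print(weights)
print(output)
```

Each output row is a weighted average of the value rows, tilted toward the value whose key best matches the query. In multi-head attention the same computation runs once per head on separately projected inputs, and the per-head outputs are concatenated before the final projection by W^O.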

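III. Advantages of the Transformer

- Parallel computation: unlike an RNN, which processes a sequence one step at a time, the Transformer can process all positions of a sequence in parallel, which speeds up training.

- Long-range dependencies: through self-attention, the Transformer can directly capture dependencies between any two positions in a sequence.

- Flexibility: the Transformer architecture extends easily to different tasks, such as language translation, text generation, and image processing.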

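IV. Variants and Improvements

Since the Transformer was proposed, many improvements and variants have appeared, for example:

- BERT (Bidirectional Encoder Representations from Transformers): a bidirectional encoder suited to a wide range of NLP tasks.

- GPT (Generative Pre-trained Transformer): a generative model focused on text generation.

- T5 (Text-to-Text Transfer Transformer): casts all NLP tasks into a unified text-to-text form.

- Vision Transformer (ViT): applies the Transformer to image classification.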

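V. Application Areas

The Transformer model performs well in the following areas:

- Natural language processing (NLP): machine translation, text generation, question answering, and so on.

- Computer vision: image classification, object detection, and so on.

- Time series analysis: stock prediction, weather forecasting, and so on.

- Recommender systems: providing personalized recommendations by capturing the complex relationships between users and items.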

VI. Code Example

The following is a code example that implements a simple Transformer with TensorFlow:

    import tensorflow as tf
    import numpy as np


    # Attention mechanism
    def scaled_dot_product_attention(q, k, v, mask):
        # Similarity between every query and every key.
        matmul_qk = tf.matmul(q, k, transpose_b=True)
        # Scale by sqrt(d_k) to keep the logits in a reasonable range.
        dk = tf.cast(tf.shape(k)[-1], tf.float32)
        scaled_attention_logits = matmul_qk / tf.math.sqrt(dk)
        # Mask entries equal to 1 are pushed toward -inf before the softmax.
        if mask is not None:
            scaled_attention_logits += (mask * -1e9)
        attention_weights = tf.nn.softmax(scaled_attention_logits, axis=-1)
        output = tf.matmul(attention_weights, v)
        return output, attention_weights


    # Multi-head attention

    class MultiHeadAttention(tf.keras.layers.Layer):
        def __init__(self, d_model, num_heads):
            super().__init__()
            self.num_heads = num_heads
            self.d_model = d_model
            # d_model must split evenly across the heads.
            assert d_model % self.num_heads == 0
            self.depth = d_model // self.num_heads
            self.wq = tf.keras.layers.Dense(d_model)
            self.wk = tf.keras.layers.Dense(d_model)
            self.wv = tf.keras.layers.Dense(d_model)
            self.dense = tf.keras.layers.Dense(d_model)

        def split_heads(self, x, batch_size):
            # (batch, seq_len, d_model) -> (batch, num_heads, seq_len, depth)
            x = tf.reshape(x, (batch_size, -1, self.num_heads, self.depth))
            return tf.transpose(x, perm=[0, 2, 1, 3])

        def call(self, v, k, q, mask):
            batch_size = tf.shape(q)[0]
            q = self.wq(q)
            k = self.wk(k)
            v = self.wv(v)
            q = self.split_heads(q, batch_size)
            k = self.split_heads(k, batch_size)
            v = self.split_heads(v, batch_size)
            scaled_attention, attention_weights = scaled_dot_product_attention(q, k, v, mask)
            # (batch, num_heads, seq_len, depth) -> (batch, seq_len, num_heads, depth)
            scaled_attention = tf.transpose(scaled_attention, perm=[0, 2, 1, 3])
            # Concatenate the heads back into a single d_model-wide vector.
            concat_attention = tf.reshape(scaled_attention, (batch_size, -1, self.d_model))
            output = self.dense(concat_attention)
            return output, attention_weights


    # Feed-forward network

    def point_wise_feed_forward_network(d_model, dff):
        return tf.keras.Sequential([
            tf.keras.layers.Dense(dff, activation='relu'),
            tf.keras.layers.Dense(d_model),
        ])


    # Encoder layer

    class EncoderLayer(tf.keras.layers.Layer):
        def __init__(self, d_model, num_heads, dff, rate=0.1):
            super().__init__()
            self.mha = MultiHeadAttention(d_model, num_heads)
            self.ffn = point_wise_feed_forward_network(d_model, dff)
            self.layernorm1 = tf.keras.layers.LayerNormalization(epsilon=1e-6)
            self.layernorm2 = tf.keras.layers.LayerNormalization(epsilon=1e-6)
            self.dropout1 = tf.keras.layers.Dropout(rate)
            self.dropout2 = tf.keras.layers.Dropout(rate)

        def call(self, x, training, mask):
            # Self-attention sub-layer with residual connection and layer norm.
            attn_output, _ = self.mha(x, x, x, mask)
            attn_output = self.dropout1(attn_output, training=training)
            out1 = self.layernorm1(x + attn_output)
            # Feed-forward sub-layer with residual connection and layer norm.
            ffn_output = self.ffn(out1)
            ffn_output = self.dropout2(ffn_output, training=training)
            out2 = self.layernorm2(out1 + ffn_output)
            return out2


    # Decoder layer

    class DecoderLayer(tf.keras.layers.Layer):
        def __init__(self, d_model, num_heads, dff, rate=0.1):
            super().__init__()
            self.mha1 = MultiHeadAttention(d_model, num_heads)
            self.mha2 = MultiHeadAttention(d_model, num_heads)
            self.ffn = point_wise_feed_forward_network(d_model, dff)
            self.layernorm1 = tf.keras.layers.LayerNormalization(epsilon=1e-6)
            self.layernorm2 = tf.keras.layers.LayerNormalization(epsilon=1e-6)
            self.layernorm3 = tf.keras.layers.LayerNormalization(epsilon=1e-6)
            self.dropout1 = tf.keras.layers.Dropout(rate)
            self.dropout2 = tf.keras.layers.Dropout(rate)
            self.dropout3 = tf.keras.layers.Dropout(rate)

        def call(self, x, enc_output, training, look_ahead_mask, padding_mask):
            # Masked self-attention over the target sequence.
            attn1, attn_weights_block1 = self.mha1(x, x, x, look_ahead_mask)
            attn1 = self.dropout1(attn1, training=training)
            out1 = self.layernorm1(x + attn1)
            # Encoder-decoder attention: values and keys come from the encoder,
            # queries come from the decoder.
            attn2, attn_weights_block2 = self.mha2(enc_output, enc_output, out1, padding_mask)
            attn2 = self.dropout2(attn2, training=training)
            out2 = self.layernorm2(out1 + attn2)
            ffn_output = self.ffn(out2)
            ffn_output = self.dropout3(ffn_output, training=training)
            out3 = self.layernorm3(out2 + ffn_output)
            return out3, attn_weights_block1, attn_weights_block2


    # Encoder

    class Encoder(tf.keras.layers.Layer):
        def __init__(self, num_layers, d_model, num_heads, dff, input_vocab_size, rate=0.1):
            super().__init__()
            self.d_model = d_model
            self.num_layers = num_layers
            self.embedding = tf.keras.layers.Embedding(input_vocab_size, d_model)
            # Precompute encodings for up to 1000 positions.
            self.pos_encoding = positional_encoding(1000, self.d_model)
            self.enc_layers = [EncoderLayer(d_model, num_heads, dff, rate) for _ in range(num_layers)]
            self.dropout = tf.keras.layers.Dropout(rate)

        def call(self, x, training, mask):
            seq_len = tf.shape(x)[1]
            x = self.embedding(x)
            # Scale the embeddings before adding the positional encoding.
            x *= tf.math.sqrt(tf.cast(self.d_model, tf.float32))
            x += self.pos_encoding[:, :seq_len, :]
            x = self.dropout(x, training=training)
            for i in range(self.num_layers):
                x = self.enc_layers[i](x, training, mask)
            return x


    # Decoder

    class Decoder(tf.keras.layers.Layer):
        def __init__(self, num_layers, d_model, num_heads, dff, target_vocab_size, rate=0.1):
            super().__init__()
            self.d_model = d_model
            self.num_layers = num_layers
            self.embedding = tf.keras.layers.Embedding(target_vocab_size, d_model)
            self.pos_encoding = positional_encoding(1000, self.d_model)
            self.dec_layers = [DecoderLayer(d_model, num_heads, dff, rate) for _ in range(num_layers)]
            self.dropout = tf.keras.layers.Dropout(rate)

        def call(self, x, enc_output, training, look_ahead_mask, padding_mask):
            seq_len = tf.shape(x)[1]
            attention_weights = {}
            x = self.embedding(x)
            x *= tf.math.sqrt(tf.cast(self.d_model, tf.float32))
            x += self.pos_encoding[:, :seq_len, :]
            x = self.dropout(x, training=training)
            for i in range(self.num_layers):
                x, block1, block2 = self.dec_layers[i](
                    x, enc_output, training, look_ahead_mask, padding_mask)
                # Keep the attention weights of every layer for inspection.
                attention_weights[f'decoder_layer{i+1}_block1'] = block1
                attention_weights[f'decoder_layer{i+1}_block2'] = block2
            return x, attention_weights


    # Transformer model

    class Transformer(tf.keras.Model):
        def __init__(self, num_layers, d_model, num_heads, dff,
                     input_vocab_size, target_vocab_size, rate=0.1):
            super().__init__()
            self.encoder = Encoder(num_layers, d_model, num_heads, dff, input_vocab_size, rate)
            self.decoder = Decoder(num_layers, d_model, num_heads, dff, target_vocab_size, rate)
            # Projects decoder outputs to logits over the target vocabulary.
            self.final_layer = tf.keras.layers.Dense(target_vocab_size)

        def call(self, inp, tar, training, enc_padding_mask, look_ahead_mask, dec_padding_mask):
            enc_output = self.encoder(inp, training, enc_padding_mask)
            dec_output, attention_weights = self.decoder(
                tar, enc_output, training, look_ahead_mask, dec_padding_mask)
            final_output = self.final_layer(dec_output)
            return final_output, attention_weights


    # Positional encoding

    def get_angles(pos, i, d_model):
        # Each pair of dimensions (2k, 2k + 1) shares one frequency.
        angle_rates = 1 / np.power(10000, (2 * (i // 2)) / np.float32(d_model))
        return pos * angle_rates


    def positional_encoding(position, d_model):
        angle_rads = get_angles(np.arange(position)[:, np.newaxis],
                                np.arange(d_model)[np.newaxis, :],
                                d_model)
        # Sine on even indices, cosine on odd indices.
        angle_rads[:, 0::2] = np.sin(angle_rads[:, 0::2])
        angle_rads[:, 1::2] = np.cos(angle_rads[:, 1::2])
        # Add a leading batch dimension: (1, position, d_model).
        pos_encoding = angle_rads[np.newaxis, ...]
        return tf.cast(pos_encoding, dtype=tf.float32)


    # Masks

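    def create_padding_mask(seq):
        # 1.0 where the token id is 0 (padding), 0.0 elsewhere.
        seq = tf.cast(tf.math.equal(seq, 0), tf.float32)
        # Shape (batch, 1, 1, seq_len) so it broadcasts over heads and queries.
        return seq[:, tf.newaxis, tf.newaxis, :]


    def create_look_ahead_mask(size):
        # 1.0 above the diagonal: position i may not attend to any j > i.
        mask = 1 - tf.linalg.band_part(tf.ones((size, size)), -1, 0)
        return mask


    # Hyperparameters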

    num_layers = 4
    d_model = 128
    dff = 512
    num_heads = 8
    input_vocab_size = 8500
    target_vocab_size = 8000
    dropout_rate = 0.1

    # Create the Transformer model
    transformer = Transformer(num_layers, d_model, num_heads, dff,
                              input_vocab_size, target_vocab_size, dropout_rate)

    # Loss function and optimizer

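    loss_object = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True, reduction='none')


    def loss_function(real, pred):
        # Ignore padding positions (token id 0) when averaging the loss.
        mask = tf.math.logical_not(tf.math.equal(real, 0))
        loss_ = loss_object(real, pred)
        mask = tf.cast(mask, dtype=loss_.dtype)
        loss_ *= mask
        return tf.reduce_sum(loss_) / tf.reduce_sum(mask)


    learning_rate = tf.keras.optimizers.schedules.ExponentialDecay(
        1e-4, decay_steps=100000, decay_rate=0.9, staircase=True)
    optimizer = tf.keras.optimizers.Adam(learning_rate)

    # Example input (token id 0 is padding)
    sample_input = tf.constant([[1, 2, 3, 4, 0, 0]])
    sample_target = tf.constant([[1, 2, 3, 4, 0, 0]])


    def create_masks(inp, tar):
        enc_padding_mask = create_padding_mask(inp)
        # The decoder's second attention block also hides encoder padding.
        dec_padding_mask = create_padding_mask(inp)
        # The first block hides both future positions and target padding.
        look_ahead_mask = create_look_ahead_mask(tf.shape(tar)[1])
        dec_target_padding_mask = create_padding_mask(tar)
        combined_mask = tf.maximum(dec_target_padding_mask, look_ahead_mask)
        return enc_padding_mask, combined_mask, dec_padding_mask


    # Train the model. Transformer.call takes the masks as explicit arguments,
    # so the update step is written out with tf.GradientTape (TensorFlow 2.x).
    def train_step(inp, tar):
        tar_inp = tar[:, :-1]   # decoder input: target without its last token
        tar_real = tar[:, 1:]   # labels: target shifted left by one position
        enc_padding_mask, combined_mask, dec_padding_mask = create_masks(inp, tar_inp)
        with tf.GradientTape() as tape:
            predictions, _ = transformer(inp, tar_inp, True,
                                         enc_padding_mask, combined_mask, dec_padding_mask)
            loss = loss_function(tar_real, predictions)
        gradients = tape.gradient(loss, transformer.trainable_variables)
        optimizer.apply_gradients(zip(gradients, transformer.trainable_variables))
        return loss


    for epoch in range(10):
        loss = train_step(sample_input, sample_target)
        print(f'epoch {epoch + 1}: loss = {loss.numpy():.4f}')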

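Explanation

- Attention mechanism: defines the function that computes the attention weights, together with the multi-head attention layer.

- Feed-forward network: implements the position-wise feed-forward part.

- Encoder and decoder layers: define the basic building-block layers of the encoder and the decoder.

- Encoder and decoder: implement the stacks of encoder layers and decoder layers.

- Transformer model: combines the encoder and the decoder into the complete Transformer model.

- Positional encoding: adds position information to the input sequence.

- Masks: define the padding mask and the look-ahead mask, which handle padding in the input and target sequences and prevent information leakage from future positions.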
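As a small illustration of how the two masks and the masked loss fit together, here is a pure-Python sketch. It assumes that token id 0 marks padding, as in the code above, and that a mask value of 1 means "hide this position". The helper names are hypothetical and chosen only for this example.

```python
def padding_mask(token_ids, pad_id=0):
    """1 marks a padding position, 0 marks a real token."""
    return [1 if t == pad_id else 0 for t in token_ids]


def combine_masks(look_ahead, padding):
    """A key position is hidden if either mask hides it."""
    return [[max(cell, pad) for cell, pad in zip(row, padding)] for row in look_ahead]


def masked_mean(per_token_loss, token_ids, pad_id=0):
    """Average the loss over real tokens only (needs at least one real token)."""
    keep = [0.0 if t == pad_id else 1.0 for t in token_ids]
    return sum(l * m for l, m in zip(per_token_loss, keep)) / sum(keep)


target = [5, 7, 0, 0]  # two real tokens followed by two padding tokens
look_ahead = [[1 if j > i else 0 for j in range(4)] for i in range(4)]
combined = combine_masks(look_ahead, padding_mask(target))
loss = masked_mean([2.0, 4.0, 9.0, 9.0], target)

for row in combined:
    print(row)
print(loss)
```

The padding columns stay hidden for every query row, and the large loss values at the padding positions do not affect the average.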
