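BIoU comes from the CVPR 2018 paper *Improving Object Localization With Fitness NMS and Bounded IoU Loss*.

The paper observes that existing object detection methods only aim for "good enough" localization rather than the best possible box, and proposes an NMS strategy that takes localization quality into account together with the BIoU loss.

NMS is not covered here; only the loss-related content is discussed.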

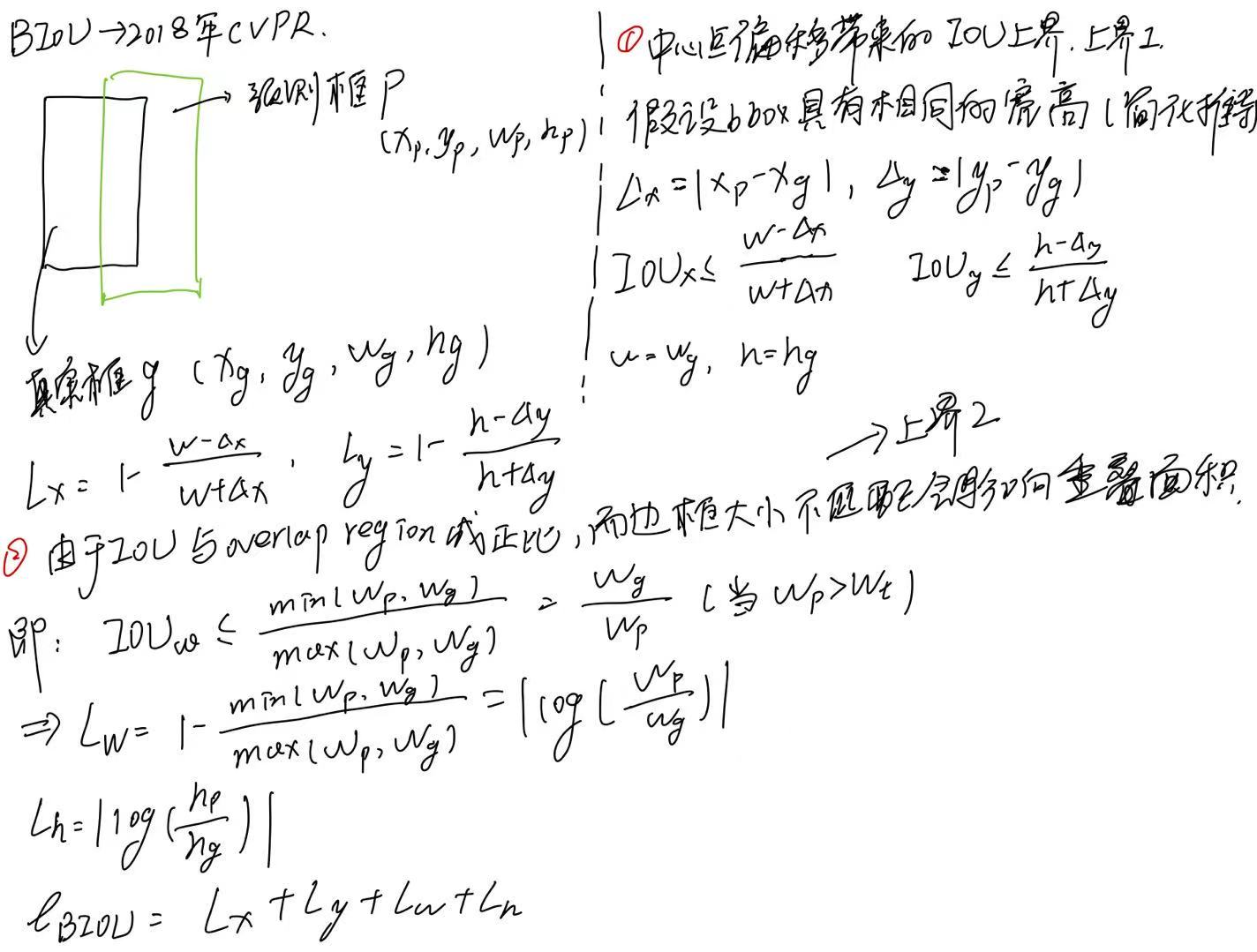

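IoU is the main metric of detection performance, but it is hard to optimize directly (it is non-smooth and not differentiable everywhere). L1/L2 or SmoothL1 losses are therefore commonly used instead, yet they do not optimize IoU directly. Bounded IoU Loss (BIoU) models an upper bound on IoU: it maximizes the IoU overlap between an ROI (Region of Interest) and its associated ground-truth bounding box, so the loss tracks IoU more closely while keeping good optimization properties.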

Formula:
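The original formula image did not survive, so the block below is a transcription of the terms computed by the MMDetection code in this section, not a quotation from the paper. The notation is assumed for illustration: the target box has size $(w_t, h_t)$, the predicted box has size $(w, h)$, and $\Delta x$, $\Delta y$ are the center offsets between target and prediction. The small `eps` terms of the code are omitted.

```latex
\begin{aligned}
\mathrm{IoU}_B(x) &= \max\!\left(0,\ \frac{w_t - 2|\Delta x|}{w_t + 2|\Delta x|}\right), &
\mathrm{IoU}_B(y) &= \max\!\left(0,\ \frac{h_t - 2|\Delta y|}{h_t + 2|\Delta y|}\right), \\
\mathrm{IoU}_B(w) &= \min\!\left(\frac{w}{w_t},\ \frac{w_t}{w}\right), &
\mathrm{IoU}_B(h) &= \min\!\left(\frac{h}{h_t},\ \frac{h_t}{h}\right), \\
\mathcal{L}_i &= \mathrm{SmoothL1}_\beta\bigl(1 - \mathrm{IoU}_B(i)\bigr), & i &\in \{x, y, w, h\}, \\
\mathrm{SmoothL1}_\beta(z) &=
\begin{cases}
0.5\, z^2 / \beta, & z < \beta \\
z - 0.5\,\beta, & \text{otherwise.}
\end{cases}
\end{aligned}
```

Each of the four terms equals 0 when that coordinate matches the target exactly, and the code returns them as an (n, 4) tensor without summing.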

The code below is MMDetection's implementation:


    import torch
    from torch import Tensor


    def bounded_iou_loss(pred: Tensor,
                         target: Tensor,
                         beta: float = 0.2,
                         eps: float = 1e-3) -> Tensor:
        """BIoULoss.

        This is an implementation of paper
        `Improving Object Localization with Fitness NMS and Bounded IoU Loss.
        <https://arxiv.org/abs/1711.00164>`_.

        Args:
            pred (Tensor): Predicted bboxes of format (x1, y1, x2, y2),
                shape (n, 4).
            target (Tensor): Corresponding gt bboxes, shape (n, 4).
            beta (float, optional): Beta parameter in smoothl1.
            eps (float, optional): Epsilon to avoid NaN values.

        Return:
            Tensor: Loss tensor.
        """
        pred_ctrx = (pred[:, 0] + pred[:, 2]) * 0.5
        pred_ctry = (pred[:, 1] + pred[:, 3]) * 0.5
        pred_w = pred[:, 2] - pred[:, 0]
        pred_h = pred[:, 3] - pred[:, 1]
        with torch.no_grad():
            target_ctrx = (target[:, 0] + target[:, 2]) * 0.5
            target_ctry = (target[:, 1] + target[:, 3]) * 0.5
            target_w = target[:, 2] - target[:, 0]
            target_h = target[:, 3] - target[:, 1]

        dx = target_ctrx - pred_ctrx
        dy = target_ctry - pred_ctry

        # The factor of 2 comes from the center offset affecting both sides
        # of the bounding box symmetrically.
        loss_dx = 1 - torch.max(
            (target_w - 2 * dx.abs()) /
            (target_w + 2 * dx.abs() + eps), torch.zeros_like(dx))
        loss_dy = 1 - torch.max(
            (target_h - 2 * dy.abs()) /
            (target_h + 2 * dy.abs() + eps), torch.zeros_like(dy))
        loss_dw = 1 - torch.min(target_w / (pred_w + eps), pred_w /
                                (target_w + eps))
        loss_dh = 1 - torch.min(target_h / (pred_h + eps), pred_h /
                                (target_h + eps))
        # view(..., -1) does not work for empty tensor
        loss_comb = torch.stack([loss_dx, loss_dy, loss_dw, loss_dh],
                                dim=-1).flatten(1)

        loss = torch.where(loss_comb < beta, 0.5 * loss_comb * loss_comb / beta,
                           loss_comb - 0.5 * beta)
        return loss

The Smooth L1 loss below is included to help explain the last step of the BIoU loss:

    def smooth_l1_loss(pred: Tensor, target: Tensor, beta: float = 1.0) -> Tensor:
        """Smooth L1 loss.

        Args:
            pred (Tensor): The prediction.
            target (Tensor): The learning target of the prediction.
            beta (float, optional): The threshold in the piecewise function.
                Defaults to 1.0.

        Returns:
            Tensor: Calculated loss
        """
        assert beta > 0
        if target.numel() == 0:
            return pred.sum() * 0

        assert pred.size() == target.size()
        diff = torch.abs(pred - target)
        loss = torch.where(diff < beta, 0.5 * diff * diff / beta,
                           diff - 0.5 * beta)
        return loss

RIoU comes from the paper *Single-Shot Two-Pronged Detector with Rectified IoU Loss*, published at ACM Multimedia (ACM MM) in 2020.

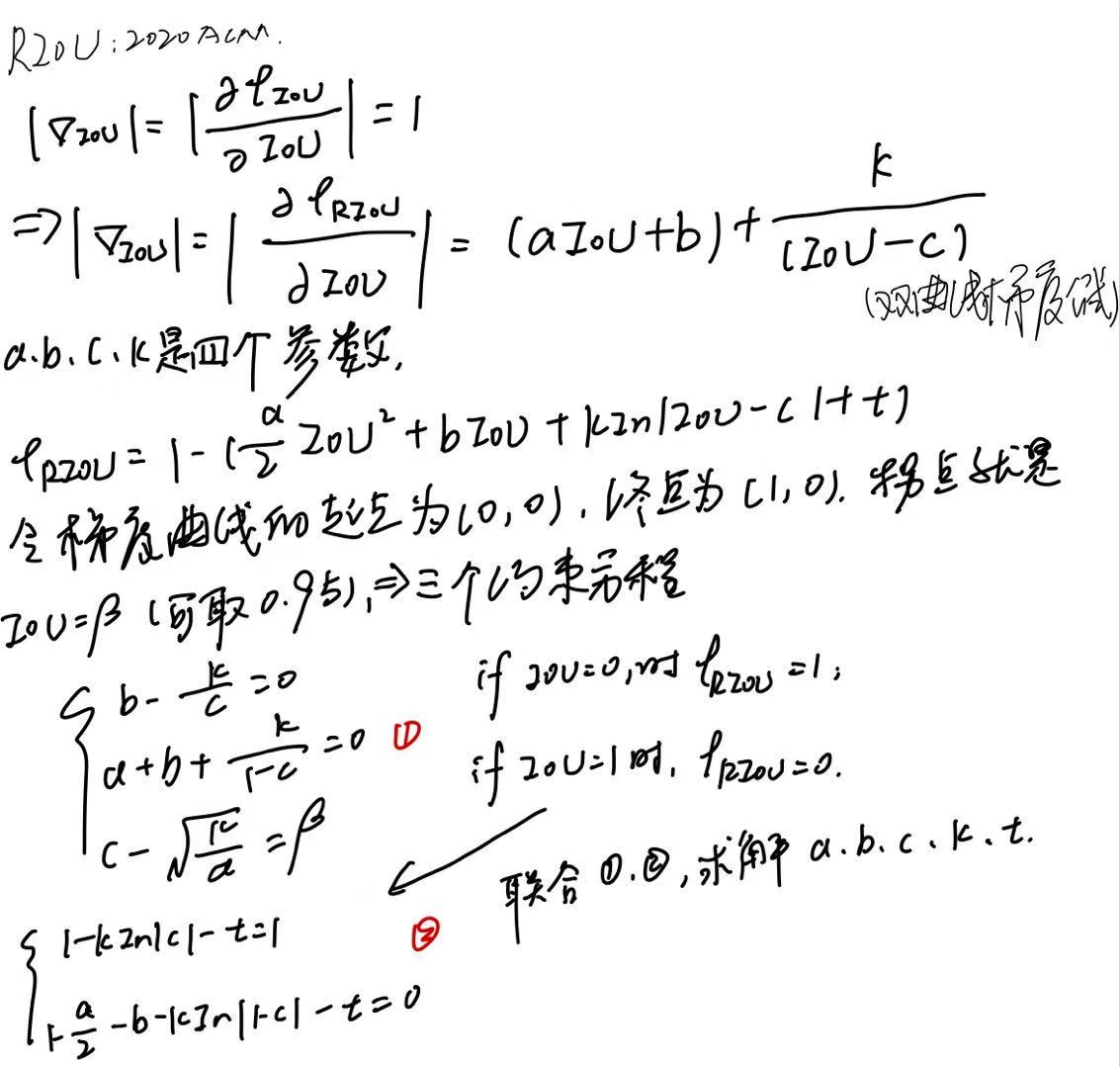

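The paper proposes an IoU-based adaptive localization loss, called the Rectified IoU (RIoU) loss, which rectifies the gradients of all kinds of samples. The rectified IoU loss increases the gradients of high-IoU samples and suppresses the gradients of low-IoU samples, which improves the overall localization accuracy of the model.

It is intended to address the gradient bias caused by sample imbalance in single-stage object detection.

However, if the weight of the localization loss gradient simply grows with IoU, another problem appears: the gradient keeps increasing as the regression approaches perfection (IoU → 1). The largest gradient would then be obtained when the two bboxes overlap completely (IoU = 1), which is clearly unreasonable.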
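The problem described above can be seen with a toy calculation. The sketch below is not the RIoU formula: it assumes a hypothetical weight `iou ** gamma` (held constant during differentiation) applied to the plain loss `1 - iou`, and only illustrates that any weight growing monotonically with IoU puts the largest gradient at IoU = 1. The function name and `gamma` are made up for this illustration.

```python
def naive_weighted_grad(iou: float, gamma: float = 2.0) -> float:
    """Gradient of weight * (1 - iou) w.r.t. iou, with weight = iou**gamma fixed.

    Hypothetical weighting for illustration only; not the RIoU formula.
    """
    weight = iou ** gamma  # weight grows monotonically with IoU
    return -weight         # d/d(iou) of weight * (1 - iou), weight held constant


ious = [0.1, 0.3, 0.5, 0.7, 0.9, 1.0]
magnitudes = [abs(naive_weighted_grad(i)) for i in ious]
for i, g in zip(ious, magnitudes):
    print(f"IoU={i:.1f}  |grad|={g:.2f}")
```

The gradient magnitude rises with IoU and peaks exactly where the boxes already coincide, which is the behavior a rectified loss has to avoid.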

Formula:

Implementation (this version reweights a base IoU-based loss by IoU):

    import torch


    def riou_loss(iou: torch.Tensor,
                  loss_base: torch.Tensor,
                  alpha: float = 2.0,
                  gamma: float = 2.0,
                  threshold: float = 0.5) -> torch.Tensor:
        """Rectified IoU Loss (RIoU) implementation.

        Args:
            iou (Tensor): IoU between predicted and target boxes (shape: [N])
            loss_base (Tensor): Original IoU-based loss value (e.g., GIoU loss) (shape: [N])
            alpha (float): Scaling factor for high IoU samples
            gamma (float): Focusing parameter (similar to focal loss)
            threshold (float): IoU threshold to apply amplification

        Returns:
            Tensor: Mean of the weighted loss over the N samples (scalar)
        """
        # Weight function: amplify high-quality samples
        weight = torch.where(iou >= threshold, alpha * (iou ** gamma), iou)
        # Final loss: element-wise weighted, then averaged
        loss = weight * loss_base
        return loss.mean()

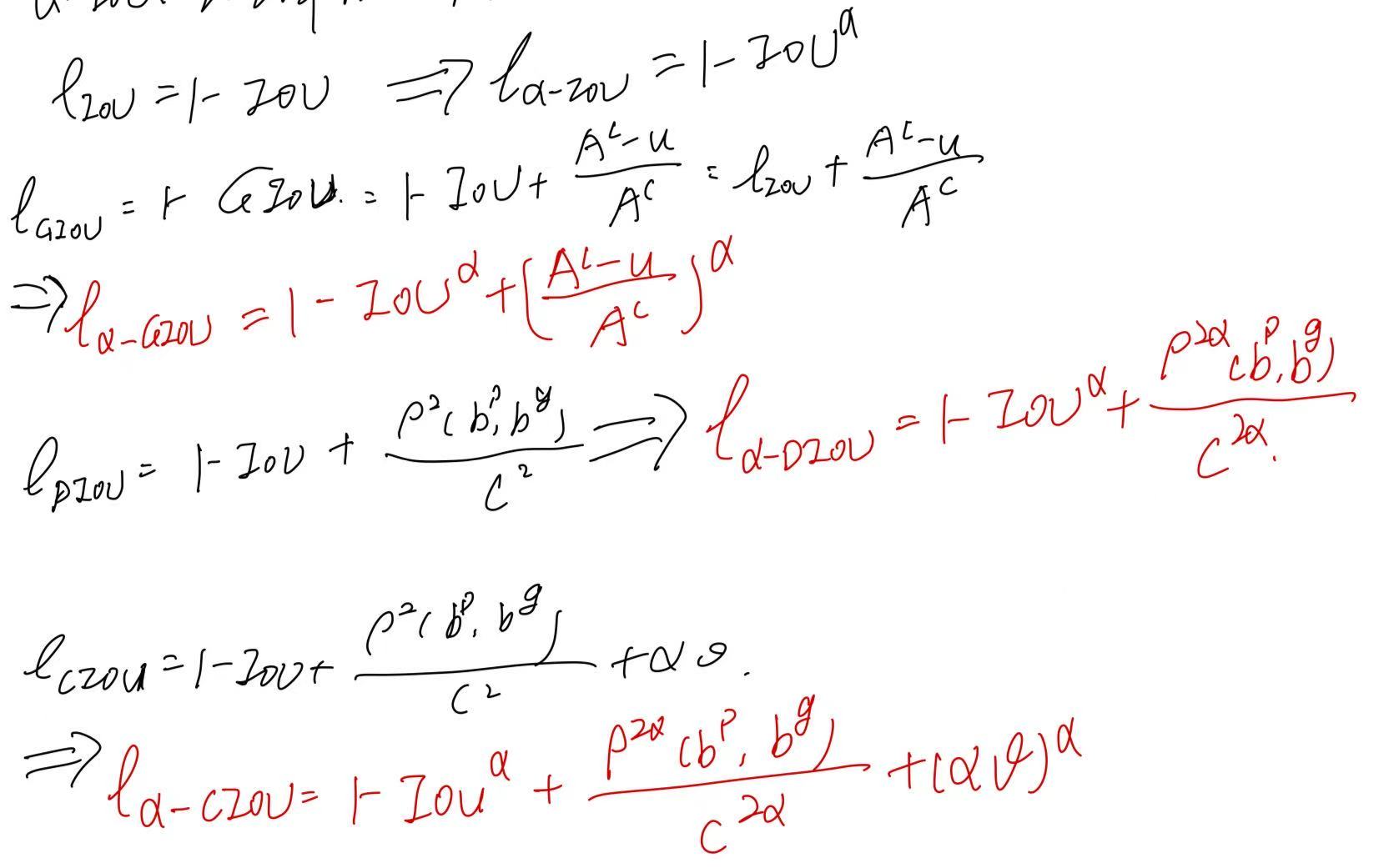

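α-IoU comes from the NeurIPS 2021 paper *Alpha-IoU: A Family of Power Intersection over Union Losses for Bounding Box Regression*.

The core task of bounding box regression is to learn how well a predicted box matches the ground-truth box, and IoU is the most common metric for this. Existing IoU losses (such as GIoU, DIoU and CIoU) take position and overlap into account, but they still have limitations in their optimization objective, gradient smoothness or convergence speed. EIoU and Focal-EIoU already existed when this work appeared.

α-IoU uses a single parameter α to control how errors are weighted, which improves detection accuracy and gives more robustness on small datasets and noisy boxes.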

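The paper uses the Box-Cox transformation to turn the IoU loss into the α-IoU loss. Box-Cox is a statistical technique for transforming non-normally distributed data into a form closer to a normal distribution. It was proposed by George Box and David Cox in 1964 and is widely used in data preprocessing, especially for statistical analyses or machine learning models that assume normality.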
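As a minimal sketch of that transformation, assuming (from my reading of the paper) that the Box-Cox form of the IoU loss is `(1 - IoU**alpha) / alpha` for `alpha > 0` and that the simplified loss drops the `1 / alpha` factor, the two scalar functions below show how α changes the loss value. The function names are made up for this example.

```python
import math


def box_cox_iou_loss(iou: float, alpha: float) -> float:
    """Box-Cox-transformed IoU loss: (1 - iou**alpha) / alpha for alpha > 0.

    alpha == 0 is treated as the limiting case -log(iou).
    """
    if not 0.0 < iou <= 1.0:
        raise ValueError("iou must be in (0, 1]")
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    if alpha == 0:
        return -math.log(iou)
    return (1.0 - iou ** alpha) / alpha


def alpha_iou_loss(iou: float, alpha: float = 3.0) -> float:
    """Simplified power form 1 - iou**alpha (the 1/alpha factor is dropped)."""
    return 1.0 - iou ** alpha


for a in (1.0, 2.0, 3.0):
    print(f"alpha={a}: loss at IoU=0.5 is {alpha_iou_loss(0.5, a):.3f}")
```

With `alpha = 1` this reduces to the ordinary IoU loss `1 - IoU`, and as `alpha` approaches 0 the Box-Cox form approaches `-log(IoU)`.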

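The idea is then extended to GIoU, DIoU and CIoU by also applying the power α to the penalty terms of the earlier formulas. See the paper for the detailed derivation and discussion.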
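For reference, the resulting loss family can be written as follows. This is my transcription of the paper's definitions, so check it against the paper. Here $C$ is the smallest box enclosing the predicted box $B$ and the ground-truth box $B^{gt}$, $\rho(b, b^{gt})$ is the distance between the two box centers, $c$ is the diagonal length of $C$, and $\beta$ and $v$ are the trade-off weight and aspect-ratio term of CIoU.

```latex
\begin{aligned}
\mathcal{L}_{\alpha\text{-IoU}}  &= 1 - \mathrm{IoU}^{\alpha} \\
\mathcal{L}_{\alpha\text{-GIoU}} &= 1 - \mathrm{IoU}^{\alpha}
  + \left(\frac{|C \setminus (B \cup B^{gt})|}{|C|}\right)^{\alpha} \\
\mathcal{L}_{\alpha\text{-DIoU}} &= 1 - \mathrm{IoU}^{\alpha}
  + \frac{\rho^{2\alpha}(b, b^{gt})}{c^{2\alpha}} \\
\mathcal{L}_{\alpha\text{-CIoU}} &= 1 - \mathrm{IoU}^{\alpha}
  + \frac{\rho^{2\alpha}(b, b^{gt})}{c^{2\alpha}} + (\beta v)^{\alpha}
\end{aligned}
```

Setting $\alpha = 1$ recovers the original IoU, GIoU, DIoU and CIoU losses.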

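α is not very sensitive to different models or datasets, and α = 3 performs consistently well in most cases. The α-IoU loss family can easily be applied to improve state-of-the-art detectors under both clean and noisy bbox settings, without introducing additional parameters to these models or modifying the training algorithm, and without increasing their training/inference time.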


How well it works on a custom dataset, however, still has to be evaluated case by case.

    import math

    import torch


    def bbox_alpha_iou(box1, box2, x1y1x2y2=False, GIoU=False, DIoU=False, CIoU=False, alpha=2, eps=1e-9):
        # Returns the IoU of box1 to box2. box1 is 4, box2 is nx4
        box2 = box2.T

        # Get the coordinates of bounding boxes
        if x1y1x2y2:  # x1, y1, x2, y2 = box1
            b1_x1, b1_y1, b1_x2, b1_y2 = box1[0], box1[1], box1[2], box1[3]
            b2_x1, b2_y1, b2_x2, b2_y2 = box2[0], box2[1], box2[2], box2[3]
        else:  # transform from xywh to xyxy
            b1_x1, b1_x2 = box1[0] - box1[2] / 2, box1[0] + box1[2] / 2
            b1_y1, b1_y2 = box1[1] - box1[3] / 2, box1[1] + box1[3] / 2
            b2_x1, b2_x2 = box2[0] - box2[2] / 2, box2[0] + box2[2] / 2
            b2_y1, b2_y2 = box2[1] - box2[3] / 2, box2[1] + box2[3] / 2

        # Intersection area
        inter = (torch.min(b1_x2, b2_x2) - torch.max(b1_x1, b2_x1)).clamp(0) * \
                (torch.min(b1_y2, b2_y2) - torch.max(b1_y1, b2_y1)).clamp(0)

        # Union Area
        w1, h1 = b1_x2 - b1_x1, b1_y2 - b1_y1 + eps
        w2, h2 = b2_x2 - b2_x1, b2_y2 - b2_y1 + eps
        union = w1 * h1 + w2 * h2 - inter + eps

        # change iou into pow(iou+eps)
        # iou = inter / union
        iou = torch.pow(inter / union + eps, alpha)
        # beta = 2 * alpha
        if GIoU or DIoU or CIoU:
            cw = torch.max(b1_x2, b2_x2) - torch.min(b1_x1, b2_x1)  # convex (smallest enclosing box) width
            ch = torch.max(b1_y2, b2_y2) - torch.min(b1_y1, b2_y1)  # convex height
            if CIoU or DIoU:  # Distance or Complete IoU https://arxiv.org/abs/1911.08287v1
                c2 = (cw ** 2 + ch ** 2) ** alpha + eps  # convex diagonal
                rho_x = torch.abs(b2_x1 + b2_x2 - b1_x1 - b1_x2)
                rho_y = torch.abs(b2_y1 + b2_y2 - b1_y1 - b1_y2)
                rho2 = ((rho_x ** 2 + rho_y ** 2) / 4) ** alpha  # center distance
                if DIoU:
                    return iou - rho2 / c2  # DIoU
                elif CIoU:  # https://github.com/Zzh-tju/DIoU-SSD-pytorch/blob/master/utils/box/box_utils.py#L47
                    v = (4 / math.pi ** 2) * torch.pow(torch.atan(w2 / h2) - torch.atan(w1 / h1), 2)
                    with torch.no_grad():
                        alpha_ciou = v / ((1 + eps) - inter / union + v)
                    # return iou - (rho2 / c2 + v * alpha_ciou)  # CIoU
                    return iou - (rho2 / c2 + torch.pow(v * alpha_ciou + eps, alpha))  # CIoU
            else:  # GIoU https://arxiv.org/pdf/1902.09630.pdf
                # c_area = cw * ch + eps  # convex area
                # return iou - (c_area - union) / c_area  # GIoU
                c_area = torch.max(cw * ch + eps, union)  # convex area
                return iou - torch.pow((c_area - union) / c_area + eps, alpha)  # GIoU
        else:
            return iou  # torch.log(iou+eps) or iou
