1. Introduction

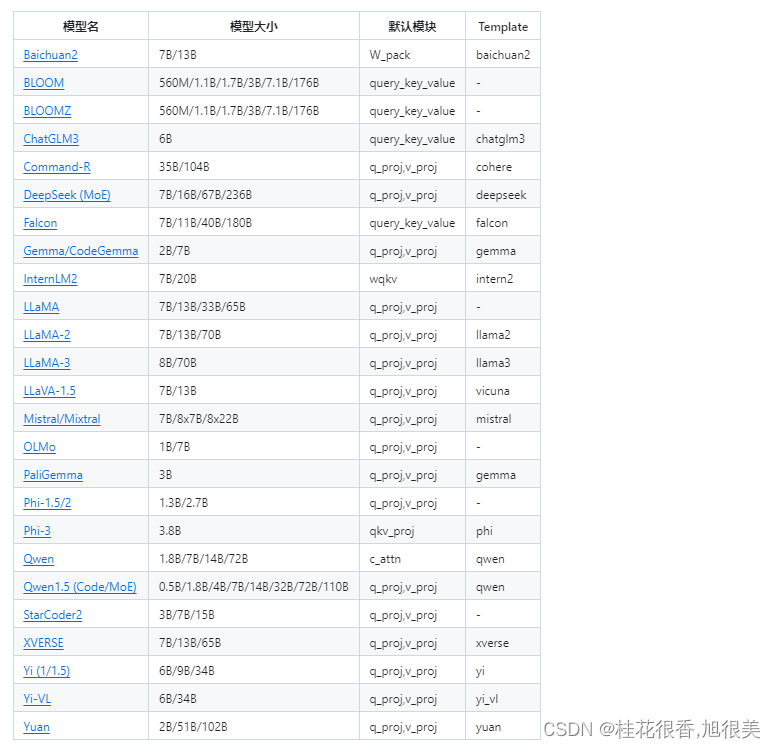

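- Multiple models: LLaMA, Mistral, Mixtral-MoE, Qwen, Yi, Gemma, Baichuan, ChatGLM, Phi, and more.

- Integrated methods: (continuous) pre-training, supervised instruction fine-tuning, reward model training, PPO training, and DPO training.

- Multiple precisions: 32-bit full-parameter fine-tuning, 16-bit freeze fine-tuning, 16-bit LoRA fine-tuning, and 2/4/8-bit QLoRA fine-tuning based on AQLM/AWQ/GPTQ/LLM.int8.

- Advanced algorithms: GaLore, DoRA, LongLoRA, LLaMA Pro, LoftQ, and Agent fine-tuning.

- Practical tricks: FlashAttention-2, Unsloth, RoPE scaling, NEFTune, and rsLoRA.

- Experiment monitoring: LlamaBoard, TensorBoard, Wandb, MLflow, and more.

- Fast inference: an OpenAI-style API, a browser UI, and a command-line interface based on vLLM.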

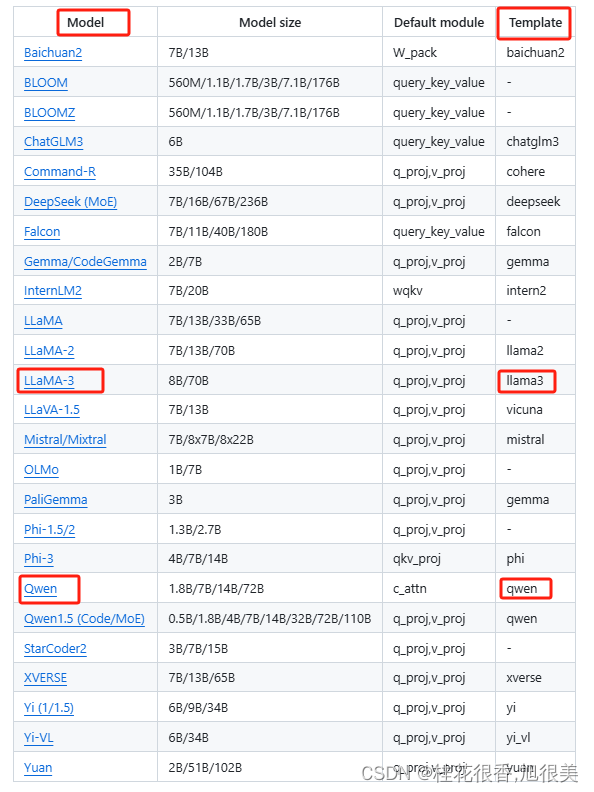

2. Model comparison


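Notes:

The "default module" listed for each model should be used as the default value of the --lora_target argument; you can pass --lora_target all to target all modules for better results. For all "base" models, the --template argument can be any value, such as default, alpaca, or vicuna. For "chat" (Instruct/Chat) models, however, be sure to use the corresponding template.

Be sure to use exactly the same template for training and inference.

For the complete list of supported models, see constants.py.

You can also add your own chat template in template.py.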

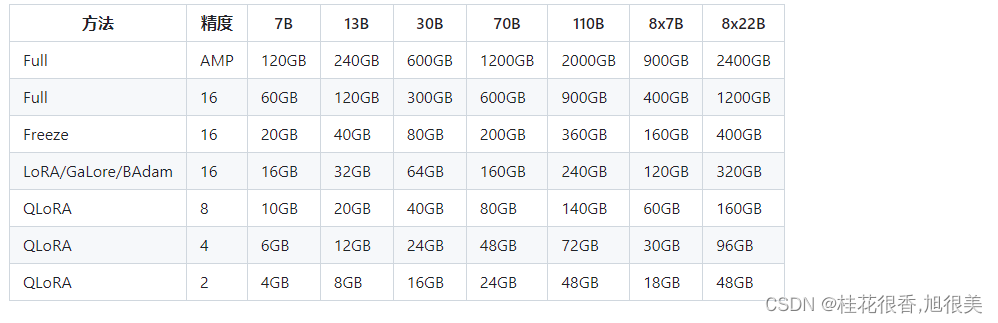

3. Training methods

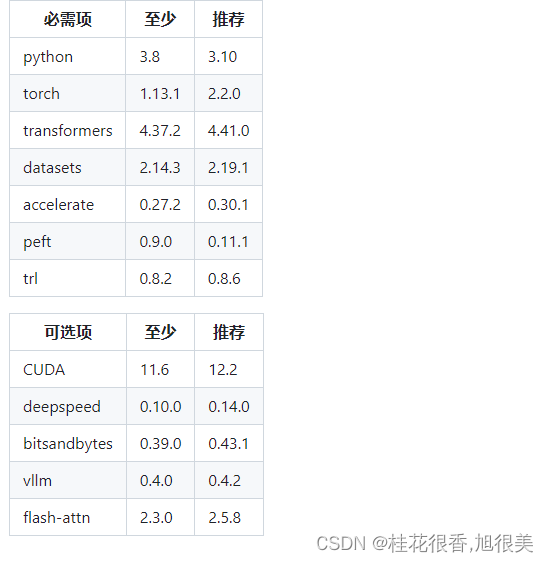

4. Software and hardware requirements

5. Hardware requirements

- Estimated values

6. How to use

6.0 Set up the Python environment

```bash
# Create a new environment
conda create -n py310 python=3.10

# Activate the environment
conda activate py310
```

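6.1 Install LLaMA Factory

```bash
# LLaMA-Factory version used in this post: c1fdf81df6ade5da7be4eb66b715f0efd171d5aa
# (--depth 1 clones the latest commit, which may be newer than this one)
git clone --depth 1 https://github.com/hiyouga/LLaMA-Factory.git
cd LLaMA-Factory
pip install -e ".[torch,metrics]"
```

Optional extra dependencies: torch, metrics, deepspeed, bitsandbytes, vllm, galore, badam, gptq, awq, aqlm, qwen, modelscope, quality

- If you run into package conflicts, try installing with: pip install --no-deps -e .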
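After installation, a quick sanity check is to ask Python which versions of the key packages it actually sees. The sketch below uses only the standard library's importlib.metadata. The distribution name llamafactory for the editable install is an assumption (check pip list if it shows up as NOT INSTALLED), and the other package names are just a suggested set.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Return {package: version string, or None if it is not installed}."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    # "llamafactory" is assumed to be the distribution name of the editable install
    wanted = ["llamafactory", "torch", "transformers", "peft", "bitsandbytes"]
    for name, ver in installed_versions(wanted).items():
        print(f"{name:15s} {ver or 'NOT INSTALLED'}")
```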

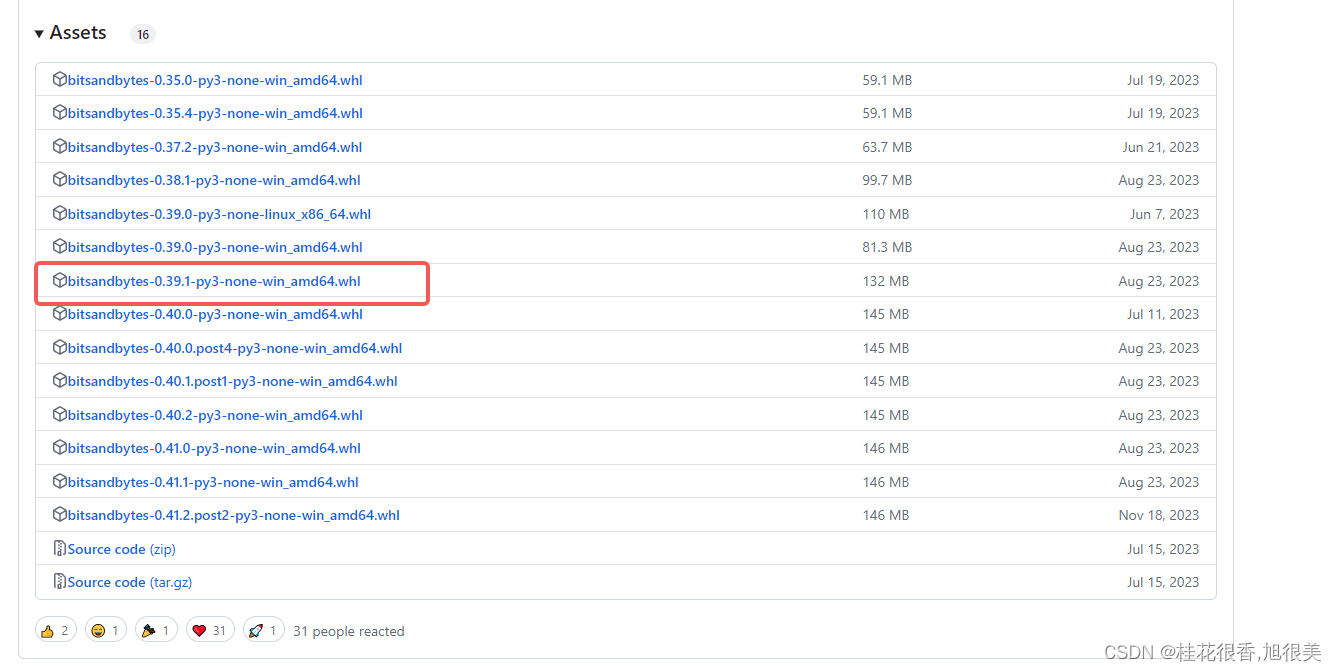

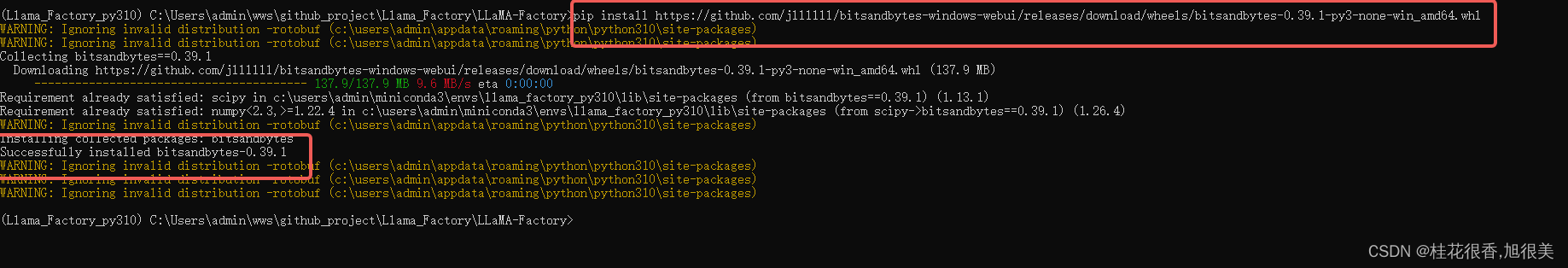

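6.1.2 Guide for Windows users

To enable quantized LoRA (QLoRA) on Windows, you need to install a precompiled bitsandbytes library. Builds supporting CUDA 11.1 to 12.2 are available; choose the release that matches your CUDA version.

```bash
pip install https://github.com/jllllll/bitsandbytes-windows-webui/releases/download/wheels/bitsandbytes-0.41.2.post2-py3-none-win_amd64.whl
```

To enable FlashAttention-2 on Windows, you need to install a precompiled flash-attn library, which supports CUDA 12.1 to 12.2; download and install the matching version from flash-attention.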

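6.1.3 Check your CUDA version

```bash
nvidia-smi
```

The version is 12.2, which falls within the supported range, so that's great.

So I installed:

```bash
pip install https://github.com/jllllll/bitsandbytes-windows-webui/releases/download/wheels/bitsandbytes-0.39.1-py3-none-win_amd64.whl
```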
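To script the check above, the CUDA version can be read from the header that nvidia-smi prints. A minimal sketch, assuming the usual "CUDA Version: X.Y" field in that header. Note that this number is the highest CUDA version the driver supports, not necessarily the toolkit version you have installed. The default 11.1 to 12.2 range is the one quoted above for the Windows bitsandbytes wheels; parse_cuda_version and in_supported_range are hypothetical helper names.

```python
import re
import subprocess


def parse_cuda_version(smi_output: str):
    """Extract (major, minor) from the 'CUDA Version: X.Y' field, or None if absent."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", smi_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def in_supported_range(ver, low=(11, 1), high=(12, 2)):
    # Tuples compare element-wise, so (12, 2) <= (12, 2) and (12, 4) > (12, 2)
    return ver is not None and low <= ver <= high


if __name__ == "__main__":
    try:
        out = subprocess.run(
            ["nvidia-smi"], capture_output=True, text=True, check=True
        ).stdout
    except (OSError, subprocess.CalledProcessError):
        print("nvidia-smi is not available")
    else:
        ver = parse_cuda_version(out)
        print("Driver-reported CUDA:", ver, "| within 11.1-12.2:", in_supported_range(ver))
```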

6.2 Install dependencies

```bash
# -i points pip at the Tsinghua University PyPI mirror
pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple --ignore-installed
```

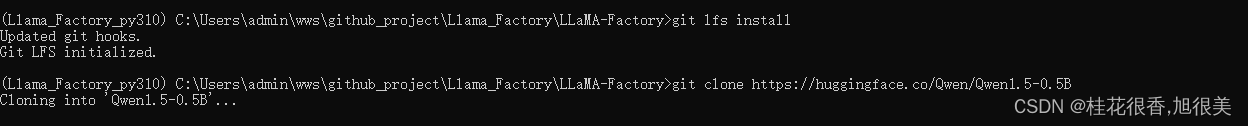

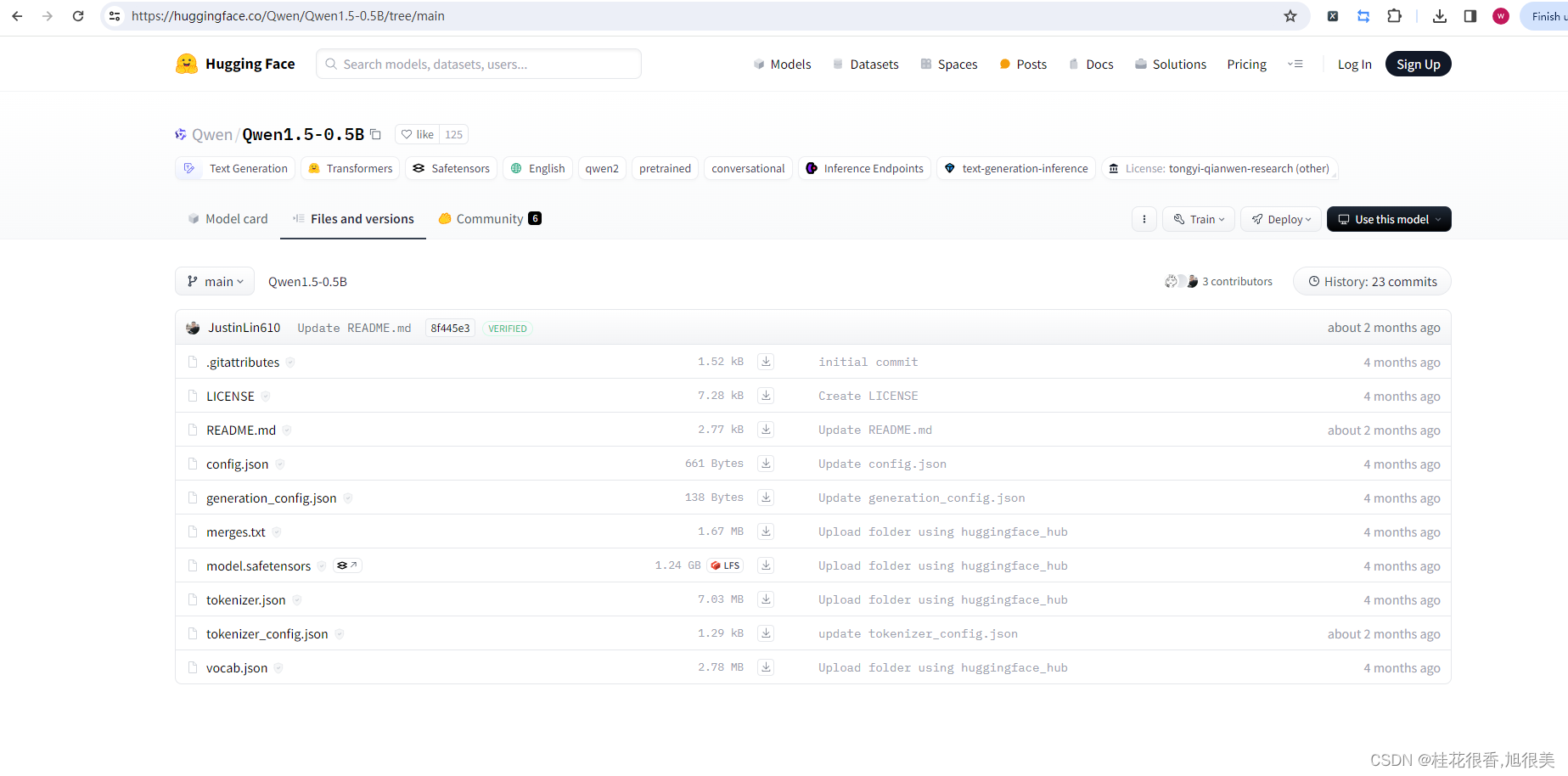

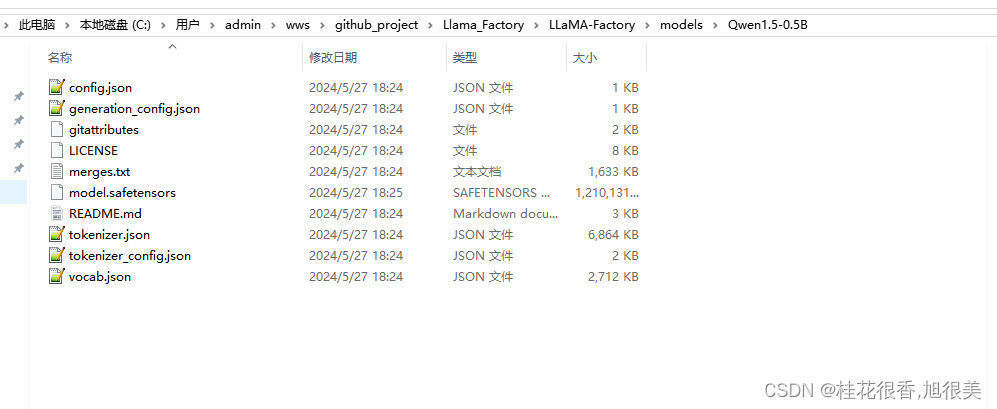

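6.3 Download the model

You can pick the model you want to fine-tune from [2. Model comparison]. To keep things simple for learning, Qwen1.5-0.5B is used here.

```bash
git lfs install
git clone https://huggingface.co/Qwen/Qwen1.5-0.5B
```

Hahaha, the download didn't work, so I went to the web page and downloaded the files by clicking them one by one.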
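Since git clone failed here, one alternative to clicking through the files is the huggingface_hub library's snapshot_download, which fetches a whole model repository. A sketch assuming huggingface_hub is installed (pip install huggingface_hub) and that models are kept under ./models so the path matches the commands in section 7; local_model_dir and download_model are illustrative names.

```python
from pathlib import Path


def local_model_dir(repo_id: str, root: str = "models") -> Path:
    # "Qwen/Qwen1.5-0.5B" -> models/Qwen1.5-0.5B
    return Path(root) / repo_id.split("/")[-1]


def download_model(repo_id: str, root: str = "models") -> Path:
    """Download every file of a Hugging Face model repo into a plain local folder."""
    # Imported lazily so local_model_dir works without huggingface_hub installed
    from huggingface_hub import snapshot_download

    target = local_model_dir(repo_id, root)
    snapshot_download(repo_id=repo_id, local_dir=str(target))
    return target


# Usage (performs a network download):
# download_model("Qwen/Qwen1.5-0.5B")
```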

7. LLM inference

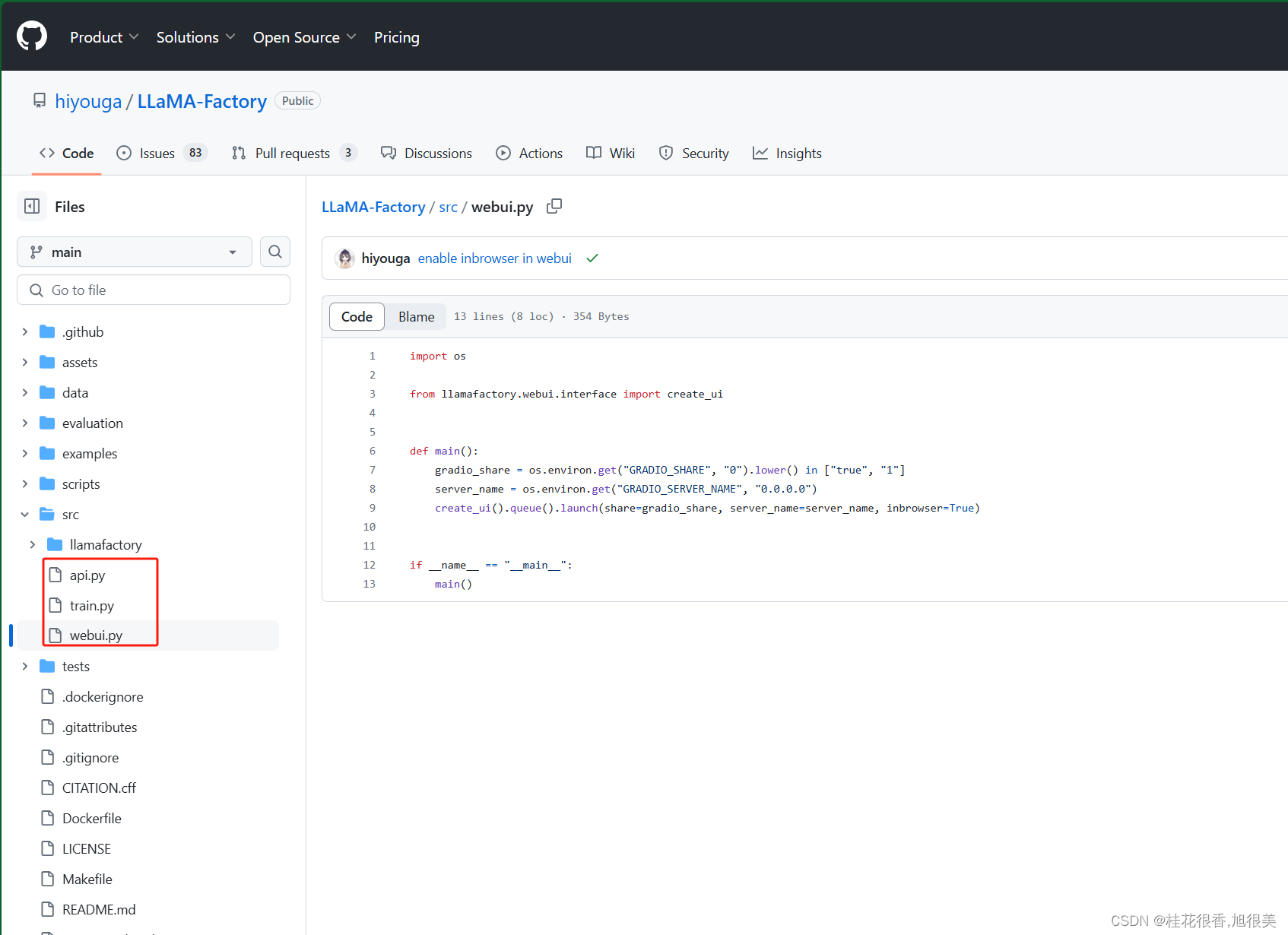

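The current version only has three modes: api, webui, and train; cli_demo belongs to earlier versions. (LLaMA-Factory version used here: c1fdf81df6ade5da7be4eb66b715f0efd171d5aa)

However, you can try inference with llamafactory-cli.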

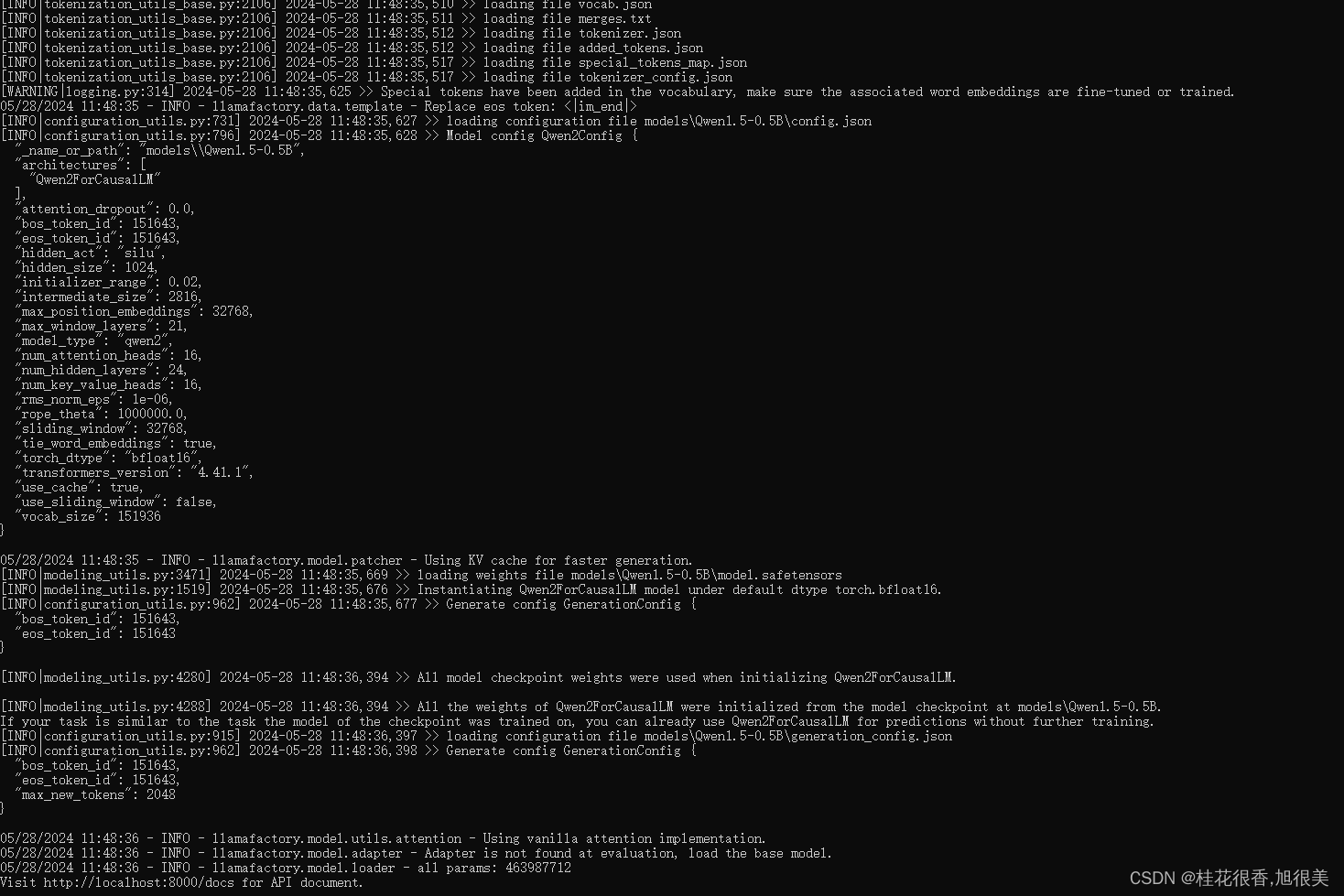

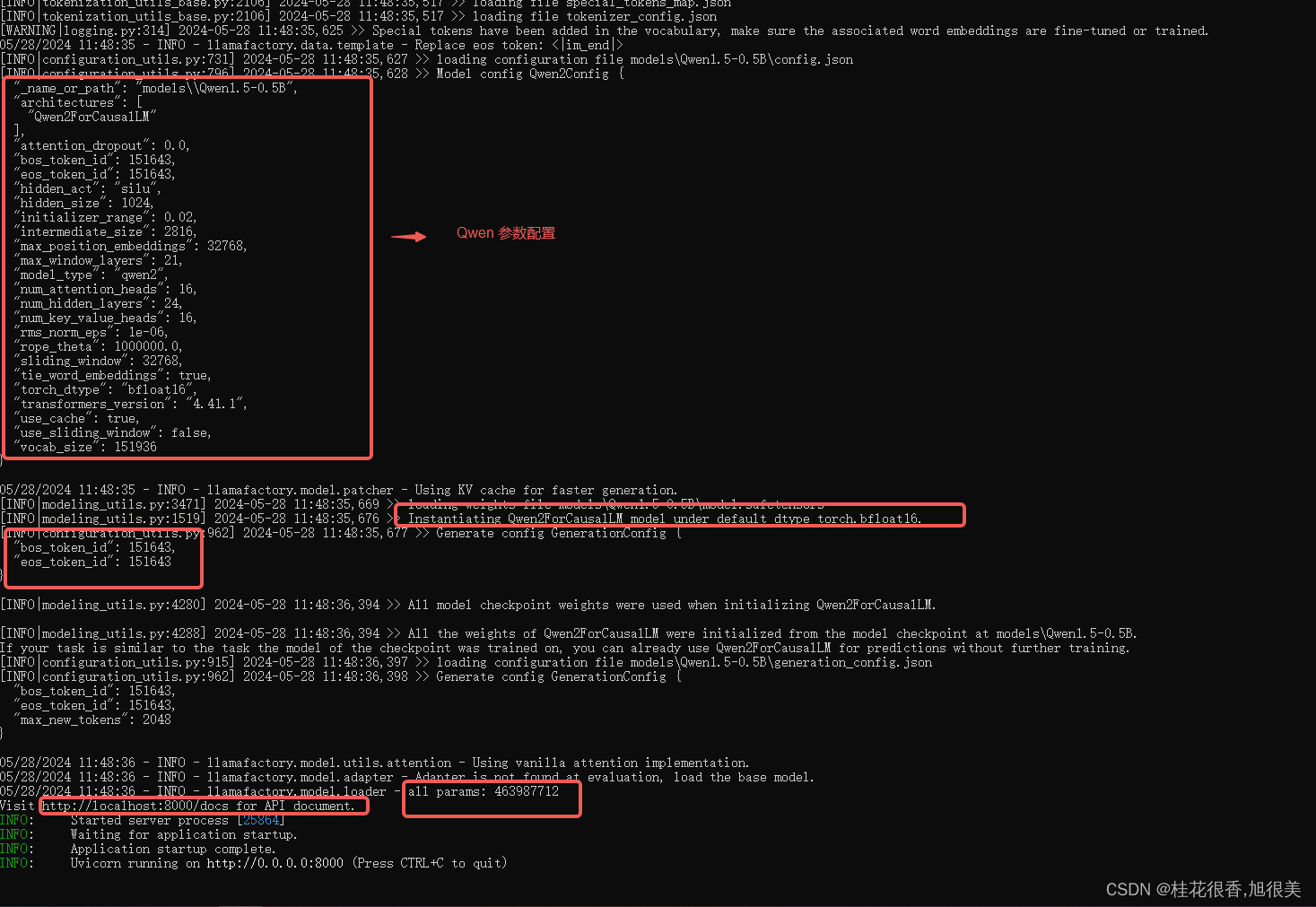

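7.1 Inference with the OpenAI-style API

```bash
# VAR=value prefixes are bash syntax; in PowerShell, set $env:API_PORT=8000 etc. first
CUDA_VISIBLE_DEVICES=0 API_PORT=8000 python src/api.py \
    --model_name_or_path ./models/Qwen1.5-0.5B \
    --template qwen
# For a fine-tuned model, also append:
#   --adapter_name_or_path path_to_checkpoint --finetuning_type lora
```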

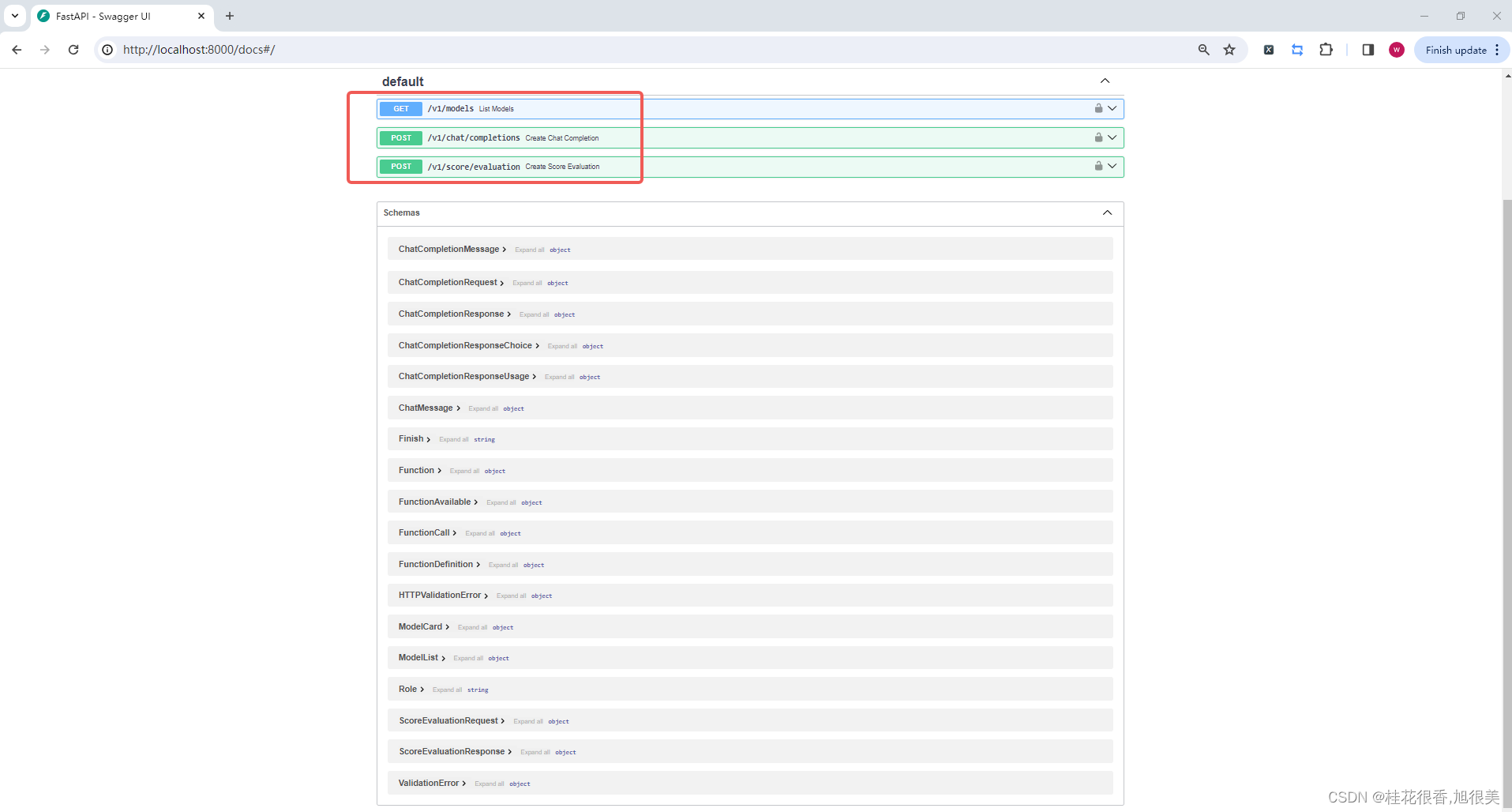

- For the API documentation, see http://localhost:8000/docs.

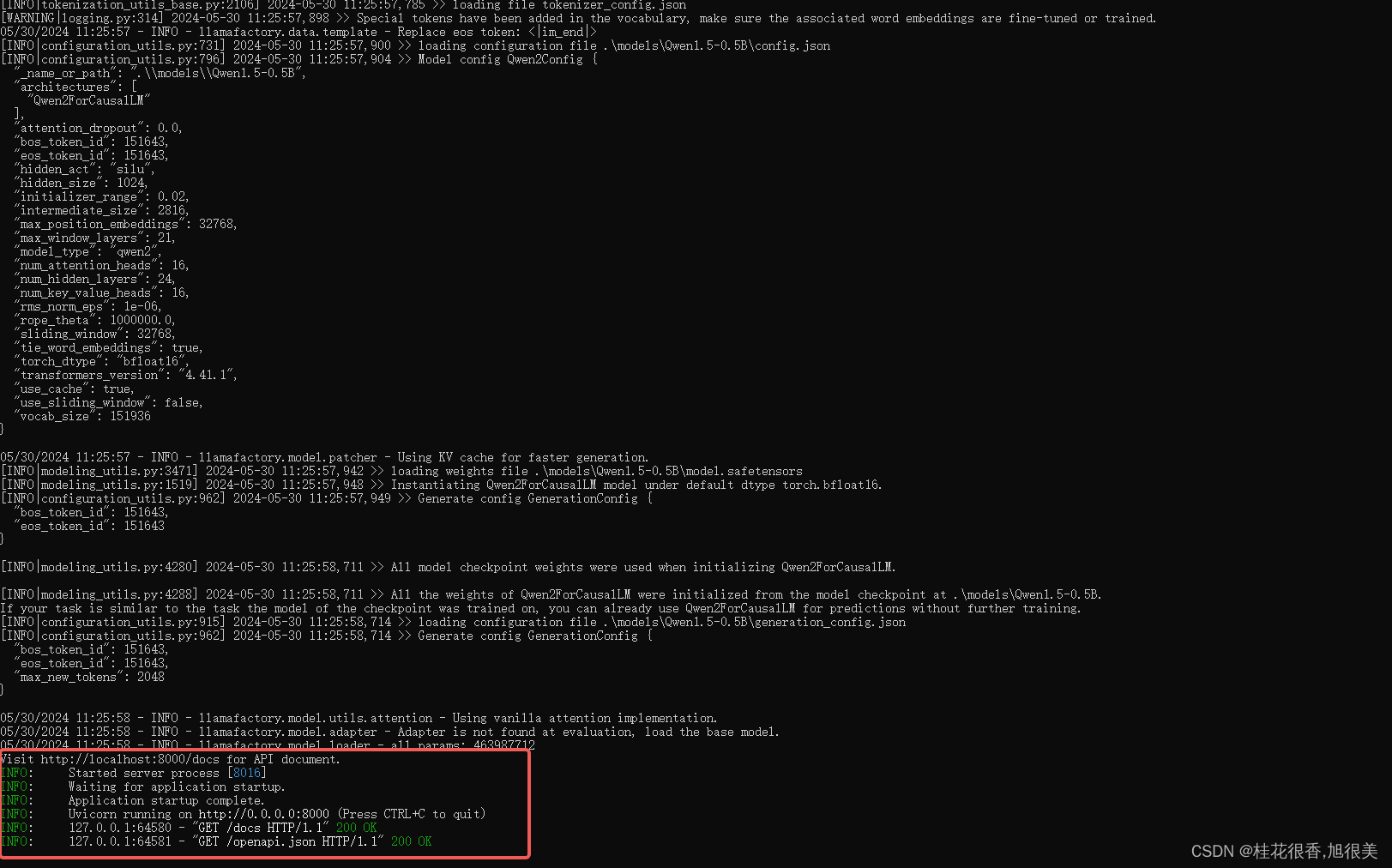

7.2 Inference via the command line

- LLM inference command

```bash
CUDA_VISIBLE_DEVICES=0 API_PORT=8000 llamafactory-cli api \
    --model_name_or_path ./models/Qwen1.5-0.5B \
    --template qwen
```

7.2.1 Accessing the docs: http://localhost:8000/docs

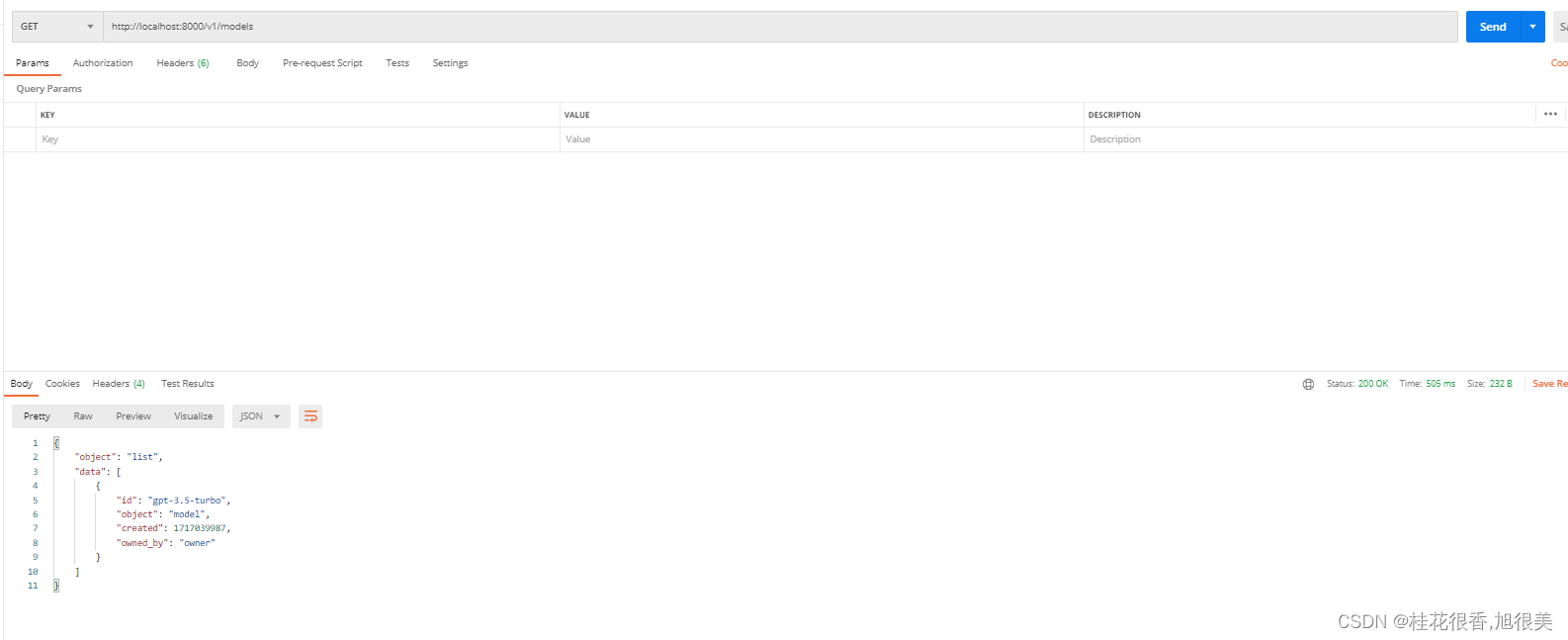

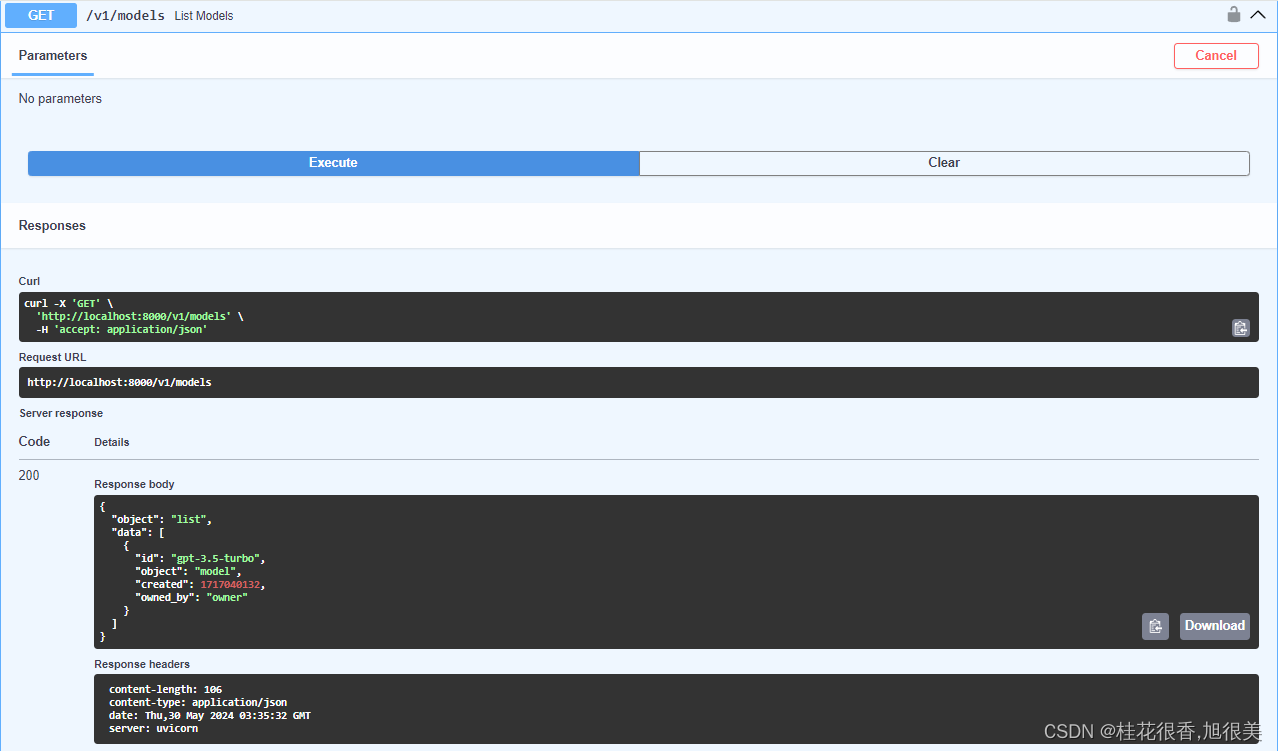

7.2.1.1 GET request: /v1/models

Trying it in Postman:

Testing with the docs page's built-in request tool:

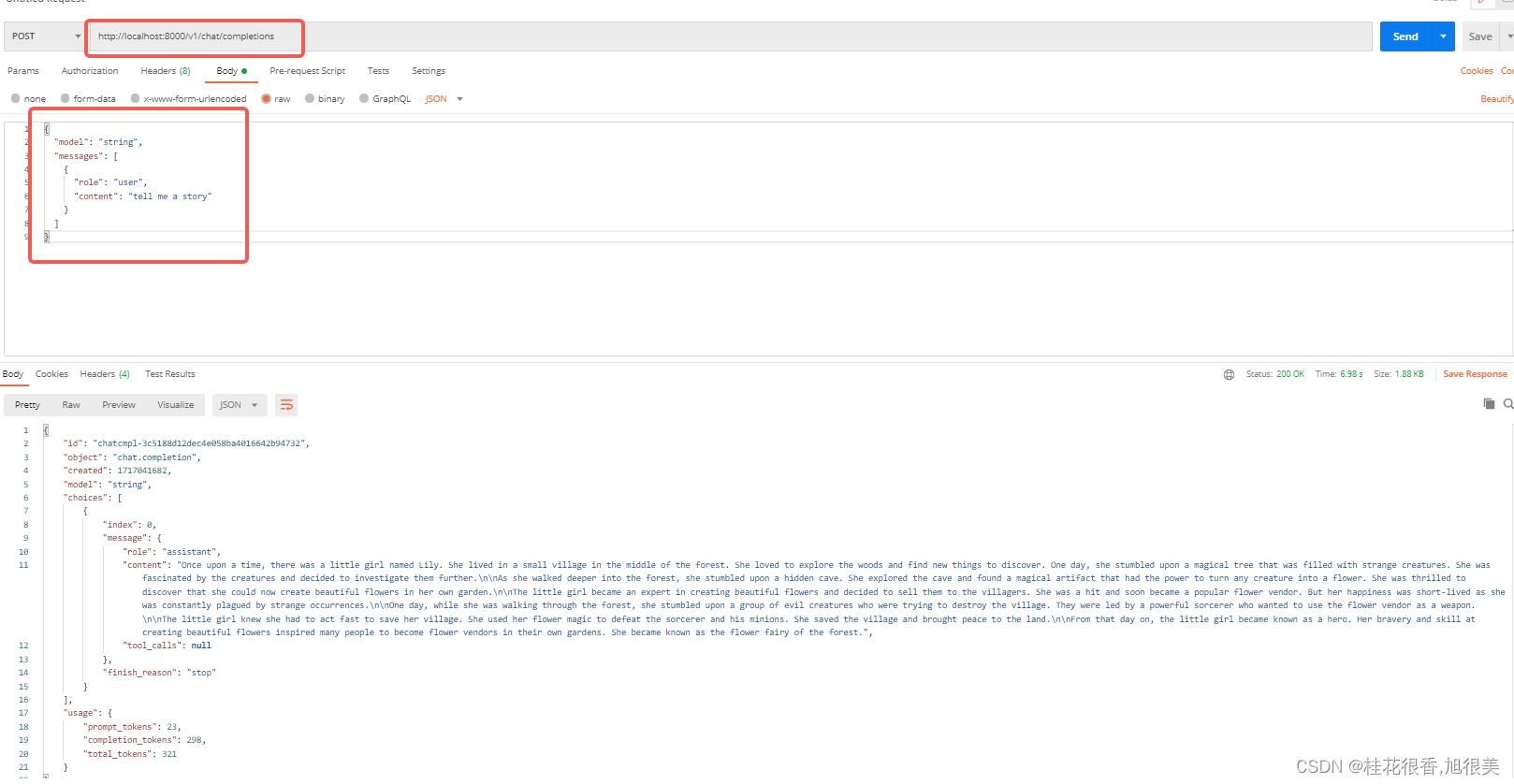
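The same GET request can be sent from Python with only the standard library. A minimal sketch, assuming the server from 7.2 is listening on localhost:8000 and returns the OpenAI-style list format ({"object": "list", "data": [{"id": ...}]}); list_model_ids and fetch_models are hypothetical helpers.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000"  # assumption: server started with API_PORT=8000


def list_model_ids(models_response: dict) -> list:
    """Pull the model ids out of an OpenAI-style model list."""
    return [card["id"] for card in models_response.get("data", [])]


def fetch_models(base_url: str = BASE_URL) -> dict:
    # Plain GET /v1/models, the same request sent from Postman / the docs page
    with urllib.request.urlopen(f"{base_url}/v1/models", timeout=10) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        print(list_model_ids(fetch_models()))
    except (OSError, ValueError) as exc:  # URLError subclasses OSError
        print("API server not reachable:", exc)
```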

7.2.1.2 POST request: /v1/chat/completions

Request body (example value):

```json
{"model": "string","messages": [{"role": "user","content": "string","tool_calls": [{"id": "string","type": "function","function": {"name": "string","arguments": "string"}}]}],"tools": [{"type": "function","function": {"name": "string","description": "string","parameters": {}}}],"do_sample": true,"temperature": 0,"top_p": 0,"n": 1,"max_tokens": 0,"stop": "string","stream": false}
```

Successful response (example value):

```json
{"id": "string","object": "chat.completion","created": 0,"model": "string","choices": [{"index": 0,"message": {"role": "user","content": "string","tool_calls": [{"id": "string","type": "function","function": {"name": "string","arguments": "string"}}]},"finish_reason": "stop"}],"usage": {"prompt_tokens": 0,"completion_tokens": 0,"total_tokens": 0}}
```

Validation error response (example value):

```json
{"detail": [{"loc": ["string",0],"msg": "string","type": "string"}]}
```

Trying it in Postman:

Testing with the docs page's built-in request tool:

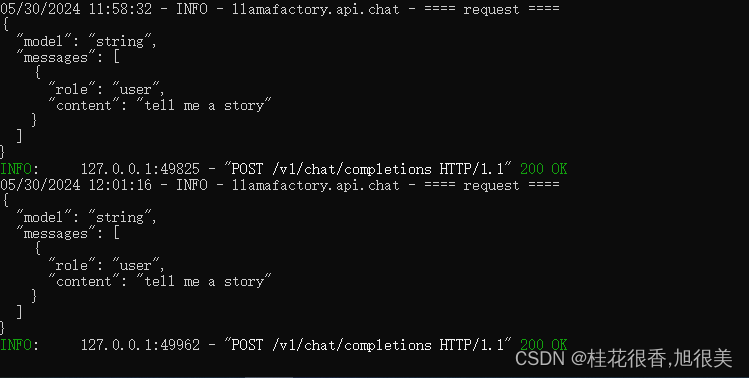

```json
{"model": "string","messages": [{"role": "user","content": "tell me a story"}]}
```

Response:

```json
{"id": "chatcmpl-5a4587623b494f46b190ab363ac4260a","object": "chat.completion","created": 1717041519,"model": "string","choices": [{"index": 0,"message": {"role": "assistant","content": "Sure, here's a story:\n\nOnce upon a time, there was a young girl named Lily. She loved to read and always wanted to learn more about the world around her. One day, while she was wandering through the woods, she stumbled upon a mysterious old book. The book was filled with stories and secrets that she had never heard before.\n\nAs she began to read, she found herself lost in the world of the book. She knew that the book was important, but she didn't know how to get it back. She searched for hours and hours, but she couldn't find it anywhere.\n\nJust when she thought she had lost hope, she saw a group of brave young men who were searching for treasure. They were looking for a lost treasure that had been hidden for centuries. Lily was excited to join them, but she was also scared.\n\nAs they searched the woods, they stumbled upon a secret passage that led to the treasure. But as they walked deeper into the forest, they realized that the passage was guarded by a group of trolls. The trolls were fierce and dangerous, and they knew that they couldn't leave without getting hurt.\n\nThe trolls were angry and territorial, and they wanted to take control of the treasure. They charged at Lily and her friends, but they were too late. They were outnumbered and outmatched, and they were all caught in the troll's trap.\n\nIn the end, Lily and her friends had to fight off the trolls and save the treasure. They had to work together to find a way out of the troll's trap, and they did it. They had a lot of fun and learned a lot about themselves and the world around them.","tool_calls": null},"finish_reason": "stop"}],"usage": {"prompt_tokens": 23,"completion_tokens": 339,"total_tokens": 362}}
```
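For scripted use, the "tell me a story" request above can be reproduced with a small standard-library client. This is a sketch assuming the server is on localhost:8000 and that responses follow the successful-response schema shown above, with the reply in choices[0].message.content; build_chat_payload, extract_reply, and chat are illustrative names, not part of LLaMA-Factory.

```python
import json
import urllib.request


def build_chat_payload(prompt: str, model: str = "string", **sampling) -> dict:
    """Minimal request body; extra keyword args (e.g. temperature) are passed through."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}], **sampling}


def extract_reply(response: dict) -> str:
    # choices[0].message.content, per the successful-response schema
    return response["choices"][0]["message"]["content"]


def chat(prompt: str, base_url: str = "http://localhost:8000", **sampling) -> str:
    body = json.dumps(build_chat_payload(prompt, **sampling)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generation can be slow on small GPUs, hence the generous timeout
    with urllib.request.urlopen(req, timeout=300) as resp:
        return extract_reply(json.load(resp))


if __name__ == "__main__":
    try:
        print(chat("tell me a story"))
    except (OSError, ValueError) as exc:
        print("Request failed:", exc)
```

Because the endpoint follows the OpenAI format, the official openai Python package (v1 or later) should also work by pointing its base_url at http://localhost:8000/v1; urllib is used here only to avoid an extra dependency.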

Server terminal log:

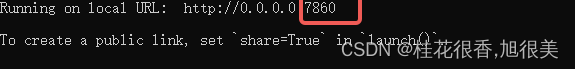

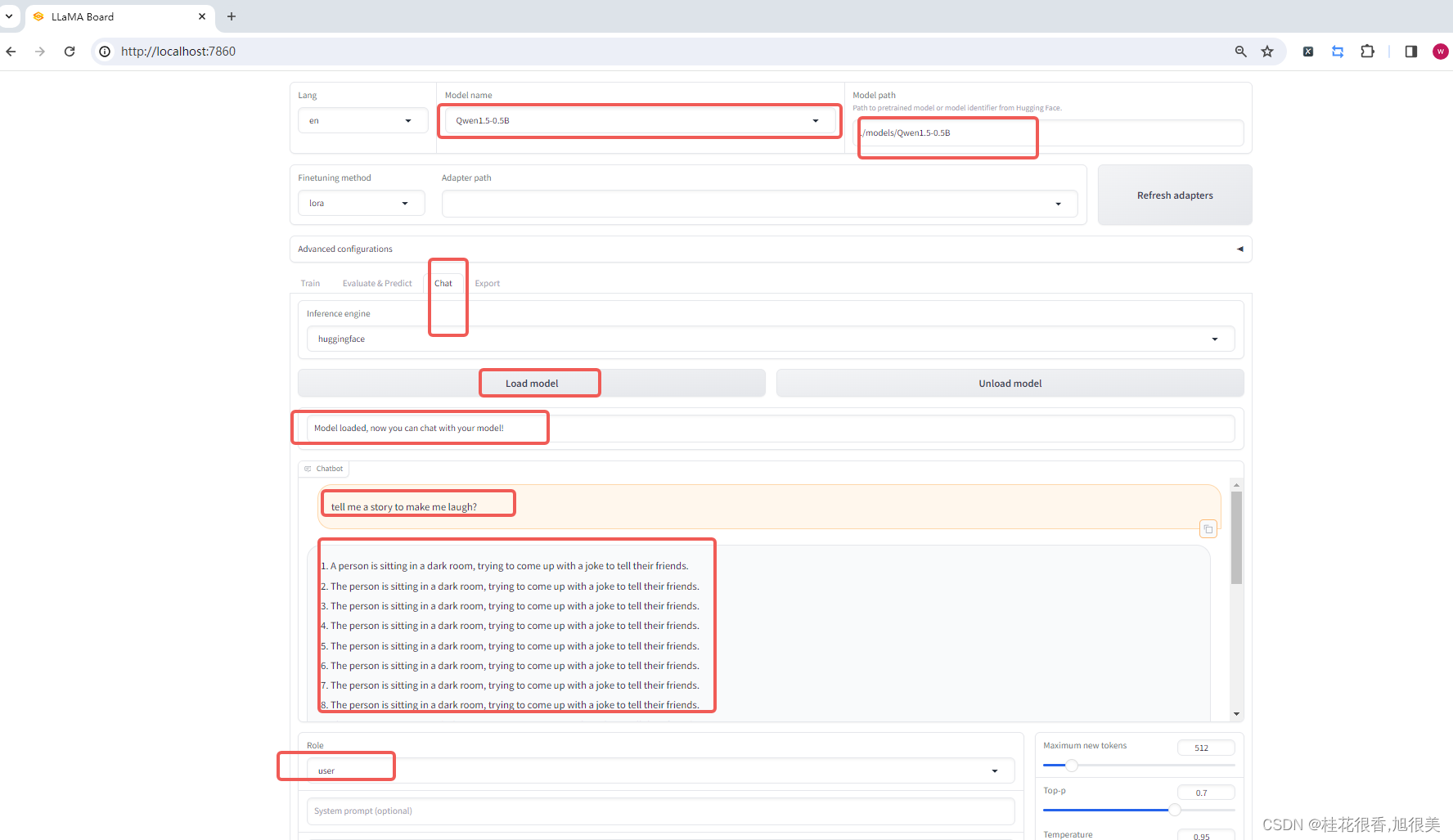

7.3 Inference in the browser

```bash
CUDA_VISIBLE_DEVICES=0 python src/webui.py \
    --model_name_or_path ./models/Qwen1.5-0.5B \
    --template qwen
# For a fine-tuned model, also append:
#   --adapter_name_or_path path_to_checkpoint --finetuning_type lora
```

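Parameter overview:

- --model_name_or_path: the model name (the standard identifier on Hugging Face or ModelScope, such as "meta-llama/Meta-Llama-3-8B-Instruct") or the path to a locally downloaded model, either absolute, such as /media/codingma/LLM/llama3/Meta-Llama-3-8B-Instruct, or relative, such as ./models/Qwen1.5-0.5B.

- --template: the prompt template used when chatting with the model. It differs between models; see https://github.com/hiyouga/LLaMA-Factory?tab=readme-ov-file#supported-models for each model's template definition, otherwise the answers may look strange or the model may keep repeating itself. Chat models almost always need it: for example, the template for Meta-Llama-3-8B-Instruct is llama3, and the template for Qwen models is qwen.

- --finetuning_type: the fine-tuning method, such as lora.

- --adapter_name_or_path: the location of the fine-tuned weights, such as the LoRA adapter directory.

The last two parameters are only used after fine-tuning; when running the original model, you can leave them out.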
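The rule above (adapter flags only after fine-tuning) can be captured in a small helper that assembles the flag list for the api or webui commands. A sketch only: build_infer_args is a hypothetical name, and lora is assumed as the default fine-tuning type simply because it is the example used above.

```python
import shlex


def build_infer_args(model, template, adapter=None, finetuning_type="lora"):
    """Assemble the inference flags; adapter flags are added only for a fine-tuned model."""
    args = ["--model_name_or_path", model, "--template", template]
    if adapter is not None:
        args += ["--adapter_name_or_path", adapter, "--finetuning_type", finetuning_type]
    return args


if __name__ == "__main__":
    base = build_infer_args("./models/Qwen1.5-0.5B", "qwen")
    # shlex.join quotes arguments only when the shell would need it
    print(shlex.join(["llamafactory-cli", "api", *base]))
```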

