文章目錄

- 一、數據集

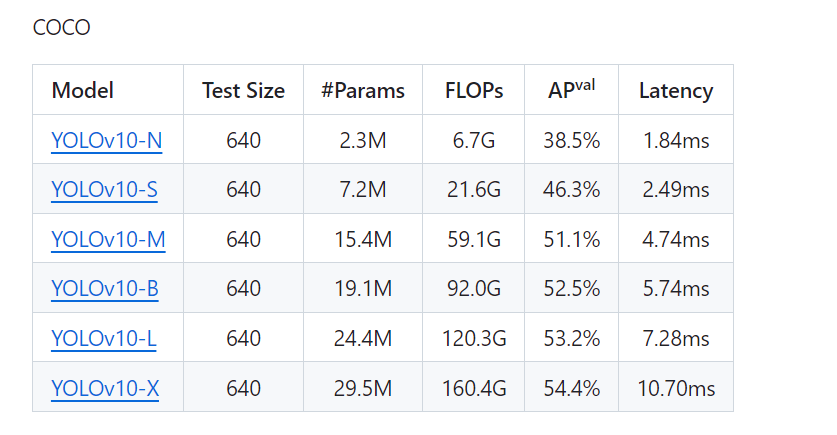

- 二、yolov10介紹

- 三、數據voc轉換為yolo

- 四、訓練

- 五、驗證

- 六、數據、模型、訓練后的所有文件

For help, see:

https://docs.qq.com/sheet/DUEdqZ2lmbmR6UVdU?tab=BB08J2

I. Dataset

The task is safety helmet wearing detection.

Dataset: https://github.com/njvisionpower/Safety-Helmet-Wearing-Dataset

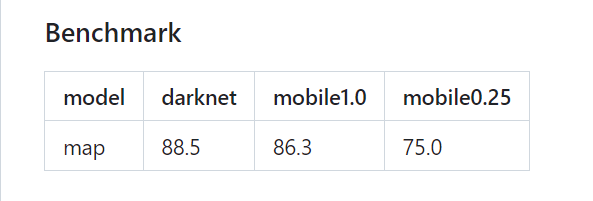

Baseline models:

II. Introduction to YOLOv10

For an overview of YOLOv10, see: https://www.jiqizhixin.com/articles/2024-05-28-7

Paper:

https://arxiv.org/abs/2405.14458

Code:

https://github.com/THU-MIG/yolov10

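III. Converting VOC annotations to YOLO format

Rearrange the dataset directory so that it looks like this:

    VOC2028 # tree -L 1
    .
    ├── images
    ├── labels
    ├── test.txt
    ├── train.txt
    ├── trainval.txt
    └── val.txt

    2 directories, 4 files

Rewrite the entries in each split file as absolute image paths: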

    # Split files to process
    file_names = ['test.txt', 'train.txt', 'trainval.txt', 'val.txt']

    for file_name in file_names:
        # Read all image ids from the original split file
        with open(file_name, 'r') as file:
            lines = file.readlines()

        # Write the result to a new file so the original is left untouched
        new_file_name = 'modified_' + file_name
        with open(new_file_name, 'w') as new_file:
            for line in lines:
                # Strip the newline, prepend the image directory, append '.jpg'
                modified_line = '/ssd/xiedong/yolov10/VOC2028/images/' + line.strip() + '.jpg\n'
                new_file.write(modified_line)

    print("All files processed.")
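Before training, it can help to confirm that every path written to the `modified_*.txt` files points at a real image. The sketch below is one way to do that; the helper name `find_missing_images` is hypothetical and not part of the original workflow.

```python
from pathlib import Path


def find_missing_images(list_file):
    """Return the paths listed in list_file that do not exist on disk."""
    missing = []
    with open(list_file, "r", encoding="utf-8") as f:
        for line in f:
            path = line.strip()
            # Skip blank lines, e.g. an empty line at the end of the file
            if path and not Path(path).is_file():
                missing.append(path)
    return missing


# Check the split files produced by the rewrite step, if they are present
for name in ["modified_train.txt", "modified_val.txt", "modified_test.txt"]:
    if Path(name).is_file():
        print(f"{name}: {len(find_missing_images(name))} missing image(s)")
```

Any non-zero count usually means an image id in the split file has no matching `.jpg` in the `images` directory.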

Convert the annotations to YOLO txt format:

    import os
    import traceback
    import xml.etree.ElementTree as ET

    import cv2
    import numpy as np
    from tqdm import tqdm


    def convert_annotation_to_list(xml_filepath, size_width, size_height, classes):
        """Parse one VOC XML file and return its boxes as YOLO-format lines."""
        with open(xml_filepath, encoding='UTF-8') as in_file:
            root = ET.parse(in_file).getroot()

        yolo_annotations = []
        for obj in root.iter('object'):
            cls = obj.find('name').text
            # Class ids follow the order in which class names are first seen
            if cls not in classes:
                classes.append(cls)
            cls_id = classes.index(cls)

            xmlbox = obj.find('bndbox')
            # b = [xmin, xmax, ymin, ymax]
            b = [float(xmlbox.find(tag).text) for tag in ('xmin', 'xmax', 'ymin', 'ymax')]
            # Clamp boxes that extend past the image border
            b[1] = min(b[1], size_width)
            b[3] = min(b[3], size_height)

            # Normalized x_center, y_center, width, height
            txt_data = [((b[0] + b[1]) / 2.0) / size_width,
                        ((b[2] + b[3]) / 2.0) / size_height,
                        (b[1] - b[0]) / size_width,
                        (b[3] - b[2]) / size_height]
            # Clamp any remaining out-of-range values to 1
            txt_data = [min(v, 1) for v in txt_data]
            yolo_annotations.append(f"{cls_id} {' '.join(str(round(a, 6)) for a in txt_data)}")
        return yolo_annotations


    def main():
        classes = []
        root = r"/ssd/xiedong/yolov10/VOC2028"
        img_path_1 = os.path.join(root, "images")
        xml_path_1 = os.path.join(root, "labels")
        # The YOLO txt files are written next to the XML files
        dst_yolo_root_txt = xml_path_1
        xml_path_1_files = set(os.listdir(xml_path_1))

        for img_id in tqdm(os.listdir(img_path_1)):
            # File name without its extension
            stem = os.path.splitext(img_id)[0]
            if stem + ".xml" not in xml_path_1_files:
                continue
            try:
                # Read the image only to get its actual width and height
                img = cv2.imdecode(np.fromfile(os.path.join(img_path_1, img_id), dtype=np.uint8), 1)
                yolo_annotations = convert_annotation_to_list(
                    os.path.join(xml_path_1, stem + ".xml"), img.shape[1], img.shape[0], classes)
                with open(os.path.join(dst_yolo_root_txt, stem + ".txt"), 'w') as f:
                    f.write('\n'.join(yolo_annotations))
            except Exception:
                traceback.print_exc()

        print(f"Conversion finished. Classes: {classes}")


    if __name__ == '__main__':
        main()

Then create the dataset config file (vim voc2028x.yaml):

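    train: /ssd/xiedong/yolov10/VOC2028/modified_train.txt
    val: /ssd/xiedong/yolov10/VOC2028/modified_val.txt
    test: /ssd/xiedong/yolov10/VOC2028/modified_test.txt

    # Classes
    names:
      0: hat
      1: person

The class ids here must follow the class list printed by the conversion script, since the script numbers classes in order of first appearance.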
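A quick sanity check of the generated label files against the `names` mapping can catch conversion mistakes early. The following is a minimal sketch, assuming each label line has the form `class_id x_center y_center width height` with normalized coordinates; `check_label_line` is a hypothetical helper, not part of YOLOv10.

```python
def check_label_line(line, num_classes=2):
    """Return a list of problems found in one YOLO label line (empty if OK)."""
    parts = line.split()
    if len(parts) != 5:
        return [f"expected 5 fields, got {len(parts)}"]
    try:
        cls_id = int(parts[0])
        coords = [float(v) for v in parts[1:]]
    except ValueError:
        return ["non-numeric field"]

    problems = []
    # Class ids must index into the names mapping (0: hat, 1: person)
    if not 0 <= cls_id < num_classes:
        problems.append(f"class id {cls_id} out of range")
    # Center, width, and height are all normalized to [0, 1]
    if any(not 0.0 <= v <= 1.0 for v in coords):
        problems.append("coordinate outside [0, 1]")
    return problems


print(check_label_line("0 0.5 0.5 0.2 0.3"))  # []
print(check_label_line("2 0.5 0.5 1.2 0.3"))
# ['class id 2 out of range', 'coordinate outside [0, 1]']
```

Running this over every line of every `.txt` file in the `labels` directory gives a cheap check before a long training run.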

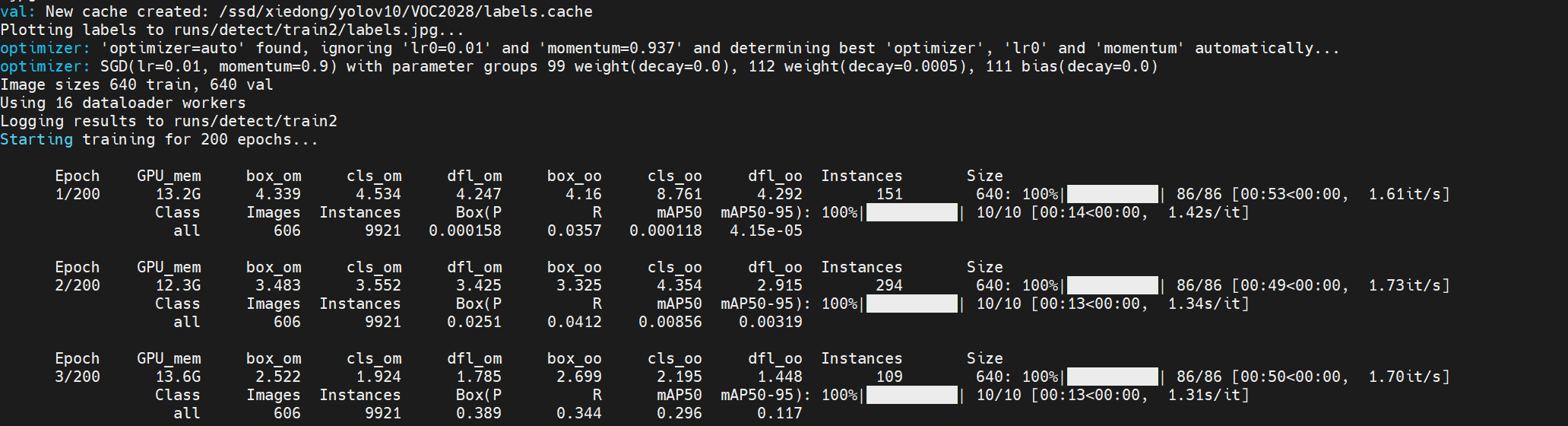
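As a worked example of the normalization performed during conversion, the sketch below turns a single VOC box (pixel corners) into YOLO format (normalized center, width, height). The function name `voc_box_to_yolo` and the sample numbers are illustrative only; unlike the conversion script, this version also clamps the lower bounds to 0.

```python
def voc_box_to_yolo(xmin, ymin, xmax, ymax, img_w, img_h):
    """Convert pixel corner coordinates to normalized (cx, cy, w, h)."""
    # Clamp the box to the image before normalizing
    xmin, xmax = max(0.0, xmin), min(float(img_w), xmax)
    ymin, ymax = max(0.0, ymin), min(float(img_h), ymax)
    cx = (xmin + xmax) / 2.0 / img_w
    cy = (ymin + ymax) / 2.0 / img_h
    w = (xmax - xmin) / img_w
    h = (ymax - ymin) / img_h
    return tuple(round(v, 6) for v in (cx, cy, w, h))


# A 100x200 px box with top-left corner (50, 100) in a 400x400 image
print(voc_box_to_yolo(50, 100, 150, 300, 400, 400))
# (0.25, 0.5, 0.25, 0.5)
```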

IV. Training

Environment setup:

    git clone https://github.com/THU-MIG/yolov10.git
    cd yolov10
    conda create -n yolov10 python=3.9 -y
    conda activate yolov10
    pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple
    pip install -e . -i https://pypi.tuna.tsinghua.edu.cn/simple

Training, using the yolov10s.yaml model definition for 200 epochs on GPUs 1 and 3:

    yolo detect train data="/ssd/xiedong/yolov10/voc2028x.yaml" model=yolov10s.yaml epochs=200 batch=64 imgsz=640 device=1,3
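When running several experiments, it can be convenient to assemble the same `yolo detect train` command from a set of keyword settings. This is only a sketch: `build_yolo_command` is a hypothetical helper, and launching the result assumes the `yolo` CLI from the installation step is on the `PATH`.

```python
def build_yolo_command(task, mode, **overrides):
    """Build an argv list such as ['yolo', 'detect', 'train', 'epochs=200', ...]."""
    args = ["yolo", task, mode]
    for key, value in overrides.items():
        # Lists and tuples become comma-separated values, e.g. device=1,3
        if isinstance(value, (list, tuple)):
            value = ",".join(str(v) for v in value)
        args.append(f"{key}={value}")
    return args


cmd = build_yolo_command(
    "detect", "train",
    data="/ssd/xiedong/yolov10/voc2028x.yaml",
    model="yolov10s.yaml",
    epochs=200, batch=64, imgsz=640,
    device=[1, 3],
)
print(" ".join(cmd))

# To launch the run from Python instead of the shell:
# import subprocess; subprocess.run(cmd, check=True)
```

Passing the arguments as a list to `subprocess.run` avoids shell quoting issues with paths.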

訓練啟動后:

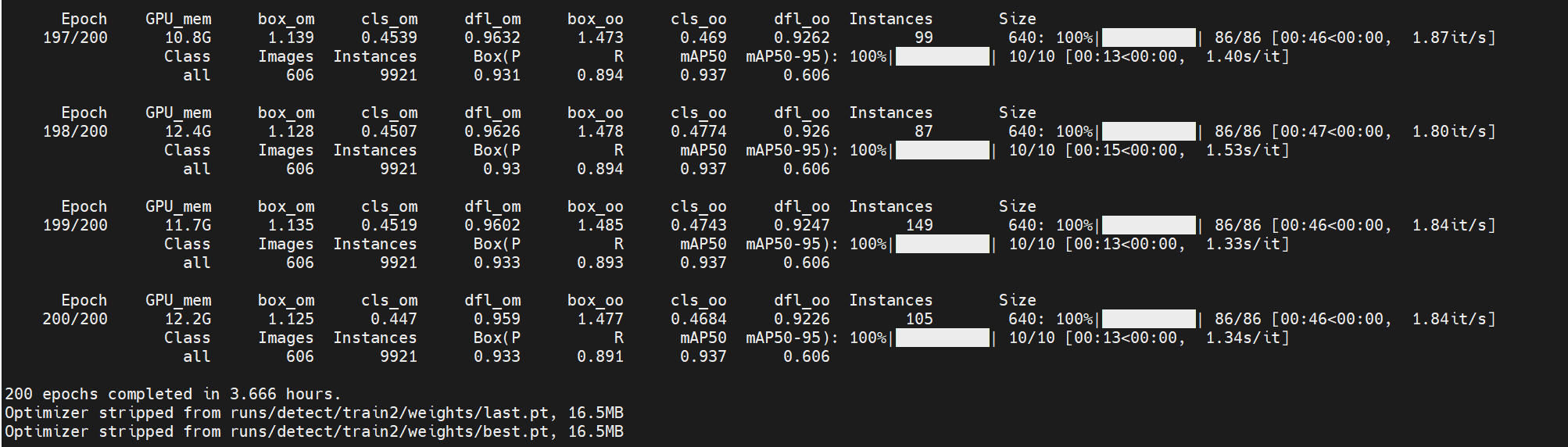

訓練完成后:

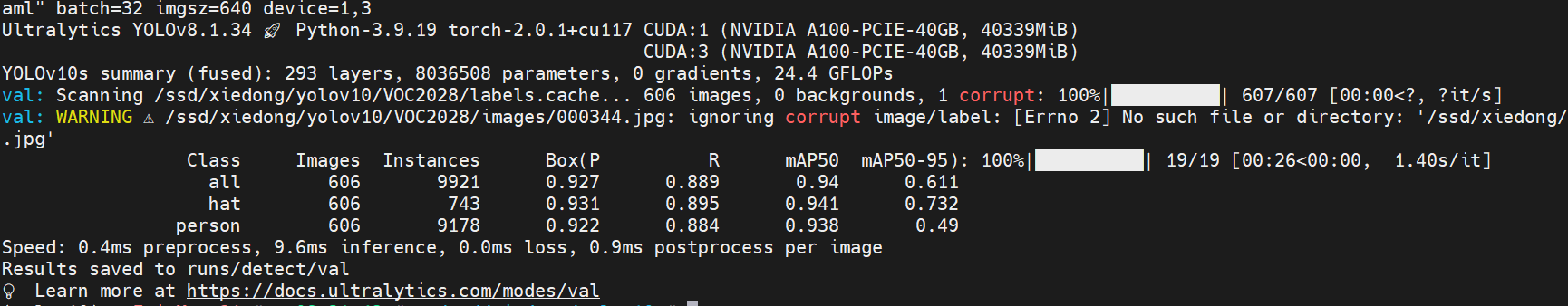
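To find the strongest epoch without reading the plots, the per-epoch metrics can be scanned programmatically. The sketch below assumes the run directory contains a `results.csv` with an `epoch` column and a `metrics/mAP50(B)` column, possibly padded with spaces; both the file location and the column names are assumptions to verify against your own run, and `best_epoch` is a hypothetical helper.

```python
import csv


def best_epoch(results_csv, metric="metrics/mAP50(B)"):
    """Return (epoch, value) for the row with the highest value of `metric`."""
    best = None
    with open(results_csv, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            # Header names and values may be padded with spaces, so strip both
            row = {k.strip(): v.strip() for k, v in row.items() if k and v is not None}
            value = float(row[metric])
            if best is None or value > best[1]:
                best = (int(float(row["epoch"])), value)
    return best
```

For the run validated in this article, the call would look like `best_epoch("/ssd/xiedong/yolov10/runs/detect/train2/results.csv")`, assuming the file sits beside the `weights` directory.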

V. Validation

    yolo val model="/ssd/xiedong/yolov10/runs/detect/train2/weights/best.pt" data="/ssd/xiedong/yolov10/voc2028x.yaml" batch=32 imgsz=640 device=1,3

The average mAP50 reaches 0.94, well above the baseline.

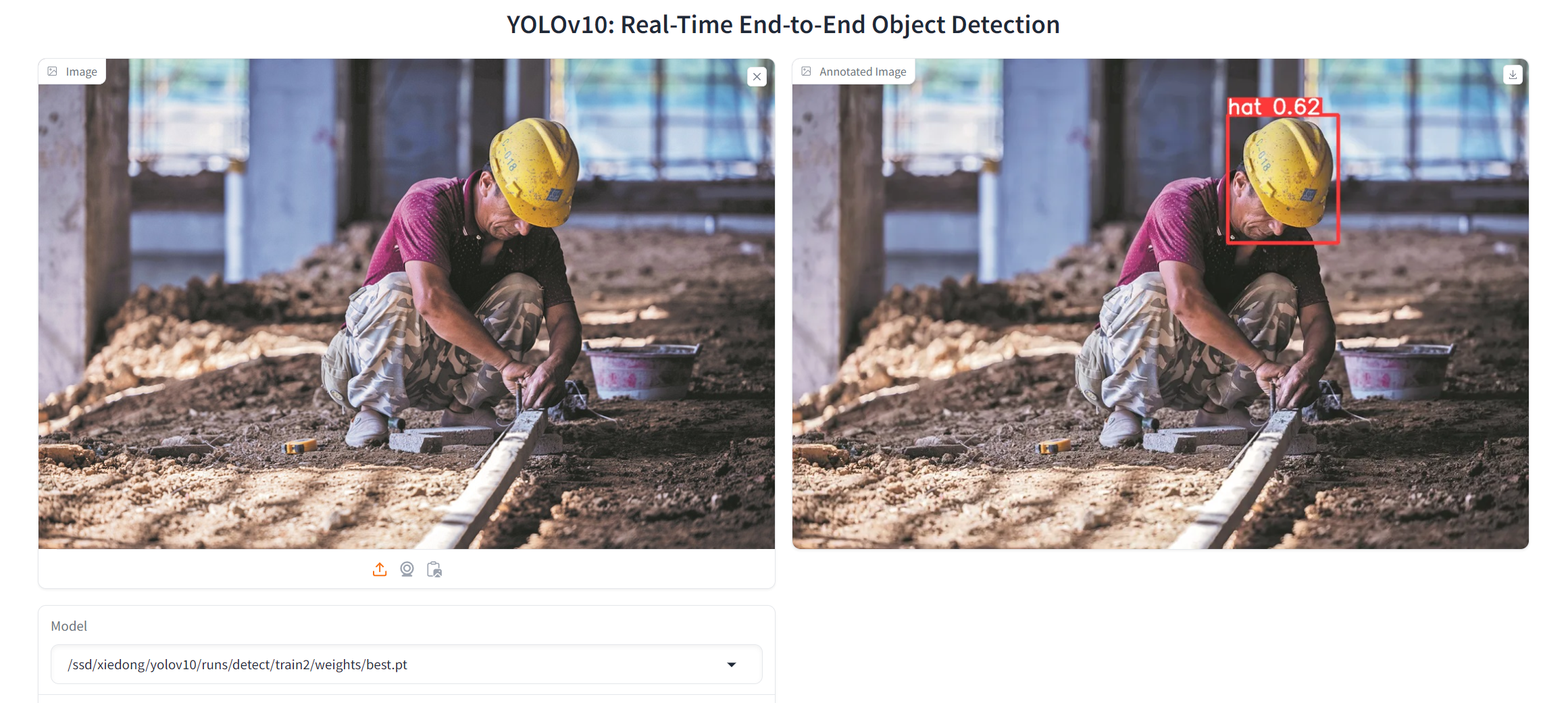

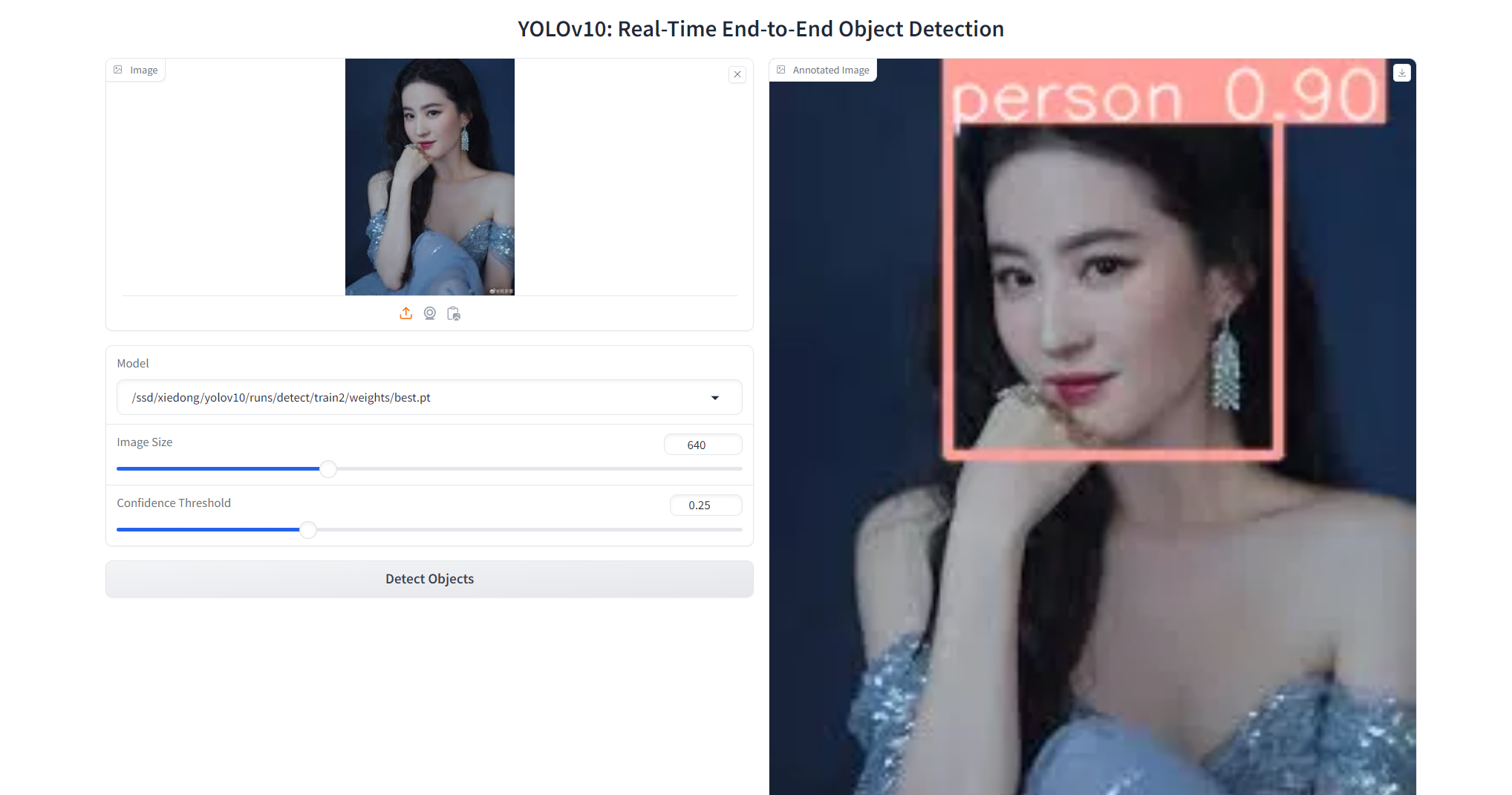
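For context on the metric: mAP50 counts a predicted box as a match when its intersection over union (IoU) with a ground-truth box of the same class is at least 0.5. A minimal sketch of the IoU computation, using made-up boxes in `(x1, y1, x2, y2)` pixel format:

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, or 0 if the boxes do not intersect
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


prediction = (10, 10, 110, 110)    # illustrative values only
ground_truth = (20, 20, 120, 120)
print(round(iou(prediction, ground_truth), 3))  # 0.681
print(iou(prediction, ground_truth) >= 0.5)     # True
```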

Prediction (n/s/m/b/l/x stands for the chosen model size):

    yolo predict model=yolov10n/s/m/b/l/x.pt

Export:

    # End-to-End ONNX
    yolo export model=yolov10n/s/m/b/l/x.pt format=onnx opset=13 simplify

    # Predict with ONNX
    yolo predict model=yolov10n/s/m/b/l/x.onnx

    # End-to-End TensorRT
    yolo export model=yolov10n/s/m/b/l/x.pt format=engine half=True simplify opset=13 workspace=16

    # Or
    trtexec --onnx=yolov10n/s/m/b/l/x.onnx --saveEngine=yolov10n/s/m/b/l/x.engine --fp16

    # Predict with TensorRT
    yolo predict model=yolov10n/s/m/b/l/x.engine
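If the exported model is run outside the `yolo` CLI, its raw output still has to be filtered by confidence. YOLOv10 is designed to work without NMS, so this step can be small. The sketch below assumes each output row has the layout `[x1, y1, x2, y2, score, class_id]`; that layout, the 0.25 threshold, and the helper name `filter_detections` are assumptions to check against your own exported model.

```python
CLASS_NAMES = {0: "hat", 1: "person"}  # class ids from voc2028x.yaml


def filter_detections(rows, conf_threshold=0.25):
    """Keep rows whose score passes the threshold and attach class names."""
    results = []
    for x1, y1, x2, y2, score, cls_id in rows:
        if score >= conf_threshold:
            results.append({
                "box": (x1, y1, x2, y2),
                "score": score,
                "label": CLASS_NAMES.get(int(cls_id), "unknown"),
            })
    return results


# Made-up rows standing in for one image's model output
example_rows = [
    [34.0, 50.0, 120.0, 160.0, 0.91, 0.0],
    [200.0, 80.0, 260.0, 150.0, 0.12, 1.0],
]
for det in filter_detections(example_rows):
    print(det["label"], det["score"], det["box"])
# hat 0.91 (34.0, 50.0, 120.0, 160.0)
```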

Demo:

    wget https://github.com/THU-MIG/yolov10/releases/download/v1.1/yolov10s.pt
    python app.py
    # Please visit http://127.0.0.1:7860

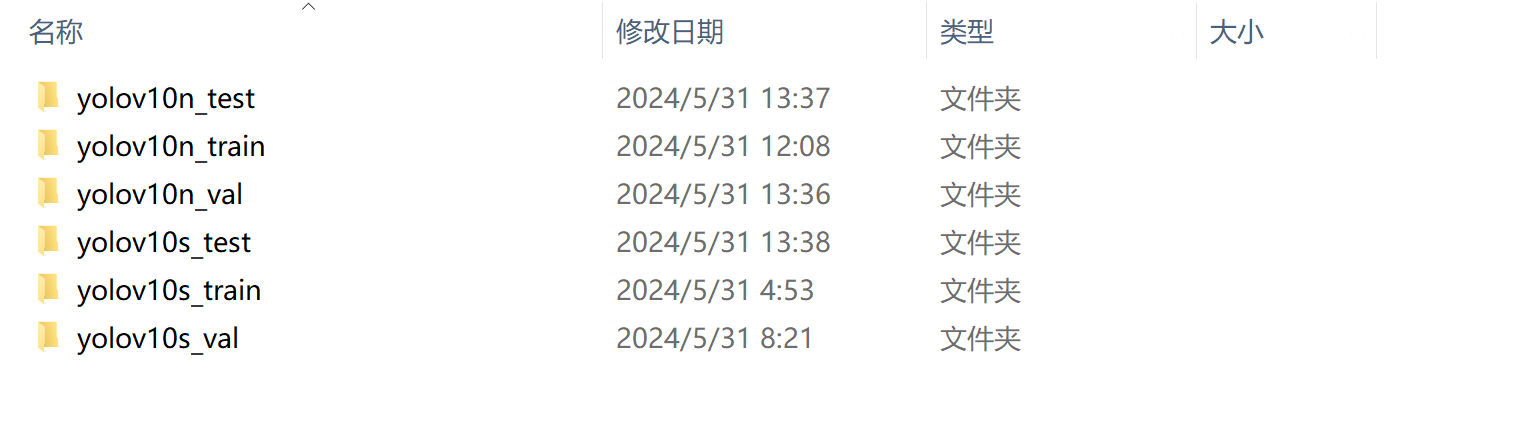

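VI. Data, model, and all post-training files

Everything from this YOLOv10 safety helmet detection run (data, model, and training outputs) can be downloaded here: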

https://docs.qq.com/sheet/DUEdqZ2lmbmR6UVdU?tab=BB08J2
