Daily Zen Saying

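"At the tips of the branches, the lotus-like blossoms put forth red calyxes in the mountains. By the ravine hut it is silent, no one is there; in profusion they open, and of themselves they fall." This is Wang Wei's poem "Xinyi Wu" (Magnolia Dell). It describes the magnolia blossoms in the deep valley of Xinyi Wu, opening and falling on their own, utterly plain, with neither the joy of life nor the sorrow of death. Without sentience yet possessing Buddha-nature, the magnolia receives its life from nature and returns to nature. It needs no praise, and it needs no one to shed tears of sympathy over its withering; it has brought the beauty of its life to its fullest. In the Buddhist view all beings are equal, without high or low, noble or base; every individual is free and self-sufficient, and its self-nature is naturally complete. The Sutra on Divining the Karmic Rewards of Good and Evil (Zhancha Shan'e Yebao Jing) says: "The Dharma body of the Tathagata is by its self-nature not empty; it has a true substance, endowed with immeasurable pure merits, naturally complete since beginningless time, neither cultivated nor created; it is likewise fully present in the bodies of all sentient beings, unchanging and undifferentiated, neither increasing nor decreasing." A person who can perceive this inner endowment, which neither increases nor decreases, can live a full and complete life in the world. We often feel that distant scenery is veiled in mist or dust and grows hazier the closer we get, and that the thoughts in our minds are hemmed in by range upon range of mountains, so that the more eager we are to escape the maze, the less we can tell which way to go. This is because we cling too much to thinking and neglect our self-nature.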

Why I Wrote This

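Kubernetes (k8s) deployment guides online are numerous and confusing, and k8s is harder to get started with than Docker. When I was learning k8s and trying to deploy a complete cluster, I went through all kinds of documents online and still could not get it to work. The deployment step alone makes many people back off and eventually give up on learning k8s, so I wrote this article to help other k8s learners deploy a working cluster.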

Note: steps that do not name a specific node must be run on all nodes.

Deployment mode: two masters, one worker

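Most deployment tutorials out there use one master node and two worker nodes; few describe a highly available layout like this one. I also started with one master and two workers, but in real use the master turned out to be not very stable and frequently brought the whole cluster down. This article therefore deploys two masters and one worker.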

| Server | Node name | k8s node role |
| --- | --- | --- |
| 192.168.11.85 | k8s-master | control-plane |
| 192.168.11.86 | k8s-master1 | control-plane |
| 192.168.11.87 | k8s-node | worker |

1. Initialize the machines

1.1 Disable SELinux

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

1.2 Set the hostname

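- Control-plane node (master); set the hostname of the other control-plane node (k8s-master1) with the same command:

# hostnamectl set-hostname k8s-master

- Worker nodes are named the same way: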

# hostnamectl set-hostname k8s-node

# hostnamectl set-hostname k8s-node1

# hostnamectl set-hostname k8s-node2

1.3 Disable swap

vim /etc/fstab   # comment out the swap mount by adding # at the start of the swap line:

# /dev/mapper/centos-swap swap swap defaults 0 0
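Editing /etc/fstab by hand is fine on one machine. As a scripted alternative for several nodes, here is a minimal sketch that comments out active swap entries with sed. The helper name comment_out_swap is hypothetical, and it assumes GNU sed and an fstab whose swap lines contain a whitespace-delimited swap field. If you want swap off immediately rather than after the reboot, swapoff -a does that.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# Assumes GNU sed (-i) and that swap lines contain a whitespace-delimited "swap" field.
comment_out_swap() {
  fstab="${1:-/etc/fstab}"
  [ -f "$fstab" ] || { echo "no such file: $fstab" >&2; return 1; }
  # Only lines that do not already start with '#' are touched,
  # so running the function twice leaves the file unchanged.
  sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/# /' "$fstab"
}
```

For example, as root: `comment_out_swap /etc/fstab && swapoff -a`. Non-swap lines such as the root filesystem entry are left as they are.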

# Reboot the server for the change to take effect

reboot now

1.4 Adjust kernel parameters

# modprobe br_netfilter

# echo "modprobe br_netfilter" >> /etc/profile

# cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

# sysctl -p /etc/sysctl.d/k8s.conf

The module is not loaded again after a reboot, so create the file /etc/rc.sysinit with the following script, which loads modules at boot:

# vim /etc/rc.sysinit

#!/bin/bash

for file in /etc/sysconfig/modules/*.modules; do

  [ -x "$file" ] && "$file"

done

Create a module file in the /etc/sysconfig/modules/ directory:

# vim /etc/sysconfig/modules/br_netfilter.modules

modprobe br_netfilter

Make it executable:

# chmod 755 /etc/sysconfig/modules/br_netfilter.modules

1.5 Disable the firewall

# systemctl stop firewalld; systemctl disable firewalld

1.6 Configure yum repositories

Back up the existing base repo files:

# mkdir /root/repo.bak

# cd /etc/yum.repos.d/

# mv * /root/repo.bak/

# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

# yum makecache

# yum -y install yum-utils

# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

1.7 Install the base packages

# yum -y install yum-utils openssh-clients device-mapper-persistent-data lvm2 wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate telnet

2. Install containerd

2.1 Install containerd

# yum install containerd.io-1.6.6 -y

2.2 Configure containerd

# mkdir -p /etc/containerd

Generate the default containerd configuration file:

# containerd config default > /etc/containerd/config.toml

Edit the configuration file:

# vim /etc/containerd/config.toml

Change SystemdCgroup = false to SystemdCgroup = true, and change sandbox_image = "k8s.gcr.io/pause:3.6" to sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.7".

2.3 Enable containerd at boot and start it

# systemctl enable containerd --now

2.4 Edit /etc/crictl.yaml

# cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

# systemctl restart containerd

2.5 Configure a registry mirror for containerd

In /etc/containerd/config.toml, change config_path = "" to the following directory:

# vim /etc/containerd/config.toml

config_path = "/etc/containerd/certs.d"

# mkdir /etc/containerd/certs.d/docker.io/ -p

# vim /etc/containerd/certs.d/docker.io/hosts.toml

[host."https://vh3bm52y.mirror.aliyuncs.com"]

  capabilities = ["pull"]

[host."https://registry.docker-cn.com"]

  capabilities = ["pull"]

# systemctl restart containerd

3. Install the Docker service

3.1 Install Docker

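Note: Docker must be installed as well. It does not conflict with containerd; it is installed so that images can be built from Dockerfiles.

# yum install docker-ce -y

# systemctl enable docker --now

3.2 Configure Docker registry mirrors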

# vim /etc/docker/daemon.json   # configure Docker registry mirrors

{"registry-mirrors":["https://vh3bm52y.mirror.aliyuncs.com","https://registry.docker-cn.com","https://docker.mirrors.ustc.edu.cn","https://dockerhub.azk8s.cn","http://hub-mirror.c.163.com"]}

# systemctl daemon-reload

# systemctl restart docker

4. Install the k8s components

4.1 Configure the yum repo for the k8s components (different k8s versions need different repos; 1.25 uses the Alibaba Cloud mirror)

- Version 1.25

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

EOF

For the repo addresses of other versions, see: Installing kubeadm | Kubernetes

- Version 1.30

# This overwrites any existing configuration in /etc/yum.repos.d/kubernetes.repo

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.30/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.30/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

- Version 1.29

# This overwrites any existing configuration in /etc/yum.repos.d/kubernetes.repo

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.29/rpm/repodata/repomd.xml.key

exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni

EOF

- Other versions are similar; only the version in the two URLs changes.
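Since only the minor version in the URLs differs between the 1.30 and 1.29 repo files, the file can be generated rather than copied. A minimal sketch, assuming the same pkgs.k8s.io URL layout holds for the version you choose; the helper name write_k8s_repo is hypothetical. It writes to the path you pass (defaulting to /etc/yum.repos.d/kubernetes.repo) and, like the commands above, overwrites that file.

```shell
# Hypothetical helper: write a kubernetes.repo for one minor version (e.g. v1.28),
# following the pkgs.k8s.io pattern used for v1.30 and v1.29 in this article.
write_k8s_repo() {
  minor="$1"
  dest="${2:-/etc/yum.repos.d/kubernetes.repo}"
  # Accept only the form v1.X or v1.XX; anything else is rejected before writing.
  case "$minor" in
    v1.[0-9]|v1.[0-9][0-9]) ;;
    *) echo "usage: write_k8s_repo v1.XX [dest]" >&2; return 1 ;;
  esac
  # Unquoted EOF so that ${minor} is expanded; '>' overwrites the destination.
  cat > "$dest" <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/${minor}/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/${minor}/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF
}
```

For example, `write_k8s_repo v1.28` as root, followed by the yum install command in 4.2. Check the official "Installing kubeadm" page that the version you pick is actually published.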

4.2 Install kubelet, kubeadm and kubectl

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

sudo systemctl enable --now kubelet

4.3 Set the container runtime endpoint

# crictl config runtime-endpoint unix:///run/containerd/containerd.sock

4.4 Initialize the k8s cluster with kubeadm (run on the control-plane node)

# vim kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3

...

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 192.168.11.85   # IP of the control-plane node

  bindPort: 6443

nodeRegistration:

  criSocket: unix:///run/containerd/containerd.sock   # containerd runtime endpoint

  imagePullPolicy: IfNotPresent

  name: k8s-master   # hostname of the control-plane node

  taints: null

---

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers   # pull images from the Alibaba Cloud registry

kind: ClusterConfiguration

kubernetesVersion: 1.30.0   # k8s version

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16   # Pod CIDR

  serviceSubnet: 10.96.0.0/12   # Service CIDR

scheduler: {}

# Append the following at the end of the file (include the --- lines when copying):

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

---

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

cgroupDriver: systemd

4.5 Edit /etc/sysconfig/kubelet

# vi /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"

4.6 Initialize k8s from kubeadm.yaml (run on the control-plane node)

kubeadm init --config=kubeadm.yaml --ignore-preflight-errors=SystemVerification

4.7 Configure kubectl (copying admin.conf into kubectl's config file authorizes kubectl, so it can use this certificate to manage the k8s cluster) (run on the control-plane node)

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

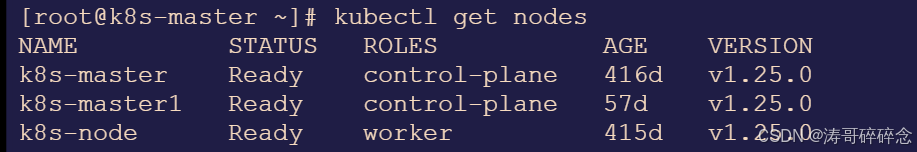

kubectl get nodes
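Right after kubeadm init, the node normally reports NotReady because no CNI network plugin is installed yet; that is expected until Calico is applied in the next step. To list nodes that are not Ready (now, or later as nodes join), a small filter over the kubectl get nodes table can help. This is a sketch: the name not_ready_nodes is hypothetical, and it assumes the default table layout with STATUS in the second column. As a built-in alternative, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` should block until every node is Ready.

```shell
# Hypothetical helper: print the names of nodes whose STATUS is not exactly "Ready".
# Reads `kubectl get nodes` table output on stdin; assumes STATUS is column 2.
# Note: "Ready,SchedulingDisabled" is also reported, since it is not plain "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: `kubectl get nodes | not_ready_nodes`; empty output means every node is Ready.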

5. Install the Kubernetes network plugin Calico (run on the control-plane node)

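Upload calico.yaml to the control-plane node (k8s-master) and install the Calico network plugin from the YAML file (it takes a few minutes for the nodes to become Ready):

kubectl apply -f calico.yaml

Note: the manifest can be downloaded online from https://docs.projectcalico.org/manifests/calico.yaml

6. Add the worker node once the control-plane node is up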

Note: first run all the steps that do not name a specific node on the worker node, and only then continue with the steps below.

6.1 Make sure every step above has succeeded on the worker node, apart from the control-plane-only steps

On k8s-master, print the join command:

[root@k8s-master ~]# kubeadm token create --print-join-command

kubeadm join 192.168.11.85:6443 --token ol7rnk.473w56z16o24u3qs --discovery-token-ca-cert-hash sha256:98d33a741dd35172891d54ea625beb552acf6e75e66edf47e64f0f78365351c6

Join k8s-node to the cluster:

[root@k8s-node ~]# kubeadm join 192.168.11.85:6443 --token ol7rnk.473w56z16o24u3qs --discovery-token-ca-cert-hash sha256:98d33a741dd35172891d54ea625beb552acf6e75e66edf47e64f0f78365351c6

6.2 Optionally, change the node's ROLES to worker as follows

[root@k8s-master ~]# kubectl label node k8s-node node-role.kubernetes.io/worker=worker

6.3 Check the nodes

kubectl get nodes   # run on the master to check the cluster nodes
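With more than one worker, the labeling from 6.2 can be derived from the node list instead of typed per node. A sketch, assuming the default kubectl get nodes columns (ROLES in column 3, shown as `<none>` for unlabeled nodes); the name worker_label_cmds is hypothetical, and it only prints the commands so you can review them before running anything.

```shell
# Hypothetical helper: for every node whose ROLES column is "<none>", print the
# kubectl label command from step 6.2. Reads `kubectl get nodes` output on stdin.
worker_label_cmds() {
  awk 'NR > 1 && $3 == "<none>" {
    printf "kubectl label node %s node-role.kubernetes.io/worker=worker\n", $1
  }'
}
```

Usage: `kubectl get nodes | worker_label_cmds`; once the printed commands look right, pipe them to `sh` to apply them.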

7. Add the k8s-master1 control-plane node

Note: first run all the steps that do not name a specific node on k8s-master1, and only then continue with the steps below.

7.1 On the current (only) master node, run the following command to get the certificate key

# kubeadm init phase upload-certs --upload-certs

I1109 14:34:00.836965 5988 version.go:255] remote version is much newer: v1.25.3; falling back to: stable-1.22

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

ecf2abbfdf3a7bc45ddb2de75152ec12889971098d69939b98e4451b53aa3033

7.2 On the current master node, run the following command to get the token

On k8s-master, print the join command:

[root@k8s-master ~]# kubeadm token create --print-join-command

kubeadm join 192.168.11.85:6443 --token ol7rnk.473w56z16o24u3qs --discovery-token-ca-cert-hash sha256:98d33a741dd35172891d54ea625beb552acf6e75e66edf47e64f0f78365351c6

7.3 Combine the key and the token

kubeadm join 192.168.11.85:6443 --token xxxxxxxxx --discovery-token-ca-cert-hash xxxxxxx --control-plane --certificate-key xxxxxxx

Notes:

- Do not use --experimental-control-plane; it causes an error.

- You must add --control-plane --certificate-key; otherwise the node joins as a worker node instead of a master.

- The node must not already have a cluster deployed on it when it joins; if it does, run kubeadm reset first and then join.
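Steps 7.1 to 7.3 amount to appending two flags to the worker join command. A minimal sketch of that string assembly; the name build_cp_join is hypothetical, and it assumes the certificate key is the last line printed by `kubeadm init phase upload-certs --upload-certs`, as in the 7.1 output. Recent kubeadm versions should also accept `kubeadm token create --print-join-command --certificate-key <key>` to print the control-plane command directly; check `kubeadm token create --help` on your version.

```shell
# Hypothetical helper: turn a worker join command into a control-plane join command.
#   $1: output of `kubeadm token create --print-join-command` (run on k8s-master)
#   $2: certificate key from `kubeadm init phase upload-certs --upload-certs`
build_cp_join() {
  join_cmd="$1"
  cert_key="$2"
  case "$join_cmd" in
    "kubeadm join "*) ;;
    *) echo "expected a 'kubeadm join ...' command" >&2; return 1 ;;
  esac
  [ -n "$cert_key" ] || { echo "missing certificate key" >&2; return 1; }
  # Without these two flags the node would join as a worker.
  printf '%s --control-plane --certificate-key %s\n' "$join_cmd" "$cert_key"
}
```

On k8s-master, for example: `key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)` and then `build_cp_join "$(kubeadm token create --print-join-command)" "$key"`; copy the printed command to k8s-master1.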

7.4 Run the join command assembled in 7.3 on k8s-master1; on success it prints the following:

This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.

* The Kubelet was informed of the new secure connection details.

* Control plane (master) label and taint were applied to the new node.

* The Kubernetes control plane instances scaled up.

* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

7.5 Troubleshooting

7.5.1 The first attempt to join the cluster fails with the following error:

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

error execution phase preflight:

One or more conditions for hosting a new control plane instance is not satisfied.

unable to add a new control plane instance a cluster that doesn't have a stable controlPlaneEndpoint address

Please ensure that:

* The cluster has a stable controlPlaneEndpoint address.

* The certificates that must be shared among control plane instances are provided.

To see the stack trace of this error execute with --v=5 or higher

7.5.2 Solution

Inspect the kubeadm-config ConfigMap:

kubectl -n kube-system get cm kubeadm-config -oyaml

It turns out there is no controlPlaneEndpoint.
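The same check can be scripted. A sketch that reads the ConfigMap YAML on stdin and succeeds only if a non-empty controlPlaneEndpoint is set; the name has_cp_endpoint is hypothetical, and it does a simple line match rather than real YAML parsing.

```shell
# Hypothetical helper: exit 0 if the kubeadm-config YAML on stdin sets a
# non-empty controlPlaneEndpoint, exit 1 otherwise. Line-based, not a YAML parser.
has_cp_endpoint() {
  awk '$1 == "controlPlaneEndpoint:" && $2 != "" && $2 != "\"\"" { found = 1 }
       END { exit found ? 0 : 1 }'
}
```

Usage: `kubectl -n kube-system get cm kubeadm-config -o yaml | has_cp_endpoint || echo "controlPlaneEndpoint is missing"`.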

Add controlPlaneEndpoint:

kubectl -n kube-system edit cm kubeadm-config

It goes roughly here:

kind: ClusterConfiguration

kubernetesVersion: v1.25.3

controlPlaneEndpoint: 192.168.11.86   # IP address of the node currently being added as a master
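If you would rather not edit the ConfigMap interactively, the same change can be made in a pipeline. A sketch, assuming the ClusterConfiguration text in the ConfigMap contains a kubernetesVersion line (as in the snippet above) and has no controlPlaneEndpoint yet; the name add_cp_endpoint is hypothetical. It inserts the new key right after kubernetesVersion with the same indentation.

```shell
# Hypothetical helper: copy YAML from stdin to stdout, adding
# "controlPlaneEndpoint: <value>" after the kubernetesVersion line,
# with the same indentation. Assumes the key is not already present.
add_cp_endpoint() {
  [ -n "$1" ] || { echo "usage: add_cp_endpoint <address[:port]>" >&2; return 1; }
  awk -v ep="$1" '
    { print }
    /^[ \t]*kubernetesVersion:/ {
      match($0, /^[ \t]*/)
      printf "%scontrolPlaneEndpoint: %s\n", substr($0, 1, RLENGTH), ep
    }'
}
```

Usage, for example: `kubectl -n kube-system get cm kubeadm-config -o yaml | add_cp_endpoint 192.168.11.86 | kubectl replace -f -`. Review the piped output first (drop the final `kubectl replace` stage) before writing it back.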

Then run the kubeadm join command again on the node being added as a master (k8s-master1).

7.6 Once it joins successfully, you can see 2 master nodes and 1 worker node
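To confirm the final layout without reading the table by eye, the ROLES column can be tallied. A sketch, assuming the default kubectl get nodes columns (ROLES in column 3); the name count_roles is hypothetical, and any node whose roles do not include control-plane is counted as a worker.

```shell
# Hypothetical helper: summarise `kubectl get nodes` output (stdin) as
# "control-plane=N worker=M". Nodes without "control-plane" in ROLES count as workers.
count_roles() {
  awk 'NR > 1 { if ($3 ~ /control-plane/) cp++; else w++ }
       END { printf "control-plane=%d worker=%d\n", cp, w }'
}
```

Usage: `kubectl get nodes | count_roles`; for the cluster in this article it should print `control-plane=2 worker=1`.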

Afterword

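Deploying k8s really is complicated: different OS versions and dependency versions can all cause problems. I deployed on the operating system described here. If you run into strange problems during installation, feel free to leave a comment below and we can work through them together. The article may also contain mistakes; please point them out, and many thanks.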
