Garbage Classification Based on MobileNetV2

Introduction to the MobileNetV2 Model

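MobileNet is a lightweight CNN proposed by a Google team in 2017 for mobile, embedded, and IoT devices. Compared with traditional convolutional neural networks, MobileNet uses depthwise separable convolutions, which greatly reduce the number of parameters and the amount of computation at the cost of a small drop in accuracy. It also introduces a width multiplier α and a resolution multiplier ρ so that the model can be adapted to the needs of different application scenarios.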
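The sketch below makes the savings concrete by counting weights and multiply-adds for a single layer. It uses the cost formulas from the MobileNet paper: kernel size k, M input channels, N output channels, an F x F output map, stride 1 and same padding, bias ignored. The function names and the example layer sizes are illustrative assumptions. The width and resolution multipliers are applied by simple truncation here, not by the `_make_divisible` rounding that the network code uses later.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights of a k x k depthwise conv (one filter per input channel) plus a 1x1 pointwise conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


def mult_adds(params, feature_size):
    """Multiply-adds for a stride-1, same-padded layer: each weight is used once per output pixel."""
    return params * feature_size * feature_size


def scaled_separable_mult_adds(k, c_in, c_out, feature_size, alpha=1.0, rho=1.0):
    """Cost of a depthwise separable layer after applying width multiplier alpha and resolution multiplier rho."""
    m, n = int(alpha * c_in), int(alpha * c_out)  # thinner layers
    f = int(rho * feature_size)                   # lower input resolution
    return mult_adds(depthwise_separable_params(k, m, n), f)


if __name__ == "__main__":
    std = standard_conv_params(3, 32, 64)        # 18432
    sep = depthwise_separable_params(3, 32, 64)  # 2336
    print(std, sep, sep / std)                   # ratio equals 1/N + 1/k^2 = 1/64 + 1/9
    print(scaled_separable_mult_adds(3, 32, 64, 112, alpha=0.5, rho=0.5))
```

For a 3x3 layer the ratio is roughly 1/9, which is where most of MobileNet's reduction in computation comes from.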

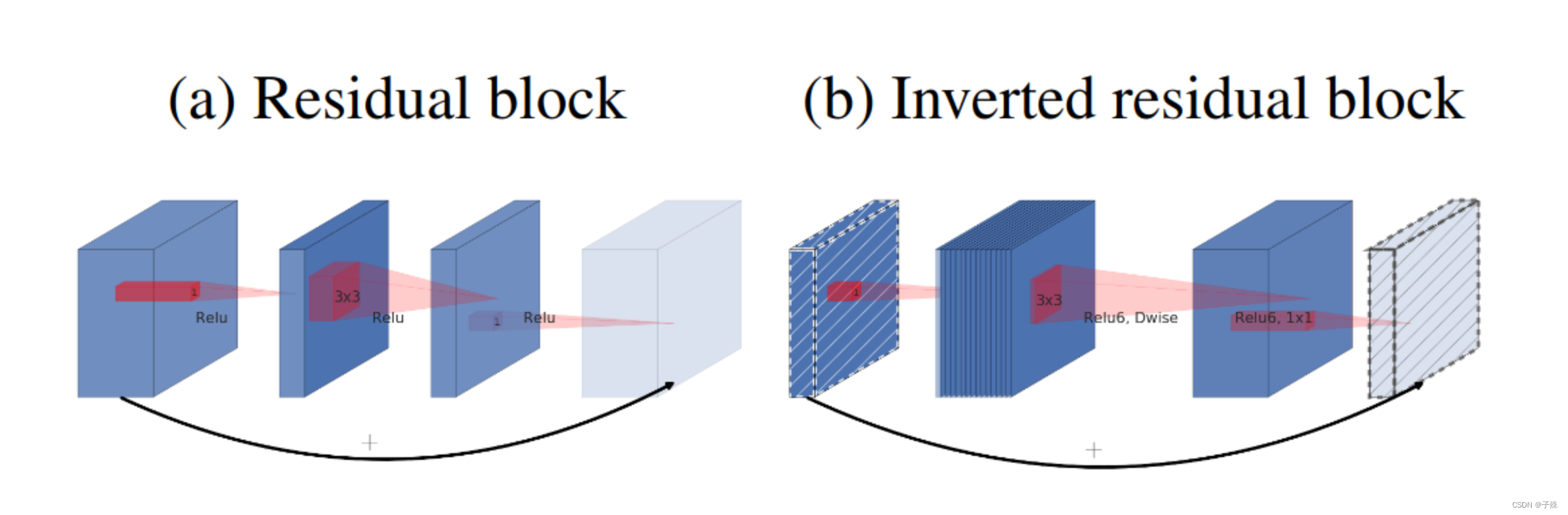

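In MobileNet, the ReLU activation loses a large amount of information when it processes low-dimensional features. MobileNetV2 therefore builds the network from inverted residual blocks and linear bottlenecks. This improves accuracy and also makes the optimized model smaller.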

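In the figure, the inverted residual block first uses a 1x1 convolution to expand the number of channels, then a 3x3 depthwise convolution, and finally a 1x1 convolution to reduce the number of channels again. This final 1x1 projection has no ReLU, which is the linear bottleneck. The order is the reverse of a standard residual block, which first uses a 1x1 convolution to reduce the number of channels, then a 3x3 convolution, and finally a 1x1 convolution to expand the channels.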
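The channel and spatial shapes through one inverted residual block can be traced without any framework. The sketch below mirrors the structure of the `InvertedResidual` class defined later: a 1x1 expansion, a 3x3 depthwise convolution with padding 1, a linear 1x1 projection, and a shortcut only when the stride is 1 and the input and output channels match. The function names and example sizes are assumptions chosen for illustration.

```python
def conv_out_size(size, kernel=3, stride=1, padding=1):
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1


def inverted_residual_trace(c_in, c_out, expand_ratio, stride, height, width):
    """Return the (layer, channels, height, width) sequence of one block and whether it adds a shortcut."""
    hidden = int(round(c_in * expand_ratio))
    trace = []
    if expand_ratio != 1:
        # 1x1 pointwise convolution: expand channels, spatial size unchanged
        trace.append(("1x1 expand + BN + ReLU6", hidden, height, width))
    # 3x3 depthwise convolution: channels unchanged, stride 2 roughly halves the spatial size
    h, w = conv_out_size(height, stride=stride), conv_out_size(width, stride=stride)
    trace.append(("3x3 depthwise + BN + ReLU6", hidden, h, w))
    # 1x1 projection without ReLU: the linear bottleneck
    trace.append(("1x1 project + BN (linear)", c_out, h, w))
    use_shortcut = stride == 1 and c_in == c_out
    return trace, use_shortcut


if __name__ == "__main__":
    layers, shortcut = inverted_residual_trace(24, 24, 6, 1, 56, 56)
    for layer in layers:
        print(layer)
    print("shortcut:", shortcut)
```

The block is thin (24 channels) at its input and output and wide (144 channels) inside, which is the reverse of a classic bottleneck residual block.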

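- Note: for details, see the MobileNetV2 paper ("MobileNetV2: Inverted Residuals and Linear Bottlenecks", Sandler et al., CVPR 2018).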

Data Processing

Data Preparation

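By default, the MobileNetV2 code manages the dataset in ImageFolder format: the images of each class are stored in a separate folder. The dataset structure is as follows: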

└─ImageFolder
    ├─train
    │     class1Folder
    │     ......
    └─test
          class1Folder
          ......
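Before building the pipeline, it can help to confirm that the folders match this layout. The helper below is a hypothetical convenience and is not part of the tutorial code. It counts the image files in each class folder of one split, assuming the images use one of the listed extensions. `demo()` builds a throwaway tree in a temporary directory to show the result.

```python
import tempfile
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}  # assumption: the extensions to count


def count_images_per_class(split_dir):
    """Return {class_folder_name: number_of_images} for one split such as train/ or test/."""
    counts = {}
    for class_dir in sorted(p for p in Path(split_dir).iterdir() if p.is_dir()):
        counts[class_dir.name] = sum(
            1 for f in class_dir.iterdir() if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
        )
    return counts


def demo():
    """Create a tiny ImageFolder tree with empty placeholder files and count both splits."""
    with tempfile.TemporaryDirectory() as root:
        for rel in ["train/Cardboard/0001.jpg", "train/Cardboard/0002.jpg",
                    "train/Battery/0001.png", "test/Cardboard/0091.jpg"]:
            path = Path(root, rel)
            path.parent.mkdir(parents=True, exist_ok=True)
            path.touch()
        return count_images_per_class(Path(root, "train")), count_images_per_class(Path(root, "test"))


if __name__ == "__main__":
    print(demo())
```

Running `count_images_per_class("./data_en/train")` after the download below should list one entry per class folder.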

from download import download

# Download the data_en dataset
url = "https://ascend-professional-construction-dataset.obs.cn-north-4.myhuaweicloud.com:443/MindStudio-pc/data_en.zip"
path = download(url, "./", kind="zip", replace=True)

# Download the pretrained weights file
url = "https://ascend-professional-construction-dataset.obs.cn-north-4.myhuaweicloud.com:443/ComputerVision/mobilenetV2-200_1067.zip"
path = download(url, "./", kind="zip", replace=True)

Data Loading

import os

# Set the GLOG variables before importing mindspore so that MindSpore's logger picks them up.
os.environ['GLOG_v'] = '3'  # Log level: 3 (ERROR), 2 (WARNING), 1 (INFO), 0 (DEBUG)
os.environ['GLOG_logtostderr'] = '0'  # 0: write logs to files, 1: print logs to the screen
os.environ['GLOG_log_dir'] = '../../log'  # Log directory
os.environ['GLOG_stderrthreshold'] = '2'  # When logging to files, messages at or above this level are also printed to the screen: 3 (ERROR), 2 (WARNING), 1 (INFO), 0 (DEBUG)

import math
import random

import numpy as np
from matplotlib import pyplot as plt
from easydict import EasyDict
from PIL import Image

import mindspore as ms
import mindspore.nn as nn
import mindspore.common.dtype as mstype
import mindspore.dataset as de
import mindspore.dataset.vision as C
import mindspore.dataset.transforms as C2
from mindspore import ops as P
from mindspore import set_context, Tensor, load_checkpoint, save_checkpoint, export
from mindspore.train import Model, Callback, LossMonitor, ModelCheckpoint, CheckpointConfig

# Run in graph mode on the CPU; set device_target="Ascend" (or "GPU") to use an accelerator.
set_context(mode=ms.GRAPH_MODE, device_target="CPU", device_id=0)

# Labels of the garbage classification dataset and the dictionaries used for label mapping.
garbage_classes = {
    '干垃圾': ['貝殼', '打火機', '舊鏡子', '掃把', '陶瓷碗', '牙刷', '一次性筷子', '臟污衣服'],  # dry waste
    '可回收物': ['報紙', '玻璃制品', '籃球', '塑料瓶', '硬紙板', '玻璃瓶', '金屬制品', '帽子', '易拉罐', '紙張'],  # recyclables
    '濕垃圾': ['菜葉', '橙皮', '蛋殼', '香蕉皮'],  # wet waste
    '有害垃圾': ['電池', '藥片膠囊', '熒光燈', '油漆桶']  # hazardous waste
}

# Chinese class names, in the same order as class_en
class_cn = ['貝殼', '打火機', '舊鏡子', '掃把', '陶瓷碗', '牙刷', '一次性筷子', '臟污衣服',
            '報紙', '玻璃制品', '籃球', '塑料瓶', '硬紙板', '玻璃瓶', '金屬制品', '帽子', '易拉罐', '紙張',
            '菜葉', '橙皮', '蛋殼', '香蕉皮',
            '電池', '藥片膠囊', '熒光燈', '油漆桶']

class_en = ['Seashell', 'Lighter', 'Old Mirror', 'Broom', 'Ceramic Bowl', 'Toothbrush',
            'Disposable Chopsticks', 'Dirty Cloth',
            'Newspaper', 'Glassware', 'Basketball', 'Plastic Bottle', 'Cardboard',
            'Glass Bottle', 'Metalware', 'Hats', 'Cans', 'Paper',
            'Vegetable Leaf', 'Orange Peel', 'Eggshell', 'Banana Peel',
            'Battery', 'Tablet capsules', 'Fluorescent lamp', 'Paint bucket']

index_en = {'Seashell': 0, 'Lighter': 1, 'Old Mirror': 2, 'Broom': 3, 'Ceramic Bowl': 4, 'Toothbrush': 5,
            'Disposable Chopsticks': 6, 'Dirty Cloth': 7,
            'Newspaper': 8, 'Glassware': 9, 'Basketball': 10, 'Plastic Bottle': 11, 'Cardboard': 12,
            'Glass Bottle': 13, 'Metalware': 14, 'Hats': 15, 'Cans': 16, 'Paper': 17,
            'Vegetable Leaf': 18, 'Orange Peel': 19, 'Eggshell': 20, 'Banana Peel': 21,
            'Battery': 22, 'Tablet capsules': 23, 'Fluorescent lamp': 24, 'Paint bucket': 25}
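`index_en` must give each English class name the same index as its position in `class_en`. The 26 fine-grained classes are also grouped, in order, into the four categories of `garbage_classes`: 8 dry-waste, 10 recyclable, 4 wet-waste, and 4 hazardous items. The sketch below is an illustration, not part of the tutorial. It derives the index dictionary from the list instead of typing it by hand, and builds a lookup from a predicted class to its waste category. The English category names are our own translations.

```python
CLASS_EN = ['Seashell', 'Lighter', 'Old Mirror', 'Broom', 'Ceramic Bowl', 'Toothbrush',
            'Disposable Chopsticks', 'Dirty Cloth', 'Newspaper', 'Glassware', 'Basketball',
            'Plastic Bottle', 'Cardboard', 'Glass Bottle', 'Metalware', 'Hats', 'Cans', 'Paper',
            'Vegetable Leaf', 'Orange Peel', 'Eggshell', 'Banana Peel',
            'Battery', 'Tablet capsules', 'Fluorescent lamp', 'Paint bucket']

# Category sizes in the same order as the keys of garbage_classes
CATEGORY_SIZES = [("Dry waste", 8), ("Recyclables", 10), ("Wet waste", 4), ("Hazardous waste", 4)]


def build_index(names):
    """Map each class name to its position in the list (the same mapping as index_en)."""
    return {name: i for i, name in enumerate(names)}


def build_category_lookup(names, category_sizes):
    """Map each fine-grained class name to its waste category, consuming names in order."""
    lookup, start = {}, 0
    for category, size in category_sizes:
        for name in names[start:start + size]:
            lookup[name] = category
        start += size
    if start != len(names):
        raise ValueError("category sizes do not add up to the number of classes")
    return lookup


derived_index = build_index(CLASS_EN)
category_of = build_category_lookup(CLASS_EN, CATEGORY_SIZES)
```

With these tables, a prediction such as `class_en[pred]` can be reported together with `category_of[class_en[pred]]`.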

# Training hyperparameters
config = EasyDict({
    "num_classes": 26,
    "image_height": 224,
    "image_width": 224,
    # "data_split": [0.9, 0.1],
    "backbone_out_channels": 1280,
    "batch_size": 16,
    "eval_batch_size": 8,
    "epochs": 10,
    "lr_max": 0.05,
    "momentum": 0.9,
    "weight_decay": 1e-4,
    "save_ckpt_epochs": 1,
    "dataset_path": "./data_en",
    "class_index": index_en,
    "pretrained_ckpt": "./mobilenetV2-200_1067.ckpt"
})

Data Preprocessing

Use ImageFolderDataset to read the garbage classification dataset, then apply the preprocessing pipeline to it.

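When the dataset is read, specify whether the training set or the test set is loaded. For the training set, RandomCropDecodeResize, RandomHorizontalFlip, and RandomColorAdjust are applied in order to increase the diversity of the training data. For the test set, Decode, Resize, and CenterCrop are applied. In both cases the images are then normalized and converted from HWC to CHW channel order, and the labels are cast to int32. The training set is additionally shuffled, and each split is batched before the processed dataset is returned.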
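The evaluation branch works out to a 256 x 256 resize (224 / 0.875), a 224 x 224 center crop, per-channel normalization, and a reordering from HWC to CHW. The normalization uses the ImageNet mean and standard deviation scaled to the 0-255 range. The dependency-free sketch below reproduces that arithmetic on plain Python lists to make the numbers visible. It is an illustration only, the helper names are assumptions, and MindSpore's own operators may round odd crop offsets differently.

```python
MEAN = [0.485 * 255, 0.456 * 255, 0.406 * 255]  # same values as normalize_op
STD = [0.229 * 255, 0.224 * 255, 0.225 * 255]


def eval_resize_size(image_size=224, crop_ratio=0.875):
    """Side length used by the eval Resize before center cropping."""
    return int(image_size / crop_ratio)


def center_crop_box(height, width, crop):
    """(top, left, bottom, right) of a centered crop x crop window."""
    top = (height - crop) // 2
    left = (width - crop) // 2
    return top, left, top + crop, left + crop


def normalize_pixel(rgb):
    """Normalize one RGB pixel given in the 0-255 range."""
    return [(v - m) / s for v, m, s in zip(rgb, MEAN, STD)]


def hwc_to_chw(image):
    """Reorder a nested list image[h][w][c] into out[c][h][w]."""
    h, w, c = len(image), len(image[0]), len(image[0][0])
    return [[[image[y][x][ch] for x in range(w)] for y in range(h)] for ch in range(c)]


if __name__ == "__main__":
    size = eval_resize_size()
    print(size, center_crop_box(size, size, 224))
    print(normalize_pixel([255, 255, 255]))
```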

def create_dataset(dataset_path, config, training=True, buffer_size=1000):
    """Create a train or eval dataset.

    Args:
        dataset_path (string): The path of the dataset.
        config (struct): The train and eval configuration.
        training (bool): Read the train split if True, otherwise the test split. Default: True.
        buffer_size (int): Shuffle buffer size for the train split. Default: 1000.

    Returns:
        The batched train or eval dataset.
    """
    data_path = os.path.join(dataset_path, 'train' if training else 'test')
    ds = de.ImageFolderDataset(data_path, num_parallel_workers=4, class_indexing=config.class_index)
    resize_height = config.image_height
    resize_width = config.image_width
    normalize_op = C.Normalize(mean=[0.485*255, 0.456*255, 0.406*255], std=[0.229*255, 0.224*255, 0.225*255])
    change_swap_op = C.HWC2CHW()
    type_cast_op = C2.TypeCast(mstype.int32)

    if training:
        # Data augmentation for the training split
        crop_decode_resize = C.RandomCropDecodeResize(resize_height, scale=(0.08, 1.0), ratio=(0.75, 1.333))
        horizontal_flip_op = C.RandomHorizontalFlip(prob=0.5)
        color_adjust = C.RandomColorAdjust(brightness=0.4, contrast=0.4, saturation=0.4)
        train_trans = [crop_decode_resize, horizontal_flip_op, color_adjust, normalize_op, change_swap_op]
        train_ds = ds.map(input_columns="image", operations=train_trans, num_parallel_workers=4)
        train_ds = train_ds.map(input_columns="label", operations=type_cast_op, num_parallel_workers=4)
        train_ds = train_ds.shuffle(buffer_size=buffer_size)
        ds = train_ds.batch(config.batch_size, drop_remainder=True)
    else:
        decode_op = C.Decode()
        # Resize to 256 x 256 (224 / 0.875), then center-crop 224 x 224
        resize_op = C.Resize((int(resize_width/0.875), int(resize_width/0.875)))
        center_crop = C.CenterCrop(resize_width)
        eval_trans = [decode_op, resize_op, center_crop, normalize_op, change_swap_op]
        eval_ds = ds.map(input_columns="image", operations=eval_trans, num_parallel_workers=4)
        eval_ds = eval_ds.map(input_columns="label", operations=type_cast_op, num_parallel_workers=4)
        ds = eval_ds.batch(config.eval_batch_size, drop_remainder=True)

    return ds

Display some of the processed data:

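ds = create_dataset(dataset_path=config.dataset_path, config=config, training=False)
print(ds.get_dataset_size())  # number of batches in the test split

data = next(ds.create_dict_iterator(output_numpy=True))
images = data['image']
labels = data['label']

# Undo the normalization so that imshow receives RGB values in [0, 1]
mean = np.array([0.485, 0.456, 0.406])
std = np.array([0.229, 0.224, 0.225])
for i in range(1, 5):
    plt.subplot(2, 2, i)
    img = np.transpose(images[i], (1, 2, 0)) * std + mean
    plt.imshow(np.clip(img, 0, 1))
    plt.title('label: %s' % class_en[labels[i]])
    plt.xticks([])
plt.show()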

Building the MobileNetV2 Model

__all__ = ['MobileNetV2', 'MobileNetV2Backbone', 'MobileNetV2Head', 'mobilenet_v2']


def _make_divisible(v, divisor, min_value=None):
    """Round v to the nearest multiple of divisor, without going below 90% of v."""
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    # Make sure that rounding down does not reduce the channel count by more than 10%.
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


class GlobalAvgPooling(nn.Cell):
    """Global average pooling over the spatial dimensions (H, W).

    Returns:
        Tensor of shape (N, C).

    Examples:
        >>> GlobalAvgPooling()
    """

    def __init__(self):
        super(GlobalAvgPooling, self).__init__()

    def construct(self, x):
        x = P.mean(x, (2, 3))
        return x


class ConvBNReLU(nn.Cell):
    """Convolution or depthwise convolution fused with BatchNorm and ReLU6.

    Args:
        in_planes (int): Input channels.
        out_planes (int): Output channels.
        kernel_size (int): Kernel size. Default: 3.
        stride (int): Stride of the convolution. Default: 1.
        groups (int): 1 for a standard convolution; any other value builds a depthwise
            convolution with one group per input channel. Default: 1.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> ConvBNReLU(16, 256, kernel_size=1, stride=1, groups=1)
    """

    def __init__(self, in_planes, out_planes, kernel_size=3, stride=1, groups=1):
        super(ConvBNReLU, self).__init__()
        padding = (kernel_size - 1) // 2
        in_channels = in_planes
        out_channels = out_planes
        if groups == 1:
            conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, pad_mode='pad', padding=padding)
        else:
            # Depthwise convolution: one filter per input channel
            out_channels = in_planes
            conv = nn.Conv2d(in_channels, out_channels, kernel_size, stride, pad_mode='pad',
                             padding=padding, group=in_channels)
        layers = [conv, nn.BatchNorm2d(out_planes), nn.ReLU6()]
        self.features = nn.SequentialCell(layers)

    def construct(self, x):
        output = self.features(x)
        return output


class InvertedResidual(nn.Cell):
    """MobileNetV2 inverted residual block.

    Args:
        inp (int): Input channels.
        oup (int): Output channels.
        stride (int): Stride of the depthwise convolution (1 or 2).
        expand_ratio (int): Expansion ratio of the input channels.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> InvertedResidual(3, 256, 1, 1)
    """

    def __init__(self, inp, oup, stride, expand_ratio):
        super(InvertedResidual, self).__init__()
        assert stride in [1, 2]
        hidden_dim = int(round(inp * expand_ratio))
        # The shortcut is only possible when the input and output shapes match
        self.use_res_connect = stride == 1 and inp == oup
        layers = []
        if expand_ratio != 1:
            # 1x1 pointwise convolution that expands the channels
            layers.append(ConvBNReLU(inp, hidden_dim, kernel_size=1))
        layers.extend([
            # 3x3 depthwise convolution
            ConvBNReLU(hidden_dim, hidden_dim, stride=stride, groups=hidden_dim),
            # 1x1 linear projection without ReLU: the linear bottleneck
            nn.Conv2d(hidden_dim, oup, kernel_size=1, stride=1, has_bias=False),
            nn.BatchNorm2d(oup),
        ])
        self.conv = nn.SequentialCell(layers)
        self.cast = P.Cast()

    def construct(self, x):
        identity = x
        x = self.conv(x)
        if self.use_res_connect:
            return P.add(identity, x)
        return x


class MobileNetV2Backbone(nn.Cell):
    """MobileNetV2 feature extractor (all layers before the classifier).

    Args:
        width_mult (float): Channel multiplier. Default: 1.
        inverted_residual_setting (list): Inverted residual settings [t, c, n, s]. Default: None.
        round_nearest (int): Round channel counts to a multiple of this value. Default: 8.
        input_channel (int): Output channels of the first convolution. Default: 32.
        last_channel (int): Output channels of the last 1x1 convolution. Default: 1280.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2Backbone()
    """

    def __init__(self, width_mult=1., inverted_residual_setting=None, round_nearest=8,
                 input_channel=32, last_channel=1280):
        super(MobileNetV2Backbone, self).__init__()
        block = InvertedResidual
        # setting of inverted residual blocks
        self.cfgs = inverted_residual_setting
        if inverted_residual_setting is None:
            self.cfgs = [
                # t, c, n, s
                [1, 16, 1, 1],
                [6, 24, 2, 2],
                [6, 32, 3, 2],
                [6, 64, 4, 2],
                [6, 96, 3, 1],
                [6, 160, 3, 2],
                [6, 320, 1, 1],
            ]
        # building first layer
        input_channel = _make_divisible(input_channel * width_mult, round_nearest)
        self.out_channels = _make_divisible(last_channel * max(1.0, width_mult), round_nearest)
        features = [ConvBNReLU(3, input_channel, stride=2)]
        # building inverted residual blocks
        for t, c, n, s in self.cfgs:
            output_channel = _make_divisible(c * width_mult, round_nearest)
            for i in range(n):
                stride = s if i == 0 else 1
                features.append(block(input_channel, output_channel, stride, expand_ratio=t))
                input_channel = output_channel
        # building the last 1x1 convolution
        features.append(ConvBNReLU(input_channel, self.out_channels, kernel_size=1))
        self.features = nn.SequentialCell(features)
        self._initialize_weights()

    def construct(self, x):
        x = self.features(x)
        return x

    def _initialize_weights(self):
        """Initialize conv weights from N(0, sqrt(2 / fan_out)) and BatchNorm to gamma=1, beta=0."""
        self.init_parameters_data()
        for _, m in self.cells_and_names():
            if isinstance(m, nn.Conv2d):
                n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                m.weight.set_data(Tensor(np.random.normal(0, np.sqrt(2. / n),
                                                          m.weight.data.shape).astype("float32")))
                if m.bias is not None:
                    m.bias.set_data(Tensor(np.zeros(m.bias.data.shape, dtype="float32")))
            elif isinstance(m, nn.BatchNorm2d):
                m.gamma.set_data(Tensor(np.ones(m.gamma.data.shape, dtype="float32")))
                m.beta.set_data(Tensor(np.zeros(m.beta.data.shape, dtype="float32")))

    @property
    def get_features(self):
        return self.features


class MobileNetV2Head(nn.Cell):
    """MobileNetV2 classifier head: global average pooling followed by a fully connected layer.

    Args:
        input_channel (int): Channels produced by the backbone. Default: 1280.
        num_classes (int): Number of classes. Default: 1000.
        has_dropout (bool): Whether dropout is applied before the FC layer. Default: False.
        activation (str): "Sigmoid", "Softmax", or "None" (return raw logits). Default: "None".

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2Head(num_classes=1000)
    """

    def __init__(self, input_channel=1280, num_classes=1000, has_dropout=False, activation="None"):
        super(MobileNetV2Head, self).__init__()
        # mobilenet head; p is the drop probability of nn.Dropout in MindSpore 2.x
        head = ([GlobalAvgPooling(), nn.Dense(input_channel, num_classes, has_bias=True)] if not has_dropout else
                [GlobalAvgPooling(), nn.Dropout(p=0.2), nn.Dense(input_channel, num_classes, has_bias=True)])
        self.head = nn.SequentialCell(head)
        self.need_activation = True
        if activation == "Sigmoid":
            self.activation = nn.Sigmoid()
        elif activation == "Softmax":
            self.activation = nn.Softmax()
        else:
            self.need_activation = False
        self._initialize_weights()

    def construct(self, x):
        x = self.head(x)
        if self.need_activation:
            x = self.activation(x)
        return x

    def _initialize_weights(self):
        """Initialize the FC layer with N(0, 0.01) weights and zero bias."""
        self.init_parameters_data()
        for _, m in self.cells_and_names():
            if isinstance(m, nn.Dense):
                m.weight.set_data(Tensor(np.random.normal(0, 0.01, m.weight.data.shape).astype("float32")))
                if m.bias is not None:
                    m.bias.set_data(Tensor(np.zeros(m.bias.data.shape, dtype="float32")))

    @property
    def get_head(self):
        return self.head


class MobileNetV2(nn.Cell):
    """MobileNetV2 architecture with backbone and head in one Cell.

    Args:
        num_classes (int): Number of classes. Default: 1000.
        width_mult (float): Channel multiplier. Default: 1.
        has_dropout (bool): Whether dropout is used in the head. Default: False.
        inverted_residual_setting (list): Inverted residual settings. Default: None.
        round_nearest (int): Round channel counts to a multiple of this value. Default: 8.
        input_channel (int): Output channels of the first convolution. Default: 32.
        last_channel (int): Output channels of the last backbone convolution. Default: 1280.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2(num_classes=1000)
    """

    def __init__(self, num_classes=1000, width_mult=1., has_dropout=False, inverted_residual_setting=None,
                 round_nearest=8, input_channel=32, last_channel=1280):
        super(MobileNetV2, self).__init__()
        backbone = MobileNetV2Backbone(width_mult=width_mult,
                                       inverted_residual_setting=inverted_residual_setting,
                                       round_nearest=round_nearest, input_channel=input_channel,
                                       last_channel=last_channel)
        # Read out_channels from the backbone Cell itself; the SequentialCell
        # returned by get_features does not have this attribute.
        self.backbone = backbone.get_features
        self.head = MobileNetV2Head(input_channel=backbone.out_channels, num_classes=num_classes,
                                    has_dropout=has_dropout).get_head

    def construct(self, x):
        x = self.backbone(x)
        x = self.head(x)
        return x


class MobileNetV2Combine(nn.Cell):
    """Combine a backbone and a head into one network.

    Args:
        backbone (Cell): The feature extraction layers.
        head (Cell): The fully connected layers.

    Returns:
        Tensor, output tensor.

    Examples:
        >>> MobileNetV2Combine(MobileNetV2Backbone(), MobileNetV2Head())
    """

    def __init__(self, backbone, head):
        # auto_prefix=False keeps parameter names identical to those in the backbone/head checkpoints
        super(MobileNetV2Combine, self).__init__(auto_prefix=False)
        self.backbone = backbone
        self.head = head

    def construct(self, x):
        x = self.backbone(x)
        x = self.head(x)
        return x


def mobilenet_v2(backbone, head):
    return MobileNetV2Combine(backbone, head)
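The sketch below shows how `self.cfgs`, `width_mult`, and `_make_divisible` interact. It walks the same [t, c, n, s] table without any framework and reports each stage's output channels and feature-map size for a square input. It re-implements the rounding rule from `_make_divisible` so that it runs without MindSpore. The function names and the layout of the returned dictionary are assumptions for illustration.

```python
CFGS = [  # t, c, n, s (same table as MobileNetV2Backbone)
    [1, 16, 1, 1], [6, 24, 2, 2], [6, 32, 3, 2], [6, 64, 4, 2],
    [6, 96, 3, 1], [6, 160, 3, 2], [6, 320, 1, 1],
]


def make_divisible(v, divisor=8, min_value=None):
    """Same rounding rule as _make_divisible: nearest multiple of divisor, at least 90% of v."""
    if min_value is None:
        min_value = divisor
    new_v = max(min_value, int(v + divisor / 2) // divisor * divisor)
    if new_v < 0.9 * v:
        new_v += divisor
    return new_v


def downsample(size, stride):
    """3x3 convolution with padding 1: keeps the size for stride 1, roughly halves it for stride 2."""
    return (size + 2 - 3) // stride + 1


def backbone_plan(width_mult=1.0, image_size=224, input_channel=32, last_channel=1280):
    size = downsample(image_size, 2)  # the first ConvBNReLU has stride 2
    plan = {"stem_channels": make_divisible(input_channel * width_mult), "stages": []}
    blocks = 0
    for t, c, n, s in CFGS:
        size = downsample(size, s)  # only the first block of each stage uses stride s
        plan["stages"].append((make_divisible(c * width_mult), size))
        blocks += n
    plan["blocks"] = blocks
    plan["last_channels"] = make_divisible(last_channel * max(1.0, width_mult))
    plan["output_size"] = size
    return plan


if __name__ == "__main__":
    print(backbone_plan())
    print(backbone_plan(width_mult=0.5))
```

For a 224 x 224 input, the backbone ends in a 7 x 7 x 1280 feature map. `MobileNetV2Head` then reduces this map to one 1280-dimensional vector by global average pooling.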

Training and Testing the MobileNetV2 Model

Training Strategy

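A fixed learning rate, such as 0.01, is commonly used for training. However, as the number of training steps grows and the model approaches convergence, the weight updates should become smaller to reduce oscillation late in training. A decaying learning rate is therefore often used instead. Common decay strategies include: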

- polynomial decay/square decay;

- cosine decay;

- exponential decay;

- stage decay.
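The four strategies differ only in how the learning rate shrinks as the step count grows. The sketch below writes one common form of each as a plain function. The parameter names and defaults (power, decay rate, stage boundaries, drop factor) are illustrative assumptions, not values used by this tutorial.

```python
import math


def polynomial_decay(step, total, lr0, lr_end=0.0, power=2.0):
    """Polynomial decay; power=2 gives square decay."""
    return (lr0 - lr_end) * (1 - step / total) ** power + lr_end


def cosine_decay_lr(step, total, lr0, lr_end=0.0):
    """Half a cosine period from lr0 down to lr_end."""
    return lr_end + (lr0 - lr_end) * 0.5 * (1 + math.cos(math.pi * step / total))


def exponential_decay(step, lr0, decay_rate=0.9, decay_steps=100):
    """Multiply the rate by decay_rate every decay_steps steps (smoothly)."""
    return lr0 * decay_rate ** (step / decay_steps)


def stage_decay(step, lr0, boundaries=(300, 600), factor=0.1):
    """Piecewise-constant rate that drops by `factor` at each boundary passed."""
    drops = sum(step >= b for b in boundaries)
    return lr0 * factor ** drops


if __name__ == "__main__":
    for step in (0, 250, 500, 750, 1000):
        print(step, polynomial_decay(step, 1000, 0.1), cosine_decay_lr(step, 1000, 0.1),
              exponential_decay(step, 0.1), stage_decay(step, 0.1))
```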

The cosine decay strategy is used here:

def cosine_decay(total_steps, lr_init=0.0, lr_end=0.0, lr_max=0.1, warmup_steps=0):
    """Apply cosine decay to generate a learning-rate array.

    Args:
        total_steps (int): Total number of training steps.
        lr_init (float): Initial learning rate.
        lr_end (float): Final learning rate.
        lr_max (float): Maximum learning rate.
        warmup_steps (int): Number of warm-up steps.

    Returns:
        list, the learning rate for every step.
    """
    lr_init, lr_end, lr_max = float(lr_init), float(lr_end), float(lr_max)
    decay_steps = total_steps - warmup_steps
    lr_all_steps = []
    # Linear warm-up from lr_init to lr_max
    inc_per_step = (lr_max - lr_init) / warmup_steps if warmup_steps else 0
    for i in range(total_steps):
        if i < warmup_steps:
            lr = lr_init + inc_per_step * (i + 1)
        else:
            # Cosine annealing from lr_max down to lr_end
            cosine_factor = 0.5 * (1 + math.cos(math.pi * (i - warmup_steps) / decay_steps))
            lr = (lr_max - lr_end) * cosine_factor + lr_end
        lr_all_steps.append(lr)
    return lr_all_steps
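Written as a formula, `cosine_decay` computes the rate for each step index $0 \le i < T$, where $T$ is `total_steps` and $W$ is `warmup_steps`:

$$
\text{lr}_i =
\begin{cases}
\text{lr}_{\text{init}} + \dfrac{\text{lr}_{\max} - \text{lr}_{\text{init}}}{W}\,(i + 1), & i < W \\[8pt]
\text{lr}_{\text{end}} + \left(\text{lr}_{\max} - \text{lr}_{\text{end}}\right) \cdot \dfrac{1}{2}\left(1 + \cos\dfrac{\pi\,(i - W)}{T - W}\right), & i \ge W
\end{cases}
$$

The training code later calls `cosine_decay(config.epochs * step_size, lr_max=config.lr_max)`, so $W = 0$ and $\text{lr}_{\text{end}} = 0$. The rate starts at 0.05 and falls to 0.025 halfway through the schedule. However, $T$ is computed from `config.epochs` (10) while the loop trains for only 2 epochs. Training therefore stops at about $i = 0.2T$, where the rate is still $0.05 \cdot \tfrac{1}{2}(1 + \cos 0.2\pi) \approx 0.0452$.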

During training, checkpoints can be saved to store the model parameters for inference or for resuming training after an interruption. Typical scenarios:

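- Inference after training
  - Save the model parameters after training finishes and use them for inference or prediction.
  - During training, validate accuracy in real time and save the parameters with the highest accuracy for prediction.
- Retraining
  - For long-running training jobs, save checkpoint files during training so that the job does not have to restart from scratch if it exits abnormally.
  - Fine-tuning: train a model and save its parameters, then use that model as the starting point for training on a second, similar task.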
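The "keep the most accurate parameters" scenario reduces to comparing each validation accuracy with the best one seen so far. The class below is a hypothetical, framework-independent helper, not a MindSpore API. It calls the save function it is given only when accuracy improves. In this tutorial that function could wrap `ms.save_checkpoint(network, "best.ckpt")`, where the file name is an assumption.

```python
class BestCheckpointTracker:
    """Call save_fn(epoch, accuracy) only when the accuracy beats the best value so far."""

    def __init__(self, save_fn):
        self.save_fn = save_fn
        self.best_accuracy = float("-inf")
        self.best_epoch = None

    def update(self, epoch, accuracy):
        """Return True if this epoch produced a new best checkpoint."""
        if accuracy > self.best_accuracy:
            self.best_accuracy = accuracy
            self.best_epoch = epoch
            self.save_fn(epoch, accuracy)
            return True
        return False


def demo(accuracies):
    """Feed a sequence of per-epoch accuracies and report which epochs triggered a save."""
    saved = []
    tracker = BestCheckpointTracker(lambda epoch, acc: saved.append(epoch))
    for epoch, acc in enumerate(accuracies, start=1):
        tracker.update(epoch, acc)
    return saved, tracker.best_epoch


if __name__ == "__main__":
    # Illustrative accuracies, not real results
    print(demo([0.62, 0.71, 0.69, 0.75]))  # ([1, 2, 4], 4)
```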

Here, MobileNetV2 pretrained on ImageNet is loaded and fine-tuned. Only the final, replaced FC layer is trained, and a checkpoint is saved during training.

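def switch_precision(net, data_type):
    """On Ascend, run the network in data_type (e.g. ms.float16) but keep Dense layers in float32."""
    if ms.get_context('device_target') == "Ascend":
        net.to_float(data_type)
        for _, cell in net.cells_and_names():
            if isinstance(cell, nn.Dense):
                cell.to_float(ms.float32)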
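One reason to keep some layers in float32 is that float16 has a much narrower range than float32. Python's `struct` module can round-trip a value through IEEE 754 half precision (format code `e`), which makes the limits easy to see. Values above 65504 cannot be stored, and very small values such as gradients around 1e-8 round to zero. The underflow is why mixed-precision training commonly multiplies the loss by a scale factor such as 1024. The helper names below are illustrative.

```python
import struct

FP16_MAX = 65504.0  # largest finite IEEE 754 half-precision value


def to_fp16(x):
    """Round a Python float to the nearest float16 value and return it as a float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def overflows_fp16(x):
    """True if x is too large in magnitude to be stored as a finite float16."""
    try:
        struct.pack("<e", x)
        return False
    except (OverflowError, struct.error):
        return True


if __name__ == "__main__":
    print(to_fp16(1e-8), to_fp16(1e-8 * 1024))  # a tiny gradient vanishes; the scaled one survives
    print(overflows_fp16(70000.0))
```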

Model Training and Testing

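Before the actual training, define the training function, load the data, instantiate the model, and define the optimizer and the loss function.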

First, a brief introduction to the loss function and the optimizer:

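- Loss function: also called the objective function, it measures how much the predicted values differ from the actual values. Deep learning reduces the value of the loss function through repeated iterations, and a well-chosen loss function can effectively improve model performance.

- Optimizer: minimizes the loss function, thereby improving the model during training.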

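Once a loss function is defined, its gradient with respect to the weights can be computed. The optimizer updates the weights in the direction opposite to the gradient, which lowers the loss and so improves model performance.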
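The sketch below applies this loop to a toy loss, f(w) = (w - 3)^2. It uses the heavy-ball momentum rule documented for MindSpore's `nn.Momentum`: accumulate v = momentum * v + g, then update w = w - lr * v. Adding weight decay as `weight_decay * w` to the gradient is a simplifying assumption. The toy loss, learning rate, and step count are illustrative.

```python
def momentum_sgd(grad_fn, w0, lr=0.05, momentum=0.9, weight_decay=0.0, steps=300):
    """Minimize a 1-D function given its gradient, using momentum SGD."""
    w, v = w0, 0.0
    for _ in range(steps):
        g = grad_fn(w) + weight_decay * w  # gradient of the loss plus an L2 penalty term
        v = momentum * v + g               # accumulate velocity
        w = w - lr * v                     # step against the accumulated gradient
    return w


def toy_grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2."""
    return 2.0 * (w - 3.0)


if __name__ == "__main__":
    print(momentum_sgd(toy_grad, w0=0.0))  # close to the minimum at w = 3
```

With weight decay, the minimum shifts slightly toward zero, to w = 6 / (2 + weight_decay).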

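Before MobileNetV2 is trained, the parameters of MobileNetV2Backbone are frozen so that its weights are not updated during training. Only the parameters of MobileNetV2Head are updated, because only they are returned by `network.trainable_params()` and passed to the optimizer.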

MindSpore supports loss functions such as SoftmaxCrossEntropyWithLogits, L1Loss, and MSELoss. SoftmaxCrossEntropyWithLogits is used here.
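With `sparse=True` and `reduction='mean'`, this loss takes integer class labels, applies softmax to each row of logits, and averages the negative log-probability of the correct class over the batch. The plain-Python sketch below shows that computation with the usual log-sum-exp trick for numerical stability. It illustrates the math and is not MindSpore's implementation.

```python
import math


def log_sum_exp(row):
    """log(sum(exp(x))) computed stably by factoring out the maximum."""
    m = max(row)
    return m + math.log(sum(math.exp(x - m) for x in row))


def sparse_softmax_cross_entropy(logits, labels):
    """Mean over the batch of -log softmax(logits)[label]."""
    losses = [log_sum_exp(row) - row[label] for row, label in zip(logits, labels)]
    return sum(losses) / len(losses)


if __name__ == "__main__":
    print(sparse_softmax_cross_entropy([[2.0, 0.0, -1.0], [0.0, 0.0, 0.0]], [0, 2]))
```

With uniform logits over K classes, the loss is ln K. For 26 untrained classes that is about 3.26, a useful reference point for the first printed loss values.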

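The loss is printed during training and testing. It fluctuates, but it should generally decrease while accuracy increases. Training involves randomness (shuffling, data augmentation, and weight initialization), so the loss values differ from run to run.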

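After each epoch, the model's accuracy on the test set is computed and printed. These values show how the predictive ability of MobileNetV2 improves as training proceeds.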

import time

train_dataset = create_dataset(dataset_path=config.dataset_path, config=config)
# The evaluation dataset must be built with training=False so that it reads the test split
eval_dataset = create_dataset(dataset_path=config.dataset_path, config=config, training=False)
step_size = train_dataset.get_dataset_size()

backbone = MobileNetV2Backbone()  # last_channel=config.backbone_out_channels

# Freeze the parameters of the backbone. Comment out these two lines to fine-tune the whole network.
for param in backbone.get_parameters():
    param.requires_grad = False

# Load parameters from the pretrained model
load_checkpoint(config.pretrained_ckpt, backbone)

head = MobileNetV2Head(input_channel=backbone.out_channels, num_classes=config.num_classes)
network = mobilenet_v2(backbone, head)

# Define the loss, learning-rate schedule, and optimizer
loss = nn.SoftmaxCrossEntropyWithLogits(sparse=True, reduction='mean')
lrs = cosine_decay(config.epochs * step_size, lr_max=config.lr_max)
# The training loop below does not scale the loss, so the optimizer keeps its default
# loss_scale=1.0 (passing loss_scale=1024 here would divide every gradient by 1024).
opt = nn.Momentum(network.trainable_params(), lrs, config.momentum, config.weight_decay)


def train_loop(model, dataset, loss_fn, optimizer):
    """Train the model for one epoch."""
    # Forward function: compute the logits and the loss
    def forward_fn(data, label):
        logits = model(data)
        loss = loss_fn(logits, label)
        return loss

    # mindspore.value_and_grad returns grad_fn, which outputs both the loss and the gradients.
    # We differentiate with respect to the model parameters, so grad_position is None
    # and the trainable parameters are passed in.
    grad_fn = ms.value_and_grad(forward_fn, None, optimizer.parameters)

    # One training step: compute the loss and gradients, then update the parameters
    def train_step(data, label):
        loss, grads = grad_fn(data, label)
        optimizer(grads)
        return loss

    size = dataset.get_dataset_size()
    model.set_train()
    for batch, (data, label) in enumerate(dataset.create_tuple_iterator()):
        loss = train_step(data, label)
        if batch % 10 == 0:
            loss, current = loss.asnumpy(), batch
            print(f"loss: {loss:>7f}  [{current:>3d}/{size:>3d}]")


def test_loop(model, dataset, loss_fn):
    """Evaluate the model and print the accuracy and the average loss."""
    num_batches = dataset.get_dataset_size()
    model.set_train(False)
    total, test_loss, correct = 0, 0, 0
    for data, label in dataset.create_tuple_iterator():
        pred = model(data)
        total += len(data)
        test_loss += loss_fn(pred, label).asnumpy()
        correct += (pred.argmax(1) == label).asnumpy().sum()
    test_loss /= num_batches
    correct /= total
    print(f"Test: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")


print("============== Starting Training ==============")
# Due to time constraints, only 2 epochs are trained here; adjust as needed.
# lrs spans config.epochs (10) epochs, so with 2 epochs only the start of the cosine schedule is used.
epoch_begin_time = time.time()
epochs = 2
for t in range(epochs):
    begin_time = time.time()
    print(f"Epoch {t+1}\n-------------------------------")
    train_loop(network, train_dataset, loss, opt)
    ms.save_checkpoint(network, "save_mobilenetV2_model.ckpt")
    end_time = time.time()
    times = end_time - begin_time
    print(f"per epoch time: {times}s")
    test_loop(network, eval_dataset, loss)

epoch_end_time = time.time()
times = epoch_end_time - epoch_begin_time
print(f"total time: {times}s")
print("============== Training Success ==============")

Model Inference

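Load the model checkpoint for inference. When using load_checkpoint, load the parameters into the original network, not into a training network that wraps the optimizer and the loss function.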

CKPT = "save_mobilenetV2_model.ckpt"


def image_process(image):
    """Process one image at a time.

    Args:
        image: PIL image or array with shape (H, W, C) in RGB order.

    Returns:
        Tensor of shape (1, C, H, W).
    """
    mean = [0.485*255, 0.456*255, 0.406*255]
    std = [0.229*255, 0.224*255, 0.225*255]
    image = (np.array(image) - mean) / std
    image = image.transpose((2, 0, 1))
    img_tensor = Tensor(np.array([image], np.float32))
    return img_tensor


def infer_one(network, image_path):
    # PIL's resize expects (width, height); convert to RGB in case the file is grayscale or RGBA
    image = Image.open(image_path).convert('RGB').resize((config.image_width, config.image_height))
    logits = network(image_process(image))
    pred = np.argmax(logits.asnumpy(), axis=1)[0]
    print(image_path, class_en[pred])


def infer():
    backbone = MobileNetV2Backbone(last_channel=config.backbone_out_channels)
    head = MobileNetV2Head(input_channel=backbone.out_channels, num_classes=config.num_classes)
    network = mobilenet_v2(backbone, head)
    load_checkpoint(CKPT, network)
    network.set_train(False)
    for i in range(91, 100):
        infer_one(network, f'data_en/test/Cardboard/000{i}.jpg')


infer()
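`infer_one` prints only the arg-max class. To also see how confident the network is, the logits of one image can be turned into softmax probabilities and the top-k classes listed. The framework-free helper below is an illustrative addition, not part of the tutorial. Inside `infer_one` it could be called as `top_k(logits.asnumpy()[0].tolist(), class_en)`.

```python
import math


def softmax(logits):
    """Convert a list of logits into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits, class_names, k=3):
    """Return the k most likely (class_name, probability) pairs, most likely first."""
    probs = softmax(logits)
    ranked = sorted(zip(class_names, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    print(top_k([1.0, 3.0, 2.0], ["Cardboard", "Paper", "Newspaper"], k=2))
```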

Exporting AIR/GEIR/ONNX Model Files

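Export an AIR model file for subsequent model conversion and inference on the Atlas 200 DK. AIR export is currently supported only in a MindSpore + Ascend environment, so the code below keeps the AIR line commented out and exports an ONNX file instead.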

backbone = MobileNetV2Backbone(last_channel=config.backbone_out_channels)
head = MobileNetV2Head(input_channel=backbone.out_channels, num_classes=config.num_classes)
network = mobilenet_v2(backbone, head)
load_checkpoint(CKPT, network)
network.set_train(False)

# Dummy input that fixes the exported input shape: NCHW, 1 x 3 x 224 x 224
dummy_input = np.random.uniform(0.0, 1.0, size=[1, 3, 224, 224]).astype(np.float32)

# AIR export requires a MindSpore + Ascend environment
# export(network, Tensor(dummy_input), file_name='mobilenetv2.air', file_format='AIR')
# GEIR export
# export(network, Tensor(dummy_input), file_name='mobilenetv2.pb', file_format='GEIR')
export(network, Tensor(dummy_input), file_name='mobilenetv2.onnx', file_format='ONNX')