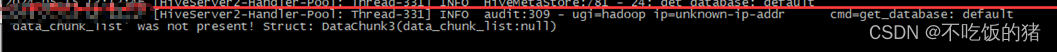

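1. Background: every segment loaded into the CarbonData table shows status Success, but queries against the table fail with an error.

2. What was checked

1) Segment status: Success.

2) Job execution logs: normal.

3) For the day covered by the failing query, the query was pinned to individual segments and rerun; some segments did return data: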

SET carbon.input.segments.default.table_name = segments_id;

select * from table_name limit 1;

This returned a result.

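3. Ruling out causes

1) The table schema is fine; earlier queries all ran normally.

4. Likely cause

1) Something went wrong with the data while it was being loaded.

5. Verifying the hypothesis in step 4

A script checked the segments for the problem day one by one.

Some segments returned nothing. These turned out to be faulty even though their status was Success. After the faulty segments were deleted, queries ran normally again.

6. Based on the steps above, the cleanup was automated with the following scripts.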

Preparation: create the working directories.

    mkdir -pv logs
    mkdir -pv result

Script 1, get_segment_id.sh: fetch the segment ids for the day and probe each one to find the abnormal segments.

    #!/bin/bash
    source /etc/profile

    # Load-time window: yesterday
    date_s=$(date -d "1 day ago" +"%Y-%m-%d 00:00:01")
    date_e=$(date -d "1 day ago" +"%Y-%m-%d 23:59:59")

    # Clear the output of the previous run
    rm -f ./result/*
    rm -f *.txt

    # First and last segment loaded inside the window
    /home/eversec/jdbc/bin/everdata-jdbc.sh -i "jdbc:hive2://10.192.21.1:10000" -q " SHOW SEGMENTS ON default.table_name as select * from table_name_segments where loadstarttime>='$date_s' and loadstarttime<='$date_e' order by loadStartTime asc limit 1;" -o start_id.txt
    /home/eversec/jdbc/bin/everdata-jdbc.sh -i "jdbc:hive2://10.192.21.1:10000" -q " SHOW SEGMENTS ON default.table_name as select * from table_name_segments where loadstarttime>='$date_s' and loadstarttime<='$date_e' order by loadStartTime desc limit 1;" -o end_id.txt

    if [ -f start_id.txt ] && [ -f end_id.txt ]; then
        # The segment id is the first comma-separated field
        sid=$(awk -F',' '{print $1}' start_id.txt)
        eid=$(awk -F',' '{print $1}' end_id.txt)
        for ((i=sid; i<=eid; i++))
        do
            # Probe one segment at a time; script 2 treats a segment
            # with no file under ./result as bad
            /home/eversec/jdbc/bin/everdata-jdbc.sh -i "jdbc:hive2://10.192.21.1:10000" -q "SET carbon.input.segments.default.table_name = $i; select * from table_name order by hour limit 1;" -o ./result/$i
            # Record every id that was probed
            echo $i >> auto_id.txt
        done
    fi

Script 2, which performs the deletion:

cat dele_bad_segment.sh

    #!/bin/bash
    dele_id=""

    # Segment ids whose probe query produced a result file
    ls ./result/* | awk -F'/' '{print $3}' > ./segment_id.txt

    # Any probed id with no result file is recorded as a bad segment
    num_autoid=$(cat auto_id.txt | wc -l)
    if [ "$num_autoid" -gt 0 ]; then
        while read line
        do
            # -x matches the whole line, so id 12 does not match 112
            num=$(grep -cx "$line" segment_id.txt)
            if [ "$num" -eq 0 ]; then
                echo "$line" >> bad_segmentid.txt
            fi
        done < auto_id.txt
    fi

    num_de=$(cat bad_segmentid.txt | wc -l)
    if [ "$num_de" -gt 0 ]; then
        start_id=$(head -1 bad_segmentid.txt)
        # Join the bad ids into a comma-separated list
        while read line
        do
            if [ "$line" -eq "$start_id" ]; then
                dele_id="${line}"
            else
                dele_id="${dele_id},${line}"
            fi
        done < bad_segmentid.txt
        echo "$dele_id"
        /home/eversec/jdbc/bin/everdata-jdbc.sh -i "jdbc:hive2://10.192.21.1:10000" -q "DELETE FROM TABLE table_name WHERE SEGMENT.ID IN ($dele_id);"
    fi
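
The comparison and join steps of the second script can also be written as small functions, which makes it easy to print the DELETE statement and review it before running it. The following is only a sketch under the same assumptions as the scripts above: auto_id.txt holds one probed segment id per line, and ./result holds one file per segment whose probe query succeeded. The function names `find_bad_segments`, `join_ids` and `build_delete_sql` are made up for illustration and are not part of CarbonData or of the JDBC wrapper.

```shell
#!/bin/bash
# Sketch only: helper names are hypothetical, file layout follows the scripts above.

# Print every id listed in file $1 that has no result file in directory $2.
# The check is on the exact file name, so id 12 is never confused with 112.
find_bad_segments() {
    local checked_file=$1 result_dir=$2
    local id
    while IFS= read -r id; do
        [ -n "$id" ] || continue                  # skip blank lines
        [ -e "$result_dir/$id" ] || printf '%s\n' "$id"
    done < "$checked_file"
}

# Join the ids read from stdin into one comma-separated line.
join_ids() {
    paste -sd, -
}

# Print the DELETE statement for table $1 and id list $2.
# Returns non-zero and prints nothing when the id list is empty.
build_delete_sql() {
    local table=$1 ids=$2
    [ -n "$ids" ] || return 1
    printf 'DELETE FROM TABLE %s WHERE SEGMENT.ID IN (%s);\n' "$table" "$ids"
}
```

A possible way to use it is `ids=$(find_bad_segments auto_id.txt ./result | join_ids)` followed by `build_delete_sql table_name "$ids"`, passing the printed statement to the `-q` option only after checking it by hand.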
