I. Prerequisite: set up a local AI model

1. First, download Ollama, an open-source tool for running AI models locally, from the official Ollama website

Website: Ollama

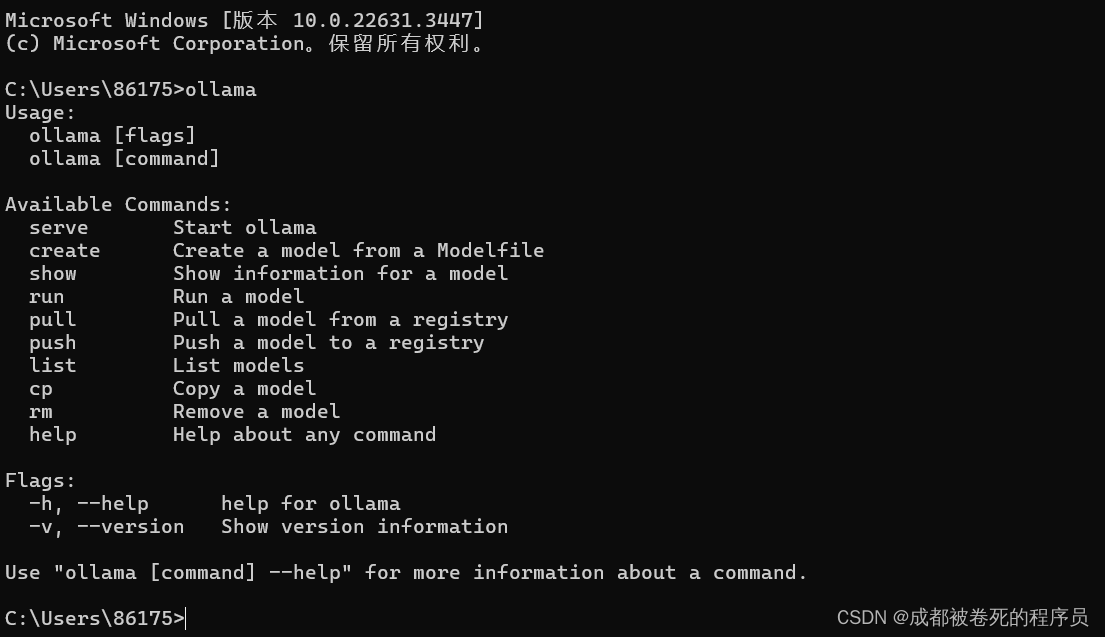

Download and install it, then start Ollama.

Once it is running, run ollama in cmd to see the available commands.

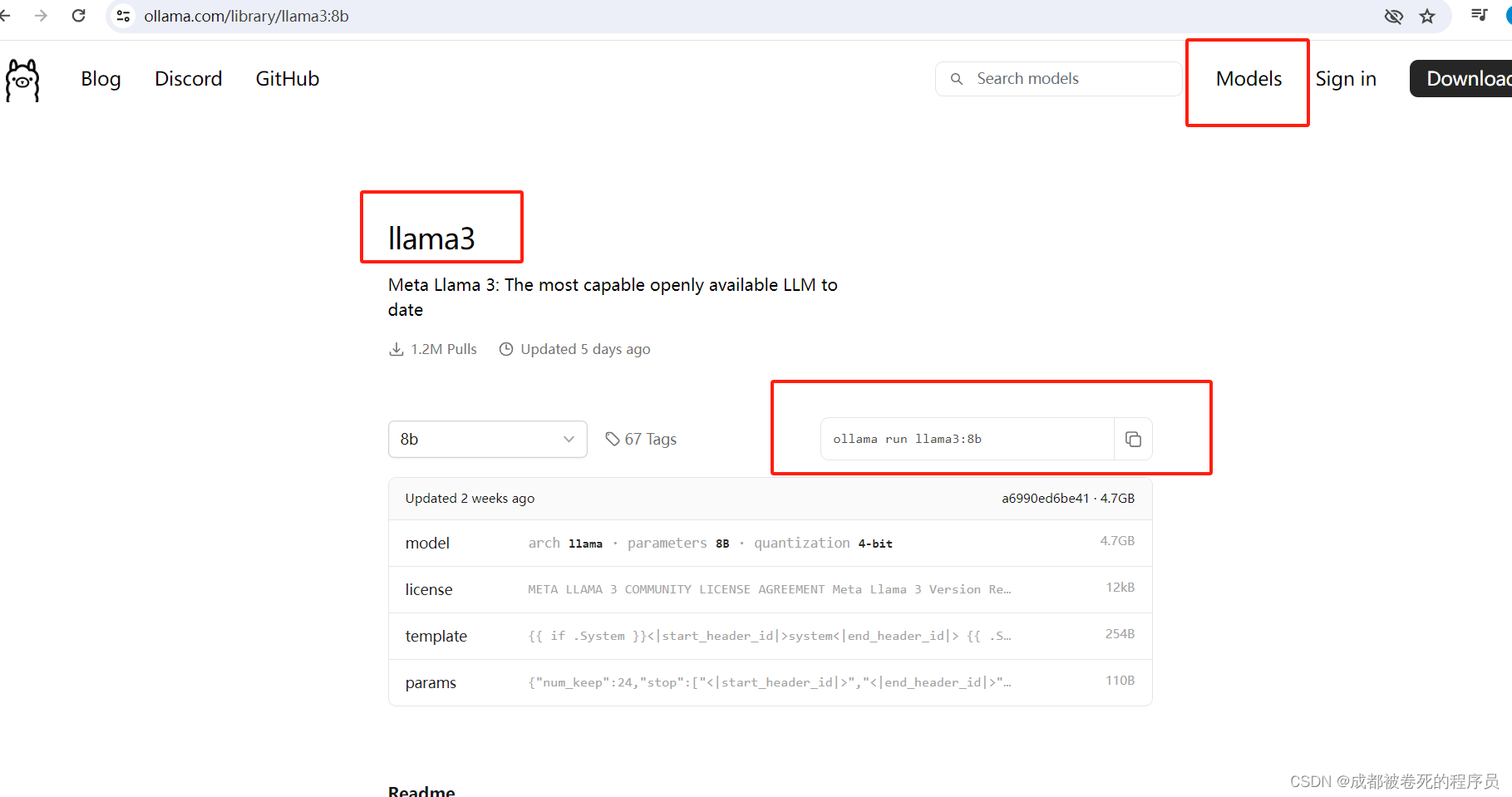

2. Download an AI model

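Next, download an existing model into Ollama for our own use.

Run the model's pull command in the command line to download it (for the llama3 model used in the code below, that would be: ollama pull llama3).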
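Once the model is downloaded, one way to confirm from Java that Ollama is running and the model is available is to query Ollama's REST endpoint for listing local models, GET /api/tags. The sketch below is a minimal, standard-library-only illustration and not part of the original project: the class name OllamaCheck and its method names are hypothetical, and the base URL assumes Ollama's default port 11434. It also rejects a base URL without a scheme, because java.net.URI parses localhost:11434 as an opaque URI with no host.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical helper for illustration; not part of the original project.
class OllamaCheck {

    // Builds a GET request for Ollama's "list local models" endpoint (/api/tags).
    static HttpRequest buildListModelsRequest(String baseUrl) {
        URI base = URI.create(baseUrl);
        // "localhost:11434" parses with scheme "localhost" and no host, so reject it early.
        if (base.getHost() == null) {
            throw new IllegalArgumentException(
                "Base URL needs a scheme and host, e.g. http://localhost:11434");
        }
        return HttpRequest.newBuilder(base.resolve("/api/tags")).GET().build();
    }

    // Sends the request and returns the raw JSON body. Requires Ollama to be running.
    static String listModels(String baseUrl) throws IOException, InterruptedException {
        HttpClient client = HttpClient.newHttpClient();
        HttpResponse<String> response = client.send(
            buildListModelsRequest(baseUrl), HttpResponse.BodyHandlers.ofString());
        return response.body();
    }
}
```

Calling OllamaCheck.listModels with http://localhost:11434 should return a JSON document listing the models pulled so far, assuming Ollama is listening on its default address.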

II. Prepare the local code

1. First, add the required dependencies to the pom file

    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
            <version>3.2.5</version>
        </dependency>
        <dependency>
            <groupId>io.springboot.ai</groupId>
            <artifactId>spring-ai-ollama</artifactId>
            <version>1.0.3</version>
        </dependency>
    </dependencies>

2. Set up a simple Spring Boot application

Main class

    import org.springframework.boot.SpringApplication;
    import org.springframework.boot.autoconfigure.SpringBootApplication;

    @SpringBootApplication
    public class Application {

        public static void main(String[] args) {
            SpringApplication.run(Application.class, args);
        }
    }

YAML configuration

    spring:
      ai:
        ollama:
          base-url: http://localhost:11434

Implementation: controller

    import com.rojer.delegete.OllamaDelegete;
    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RequestParam;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class OllamaController {

        @Autowired(required = true)
        OllamaDelegete ollamaDelegete;

        // Normal call: returns the complete answer as a single string
        @GetMapping("/ai/getMsg")
        public Object getOllame(@RequestParam(value = "msg") String msg) {
            return ollamaDelegete.getOllame(msg);
        }

        // Streaming call: returns the answer as a list of chunks
        @GetMapping("/ai/stream/getMsg")
        public Object getOllameByStream(@RequestParam(value = "msg") String msg) {
            return ollamaDelegete.getOllameByStream(msg);
        }
    }

Implementation: impl

    import com.rojer.delegete.OllamaDelegete;
    import org.springframework.ai.chat.ChatResponse;
    import org.springframework.ai.chat.prompt.Prompt;
    import org.springframework.ai.ollama.OllamaChatClient;
    import org.springframework.ai.ollama.api.OllamaApi;
    import org.springframework.ai.ollama.api.OllamaOptions;
    import org.springframework.stereotype.Service;
    import reactor.core.publisher.Flux;

    import java.util.List;
    import java.util.stream.Collectors;

    // OllamaDelegete is the project's own interface (not shown here);
    // it declares the two methods overridden below.
    @Service
    public class OllamaImpl implements OllamaDelegete {

        OllamaApi ollamaApi;
        OllamaChatClient chatClient;

        {
            // Instantiate the Ollama client and select the model
            ollamaApi = new OllamaApi();
            OllamaOptions options = new OllamaOptions();
            options.setModel("llama3");
            chatClient = new OllamaChatClient(ollamaApi).withDefaultOptions(options);
        }

        /**
         * Normal text call.
         *
         * @param msg the user's message
         * @return the model's complete answer
         */
        @Override
        public Object getOllame(String msg) {
            Prompt prompt = new Prompt(msg);
            ChatResponse call = chatClient.call(prompt);
            return call.getResult().getOutput().getContent();
        }

        /**
         * Streaming call. Every streamed chunk is collected into a list
         * before the method returns.
         *
         * @param msg the user's message
         * @return the answer as a list of chunks
         */
        @Override
        public Object getOllameByStream(String msg) {
            Prompt prompt = new Prompt(msg);
            Flux<ChatResponse> stream = chatClient.stream(prompt);
            List<String> result = stream.toStream()
                    .map(a -> a.getResult().getOutput().getContent())
                    .collect(Collectors.toList());
            return result;
        }
    }

III. Demo

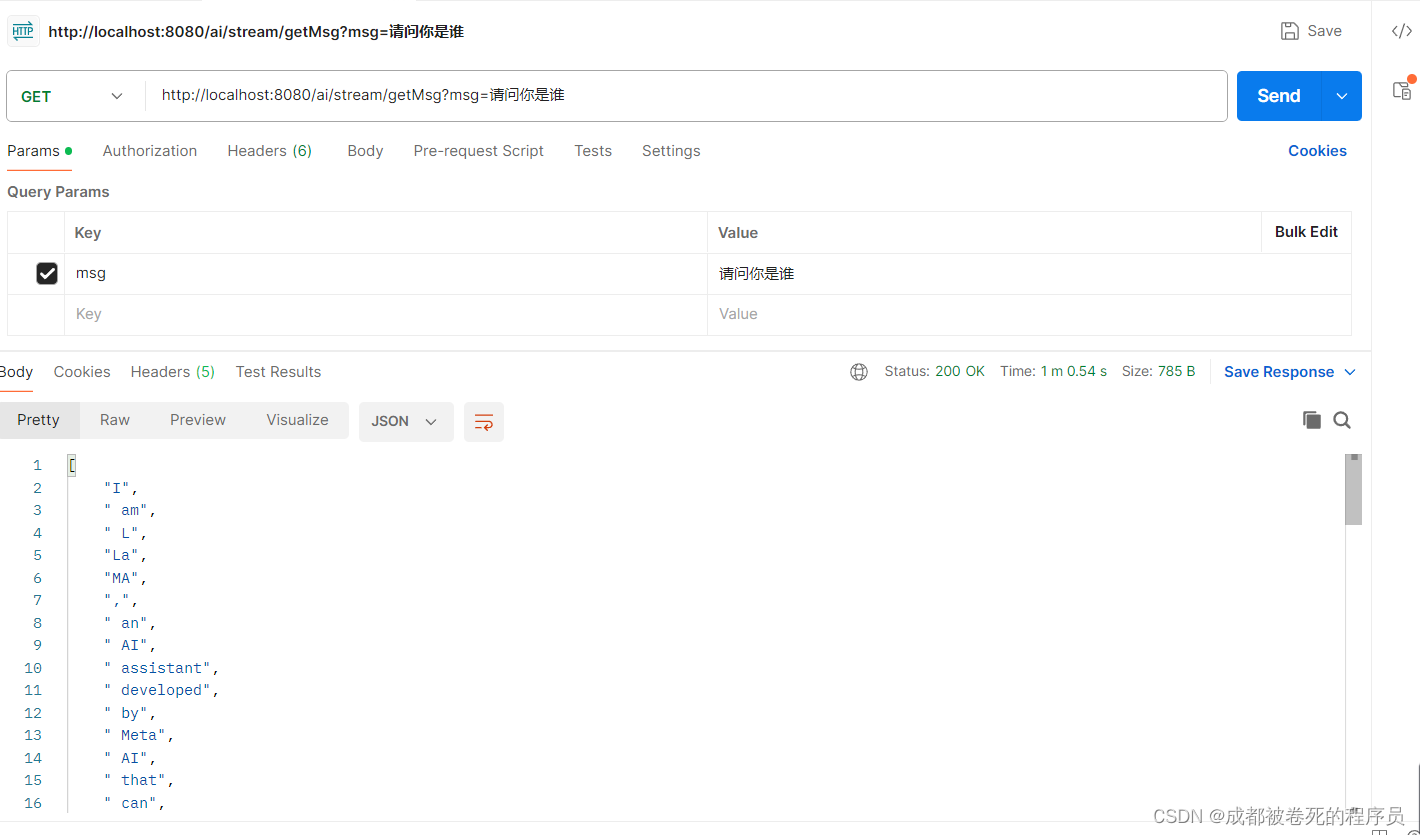

Let's look at the result of a normal call.
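To reproduce the normal call outside a browser, a client needs the URL of the /ai/getMsg endpoint defined in the controller, with the msg parameter encoded. The sketch below is only an illustration: the class EndpointUrl is hypothetical, and http://localhost:8080 in the usage note assumes Spring Boot's default port. Encoding msg keeps spaces, ampersands, and non-ASCII text intact in the query string.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper for illustration; not part of the original project.
class EndpointUrl {

    // Builds the request URL for one of the controller's GET endpoints,
    // URL-encoding the msg query parameter.
    static String build(String appBaseUrl, String path, String msg) {
        String encoded = URLEncoder.encode(msg, StandardCharsets.UTF_8);
        return appBaseUrl + path + "?msg=" + encoded;
    }
}
```

For example, EndpointUrl.build("http://localhost:8080", "/ai/getMsg", "hello world") yields a URL that can be opened in a browser or passed to an HTTP client; using /ai/stream/getMsg as the path targets the streaming endpoint instead.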

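And here is the result of a streaming call (when we chat with an AI, the answer does not appear all at once; that is what a streaming call looks like).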

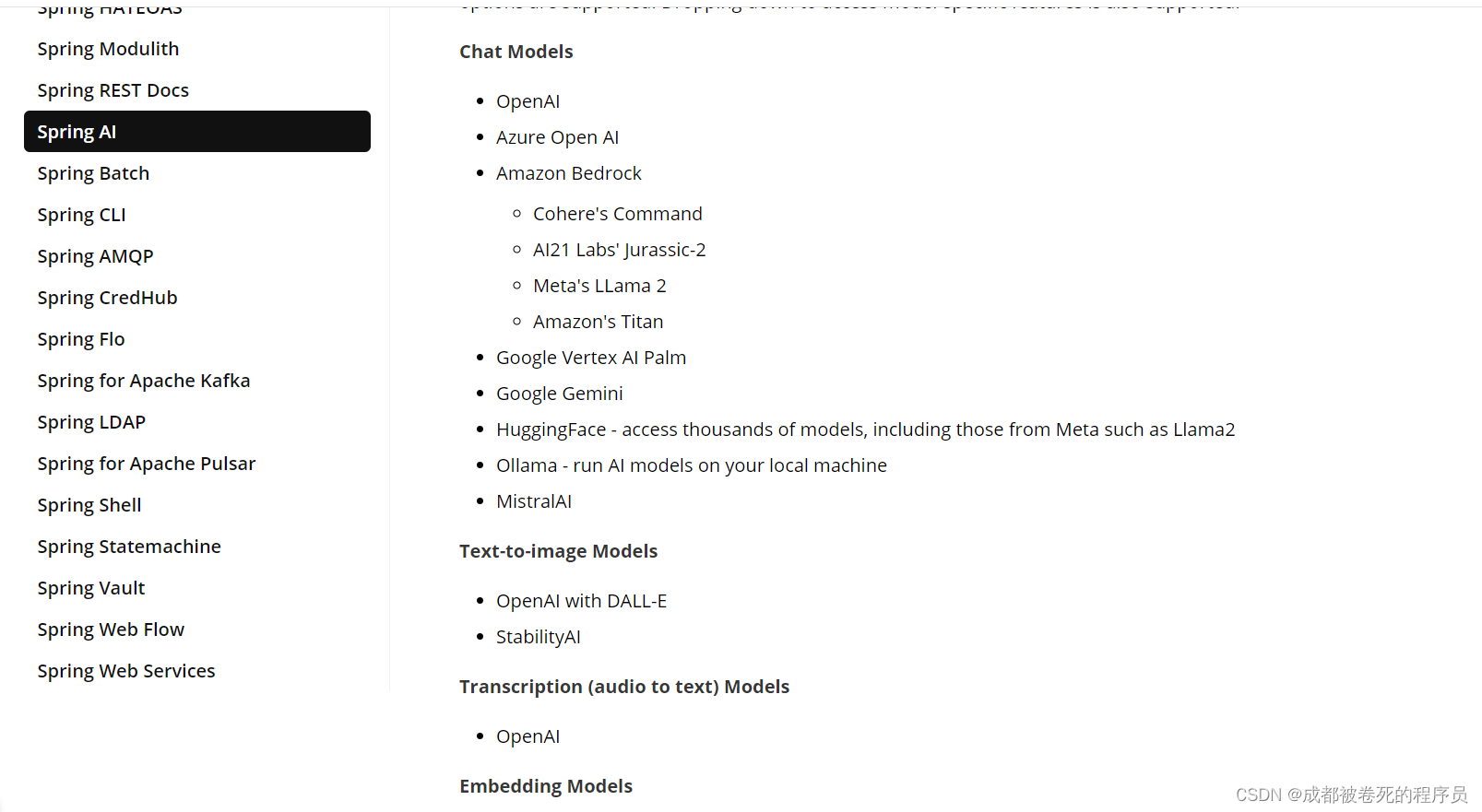
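The difference between handling fragments as they arrive and collecting them can be illustrated without Spring. In the sketch below, a hypothetical generate method stands in for the model and hands out an answer fragment by fragment. One caller processes each fragment immediately, while the other, like getOllameByStream above, waits and gathers everything into a list. All names here are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical simulation for illustration; no real model is called.
class StreamingSketch {

    // Stand-in for a model: delivers the answer one fragment at a time.
    static void generate(List<String> fragments, Consumer<String> onChunk) {
        for (String fragment : fragments) {
            onChunk.accept(fragment);
        }
    }

    // Collect-then-return: nothing is available until every fragment has arrived.
    static List<String> collectAll(List<String> fragments) {
        List<String> result = new ArrayList<>();
        generate(fragments, result::add);
        return result;
    }

    // Reassembles the complete answer from its fragments.
    static String join(List<String> chunks) {
        return String.join("", chunks);
    }

    public static void main(String[] args) {
        List<String> fragments = List.of("Hel", "lo", ", ", "world");
        // Incremental handling: each fragment is printed as soon as it is produced.
        generate(fragments, System.out::print);
        System.out.println();
        // Collected handling: the whole list is printed at once.
        System.out.println(collectAll(fragments));
    }
}
```

Joining the collected list with StreamingSketch.join gives back the full answer, which is how a client could turn the list returned by the streaming endpoint into readable text.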

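We won't dig into the code here; this covers only basic usage. Later, if I get the chance, I'll write up a method for training your own model. You can also check the Spring website to see which AI models are currently supported; I've taken a quick screenshot here. If this helped, please leave a like and a follow. Thanks! Spring AI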
