上一篇寫的beautifulsoup和request爬取出的結果有誤。首先,TapTap網頁以JS格式解析,且評論并沒有“下一頁”,而是每次加載到底部就要進行等待重新加載。我們需要做的,是模仿瀏覽器的行為,所以這里我們用Selenium的方式爬取。

下載ChromeDriver

ChromeDriver作用是給Pyhton提供一個模擬瀏覽器,讓Python能夠運行一個模擬的瀏覽器進行網頁訪問 用selenium進行鼠標及鍵盤等操作獲取到網頁真正的源代碼。

官方下載地址:https://sites.google.com/a/chromium.org/chromedriver/downloads

注意,一定要下載自己chrome瀏覽器對應版本的驅動,根據自己的電腦版本下載對應系統的文件

以Windows版本為例,將下載好的chromedriver_win64.zip解壓得到一個exe文件,將其復制到Python安裝目錄下的Scripts文件夾即可

爬蟲操作

首先導入所需庫

import pandas as pd

import time

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC滾動到底部的驅動

def scroll_to_bottom(driver):# 使用 JavaScript 模擬滾動到頁面底部driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")爬取評論

def get_taptap_reviews(url, max_reviews=50):reviews = []driver = webdriver.Chrome() # 需要安裝 Chrome WebDriver,并將其路徑添加到系統環境變量中driver.get(url)try:# 等待評論加載完成WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CLASS_NAME, "text-box__content")))last_review_count = 0while len(reviews) < max_reviews:review_divs = driver.find_elements(By.CLASS_NAME, 'text-box__content')for review_div in review_divs[last_review_count:]:review = review_div.text.strip()reviews.append(review)if len(reviews) >= max_reviews:breakif len(reviews) >= max_reviews:breaklast_review_count = len(review_divs)# 模擬向下滾動頁面scroll_to_bottom(driver)# 等待新評論加載time.sleep(10) # 等待時間也可以根據實際情況調整,確保加載足夠的評論# 檢查是否有新評論加載new_review_divs = driver.find_elements(By.CLASS_NAME, 'text-box__content')if len(new_review_divs) == len(review_divs):break # 沒有新評論加載,退出循環finally:driver.quit()return reviews[:max_reviews]將評論輸出到excel中

def save_reviews_to_excel(reviews, filename='taptap.xlsx'):df = pd.DataFrame(reviews, columns=['comment'])df.to_excel(filename, index=False)main

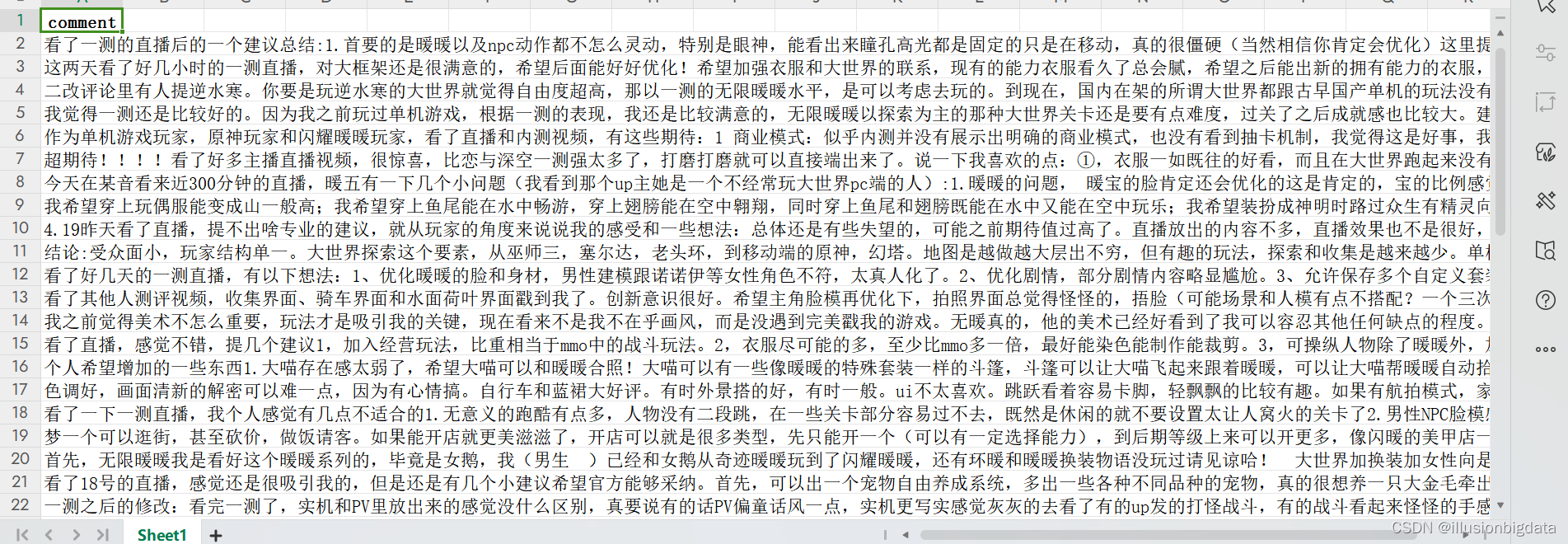

if __name__ == "__main__":url = "https://www.taptap.cn/app/247283/review"max_reviews = 50reviews = get_taptap_reviews(url, max_reviews)save_reviews_to_excel(reviews)查看輸出的結果

代碼匯總

import pandas as pd

import time

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as ECdef scroll_to_bottom(driver):# 使用 JavaScript 模擬滾動到頁面底部driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")def get_taptap_reviews(url, max_reviews=50):reviews = []driver = webdriver.Chrome() # 需要安裝 Chrome WebDriver,并將其路徑添加到系統環境變量中driver.get(url)try:# 等待評論加載完成WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CLASS_NAME, "text-box__content")))last_review_count = 0while len(reviews) < max_reviews:review_divs = driver.find_elements(By.CLASS_NAME, 'text-box__content')for review_div in review_divs[last_review_count:]:review = review_div.text.strip()reviews.append(review)if len(reviews) >= max_reviews:breakif len(reviews) >= max_reviews:breaklast_review_count = len(review_divs)# 模擬向下滾動頁面scroll_to_bottom(driver)# 等待新評論加載time.sleep(10) # 等待時間也可以根據實際情況調整,確保加載足夠的評論# 檢查是否有新評論加載new_review_divs = driver.find_elements(By.CLASS_NAME, 'text-box__content')if len(new_review_divs) == len(review_divs):break # 沒有新評論加載,退出循環finally:driver.quit()return reviews[:max_reviews]def save_reviews_to_excel(reviews, filename='taptap.xlsx'):df = pd.DataFrame(reviews, columns=['comment'])df.to_excel(filename, index=False)if __name__ == "__main__":url = "https://www.taptap.cn/app/247283/review"max_reviews = 50reviews = get_taptap_reviews(url, max_reviews)save_reviews_to_excel(reviews)

)

——chunk-parser)

)

計算機網絡)

)

)

第 6 章 樹和二叉樹(二叉樹的二叉線索存儲))

)

)

)