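Summary: This article describes how to start the Dameng database (DM8) with docker-compose. The configuration covers the container image, port mapping, data volume mounting, and the key environment variables (such as the character set and case sensitivity). It also covers some problems that may come up during startup.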

To start the Dameng database with docker-compose, you can use the following configuration:

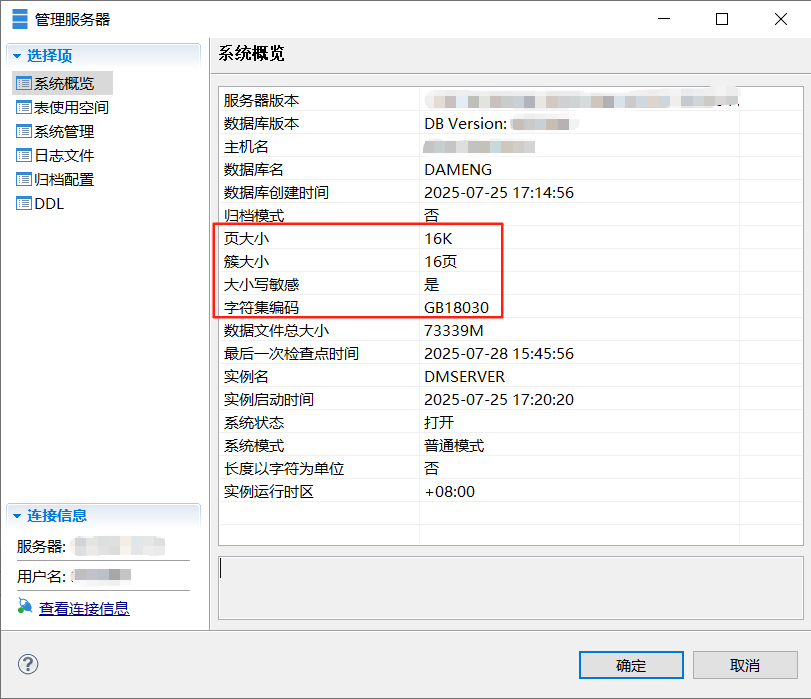

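```yaml
services:
  dm8:
    image: dm8_single:v8.1.2.128_ent_x86_64_ctm_pack4
    container_name: dm8
    restart: always
    privileged: true
    environment:
      LD_LIBRARY_PATH: /opt/dmdbms/bin
      PAGE_SIZE: 16 # page size (KB)
      EXTENT_SIZE: 32 # extent size (pages)
      LOG_SIZE: 1024 # redo log file size (MB)
      INSTANCE_NAME: dm8
      CASE_SENSITIVE: 1 # case sensitivity: 0 = case-insensitive, 1 = case-sensitive
      UNICODE_FLAG: 0 # character set: 0 = GB18030, 1 = UTF-8
      SYSDBA_PWD: SYSDBA001 # the password must be 9 to 48 characters long
    ports:
      - "5236:5236"
    volumes:
      - ./dm8:/opt/dmdbms/data
```

Image `image`: change it to your own image name and tag (`name:version`).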

Container name `container_name`: any name you like.

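Restart policy `restart`: `always` makes Docker restart the container whenever it exits (unless you stopped it manually) and start it again when the Docker daemon restarts.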

Privileged mode `privileged`: runs the container with superuser (extended) privileges. Avoid it in production environments.

In `environment`, pay particular attention to `CASE_SENSITIVE` (case sensitivity) and `UNICODE_FLAG` (character set encoding).

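These environment parameters cannot be changed after the instance has been initialized on first startup. Confirm your requirements and set them before the instance is created.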
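Because these values are fixed once the instance exists, it may be worth checking them before the first `docker-compose up`. Below is a minimal Python sketch that assumes the values are copied from the compose file's `environment` block. `describe_flags` is a hypothetical helper, not part of DM. The meaning of each value is taken from the comments in that compose file.

```python
# Hypothetical pre-flight check: decode the two settings that cannot be
# changed once the DM instance has been initialized.
from __future__ import annotations

# Meanings taken from the comments in the compose file.
CASE_SENSITIVE_MEANING = {0: "case-insensitive", 1: "case-sensitive"}
UNICODE_FLAG_MEANING = {0: "GB18030", 1: "UTF-8"}


def describe_flags(env: dict) -> tuple[str, str]:
    """Return (case mode, character set); reject values other than 0 or 1."""
    case = int(env["CASE_SENSITIVE"])  # int() also accepts "0"/"1" strings
    charset = int(env["UNICODE_FLAG"])
    if case not in CASE_SENSITIVE_MEANING:
        raise ValueError(f"CASE_SENSITIVE must be 0 or 1, got {case}")
    if charset not in UNICODE_FLAG_MEANING:
        raise ValueError(f"UNICODE_FLAG must be 0 or 1, got {charset}")
    return CASE_SENSITIVE_MEANING[case], UNICODE_FLAG_MEANING[charset]


if __name__ == "__main__":
    env = {"CASE_SENSITIVE": 1, "UNICODE_FLAG": 0}  # values from the compose file
    print(describe_flags(env))  # ('case-sensitive', 'GB18030')
```

If the printed pair is not what your application expects, change the compose file before the first start. Changing it afterwards has no effect on an existing instance.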

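The default account is SYSDBA and the default password is SYSDBA001. If SYSDBA_PWD is not set, the default password is used. The password must be 9 to 48 characters long, and passwords containing special characters may cause problems.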
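The password rules above can also be checked before the first start. `check_sysdba_pwd` is a hypothetical helper, not a DM tool. It encodes only the 9 to 48 character rule stated here. The article does not say which characters cause trouble, so the sketch assumes that any character other than an ASCII letter or digit counts as "special".

```python
# Hypothetical pre-flight check for SYSDBA_PWD, based only on the rules in
# this article (9 to 48 characters; special characters may cause problems).
from __future__ import annotations

import string

DEFAULT_SYSDBA_PWD = "SYSDBA001"  # used when SYSDBA_PWD is not set
# Assumption: anything outside ASCII letters and digits counts as "special".
SAFE_CHARS = set(string.ascii_letters + string.digits)


def check_sysdba_pwd(pwd: str | None) -> list[str]:
    """Return a list of problems with the password; an empty list means none found."""
    if pwd is None:
        pwd = DEFAULT_SYSDBA_PWD
    problems = []
    if not 9 <= len(pwd) <= 48:
        problems.append(f"length {len(pwd)} is outside 9..48")
    special = sorted(set(pwd) - SAFE_CHARS)
    if special:
        problems.append("contains special characters: " + "".join(special))
    return problems


if __name__ == "__main__":
    print(check_sysdba_pwd("SYSDBA001"))  # []
    print(check_sysdba_pwd("short"))      # ['length 5 is outside 9..48']
```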

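Ports `ports`: `5236:5236` maps port 5236 on the host to port 5236 in the container, which is the port DM listens on (`PORT_NUM = 5236` in dm.ini).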

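Data storage location `volumes`: `host path:container path`. Here the host directory `./dm8` is mounted at `/opt/dmdbms/data` inside the container, so the database files are kept on the host.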
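Both settings use Compose's short `HOST:CONTAINER` form: the left side is on the host, the right side inside the container. The Python sketch below does two things:

- It splits the two strings from the compose file.
- Once the container is running, it polls the host port until that port accepts TCP connections.

The helper names are hypothetical. Only plain `HOST:CONTAINER` ports and POSIX paths (optionally followed by a mode such as `:ro`) are handled. An accepted connection only shows that something is listening on the host port; Docker's port proxy may accept connections before DM is ready. Treat it as a coarse check and confirm with the container logs.

```python
# Hypothetical helpers for the short-syntax ports/volumes entries and a
# simple TCP readiness poll against the host side of the port mapping.
from __future__ import annotations

import socket
import time


def parse_port(mapping: str) -> tuple[int, int]:
    """Split a "HOST:CONTAINER" port mapping such as "5236:5236"."""
    parts = mapping.split(":")
    if len(parts) != 2:
        raise ValueError(f"expected HOST:CONTAINER, got {mapping!r}")
    return int(parts[0]), int(parts[1])


def parse_volume(mapping: str) -> tuple[str, str]:
    """Split a "HOST_PATH:CONTAINER_PATH[:MODE]" bind mount (POSIX paths only)."""
    parts = mapping.split(":")
    if len(parts) not in (2, 3):
        raise ValueError(f"expected HOST_PATH:CONTAINER_PATH, got {mapping!r}")
    return parts[0], parts[1]


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True  # something accepted the connection
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, `wait_for_port("127.0.0.1", parse_port("5236:5236")[0], timeout=120)` waits up to two minutes for host port 5236. If you map `"15236:5236"` instead, clients on the host connect to 15236 while DM still listens on 5236 inside the container.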

Run the following command to start it:

```shell
docker-compose up -d
```

You can view the startup logs with:

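```shell
docker logs <container-id>
```

Because `container_name` is set to `dm8`, `docker logs dm8` works as well.

Problems that may occur during startup: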

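If the container log reports that the initialization file was not found and the default configuration is being used, and the database still has problems after startup, you can try specifying an initialization file yourself.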
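To find that message without scrolling through the whole log, you can filter the log from a script. Below is a minimal Python sketch that shells out to the real `docker logs` command and its `--tail` option. The helper names are hypothetical. The keyword to search for is up to you, since this article does not quote the exact log text.

```python
# Hypothetical helpers around the `docker logs` CLI for scanning startup logs.
from __future__ import annotations

import subprocess


def build_logs_cmd(container: str, tail: int | None = None) -> list[str]:
    """Build the `docker logs` command line for a container name or ID."""
    cmd = ["docker", "logs"]
    if tail is not None:
        cmd += ["--tail", str(tail)]  # only the last N lines
    cmd.append(container)
    return cmd


def fetch_logs(container: str, tail: int | None = None) -> str:
    """Run `docker logs` and return stdout followed by stderr.

    docker logs replays the container's stdout and stderr on separate streams,
    so the relative order of lines across the two streams is not preserved.
    check=True raises CalledProcessError if docker fails (e.g. unknown container).
    """
    result = subprocess.run(
        build_logs_cmd(container, tail), capture_output=True, text=True, check=True
    )
    return result.stdout + result.stderr


def find_lines(log_text: str, keyword: str) -> list[str]:
    """Return the log lines that contain keyword, ignoring case."""
    needle = keyword.lower()
    return [line for line in log_text.splitlines() if needle in line.lower()]
```

For example, `find_lines(fetch_logs("dm8", tail=200), "dm.ini")` returns those of the last 200 log lines that mention `dm.ini`. Here `dm8` is the `container_name` from the compose file, and `dm.ini` is only a guess at a useful keyword.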

Create a file named `dm.ini` and paste in the following content:

```ini
#DaMeng Database Server Configuration file
#this is comments

#file location of dm.ctl
CTL_PATH = /opt/dmdbms/data/DAMENG/dm.ctl #ctl file path
CTL_BAK_PATH = /opt/dmdbms/data/DAMENG/ctl_bak #dm.ctl backup path
CTL_BAK_NUM = 10 #backup number of dm.ctl, allowed to keep one more backup file besides specified number.
SYSTEM_PATH = /opt/dmdbms/data/DAMENG #system path
CONFIG_PATH = /opt/dmdbms/data/DAMENG #config path
TEMP_PATH = /opt/dmdbms/data/DAMENG #temporary file path
BAK_PATH = /opt/dmdbms/data/DAMENG/bak #backup file path
DFS_PATH = $/DAMENG #path of db_file in dfs

#instance name
INSTANCE_NAME = DMSERVER #Instance name

#memory pool and buffer
MAX_OS_MEMORY = 100 #Maximum Percent Of OS Memory
MEMORY_POOL = 500 #Memory Pool Size In Megabyte
MEMORY_N_POOLS = 1 #Number of Memory Pool
MEMORY_TARGET = 15000 #Memory Share Pool Target Size In Megabyte
MEMORY_EXTENT_SIZE = 32 #Memory Extent Size In Megabyte
MEMORY_LEAK_CHECK = 0 #Memory Pool Leak Checking Flag
MEMORY_MAGIC_CHECK = 1 #Memory Pool Magic Checking Flag
HUGEPAGE_THRESHOLD = 16 #IF not zero, try using hugepage if allocating size >= thresold * 2M
MEMORY_BAK_POOL = 4 #Memory Backup Pool Size In Megabyte
HUGE_MEMORY_PERCENTAGE = 50 #Maximum percent of HUGE buffer that can be allocated to work as common memory pool
HUGE_BUFFER = 80 #Initial Huge Buffer Size In Megabytes
HUGE_BUFFER_POOLS = 4 #number of Huge buffer pools
BUFFER = 1000 #Initial System Buffer Size In Megabytes
BUFFER_POOLS = 19 #number of buffer pools
FAST_POOL_PAGES = 3000 #number of pages for fast pool
FAST_ROLL_PAGES = 1000 #number of pages for fast roll pages
KEEP = 8 #system KEEP buffer size in Megabytes
RECYCLE = 300 #system RECYCLE buffer size in Megabytes
RECYCLE_POOLS = 19 #Number of recycle buffer pools
ROLLSEG = 1 #system ROLLSEG buffer size in Megabytes
ROLLSEG_POOLS = 19 #Number of rollseg buffer pools
MULTI_PAGE_GET_NUM = 1 #Maximum number of pages for each read of buffer
PRELOAD_SCAN_NUM = 0 #The number of pages scanned continuously to start preload task
PRELOAD_EXTENT_NUM = 0 #The number of clusters preloaded for the first time
SORT_BUF_SIZE = 20 #maximum sort buffer size in Megabytes
SORT_BLK_SIZE = 1 #maximum sort blk size in Megabytes
SORT_BUF_GLOBAL_SIZE = 1000 #maximum global sort buffer size in Megabytes
SORT_FLAG = 0 #choose method of sort
HAGR_HASH_SIZE = 100000 #hash table size for hagr
HJ_BUF_GLOBAL_SIZE = 5000 #maximum hash buffer size for all hash join in Megabytes
HJ_BUF_SIZE = 500 #maximum hash buffer size for single hash join in Megabytes
HJ_BLK_SIZE = 2 #hash buffer size allocated each time for hash join in Megabytes
HAGR_BUF_GLOBAL_SIZE = 5000 #maximum buffer size for all hagr in Megabytes
HAGR_BUF_SIZE = 500 #maximum buffer size for single hagr in Megabytes
HAGR_BLK_SIZE = 2 #buffer size allocated each time for hagr in Megabytes
MTAB_MEM_SIZE = 8 #memory table size in Kilobytes
FTAB_MEM_SIZE = 0 #file table package size in Kilobytes
MMT_GLOBAL_SIZE = 4000 #memory map table global size in megabytes
MMT_SIZE = 0 #memory map table size in megabytes
MMT_FLAG = 1 #ways of storing bdta data in memory map table
DICT_BUF_SIZE = 50 #dictionary buffer size in Megabytes
HFS_CACHE_SIZE = 160 #hfs cache size in Megabytes, used in huge horizon table for insert, update,delete
VM_STACK_SIZE = 256 #VM stack size in Kilobytes
VM_POOL_SIZE = 64 #VM pool size in Kilobytes
VM_POOL_TARGET = 16384 #VM pool target size in Kilobytes
SESS_POOL_SIZE = 64 #session pool size in Kilobytes
SESS_POOL_TARGET = 16384 #session pool target size in Kilobytes
RT_HEAP_TARGET = 8192 #runtime heap target size in Kilobytes
VM_MEM_HEAP = 0 #Whether to allocate memory to VM from HEAP
RFIL_RECV_BUF_SIZE = 16 #redo file recover buffer size in Megabytes
COLDATA_POOL_SIZE = 0 #coldata pool size for each worker group
HAGR_DISTINCT_HASH_TABLE_SIZE = 10000 #Size of hagr distinct hash table
CNNTB_HASH_TABLE_SIZE = 100 #Size of hash table in connect-by operation
GLOBAL_RTREE_BUF_SIZE = 100 #The total size of buffer for rtree
SINGLE_RTREE_BUF_SIZE = 10 #The size of buffer for single rtree
SORT_OPT_SIZE = 0 #once max memory size of radix sort assist count array
TSORT_OPT = 1 #minimizing memory allocation during small rowset sorting if possible
DFS_BUF_FLUSH_OPT = 0 #Whether to flush buffer page in opt mode for DFS storage
BIND_PLN_PERCENT = 30 #Maximum percent of bind plan in plan cache pool
FBACK_HASH_SIZE = 10000 #hash table size for flashback function
XBOX_MEM_TARGET = 1024 #Memory target size in Megabyte of XBOX system

#thread
WORKER_THREADS = 16 #Number Of Worker Threads
TASK_THREADS = 16 #Number Of Task Threads
UTHR_FLAG = 0 #User Thread Flag
FAST_RW_LOCK = 1 #Fast Read Write Lock flag
SPIN_TIME = 4000 #Spin Time For Threads In Microseconds
WORK_THRD_STACK_SIZE = 8192 #Worker Thread Stack Size In Kilobytes
WORK_THRD_RESERVE_SIZE = 200 #Worker Thread Reserve Stack Size In Kilobytes
WORKER_CPU_PERCENT = 0 #Percent of CPU number special for worker thread
NESTED_C_STYLE_COMMENT = 0 #flag for C stype nested comment

#query
USE_PLN_POOL = 1 #Query Plan Reuse Mode, 0: Forbidden; 1:strictly reuse, 2:parsing reuse, 3:mixed parsing reuse
DYN_SQL_CAN_CACHE = 1 #Dynamic SQL cache mode. 0: Forbidden; 1: Allowed if the USE_PLN_POOL is non-zero;
VPD_CAN_CACHE = 0 #VPD SQL cache mode. 0: Forbidden; 1: Allowed if the USE_PLN_POOL is non-zero;
RS_CAN_CACHE = 0 #Resultset cache mode. 0: Forbidden; 1: Allowed only if the USE_PLN_POOL is non-zero;
RS_CACHE_TABLES = #Tables allowed to enable result set cache
RS_CACHE_MIN_TIME = 0 #Least time for resultset to be cached
RS_BDTA_FLAG = 0 #Resultset mode. 0: row; 2: bdta;
RS_BDTA_BUF_SIZE = 32 #Maximum size of message in Kilobytes for BDTA cursor, it's valid only if RS_BDTA_FLAG is set to 2
RS_TUPLE_NUM_LIMIT = 2000 #Maximum number for resultset to be cached
RESULT_SET_LIMIT = 10000 #Maximum Number Of cached Resultsets
RESULT_SET_FOR_QUERY = 0 #Whether to generate result set for non-query statement
SESSION_RESULT_SET_LIMIT = 10000 #Maximum number of cached result sets for each session, 0 means unlimited
BUILD_FORWARD_RS = 0 #Whether to generate result set for forward only cursor
MAX_OPT_N_TABLES = 6 #Maximum Number Of Tables For Query Optimization
MAX_N_GRP_PUSH_DOWN = 5 #Maximum Number Of Rels For Group push down Optimization
CNNTB_MAX_LEVEL = 20000 #Maximum Level Of Hierarchical Query
CTE_MAXRECURSION = 100 #Maximum recursive Level Of Common Expression Table
CTE_OPT_FLAG = 1 #Optimize recursive with, 0: false, 1: convert refed subquery to invocation
BATCH_PARAM_OPT = 0 #optimize flag for DML with batch binded params
CLT_CONST_TO_PARAM = 0 #Whether to convert constant to parameter
LIKE_OPT_FLAG = 127 #the optimized flag of LIKE expression
FILTER_PUSH_DOWN = 0 #whether push down filter to base table
USE_MCLCT = 2 #mclct use flag for replace mgat
PHF_NTTS_OPT = 1 #phf ntts opt flag
MPP_MOTION_SYNC = 200 #mpp motion sync check number
UPD_DEL_OPT = 2 #update&delete opt flag, 0: false, 1: opt, 2: opt & ntts opt
ENABLE_INJECT_HINT = 0 #enable inject hint
FETCH_PACKAGE_SIZE = 512 #command fetch package size
UPD_QRY_LOCK_MODE = 0 #lock mode of FOR UPDATE query
ENABLE_DIST_IN_SUBQUERY_OPT = 0 #Whether to enable in-subquery optimization
MAX_OPT_N_OR_BEXPS = 7 #maximum number of OR bool expressions for query optimization
USE_HAGR_FLAG = 0 #Whether to use HAGR operator when can't use SAGR operator
DTABLE_PULLUP_FLAG = 1 #the flag of pulling up derived table
VIEW_PULLUP_FLAG = 0 #the flag of pulling up view
GROUP_OPT_FLAG = 52 #the flag of opt group
FROM_OPT_FLAG = 0 #the flag of opt from
HAGR_PARALLEL_OPT_FLAG = 0 #the flag of opt hagr in mpp or parallel
HAGR_DISTINCT_OPT_FLAG = 2 #the flag of opt hagr distinct in mpp
REFED_EXISTS_OPT_FLAG = 1 #Whether to optimize correlated exists-subquery into non-correlated in-subquery
REFED_OPS_SUBQUERY_OPT_FLAG = 0 #Whether to optimize correlated op all/some/all-subquery into exists-subquery
HASH_PLL_OPT_FLAG = 0 #the flag of cutting partitioned table when used hash join
PARTIAL_JOIN_EVALUATION_FLAG = 1 #Whether to convert join type when upper operator is DISTINCT
USE_FK_REMOVE_TABLES_FLAG = 1 #Whether to remove redundant join by taking advantage of foreign key constraint
USE_FJ_REMOVE_TABLE_FLAG = 0 #Whether to remove redundant join by taking advantage of filter joining
SLCT_ERR_PROCESS_FLAG = 0 #How to handle error when processing single row
MPP_HASH_LR_RATE = 10 #The ratio of left child's cost to right child's cost of hash join in MPP environment that can influence the execution plan
LPQ_HASH_LR_RATE = 30 #The ratio of left child's cost to right child's cost of hash join in LPQ environment that can influence the execution plan
USE_HTAB = 1 #Whether to use HTAB operator for the whole plan
SEL_ITEM_HTAB_FLAG = 0 #Whether to use HTAB operator for correlated subquery in select items
OR_CVT_HTAB_FLAG = 1 #Whether to use HTAB operator to optimizer or-expression
ENHANCED_SUBQ_MERGING = 0 #Whether to use merging subquery opt
CASE_WHEN_CVT_IFUN = 9 #Flag of converting subquery in case-when expression to IF operator
OR_NBEXP_CVT_CASE_WHEN_FLAG = 0 #Whether to convert or-expression to case-when expression
NONCONST_OR_CVT_IN_LST_FLAG = 0 #Whether to convert nonconst or-expression to in lst expression
OUTER_CVT_INNER_PULL_UP_COND_FLAG = 3 #Whether to pull up join condition when outer join converts to inner join
OPT_OR_FOR_HUGE_TABLE_FLAG = 0 #Whether to use HFSEK to optimize or-expression for HUGE table
ORDER_BY_NULLS_FLAG = 0 #Whether to place NULL values to the end of the result set when in ascending order
SUBQ_CVT_SPL_FLAG = 1 #Flag of indicating how to convert correlated subquery
ENABLE_RQ_TO_SPL = 1 #Whether to convert correlated subquery to SPOOL
MULTI_IN_CVT_EXISTS = 0 #Whether to convert multi-column-in subquery to exists subquery
PRJT_REPLACE_NPAR = 1 #Whether to replace NPAR tree in NSEL after projection
ENABLE_RQ_TO_INV = 0 #Whether to convert correlated subquery to temporary function
SUBQ_EXP_CVT_FLAG = 0 #whether convert refered subquery exp to non-refered subquery exp
USE_REFER_TAB_ONLY = 0 #Whether to pull down correlated table only when dealing with correlated subquery
REFED_SUBQ_CROSS_FLAG = 1 #Whether to replace hash join with cross join for correlated subquery
IN_LIST_AS_JOIN_KEY = 0 #Whether to use in-list expression as join key
OUTER_JOIN_FLATING_FLAG = 0 #Flag of indicating whether outer join will be flattened
TOP_ORDER_OPT_FLAG = 0 #The flag of optimizing the query with the top cluase and the order by clause
TOP_ORDER_ESTIMATE_CARD = 300 #The estimated card of leaf node when optimize the query with the top cluase and the order by clause
PLACE_GROUP_BY_FLAG = 0 #The flag of optimizing the query with group_by and sfun by clause
TOP_DIS_HASH_FLAG = 1 #Flag of disable hash join in TOP-N query
ENABLE_RQ_TO_NONREF_SPL = 0 #Whether to convert correlated query to non-correlated query
ENABLE_CHOOSE_BY_ESTIMATE_FLAG = 0 #Whether to choose different plan by estimating
OPTIMIZER_MODE = 1 #Optimizer_mode
NEW_MOTION = 0 #New Motion
LDIS_NEW_FOLD_FUN = 0 #ldis use different fold fun with mdis
DYNAMIC_CALC_NODES = 0 #different nodes of npln use different nubmer of calc sizes/threads
OPTIMIZER_MAX_PERM = 7200 #Optimizer_max permutations
ENABLE_INDEX_FILTER = 0 #enable index filter
OPTIMIZER_DYNAMIC_SAMPLING = 0 #Dynamic sampling level
TABLE_STAT_FLAG = 0 #How to use stat of table
AUTO_STAT_OBJ = 0 #Flag of automatically collecting statistics and recording DML changing rows
MONITOR_MODIFICATIONS = 0 #Flag of monitor statistics and recording DML modifications
MON_CHECK_INTERVAL = 3600 #Server flush monitor modifications data to disk interval
NONREFED_SUBQUERY_AS_CONST = 0 #Whether to convert non-correlated subquery to const
HASH_CMP_OPT_FLAG = 0 #Flag of operators that enable optimization with static hash table
OUTER_OPT_NLO_FLAG = 0 #Flag of enable index join for those whose right child is not base table
DISTINCT_USE_INDEX_SKIP = 2 #Distinct whether to use index skip scan
USE_INDEX_SKIP_SCAN = 0 #Whether to use index skip scan
INDEX_SKIP_SCAN_RATE = 0.0025 #Rate in index skip scan
SPEED_SEMI_JOIN_PLAN = 9 #Flag of speeding up the generating process of semi join plan
COMPLEX_VIEW_MERGING = 0 #Flag of merging complex view into query without complex view
HLSM_FLAG = 1 #Choose one method to realize hlsm operator
DEL_HP_OPT_FLAG = 4 #Optimize delete for horization partition table
OPTIMIZER_OR_NBEXP = 0 #Flag of or-expression optimization method
NPLN_OR_MAX_NODE = 20 #Max number of or-expression on join condition
CNNTB_OPT_FLAG = 0 #Optimize hierarchical query
ADAPTIVE_NPLN_FLAG = 3 #Adaptive npln
MULTI_UPD_OPT_FLAG = 0 #Optimize multi column update
MULTI_UPD_MAX_COL_NUM = 128 #Max value of column counts when optimize multi column update
ENHANCE_BIND_PEEKING = 0 #Enhanced bind peeking
NBEXP_OPT_FLAG = 7 #Whether to enable optimization for bool expressions
HAGR_HASH_ALGORITHM_FLAG = 0 #HAGR hash algorithm choice
DIST_HASH_ALGORITHM_FLAG = 0 #Distinct hash algorithm choice
UNPIVOT_OPT_FLAG = 0 #Optimize UNPIVOT operator
VIEW_FILTER_MERGING = 138 #Flag of merging view filter
ENABLE_PARTITION_WISE_OPT = 0 #whether enable partition-wise optimization
OPT_MEM_CHECK = 0 #reduce search space when out of memory
ENABLE_JOIN_FACTORIZATION = 0 #Whether to enable join factorization
EXPLAIN_SHOW_FACTOR = 1 #factor of explain
ERROR_COMPATIBLE_FLAG = 0 #enable/disable specified errors to be compatible with previous version
ENABLE_NEST_LOOP_JOIN_CACHE = 0 #whether enable cache temporary result of nest loop join child
ENABLE_TABLE_EXP_REF_FLAG = 1 #Whether allow table expression to reference brother tables
VIEW_OPT_FLAG = 1 #flag of optimize view
SORT_ADAPTIVE_FLAG = 0 #sort buf adaptive
DPC_OPT_FLAG = 16383 #optimizer control for DPC
DPC_SYNC_STEP = 16 #dpc motion sync check step
DPC_SYNC_TOTAL = 0 #dpc motion sync check total
XBOX_DUMP_THRESHOLD = 0 #The xbox_sys mem used threshold of dump xbox_msg
STMT_XBOX_REUSE = 1 #Xbox resuse flag on statement
XBOX_SHORT_MSG_SIZE = 1024 #The xbox_sys short message threshold of dump xbox_msg
MAX_HEAP_SIZE = 0 #Maximum heap size in megabyte allowed to use during analysis phase

#checkpoint
CKPT_RLOG_SIZE = 128 #Checkpoint Rlog Size, 0: Ignore; else: Generate With Redo Log Size
CKPT_DIRTY_PAGES = 0 #Checkpoint Dirty Pages, 0: Ignore; else: Generate With Dirty Pages
CKPT_INTERVAL = 180 #Checkpoint Interval In Seconds
CKPT_FLUSH_RATE = 5.00 #Checkpoint Flush Rate(0.0-100.0)
CKPT_FLUSH_PAGES = 1000 #Minimum number of flushed pages for checkpoints
CKPT_WAIT_PAGES = 1024 #Maximum number of pages flushed for checkpoints
FORCE_FLUSH_PAGES = 8 #number of periodic flushed pages

#IO
DIRECT_IO = 0 #Flag For Io Mode(Non-Windows Only), 0: Using File System Cache; 1: Without Using File System Cache
IO_THR_GROUPS = 8 #The Number Of Io Thread Groups(Non-Windows Only)
HIO_THR_GROUPS = 2 #The Number Of Huge Io Thread Groups(Non-Windows Only)
FIL_CHECK_INTERVAL = 0 #Check file interval in Second,0 means no_check(Non-Windows Only)
FAST_EXTEND_WITH_DS = 1 #How To Extend File's Size (Non-Windows Only), 0: Extend File With Hole; 1: Extend File With Disk Space

#database
MAX_SESSIONS = 10000 #Maximum number of concurrent sessions
MAX_CONCURRENT_TRX = 0 #Maximum number of concurrent transactions
CONCURRENT_TRX_MODE = 0 #Concurrent transactions mode
CONCURRENT_DELAY = 16 #Delay time in seconds for concurrent control
TRX_VIEW_SIZE = 512 #The buffer size of local transaction ids in TRX_VIEW
TRX_VIEW_MODE = 1 #The transaction view mode, 0: Active ids snap; 1: Recycled id array
TRX_CMTARR_SIZE = 10 #The size of transaction commitment status array in 1M
MAX_SESSION_STATEMENT = 10000 #Maximum number of statement handles for each session
MAX_SESSION_MEMORY = 0 #Maximum memory(In Megabytes) a single session can use
MAX_CONCURRENT_OLAP_QUERY = 0 #Maximum number of concurrent OLAP queries
BIG_TABLE_THRESHHOLD = 1000 #Threshhold value of a big table in 10k
MAX_EP_SITES = 64 #Maximum number of EP sites for MPP
PORT_NUM = 5236 #Port number on which the database server will listen
LISTEN_IP = #IP address from which the database server can accept
FAST_LOGIN = 0 #Whether to log information without login
DDL_AUTO_COMMIT = 1 #ddl auto commit mode, 0: not auto commit; 1: auto commit
COMPRESS_MODE = 0 #Default Compress Mode For Tables That Users Created, 0: Not Compress; 1: Compress
PK_WITH_CLUSTER = 0 #Default Flag For Primary Key With Cluster, 0: Non-Cluster; 1: Cluster
EXPR_N_LEVEL = 200 #Maximum nesting levels for expression
N_PARSE_LEVEL = 100 #Maximum nesting levels for parsing object
MAX_SQL_LEVEL = 500 #Maximum nesting levels of VM stack frame for sql
BDTA_SIZE = 300 #batch data processing size.SIZE OF BDTA(1-10000)
OLAP_FLAG = 2 #OLAP FLAG, 1 means enable olap
JOIN_HASH_SIZE = 500000 #the hash table size for hash join
HFILES_OPENED = 256 #maximum number of files can be opened at the same time for huge table
ISO_IGNORE = 0 #ignore isolation level flag
TEMP_SIZE = 10 #temporary file size in Megabytes
TEMP_SPACE_LIMIT = 0 #temp space limit in megabytes
FILE_TRACE = 0 #Whether to log operations of database files
COMM_TRACE = 0 #Whether to log warning information of communication
ERROR_TRACE = 0 #Whether to log error information, 1: NPAR ERROR
CACHE_POOL_SIZE = 100 #SQL buffer size in megabytes
PLN_DICT_HASH_THRESHOLD = 20 #Threshold in megabytes for plan dictionary hash table creating
STAT_COLLECT_SIZE = 10000 #minimum collect size in rows for statistics
STAT_ALL = 0 #if collect all the sub-tables of a partition table
PHC_MODE_ENFORCE = 0 #join mode
ENABLE_HASH_JOIN = 1 #enable hash join
ENABLE_INDEX_JOIN = 1 #enable index join
ENABLE_MERGE_JOIN = 1 #enable merge join
MPP_INDEX_JOIN_OPT_FLAG = 0 #enhance index inner join in mpp
MPP_NLI_OPT_FLAG = 0 #enhance nest loop inner join in mpp
MAX_PARALLEL_DEGREE = 1 #Maximum degree of parallel query
PARALLEL_POLICY = 0 #Parallel policy
PARALLEL_THRD_NUM = 10 #Thread number for parallel task
PARALLEL_MODE_COMMON_DEGREE = 1 #the common degree of parallel query for parallel-mode
PUSH_SUBQ = 0 #Whether to push down semi join for correlated subquery
OPTIMIZER_AGGR_GROUPBY_ELIM = 1 #Whether to attemp to eliminate group-by aggregations
UPD_TAB_INFO = 0 #Whether to update table info when startup
ENABLE_IN_VALUE_LIST_OPT = 6 #Flag of optimization methods for in-list expression
ENHANCED_BEXP_TRANS_GEN = 3 #Whether to enable enhanced transitive closure of boolean expressions
ENABLE_DIST_VIEW_UPDATE = 0 #whether view with distinct can be updated
STAR_TRANSFORMATION_ENABLED = 0 #Whether to enable star transformation for star join queries
MONITOR_INDEX_FLAG = 0 #monitor index flag
AUTO_COMPILE_FLAG = 1 #Whether to compile the invalid objects when loading
RAISE_CASE_NOT_FOUND = 0 #Whether raise CASE_NOT_FOUND exception for no case item matched
FIRST_ROWS = 100 #maximum number of rows when first returned to clients
LIST_TABLE = 0 #Whether to convert tables to LIST tables when created
ENABLE_SPACELIMIT_CHECK = 1 #flag for the space limit check, 0: disable 1: enable
BUILD_VERTICAL_PK_BTREE = 0 #Whether to build physical B-tree for primary key on huge tables
BDTA_PACKAGE_COMPRESS = 0 #Whether to compress BDTA packages
HFINS_PARALLEL_FLAG = 0 #Flag of parallel policy for inserting on huge table
HFINS_MAX_THRD_NUM = 100 #Maximum number of parallel threads that responsible for inserting on huge table
LINK_CONN_KEEP_TIME = 15 #Max idle time in minute for DBLINK before being closed
DETERMIN_CACHE_SIZE = 5 #deterministic function results cache size(M)
NTYPE_MAX_OBJ_NUM = 1000000 #Maximum number of objects and strings in composite data type
CTAB_SEL_WITH_CONS = 0 #Whether to build constraints when creating table by query
HLDR_BUF_SIZE = 8 #HUGE table fast loader buffer size in Megabytes
HLDR_BUF_TOTAL_SIZE = 4294967294 #HUGE table fast loader buffer total size in Megabytes
HLDR_REPAIR_FLAG = 0 #Flag of repairing huge table after exception, 0: NO 1: YES
HLDR_FORCE_COLUMN_STORAGE = 1 #Whether force column storage for last section data, 0: NO 1: YES
HLDR_FORCE_COLUMN_STORAGE_PERCENT = 80 #Minumun percent of unfully section data for huge force column storage
HLDR_HOLD_RATE = 1.50 #THE minimum rate to hold hldr of column number(1-65535)
HLDR_MAX_RATE = 2 #THE minimum rate to create hldr of column number(2-65535)
HUGE_ACID = 0 #Flag of concurrent mechanism for HUGE tables
HUGE_STAT_MODE = 2 #Flag of default stat mode when create huge table, 0:NONE 1:NORMAL 2:ASYNCHRONOUS
HFS_CHECK_SUM = 1 #Whether to check sum val for hfs data
HBUF_DATA_MODE = 0 #Whether to uncompress and decrypt data before read into HUGE buffer
DBLINK_OPT_FLAG = 509 #optimize dblink query flag
ELOG_REPORT_LINK_SQL = 0 #Whether to write the SQLs that sent to remote database by DBLINKs into error log file
DBLINK_LOB_LEN = 8 #BLOB/TEXT buffer size(KB) for dblink
FILL_COL_DESC_FLAG = 0 #Whether to return columns description while database returns results
BTR_SPLIT_MODE = 0 #Split mode for BTREE leaf, 0: split half and half, 1: split at insert point
TS_RESERVED_EXTENTS = 64 #Number of reserved extents for each tablespace when startup
TS_SAFE_FREE_EXTENTS = 512 #Number of free extents which considered as safe value for each tablespace
TS_MAX_ID = 8192 #Maximum ID value for tablespaces in database
TS_FIL_MAX_ID = 255 #Maximum ID value for files in tablespace
DECIMAL_FIX_STORAGE = 0 #Whether convert decimal data to fixed length storage
SAVEPOINT_LIMIT = 512 #The upper limit of savepoint in a transaction
SQL_SAFE_UPDATE_ROWS = 0 #Maximum rows can be effected in an update&delete statement
ENABLE_HUGE_SECIND = 1 #Whether support huge second index, 0: disable, 1: enable
TRXID_UNCOVERED = 0 #Whether disable scanning 2nd index only when pseudo column trxid used, 0: disable, 1: enable
LOB_MAX_INROW_LEN = 900 #Max lob data inrow len
RS_PRE_FETCH = 0 #Whether enable result pre-fetch
DFS_PAGE_FLUSH_VALIDATE = 1 #Whether enable validate page when flush in DDFS
GEN_SQL_MEM_RECLAIM = 1 #Whether reclaim memory space after generating each SQL's plan
TIMER_TRIG_CHECK_INTERVAL = 60 #Server check timer trigger interval
DFS_HUGE_SUPPORT = 1 #Whether support huge table operation in DDFS
INNER_INDEX_DDL_SHOW = 1 #Whether to show inner index ddl.
HP_STAT_SAMPLE_COUNT = 50 #Max sample count when stating on horizon partitions
MAX_SEC_INDEX_SIZE = 16384 #Maximum size of second index
ENHANCE_RECLAIM = 1 #Whether enhance class instances
ENABLE_PMEM = 0 #Whether allow to use persistent memory
HP_TAB_COUNT_PER_BP = 1 #hash partition count per BP when use DEFAULT
SQC_GI_NUM_PER_TAB = 1 #sqc_gi_num_per_tab
HP_DEF_LOCK_MODE = 0 #Default lock mode for partition table. 0:lock root, 1: lock partitions
CODE_CONVERSE_MODE = 1 #judge how dblink do with incomplete str bytes, 1 represents report err, 0 represents discard incomplete bytes
DBLINK_USER_AS_SCHEMA = 1 #Whether use login name as default schema name for dblink
CTAB_MUST_PART = 0 #Whether to create partition user table
CTAB_WITH_LONG_ROW = 0 #Default Flag of using long row for create table
TMP_DEL_OPT = 1 #delete opt flag for temporary table, 0: false, 1: opt
LOGIN_FAIL_TRIGGER = 0 #Whether support trigger when login failed
INDEX_PARALLEL_FILL = 0 #Enable index parallel fill rows
CIND_CHECK_DUP = 0 #Check index and unique constraint duplicates, 0: index same type and key is forbiden and ignore check unique constraint; 1: index same key is forbiden and check unique constraint

#pre-load
LOAD_TABLE = #need to pre-load table
LOAD_HTABLE = #need to pre-load htable

#client cache
CLT_CACHE_TABLES = #Tables that can be cached in client

#redo log
RLOG_BUF_SIZE = 1024 #The Number Of Log Pages In One Log Buffer
RLOG_POOL_SIZE = 256 #Redo Log Pool Size In Megabyte
RLOG_PARALLEL_ENABLE = 0 #Whether to enable database to write redo logs in parallel mode
RLOG_IGNORE_TABLE_SET = 1 #Whether ignore table set
RLOG_APPEND_LOGIC = 0 #Type of logic records in redo logs
RLOG_APPEND_SYSTAB_LOGIC = 0 #Whether to write logic records of system tables in redo logs when RLOG_APPEND_LOGIC is set as 1
RLOG_SAFE_SPACE = 128 #Free redo log size in megabytes that can be considered as a save value
RLOG_RESERVE_THRESHOLD = 0 #Redo subsystem try to keep the used space of online redo less than this target
RLOG_RESERVE_SIZE = 4096 #Number of reserved redo log pages for each operation
RLOG_SEND_APPLY_MON = 64 #Monitor recently send or apply rlog_buf info
RLOG_COMPRESS_LEVEL = 0 #The redo compress level,value in [0,10],0:do not compress
RLOG_ENC_CMPR_THREAD = 4 #The redo log thread number of encrypt and compress task,value in [1,64],default 4
RLOG_PKG_SEND_ECPR_ONLY = 0 #Only send encrypted or compressed data to standby instance without original data
DFS_RLOG_SEND_POLICY = 1 #DFS rlog send policy, 0: sync; 1:async
RLOG_HASH_NAME = #The name of the hash algorithm used for Redo log
DPC_LOG_INTERVAL = 0 #Only MP is valid, control MP broadcasts generating logs of specific DW type regularly, value range (0,1440), the unit is in minutes, and the value of 0 means not to generate
RLOG_PKG_SEND_NUM = 1 #Need wait standby database's response message after the number of rlog packages were sent

#redo redos
REDO_PWR_OPT = 1 #Whether to enable PWR optimization when system restarted after failure
REDO_IGNORE_DB_VERSION = 0 #Whether to check database version while database is redoing logs
REDO_BUF_SIZE = 64 #The max buffer size of rlog redo In Megabyte
REDOS_BUF_SIZE = 1024 #The max buffer size of rlog redo for standby In Megabyte
REDOS_MAX_DELAY = 1800 #The permitted max delay for one rlog buf redo on standby In Second
REDOS_BUF_NUM = 4096 #The max apply rlog buffer num of standby
REDOS_PRE_LOAD = 0 #Number of pre-load apply rlog buffer for standby
REDOS_PARALLEL_NUM = 1 #The parallel redo thread num
REDOS_ENABLE_SELECT = 1 #Enable select for standby
REDOS_FILE_PATH_POLICY = 0 #Data files' path policy when standby instance applies CREATE TABLESPACE redo log. 0:use the same file name under system path, 1:use the same file path under the system path

#transaction
ISOLATION_LEVEL = 1 #Default Transaction Isolation Level, 1: Read Commited; 3: Serializable
DDL_WAIT_TIME = 10 #Maximum waiting time in seconds for DDLs
BLDR_WAIT_TIME = 10 #Maximum waiting time in seconds for BLDR
MPP_WAIT_TIME = 10 #Maximum waiting time in seconds for locks on MPP
FAST_RELEASE_SLOCK = 1 #Whether to release share lock as soon as possible
SESS_CHECK_INTERVAL = 3 #Interval time in seconds for checking status of sessions
LOCK_TID_MODE = 1 #Lock mode for select-for-update operation
LOCK_TID_UPGRADE = 0 #Upgrade tid lock to X mode, 0:no, 1:yes
NOWAIT_WHEN_UNIQUE_CONFLICT = 0 #Whether to return immediately when unique constraint violation conflict happens
UNDO_EXTENT_NUM = 4 #Number of initial undo extents for each worker thread
MAX_DE_TIMEOUT = 10 #Maximum external function wait time in Seconds
TRX_RLOG_WAIT_MODE = 0 #Trx rlog wait mode
TRANSACTIONS = 75 #Maximum number of concurrent transactions
MVCC_RETRY_TIMES = 5 #Maximum retry times while MVCC conflicts happen
MVCC_PAGE_OPT = 1 #Page visible optimize for MVCC
ENABLE_FLASHBACK = 0 #Whether to enable flashback function
UNDO_RETENTION = 90.000 #Maximum retention time in seconds for undo pages since relative transaction is committed
PARALLEL_PURGE_FLAG = 0 #flag for parallel purge of undo logs
PURGE_WAIT_TIME = 500 #Maximum wait time in microseconds for purging undo pages
PSEG_RECV = 3 #Whether to rollback active transactions and purge committed transactions when system restarts after failure
ENABLE_IGNORE_PURGE_REC = 0 #Whether to ignore purged records when returning -7120
ENABLE_TMP_TAB_ROLLBACK = 1 #enable temp table rollback
ROLL_ON_ERR = 0 #Rollback mode on Error, 0: rollback current statement 1: rollback whole transaction
XA_TRX_IDLE_TIME = 60 #Xa transaction idle time
XA_TRX_LIMIT = 1024 #Maximum number of Xa transaction
LOB_MVCC = 1 #Whether LOB access in MVCC mode
LOCK_DICT_OPT = 2 #lock dict optimize
TRX_DICT_LOCK_NUM = 64 #Maximum ignorable dict lock number
DEADLOCK_CHECK_INTERVAL = 1000 #Time interval of deadlock check
COMMIT_WRITE = IMMEDIATE,WAIT #wait means normal commit; nowait may speed up commit, but may not assure ACID,immediate,batch just support syntax parsing
DPC_2PC = 1 #enable two-phase commit for dpc, 0: disable, 1: enable
SWITCH_CONN = 0 #switch connect flag
UNDO_BATCH_FAST = 0 #Whether to undo batch insert fast
FLDR_LOCK_RETRY_TIMES = 0 #Maximum retry times while MVCC conflicts happen
DPC_TRX_TIMEOUT = 10 #Maximum trx waiting time in seconds for DPC
SESSION_READONLY = 0 #session readonly parameter value
ENABLE_ENCRYPT = 0 #Encrypt Mode For Communication, 0: Without Encryption; 1: SSL Encryption; 2: SSL Authentication
CLIENT_UKEY = 0 #Client ukey, 0: all, active by Client; 1: Force client ukey Authentication
MIN_SSL_VERSION = 771 #SSL minimum version For Communication, For example, 0: all, 0x0301: TLSv1, 0x0302: TLSv1.1, 0x0303: TLSv1.2, 0x0304: TLSv1.3
ENABLE_UDP = 0 #Enable udp For Communication, 0: disable; 1: single; 2: multi
UDP_MAX_IDLE = 15 #Udp max waiting time in second
UDP_BTU_COUNT = 8 #Count of udp batch transfer units
ENABLE_IPC = 0 #Enable ipc for communication, 0: disable; 1: enable
AUDIT_FILE_FULL_MODE = 1 #operation mode when audit file is full,1: delete old file; 2: no longer to write audit records
AUDIT_SPACE_LIMIT = 8192 #audit space limit in Megabytes
AUDIT_MAX_FILE_SIZE = 100 #maximum audit file size in Megabytes
AUDIT_IP_STYLE = 0 #IP style in audit record, 0: IP, 1: IP(hostname), default 0
MSG_COMPRESS_TYPE = 2 #Flag of message compression mode
LDAP_HOST = #LDAP Server ip
COMM_ENCRYPT_NAME = #Communication encrypt name, if it is null then the communication is not encrypted
COMM_VALIDATE = 1 #Whether to validate message
MESSAGE_CHECK = 0 #Whether to check message body
EXTERNAL_JFUN_PORT = 6363 #DmAgent port for external java fun.
EXTERNAL_AP_PORT = 4236 #DmAp port for external fun.
ENABLE_PL_SYNONYM = 0 #Whether try to resolve PL object name by synonym.
FORCE_CERTIFICATE_ENCRYPTION = 0 #Whether to encrypt login user name and password use certificate
REGEXP_MATCH_PATTERN = 0 #Regular expression match pattern, 0: support non-greedy match; 1: only support greedy match
UNIX_SOCKET_PATHNAME = #Unix socket pathname.
TCP_ACK_TIMEOUT = 0 #TCP ack timeout value
RESOURCE_FLAG = 0 #Flag of user's resources, 1: reset session connecting time with second
AUTH_ENCRYPT_NAME = #User password encrypt name

#compatibility
BACKSLASH_ESCAPE = 0 #Escape Mode For Backslash, 0: Not Escape; 1: Escape
STR_LIKE_IGNORE_MATCH_END_SPACE = 1 #Whether to ignore end space of strings in like clause
CLOB_LIKE_MAX_LEN = 10240 #Maximum length in kilobytes of CLOB data that can be filtered by like clause
EXCLUDE_DB_NAME = #THE db names which DM7 server can exclude
MS_PARSE_PERMIT = 0 #Whether to support SQLSERVER's parse style
COMPATIBLE_MODE = 0 #Server compatible mode, 0:none, 1:SQL92, 2:Oracle, 3:MS SQL Server, 4:MySQL, 5:DM6, 6:Teradata
ORA_DATE_FMT = 0 #Whether support oracle date fmt: 0:No, 1:Yes
JSON_MODE = 0 #Json compatible mode, 0:Oracle, 1:PG
DATETIME_FMT_MODE = 0 #Datetime fmt compatible mode, 0:none, 1:Oracle
DOUBLE_MODE = 0 #Calc double fold mode, 0:8bytes, 1:6bytes
CASE_COMPATIBLE_MODE = 1 #Case compatible mode, 0:none, 1:Oracle, 2:Oracle(new rule)
XA_COMPATIBLE_MODE = 0 #XA compatible mode, 0:none, 1:Oracle
EXCLUDE_RESERVED_WORDS = #Reserved words to be exclude
COUNT_64BIT = 1 #Whether to set data type for COUNT as BIGINT
CALC_AS_DECIMAL = 0 #Whether integer CALC as decimal, 0: no, 1:only DIV, 2:all only has charactor, 3:all for digit
CMP_AS_DECIMAL = 0 #Whether integer compare as decimal, 0: no, 1:part, 2:all
CAST_VARCHAR_MODE = 1 #Whether to convert illegal string to special pseudo value when converting string to integer
PL_SQLCODE_COMPATIBLE = 0 #Whether to set SQLCODE in PL/SQL compatible with ORACLE as far as possible
LEGACY_SEQUENCE = 0 #Whether sequence in legacy mode, 0: no, 1:yes
DM6_TODATE_FMT = 0 #To Date' HH fmt hour system, 0: 12(defalut) , 1: 24(DM6)
MILLISECOND_FMT = 1 #Whether To show TO_CHAR' millisecond, 0: no, 1:yes
NLS_DATE_FORMAT = #Date format string
NLS_TIME_FORMAT = #Time format string
NLS_TIMESTAMP_FORMAT = #Timestamp format string
NLS_TIME_TZ_FORMAT = #Time_tz format string
NLS_TIMESTAMP_TZ_FORMAT = #Timestamp_tz format string
PK_MAP_TO_DIS = 0 #Whether map pk cols into distributed cols automatically
PROXY_PROTOCOL_MODE = 0 #PROXY PROTOCOL mode, 0: close; 1: open
SPACE_COMPARE_MODE = 0 #Whether to compare suffix space of strings, 0: default, 1: yes
DATETIME_FAST_RESTRICT = 1 #Wherther To DATE's datetime with time.default:1. 1: No. 0: Yes.
BASE64_LINE_FLAG = 1 #Whether base64 encode persection with line flag: CR and LF. default: 1. 1:YES. 2:NO.
MY_STRICT_TABLES = 0 #Whether to set STRICT_XXX_TABLES in MYSQL compatible mode. default: 0. 1:YES. 0:NO.
IN_CONTAINER = 0 #judge if dm is run in container.default:0.
ENABLE_RLS = 0 #Whether enable rls
NVARCHAR_LENGTH_IN_CHAR = 1 #Whether nvarchar convert to varchar(n char) 1:yes,0:no
PARAM_DOUBLE_TO_DEC = 0 #Whether to convert double parameter to decimal, 0: disable, 1: enable with check, 2, enable without check
INSERT_COLUMN_MATCH = 0 #Insert column match, 0: DM, 1: Aster, 2: PG
IFUN_DATETIME_MODE = 1 #The definition of DATETIME in sys function, 0:DATATIME(6), 1:DATATIME(9)
DROP_CASCADE_VIEW = 0 #Whether to drop cascade view while dropping table or view
NUMBER_MODE = 0 #NUMBER mode, 0:DM; 1:ORACLE
NLS_SORT_TYPE = 0 #Chinese sort type, 0:default 1:pinyin 2:stroke 3:radical

#request trace
SVR_LOG_NAME = SLOG_ALL #Using which sql log sys in sqllog.ini
SVR_LOG = 0 #Whether the Sql Log sys Is open or close. 1:open, 0:close, 2:use switch and detail mode. 3:use not switch and simple mode.

#system trace
GDB_THREAD_INFO = 0 #Generate gdb thread info while dm_sys_halt. 1: yes; 0: no
TRACE_PATH = #System trace path name
SVR_OUTPUT = 0 #Whether print at background
SVR_ELOG_FREQ = 0 #How to swith svr elog file. 0: by month. 1: by day, 2: by hour, 3, by limit
ENABLE_OSLOG = 0 #Whether to enable os log
LOG_IN_SHOW_DEBUG = 2147483647 #Whether record log info
TRC_LOG = 0 #Whether the trace Log sys Is open or close. 0:close, other: switch_mod*4 + asyn_flag*2 + 1(switch_mode: 0:no_switch;1:by num;2:by size;3:by interval).
ELOG_SWITCH_LIMIT = 0 #The upper limit to switch elog
ELOG_ERR_ARR = #dmerrs need to generate elog
```

#ecsENABLE_ECS = 0 #Whether to enable elastic calc systemECS_HB_INTERVAL = 60 #HB interval for elastic calc systemEP_GROUP_NAME = DMGROUP #Server group name as workerAP_GROUP_NAME = DMGROUP #Server group name as cooperative workerDCRS_IP = LOCALHOST #IP on which the dcrs server will listenDCRS_PORT_NUM = 6236 #Port number on which the database dcrs will listenAP_IP = LOCALHOST #The ap server IP for dcrsAP_PORT_NUM = 0 #Port number on which the database ap will listenAP_PARALLEL_DEGREE = 10 #Maximum degree of parallel for apENABLE_ECS_MSG_CHECK = 0 #Whether to enable elastic check msg accumulate#monitorENABLE_MONITOR = 1 #Whether to enable monitorMONITOR_TIME = 1 #Whether to enable monitor timingMONITOR_SYNC_EVENT = 0 #Whether to enable monitor sync eventMONITOR_SQL_EXEC = 0 #Whether to enable monitor sql executeENABLE_FREQROOTS = 0 #Whether to collect pages that used frequentlyMAX_FREQ_ROOTS = 200000 #Maximum number of frequently used pages that will be collectedMIN_FREQ_CNT = 100000 #Minimum access counts of page that will be collected as frequently used pagesLARGE_MEM_THRESHOLD = 10000 #Large mem used threshold by kENABLE_MONITOR_DMSQL = 1 #Flag of performance monitoring:sql or method exec time.0: NO. 1: YES.ENABLE_TIMER_TRIG_LOG = 0 #Whether to enable timer trigger logIO_TIMEOUT = 300 #Maximum time in seconds that read from disk or write to diskENABLE_MONITOR_BP = 1 #Whether to enable monitor bind param. 1: TRUE. 0:FALSE. 
default:1LONG_EXEC_SQLS_CNT = 1000 #Max row count of V$LONG_EXEC_SQLSSYSTEM_LONG_EXEC_SQLS_CNT = 20 #Max row count of V$SYSTEM_LONG_EXEC_SQLSSQL_HISTORY_CNT = 10000 #Max row count of V$SQL_HISTORY#data watchDW_MAX_SVR_WAIT_TIME = 0 #Maximum time in seconds that server will wait for DMWATCHER to startupDW_INACTIVE_INTERVAL = 60 #Time in seconds that used to determine whether DMWATCHER existDW_PORT = 0 #Instance tcp port for watch2ALTER_MODE_STATUS = 1 #Whether to permit database user to alter database mode and status by SQLs, 1: yes, 0: noENABLE_OFFLINE_TS = 1 #Whether tablespace can be offlineSESS_FREE_IN_SUSPEND = 60 #Time in seconds for releasing all sessions in suspend mode after archive failedSUSPEND_WORKER_TIMEOUT = 600 #Suspend worker thread timeout in secondsSUSPENDING_FORBIDDEN = 0 #Suspending thread is forbiddenDW_CONSISTENCY_CHECK = 0 #Whether to check consistency for standby database, 1: yes, 0: noDW_ARCH_SPACE_CHECK = 0 #Whether to check archive space for standby database, 1: yes, 0: no#for context indexCTI_HASH_SIZE = 100000 #the hash table size for context index queryCTI_HASH_BUF_SIZE = 50 #the hash table cache size in Megabytes for context index queryUSE_FORALL_ATTR = 0 #Whether to use cursor attributes of FORALL statementsALTER_TABLE_OPT = 0 #Whether to optimize ALTER TABLE operation(add, modify or drop column)USE_RDMA = 0 #Whether to use rdma#configuration fileMAL_INI = 0 #dmmal.iniARCH_INI = 0 #dmarch.iniREP_INI = 0 #dmrep.iniLLOG_INI = 0 #dmllog.iniTIMER_INI = 0 #dmtimer.iniMPP_INI = 0 #dmmpp.iniDFS_INI = 0 #dmdfs.iniDSC_N_CTLS = 10000 #Number Of LBS/GBS ctlsDSC_N_POOLS = 2 #Number Of LBS/GBS poolsDSC_USE_SBT = 1 #Use size balanced treeDSC_TRX_CMT_LSN_SYNC = 3 #Whether to adjust lsn when trx commitDSC_ENABLE_MONITOR = 1 #Whether to monitor request timeDSC_GBS_REVOKE_OPT = 1 #Whether to optimize GBS revokeDSC_TRX_VIEW_SYNC = 0 #Whether to wait response after broadcast trx view to other epDSC_TRX_VIEW_BRO_INTERVAL = 1000 #Time interval of trx view 
broadcastDSC_REMOTE_READ_MODE = 1 #PAGE remote read optimize modeDSC_FILE_INIT_ASYNC = 1 #DSC file init async flagDSC_TRX_CMT_OPT = 0 #Whether to wait rlog file flush when trx commit in DSCDSC_RESERVE_PERCENT = 0.080 #Start ctl reserve percentDSC_TABLESPACE_BALANCE = 0 #Enable DSC load balance by tablespaceDSC_FREQ_INTERVAL = 30 #High frequency conflict intervalsDSC_FREQ_CONFLICT = 8 #High frequency conflict countsDSC_FREQ_DELAY = 0 #High frequency conflict delayDSC_INSERT_LOCK_ROWS = 0 #Insert extra lock rows for DSCDSC_CRASH_RECV_POLICY = 0 #Policy of handling node crashDSC_LBS_REVOKE_DELAY = 0 #LBS revoke delay#otherIDLE_MEM_THRESHOLD = 50 #minimum free memory warning size in MegabytesIDLE_DISK_THRESHOLD = 1000 #minimum free disk space warning size in MegabytesIDLE_SESS_THRESHOLD = 5 #minimum available session threshold valueENABLE_PRISVC = 0 #Whether to enable priority serviceHA_INST_CHECK_IP = #HA instance check IPHA_INST_CHECK_PORT = 65534 #HA instance check portPWR_FLUSH_PAGES = 1000 #Make special PWR rrec when n pages flushedREDO_UNTIL_LSN = #redo until lsnIGNORE_FILE_SYS_CHECK = 1 #ignore file sys check while starupFILE_SCAN_PERCENT = 100.00 #percent of data scanned when calculating file used spaceSTARTUP_CHECKPOINT = 0 #checkpoint immediately when startup after redoCHECK_SVR_VERSION = 1 #Whether to check server versionID_RECYCLE_FLAG = 0 #Enable ID recycleBAK_USE_AP = 1 #backup use assistant plus-in, 1:use AP; 2:not use AP. 
default 1.ENABLE_DBLINK_TO_INV = 0 #Whether to convert correlated subquery which has dblink to temporary functionENABLE_BLOB_CMP_FLAG = 0 #Whether BLOB/TEXT types are allowed to be comparedENABLE_CREATE_BM_INDEX_FLAG = 1 #Allow bitmap index to be createdCVIEW_STAR_WITH_PREFIX = 1 #Whether append prefix for star item when create viewENABLE_ADJUST_NLI_COST = 1 #Whether adjust cost of nest loop inner joinENABLE_SEQ_REUSE = 0 #Whether allow reuse sequence expressionsRLS_CACHE_SIZE = 100000 #Max number of objects for RLS cache.ENABLE_ADJUST_DIST_COST = 0 #Whether adjust cost of distinct
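The paths in dm.ini are container paths. Keeping them under /opt/dmdbms/data means the files end up in the ./dm8 volume on the host. Before you mount the file, it can help to read individual values back out of it. The shell function below is a minimal sketch for that. `ini_get` is a hypothetical helper, not a DM tool, and it assumes the simple `KEY = value #comment` layout used in the file above, with one setting per line.

```shell
#!/bin/sh
# Hypothetical helper (not part of DM): print the value of KEY from a
# DM-style ini file, dropping any trailing "#comment" and surrounding blanks.
# Usage: ini_get KEY FILE
ini_get() {
  key="$1"
  file="$2"
  awk -v k="$key" '
    {
      line = $0
      sub(/#.*/, "", line)          # drop the inline comment (and full comment lines)
      n = index(line, "=")
      if (n == 0) next              # skip lines without "KEY = value"
      name = substr(line, 1, n - 1)
      val = substr(line, n + 1)
      sub(/^[ \t]+/, "", name); sub(/[ \t]+$/, "", name)   # trim the key
      sub(/^[ \t]+/, "", val);  sub(/[ \t]+$/, "", val)    # trim the value
      if (name == k) { print val; exit }
    }
  ' "$file"
}
```

For the file above, `ini_get CTL_PATH ./dm.ini` should print `/opt/dmdbms/data/DAMENG/dm.ctl`, which lies inside the mounted data directory. `ini_get INSTANCE_NAME ./dm.ini` prints `DMSERVER`, which you may want to compare with `INSTANCE_NAME: dm8` in the compose file.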

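To specify the location of the initialization file in docker-compose, mount it as a volume. The only difference from the configuration above is the new path on the last line: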

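```yaml
services:
  dm8:
    image: dm8_single:v8.1.2.128_ent_x86_64_ctm_pack4
    container_name: dm8
    restart: always
    privileged: true
    environment:
      LD_LIBRARY_PATH: /opt/dmdbms/bin
      PAGE_SIZE: 16 # page size
      EXTENT_SIZE: 32 # extent size
      LOG_SIZE: 1024
      INSTANCE_NAME: dm8
      CASE_SENSITIVE: 1 # case sensitivity: 0 = case-insensitive, 1 = case-sensitive
      UNICODE_FLAG: 0 # character set: 0 = GB18030, 1 = UTF-8
      SYSDBA_PWD: SYSDBA001 # password length: 9 to 48 characters
    ports:
      - "5236:5236"
    volumes:
      - ./dm8:/opt/dmdbms/data
      - ./dm.ini:/opt/dmdbms/conf/dm.ini # mount the initialization file here
```

Then recreate the container with `docker-compose up -d` so that the new mount takes effect. A plain `docker-compose restart` does not apply changes made to the compose file.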
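Before you recreate the container, check that ./dm.ini really exists as a file. With the short `host:container` volume syntax used here, Docker creates a missing host path as an empty directory. The mount then fails, or it places a directory where the server expects dm.ini. Below is a minimal pre-flight sketch. `preflight` is a hypothetical helper name, and it checks only the host side, not whether DM accepts the file's contents.

```shell
#!/bin/sh
# Hypothetical pre-flight check for the ./dm.ini bind mount (host side only).
# Returns 0 and prints "ok: PATH" if PATH is a non-empty regular file.
preflight() {
  ini="${1:-./dm.ini}"
  # A directory here usually means an earlier mount ran while the file was missing.
  if [ -d "$ini" ]; then
    echo "error: $ini is a directory; remove it and create the file" >&2
    return 1
  fi
  if [ ! -f "$ini" ]; then
    echo "error: $ini not found" >&2
    return 1
  fi
  # An empty file cannot serve as the server configuration.
  if [ ! -s "$ini" ]; then
    echo "error: $ini is empty" >&2
    return 1
  fi
  echo "ok: $ini"
}
```

Run it from the directory that holds docker-compose.yml, for example `preflight ./dm.ini && docker-compose up -d`, so the container is only recreated once the file is in place.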
