PyTorch RNN 名字分類器詳解

使用PyTorch實現的字符級RNN(循環神經網絡)項目,用于根據人名預測其所屬的語言/國家。該模型通過學習不同語言名字的字符模式,夠識別名字的語言起源。

環境設置

import torch

import string

import unicodedata

import glob

import os

import time

from torch.utils.data import Dataset, DataLoader

import torch.nn as nn

import torch.nn.functional as F

import matplotlib.pyplot as plt

import numpy as np

1. 數據預處理

1.1 字符編碼處理

# 定義允許的字符集(ASCII字母 + 標點符號 + 占位符)

allowed_characters = string.ascii_letters + " .,;'" + "_"

n_letters = len(allowed_characters) # 58個字符def unicodeToAscii(s):"""將Unicode字符串轉換為ASCII"""return ''.join(c for c in unicodedata.normalize('NFD', s)if unicodedata.category(c) != 'Mn' and c in allowed_characters)

關鍵點:

- 使用One-hot編碼表示每個字符

- 將非ASCII字符規范化(如 ‘?lusàrski’ → ‘Slusarski’)

- 未知字符用 “_” 表示

1.2 張量轉換

def letterToIndex(letter):"""將字母轉換為索引"""if letter not in allowed_characters:return allowed_characters.find("_")return allowed_characters.find(letter)def lineToTensor(line):"""將名字轉換為張量 <line_length x 1 x n_letters>"""tensor = torch.zeros(len(line), 1, n_letters)for li, letter in enumerate(line):tensor[li][0][letterToIndex(letter)] = 1return tensor

張量維度說明:

- 每個名字表示為3D張量:

[序列長度, 批次大小=1, 字符數=58] - 使用One-hot編碼:每個字符位置只有一個1,其余為0

2. 數據集構建

2.1 自定義Dataset類

class NamesDataset(Dataset):def __init__(self, data_dir):self.data = [] # 原始名字self.data_tensors = [] # 名字的張量表示self.labels = [] # 語言標簽self.labels_tensors = [] # 標簽的張量表示# 讀取所有.txt文件(每個文件代表一種語言)text_files = glob.glob(os.path.join(data_dir, '*.txt'))for filename in text_files:label = os.path.splitext(os.path.basename(filename))[0]lines = open(filename, encoding='utf-8').read().strip().split('\n')for name in lines:self.data.append(name)self.data_tensors.append(lineToTensor(name))self.labels.append(label)

2.2 數據集劃分

# 85/15 訓練/測試集劃分

train_set, test_set = torch.utils.data.random_split(alldata, [.85, .15], generator=torch.Generator(device=device).manual_seed(2024)

)

3. RNN模型架構

3.1 模型定義

class CharRNN(nn.Module):def __init__(self, input_size, hidden_size, output_size):super(CharRNN, self).__init__()# RNN層:輸入大小 → 隱藏層大小self.rnn = nn.RNN(input_size, hidden_size)# 輸出層:隱藏層 → 輸出類別self.h2o = nn.Linear(hidden_size, output_size)# LogSoftmax用于分類self.softmax = nn.LogSoftmax(dim=1)def forward(self, line_tensor):rnn_out, hidden = self.rnn(line_tensor)output = self.h2o(hidden[0])output = self.softmax(output)return output

模型參數:

- 輸入大小:58(字符數)

- 隱藏層大小:128

- 輸出大小:18(語言類別數)

4. 訓練過程

4.1 訓練函數

def train(rnn, training_data, n_epoch=10, n_batch_size=64, learning_rate=0.2, criterion=nn.NLLLoss()):rnn.train()optimizer = torch.optim.SGD(rnn.parameters(), lr=learning_rate)for iter in range(1, n_epoch + 1):# 創建小批量batches = list(range(len(training_data)))random.shuffle(batches)batches = np.array_split(batches, len(batches)//n_batch_size)for batch in batches:batch_loss = 0for i in batch:label_tensor, text_tensor, label, text = training_data[i]output = rnn.forward(text_tensor)loss = criterion(output, label_tensor)batch_loss += loss# 反向傳播和優化batch_loss.backward()nn.utils.clip_grad_norm_(rnn.parameters(), 3) # 梯度裁剪optimizer.step()optimizer.zero_grad()

訓練技巧:

- 使用SGD優化器,學習率0.15

- 梯度裁剪防止梯度爆炸

- 批量大小:64

5. 模型評估

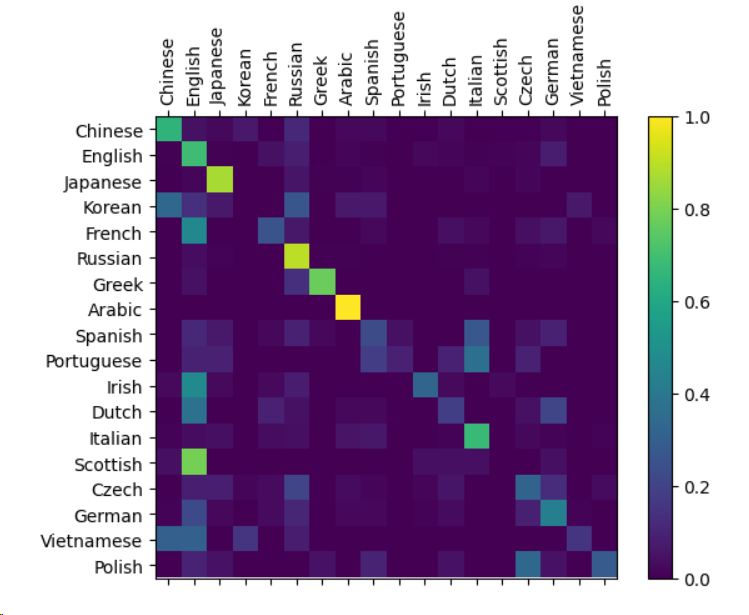

5.1 混淆矩陣可視化

def evaluate(rnn, testing_data, classes):confusion = torch.zeros(len(classes), len(classes))rnn.eval()with torch.no_grad():for i in range(len(testing_data)):label_tensor, text_tensor, label, text = testing_data[i]output = rnn(text_tensor)guess, guess_i = label_from_output(output, classes)label_i = classes.index(label)confusion[label_i][guess_i] += 1# 歸一化并可視化# ...

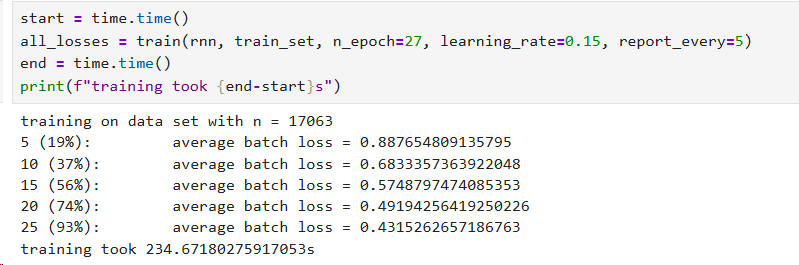

6. 訓練結果

- 訓練樣本數:17,063

- 測試樣本數:3,011

- 訓練輪數:27

- 最終損失:約0.43

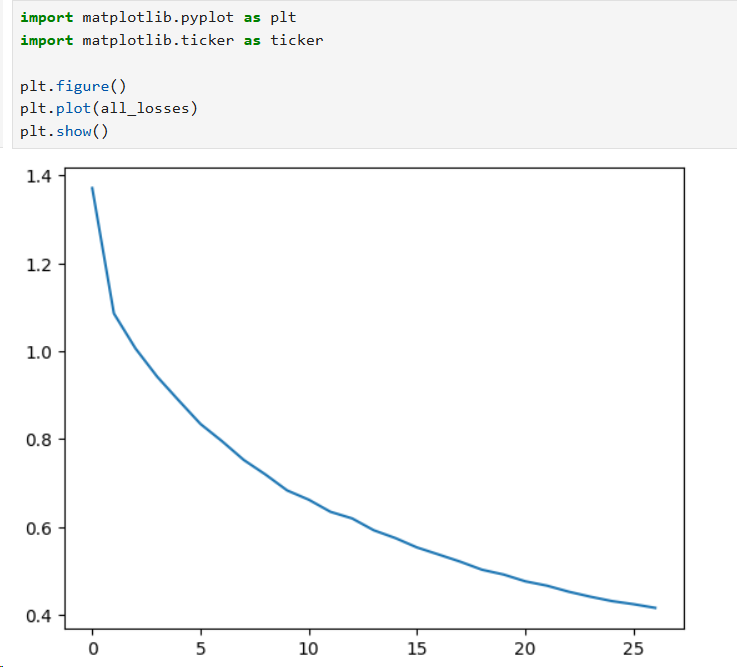

損失曲線顯示模型收斂良好,從初始的0.88降至0.43。

![[激光原理與應用-168]:光源 - 常見光源的分類、特性及應用場景的詳細解析,涵蓋技術原理、優缺點及典型應用領域](http://pic.xiahunao.cn/[激光原理與應用-168]:光源 - 常見光源的分類、特性及應用場景的詳細解析,涵蓋技術原理、優缺點及典型應用領域)

)

)