1. Create a basic neural network model with PyTorch

Dataset: Iris (UCI Machine Learning Repository)

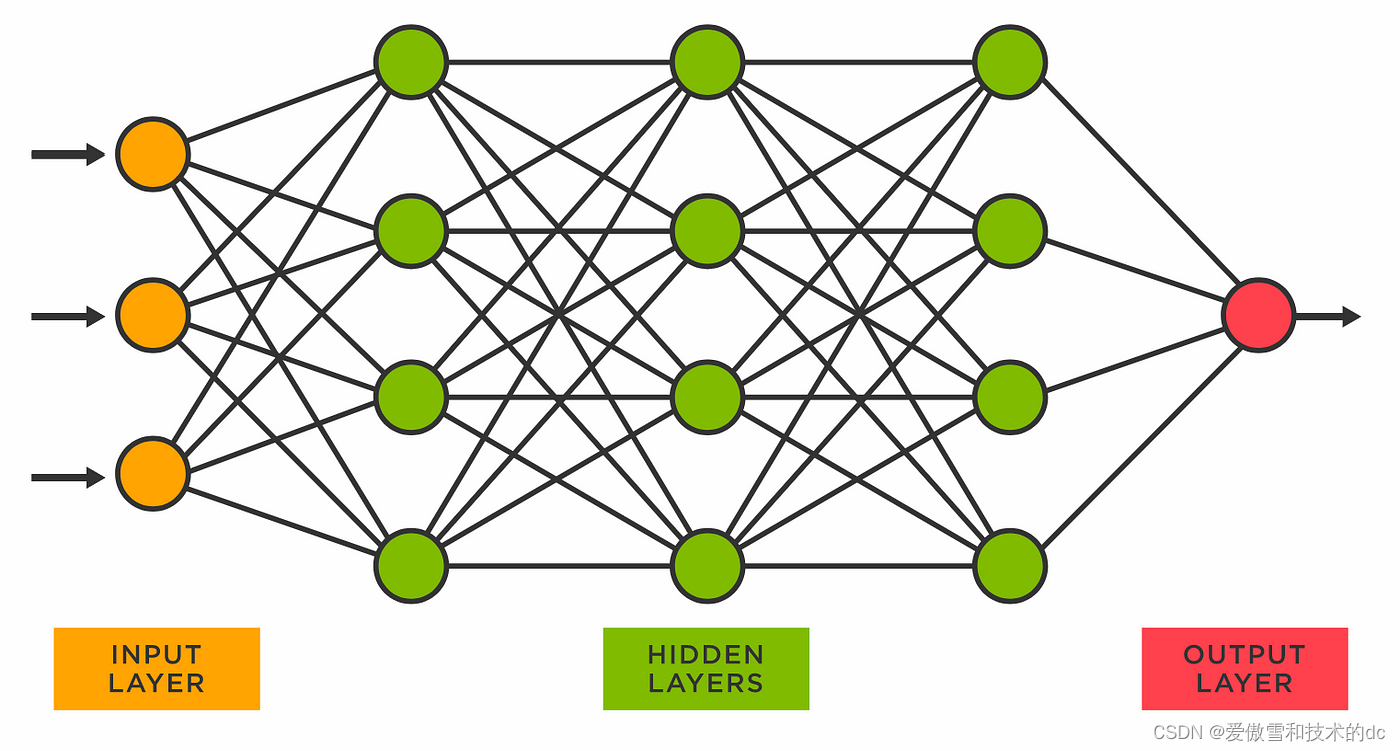

Fully connected network

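Goal: write the code starting from the input layer, moving forward through the hidden layers, and finally reaching the output layer.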
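Written out layer by layer, the forward pass looks like the sketch below. It assumes the default sizes used by this notebook's `Model` class (4 input features, hidden layers of 8 and 9 neurons, 3 outputs); the names $W_i$ and $b_i$ for each layer's weight matrix and bias are notation introduced here, not taken from the original. $\mathrm{ReLU}(t) = \max(0, t)$ is applied elementwise, $x \in \mathbb{R}^{4}$ is one flower's features, and $z$ holds the 3 raw class scores (logits).

$$
\begin{aligned}
h_1 &= \mathrm{ReLU}(W_1 x + b_1), & W_1 &\in \mathbb{R}^{8 \times 4},\; b_1 \in \mathbb{R}^{8} \\
h_2 &= \mathrm{ReLU}(W_2 h_1 + b_2), & W_2 &\in \mathbb{R}^{9 \times 8},\; b_2 \in \mathbb{R}^{9} \\
z &= W_3 h_2 + b_3, & W_3 &\in \mathbb{R}^{3 \times 9},\; b_3 \in \mathbb{R}^{3}
\end{aligned}
$$

"Fully connected" means every entry of each weight matrix is used: every output neuron of a layer depends on every input to that layer.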

```python
# %%
import torch
import torch.nn as nn
import torch.nn.functional as F
```

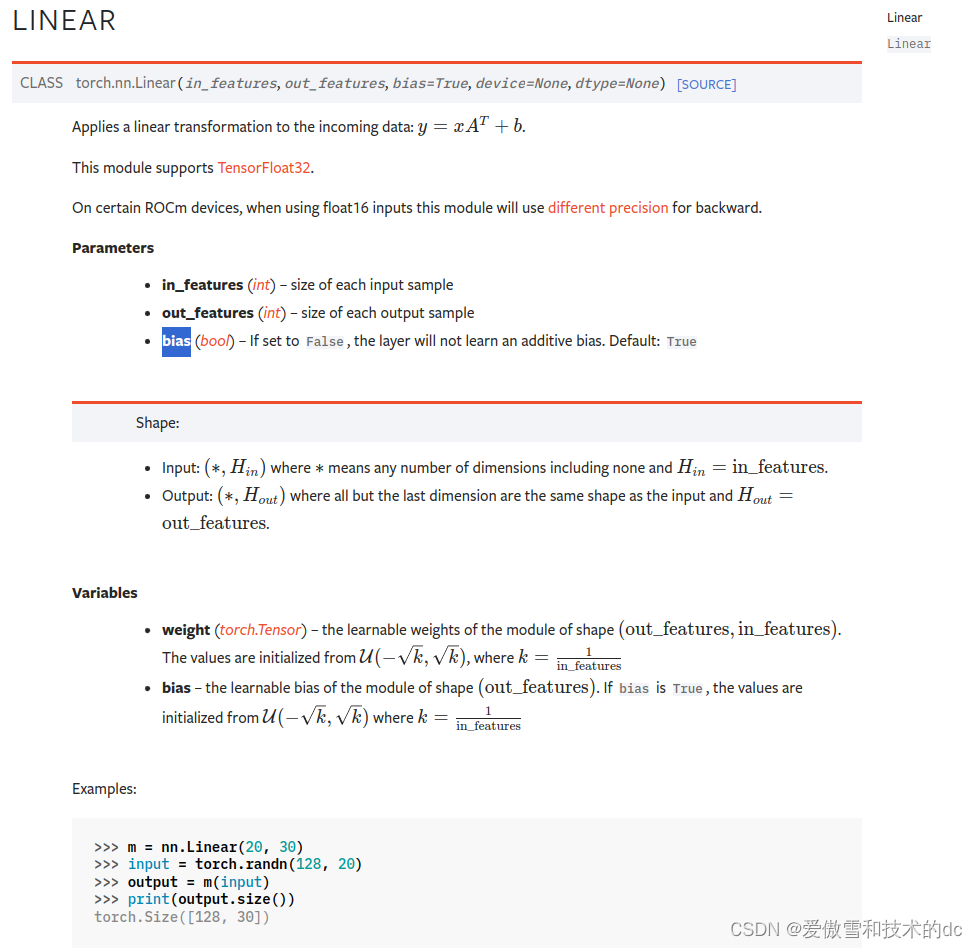

```python
# %%
# Create a model class that inherits from nn.Module (note: it's Module, not model)
class Model(nn.Module):
    # input layer (4 features of the flower) -->
    # hidden layer 1 (h1 neurons) --> hidden layer 2 (h2 neurons) -->
    # output layer (3 classes of iris flowers)
    def __init__(self, in_features=4, h1=8, h2=9, out_features=3):
        super().__init__()  # initialize the parent nn.Module
        self.fc1 = nn.Linear(in_features=in_features, out_features=h1)
        self.fc2 = nn.Linear(in_features=h1, out_features=h2)
        self.out = nn.Linear(in_features=h2, out_features=out_features)

    # move the data forward through the network
    def forward(self, x):
        # ReLU (rectified linear unit): keeps values greater than 0, sets negative values to 0
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.out(x)
        return x
```
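To check the layer sizes and the ReLU behaviour described in the comments above, here is a small sketch. It uses an `nn.Sequential` stand-in with the same 4 → 8 → 9 → 3 sizes (an assumption made so the snippet runs on its own; it is not the notebook's `Model` class), feeds it a random batch, and inspects the shapes.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Stand-in network with the same layer sizes as the notebook's Model (4 -> 8 -> 9 -> 3).
net = nn.Sequential(
    nn.Linear(4, 8), nn.ReLU(),
    nn.Linear(8, 9), nn.ReLU(),
    nn.Linear(9, 3),
)

# ReLU keeps positive values and replaces negative values with 0.
t = torch.tensor([-2.0, -0.5, 0.0, 1.5])
relu_out = F.relu(t)
print(relu_out)

# A batch of 5 flowers with 4 features each -> 5 rows of 3 raw class scores (logits).
x = torch.randn(5, 4)
logits = net(x)
print(logits.shape)  # torch.Size([5, 3])

# Each nn.Linear stores its weight as (out_features, in_features).
weight_shapes = [tuple(m.weight.shape) for m in net if isinstance(m, nn.Linear)]
print(weight_shapes)  # [(8, 4), (9, 8), (3, 9)]
```

The batch dimension passes through untouched; only the last dimension changes from 4 features to 3 scores.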

```python
# %%
# Networks start from random initial weights, so pick a manual seed before building
# the model: starting every run from the same seed gives (nearly) the same outputs.
torch.manual_seed(seed=41)
```
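A quick way to see what the seed does: re-seeding right before creating a layer reproduces exactly the same random initial weights, while a different seed gives different ones. This is a minimal sketch; `make_layer` is a hypothetical helper introduced only for the illustration.

```python
import torch
import torch.nn as nn

def make_layer(seed):
    # Hypothetical helper: seed the global RNG, then build a layer,
    # so nn.Linear draws its initial weights from the same random stream.
    torch.manual_seed(seed)
    return nn.Linear(4, 8)

a = make_layer(41)
b = make_layer(41)
c = make_layer(42)

same = torch.equal(a.weight, b.weight)           # identical seeds -> identical weights
different = not torch.equal(a.weight, c.weight)  # different seed -> different weights
print(same, different)  # True True
```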

```python
# create an instance of the model
model = Model()
```

2. Load data and train the neural network model

torch.optim

torch.optim — PyTorch 2.2 documentation

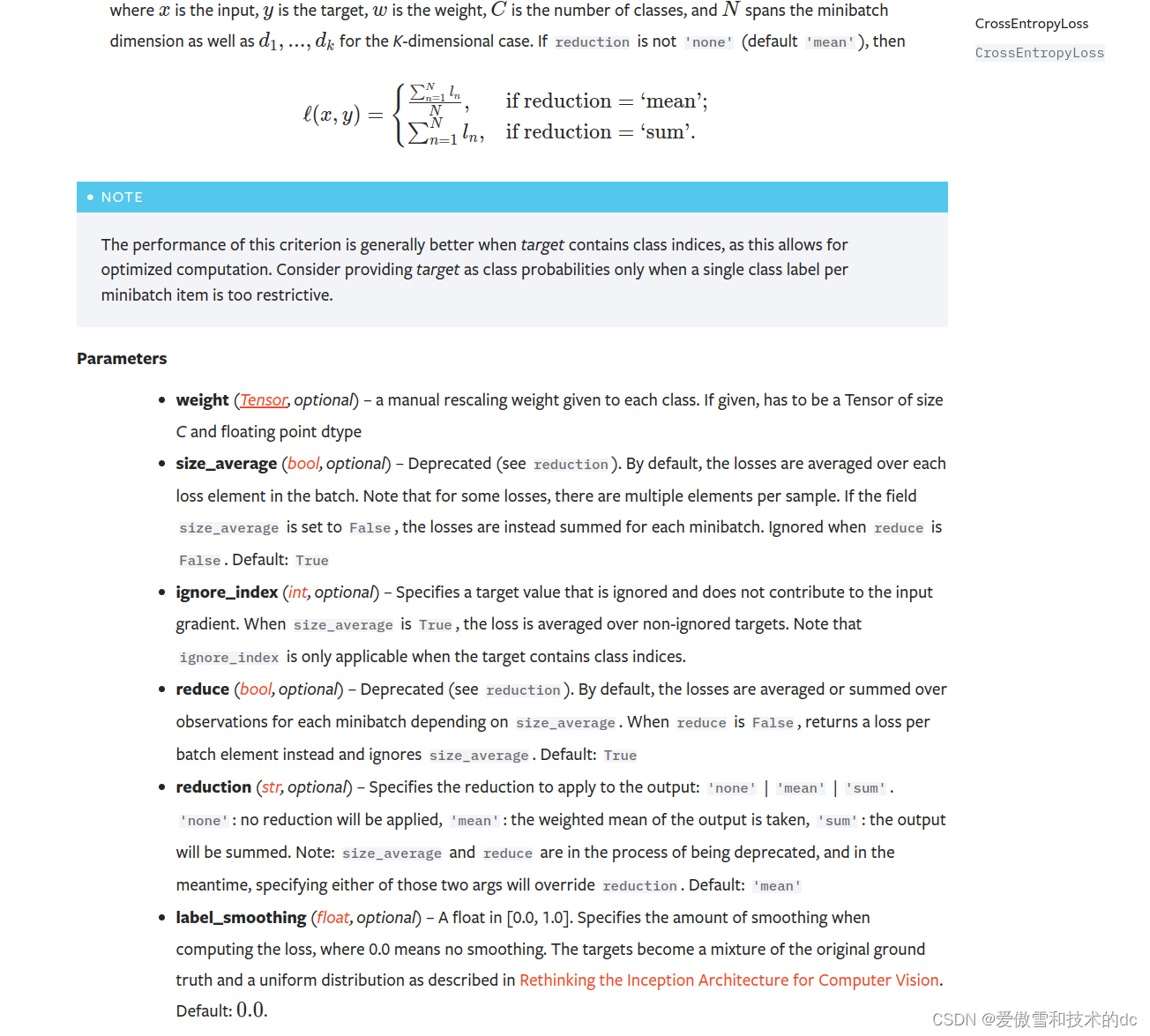

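1. `optimizer.zero_grad()`

   - Purpose: zero the gradients. During training, the gradients must be cleared before each parameter update; otherwise the new gradients are added to the ones already stored. Accumulating by default is a deliberate PyTorch design decision, made to support network structures such as RNNs, which compute gradients several times within one loop.

   - How it works: during backpropagation (`backward`), PyTorch accumulates gradients instead of replacing the current values. If they are not cleared manually, the gradient values keep building up and training goes wrong.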

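2. `loss.backward()`

   - Purpose: compute the gradients. This step computes the gradient of the loss with respect to the model's parameters. In a neural network, the loss function measures the difference between the model's output and the true labels, and backpropagation computes the gradient of the loss with respect to each parameter.

   - How it works: backpropagation is an efficient algorithm for computing gradients. It first computes the gradients at the output layer, then propagates them backward layer by layer toward the input layer. The process relies on the chain rule and is at the core of deep learning training.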

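3. `optimizer.step()`

   - Purpose: update the parameters. Using the computed gradients, this step updates the model's parameters; it is where the optimization algorithm (SGD, Adam, etc.) actually runs, adjusting each parameter according to the gradient direction and the learning rate so that the loss decreases.

   - How it works: the optimizer updates the parameters with gradient descent (or another optimization algorithm). The gradient points in the direction in which the loss increases fastest, so moving the parameters in the opposite direction gradually reduces the prediction error.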
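The three steps above can be checked by hand on a toy problem. This sketch uses one parameter `w` and the made-up loss $L(w) = (3w - 6)^2$, whose gradient is $6(3w - 6)$, and swaps in plain `torch.optim.SGD` instead of Adam so the update is easy to verify with arithmetic. It shows gradients accumulating when `zero_grad()` is skipped, then one normal zero_grad → backward → step cycle.

```python
import torch

# One trainable parameter, starting at w = 1.
w = torch.tensor([1.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.01)

def compute_loss():
    # Toy loss L(w) = (3w - 6)^2, so dL/dw = 6 * (3w - 6) = -18 at w = 1.
    return ((3 * w - 6) ** 2).sum()

# Calling backward() twice WITHOUT zeroing: the gradients add up.
compute_loss().backward()
g1 = w.grad.clone()  # -18
compute_loss().backward()
g2 = w.grad.clone()  # -18 + -18 = -36 (accumulated)

# The usual cycle: clear old gradients, compute new ones, update the parameter.
optimizer.zero_grad()
loss = compute_loss()
loss.backward()       # w.grad is -18 again
optimizer.step()      # plain SGD: w <- w - lr * grad = 1 - 0.01 * (-18) = 1.18

print(g1.item(), g2.item(), w.item())
```

Adam's update rule is more involved (it rescales the gradient using running averages), but the order of the three calls is the same.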

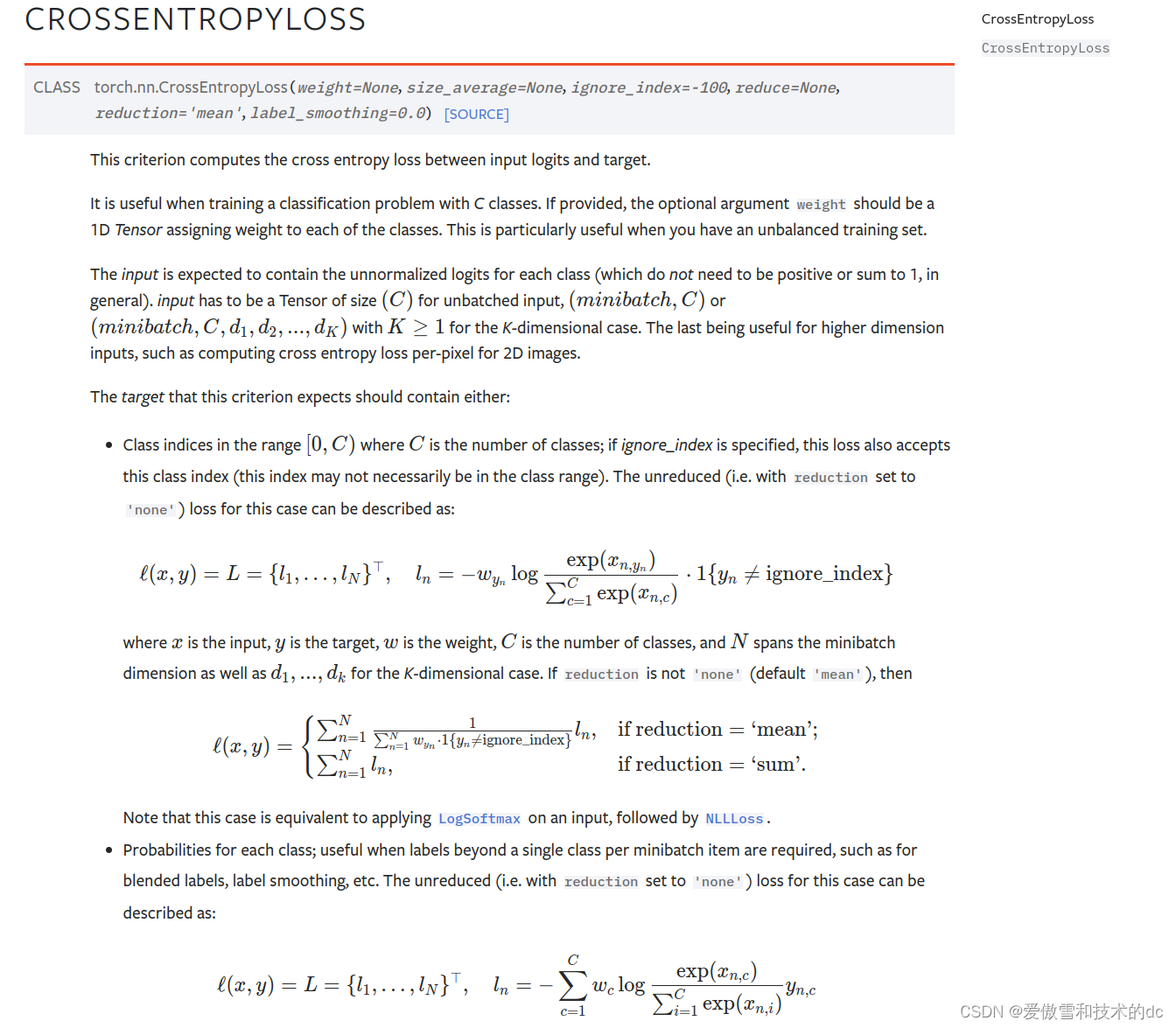

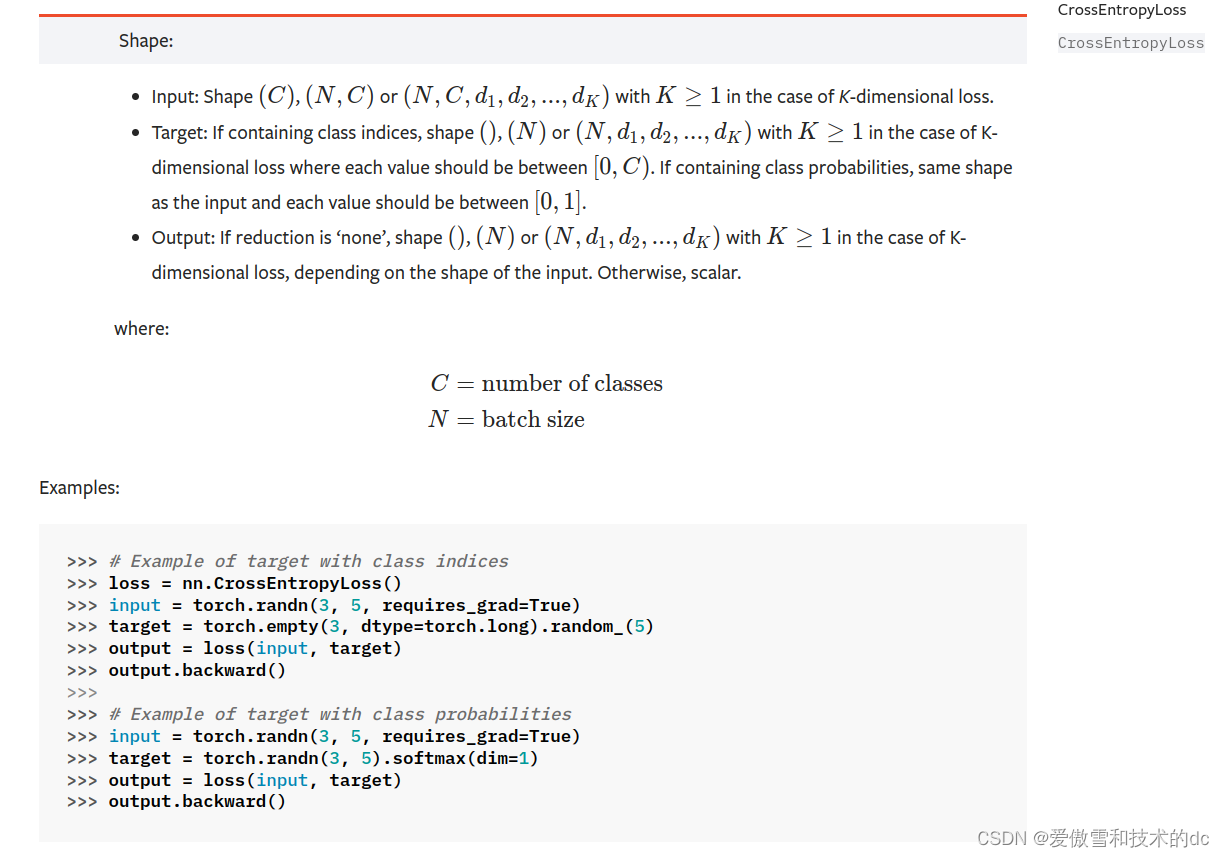

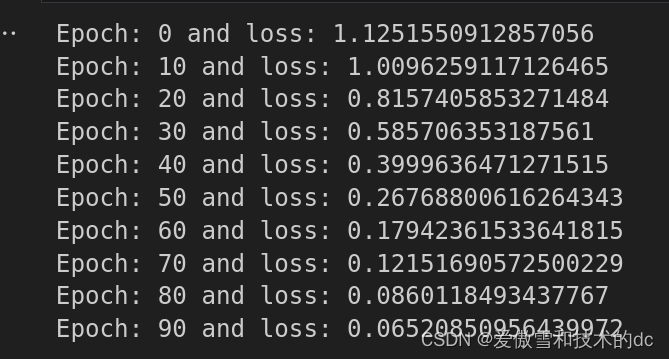

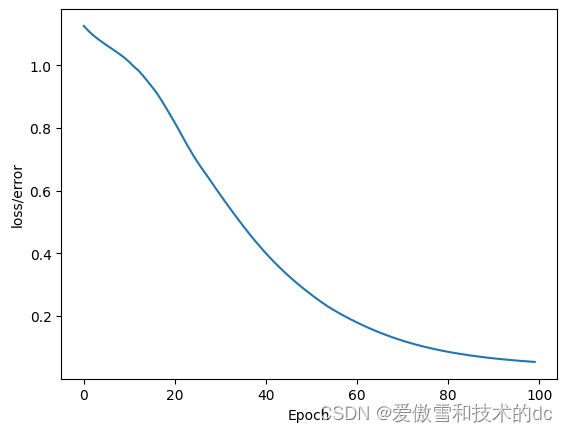

```python
# %%
import pandas as pd
import matplotlib.pyplot as plt
%matplotlib inline

# %%
# url = 'https://gist.githubusercontent.com/curran/a08a1080b88344b0c8a7/raw/0e7a9b0a5d22642a06d3d5b9bcbad9890c8ee534/iris.csv'
my_df = pd.read_csv('dataset/iris.csv')

# %%
# Change the last column from strings to integer class labels.
# map() gives an integer column directly, which is what CrossEntropyLoss expects as targets.
my_df['species'] = my_df['species'].map({'setosa': 0, 'versicolor': 1, 'virginica': 2})
my_df
# my_df.head()
# my_df.tail()

# %%
# Set features X and labels y
X = my_df.drop('species', axis=1)  # drop the specified column
y = my_df['species']

# %%
# Convert these to NumPy arrays
X = X.values
y = y.values

# %%
# Train/test split
from sklearn.model_selection import train_test_split
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=41)

# %%
# Convert X features to float tensors
X_train = torch.FloatTensor(X_train)
X_test = torch.FloatTensor(X_test)
# Convert y labels to long (int64) tensors
y_train = torch.LongTensor(y_train)
y_test = torch.LongTensor(y_test)

# %%
# Set the criterion to measure the error: how far off the predictions are from the data
criterion = nn.CrossEntropyLoss()
# Choose the Adam optimizer; lr = learning rate. If the error does not go down after a
# number of iterations (epochs), lower the learning rate. The lower the learning rate,
# the longer training takes.
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)  # parameters of fc1, fc2, and out

# %%
# Train the model
# Epoch: one run through all the training data in our network
epochs = 100
losses = []
for i in range(epochs):
    # Go forward and get a prediction (calling model(...) runs forward())
    y_pred = model(X_train)

    # Measure the loss/error; it will be high at first
    loss = criterion(y_pred, y_train)  # predicted values vs y_train

    # Keep track of the losses. detach() stops tracking gradients in the computation graph;
    # numpy() converts the tensor to a NumPy array, the input format many libraries expect.
    losses.append(loss.detach().numpy())

    # Print every 10 epochs
    if i % 10 == 0:
        print(f'Epoch: {i} and loss: {loss.item()}')

    # Back propagation: take the error from the forward pass and feed it back
    # through the network to fine-tune the weights.
    optimizer.zero_grad()  # clear old gradients before computing new ones
    loss.backward()        # differentiate the loss to get each parameter's gradient
    optimizer.step()       # adjust parameters using the gradients and learning rate

# %%
# Graph it out
plt.plot(range(epochs), losses)
plt.ylabel("loss/error")
plt.xlabel("Epoch")
```
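Why the model's `forward` returns raw scores and the labels are converted to long tensors: `nn.CrossEntropyLoss` takes unnormalized logits plus integer class indices, applies log-softmax internally, and averages $-\log p_{\text{true class}}$ over the batch. The sketch below checks that on made-up logits for two flowers (the numbers are illustrative, not from the Iris data).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Made-up raw scores (logits) for 2 flowers over 3 classes, and their true class indices.
logits = torch.tensor([[2.0, 0.5, -1.0],
                       [0.1, 0.2, 3.0]])
targets = torch.tensor([0, 2])  # int64 (long) class indices

loss = nn.CrossEntropyLoss()(logits, targets)

# Same value by hand: log-softmax over classes, pick the true class, negate, average.
log_probs = F.log_softmax(logits, dim=1)
manual = -log_probs[torch.arange(len(targets)), targets].mean()

print(loss.item(), manual.item())
```

Because the softmax happens inside the loss, adding a softmax layer at the end of the model would apply it twice.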
